@author:小道

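1. I have recently been analyzing Unreal's PBR implementation and reorganizing SmaEngine. I decided it is better to study and implement PBR as a series of small standalone examples first, and to merge them into SmaEngine once they are stable.

2. I found a very good book, LearnOpenGL, so here is a first look at it.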

Shader Code

vs:

- #version 330 core

- layout (location = 0) in vec3 aPos;

- layout (location = 1) in vec2 aTexCoords;

- layout (location = 2) in vec3 aNormal;

- out vec2 TexCoords;

- out vec3 WorldPos;

- out vec3 Normal;

- uniform mat4 projection;

- uniform mat4 view;

- uniform mat4 model;

- void main()

- {

- TexCoords = aTexCoords;

- WorldPos = vec3(model * vec4(aPos, 1.0));

- Normal = mat3(model) * aNormal;

- gl_Position = projection * view * vec4(WorldPos, 1.0);

- }

fs:

- #version 330 core

- out vec4 FragColor;

- in vec2 TexCoords;

- in vec3 WorldPos;

- in vec3 Normal;

- // material parameters

- uniform vec3 albedo;

- uniform float metallic;

- uniform float roughness;

- uniform float ao;

- // IBL

- uniform samplerCube irradianceMap;

- uniform samplerCube prefilterMap;

- uniform sampler2D brdfLUT;

- // lights

- uniform vec3 lightPositions[4];

- uniform vec3 lightColors[4];

- uniform vec3 camPos;

- const float PI = 3.14159265359;

- // ----------------------------------------------------------------------------

- float DistributionGGX(vec3 N, vec3 H, float roughness)

- {

- float a = roughness*roughness;

- float a2 = a*a;

- float NdotH = max(dot(N, H), 0.0);

- float NdotH2 = NdotH*NdotH;

- float nom = a2;

- float denom = (NdotH2 * (a2 - 1.0) + 1.0);

- denom = PI * denom * denom;

- return nom / denom;

- }

- // ----------------------------------------------------------------------------

- float GeometrySchlickGGX(float NdotV, float roughness)

- {

- float r = (roughness + 1.0);

- float k = (r*r) / 8.0;

- float nom = NdotV;

- float denom = NdotV * (1.0 - k) + k;

- return nom / denom;

- }

- // ----------------------------------------------------------------------------

- float GeometrySmith(vec3 N, vec3 V, vec3 L, float roughness)

- {

- float NdotV = max(dot(N, V), 0.0);

- float NdotL = max(dot(N, L), 0.0);

- float ggx2 = GeometrySchlickGGX(NdotV, roughness);

- float ggx1 = GeometrySchlickGGX(NdotL, roughness);

- return ggx1 * ggx2;

- }

- // ----------------------------------------------------------------------------

- vec3 fresnelSchlick(float cosTheta, vec3 F0)

- {

- return F0 + (1.0 - F0) * pow(1.0 - cosTheta, 5.0);

- }

- // ----------------------------------------------------------------------------

- vec3 fresnelSchlickRoughness(float cosTheta, vec3 F0, float roughness)

- {

- return F0 + (max(vec3(1.0 - roughness), F0) - F0) * pow(1.0 - cosTheta, 5.0);

- }

- // ----------------------------------------------------------------------------

- void main()

- {

- vec3 N = normalize(Normal); // interpolated normals are not guaranteed to be unit length

- vec3 V = normalize(camPos - WorldPos);

- vec3 R = reflect(-V, N);

- // calculate reflectance at normal incidence; if dielectric (like plastic) use F0

- // of 0.04 and if it's a metal, use the albedo color as F0 (metallic workflow)

- vec3 F0 = vec3(0.04);

- F0 = mix(F0, albedo, metallic);

- // reflectance equation

- vec3 Lo = vec3(0.0);

- for(int i = 0; i < 4; ++i)

- {

- // calculate per-light radiance

- vec3 L = normalize(lightPositions[i] - WorldPos);

- vec3 H = normalize(V + L);

- float distance = length(lightPositions[i] - WorldPos);

- float attenuation = 1.0 / (distance * distance);

- vec3 radiance = lightColors[i] * attenuation;

- // Cook-Torrance BRDF

- float NDF = DistributionGGX(N, H, roughness);

- float G = GeometrySmith(N, V, L, roughness);

- vec3 F = fresnelSchlick(max(dot(H, V), 0.0), F0);

- vec3 nominator = NDF * G * F;

- float denominator = 4 * max(dot(N, V), 0.0) * max(dot(N, L), 0.0) + 0.001; // 0.001 to prevent divide by zero.

- vec3 specular = nominator / denominator;

- // kS is equal to Fresnel

- vec3 kS = F;

- // for energy conservation, the diffuse and specular light can't

- // be above 1.0 (unless the surface emits light); to preserve this

- // relationship the diffuse component (kD) should equal 1.0 - kS.

- vec3 kD = vec3(1.0) - kS;

- // multiply kD by the inverse metalness such that only non-metals

- // have diffuse lighting, or a linear blend if partly metal (pure metals

- // have no diffuse light).

- kD *= 1.0 - metallic;

- // scale light by NdotL

- float NdotL = max(dot(N, L), 0.0);

- // add to outgoing radiance Lo

- Lo += (kD * albedo / PI + specular) * radiance * NdotL; // note that we already multiplied the BRDF by the Fresnel (kS) so we won't multiply by kS again

- }

- // ambient lighting (we now use IBL as the ambient term)

- vec3 F = fresnelSchlickRoughness(max(dot(N, V), 0.0), F0, roughness);

- vec3 kS = F;

- vec3 kD = 1.0 - kS;

- kD *= 1.0 - metallic;

- vec3 irradiance = texture(irradianceMap, N).rgb;

- vec3 diffuse = irradiance * albedo;

- // sample both the pre-filter map and the BRDF lut and combine them together as per the Split-Sum approximation to get the IBL specular part.

- const float MAX_REFLECTION_LOD = 4.0;

- vec3 prefilteredColor = textureLod(prefilterMap, R, roughness * MAX_REFLECTION_LOD).rgb;

- vec2 brdf = texture(brdfLUT, vec2(max(dot(N, V), 0.0), roughness)).rg;

- vec3 specular = prefilteredColor * (F * brdf.x + brdf.y);

- vec3 ambient = (kD * diffuse + specular) * ao;

- vec3 color = ambient + Lo;

- // HDR tonemapping

- color = color / (color + vec3(1.0));

- // gamma correct

- color = pow(color, vec3(1.0/2.2));

- FragColor = vec4(color , 1.0);

- }

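Explanation

1. The biggest difference between IBL and the earlier PBR shader is how the ambient term is handled in the fragment shader. The main purpose of IBL is to simulate ambient (environment) lighting: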

- vec3 irradiance = texture(irradianceMap, N).rgb;

- vec3 diffuse = irradiance * albedo;

- // sample both the pre-filter map and the BRDF lut and combine them together as per the Split-Sum approximation to get the IBL specular part.

- const float MAX_REFLECTION_LOD = 4.0;

- vec3 prefilteredColor = textureLod(prefilterMap, R, roughness * MAX_REFLECTION_LOD).rgb;

- vec2 brdf = texture(brdfLUT, vec2(max(dot(N, V), 0.0), roughness)).rg;

- vec3 specular = prefilteredColor * (F * brdf.x + brdf.y);

- vec3 ambient = (kD * diffuse + specular) * ao;

2. IBL的核心就是如何生成 irradianceMap,prefilterMap,brdfLUT 这3张texture,利用这3张texture保存的数据,进行模拟

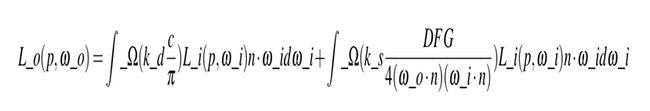

公式,因为周围的环境可以看作是一个很大的光源,所以就可以利用上面的公式来计算ambient。

3. 拆分公式

To solve the integral in a more efficient fashion we'll want to pre-process or pre-compute most of its computations. For this we'll have to delve a bit deeper into the reflectance equation:
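The equation this sentence points to is not reproduced in these notes. As a sketch, assuming the Cook-Torrance form that the direct-lighting loop of the fragment shader evaluates (Lambert diffuse c/π plus the DFG specular term, with the Fresnel factor F playing the role of k_s), the reflectance equation reads:

```latex
L_o(p,\omega_o) = \int_{\Omega} \left( k_d \frac{c}{\pi}
  + \frac{D\,F\,G}{4\,(\omega_o \cdot n)(\omega_i \cdot n)} \right)
  L_i(p,\omega_i)\,(n \cdot \omega_i)\, d\omega_i
```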

Taking a good look at the reflectance equation we find that the diffuse k_d and specular k_s term of the BRDF are independent from each other and we can split the integral in two:
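The split form is not shown in these notes. Assuming the same Cook-Torrance terms that the fragment shader uses, the first integral holds the diffuse (k_d) part and the second the specular part (where the Fresnel factor F stands in for k_s):

```latex
L_o(p,\omega_o) =
  \int_{\Omega} k_d \frac{c}{\pi}\, L_i(p,\omega_i)\,(n \cdot \omega_i)\, d\omega_i
  + \int_{\Omega} \frac{D\,F\,G}{4\,(\omega_o \cdot n)(\omega_i \cdot n)}\,
    L_i(p,\omega_i)\,(n \cdot \omega_i)\, d\omega_i
```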

By splitting the integral in two parts we can focus on both the diffuse and specular term individually;

4. The diffuse integral and the irradianceMap texture

Taking a closer look at the diffuse integral we find that the diffuse lambert term is a constant term (the color c, the refraction ratio k_d and π are constant over the integral) and not dependent on any of the integral variables. Given this, we can move the constant term out of the diffuse integral:
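Written out, and assuming the diffuse term is the Lambert term k_d c/π used in the shader, moving the constant out of the integral gives:

```latex
L_o(p,\omega_o) = k_d \frac{c}{\pi} \int_{\Omega} L_i(p,\omega_i)\,(n \cdot \omega_i)\, d\omega_i
```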

This gives us an integral that only depends on w_i (assuming p is at the center of the environment map). With this knowledge, we can calculate or pre-compute a new cubemap that stores in each sample direction (or texel) w_o the diffuse integral's result by convolution.

Convolution is applying some computation to each entry in a data set considering all other entries in the data set; the data set being the scene's radiance or environment map. Thus for every sample direction in the cubemap, we take all other sample directions over the hemisphere Ω into account.

To convolute an environment map we solve the integral for each output w_o sample direction by discretely sampling a large number of directions w_i over the hemisphere Ω and averaging their radiance. The hemisphere we build the sample directions w_i from is oriented towards the output w_o sample direction we're convoluting.

This pre-computed cubemap, that for each sample direction w_o stores the integral result, can be thought of as the pre-computed sum of all indirect diffuse light of the scene hitting some surface aligned along direction w_o. Such a cubemap is known as an irradiance map seeing as the convoluted cubemap effectively allows us to directly sample the scene's (pre-computed) irradiance from any direction w_o.

By storing the convoluted result in each cubemap texel (in the direction of w_o) the irradiance map displays somewhat like an average color or lighting display of the environment. Sampling any direction from this environment map will give us the scene's irradiance from that particular direction.

a. Load the equirectangular map (a texture similar to what a camera captures when it photographs the full 360 degrees around it)

- // pbr: load the HDR environment map

- // ---------------------------------

- stbi_set_flip_vertically_on_load(true);

- int width, height, nrComponents;

- float *data = stbi_loadf(FileSystem::getPath("resources/textures/hdr/newport_loft.hdr").c_str(), &width, &height, &nrComponents, 0);

- unsigned int hdrTexture;

- if (data)

- {

- glGenTextures(1, &hdrTexture);

- glBindTexture(GL_TEXTURE_2D, hdrTexture);

- glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB16F, width, height, 0, GL_RGB, GL_FLOAT, data); // note how we specify the texture's data value to be float

- glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

- glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

- stbi_image_free(data);

- }

- else

- {

- std::cout << "Failed to load HDR image." << std::endl;

- }

b. From Equirectangular to Cubemap

- // pbr: setup cubemap to render to and attach to framebuffer

- // ---------------------------------------------------------

- unsigned int envCubemap;

- glGenTextures(1, &envCubemap);

- glBindTexture(GL_TEXTURE_CUBE_MAP, envCubemap);

- for (unsigned int i = 0; i < 6; ++i)

- {

- glTexImage2D(GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, 0, GL_RGB16F, 512, 512, 0, GL_RGB, GL_FLOAT, nullptr);

- }

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR); // enable pre-filter mipmap sampling (combatting visible dots artifact)

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

- // pbr: set up projection and view matrices for capturing data onto the 6 cubemap face directions

- // ----------------------------------------------------------------------------------------------

- glm::mat4 captureProjection = glm::perspective(glm::radians(90.0f), 1.0f, 0.1f, 10.0f);

- glm::mat4 captureViews[] =

- {

- glm::lookAt(glm::vec3(0.0f, 0.0f, 0.0f), glm::vec3(1.0f, 0.0f, 0.0f), glm::vec3(0.0f, -1.0f, 0.0f)),

- glm::lookAt(glm::vec3(0.0f, 0.0f, 0.0f), glm::vec3(-1.0f, 0.0f, 0.0f), glm::vec3(0.0f, -1.0f, 0.0f)),

- glm::lookAt(glm::vec3(0.0f, 0.0f, 0.0f), glm::vec3(0.0f, 1.0f, 0.0f), glm::vec3(0.0f, 0.0f, 1.0f)),

- glm::lookAt(glm::vec3(0.0f, 0.0f, 0.0f), glm::vec3(0.0f, -1.0f, 0.0f), glm::vec3(0.0f, 0.0f, -1.0f)),

- glm::lookAt(glm::vec3(0.0f, 0.0f, 0.0f), glm::vec3(0.0f, 0.0f, 1.0f), glm::vec3(0.0f, -1.0f, 0.0f)),

- glm::lookAt(glm::vec3(0.0f, 0.0f, 0.0f), glm::vec3(0.0f, 0.0f, -1.0f), glm::vec3(0.0f, -1.0f, 0.0f))

- };

- // pbr: convert HDR equirectangular environment map to cubemap equivalent

- // ----------------------------------------------------------------------

- equirectangularToCubemapShader.use();

- equirectangularToCubemapShader.setInt("equirectangularMap", 0);

- equirectangularToCubemapShader.setMat4("projection", captureProjection);

- glActiveTexture(GL_TEXTURE0);

- glBindTexture(GL_TEXTURE_2D, hdrTexture);

- glViewport(0, 0, 512, 512); // don't forget to configure the viewport to the capture dimensions.

- glBindFramebuffer(GL_FRAMEBUFFER, captureFBO);

- for (unsigned int i = 0; i < 6; ++i)

- {

- equirectangularToCubemapShader.setMat4("view", captureViews[i]);

- glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, envCubemap, 0);

- glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

- renderCube();

- }

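- glBindFramebuffer(GL_FRAMEBUFFER, 0);

- // generate mipmaps from the rendered base level; the GL_LINEAR_MIPMAP_LINEAR min filter set above needs a complete mip chain

- glBindTexture(GL_TEXTURE_CUBE_MAP, envCubemap);

- glGenerateMipmap(GL_TEXTURE_CUBE_MAP);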

vs:

- #version 330 core

- layout (location = 0) in vec3 aPos;

- out vec3 WorldPos;

- uniform mat4 projection;

- uniform mat4 view;

- void main()

- {

- WorldPos = aPos;

- gl_Position = projection * view * vec4(WorldPos, 1.0);

- }

fs:

- #version 330 core

- out vec4 FragColor;

- in vec3 WorldPos;

- uniform sampler2D equirectangularMap;

- const vec2 invAtan = vec2(0.1591, 0.3183);

- vec2 SampleSphericalMap(vec3 v)

- {

- vec2 uv = vec2(atan(v.z, v.x), asin(v.y));

- uv *= invAtan;

- uv += 0.5;

- return uv;

- }

- void main()

- {

- vec2 uv = SampleSphericalMap(normalize(WorldPos));

- vec3 color = texture(equirectangularMap, uv).rgb;

- FragColor = vec4(color, 1.0);

- }
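To see what `SampleSphericalMap` does to a few known directions, the mapping can be mirrored on the CPU. This is only a sketch: the `Vec2`/`Vec3` structs and the function name `sampleSphericalMap` are hypothetical helpers, and the constants are copied from the shader (roughly 1/(2π) and 1/π).

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// CPU mirror of the shader's SampleSphericalMap: maps a unit direction to
// equirectangular UV coordinates in [0, 1].
Vec2 sampleSphericalMap(Vec3 v)
{
    // same constants as the shader: about 1/(2*pi) and 1/pi
    const float invAtanX = 0.1591f;
    const float invAtanY = 0.3183f;
    // the direction must be normalized, otherwise asin gets a value outside [-1, 1]
    assert(std::fabs(v.x * v.x + v.y * v.y + v.z * v.z - 1.0f) < 1e-3f);
    float u = std::atan2(v.z, v.x) * invAtanX + 0.5f; // longitude -> [0, 1]
    float w = std::asin(v.y) * invAtanY + 0.5f;       // latitude  -> [0, 1]
    return Vec2{u, w};
}
```

The +X direction lands in the center of the image, straight up (+Y) lands on the top edge, and +Z sits a quarter of the image width to the right of the center.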

c. Cubemap Convolution

Now, to generate the irradiance map we need to convolute the environment's lighting as converted to a cubemap. Given that for each fragment the surface's hemisphere is oriented along the normal vector N, convoluting a cubemap equals calculating the total averaged radiance of each direction w_i in the hemisphere Ω oriented along N.

There are many ways to convolute the environment map, but for this tutorial we're going to generate a fixed amount of sample vectors for each cubemap texel along a hemisphere Ω oriented around the sample direction and average the results. The fixed amount of sample vectors will be uniformly spread inside the hemisphere. Note that an integral is a continuous function and discretely sampling its function given a fixed amount of sample vectors will be an approximation. The more sample vectors we use, the better we approximate the integral.

The integral of the reflectance equation revolves around the solid angle dw which is rather difficult to work with. Instead of integrating over the solid angle dw we'll integrate over its equivalent spherical coordinates θ and φ.

We use the polar azimuth angle φ to sample around the ring of the hemisphere between 0 and 2π, and the inclination (zenith) angle θ between 0 and ½π to sample the increasing rings of the hemisphere. This gives us the updated reflectance integral:
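The updated integral is not reproduced in these notes. A reconstruction consistent with the loops of the convolution shader (φ from 0 to 2π, θ from 0 to ½π, weights cos θ sin θ), using dω = sin θ dθ dφ, would be:

```latex
L_o(p,\phi_o,\theta_o) = k_d \frac{c}{\pi}
  \int_{\phi=0}^{2\pi} \int_{\theta=0}^{\frac{1}{2}\pi}
  L_i(p,\phi_i,\theta_i)\, \cos(\theta)\, \sin(\theta)\; d\theta\, d\phi
```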

Solving the integral requires us to take a fixed number of discrete samples within the hemisphere Ω and averaging their results. This translates the integral to the following discrete version as based on the Riemann sum given n1 and n2 discrete samples on each spherical coordinate respectively:
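The discrete version is missing from these notes. Assuming step sizes Δφ = 2π/n1 and Δθ = ½π/n2, the product ΔφΔθ = π²/(n1 n2) combines with the factor c/π into cπ/(n1 n2), which matches the `PI * irradiance / nrSamples` normalization in the convolution shader:

```latex
L_o(p,\phi_o,\theta_o) \approx k_d \frac{c\,\pi}{n_1 n_2}
  \sum_{\phi=0}^{n_1} \sum_{\theta=0}^{n_2}
  L_i(p,\phi_i,\theta_i)\, \cos(\theta)\, \sin(\theta)
```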

As we sample both spherical values discretely, each sample approximates or averages an area on the hemisphere. Note that (due to the general properties of a spherical shape) the hemisphere's discrete sample area gets smaller the higher the zenith angle θ, as the sample regions converge towards the center top. To compensate for the smaller areas, we weigh each sample's contribution by sin θ, which explains the added sin term.

- // pbr: create an irradiance cubemap, and re-scale capture FBO to irradiance scale.

- // --------------------------------------------------------------------------------

- unsigned int irradianceMap;

- glGenTextures(1, &irradianceMap);

- glBindTexture(GL_TEXTURE_CUBE_MAP, irradianceMap);

- for (unsigned int i = 0; i < 6; ++i)

- {

- glTexImage2D(GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, 0, GL_RGB16F, 32, 32, 0, GL_RGB, GL_FLOAT, nullptr);

- }

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

- glBindFramebuffer(GL_FRAMEBUFFER, captureFBO);

- glBindRenderbuffer(GL_RENDERBUFFER, captureRBO);

- glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH_COMPONENT24, 32, 32);

- // pbr: solve diffuse integral by convolution to create an irradiance (cube)map.

- // -----------------------------------------------------------------------------

- irradianceShader.use();

- irradianceShader.setInt("environmentMap", 0);

- irradianceShader.setMat4("projection", captureProjection);

- glActiveTexture(GL_TEXTURE0);

- glBindTexture(GL_TEXTURE_CUBE_MAP, envCubemap);

- glViewport(0, 0, 32, 32); // don't forget to configure the viewport to the capture dimensions.

- glBindFramebuffer(GL_FRAMEBUFFER, captureFBO);

- for (unsigned int i = 0; i < 6; ++i)

- {

- irradianceShader.setMat4("view", captureViews[i]);

- glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, irradianceMap, 0);

- glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

- renderCube();

- }

- glBindFramebuffer(GL_FRAMEBUFFER, 0);

fs:

- #version 330 core

- out vec4 FragColor;

- in vec3 WorldPos;

- uniform samplerCube environmentMap;

- const float PI = 3.14159265359;

- void main()

- {

- vec3 N = normalize(WorldPos);

- vec3 irradiance = vec3(0.0);

- // tangent space calculation from origin point

- vec3 up = vec3(0.0, 1.0, 0.0);

- vec3 right = normalize(cross(up, N)); // normalize so the tangent basis stays orthonormal

- up = normalize(cross(N, right));

- float sampleDelta = 0.025;

- float nrSamples = 0.0f;

- for(float phi = 0.0; phi < 2.0 * PI; phi += sampleDelta)

- {

- for(float theta = 0.0; theta < 0.5 * PI; theta += sampleDelta)

- {

- // spherical to cartesian (in tangent space)

- vec3 tangentSample = vec3(sin(theta) * cos(phi), sin(theta) * sin(phi), cos(theta));

- // tangent space to world

- vec3 sampleVec = tangentSample.x * right + tangentSample.y * up + tangentSample.z * N;

- irradiance += texture(environmentMap, sampleVec).rgb * cos(theta) * sin(theta);

- nrSamples++;

- }

- }

- irradiance = PI * irradiance * (1.0 / float(nrSamples));

- FragColor = vec4(irradiance, 1.0);

- }
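A quick way to sanity-check the normalization of the convolution shader is to run the same double loop on the CPU for an environment whose radiance is identical in every direction. The sketch below makes that assumption (it is not part of the original code) and uses the hypothetical name `convolveConstantEnvironment`; for a constant radiance of 1 the result should come out close to 1.

```cpp
#include <cassert>
#include <cmath>

// CPU version of the shader's double loop for an environment whose radiance is
// the same constant in every direction (an assumption made only for this check).
double convolveConstantEnvironment(double radiance, double sampleDelta)
{
    assert(sampleDelta > 0.0);
    const double PI = 3.14159265359;
    double irradiance = 0.0;
    int nrSamples = 0;
    for (double phi = 0.0; phi < 2.0 * PI; phi += sampleDelta)
    {
        for (double theta = 0.0; theta < 0.5 * PI; theta += sampleDelta)
        {
            // cos(theta): Lambert term, sin(theta): solid-angle weight
            irradiance += radiance * std::cos(theta) * std::sin(theta);
            ++nrSamples;
        }
    }
    // same normalization as the shader: PI * sum / sample count
    return PI * irradiance / static_cast<double>(nrSamples);
}
```

With `sampleDelta = 0.025`, as in the shader, the Riemann sum slightly underestimates the exact value; a smaller step moves it closer at the cost of more samples.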

5. Specular part

Epic Games proposed a solution where they were able to pre-convolute the specular part for real time purposes, given a few compromises, known as the split sum approximation.

Epic Games' split sum approximation solves the issue by splitting the pre-computation into 2 individual parts that we can later combine to get the resulting pre-computed result we're after. The split sum approximation splits the specular integral into two separate integrals:
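The two integrals are not shown in these notes. Writing f_r for the specular BRDF, a sketch of the split that matches the two parts described next (pre-filtered environment map, then BRDF integration) is:

```latex
L_o(p,\omega_o) \approx
  \int_{\Omega} L_i(p,\omega_i)\, d\omega_i
  \;\cdot\;
  \int_{\Omega} f_r(p,\omega_i,\omega_o)\,(n \cdot \omega_i)\, d\omega_i
```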

a. Pre-Filtered Environment Map

The first part (when convoluted) is known as the pre-filtered environment map which is (similar to the irradiance map) a pre-computed environment convolution map, but this time taking roughness into account. For increasing roughness levels, the environment map is convoluted with more scattered sample vectors, creating more blurry reflections. For each roughness level we convolute, we store the sequentially blurrier results in the pre-filtered map's mipmap levels. For instance, a pre-filtered environment map can store the pre-convoluted results of 5 different roughness values in its 5 mipmap levels.

We generate the sample vectors and their scattering strength using the normal distribution function (NDF) of the Cook-Torrance BRDF that takes as input both a normal and view direction. As we don't know beforehand the view direction when convoluting the environment map, Epic Games makes a further approximation by assuming the view direction (and thus the specular reflection direction) is always equal to the output sample direction ω_o.

- // pbr: create a pre-filter cubemap, and re-scale capture FBO to pre-filter scale.

- // --------------------------------------------------------------------------------

- unsigned int prefilterMap;

- glGenTextures(1, &prefilterMap);

- glBindTexture(GL_TEXTURE_CUBE_MAP, prefilterMap);

- for (unsigned int i = 0; i < 6; ++i)

- {

- glTexImage2D(GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, 0, GL_RGB16F, 128, 128, 0, GL_RGB, GL_FLOAT, nullptr);

- }

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR); // be sure to set the minification filter to mip_linear

- glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

- // generate mipmaps for the cubemap so OpenGL automatically allocates the required memory.

- glGenerateMipmap(GL_TEXTURE_CUBE_MAP);

- // pbr: run a quasi monte-carlo simulation on the environment lighting to create a prefilter (cube)map.

- // ----------------------------------------------------------------------------------------------------

- prefilterShader.use();

- prefilterShader.setInt("environmentMap", 0);

- prefilterShader.setMat4("projection", captureProjection);

- glActiveTexture(GL_TEXTURE0);

- glBindTexture(GL_TEXTURE_CUBE_MAP, envCubemap);

- glBindFramebuffer(GL_FRAMEBUFFER, captureFBO);

- unsigned int maxMipLevels = 5;

- for (unsigned int mip = 0; mip < maxMipLevels; ++mip)

- {

- // resize framebuffer according to mip-level size.

- unsigned int mipWidth = static_cast<unsigned int>(128 * std::pow(0.5, mip));

- unsigned int mipHeight = static_cast<unsigned int>(128 * std::pow(0.5, mip));

- glBindRenderbuffer(GL_RENDERBUFFER, captureRBO);

- glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH_COMPONENT24, mipWidth, mipHeight);

- glViewport(0, 0, mipWidth, mipHeight);

- float roughness = (float)mip / (float)(maxMipLevels - 1);

- prefilterShader.setFloat("roughness", roughness);

- for (unsigned int i = 0; i < 6; ++i)

- {

- prefilterShader.setMat4("view", captureViews[i]);

- glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, prefilterMap, mip);

- glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

- renderCube();

- }

- }

- glBindFramebuffer(GL_FRAMEBUFFER, 0);
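The relation between mip level, face size and roughness in the loop above can be isolated in a small helper. This is a sketch only: `MipInfo` and `prefilterMipInfo` are hypothetical names, and the bit shift is used as an integer equivalent of `128 * std::pow(0.5, mip)`.

```cpp
#include <cassert>

struct MipInfo { unsigned int size; float roughness; };

// Face size and roughness used for one mip level of the pre-filter map,
// following the loop above (base size 128 and 5 levels in the original).
MipInfo prefilterMipInfo(unsigned int baseSize, unsigned int mip, unsigned int maxMipLevels)
{
    assert(maxMipLevels > 1u && mip < maxMipLevels);
    MipInfo info;
    info.size = baseSize >> mip; // halves per level, same as baseSize * 0.5^mip
    info.roughness = static_cast<float>(mip) / static_cast<float>(maxMipLevels - 1u);
    return info;
}
```

With 5 levels, roughness 0.0 maps to mip 0 (128x128) and roughness 1.0 to mip 4 (8x8), which is why the main fragment shader samples the map at `roughness * MAX_REFLECTION_LOD` with `MAX_REFLECTION_LOD = 4.0`.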

fs:

- #version 330 core

- out vec4 FragColor;

- in vec3 WorldPos;

- uniform samplerCube environmentMap;

- uniform float roughness;

- const float PI = 3.14159265359;

- // ----------------------------------------------------------------------------

- float DistributionGGX(vec3 N, vec3 H, float roughness)

- {

- float a = roughness*roughness;

- float a2 = a*a;

- float NdotH = max(dot(N, H), 0.0);

- float NdotH2 = NdotH*NdotH;

- float nom = a2;

- float denom = (NdotH2 * (a2 - 1.0) + 1.0);

- denom = PI * denom * denom;

- return nom / denom;

- }

- // ----------------------------------------------------------------------------

- // http://holger.dammertz.org/stuff/notes_HammersleyOnHemisphere.html

- // efficient Van der Corput calculation.

- float RadicalInverse_VdC(uint bits)

- {

- bits = (bits << 16u) | (bits >> 16u);

- bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);

- bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);

- bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);

- bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);

- return float(bits) * 2.3283064365386963e-10; // / 0x100000000

- }

- // ----------------------------------------------------------------------------

- vec2 Hammersley(uint i, uint N)

- {

- return vec2(float(i)/float(N), RadicalInverse_VdC(i));

- }

- // ----------------------------------------------------------------------------

- vec3 ImportanceSampleGGX(vec2 Xi, vec3 N, float roughness)

- {

- float a = roughness*roughness;

- float phi = 2.0 * PI * Xi.x;

- float cosTheta = sqrt((1.0 - Xi.y) / (1.0 + (a*a - 1.0) * Xi.y));

- float sinTheta = sqrt(1.0 - cosTheta*cosTheta);

- // from spherical coordinates to cartesian coordinates - halfway vector

- vec3 H;

- H.x = cos(phi) * sinTheta;

- H.y = sin(phi) * sinTheta;

- H.z = cosTheta;

- // from tangent-space H vector to world-space sample vector

- vec3 up = abs(N.z) < 0.999 ? vec3(0.0, 0.0, 1.0) : vec3(1.0, 0.0, 0.0);

- vec3 tangent = normalize(cross(up, N));

- vec3 bitangent = cross(N, tangent);

- vec3 sampleVec = tangent * H.x + bitangent * H.y + N * H.z;

- return normalize(sampleVec);

- }

- // ----------------------------------------------------------------------------

- void main()

- {

- vec3 N = normalize(WorldPos);

- // make the simplifying assumption that V equals R equals the normal

- vec3 R = N;

- vec3 V = R;

- const uint SAMPLE_COUNT = 1024u;

- vec3 prefilteredColor = vec3(0.0);

- float totalWeight = 0.0;

- for(uint i = 0u; i < SAMPLE_COUNT; ++i)

- {

- // generates a sample vector that's biased towards the preferred alignment direction (importance sampling).

- vec2 Xi = Hammersley(i, SAMPLE_COUNT);

- vec3 H = ImportanceSampleGGX(Xi, N, roughness);

- vec3 L = normalize(2.0 * dot(V, H) * H - V);

- float NdotL = max(dot(N, L), 0.0);

- if(NdotL > 0.0)

- {

- // sample from the environment's mip level based on roughness/pdf

- float D = DistributionGGX(N, H, roughness);

- float NdotH = max(dot(N, H), 0.0);

- float HdotV = max(dot(H, V), 0.0);

- float pdf = D * NdotH / (4.0 * HdotV) + 0.0001;

- float resolution = 512.0; // resolution of source cubemap (per face)

- float saTexel = 4.0 * PI / (6.0 * resolution * resolution);

- float saSample = 1.0 / (float(SAMPLE_COUNT) * pdf + 0.0001);

- float mipLevel = roughness == 0.0 ? 0.0 : 0.5 * log2(saSample / saTexel);

- prefilteredColor += textureLod(environmentMap, L, mipLevel).rgb * NdotL;

- totalWeight += NdotL;

- }

- }

- prefilteredColor = prefilteredColor / totalWeight;

- FragColor = vec4(prefilteredColor, 1.0);

- }
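The low-discrepancy sequence that drives the importance sampling is easy to inspect on the CPU. The sketch below ports `RadicalInverse_VdC` and `Hammersley` from the shader to C++; the `Vec2` struct and the camel-case function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

struct Vec2 { float x, y; };

// Van der Corput radical inverse in base 2: mirrors the bits of the index
// around the binary point, so 1 -> 0.5, 2 -> 0.25, 3 -> 0.75, ...
float radicalInverseVdC(std::uint32_t bits)
{
    bits = (bits << 16u) | (bits >> 16u);
    bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);
    bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);
    bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);
    bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);
    return static_cast<float>(bits) * 2.3283064365386963e-10f; // divide by 2^32
}

// i-th point of an n-point Hammersley set in the unit square.
Vec2 hammersley(std::uint32_t i, std::uint32_t n)
{
    assert(n > 0u);
    return Vec2{static_cast<float>(i) / static_cast<float>(n), radicalInverseVdC(i)};
}
```

Each new index fills the largest remaining gap in [0, 1), which is what keeps the sample set evenly spread even for small sample counts.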

b. Pre-Calculate The BRDF

The second part of the equation equals the BRDF part of the specular integral. If we pretend the incoming radiance is completely white for every direction (thus L(p,x) = 1.0) we can pre-calculate the BRDF's response given an input roughness and an input angle between the normal n and light direction ω_i, or n·ω_i. Epic Games stores the pre-computed BRDF's response to each normal and light direction combination on varying roughness values in a 2D lookup texture (LUT) known as the BRDF integration map. The 2D lookup texture outputs a scale (red) and a bias value (green) to the surface's Fresnel response giving us the second part of the split specular integral:
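The equation itself is missing from these notes. A sketch consistent with the `IntegrateBRDF` shader, where `A` accumulates `(1 - Fc) * G_Vis` and `B` accumulates `Fc * G_Vis` with `Fc = (1 - ω_o·h)^5`, substitutes the Fresnel-Schlick approximation for F and factors out F_0:

```latex
\int_{\Omega} f_r(p,\omega_i,\omega_o)\,(n \cdot \omega_i)\, d\omega_i
  = F_0 \int_{\Omega} \frac{f_r(p,\omega_i,\omega_o)}{F(\omega_o,h)}
      \left(1 - (1 - \omega_o \cdot h)^5\right) (n \cdot \omega_i)\, d\omega_i
  + \int_{\Omega} \frac{f_r(p,\omega_i,\omega_o)}{F(\omega_o,h)}
      (1 - \omega_o \cdot h)^5\, (n \cdot \omega_i)\, d\omega_i
  \approx F_0 \cdot A + B
```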

We generate the lookup texture by treating the horizontal texture coordinate (ranged between 0.0 and 1.0) of a plane as the BRDF's input n · ω_i and its vertical texture coordinate as the input roughness value.

- // pbr: generate a 2D LUT from the BRDF equations used.

- // ----------------------------------------------------

- unsigned int brdfLUTTexture;

- glGenTextures(1, &brdfLUTTexture);

- // pre-allocate enough memory for the LUT texture.

- glBindTexture(GL_TEXTURE_2D, brdfLUTTexture);

- glTexImage2D(GL_TEXTURE_2D, 0, GL_RG16F, 512, 512, 0, GL_RG, GL_FLOAT, 0);

- // be sure to set wrapping mode to GL_CLAMP_TO_EDGE

- glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

- glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

- glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

- // then re-configure capture framebuffer object and render screen-space quad with BRDF shader.

- glBindFramebuffer(GL_FRAMEBUFFER, captureFBO);

- glBindRenderbuffer(GL_RENDERBUFFER, captureRBO);

- glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH_COMPONENT24, 512, 512);

- glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, brdfLUTTexture, 0);

- glViewport(0, 0, 512, 512);

- brdfShader.use();

- glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

- renderQuad();

- glBindFramebuffer(GL_FRAMEBUFFER, 0);

vs:

- #version 330 core

- layout (location = 0) in vec3 aPos;

- layout (location = 1) in vec2 aTexCoords;

- out vec2 TexCoords;

- void main()

- {

- TexCoords = aTexCoords;

- gl_Position = vec4(aPos, 1.0);

- }

fs:

- #version 330 core

- out vec2 FragColor;

- in vec2 TexCoords;

- const float PI = 3.14159265359;

- // ----------------------------------------------------------------------------

- // http://holger.dammertz.org/stuff/notes_HammersleyOnHemisphere.html

- // efficient Van der Corput calculation.

- float RadicalInverse_VdC(uint bits)

- {

- bits = (bits << 16u) | (bits >> 16u);

- bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);

- bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);

- bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);

- bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);

- return float(bits) * 2.3283064365386963e-10; // / 0x100000000

- }

- // ----------------------------------------------------------------------------

- vec2 Hammersley(uint i, uint N)

- {

- return vec2(float(i)/float(N), RadicalInverse_VdC(i));

- }

- // ----------------------------------------------------------------------------

- vec3 ImportanceSampleGGX(vec2 Xi, vec3 N, float roughness)

- {

- float a = roughness*roughness;

- float phi = 2.0 * PI * Xi.x;

- float cosTheta = sqrt((1.0 - Xi.y) / (1.0 + (a*a - 1.0) * Xi.y));

- float sinTheta = sqrt(1.0 - cosTheta*cosTheta);

- // from spherical coordinates to cartesian coordinates - halfway vector

- vec3 H;

- H.x = cos(phi) * sinTheta;

- H.y = sin(phi) * sinTheta;

- H.z = cosTheta;

- // from tangent-space H vector to world-space sample vector

- vec3 up = abs(N.z) < 0.999 ? vec3(0.0, 0.0, 1.0) : vec3(1.0, 0.0, 0.0);

- vec3 tangent = normalize(cross(up, N));

- vec3 bitangent = cross(N, tangent);

- vec3 sampleVec = tangent * H.x + bitangent * H.y + N * H.z;

- return normalize(sampleVec);

- }

- // ----------------------------------------------------------------------------

- float GeometrySchlickGGX(float NdotV, float roughness)

- {

- // note that we use a different k for IBL

- float a = roughness;

- float k = (a * a) / 2.0;

- float nom = NdotV;

- float denom = NdotV * (1.0 - k) + k;

- return nom / denom;

- }

- // ----------------------------------------------------------------------------

- float GeometrySmith(vec3 N, vec3 V, vec3 L, float roughness)

- {

- float NdotV = max(dot(N, V), 0.0);

- float NdotL = max(dot(N, L), 0.0);

- float ggx2 = GeometrySchlickGGX(NdotV, roughness);

- float ggx1 = GeometrySchlickGGX(NdotL, roughness);

- return ggx1 * ggx2;

- }

- // ----------------------------------------------------------------------------

- vec2 IntegrateBRDF(float NdotV, float roughness)

- {

- vec3 V;

- V.x = sqrt(1.0 - NdotV*NdotV);

- V.y = 0.0;

- V.z = NdotV;

- float A = 0.0;

- float B = 0.0;

- vec3 N = vec3(0.0, 0.0, 1.0);

- const uint SAMPLE_COUNT = 1024u;

- for(uint i = 0u; i < SAMPLE_COUNT; ++i)

- {

- // generates a sample vector that's biased towards the

- // preferred alignment direction (importance sampling).

- vec2 Xi = Hammersley(i, SAMPLE_COUNT);

- vec3 H = ImportanceSampleGGX(Xi, N, roughness);

- vec3 L = normalize(2.0 * dot(V, H) * H - V);

- float NdotL = max(L.z, 0.0);

- float NdotH = max(H.z, 0.0);

- float VdotH = max(dot(V, H), 0.0);

- if(NdotL > 0.0)

- {

- float G = GeometrySmith(N, V, L, roughness);

- float G_Vis = (G * VdotH) / (NdotH * NdotV);

- float Fc = pow(1.0 - VdotH, 5.0);

- A += (1.0 - Fc) * G_Vis;

- B += Fc * G_Vis;

- }

- }

- A /= float(SAMPLE_COUNT);

- B /= float(SAMPLE_COUNT);

- return vec2(A, B);

- }

- // ----------------------------------------------------------------------------

- void main()

- {

- vec2 integratedBRDF = IntegrateBRDF(TexCoords.x, TexCoords.y);

- FragColor = integratedBRDF;

- }
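The BRDF integration can also be run on the CPU to spot-check individual LUT texels. The following is a sketch under stated assumptions, not the original implementation: the `Vec2`/`Vec3` structs and function names are hypothetical, and because N is fixed to +Z the halfway vector is used directly in tangent space, skipping the basis change that the shader's `ImportanceSampleGGX` performs.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

static float dot3(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Van der Corput radical inverse (base 2), same bit tricks as the shader.
static float radicalInverseVdC(std::uint32_t bits)
{
    bits = (bits << 16u) | (bits >> 16u);
    bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);
    bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);
    bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);
    bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);
    return static_cast<float>(bits) * 2.3283064365386963e-10f;
}

// GGX importance sample of the halfway vector, expressed directly in tangent
// space (simplification: N is fixed to +Z, so no basis change is applied).
static Vec3 importanceSampleGGX(float xi1, float xi2, float roughness)
{
    const float PI = 3.14159265359f;
    float a = roughness * roughness;
    float phi = 2.0f * PI * xi1;
    float cosTheta = std::sqrt((1.0f - xi2) / (1.0f + (a * a - 1.0f) * xi2));
    float sinTheta = std::sqrt(std::max(0.0f, 1.0f - cosTheta * cosTheta));
    return Vec3{std::cos(phi) * sinTheta, std::sin(phi) * sinTheta, cosTheta};
}

// Schlick-GGX with the IBL remapping k = roughness^2 / 2.
static float geometrySchlickGGX(float nDotX, float roughness)
{
    float k = (roughness * roughness) / 2.0f;
    return nDotX / (nDotX * (1.0f - k) + k);
}

// Returns (A, B): the scale and bias applied to F0 in the split-sum formula.
Vec2 integrateBRDF(float nDotV, float roughness, std::uint32_t sampleCount = 1024u)
{
    assert(nDotV > 0.0f && nDotV <= 1.0f && sampleCount > 0u);
    Vec3 V{std::sqrt(1.0f - nDotV * nDotV), 0.0f, nDotV};
    float A = 0.0f, B = 0.0f;
    for (std::uint32_t i = 0u; i < sampleCount; ++i)
    {
        float xi1 = static_cast<float>(i) / static_cast<float>(sampleCount);
        Vec3 H = importanceSampleGGX(xi1, radicalInverseVdC(i), roughness);
        float vDotH = dot3(V, H);
        // reflect V about H to get the light direction (already unit length)
        Vec3 L{2.0f * vDotH * H.x - V.x, 2.0f * vDotH * H.y - V.y, 2.0f * vDotH * H.z - V.z};
        float nDotL = std::max(L.z, 0.0f);
        float nDotH = std::max(H.z, 0.0f);
        vDotH = std::max(vDotH, 0.0f);
        if (nDotL > 0.0f)
        {
            float G = geometrySchlickGGX(nDotV, roughness) * geometrySchlickGGX(nDotL, roughness);
            float gVis = (G * vDotH) / (nDotH * nDotV);
            float Fc = std::pow(1.0f - vDotH, 5.0f);
            A += (1.0f - Fc) * gVis;
            B += Fc * gVis;
        }
    }
    return Vec2{A / static_cast<float>(sampleCount), B / static_cast<float>(sampleCount)};
}
```

For a perfect mirror (roughness 0) every sample has H equal to N, so the result reduces to A = 1 - (1 - n·v)^5 and B = (1 - n·v)^5, which makes a convenient check of the bottom row of the LUT.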

c. With this BRDF integration map and the pre-filtered environment map we can combine both to get the result of the specular integral:

- float lod = getMipLevelFromRoughness(roughness);

- vec3 prefilteredColor = textureCubeLod(PrefilteredEnvMap, refVec, lod).rgb;

- // x = NdotV, y = roughness, matching how the LUT is generated and how the main shader samples it

- vec2 envBRDF = texture2D(BRDFIntegrationMap, vec2(NdotV, roughness)).xy;

- vec3 indirectSpecular = prefilteredColor * (F * envBRDF.x + envBRDF.y);

This should give you a bit of an overview on how Epic Games' split sum approximation roughly approaches the indirect specular part of the reflectance equation. Let's now try and build the pre-convoluted parts ourselves.
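The final combination step is plain arithmetic and can be tried out without a GPU. In the sketch below, `getMipLevelFromRoughness` is not defined anywhere in these notes, so `mipLevelFromRoughness` assumes the linear mapping `roughness * MAX_REFLECTION_LOD` used by the main fragment shader; the `Vec3` struct and both function names are hypothetical.

```cpp
#include <cassert>

struct Vec3 { float r, g, b; };

// Hypothetical stand-in for getMipLevelFromRoughness: a linear mapping onto the
// mip chain, as done with MAX_REFLECTION_LOD in the main fragment shader.
float mipLevelFromRoughness(float roughness, float maxReflectionLod)
{
    assert(roughness >= 0.0f && roughness <= 1.0f);
    return roughness * maxReflectionLod;
}

// Split-sum combination: prefiltered radiance times (F * scale + bias), where
// scale and bias are the red and green channels of the BRDF LUT.
Vec3 indirectSpecular(Vec3 prefiltered, Vec3 F, float scale, float bias)
{
    return Vec3{prefiltered.r * (F.r * scale + bias),
                prefiltered.g * (F.g * scale + bias),
                prefiltered.b * (F.b * scale + bias)};
}
```

For example, a white prefiltered sample with F = 0.04, a LUT scale of 0.5 and a bias of 0.1 gives 0.04 * 0.5 + 0.1 = 0.12 per channel.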
