Getting Started with Ceph: Installing Ceph

I. Pre-installation preparation

1.1 Installation environment

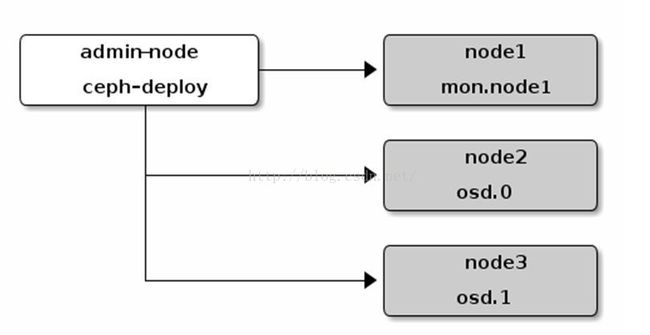

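To learn Ceph, a good starting point is one ceph-deploy admin node plus a three-node Ceph storage cluster, laid out as shown in the figure.

I installed ceph-deploy on node1 rather than on a separate machine.

Start by preparing three machines named ceph-node1, ceph-node2, and ceph-node3.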

| Hostname | IP addresses | Role | Notes |
| --- | --- | --- | --- |
| ceph-node1 | 10.89.153.214 (external), 10.0.1.101, 10.0.2.101 | ceph-deploy, Mon | 10.0.1.x is the public network; 10.0.2.x is the cluster network |
| ceph-node2 | 10.0.1.102, 10.0.2.102 | OSD | |
| ceph-node3 | 10.0.1.103, 10.0.2.103 | OSD | |

1.2 Configuring the Ceph nodes

ceph-node1:

1. Edit /etc/network/interfaces (to be improved)

cat << EOF >> /etc/network/interfaces

auto eth1

iface eth1 inet static

address 10.89.153.214

gateway 10.89.1.254

netmask 255.255.0.0

dns-nameservers 10.29.28.30

auto eth2

iface eth2 inet static

address 10.0.1.101

netmask 255.255.255.0

auto eth3

iface eth3 inet static

address 10.0.2.101

netmask 255.255.255.0

EOF

2. Change the hostname

sed -i "s/localhost/ceph-node1/g" /etc/hostname

3. Update the hosts file

cat << EOF >> /etc/hosts

10.0.1.101 ceph-node1

10.0.1.102 ceph-node2

10.0.1.103 ceph-node3

EOF
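Appending with `cat >> /etc/hosts` adds the same three lines again every time the step is rerun. The following is a minimal sketch of an idempotent alternative, assuming a small helper named `add_host_entry` (hypothetical, not part of Ceph or ceph-deploy). It works on a scratch file so it can be tried without root.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Hypothetical helper: append "IP NAME" only if NAME is not already mapped in FILE.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # Match the hostname as a whole whitespace-delimited field.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Scratch file for demonstration; for real use, point this at /etc/hosts and run as root.
hosts_file=$(mktemp)
echo "127.0.0.1 localhost" > "$hosts_file"

# Run the same additions twice: the second pass should change nothing.
for pass in 1 2; do
    add_host_entry "$hosts_file" 10.0.1.101 ceph-node1
    add_host_entry "$hosts_file" 10.0.1.102 ceph-node2
    add_host_entry "$hosts_file" 10.0.1.103 ceph-node3
done

cat "$hosts_file"
```

The same three calls can be used unchanged on every node, since all nodes need identical entries.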

ceph-node2:

1. Edit /etc/network/interfaces (to be improved)

cat << EOF >> /etc/network/interfaces

auto eth1

iface eth1 inet static

address 10.0.1.102

netmask 255.255.255.0

auto eth2

iface eth2 inet static

address 10.0.2.102

netmask 255.255.255.0

EOF

2. Change the hostname

sed -i "s/localhost/ceph-node2/g" /etc/hostname

3. Update the hosts file

cat << EOF >> /etc/hosts

10.0.1.101 ceph-node1

10.0.1.102 ceph-node2

10.0.1.103 ceph-node3

EOF

ceph-node3:

1. Edit /etc/network/interfaces (to be improved)

cat << EOF >> /etc/network/interfaces

auto eth1

iface eth1 inet static

address 10.0.1.103

netmask 255.255.255.0

auto eth2

iface eth2 inet static

address 10.0.2.103

netmask 255.255.255.0

EOF

2. Change the hostname

sed -i "s/localhost/ceph-node3/g" /etc/hostname

3. Update the hosts file

cat << EOF >> /etc/hosts

10.0.1.101 ceph-node1

10.0.1.102 ceph-node2

10.0.1.103 ceph-node3

EOF
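On ceph-node2 and ceph-node3 the interface stanzas differ only in the last octet of each address, so they can be generated instead of typed. Here is a rough sketch, assuming a helper called `iface_stanza` (a name made up for this example) that prints an ifupdown static stanza to standard output. The keyword for the mask in /etc/network/interfaces is `netmask`.

```shell
#!/bin/sh
# iface_stanza NAME ADDRESS NETMASK
# Hypothetical helper: print a static ifupdown stanza for one interface.
iface_stanza() {
    printf 'auto %s\n' "$1"
    printf 'iface %s inet static\n' "$1"
    printf '    address %s\n' "$2"
    printf '    netmask %s\n' "$3"
}

node_id=102   # 102 for ceph-node2, 103 for ceph-node3

iface_stanza eth1 "10.0.1.${node_id}" 255.255.255.0   # public network
iface_stanza eth2 "10.0.2.${node_id}" 255.255.255.0   # cluster network
```

Reviewing the printed stanzas before redirecting them into /etc/network/interfaces makes typos much easier to catch than editing the file in place.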

1.3 Installing the Ceph deployment tool

1. Add the Ceph repository to the ceph-deploy admin node, then install ceph-deploy.

- Add the release key:

wget -q -O- 'https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc' | sudo apt-key add -

- Add the Ceph package source, replacing {ceph-stable-release} with the name of a stable Ceph release (such as cuttlefish, dumpling, emperor, or firefly). For example:

echo deb http://ceph.com/debian-{ceph-stable-release}/ $(lsb_release -sc) main | sudo tee /etc/apt/sources.list.d/ceph.list

- Update your repository index and install ceph-deploy:

sudo apt-get update && sudo apt-get install ceph-deploy

Steps run on node1:

- Add the release key:

wget -q -O- 'https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc' | sudo apt-key add -

- Add the Ceph package source. Our cloud platform runs hammer, so {ceph-stable-release} becomes hammer:

echo deb http://ceph.com/debian-hammer/ $(lsb_release -sc) main | sudo tee /etc/apt/sources.list.d/ceph.list

- Update your repository index and install ceph-deploy:

sudo apt-get update && sudo apt-get install ceph-deploy
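The repository line is built from two variables: the Ceph release name and the distribution codename. The sketch below separates them so the resulting line can be checked before it is written to ceph.list. The helper name `ceph_repo_line` and the fallback codename `trusty` are assumptions made for illustration only.

```shell
#!/bin/sh
# ceph_repo_line RELEASE CODENAME
# Hypothetical helper: print the apt source line used in this walkthrough.
ceph_repo_line() {
    printf 'deb http://ceph.com/debian-%s/ %s main\n' "$1" "$2"
}

release=hammer
# lsb_release can be missing on minimal installs; "trusty" is only a placeholder fallback.
codename=$(lsb_release -sc 2>/dev/null || echo trusty)

# Print the line for review; pipe it to "sudo tee /etc/apt/sources.list.d/ceph.list" to apply it.
ceph_repo_line "$release" "$codename"
```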

1.4 Setting up the Ceph nodes

1. Install NTP

sudo apt-get install ntp

2. Install an SSH server

sudo apt-get install openssh-server

3. Create the Ceph user, grant it sudo privileges, then switch to cephuser:

sudo useradd -d /home/cephuser -m cephuser

sudo passwd cephuser

echo "cephuser ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephuser

sudo chmod 0440 /etc/sudoers.d/cephuser

ssh cephuser@{hostname}

For example:

ssh cephuser@ceph-node1

4. Configure passwordless SSH login

1) Generate an SSH key pair, but do not use sudo or the root user

ssh-keygen

2) Copy the public key to each Ceph node

ssh-copy-id cephuser@ceph-node1

ssh-copy-id cephuser@ceph-node2

ssh-copy-id cephuser@ceph-node3

3) Create /home/cephuser/.ssh/config with the following commands:

mkdir -p /home/cephuser/.ssh

touch /home/cephuser/.ssh/config

cat << EOF > /home/cephuser/.ssh/config

Host ceph-node1

Hostname ceph-node1

User cephuser

Host ceph-node2

Hostname ceph-node2

User cephuser

Host ceph-node3

Hostname ceph-node3

User cephuser

EOF
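The three Host blocks follow one pattern, so the file can also be produced by a loop. This is a sketch under the assumption that every node uses the same user name. `ssh_config_for` is a hypothetical helper, and the output goes to a scratch file rather than the real ~/.ssh/config.

```shell
#!/bin/sh
# ssh_config_for USER NODE...
# Hypothetical helper: print one Host block per node, all with the same user.
ssh_config_for() {
    user=$1
    shift
    for node in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n\n' "$node" "$node" "$user"
    done
}

# Scratch file for demonstration; for real use, write to /home/cephuser/.ssh/config.
config_file=$(mktemp)
ssh_config_for cephuser ceph-node1 ceph-node2 ceph-node3 > "$config_file"

# Keep the config private to its owner.
chmod 600 "$config_file"

cat "$config_file"
```

With this file in place, `ssh ceph-node2` logs in as cephuser without a user name on the command line, which is what lets ceph-deploy reach the nodes without extra options.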

5. Set onboot=yes in /etc/sysconfig/network-scripts/ifcfg-ethX

ceph-node1:

sudo mkdir -p /etc/sysconfig/network-scripts

sudo touch /etc/sysconfig/network-scripts/ifcfg-eth0

echo "onboot=yes" | sudo tee /etc/sysconfig/network-scripts/ifcfg-eth0

sudo touch /etc/sysconfig/network-scripts/ifcfg-eth1

echo "onboot=yes" | sudo tee /etc/sysconfig/network-scripts/ifcfg-eth1

sudo touch /etc/sysconfig/network-scripts/ifcfg-eth2

echo "onboot=yes" | sudo tee /etc/sysconfig/network-scripts/ifcfg-eth2

ceph-node2:

sudo mkdir -p /etc/sysconfig/network-scripts

sudo touch /etc/sysconfig/network-scripts/ifcfg-eth0

echo "onboot=yes" | sudo tee /etc/sysconfig/network-scripts/ifcfg-eth0

sudo touch /etc/sysconfig/network-scripts/ifcfg-eth1

echo "onboot=yes" | sudo tee /etc/sysconfig/network-scripts/ifcfg-eth1

ceph-node3:

sudo mkdir -p /etc/sysconfig/network-scripts

sudo touch /etc/sysconfig/network-scripts/ifcfg-eth0

echo "onboot=yes" | sudo tee /etc/sysconfig/network-scripts/ifcfg-eth0

sudo touch /etc/sysconfig/network-scripts/ifcfg-eth1

echo "onboot=yes" | sudo tee /etc/sysconfig/network-scripts/ifcfg-eth1

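II. Storage cluster

2.1 Create the cluster directory

On the admin node ceph-node1, create a directory to hold the configuration files and key pairs that ceph-deploy generates:

ssh cephuser@ceph-node1

mkdir my-cluster

cd my-cluster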

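2.2 Create a cluster

Run the following steps on ceph-node1.

1. Create the cluster

ceph-deploy new ceph-node1

2. Change the default replica count in the Ceph configuration file from 3 to 2, so that the cluster can reach the active + clean state with only two OSDs. Add the following line to the [global] section:

osd pool default size = 2

echo "osd pool default size = 2" | sudo tee -a ceph.conf

3. If you have more than one network interface, add the public network setting to the [global] section of the Ceph configuration file:

public network = {ip-address}/{netmask}

echo "public network = 10.0.1.0/24" | sudo tee -a ceph.conf

4. Install Ceph

ceph-deploy install ceph-node1 ceph-node2 ceph-node3 --no-adjust-repos

5. Deploy the initial monitor and gather all the keys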

ceph-deploy mon create-initial
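Steps 2 and 3 append to ceph.conf with `tee -a`. That only lands in [global] while [global] is the last section of the file, and it duplicates the line if the step is repeated. Below is a small sketch of a guarded append, assuming a helper named `set_conf_option` (hypothetical) and a scratch file standing in for the ceph.conf that `ceph-deploy new` generates. The fsid shown is a placeholder.

```shell
#!/bin/sh
# set_conf_option FILE KEY VALUE
# Hypothetical helper: append "KEY = VALUE" unless FILE already assigns KEY.
# It compares the key text literally, so "osd_pool_default_size" would not be
# recognized as the same option as "osd pool default size".
set_conf_option() {
    conf_file=$1 key=$2 value=$3
    if grep -q "^[[:space:]]*${key}[[:space:]]*=" "$conf_file"; then
        return 0
    fi
    printf '%s = %s\n' "$key" "$value" >> "$conf_file"
}

# Scratch stand-in for my-cluster/ceph.conf; the fsid is a placeholder value.
demo_conf=$(mktemp)
printf '[global]\nfsid = 00000000-0000-0000-0000-000000000000\nmon_initial_members = ceph-node1\n' > "$demo_conf"

set_conf_option "$demo_conf" "osd pool default size" 2
set_conf_option "$demo_conf" "public network" 10.0.1.0/24
set_conf_option "$demo_conf" "osd pool default size" 2   # repeated call is a no-op

cat "$demo_conf"
```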

Check

After the steps above, the following keyrings should appear in the current directory:

• {cluster-name}.client.admin.keyring

• {cluster-name}.bootstrap-osd.keyring

• {cluster-name}.bootstrap-mds.keyring
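The keyring check can be scripted so that a missing file is obvious before moving on to the OSDs. The following sketch assumes the default cluster name `ceph`. `missing_keyrings` is a hypothetical helper, and the demo directory is populated with empty files only to show the output.

```shell
#!/bin/sh
# missing_keyrings DIR CLUSTER
# Hypothetical helper: print the path of each expected keyring that is absent from DIR.
missing_keyrings() {
    dir=$1 cluster=$2
    for role in client.admin bootstrap-osd bootstrap-mds; do
        keyring="$dir/$cluster.$role.keyring"
        [ -f "$keyring" ] || echo "$keyring"
    done
}

# Demo directory with one keyring deliberately left out.
# For real use: missing_keyrings ~/my-cluster ceph
demo_dir=$(mktemp -d)
touch "$demo_dir/ceph.client.admin.keyring" "$demo_dir/ceph.bootstrap-osd.keyring"

missing_keyrings "$demo_dir" ceph
```

Empty output means all three keyrings are present.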

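2.3 Add two OSDs

To keep installation fast, this quick start uses directories rather than whole disks for the OSD daemons. For dedicating separate disks or partitions to OSDs and their journals, see ceph-deploy osd.

1. Log in to the Ceph nodes and create a directory for each OSD daemon.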

ssh ceph-node2

sudo mkdir -p /var/local/osd0

ls -l /var/local/osd0

exit

ssh ceph-node3

sudo mkdir -p /var/local/osd1

ls -l /var/local/osd1

exit

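Back on the admin node, prepare and then activate the OSDs:

ceph-deploy osd prepare ceph-node2:/var/local/osd0 ceph-node3:/var/local/osd1

ceph-deploy osd activate ceph-node2:/var/local/osd0 ceph-node3:/var/local/osd1

2. Use ceph-deploy to copy the configuration file and admin key to the admin node and the Ceph nodes, so that you do not have to specify the monitor address and ceph.client.admin.keyring every time you run a Ceph command.

ceph-deploy admin ceph-node1 ceph-node2 ceph-node3

3. Make sure the permissions on ceph.client.admin.keyring are correct.

sudo chmod +r /etc/ceph/ceph.client.admin.keyring

4. Check the cluster health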

ceph health
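Right after the OSDs are activated the cluster may need a little time to settle, so a single `ceph health` call can report a warning that clears on its own. Here is a rough polling sketch, assuming a helper named `wait_for_health` (hypothetical) that reruns a command until its output starts with HEALTH_OK. A stub replaces the real `ceph` CLI so the example runs on any machine.

```shell
#!/bin/sh
# wait_for_health TRIES COMMAND...
# Hypothetical helper: run COMMAND up to TRIES times, succeeding as soon as
# its output begins with HEALTH_OK. Waits HEALTH_POLL_INTERVAL seconds
# (default 5) between attempts.
wait_for_health() {
    tries=$1
    shift
    while [ "$tries" -gt 0 ]; do
        status=$("$@" 2>/dev/null) || status=""
        case $status in
            HEALTH_OK*) echo "$status"; return 0 ;;
        esac
        tries=$((tries - 1))
        if [ "$tries" -gt 0 ]; then
            sleep "${HEALTH_POLL_INTERVAL:-5}"
        fi
    done
    echo "last status: ${status:-unknown}" >&2
    return 1
}

# Stub standing in for the ceph CLI in this demonstration.
# On the admin node the call would be: wait_for_health 60 ceph health
fake_ceph_health() { echo "HEALTH_OK"; }

wait_for_health 3 fake_ceph_health
```

If the helper gives up, the last status it saw is printed to standard error, which is usually the first clue about what still needs fixing.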