Installing Hive on CDH 5.8.4 (Hadoop 2.6.0)

1. Install MariaDB

1.1 Install MariaDB from the yum repository

[root@host151 ~]# yum -y install mariadb mariadb-server

1.2 Start MariaDB

[root@host151 ~]# systemctl start mariadb

Enable MariaDB to start at boot:

[root@host151 ~]# systemctl enable mariadb

1.2 修改MariaDB配置文件

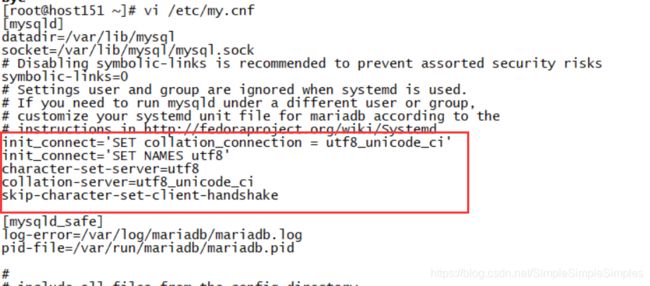

修改 /etc/my.cnf文件,在[mysqld]后面添加如下配置:

[root@host151 ~]# vi /etc/my.cnf

init_connect='SET collation_connection = utf8_unicode_ci'

init_connect='SET NAMES utf8'

character-set-server=utf8

collation-server=utf8_unicode_ci

skip-character-set-client-handshake
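The [mysqld] additions above can also be applied without an interactive editor. The following is a minimal sketch, assuming a POSIX shell with awk; the helper name add_mysqld_charset is hypothetical, and the demo works on a scratch file that only mimics /etc/my.cnf, so nothing under /etc is touched.

```shell
#!/bin/sh
# Insert the character-set settings right after the [mysqld] header.
# Usage: add_mysqld_charset INPUT_CNF OUTPUT_CNF  (hypothetical helper)
add_mysqld_charset() {
    awk '
        { print }
        /^\[mysqld\]/ {
            print "init_connect='\''SET collation_connection = utf8_unicode_ci'\''"
            print "init_connect='\''SET NAMES utf8'\''"
            print "character-set-server=utf8"
            print "collation-server=utf8_unicode_ci"
            print "skip-character-set-client-handshake"
        }
    ' "$1" > "$2"
}

# Demo on a scratch file; the contents are a stand-in, not the real my.cnf.
workdir=$(mktemp -d)
printf '[mysqld]\ndatadir=/var/lib/mysql\n\n[mysqld_safe]\nlog-error=/var/log/mariadb/mariadb.log\n' \
    > "$workdir/my.cnf"
add_mysqld_charset "$workdir/my.cnf" "$workdir/my.cnf.new"
cat "$workdir/my.cnf.new"
```

Writing to a new file first makes it easy to diff the result against the original before replacing it.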

Edit /etc/my.cnf.d/client.cnf and add the default client character set under [client]:

[root@host151 ~]# vi /etc/my.cnf.d/client.cnf

default-character-set=utf8

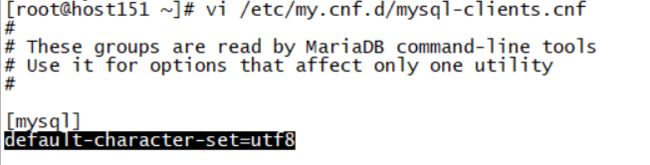

Edit /etc/my.cnf.d/mysql-clients.cnf and set the character set to utf8 under [mysql]:

[root@host151 ~]# vi /etc/my.cnf.d/mysql-clients.cnf

default-character-set=utf8

Once all of the settings are in place, restart MariaDB:

[root@host151 ~]# systemctl restart mariadb

1.4 Set the root (superuser) password

[root@host151 ~]# mysqladmin -u root password admin

1.5 Log in as root

[root@host151 ~]# mysql -uroot -padmin

1.6 Check the MySQL user accounts

mysql>use mysql;

mysql>select User,authentication_string,Host from user;

1.7 Grant root remote access in MySQL

mysql>GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'admin';

mysql>flush privileges;

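1.8 Create the Hive metastore database with the latin1 character set (with utf-8, dropping a Hive table hangs)

mysql>create database hivedb DEFAULT CHARACTER SET latin1;

If the database already exists, change its character set instead: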

mysql>alter database hivedb character set latin1;

1.9 Create the user and password for the metastore connection

mysql>create user 'hiveuser' identified by 'hiveuser';

1.10 Grant privileges to the user

mysql>grant all on hivedb.* to 'hiveuser'@'%' identified by 'hiveuser';

mysql>grant all on hivedb.* to 'hiveuser'@'localhost' identified by 'hiveuser';

mysql>grant all on hivedb.* to 'hiveuser'@'host150' identified by 'hiveuser';

mysql>flush privileges;
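Steps 1.8 to 1.10 can be collected into one SQL file so they are easy to review and replay. The sketch below only writes the file and prints how it could be applied; the temporary file location is an assumption, and the statements are copied from the steps above.

```shell
#!/bin/sh
# Collect the metastore setup statements from steps 1.8-1.10 in one file.
sql_file=$(mktemp)
cat > "$sql_file" <<'EOF'
CREATE DATABASE hivedb DEFAULT CHARACTER SET latin1;
CREATE USER 'hiveuser' IDENTIFIED BY 'hiveuser';
GRANT ALL ON hivedb.* TO 'hiveuser'@'%' IDENTIFIED BY 'hiveuser';
GRANT ALL ON hivedb.* TO 'hiveuser'@'localhost' IDENTIFIED BY 'hiveuser';
GRANT ALL ON hivedb.* TO 'hiveuser'@'host150' IDENTIFIED BY 'hiveuser';
FLUSH PRIVILEGES;
EOF

# Nothing is executed here; the script only reports how to apply the file.
if command -v mysql >/dev/null 2>&1; then
    echo "apply with: mysql -uroot -p < $sql_file"
else
    echo "mysql client not found; statements saved in $sql_file"
fi
```

The script is not idempotent: running it a second time fails at CREATE DATABASE because hivedb already exists.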

2. Install Hive

2.1 Upload, extract, and rename

[hadoop@host151 bigdata]$ tar -zxvf hive-1.1.0-cdh5.8.4.tar.gz

[hadoop@host151 bigdata]$ mv hive-1.1.0-cdh5.8.4 hive

2.2 Add the environment variables

[hadoop@host151 bigdata]$ vim /home/hadoop/.bash_profile

export HIVE_HOME=/home/hadoop/bigdata/hive

export PATH=$HIVE_HOME/bin:$PATH

Reload the profile so the changes take effect:

[hadoop@host151 bigdata]$ source /home/hadoop/.bash_profile

2.3 Configure Hive logging

Create the log directories:

[hadoop@host151 bigdata]$ mkdir -p /home/hadoop/bigdata/datas/hive/log

[hadoop@host151 bigdata]$ mkdir -p /home/hadoop/bigdata/datas/hive/execlog

[hadoop@host151 bigdata]$ mkdir -p /home/hadoop/bigdata/datas/hive/tmp

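Copy the hive-log4j.properties template and change the following setting (Hive 1.1.0 uses log4j 1.x, so the file is hive-log4j.properties and the placeholder is ${user.name}, not the log4j2 form ${sys:user.name}):

[hadoop@host151 conf]$ cp hive-log4j.properties.template hive-log4j.properties

hive.log.dir=/home/hadoop/bigdata/datas/hive/log/${user.name}

Copy the hive-exec-log4j.properties template and change the following setting:

[hadoop@host151 conf]$ cp hive-exec-log4j.properties.template hive-exec-log4j.properties

hive.log.dir=/home/hadoop/bigdata/datas/hive/execlog/${user.name}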
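The copy-and-edit step for the two log4j files can be scripted. This is a rough sketch, assuming sed is available; set_hive_log_dir is a hypothetical helper, the template content in the demo is a stand-in for the real file in $HIVE_HOME/conf, and the value uses the log4j 1.x placeholder form ${user.name}.

```shell
#!/bin/sh
# Copy a log4j template and point hive.log.dir at a new directory.
# Usage: set_hive_log_dir TEMPLATE TARGET LOG_DIR  (hypothetical helper)
set_hive_log_dir() {
    sed "s|^hive\.log\.dir=.*|hive.log.dir=$3|" "$1" > "$2"
}

# Demo on a stand-in template written to a scratch directory.
conf=$(mktemp -d)
printf '%s\n' 'hive.log.dir=${java.io.tmpdir}/${user.name}' 'hive.log.file=hive.log' \
    > "$conf/hive-log4j.properties.template"

# Single quotes keep ${user.name} literal so log4j, not the shell, expands it.
set_hive_log_dir "$conf/hive-log4j.properties.template" \
    "$conf/hive-log4j.properties" \
    '/home/hadoop/bigdata/datas/hive/log/${user.name}'

grep '^hive.log.dir=' "$conf/hive-log4j.properties"
```

The same helper would cover hive-exec-log4j.properties by passing the execlog directory as the third argument.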

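2.4 Add the configuration files

First create the HDFS directories that Hive uses for scratch data and for the warehouse:

[hadoop@host151 bigdata]$ hdfs dfs -mkdir -p /user/hive/tmp

[hadoop@host151 bigdata]$ hdfs dfs -mkdir -p /user/hive/warehouse

If necessary, you can change the directory where Hive stores its data. Hive creates /user/hive/warehouse automatically at startup, so creating it by hand can be skipped.

[hadoop@host151 bigdata]$ hdfs dfs -chmod g+w /user/hive/tmp

[hadoop@host151 bigdata]$ hdfs dfs -chmod g+w /user/hive/warehouse

Edit hive-site.xml and add the following configuration: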

[hadoop@host151 conf]$ vim hive-site.xml

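    <property>
      <name>hive.exec.scratchdir</name>
      <value>/user/hive/tmp</value>
    </property>
    <property>
      <name>hive.metastore.warehouse.dir</name>
      <value>/user/hive/warehouse</value>
    </property>
    <property>
      <name>hive.querylog.location</name>
      <value>/home/hadoop/bigdata/datas/hive/tmp/${user.name}</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionURL</name>
      <value>jdbc:mysql://host151:3306/hivedb?createDatabaseIfNotExist=true</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionDriverName</name>
      <value>com.mysql.jdbc.Driver</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionUserName</name>
      <value>hiveuser</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionPassword</name>
      <value>hiveuser</value>
    </property>
    <property>
      <name>hive.server2.thrift.port</name>
      <value>10000</value>
    </property>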

Note: replace every ${system:java.io.tmpdir} with /home/hadoop/bigdata/datas/hive/tmp/

Replace every ${system:user.name} with ${user.name}
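The two placeholder replacements in the note can be done with sed instead of by hand. The following is a sketch under stated assumptions: fix_placeholders is a hypothetical helper, the target directory is passed as an argument (the demo uses the tmp directory created in section 2.3), and the sample property is a stand-in for whatever entries your hive-site.xml actually contains.

```shell
#!/bin/sh
# Replace the ${system:...} placeholders in a hive-site.xml style file.
# Usage: fix_placeholders INPUT OUTPUT TMP_DIR  (hypothetical helper)
fix_placeholders() {
    sed -e 's|\${system:java\.io\.tmpdir}|'"$3"'|g' \
        -e 's|\${system:user\.name}|${user.name}|g' \
        "$1" > "$2"
}

# Demo on a stand-in file in a scratch directory.
work=$(mktemp -d)
cat > "$work/hive-site.xml" <<'EOF'
<property>
  <name>hive.exec.local.scratchdir</name>
  <value>${system:java.io.tmpdir}/${system:user.name}</value>
</property>
EOF

fix_placeholders "$work/hive-site.xml" "$work/hive-site.fixed.xml" \
    /home/hadoop/bigdata/datas/hive/tmp
cat "$work/hive-site.fixed.xml"
```

The directory argument must not contain the characters | or &, because both have a special meaning in the sed replacement used here.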

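Edit etc/hadoop/core-site.xml under the Hadoop installation directory to grant proxy-user permissions to the user that logs in through beeline:

    <property>
      <name>hadoop.proxyuser.hadoop.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hadoop.groups</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.HTTP.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.HTTP.groups</name>
      <value>*</value>
    </property>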

2.5 Copy the hive-env.sh template and edit it

[hadoop@host151 conf]$ cp hive-env.sh.template hive-env.sh

[hadoop@host151 conf]$ vim hive-env.sh

export JAVA_HOME=/opt/jdk1.8.0_131

export HADOOP_HOME=/home/hadoop/bigdata/hadoop

export HIVE_HOME=/home/hadoop/bigdata/hive

export HIVE_CONF_DIR=/home/hadoop/bigdata/hive/conf

2.6 Edit bin/hive-config.sh and add the environment variables

[hadoop@host151 bin]$ vim hive-config.sh

export JAVA_HOME=/opt/jdk1.8.0_131

export HIVE_HOME=/home/hadoop/bigdata/hive

export HADOOP_HOME=/home/hadoop/bigdata/hadoop

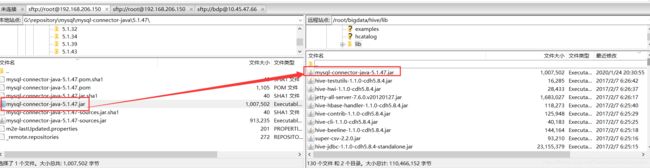
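Before starting Hive it can help to confirm that the directories named in hive-env.sh and hive-config.sh actually exist. This is a small sketch, not part of the original procedure; check_dir is a hypothetical helper and the paths are the ones used in the exports above.

```shell
#!/bin/sh
# Report whether a directory referenced by an environment setting exists.
# Usage: check_dir LABEL PATH  (hypothetical helper)
check_dir() {
    if [ -d "$2" ]; then
        echo "$1 ok: $2"
    else
        echo "$1 MISSING: $2"
        return 1
    fi
}

# Check every path exported in hive-env.sh / hive-config.sh.
status=0
check_dir JAVA_HOME     /opt/jdk1.8.0_131               || status=1
check_dir HADOOP_HOME   /home/hadoop/bigdata/hadoop     || status=1
check_dir HIVE_HOME     /home/hadoop/bigdata/hive       || status=1
check_dir HIVE_CONF_DIR /home/hadoop/bigdata/hive/conf  || status=1
echo "overall status: $status"
```

An overall status of 1 means at least one path is wrong and should be corrected before Hive is started.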

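2.7 Upload the MySQL JDBC driver into Hive's lib directory so Hive can connect to the metastore

Run the SQL statement select version(); to check the database version and pick a matching driver.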
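Copying the driver into place could look like the sketch below. The helper install_jdbc_driver and the JAR file name are hypothetical; the demo uses an empty placeholder file in a scratch directory, whereas on the real host the second argument would be /home/hadoop/bigdata/hive and the first the connector JAR you uploaded.

```shell
#!/bin/sh
# Copy a JDBC driver JAR into Hive's lib directory.
# Usage: install_jdbc_driver JAR HIVE_HOME  (hypothetical helper)
install_jdbc_driver() {
    [ -f "$1" ] || { echo "driver JAR not found: $1" >&2; return 1; }
    [ -d "$2/lib" ] || { echo "no lib directory under: $2" >&2; return 1; }
    cp "$1" "$2/lib/"
}

# Demo with stand-in paths in a scratch directory.
demo=$(mktemp -d)
mkdir -p "$demo/hive/lib"
: > "$demo/mysql-connector-java.jar"   # empty placeholder, not a real driver
install_jdbc_driver "$demo/mysql-connector-java.jar" "$demo/hive"
ls "$demo/hive/lib"
```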

2.8 Test Hive

[hadoop@host151 bin]$ hive

which: no hbase in (/root/bigdata/hive/bin:/root/bigdata/hadoop/bin:/root/bigdata/hadoop/sbin:/root/mysoftwareInstall/jdk1.8/jdk1.8.0_131/bin:/root/bigdata/hadoop/bin:/root/bigdata/hadoop/sbin:/root/mysoftwareInstall/jdk1.8/jdk1.8.0_131/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin)

Logging initialized using configuration in jar:file:/root/bigdata/hive/lib/hive-common-1.1.0-cdh5.8.4.jar!/hive-log4j.properties

WARNING: Hive CLI is deprecated and migration to Beeline is recommended.

hive> use smart_test;

OK

Time taken: 1.711 seconds

hive>

2.9 Test the beeline connection

Start hiveserver2 so that beeline and other clients can connect to Hive:

[hadoop@host151 bigdata]$ nohup hive --service hiveserver2 > /dev/null 2>&1 &

Check that hiveserver2 has started:

[hadoop@host151 bigdata]$ sudo netstat -nptl | grep 10000

[sudo] password for hadoop:

tcp 0 0 0.0.0.0:10000 0.0.0.0:* LISTEN 78583/java
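The netstat check above can be turned into a yes/no test for use in scripts. This is a sketch, assuming netstat output in the column layout shown above; is_listening is a hypothetical helper, and the demo feeds it the sample line from this section rather than live output.

```shell
#!/bin/sh
# Succeed when `netstat -nptl` style output on stdin shows PORT in LISTEN state.
# Usage: netstat -nptl | is_listening PORT  (hypothetical helper)
is_listening() {
    awk -v port="$1" '
        # Column 4 is the local address, column 6 the socket state.
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit(found ? 0 : 1) }
    '
}

# Demo with the sample line from the output above.
sample='tcp 0 0 0.0.0.0:10000 0.0.0.0:* LISTEN 78583/java'
if printf '%s\n' "$sample" | is_listening 10000; then
    echo "hiveserver2 port is listening"
else
    echo "hiveserver2 port is not listening yet"
fi
```

Matching on the whole port field avoids the false positives that a plain grep 10000 can produce, for example on a process ID that happens to contain the same digits.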

Connect with beeline. On the command line, run:

[hadoop@host151 bigdata]$ beeline

At the beeline prompt, enter the connect command in the form !connect jdbc:hive2://ip:port username password

beeline>!connect jdbc:hive2://host151:10000 hadoop
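For a quick non-interactive check, beeline can also take the URL, user name, and a statement on the command line through its -u, -n, and -e options. The sketch below assumes the same host, port, and user as above; the show databases; statement is only an example query.

```shell
#!/bin/sh
# One-shot beeline check: connect, run a statement, exit.
HS2_URL="jdbc:hive2://host151:10000"
HS2_USER="hadoop"

if command -v beeline >/dev/null 2>&1; then
    beeline -u "$HS2_URL" -n "$HS2_USER" -e 'show databases;'
else
    # Fall back to printing the command when beeline is not installed here.
    echo "beeline is not on PATH; would run:"
    echo "beeline -u $HS2_URL -n $HS2_USER -e 'show databases;'"
fi
```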