Docker Security: Resource Limits with cgroups

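LXC: Linux cgroups + namespaces

Control groups and namespaces together provide independent environments and resource isolation.

docker run creates a separate set of namespaces for each container.

The isolation Docker provides is not as thorough as that of a virtual machine.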

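What is cgroup

Cgroups, short for control groups, is a mechanism provided by the Linux kernel to limit, account for, and isolate the physical resources (CPU, memory, I/O, and so on) used by process groups. It was first proposed by engineers at Google and later merged into the Linux kernel. Cgroups are also the resource-management mechanism that LXC relies on to implement virtualization; it is fair to say that without cgroups there would be no LXC.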

What cgroups can do

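- Resource limiting: cap the amount of resources a process group may use. For example, the memory subsystem can set an upper bound on a process group's memory; once the group has reached that bound and requests more memory, the OOM (out of memory) killer is triggered.

- Prioritization: control the priority of process groups. For example, the cpu subsystem can assign a particular CPU share to a process group.

- Accounting: record the amount of resources a process group uses. For example, the cpuacct subsystem records the CPU time consumed by a process group.

- Isolation: isolate process groups from one another. For example, the ns subsystem (available in older kernels) lets different process groups use different namespaces, so that each group has its own process, network, and filesystem-mount spaces.

- Control: manage process groups as a unit. For example, the freezer subsystem can suspend and resume a process group.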

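Four cgroup concepts to know

Subsystems: a subsystem is a resource controller. For example, the cpu subsystem is the controller that governs how CPU time is allocated.

Hierarchies: control groups are organized in a hierarchy, which can also be thought of as an inheritance tree.

Control Groups: a set of processes grouped by some criterion. A process can be moved from one control group to another, and the processes in a control group are subject to that group's resource limits.

Tasks: in cgroups, a task is simply a process on the system.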
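
To make the relationship between tasks, subsystems, and control groups concrete, here is a minimal Python sketch that parses the text of /proc/<pid>/cgroup on a cgroup v1 host, where each line has the form hierarchy-ID:subsystems:path. The function name parse_proc_cgroup and the sample text are illustrative assumptions, not taken from the walkthrough.

```python
def parse_proc_cgroup(text):
    """Map each subsystem name to the cgroup path the process belongs to."""
    membership = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Each line looks like "hierarchy-id:subsystem-list:cgroup-path".
        _hierarchy_id, subsystems, path = line.split(":", 2)
        for name in subsystems.split(","):
            if name:
                membership[name] = path
    return membership


# Hypothetical sample, shaped like /proc/<pid>/cgroup on a cgroup v1 system.
sample = "4:cpuacct,cpu:/x1\n3:memory:/\n1:name=systemd:/user.slice\n"
print(parse_proc_cgroup(sample))
```

On a real host the input would come from reading /proc/<pid>/cgroup for the process of interest.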

The experiments below assume Docker is already installed; the host system is RHEL 7.3.

Controlling CPU usage

[root@su1 ~]# systemctl start docker

[root@su1 ~]# mount -t cgroup ## check that cgroups are enabled; every subsystem should be listed as mounted ("on")

cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,xattr,release_agent=/usr/lib/systemd/systemd-cgroups-agent,name=systemd)

cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,freezer)

cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,cpuacct,cpu)

cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,perf_event)

cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,cpuset)

cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,hugetlb)

cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,memory)

cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,net_prio,net_cls)

cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,devices)

cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,blkio)

cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,seclabel,pids)
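
The same check can be scripted. The sketch below parses text in the shape of the mount -t cgroup output above and derives the subsystem names from the last component of each mount point. The function name cgroup_mounts is an assumption made for illustration.

```python
def cgroup_mounts(mount_output):
    """Return {mount_point: [subsystem, ...]} for cgroup v1 mounts."""
    mounts = {}
    for line in mount_output.splitlines():
        parts = line.split()
        # Expected shape: cgroup on <mount_point> type cgroup (<options>)
        if (len(parts) >= 6 and parts[0] == "cgroup"
                and parts[1] == "on" and parts[3] == "type"):
            mount_point = parts[2]
            # The directory name lists the co-mounted subsystems, e.g. "cpu,cpuacct".
            mounts[mount_point] = mount_point.rsplit("/", 1)[-1].split(",")
    return mounts


sample_output = (
    "cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,cpuacct,cpu)\n"
    "cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,memory)\n"
)
for mount_point, subsystems in sorted(cgroup_mounts(sample_output).items()):
    print(mount_point, subsystems)
```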

Directory hierarchy of the cgroup subsystems

[root@su1 ~]# cd /sys/fs/cgroup

[root@su1 cgroup]# ls

blkio cpu,cpuacct freezer net_cls perf_event

cpu cpuset hugetlb net_cls,net_prio pids

cpuacct devices memory net_prio systemd

Create a CPU control group

[root@su1 cgroup]# cd cpu

[root@su1 cpu]# ls

cgroup.clone_children cpuacct.usage cpu.rt_runtime_us release_agent

cgroup.event_control cpuacct.usage_percpu cpu.shares system.slice

cgroup.procs cpu.cfs_period_us cpu.stat tasks

cgroup.sane_behavior cpu.cfs_quota_us docker user.slice

cpuacct.stat cpu.rt_period_us notify_on_release

[root@su1 cpu]# mkdir x1

Enter the new directory: the kernel has populated it automatically with the same files as the cpu directory. These are pseudo-files.

[root@su1 cpu]# cd x1

[root@su1 x1]# ls

cgroup.clone_children cpuacct.usage cpu.rt_period_us notify_on_release

cgroup.event_control cpuacct.usage_percpu cpu.rt_runtime_us tasks

cgroup.procs cpu.cfs_period_us cpu.shares

cpuacct.stat cpu.cfs_quota_us cpu.stat

Test limiting CPU usage

[root@su1 x1]# cat cpu.cfs_period_us ## length of the CPU allocation period, in microseconds

100000

[root@su1 x1]# cat cpu.cfs_quota_us

-1 ## -1 means no limit

[root@su1 x1]# echo 20000 > cpu.cfs_quota_us

[root@su1 x1]# cat cpu.cfs_quota_us

20000 ## 20000 us out of every 100000 us period, i.e. 20% of one CPU
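
The 20% figure is simply the quota divided by the period. A minimal sketch of that arithmetic, with the helper name cpu_limit_fraction chosen here for illustration:

```python
def cpu_limit_fraction(quota_us, period_us):
    """Return the CPU cap as a fraction of one CPU, or None when unlimited."""
    if quota_us < 0:
        # cpu.cfs_quota_us holds -1 when no limit is set.
        return None
    return quota_us / period_us


print(cpu_limit_fraction(20000, 100000))  # values written in the session above
print(cpu_limit_fraction(-1, 100000))     # default, unlimited
```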

[root@su1 x1]# dd if=/dev/zero of=/dev/null &

[1] 2777

top shows dd using 99.9% of the CPU

[root@su1 x1]# top

top - 21:43:25 up 1:49, 1 user, load average: 0.22, 0.06, 0.06

Tasks: 108 total, 2 running, 106 sleeping, 0 stopped, 0 zombie

%Cpu(s): 38.7 us, 61.3 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 1015564 total, 459664 free, 203144 used, 352756 buff/cache

KiB Swap: 2097148 total, 2097148 free, 0 used. 635364 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

2777 root 20 0 107992 608 516 R 99.9 0.1 0:21.28 dd

2778 root 20 0 161840 2236 1592 R 0.3 0.2 0:00.03 top

Apply the CPU limit by adding the process to the group

[root@su1 x1]# echo 2777 > tasks

[root@su1 x1]# top

top - 21:47:22 up 1:53, 1 user, load average: 1.24, 0.65, 0.30

Tasks: 108 total, 2 running, 106 sleeping, 0 stopped, 0 zombie

%Cpu(s): 6.8 us, 11.6 sy, 0.0 ni, 81.6 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 1015564 total, 459448 free, 203356 used, 352760 buff/cache

KiB Swap: 2097148 total, 2097148 free, 0 used. 635148 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

2777 root 20 0 107992 608 516 R 20.3 0.1 4:14.16 dd
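
Writing a PID into the tasks file is all it takes to move a process into the group. Below is a hedged Python equivalent of echo 2777 > tasks. The helper name add_task is an assumption, and the demo writes to a scratch directory so it can run without root; on a real host the directory would be something like /sys/fs/cgroup/cpu/x1.

```python
import os
import tempfile


def add_task(cgroup_dir, pid):
    """Move a process into a cgroup by writing its PID to the tasks file."""
    with open(os.path.join(cgroup_dir, "tasks"), "w") as tasks_file:
        tasks_file.write("{}\n".format(pid))


# Dry run against a scratch directory (not a real cgroup filesystem).
scratch = tempfile.mkdtemp()
add_task(scratch, 2777)
with open(os.path.join(scratch, "tasks")) as tasks_file:
    print(tasks_file.read().strip())
```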

5e80ed8bffa8a1a85d8f6af3ce09ba3e46565dc5d4b8225d063a914cf2010555

84dc63ea61d595ac8863018a6133bd6178122f22ee2cbcee7c78cf2e29f378ee

90a979cdd1e326175923f450f7966731ba1fd9bd718bb6fd5a50667878e9ca24

cgroup.clone_children

cgroup.event_control

cgroup.procs

cpuacct.stat

cpuacct.usage

cpuacct.usage_percpu

cpu.cfs_period_us

cpu.cfs_quota_us

cpu.rt_period_us

cpu.rt_runtime_us

cpu.shares

cpu.stat

edc582852d08a74371c36b223476b9d9fdb031381b705700887d6e7fcc6e2677

notify_on_release

tasks

Create a Docker container with a CPU limit

[root@su1 x1]# docker run --help | grep cpu

--cpu-period int Limit CPU CFS (Completely Fair

--cpu-quota int Limit CPU CFS (Completely Fair

--cpu-rt-period int Limit CPU real-time period in

--cpu-rt-runtime int Limit CPU real-time runtime in

-c, --cpu-shares int CPU shares (relative weight)

--cpus decimal Number of CPUs

--cpuset-cpus string CPUs in which to allow execution

--cpuset-mems string MEMs in which to allow execution

[root@su1 docker]# docker run -it --name vm1 --cpu-period 100000 --cpu-quota 20000 ubuntu

root@5e80ed8bffa8:/# dd if=/dev/zero of=/dev/null

[root@su1 docker]# cd 5e80ed8bffa8a1a85d8f6af3ce09ba3e46565dc5d4b8225d063a914cf2010555

[root@su1 5e80ed8bffa8a1a85d8f6af3ce09ba3e46565dc5d4b8225d063a914cf2010555]# ls

cgroup.clone_children cpuacct.usage cpu.rt_period_us notify_on_release

cgroup.event_control cpuacct.usage_percpu cpu.rt_runtime_us tasks

cgroup.procs cpu.cfs_period_us cpu.shares

cpuacct.stat cpu.cfs_quota_us cpu.stat

[root@su1 5e80ed8bffa8a1a85d8f6af3ce09ba3e46565dc5d4b8225d063a914cf2010555]# top

top - 21:56:38 up 2:02, 2 users, load average: 0.02, 0.25, 0.25

Tasks: 114 total, 3 running, 111 sleeping, 0 stopped, 0 zombie

%Cpu(s): 16.0 us, 24.3 sy, 0.0 ni, 59.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 1015564 total, 423572 free, 233684 used, 358308 buff/cache

KiB Swap: 2097148 total, 2097148 free, 0 used. 603480 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

2777 root 20 0 107992 608 516 R 20.3 0.1 6:05.44 dd
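
Going the other way, from a desired CPU fraction to docker run flags, can be sketched as follows. The --cpu-period and --cpu-quota flags are the ones listed in the help output above; the helper name docker_cpu_args and the default period are assumptions for illustration.

```python
def docker_cpu_args(fraction, period_us=100000):
    """Build the CFS flags that cap a container at `fraction` of one CPU."""
    quota_us = int(round(fraction * period_us))
    return ["--cpu-period", str(period_us), "--cpu-quota", str(quota_us)]


command = ["docker", "run", "-it", "--name", "vm1"] + docker_cpu_args(0.2) + ["ubuntu"]
print(" ".join(command))
```

The list form can be handed to subprocess.run directly, which avoids shell quoting issues.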

Without a limit, the result looks like this

[root@su1 docker]# docker run -it --name vm2 ubuntu

root@94d5d362f0d6:/# dd if=/dev/zero of=/dev/null

[root@su1 5e80ed8bffa8a1a85d8f6af3ce09ba3e46565dc5d4b8225d063a914cf2010555]# top

top - 21:59:13 up 2:04, 2 users, load average: 0.78, 0.40, 0.30

Tasks: 114 total, 3 running, 111 sleeping, 0 stopped, 0 zombie

%Cpu(s): 40.0 us, 60.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 1015564 total, 422828 free, 234212 used, 358524 buff/cache

KiB Swap: 2097148 total, 2097148 free, 0 used. 602860 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

2987 root 20 0 4560 392 328 R 80.0 0.0 1:01.91 dd

Limiting memory

[root@su1 ~]# cd /sys/fs/cgroup/memory/

[root@su1 memory]# ls

cgroup.clone_children memory.memsw.failcnt

cgroup.event_control memory.memsw.limit_in_bytes

cgroup.procs memory.memsw.max_usage_in_bytes

cgroup.sane_behavior memory.memsw.usage_in_bytes

docker memory.move_charge_at_immigrate

memory.failcnt memory.numa_stat

memory.force_empty memory.oom_control

memory.kmem.failcnt memory.pressure_level

memory.kmem.limit_in_bytes memory.soft_limit_in_bytes

memory.kmem.max_usage_in_bytes memory.stat

memory.kmem.slabinfo memory.swappiness

memory.kmem.tcp.failcnt memory.usage_in_bytes

memory.kmem.tcp.limit_in_bytes memory.use_hierarchy

memory.kmem.tcp.max_usage_in_bytes notify_on_release

memory.kmem.tcp.usage_in_bytes release_agent

memory.kmem.usage_in_bytes system.slice

memory.limit_in_bytes tasks

memory.max_usage_in_bytes user.slice

[root@su1 memory]# mkdir x2

[root@su1 memory]# cd x2

[root@su1 x2]# ls

cgroup.clone_children memory.memsw.failcnt

cgroup.event_control memory.memsw.limit_in_bytes

cgroup.procs memory.memsw.max_usage_in_bytes

memory.failcnt memory.memsw.usage_in_bytes

memory.force_empty memory.move_charge_at_immigrate

memory.kmem.failcnt memory.numa_stat

memory.kmem.limit_in_bytes memory.oom_control

memory.kmem.max_usage_in_bytes memory.pressure_level

memory.kmem.slabinfo memory.soft_limit_in_bytes

memory.kmem.tcp.failcnt memory.stat

memory.kmem.tcp.limit_in_bytes memory.swappiness

memory.kmem.tcp.max_usage_in_bytes memory.usage_in_bytes

memory.kmem.tcp.usage_in_bytes memory.use_hierarchy

memory.kmem.usage_in_bytes notify_on_release

memory.limit_in_bytes tasks

memory.max_usage_in_bytes

[root@su1 ~]# python

Python 2.7.5 (default, Feb 20 2018, 09:19:12)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-28)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> 256 * 1024 * 1024 ## convert 256 MB to bytes

268435456

[root@su1 x2]# cat memory.limit_in_bytes

9223372036854771712

[root@su1 x2]# cat memory.memsw.limit_in_bytes

9223372036854771712

[root@su1 x2]# echo 268435456 > memory.limit_in_bytes

[root@su1 x2]# cat memory.limit_in_bytes

268435456
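
The byte conversion can be wrapped in a small helper. The sketch below accepts sizes such as "256M" and also checks one observation: the default value 9223372036854771712 shown above matches the largest signed 64-bit integer rounded down to a multiple of 4096, assuming 4 KiB pages. The names UNITS and to_bytes are illustrative.

```python
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def to_bytes(size):
    """Convert a size such as '256M' (binary units) to a number of bytes."""
    size = size.strip().upper()
    if size and size[-1] in UNITS:
        return int(size[:-1]) * UNITS[size[-1]]
    return int(size)


print(to_bytes("256M"))

# Assumption: 4 KiB pages. The "no limit" default is then 2**63 - 1 rounded
# down to a whole number of pages.
PAGE_SIZE = 4096
print((2 ** 63 - 1) // PAGE_SIZE * PAGE_SIZE)
```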

Stress-testing tools

[root@su1 x2]# yum install -y libcgroup-tools-0.41-15.el7.x86_64

[root@su1 x2]# cd /dev/shm ## files in this directory are written directly to memory

[root@su1 shm]# ls

[root@su1 shm]# free -m

total used free shared buff/cache available

Mem: 991 208 324 7 459 607

Swap: 2047 0 2047

[root@su1 shm]# dd if=/dev/zero of=bigfile bs=1M count=100

100+0 records in

100+0 records out

104857600 bytes (105 MB) copied, 0.0396777 s, 2.6 GB/s

[root@su1 shm]# free -m

total used free shared buff/cache available

Mem: 991 208 223 107 559 507

Swap: 2047 0 2047

[root@su1 shm]# dd if=/dev/zero of=bigfile bs=1M count=300 ## without any limit

300+0 records in

300+0 records out

314572800 bytes (315 MB) copied, 0.203411 s, 1.5 GB/s

[root@su1 shm]# cgexec -g memory:x2 dd if=/dev/zero of=bigfile bs=1M count=100

100+0 records in

100+0 records out

104857600 bytes (105 MB) copied, 0.035836 s, 2.9 GB/s

[root@su1 shm]# cgexec -g memory:x2 dd if=/dev/zero of=bigfile bs=1M count=300

300+0 records in

300+0 records out

314572800 bytes (315 MB) copied, 0.214675 s, 1.5 GB/s

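Limit memory plus swap as well. With only memory.limit_in_bytes set, the 300 MB write above still succeeds because the excess can be pushed out to swap; memory.memsw.limit_in_bytes caps memory and swap together.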

[root@su1 ~]# cd /sys/fs/cgroup/memory/x2/

[root@su1 x2]# echo 268435456 >memory.memsw.limit_in_bytes

-bash: echo: write error: Device or resource busy

[root@su1 shm]# ls

bigfile

[root@su1 shm]# rm -fr bigfile

[root@su1 x2]# echo 268435456 >memory.memsw.limit_in_bytes

[root@su1 shm]# cgexec -g memory:x2 dd if=/dev/zero of=bigfile bs=1M count=300

Killed

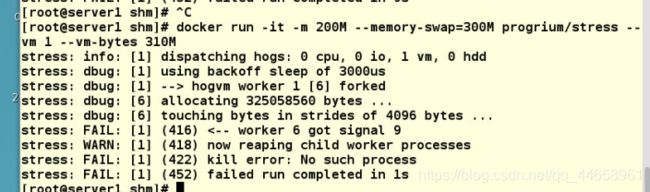
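
The three outcomes seen in this section (fits in memory, spills into swap, killed) can be summarised with a deliberately simplified model. It ignores memory already charged to the group and kernel overhead, so treat it as a sketch of the reasoning rather than of the kernel's actual accounting. The function name write_outcome is an assumption.

```python
MB = 1024 * 1024
UNLIMITED = 9223372036854771712  # default value read from the limit files above


def write_outcome(request_bytes, mem_limit, memsw_limit):
    """Simplified model of what happens when a group writes to tmpfs."""
    if request_bytes <= mem_limit:
        return "fits in memory"
    if request_bytes <= memsw_limit:
        return "spills into swap"
    return "OOM kill"


print(write_outcome(100 * MB, 256 * MB, UNLIMITED))
print(write_outcome(300 * MB, 256 * MB, UNLIMITED))
print(write_outcome(300 * MB, 256 * MB, 256 * MB))
```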

Memory control in Docker

[root@su1 ~]# docker run --help | grep memory

--kernel-memory bytes Kernel memory limit

-m, --memory bytes Memory limit

--memory-reservation bytes Memory soft limit

--memory-swap bytes Swap limit equal to memory plus swap:

--memory-swappiness int Tune container memory swappiness (0 to

[root@su1 ~]# docker run -it --memory 256M --memory-swap=256M ubuntu

root@ac0a9a612910:/#

[root@su1 memory]# cd docker/

[root@su1 docker]# ls

84dc63ea61d595ac8863018a6133bd6178122f22ee2cbcee7c78cf2e29f378ee

90a979cdd1e326175923f450f7966731ba1fd9bd718bb6fd5a50667878e9ca24

ac0a9a6129106cdaec596bdf926f419984994d8898fa53322a22b1710078de38

cgroup.clone_children

cgroup.event_control

cgroup.procs

edc582852d08a74371c36b223476b9d9fdb031381b705700887d6e7fcc6e2677

memory.failcnt

memory.force_empty

memory.kmem.failcnt

memory.kmem.limit_in_bytes

memory.kmem.max_usage_in_bytes

memory.kmem.slabinfo

memory.kmem.tcp.failcnt

memory.kmem.tcp.limit_in_bytes

memory.kmem.tcp.max_usage_in_bytes

memory.kmem.tcp.usage_in_bytes

memory.kmem.usage_in_bytes

memory.limit_in_bytes

memory.max_usage_in_bytes

memory.memsw.failcnt

memory.memsw.limit_in_bytes

memory.memsw.max_usage_in_bytes

memory.memsw.usage_in_bytes

memory.move_charge_at_immigrate

memory.numa_stat

memory.oom_control

memory.pressure_level

memory.soft_limit_in_bytes

memory.stat

memory.swappiness

memory.usage_in_bytes

memory.use_hierarchy

notify_on_release

tasks

[root@su1 docker ]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ac0a9a612910 ubuntu "/bin/bash" About a minute ago Up About a minute stoic_meitner

edc582852d08 httpd "httpd-foreground" 2 hours ago Up 2 hours 0.0.0.0:32772->80/tcp musing_herschel

84dc63ea61d5 httpd "httpd-foreground" 2 hours ago Up 2 hours 0.0.0.0:32771->80/tcp web2

90a979cdd1e3 httpd "httpd-foreground" 2 hours ago Up 2 hours 0.0.0.0:32770->80/tcp web1

[root@su1 docker]# cd ac0a9a6129106cdaec596bdf926f419984994d8898fa53322a22b1710078de38

[root@su1 ac0a9a6129106cdaec596bdf926f419984994d8898fa53322a22b1710078de38]# cat memory.limit_in_bytes

268435456

[root@su1 ac0a9a6129106cdaec596bdf926f419984994d8898fa53322a22b1710078de38]# cat memory.memsw.limit_in_bytes

268435456
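
Locating a container's limit files by hand means matching the short ID from docker ps against the long directory names. A small sketch of that lookup, assuming the /sys/fs/cgroup/<subsystem>/docker/<container-id>/ layout seen in this walkthrough; the helper names are hypothetical.

```python
import os


def find_container_dir(dir_names, short_id):
    """Return the full container ID whose name starts with the short ID."""
    matches = [name for name in dir_names if name.startswith(short_id)]
    return matches[0] if len(matches) == 1 else None


def container_cgroup_file(subsystem, container_id, filename, root="/sys/fs/cgroup"):
    """Build the path of a cgroup control file for a Docker container."""
    return os.path.join(root, subsystem, "docker", container_id, filename)


listing = [
    "84dc63ea61d595ac8863018a6133bd6178122f22ee2cbcee7c78cf2e29f378ee",
    "ac0a9a6129106cdaec596bdf926f419984994d8898fa53322a22b1710078de38",
    "cgroup.procs",
]
full_id = find_container_dir(listing, "ac0a9a612910")
print(container_cgroup_file("memory", full_id, "memory.limit_in_bytes"))
```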

This gives a test container that can be used for further experiments.

Limiting the memory a specific user can use

[root@su1 x3]# vi /etc/resolv.conf

[root@su1 x3]# cd /etc/sysconfig/network-scripts/

[root@su1 network-scripts]# vi ifcfg-eth0

[root@su1 network-scripts]# cd

[root@su1 ~]# vi /etc/cgrules.conf

[root@su1 ~]# systemctl start cgred

[root@su1 ~]# useradd dd

[root@su1 ~]# su - dd

[dd@su1 ~]$ cd /dev/shm

[dd@su1 shm]$ dd if=/dev/zero of=dd bs=1M count=200

200+0 records in

200+0 records out

209715200 bytes (210 MB) copied, 0.483075 s, 434 MB/s

[dd@su1 shm]$ dd if=/dev/zero of=dd bs=1M count=400

dd: error writing ‘dd’: No space left on device

241+0 records in

240+0 records out

252686336 bytes (253 MB) copied, 0.46849 s, 539 MB/s
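
The session above edits /etc/cgrules.conf without showing its contents. As a hedged illustration only: cgred rules take the form user, controllers, destination group. The rule printed below, which sends user dd to the memory group x2, is an assumption and not necessarily the rule used here, and the helper name cgrules_line is likewise hypothetical.

```python
def cgrules_line(user, controllers, destination):
    """Format one /etc/cgrules.conf rule: <user> <controllers> <destination>."""
    return "{}\t{}\t{}".format(user, ",".join(controllers), destination)


# Hypothetical rule: place every process of user "dd" into memory group x2.
print(cgrules_line("dd", ["memory"], "x2/"))
```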

Limiting disk write speed

Partition and format the disk

[root@su1 ~]# mkfs.xfs /dev/vda

meta-data=/dev/vda isize=512 agcount=4, agsize=1310720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5242880, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@su1 ~]# mount /dev/vda /mnt/

[root@su1 ~]# cd /sys/fs/cgroup/blkio/

[root@su1 blkio]# ls

blkio.io_merged blkio.throttle.read_bps_device

blkio.io_merged_recursive blkio.throttle.read_iops_device

blkio.io_queued blkio.throttle.write_bps_device

blkio.io_queued_recursive blkio.throttle.write_iops_device

blkio.io_service_bytes blkio.time

blkio.io_service_bytes_recursive blkio.time_recursive

blkio.io_serviced blkio.weight

blkio.io_serviced_recursive blkio.weight_device

blkio.io_service_time cgroup.clone_children

blkio.io_service_time_recursive cgroup.event_control

blkio.io_wait_time cgroup.procs

blkio.io_wait_time_recursive cgroup.sane_behavior

blkio.leaf_weight docker

blkio.leaf_weight_device notify_on_release

blkio.reset_stats release_agent

blkio.sectors system.slice

blkio.sectors_recursive tasks

blkio.throttle.io_service_bytes user.slice

blkio.throttle.io_serviced

[root@su1 blkio]# mkdir x3

[root@su1 blkio]# cd x3/

[root@su1 x3]# ll /dev/vda ## look up the device's major and minor numbers

brw-rw----. 1 root disk 252, 0 11月 10 00:08 /dev/vda

[root@su1 x3]# ls

blkio.io_merged blkio.sectors_recursive

blkio.io_merged_recursive blkio.throttle.io_service_bytes

blkio.io_queued blkio.throttle.io_serviced

blkio.io_queued_recursive blkio.throttle.read_bps_device

blkio.io_service_bytes blkio.throttle.read_iops_device

blkio.io_service_bytes_recursive blkio.throttle.write_bps_device

blkio.io_serviced blkio.throttle.write_iops_device

blkio.io_serviced_recursive blkio.time

blkio.io_service_time blkio.time_recursive

blkio.io_service_time_recursive blkio.weight

blkio.io_wait_time blkio.weight_device

blkio.io_wait_time_recursive cgroup.clone_children

blkio.leaf_weight cgroup.event_control

blkio.leaf_weight_device cgroup.procs

blkio.reset_stats notify_on_release

blkio.sectors tasks

[root@su1 x3]# python

Python 2.7.5 (default, Feb 20 2018, 09:19:12)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-28)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> 1024 * 1024

1048576

>>>

[root@su1 x3]# echo "252:0 1048576" > blkio.throttle.write_bps_device

[root@su1 x3]# cat blkio.throttle.write_bps_device

252:0 1048576
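
The rule written to blkio.throttle.write_bps_device is "major:minor bytes_per_second". The sketch below builds that string and shows how the device numbers could be read with os.stat instead of ll. The helper names are assumptions, and device_numbers is defined but not called here because /dev/vda may not exist on the reader's machine.

```python
import os


def device_numbers(path):
    """Return (major, minor) for a block or character device node."""
    rdev = os.stat(path).st_rdev
    return os.major(rdev), os.minor(rdev)


def throttle_rule(major, minor, bytes_per_second):
    """Format a line for blkio.throttle.write_bps_device."""
    return "{}:{} {}".format(major, minor, bytes_per_second)


# 252:0 is /dev/vda in the session above; 1024 * 1024 bytes/s is 1 MB/s.
print(throttle_rule(252, 0, 1024 * 1024))
```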

[root@su1 x3]# cgexec -g blkio:x3 dd if=/dev/zero of=/mnt/testfile bs=1M count=10

10+0 records in

10+0 records out

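10485760 bytes (10 MB) copied, 0.00510456 s, 2.1 GB/s ## written to the page cache, so the limit does not apply

[root@su1 x3]# cgexec -g blkio:x3 dd if=/dev/zero of=/mnt/testfile bs=1M count=10 oflag=direct ## the blkio throttle currently only takes effect for direct I/O (not for writes that go through the filesystem cache); oflag=direct bypasses the page cache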

10+0 records in

10+0 records out

10485760 bytes (10 MB) copied, 10.0021 s, 1.0 MB/s ## the write rate is now throttled
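
The measured time is what the throttle predicts: total bytes divided by the permitted bytes per second. A one-function sketch, with the name expected_seconds chosen for illustration:

```python
def expected_seconds(total_bytes, bytes_per_second):
    """Minimum time a throttled transfer should take."""
    return total_bytes / bytes_per_second


# 10 MiB written at a 1 MiB/s cap; the session above measured 10.0021 s.
print(expected_seconds(10 * 1024 * 1024, 1024 * 1024))
```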

Controlling disk write speed in Docker

[root@docker blkio]# docker run --help |grep device

--blkio-weight-device list Block IO weight (relative device

--device list Add a host device to the container

--device-cgroup-rule list Add a rule to the cgroup allowed

devices list

--device-read-bps list Limit read rate (bytes per second)

from a device (default [])

--device-read-iops list Limit read rate (IO per second)

from a device (default [])

--device-write-bps list Limit write rate (bytes per

second) to a device (default [])

--device-write-iops list Limit write rate (IO per second)

to a device (default [])

[root@su1 x3]# docker run -it --name vm3 --device-write-bps /dev/vda:1MB ubuntu

root@21c508ac3bc2:/# dd if=/dev/zero of=westos bs=1M count=10 oflag=direct

10+0 records in

10+0 records out

10485760 bytes (10 MB) copied, 10.0021 s, 1.0 MB/s

[root@su1 blkio]# cd docker/

[root@su1 docker]# ls

21c508ac3bc245a798d62f55618d50f321484d9bad5345b284c312b15efa5842

84dc63ea61d595ac8863018a6133bd6178122f22ee2cbcee7c78cf2e29f378ee

90a979cdd1e326175923f450f7966731ba1fd9bd718bb6fd5a50667878e9ca24

blkio.io_merged

blkio.io_merged_recursive

blkio.io_queued

blkio.io_queued_recursive

blkio.io_service_bytes

blkio.io_service_bytes_recursive

blkio.io_serviced

blkio.io_serviced_recursive

blkio.io_service_time

blkio.io_service_time_recursive

blkio.io_wait_time

blkio.io_wait_time_recursive

blkio.leaf_weight

blkio.leaf_weight_device

blkio.reset_stats

blkio.sectors

blkio.sectors_recursive

blkio.throttle.io_service_bytes

blkio.throttle.io_serviced

blkio.throttle.read_bps_device

blkio.throttle.read_iops_device

blkio.throttle.write_bps_device

blkio.throttle.write_iops_device

blkio.time

blkio.time_recursive

blkio.weight

blkio.weight_device

cgroup.clone_children

cgroup.event_control

cgroup.procs

edc582852d08a74371c36b223476b9d9fdb031381b705700887d6e7fcc6e2677

notify_on_release

tasks

[root@su1 docker]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

21c508ac3bc2 ubuntu "/bin/bash" About a minute ago Up About a minute vm3

edc582852d08 httpd "httpd-foreground" 4 hours ago Up 4 hours 0.0.0.0:32772->80/tcp musing_herschel

84dc63ea61d5 httpd "httpd-foreground" 4 hours ago Up 4 hours 0.0.0.0:32771->80/tcp web2

90a979cdd1e3 httpd "httpd-foreground" 4 hours ago Up 4 hours 0.0.0.0:32770->80/tcp web1

[root@su1 docker]# cd 21c508ac3bc245a798d62f55618d50f321484d9bad5345b284c312b15efa5842

[root@su1 21c508ac3bc245a798d62f55618d50f321484d9bad5345b284c312b15efa5842]# ls

blkio.io_merged blkio.sectors_recursive

blkio.io_merged_recursive blkio.throttle.io_service_bytes

blkio.io_queued blkio.throttle.io_serviced

blkio.io_queued_recursive blkio.throttle.read_bps_device

blkio.io_service_bytes blkio.throttle.read_iops_device

blkio.io_service_bytes_recursive blkio.throttle.write_bps_device

blkio.io_serviced blkio.throttle.write_iops_device

blkio.io_serviced_recursive blkio.time

blkio.io_service_time blkio.time_recursive

blkio.io_service_time_recursive blkio.weight

blkio.io_wait_time blkio.weight_device

blkio.io_wait_time_recursive cgroup.clone_children

blkio.leaf_weight cgroup.event_control

blkio.leaf_weight_device cgroup.procs

blkio.reset_stats notify_on_release

blkio.sectors tasks

[root@su1 21c508ac3bc245a798d62f55618d50f321484d9bad5345b284c312b15efa5842]# cat blkio.throttle.write_bps_device

252:0 1048576
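
To confirm programmatically that Docker's --device-write-bps /dev/vda:1MB ended up as the same rule that was written by hand earlier, the throttle file can be parsed back into numbers. A minimal sketch, with the function name parse_throttle assumed for illustration:

```python
def parse_throttle(text):
    """Parse blkio.throttle.*_device content into {'major:minor': limit}."""
    limits = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 2:
            limits[fields[0]] = int(fields[1])
    return limits


# Content as shown by the cat command above.
limits = parse_throttle("252:0 1048576\n")
print(limits)
print(limits["252:0"] == 1024 * 1024)
```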