Phoenix JDBC Demo in a Kerberos Environment

1. Environment

ambari 2.4.3 + hdp 2.5.3 + hbase 1.1.2 + phoenix 4.7 + kerberos + centos 6.9

2. Code

package ycb.service;

import java.io.IOException;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.ResultSetMetaData;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.ArrayList;

import org.apache.hadoop.security.UserGroupInformation;

import org.apache.log4j.Logger;

import ycb.utils.FileUtils;

/**
 * @author YeChunBo
 * @time 2018-03-28
 *
 * A simple Phoenix JDBC example (with Kerberos authentication enabled).
 */

public class PhoenixDemo {

private static Logger log = Logger.getLogger(PhoenixDemo.class);

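/**
 * Obtain a Phoenix connection after authenticating with Kerberos.
 */
public Connection getConn() {
// Configure Hadoop security and authenticate via Kerberos
org.apache.hadoop.conf.Configuration conf = new org.apache.hadoop.conf.Configuration();
conf.set("hadoop.security.authentication", "Kerberos");
// On Linux the JVM reads /etc/krb5.conf by default; on Windows it falls back to C:/Windows/krb5.ini when nothing is specified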

if (System.getProperty("os.name").toLowerCase().startsWith("win")) {

System.setProperty("java.security.krb5.conf", "C:/Windows/krbconf/pro/krb5.ini");

}

UserGroupInformation.setConfiguration(conf);

Connection conn = null;

try {

UserGroupInformation.loginUserFromKeytab("test/bigdata9.bigdata.com.cn@bmsoft.com.cn", "./conf/test.keytab");

Class.forName("org.apache.phoenix.jdbc.PhoenixDriver");

String url = "jdbc:phoenix:bigdata04,bigdata05,bigdata06:2181"; // ZooKeeper server address(es); one or more hosts may be listed

conn = DriverManager.getConnection(url);

} catch (ClassNotFoundException e) {

log.error(e.getMessage());

e.printStackTrace();

} catch (SQLException e1) {

log.error(e1.getMessage());

e1.printStackTrace();

} catch (IOException e2) {

log.error(e2.getMessage());

e2.printStackTrace();

}

return conn;

}

/**
 * Use Phoenix to create a table and an index, upsert rows, and query them.
 */

public void phoenixDemo() {

Connection conn = getConn();

ResultSet rs = null;

Statement stmt = null;

try {

stmt = conn.createStatement();

stmt.execute("drop table if exists test");

stmt.execute("create table test (mykey integer not null primary key, mycolumn varchar)");

stmt.execute("create index test_idx on test(mycolumn)");

for (int i = 0; i < 100; i++) {

stmt.executeUpdate("upsert into test values ("+ i +",'The num is "+ i +"')");

}

conn.commit();

PreparedStatement statement = conn.prepareStatement("select mykey from test where mycolumn='The num is 88'");

rs = statement.executeQuery();

while (rs.next()) {

log.info("-------------The num is ---------------" + rs.getInt(1));

}

} catch (SQLException e) {

log.error(e.getMessage());

e.printStackTrace();

} finally {

closeRes(conn,stmt,rs);

}

}

/**
 * Export HBase data to a CSV file through Phoenix.
 *
 * @param querySql the Phoenix SQL query to run
 * @param fileName full path of the output CSV file
 */

public void hbaseData2CSV(String querySql, String fileName) {

Connection conn = null;

ResultSet rs = null;

Statement statement = null;

try {

conn = getConn();

statement = conn.createStatement();

int count = 0;

ArrayList<String> dataList = new ArrayList<String>();

StringBuffer dataLineBuf = new StringBuffer();

rs = statement.executeQuery(querySql);

ResultSetMetaData m = rs.getMetaData();

int columns = m.getColumnCount();

while (rs.next()) {

dataLineBuf.setLength(0);

count = count + 1;

for (int i = 1; i <= columns; i++) {

dataLineBuf.append(rs.getString(i)).append(",");

}

dataList.add(dataLineBuf.toString());

}

// Write the query results to the CSV file

FileUtils.arrayList2CSVFile(dataList, fileName);

} catch (Exception e) {

log.error(e.getMessage());

e.printStackTrace();

} finally {

closeRes(conn,statement,rs);

}

}

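/**
 * Release resources.
 *
 * @param conn connection to close
 * @param statement statement to close
 * @param rs result set to close
 */
public void closeRes(Connection conn, Statement statement, ResultSet rs) {
// Close in reverse order of creation: result set, then statement, then connection
try {
if (rs != null) {
rs.close();
}
if (statement != null) {
statement.close();
}
if (conn != null) {
conn.close();
}
} catch (Exception e) {
e.printStackTrace();
}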

}

public static void main(String[] args) {

PhoenixDemo phoenixDemo = new PhoenixDemo();

phoenixDemo.phoenixDemo();

System.out.println("----------------------------------------------");

phoenixDemo.hbaseData2CSV("select * from test limit 100", "/tmp/phoenixTest/test.csv");

}

}
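getConn() above authenticates through UserGroupInformation before opening the connection. Phoenix's documented JDBC URL format also accepts an optional ZooKeeper znode parent, principal, and keytab after the port, which lets the driver perform the Kerberos login itself. The following is only a sketch of how such a URL could be assembled. The class and method names are hypothetical, and the znode parent /hbase-secure is an assumption (check zookeeper.znode.parent in your hbase-site.xml); this variant was not part of the original test.

```java
import java.util.Arrays;
import java.util.List;

final class PhoenixUrlSketch {

    /**
     * Builds jdbc:phoenix:<quorum>:<port>:<znode parent>[:<principal>:<keytab>].
     * The principal and keytab are appended only when both are given.
     */
    static String buildUrl(List<String> zkHosts, int zkPort, String znodeParent,
                           String principal, String keytabPath) {
        StringBuilder url = new StringBuilder("jdbc:phoenix:");
        url.append(String.join(",", zkHosts));
        url.append(':').append(zkPort);
        url.append(':').append(znodeParent);
        if (principal != null && keytabPath != null) {
            url.append(':').append(principal).append(':').append(keytabPath);
        }
        return url.toString();
    }

    public static void main(String[] args) {
        // Hosts, principal, and keytab are the ones used in getConn();
        // "/hbase-secure" is an assumed znode parent, not taken from the article.
        String url = buildUrl(
                Arrays.asList("bigdata04", "bigdata05", "bigdata06"),
                2181,
                "/hbase-secure",
                "test/bigdata9.bigdata.com.cn@bmsoft.com.cn",
                "./conf/test.keytab");
        System.out.println(url);
    }
}
```

The resulting string would be passed to DriverManager.getConnection(url) in place of the URL built in getConn().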

Running PhoenixDemo produces the following output:

[INFO ] 2018-03-29 18:07:24,360 method:ycb.service.PhoenixDemo.phoenixDemo(PhoenixDemo.java:85)

-------------The num is ---------------88

[root@bigdata07 phoenixTest]# more test.csv

0,The num is 0,

1,The num is 1,

10,The num is 10,

11,The num is 11,

12,The num is 12,

3. Multi-threaded reads from HBase and a throughput test

The test code is as follows:

package ycb.thread;

import ycb.service.PhoenixDemo;

/**
 * @author YeChunBo
 * @time 2018-03-28
 *
 * Reads HBase data through Phoenix from multiple threads and writes it to CSV files.
 */

public class PhoenixThread implements Runnable {

public String threadName;

PhoenixDemo phoenixDemo = new PhoenixDemo();

public PhoenixThread(String name, PhoenixDemo phoenixDemo) {

threadName = name;

this.phoenixDemo = phoenixDemo;

}

public void run() {

phoenixDemo.hbaseData2CSV("SELECT * FROM \"t_hbase1\" limit 1000000",

"/tmp/phoenixTest/t_hbase1_" + threadName + ".csv");

}

public static void main(String[] args) {

new Thread(new PhoenixThread("ThreadA", new PhoenixDemo())).start();

new Thread(new PhoenixThread("ThreadB", new PhoenixDemo())).start();

new Thread(new PhoenixThread("ThreadC", new PhoenixDemo())).start();

}

}
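PhoenixThread starts three threads but neither waits for them nor records how long they take, so the timing has to be read off the file timestamps. As a sketch of one way to measure it directly, the following runs the tasks on a fixed thread pool and reports the elapsed wall-clock time. TimedExportSketch and runAndTime are hypothetical names, and the println tasks are stand-ins for the hbaseData2CSV calls so that the block runs without a cluster.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class TimedExportSketch {

    /** Runs all tasks concurrently, waits for every one, and returns the elapsed milliseconds. */
    static long runAndTime(List<Runnable> tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, tasks.size()));
        long start = System.nanoTime();
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (Runnable task : tasks) {
                futures.add(pool.submit(task));
            }
            for (Future<?> future : futures) {
                future.get(); // blocks until the task finishes; surfaces task failures
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for tasks", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a task failed", e.getCause());
        } finally {
            pool.shutdown();
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        List<Runnable> tasks = new ArrayList<>();
        for (String name : new String[] {"ThreadA", "ThreadB", "ThreadC"}) {
            // Stand-in for: new PhoenixThread(name, new PhoenixDemo())
            tasks.add(() -> System.out.println("exporting for " + name));
        }
        System.out.println("elapsed ms: " + runAndTime(tasks));
    }
}
```

Because PhoenixThread implements Runnable, instances of it could be added to the task list unchanged.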

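The results of running PhoenixThread are shown below; reading 1 million rows from HBase and writing them to CSV took less than 10 seconds in total: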

-rw-r--r--. 1 root root 1896494 Mar 29 17:49 t_hbase1_ThreadA.csv

-rw-r--r--. 1 root root 1896494 Mar 29 17:49 t_hbase1_ThreadB.csv

-rw-r--r--. 1 root root 1896494 Mar 29 17:49 t_hbase1_ThreadC.csv

[root@bigdata20 phoenixTest]# more t_hbase1_ThreadA.csv

1,1,696083元,http://blog.csdn.net/s20082043/article/details/8534092,hello world avz7qgu77wog3r6c5qw8426b4ape432523974591we9t5u314356hzy1kxj7x8g39a2l9tl7734mbxn3oa2192kaq9381,

10,10,391968元,http://blog.csdn.net/s20082043/article/details/3138741,hello world avz7qgu77wog3r6c5qw8426b4ape432523974591we9t5u314356hzy1kxj7x8g39a2l9tl7734mbxn3oa2192kaq93810,

100,100,528644元,http://blog.csdn.net/s20082043/article/details/3381080,hello world avz7qgu77wog3r6c5qw8426b4ape432523974591we9t5u314356hzy1kxj7x8g39a2l9tl7734mbxn3oa2192kaq938100,

1000,1000,328084元,http://blog.csdn.net/s20082043/article/details/7711796,hello world avz7qgu77wog3r6c5qw8426b4ape432523974591we9t5u314356hzy1kxj7x8g39a2l9tl7734mbxn3oa2192kaq9381000,

10000,10000,582678元,http://blog.csdn.net/s20082043/article/details/7523690,hello world avz7qgu77wog3r6c5qw8426b4ape432523974591we9t5u314356hzy1kxj7x8g39a2l9tl7734mbxn3oa2192kaq93810000,

100000,100000,201643元,http://blog.csdn.net/s20082043/article/details/6580911,hello world avz7qgu77wog3r6c5qw8426b4ape432523974591we9t5u314356hzy1kxj7x8g39a2l9tl7734mbxn3oa2192kaq938100000,

1000000,1000000,null,null,null,
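The exported rows above end with a trailing comma and show SQL NULL as the literal text null, because hbaseData2CSV appends each rs.getString(i) followed by a comma. A value that itself contains a comma would also shift the columns. Here is a minimal sketch of a line builder that avoids these issues, assuming RFC 4180 style quoting is acceptable to whatever consumes the file. CsvRowSketch and its methods are hypothetical and not part of the original code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class CsvRowSketch {

    /** Quotes a field only when it contains a comma, a double quote, or a line break. */
    static String escape(String value) {
        if (value == null) {
            return ""; // design choice: SQL NULL becomes an empty field instead of "null"
        }
        boolean needsQuotes = value.contains(",") || value.contains("\"")
                || value.contains("\n") || value.contains("\r");
        return needsQuotes ? "\"" + value.replace("\"", "\"\"") + "\"" : value;
    }

    /** Joins the escaped fields with commas, without a trailing separator. */
    static String toCsvLine(List<String> fields) {
        List<String> escaped = new ArrayList<>();
        for (String field : fields) {
            escaped.add(escape(field));
        }
        return String.join(",", escaped);
    }

    public static void main(String[] args) {
        System.out.println(toCsvLine(Arrays.asList("88", "The num is 88")));
        System.out.println(toCsvLine(Arrays.asList("1", "a,b", null)));
    }
}
```

In hbaseData2CSV, the inner loop could collect rs.getString(i) into a list and pass it to toCsvLine instead of appending to the StringBuffer.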

Appendix: the file-writing code (FileUtils) is as follows:

package ycb.utils;

import java.io.BufferedWriter;

import java.io.File;

import java.io.FileOutputStream;

import java.io.OutputStreamWriter;

import java.util.ArrayList;

import java.util.Iterator;

import org.apache.log4j.Logger;

public class FileUtils {

private static Logger log = Logger.getLogger(FileUtils.class);

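/**
 * Write the contents of an ArrayList to a file.
 *
 * @param dataList the lines to write
 * @param fileName output file name (full path)
 * @return true if the file was written successfully, false otherwise
 */
public static boolean arrayList2CSVFile(ArrayList<String> dataList, String fileName) {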

boolean flag = true;

File file = new File(fileName);

OutputStreamWriter out = null;

BufferedWriter bfw = null;

try {

out = new OutputStreamWriter(new FileOutputStream(file),"GBK");

bfw = new BufferedWriter(out);

Iterator<String> it = dataList.iterator();
String strLine = null;
int count = 0;
while (it.hasNext()) {
strLine = it.next();
bfw.write(strLine);
bfw.newLine();
count = count + 1;
if (count % 1000 == 0) {
log.info("Thread " + Thread.currentThread().getName() + " wrote " + count + " lines");
bfw.flush();
}
}
bfw.flush();
} catch (Exception e) {
System.out.println("Exception while writing data to file: " + e.getMessage());
flag = false;
e.printStackTrace();
} finally {
// Close the writer so the file handle is released even on failure
if (bfw != null) {
try {
bfw.close();
} catch (Exception e) {
e.printStackTrace();
}
}
}

return flag;

}

}
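arrayList2CSVFile above hard-codes the GBK charset, and a GBK file viewed on a UTF-8 terminal shows garbled non-ASCII characters. As an alternative sketch, the following takes the charset as a parameter and uses try-with-resources so that the writer is always closed. LineWriterSketch and writeLines are hypothetical names, and the temporary file in main is only there to make the example runnable.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.List;

final class LineWriterSketch {

    /** Writes one line per element using the given charset and returns the number of lines written. */
    static int writeLines(List<String> lines, Path target, Charset charset) {
        // try-with-resources flushes and closes the writer on both success and failure
        try (BufferedWriter writer = Files.newBufferedWriter(target, charset)) {
            int count = 0;
            for (String line : lines) {
                writer.write(line);
                writer.newLine();
                count++;
            }
            return count;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        Path target = Files.createTempFile("phoenix-export", ".csv");
        // \u5143 is the yuan character that appears in the exported data
        int written = writeLines(Arrays.asList("1,1,696083\u5143", "10,10,391968\u5143"),
                target, StandardCharsets.UTF_8);
        System.out.println(written + " lines written to " + target);
    }
}
```

Passing Charset.forName("GBK") instead keeps the original encoding for consumers that expect it.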

Testing showed that only the hbase-site.xml configuration file and the Phoenix client jar matching the HDP version are required.

5. Pitfalls encountered

- 1. Creating a secondary index fails with the following error

[INFO ] 2018-03-29 17:56:27,617 method:org.apache.hadoop.hbase.client.HBaseAdmin$CreateTableFuture.postOperationResult(HBaseAdmin.java:779)

Created TEST

[ERROR] 2018-03-29 17:56:27,642 method:ycb.service.PhoenixDemo.phoenixDemo(PhoenixDemo.java:88)

ERROR 1029 (42Y88): Mutable secondary indexes must have the hbase.regionserver.wal.codec property set to org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec in the hbase-sites.xml of every region server. tableName=TEST_IDX

java.sql.SQLException: ERROR 1029 (42Y88): Mutable secondary indexes must have the hbase.regionserver.wal.codec property set to org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec in the hbase-sites.xml of every region server. tableName=TEST_IDX

at org.apache.phoenix.exception.SQLExceptionCode$Factory$1.newException(SQLExceptionCode.java:441)

at org.apache.phoenix.exception.SQLExceptionInfo.buildException(SQLExceptionInfo.java:145)

at org.apache.phoenix.schema.MetaDataClient.createIndex(MetaDataClient.java:1195)

at org.apache.phoenix.compile.CreateIndexCompiler$1.execute(CreateIndexCompiler.java:85)

at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:343)

at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:331)

at org.apache.phoenix.call.CallRunner.run(CallRunner.java:53)

at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:329)

at org.apache.phoenix.jdbc.PhoenixStatement.execute(PhoenixStatement.java:1440)

at ycb.service.PhoenixDemo.phoenixDemo(PhoenixDemo.java:77)

at ycb.service.PhoenixDemo.main(PhoenixDemo.java:162)

As the error log indicates, set the corresponding HBase property:

change hbase.regionserver.wal.codec from

org.apache.hadoop.hbase.regionserver.wal.WALCellCodec

to org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec

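The configuration screen is shown below:

After changing this property, HBase must be restarted, and the Phoenix command-line client must also be restarted for the change to take effect.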
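Since the fix depends on a single property value, it can help to confirm what a given copy of hbase-site.xml actually contains before restarting anything. The following is a rough sketch, using only the Java standard library, that parses the file and reads hbase.regionserver.wal.codec. HBaseSiteCheckSketch and readProperty are hypothetical helpers, and the XML in main is a made-up sample rather than the cluster's real configuration.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

final class HBaseSiteCheckSketch {
    static final String WAL_CODEC_KEY = "hbase.regionserver.wal.codec";
    static final String INDEXED_CODEC =
            "org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec";

    /** Returns the trimmed value of the named property, or null if it is not present. */
    static String readProperty(InputStream hbaseSiteXml, String name) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder().parse(hbaseSiteXml);
            NodeList properties = doc.getElementsByTagName("property");
            for (int i = 0; i < properties.getLength(); i++) {
                Element property = (Element) properties.item(i);
                if (name.equals(childText(property, "name"))) {
                    return childText(property, "value");
                }
            }
            return null;
        } catch (Exception e) {
            throw new IllegalStateException("cannot parse hbase-site.xml", e);
        }
    }

    private static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) {
        // Made-up sample; in practice open the real file with Files.newInputStream(...)
        String sample = "<configuration><property><name>" + WAL_CODEC_KEY + "</name><value>"
                + INDEXED_CODEC + "</value></property></configuration>";
        String value = readProperty(
                new ByteArrayInputStream(sample.getBytes(StandardCharsets.UTF_8)), WAL_CODEC_KEY);
        System.out.println(WAL_CODEC_KEY + " = " + value);
        System.out.println("indexed codec configured: " + INDEXED_CODEC.equals(value));
    }
}
```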

- 2. Insufficient permissions for the operation

[ERROR] 2018-03-29 18:03:30,178 method:ycb.service.PhoenixDemo.phoenixDemo(PhoenixDemo.java:88)

org.apache.hadoop.hbase.security.AccessDeniedException: Insufficient permissions for user 'keke_user/bigdata04.bigdata.com.cn@BIGDATA.ORG.CN' (action=create)

at org.apache.ranger.authorization.hbase.AuthorizationSession.publishResults(AuthorizationSession.java:261)

at org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor.authorizeAccess(RangerAuthorizationCoprocessor.java:595)

at org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor.requirePermission(RangerAuthorizationCoprocessor.java:664)

at org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor.preCreateTable(RangerAuthorizationCoprocessor.java:769)

at org.apache.ranger.authorization.hbase.RangerAuthorizationCoprocessor.preCreateTable(RangerAuthorizationCoprocessor.java:496)

at org.apache.hadoop.hbase.master.MasterCoprocessorHost$11.call(MasterCoprocessorHost.java:222)

at org.apache.hadoop.hbase.master.MasterCoprocessorHost.execOperation(MasterCoprocessorHost.java:1146)

at org.apache.hadoop.hbase.master.MasterCoprocessorHost.preCreateTable(MasterCoprocessorHost.java:218)

at org.apache.hadoop.hbase.master.HMaster.createTable(HMaster.java:1603)

at org.apache.hadoop.hbase.master.MasterRpcServices.createTable(MasterRpcServices.java:462)

at org.apache.hadoop.hbase.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java:57204)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2127)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:107)

at org.apache.hadoop.hbase.ipc.RpcExecutor.consumerLoop(RpcExecutor.java:133)

at org.apache.hadoop.hbase.ipc.RpcExecutor$1.run(RpcExecutor.java:108)

at java.lang.Thread.run(Thread.java:745)

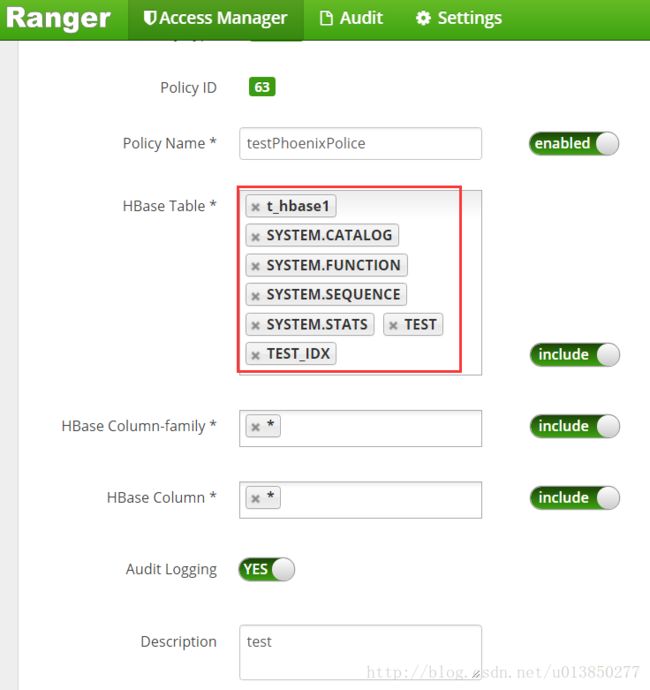

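org.apache.phoenix.exception.PhoenixIOException: org.apache.hadoop.hbase.security.AccessDeniedException: Insufficient permissions for user 'keke_user/bigdata04.bigdata.com.cn@BIGDATA.ORG.CN' (action=create)

As the log indicates, granting the Kerberos user the required permission resolves this. I manage user permissions through Ranger; the policy screen is shown below:

Here TEST is the table to be created and TEST_IDX is the name of the index to be created.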

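- 3. To access HBase data remotely from Eclipse, the HBase environment must first allow direct remote access from the machine running Eclipse.

The conditions for remote access to HBase are as follows:

1. The ports of all HBase master and regionserver nodes must be reachable from outside; the defaults are 16000 and 16020.

2. Because the HBase client locates HBase data through ZooKeeper, the client port of every ZooKeeper node must also be open; the default is 2181.

3. The Windows hosts file (C:\Windows\System32\drivers\etc\hosts) must also be configured, that is, the IP addresses and host names of the cluster nodes must be added to it.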
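The port requirements in the list above can be checked from the client machine before running the JDBC code. Below is a minimal sketch, assuming the default ports named above (2181, 16000, 16020). PortCheckSketch and isReachable are hypothetical names, and the host names are passed as program arguments.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class PortCheckSketch {

    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts, and unresolvable host names
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults from the list above: ZooKeeper 2181, HBase master 16000, regionserver 16020
        int[] ports = {2181, 16000, 16020};
        String[] hosts = args.length > 0 ? args : new String[] {"localhost"};
        for (String host : hosts) {
            for (int port : ports) {
                System.out.println(host + ":" + port + " reachable="
                        + isReachable(host, port, 2000));
            }
        }
    }
}
```

A host that does not run a given role (for example, a regionserver-only node on port 16000) is expected to report false for that port, so the output has to be read against the cluster layout.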

I tripped myself up on the third step.

The Windows hosts file was configured as follows:

10.189.75.4 bigdata04 bigdata04.bigdata.com.cn

10.189.75.5 bigdata05 bigdata05.bigdata.com.cn

10.189.75.6 bigdata06 bigdata06.bigdata.com.cn

10.189.75.7 bigdata07 bigdata07.bigdata.com.cn

10.189.75.8 bigdata08 bigdata08.bigdata.com.cn

10.189.75.9 bigdata09 bigdata09.bigdata.com.cn

10.189.75.41 bigdata41 bigdata41.bigdata.com.cn

Running the code from Eclipse then produced the following errors:

[INFO ] 2018-06-08 16:02:53,064 method:org.apache.phoenix.shaded.org.apache.zookeeper.ZooKeeper.<init>(ZooKeeper.java:438)

Initiating client connection, connectString=bigdata04:2181,bigdata05:2181,bigdata06:2181 sessionTimeout=180000 watcher=org.apache.hadoop.hbase.zookeeper.PendingWatcher@78ffe6dc

[INFO ] 2018-06-08 16:02:53,088 method:org.apache.phoenix.shaded.org.apache.zookeeper.ClientCnxn$SendThread.logStartConnect(ClientCnxn.java:1019)

Opening socket connection to server bigdata06/10.189.75.6:2181. Will not attempt to authenticate using SASL (unknown error)

[INFO ] 2018-06-08 16:02:53,092 method:org.apache.phoenix.shaded.org.apache.zookeeper.ClientCnxn$SendThread.primeConnection(ClientCnxn.java:864)

Socket connection established to bigdata06/10.189.75.6:2181, initiating session

[INFO ] 2018-06-08 16:02:53,102 method:org.apache.phoenix.shaded.org.apache.zookeeper.ClientCnxn$SendThread.onConnected(ClientCnxn.java:1279)

Session establishment complete on server bigdata06/10.189.75.6:2181, sessionid = 0x362f5ba9b76402c, negotiated timeout = 40000

[WARN ] 2018-06-08 16:02:53,685 method:org.apache.hadoop.hdfs.shortcircuit.DomainSocketFactory.<init>(DomainSocketFactory.java:117)

The short-circuit local reads feature cannot be used because UNIX Domain sockets are not available on Windows.

[INFO ] 2018-06-08 16:02:53,728 method:org.apache.phoenix.metrics.Metrics.initialize(Metrics.java:44)

Initializing metrics system: phoenix

[WARN ] 2018-06-08 16:02:53,781 method:org.apache.hadoop.metrics2.impl.MetricsConfig.loadFirst(MetricsConfig.java:125)

Cannot locate configuration: tried hadoop-metrics2-phoenix.properties,hadoop-metrics2.properties

[INFO ] 2018-06-08 16:02:53,832 method:org.apache.hadoop.metrics2.impl.MetricsSystemImpl.startTimer(MetricsSystemImpl.java:376)

Scheduled snapshot period at 10 second(s).

[INFO ] 2018-06-08 16:02:53,833 method:org.apache.hadoop.metrics2.impl.MetricsSystemImpl.start(MetricsSystemImpl.java:192)

phoenix metrics system started

[WARN ] 2018-06-08 16:03:12,467 method:org.apache.hadoop.hbase.ipc.RpcClientImpl$Connection$1.run(RpcClientImpl.java:664)

Couldn't setup connection for keke_user/bigdata04.bigdata.com.cn@BIGDATA.ORG.CN to hbase/bigdata41@BIGDATA.ORG.CN

[WARN ] 2018-06-08 16:03:30,162 method:org.apache.hadoop.hbase.ipc.RpcClientImpl$Connection$1.run(RpcClientImpl.java:664)

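Couldn't setup connection for keke_user/bigdata04.bigdata.com.cn@BIGDATA.ORG.CN to hbase/bigdata41@BIGDATA.ORG.CN

The error above (Couldn't setup connection for keke_user/bigdata04.bigdata.com.cn@BIGDATA.ORG.CN to hbase/bigdata41@BIGDATA.ORG.CN) shows that the client connects using the first name listed after the IP address in the hosts file, whereas the machines were registered in Kerberos under the second name (the fully qualified one). The hosts file therefore needs to be changed as follows: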

10.189.75.4 bigdata04.bigdata.com.cn bigdata04

10.189.75.5 bigdata05.bigdata.com.cn bigdata05

10.189.75.6 bigdata06.bigdata.com.cn bigdata06

10.189.75.7 bigdata07.bigdata.com.cn bigdata07

10.189.75.8 bigdata08.bigdata.com.cn bigdata08

10.189.75.9 bigdata09.bigdata.com.cn bigdata09

10.189.75.41 bigdata41.bigdata.com.cn bigdata41
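To catch this kind of mistake earlier, the hosts file can be scanned for entries whose first name is not fully qualified. The following sketch performs only that string check. HostsOrderSketch and its methods are hypothetical, and treating any first name without a dot (other than localhost) as suspicious is a simplifying assumption.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class HostsOrderSketch {

    /** Returns the first host name on a hosts-file line, or null for blank or comment-only lines. */
    static String firstName(String hostsLine) {
        String line = hostsLine;
        int hash = line.indexOf('#');
        if (hash >= 0) {
            line = line.substring(0, hash); // drop trailing comments
        }
        String[] tokens = line.trim().split("\\s+");
        return tokens.length >= 2 ? tokens[1] : null; // tokens[0] is the IP address
    }

    /** Collects the lines whose first name is a short name rather than a fully qualified one. */
    static List<String> shortNameFirst(List<String> hostsLines) {
        List<String> suspicious = new ArrayList<>();
        for (String line : hostsLines) {
            String first = firstName(line);
            if (first != null && !first.contains(".") && !first.equals("localhost")) {
                suspicious.add(line);
            }
        }
        return suspicious;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "10.189.75.4 bigdata04 bigdata04.bigdata.com.cn",   // order that caused the error
                "10.189.75.5 bigdata05.bigdata.com.cn bigdata05");  // corrected order
        for (String line : shortNameFirst(lines)) {
            System.out.println("short name listed first: " + line);
        }
    }
}
```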