Kafka Installation

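1: Introduction to Kafka:

Kafka has been called a next-generation distributed messaging system. It is an open-source project of the Apache Software Foundation (ASF), a non-profit organization that also hosts open-source software such as HTTP Server, Hadoop, ActiveMQ, and Tomcat. Comparable messaging systems include RabbitMQ, ActiveMQ, and ZeroMQ. Kafka's main advantages are that it is distributed by design and that, combined with ZooKeeper, it can be scaled out dynamically.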

http://www.infoq.com/cn/articles/apache-kafka

Installation environment:

Three servers:

IP addresses: 192.168.56.11 192.168.56.12 192.168.56.13

Configure the hosts file on each of the three servers:

cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.56.11 linux-host1.exmaple.com

192.168.56.12 linux-host2.exmaple.com

192.168.56.13 linux-host3.exmaple.com

1.1: Download, install, and verify ZooKeeper:

1.1.1: Kafka download URL:

http://kafka.apache.org/downloads.html

1.1.2: ZooKeeper download URL:

http://zookeeper.apache.org/releases.html

1.1.3: Install ZooKeeper:

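ZooKeeper ensemble behavior: the ensemble as a whole stays available as long as more than half of its servers are working normally. With a 2-server ensemble, the failure of either server makes the ensemble unavailable, because the one remaining server is not more than half of the ensemble. With 3 servers, losing one leaves two, which is more than half of 3, so the ensemble keeps running; losing a second leaves only one, and the ensemble becomes unavailable. With 4 servers, losing one is fine, but losing two leaves two, which is not more than half of 4. So a 3-server ensemble and a 4-server ensemble both become unavailable once two servers are lost. The same holds for 5 and 6 servers, for 7 and 8, and so on, which is why ensembles are usually built with an odd number of servers.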
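The majority rule can be checked with a little arithmetic. The following is a minimal sketch in plain shell; the function names quorum and tolerated are made up for this illustration and are not part of ZooKeeper. It prints, for ensembles of 1 to 8 servers, how many servers must be alive and how many failures can be survived.

```shell
# Smallest number of live servers that is "more than half" of n: floor(n/2) + 1.
quorum() { echo $(( $1 / 2 + 1 )); }

# Number of servers that may fail while a majority is still alive.
tolerated() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5 6 7 8; do
  printf 'servers=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(quorum "$n")" "$(tolerated "$n")"
done
```

Each even-sized ensemble tolerates exactly as many failures as the odd-sized ensemble one server smaller, so the extra server adds cost without adding fault tolerance.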

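Upload the downloaded installation files to the /usr/local/src directory on each server, then perform the following steps on each.

1.1.3.1: Server1 configuration: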

[root@linux-host1 ~]# cd /usr/local/src/

[root@linux-host1 src]# yum install jdk-8u151-linux-x64.rpm -y

[root@linux-host1 src]# tar xvf zookeeper-3.4.11.tar.gz

[root@linux-host1 src]# ln -sv /usr/local/src/zookeeper-3.4.11 /usr/local/zookeeper

[root@linux-host1 src]# mkdir /usr/local/zookeeper/data

[root@linux-host1 src]# cp /usr/local/zookeeper/conf/zoo_sample.cfg /usr/local/zookeeper/conf/zoo.cfg

[root@linux-host1 src]# vim /usr/local/zookeeper/conf/zoo.cfg

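[root@linux-host1 src]# grep "^[a-zA-Z]" /usr/local/zookeeper/conf/zoo.cfg

tickTime=2000 #Interval of a single heartbeat between servers, or between a client and a server, in milliseconds

initLimit=10 #Maximum number of ticks (initLimit × tickTime) a follower may take to connect to and sync with the leader for the first time

syncLimit=5 #Maximum number of ticks allowed between a request and a reply between leader and follower; a follower that cannot communicate with the leader within this time is considered unavailable

clientPort=2181 #Port on which ZooKeeper listens for and accepts client connections

dataDir=/usr/local/zookeeper/data #Custom directory in which ZooKeeper stores its data

server.1=192.168.56.11:2888:3888 #server.<id>=<server IP>:<leader-follower data sync port>:<leader election port>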

server.2=192.168.56.12:2888:3888

server.3=192.168.56.13:2888:3888

[root@linux-host1 src]# echo "1" > /usr/local/zookeeper/data/myid
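The three servers get an identical zoo.cfg and differ only in the number written to myid, so the manual steps can be wrapped in a small helper. This is a sketch under assumptions: write_zk_config is a hypothetical function name, and the demo writes into a scratch directory created with mktemp so that nothing under /usr/local is modified. On a real server the two directories would be /usr/local/zookeeper/conf and /usr/local/zookeeper/data.

```shell
# Write zoo.cfg and myid for one ensemble member.
# Usage: write_zk_config <myid> <conf_dir> <data_dir>
write_zk_config() {
  id=$1; conf_dir=$2; data_dir=$3
  mkdir -p "$conf_dir" "$data_dir"
  # Same settings as in the walkthrough; only dataDir follows the argument.
  cat > "$conf_dir/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
clientPort=2181
dataDir=$data_dir
server.1=192.168.56.11:2888:3888
server.2=192.168.56.12:2888:3888
server.3=192.168.56.13:2888:3888
EOF
  # myid must match the N of this host's server.N line.
  echo "$id" > "$data_dir/myid"
}

# Dry run for server 1 in a scratch directory (hypothetical location).
demo_dir=$(mktemp -d)
write_zk_config 1 "$demo_dir/conf" "$demo_dir/data"
cat "$demo_dir/conf/zoo.cfg" "$demo_dir/data/myid"
```

The generated file carries no trailing comments on the settings lines; the annotations shown in the walkthrough are explanatory only.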

1.1.3.2: Server2 configuration:

[root@linux-host2 ~]# cd /usr/local/src/

[root@linux-host2 src]# yum install jdk-8u151-linux-x64.rpm -y

[root@linux-host2 src]# tar xvf zookeeper-3.4.11.tar.gz

[root@linux-host2 src]# ln -sv /usr/local/src/zookeeper-3.4.11 /usr/local/zookeeper

[root@linux-host2 src]# mkdir /usr/local/zookeeper/data

[root@linux-host2 src]# cp /usr/local/zookeeper/conf/zoo_sample.cfg /usr/local/zookeeper/conf/zoo.cfg

[root@linux-host2 src]# vim /usr/local/zookeeper/conf/zoo.cfg

[root@linux-host2 src]# grep "^[a-zA-Z]" /usr/local/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

clientPort=2181

dataDir=/usr/local/zookeeper/data

server.1=192.168.56.11:2888:3888

server.2=192.168.56.12:2888:3888

server.3=192.168.56.13:2888:3888

[root@linux-host2 src]# echo "2" > /usr/local/zookeeper/data/myid

1.1.3.3: Server3 configuration:

[root@linux-host3 ~]# cd /usr/local/src/

[root@linux-host3 src]# yum install jdk-8u151-linux-x64.rpm -y

[root@linux-host3 src]# tar xvf zookeeper-3.4.11.tar.gz

[root@linux-host3 src]# ln -sv /usr/local/src/zookeeper-3.4.11 /usr/local/zookeeper

[root@linux-host3 src]# mkdir /usr/local/zookeeper/data

[root@linux-host3 src]# cp /usr/local/zookeeper/conf/zoo_sample.cfg /usr/local/zookeeper/conf/zoo.cfg

[root@linux-host3 src]# vim /usr/local/zookeeper/conf/zoo.cfg

[root@linux-host3 src]# grep "^[a-zA-Z]" /usr/local/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

clientPort=2181

dataDir=/usr/local/zookeeper/data

server.1=192.168.56.11:2888:3888

server.2=192.168.56.12:2888:3888

server.3=192.168.56.13:2888:3888

[root@linux-host3 src]# echo "3" > /usr/local/zookeeper/data/myid

1.1.3.4: Start ZooKeeper on each server:

[root@linux-host1 ~]# /usr/local/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/…/conf/zoo.cfg

Starting zookeeper … STARTED

[root@linux-host2 src]# /usr/local/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/…/conf/zoo.cfg

Starting zookeeper … STARTED

[root@linux-host3 src]# /usr/local/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/…/conf/zoo.cfg

Starting zookeeper … STARTED

1.1.3.5: Check the ZooKeeper status on each server:

[root@linux-host1 ~]# /usr/local/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/…/conf/zoo.cfg

Mode: follower

[root@linux-host2 ~]# /usr/local/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/…/conf/zoo.cfg

Mode: leader

[root@linux-host3 ~]# /usr/local/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/…/conf/zoo.cfg

Mode: follower

1.1.3.6: Basic ZooKeeper commands:

#Connect to any node and create data:

[root@linux-host3 data]# /usr/local/zookeeper/bin/zkCli.sh -server 192.168.56.11:2181

[zk: 192.168.56.11:2181(CONNECTED) 3] create /test "hello"

#Verify the data on another ZooKeeper node:

[root@linux-host2 src]# /usr/local/zookeeper/bin/zkCli.sh -server 192.168.56.12:2181

[zk: 192.168.56.12:2181(CONNECTED) 0] get /test

hello

cZxid = 0x100000004

ctime = Fri Dec 15 11:14:07 CST 2017

mZxid = 0x100000004

mtime = Fri Dec 15 11:14:07 CST 2017

pZxid = 0x100000004

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 5

numChildren = 0

1.2: Install and test Kafka:

1.2.1: Install Kafka on Server1:

[root@linux-host1 src]# tar xvf kafka_2.11-1.0.0.tgz

[root@linux-host1 src]# ln -sv /usr/local/src/kafka_2.11-1.0.0 /usr/local/kafka

[root@linux-host1 src]# vim /usr/local/kafka/config/server.properties

broker.id=1

listeners=PLAINTEXT://192.168.56.11:9092

log.retention.hours=24 #Keep log data for the specified number of hours

zookeeper.connect=192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181 #Addresses of all ZooKeeper servers

1.2.2: Install Kafka on Server2:

[root@linux-host2 src]# tar xvf kafka_2.11-1.0.0.tgz

[root@linux-host2 src]# ln -sv /usr/local/src/kafka_2.11-1.0.0 /usr/local/kafka

[root@linux-host2 src]# vim /usr/local/kafka/config/server.properties

broker.id=2

listeners=PLAINTEXT://192.168.56.12:9092

zookeeper.connect=192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181

1.2.3: Install Kafka on Server3:

[root@linux-host3 src]# tar xvf kafka_2.11-1.0.0.tgz

[root@linux-host3 src]# ln -sv /usr/local/src/kafka_2.11-1.0.0 /usr/local/kafka

[root@linux-host3 src]# vim /usr/local/kafka/config/server.properties

broker.id=3

listeners=PLAINTEXT://192.168.56.13:9092

zookeeper.connect=192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181
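Across the three brokers only broker.id and the listeners address change; zookeeper.connect is the same everywhere. The sketch below prints the settings for each broker so they can be compared side by side. The function name broker_overrides is hypothetical, and applying log.retention.hours=24 to all three brokers is an assumption, since the walkthrough shows that setting only on Server1.

```shell
# Print the server.properties settings edited in the walkthrough
# for broker <id> listening on <ip>.
broker_overrides() {
  id=$1; ip=$2
  printf 'broker.id=%s\n' "$id"
  printf 'listeners=PLAINTEXT://%s:9092\n' "$ip"
  # Assumption: same retention on every broker.
  printf 'log.retention.hours=24\n'
  printf 'zookeeper.connect=192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181\n'
}

# Broker N runs on 192.168.56.1N in this environment.
for id in 1 2 3; do
  echo "# broker $id"
  broker_overrides "$id" "192.168.56.1$id"
done
```

Every broker.id must be unique within the cluster; two brokers started with the same id will conflict when they register in ZooKeeper.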

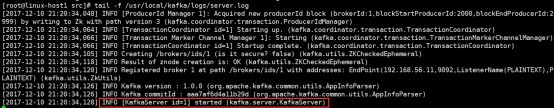

1.2.4: Start Kafka on each server:

1.2.4.1: Start Kafka on Server1:

[root@linux-host1 src]# /usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties #Start as a daemon

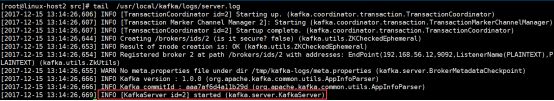

1.2.4.2: Start Kafka on Server2:

[root@linux-host2 src]# /usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties

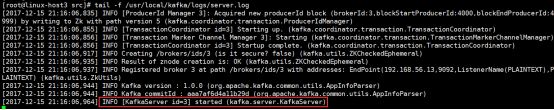

1.2.4.3: Start Kafka on Server3:

[root@linux-host3 src]# /usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties

#/usr/local/kafka/bin/kafka-server-start.sh /usr/local/kafka/config/server.properties & #Started this way, Kafka shuts down when the shell session ends

1.2.5: Test Kafka:

1.2.5.1: Verify the processes:

[root@linux-host1 ~]# jps

10578 QuorumPeerMain

11572 Jps

11369 Kafka

[root@linux-host2 ~]# jps

2752 QuorumPeerMain

8229 Kafka

8383 Jps

[root@linux-host3 ~]# jps

12626 Kafka

2661 QuorumPeerMain

12750 Jps

1.2.5.2: Test creating a topic:

Create a topic named logstashtest with 3 partitions and a replication factor of 3:

Run this on any Kafka server:

[root@linux-host2 ~]# /usr/local/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181 --partitions 3 --replication-factor 3 --topic logstashtest

Created topic "logstashtest".

1.2.5.3: Test describing a topic:

This can be tested from any Kafka server:

[root@linux-host3 ~]# /usr/local/kafka/bin/kafka-topics.sh --describe --zookeeper 192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181 --topic logstashtest

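Status notes: logstashtest has three partitions, 0, 1, and 2. The leader of partition 0 is broker 3 (its broker.id). Partition 0 has three replicas, and all of them are in the ISR (in-sync replicas), which means each of them is eligible to be elected leader.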

1.2.5.4: Delete a topic:

[root@linux-host3 ~]# /usr/local/kafka/bin/kafka-topics.sh --delete --zookeeper 192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181 --topic logstashtest

Topic logstashtest is marked for deletion.

Note: This will have no impact if delete.topic.enable is not set to true.

1.2.5.5: List all topics:

[root@linux-host1 ~]# /usr/local/kafka/bin/kafka-topics.sh --list --zookeeper 192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181

__consumer_offsets

nginx-accesslog-5612

system-log-5612

1.2.6: Test sending messages with the Kafka command-line tools:

1.2.6.1: Create a topic:

[root@linux-host3 ~]# /usr/local/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181 --partitions 3 --replication-factor 3 --topic messagetest

Created topic "messagetest".

1.2.6.2: Send messages:

[root@linux-host2 ~]# /usr/local/kafka/bin/kafka-console-producer.sh --broker-list 192.168.56.11:9092,192.168.56.12:9092,192.168.56.13:9092 --topic messagetest

hello

kafka

logstash

ss

oo

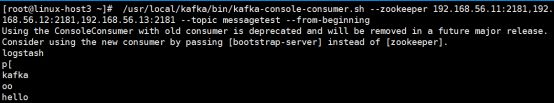

1.2.6.3: Consume the data from another Kafka server:

#Server1:

[root@linux-host1 ~]# /usr/local/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181 --topic messagetest --from-beginning

#Server2:

1.2.7: Test writing data to Kafka with Logstash:

1.2.7.1: Edit the Logstash configuration file:

[root@linux-host3 ~]# vim /etc/logstash/conf.d/logstash-to-kafka.conf

input {

stdin {}

}

output {

kafka {

topic_id => "hello"

bootstrap_servers => "192.168.56.11:9092"

batch_size => 5

}

stdout {

codec => rubydebug

}

}

1.2.7.2: Verify that Kafka received the data from Logstash:

[root@linux-host1 ~]# /usr/local/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.56.11:2181,192.168.56.12:2181,192.168.56.13:2181 --topic hello --from-beginning

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

2017-12-15T14:33:00.684Z linux-host3.exmaple.com hello

2017-12-15T14:33:31.127Z linux-host3.exmaple.com test
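The deprecation warning above suggests passing the broker addresses through --bootstrap-server instead of the ZooKeeper addresses. The sketch below builds the broker list and prints the resulting consumer command as a dry run rather than executing it. The helper name bootstrap_servers is made up for this illustration, and port 9092 is taken from the listeners setting configured earlier.

```shell
# Join broker IPs into the host:port,host:port list used by --bootstrap-server.
bootstrap_servers() {
  list=""
  for ip in "$@"; do
    # Add a comma separator only when the list already has an entry.
    list="${list:+$list,}$ip:9092"
  done
  echo "$list"
}

# Dry run: print the command instead of running it.
echo /usr/local/kafka/bin/kafka-console-consumer.sh \
  --bootstrap-server "$(bootstrap_servers 192.168.56.11 192.168.56.12 192.168.56.13)" \
  --topic hello --from-beginning
```

Removing the leading echo runs the consumer against the brokers directly, without going through ZooKeeper.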

