Three Steps to Creating a Spark Program in Eclipse on Windows (Java Version)

Author: 翁松秀

- Step 1: Create a Maven project

- Step 2: Add the Maven dependencies

- Step 3: Write the program

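Before writing your first Spark program, make sure the following are in place.

Prerequisites:

1. Eclipse with the Maven plugin installed. Eclipse Photon, the latest release at the time of writing, ships with the Maven plugin.

2. The Maven project management tool is installed. If you still need to install it, see 【Setting Up a Maven Environment on Windows】.

3. A Spark development environment is set up. If you still need to set one up, see 【Setting Up a Spark Development Environment on Windows】.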

Step 1: Create a Maven project

Go to 【File】→【New】→【Project…】, find the Maven node, and select Maven Project.

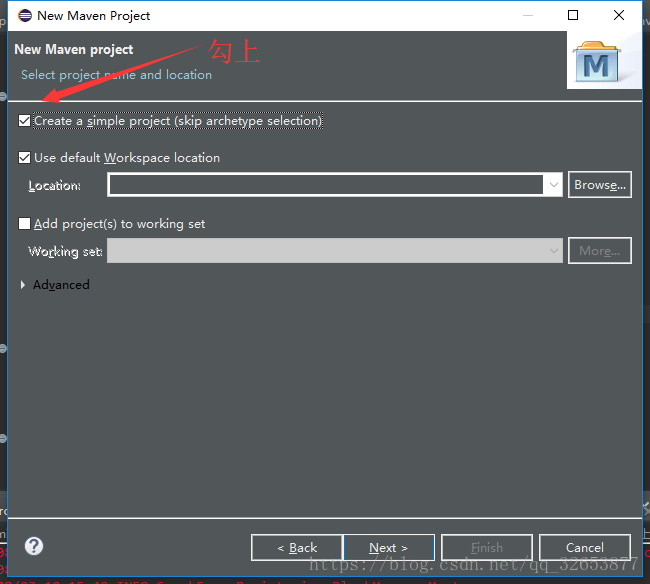

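After clicking Next, check "Create a simple project (skip archetype selection)". Start with a simple project and skip the bells and whistles; get it running first.

Click Next again. This page asks for two IDs, Group Id and Artifact Id. Group Id is conventionally the reversed domain of the organization (for example, the artifacts published by Apache Spark use org.apache.spark), and Artifact Id names the project itself. Together with the version they uniquely identify an artifact in Maven. For a local demo you can fill in whatever you like; here I use org.apache for Group Id and spark for Artifact Id.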
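To make the role of these IDs concrete, here is a minimal sketch of how a Group Id, Artifact Id, and version combine into a coordinate and into the path of the jar under a repository with the default layout (for example the local `~/.m2/repository`). `MavenCoordinate` is a hypothetical helper written for this illustration, not part of Maven's API.

```java
import java.util.Objects;

/** Hypothetical helper (not a Maven API) modelling a groupId:artifactId:version coordinate. */
final class MavenCoordinate {
    private final String groupId;
    private final String artifactId;
    private final String version;

    MavenCoordinate(String groupId, String artifactId, String version) {
        this.groupId = Objects.requireNonNull(groupId);
        this.artifactId = Objects.requireNonNull(artifactId);
        this.version = Objects.requireNonNull(version);
    }

    /** The usual shorthand for a coordinate: groupId:artifactId:version. */
    String toGav() {
        return groupId + ":" + artifactId + ":" + version;
    }

    /** Relative jar path in the default repository layout: dots in groupId become directories. */
    String toRepositoryPath() {
        return groupId.replace('.', '/') + "/" + artifactId + "/" + version
                + "/" + artifactId + "-" + version + ".jar";
    }

    public static void main(String[] args) {
        // The values typed into the Eclipse wizard in this tutorial
        MavenCoordinate demo = new MavenCoordinate("org.apache", "spark", "0.0.1-SNAPSHOT");
        System.out.println(demo.toGav());
        System.out.println(demo.toRepositoryPath());
    }
}
```

Because the three values together form the lookup key, two projects may share an Artifact Id as long as their Group Ids differ.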

Step 2: Add the Maven dependencies

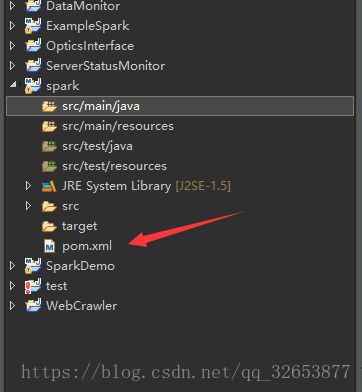

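After you click Finish, the Maven project is created. Open the pom.xml file in the project directory and add the dependencies the Spark program needs.

Your pom.xml should look like this, although the version, Group Id, and Artifact Id may differ.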

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>org.apache</groupId>
      <artifactId>spark</artifactId>
      <version>0.0.1-SNAPSHOT</version>
    </project>

From the configuration file below, copy everything after the `<version></version>` element and paste it after the `<version></version>` element in your own pom.xml. Alternatively, copy the whole file and then adjust `<modelVersion>`, `<groupId>`, `<artifactId>`, and `<version>` yourself.

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>org.apache</groupId>
      <artifactId>spark</artifactId>
      <version>1.0-SNAPSHOT</version>

      <!-- Download artifacts from the Aliyun mirror of Maven Central -->
      <repositories>
        <repository>
          <id>central</id>
          <name>Central Repository</name>
          <url>http://maven.aliyun.com/nexus/content/repositories/central</url>
          <layout>default</layout>
          <snapshots>
            <enabled>false</enabled>
          </snapshots>
        </repository>
      </repositories>

      <!-- Optional: package a single runnable jar that bundles all dependencies -->
      <build>
        <plugins>
          <plugin>
            <artifactId>maven-assembly-plugin</artifactId>
            <version>2.2</version>
            <configuration>
              <archive>
                <manifest>
                  <!-- Entry point of the jar: the class created in Step 3 -->
                  <mainClass>sparkDemo.SparkDemo</mainClass>
                </manifest>
              </archive>
              <descriptorRefs>
                <descriptorRef>jar-with-dependencies</descriptorRef>
              </descriptorRefs>
            </configuration>
          </plugin>
        </plugins>
      </build>

      <!-- Shared version numbers, referenced below as ${...} -->
      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <spark.version>1.6.0</spark.version>
        <scala.version>2.10</scala.version>
        <hadoop.version>2.6.0</hadoop.version>
      </properties>

      <dependencies>
        <!-- Spark modules, built for the Scala version given above -->
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-core_${scala.version}</artifactId>
          <version>${spark.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-sql_${scala.version}</artifactId>
          <version>${spark.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-hive_${scala.version}</artifactId>
          <version>${spark.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-streaming_${scala.version}</artifactId>
          <version>${spark.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-client</artifactId>
          <version>2.7.0</version>
        </dependency>

        <!-- Testing, logging, and utility libraries -->
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>4.12</version>
        </dependency>
        <dependency>
          <groupId>org.slf4j</groupId>
          <artifactId>slf4j-api</artifactId>
          <version>1.6.6</version>
        </dependency>
        <dependency>
          <groupId>org.slf4j</groupId>
          <artifactId>slf4j-log4j12</artifactId>
          <version>1.6.6</version>
        </dependency>
        <dependency>
          <groupId>log4j</groupId>
          <artifactId>log4j</artifactId>
          <version>1.2.16</version>
        </dependency>
        <dependency>
          <groupId>dom4j</groupId>
          <artifactId>dom4j</artifactId>
          <version>1.6.1</version>
        </dependency>
        <dependency>
          <groupId>jaxen</groupId>
          <artifactId>jaxen</artifactId>
          <version>1.1.6</version>
        </dependency>
        <dependency>
          <groupId>args4j</groupId>
          <artifactId>args4j</artifactId>
          <version>2.33</version>
        </dependency>
        <dependency>
          <groupId>jline</groupId>
          <artifactId>jline</artifactId>
          <version>2.14.5</version>
        </dependency>
      </dependencies>
    </project>

Save the file when you finish editing, and Eclipse will automatically download the required dependencies. This can take a while, depending on your network speed and which Maven mirror you use; a mirror inside China such as Aliyun will probably be faster. To configure the Aliyun mirror for Maven, see 【Configuring the Aliyun Mirror for Maven】.
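The pom.xml above declares values such as scala.version under `<properties>` and refers to them as `${scala.version}` inside the dependency entries. The sketch below imitates that substitution in plain Java to show what the artifact IDs look like after expansion. It is only an illustration: `PomPropertyDemo` and `interpolate` are hypothetical names, and Maven's real interpolation handles more cases (for example built-in `project.*` values) than this does.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical illustration of ${name} substitution; not Maven's actual implementation. */
final class PomPropertyDemo {
    // Matches ${name} and captures the property name
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    static String interpolate(String template, Map<String, String> properties) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuffer out = new StringBuffer();
        while (matcher.find()) {
            String value = properties.get(matcher.group(1));
            // Unknown placeholders are left untouched in this sketch
            String replacement = (value != null) ? value : matcher.group(0);
            matcher.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Same property values as the <properties> section of the pom.xml
        Map<String, String> properties = new LinkedHashMap<String, String>();
        properties.put("spark.version", "1.6.0");
        properties.put("scala.version", "2.10");

        System.out.println(interpolate("spark-core_${scala.version}", properties));
        System.out.println(interpolate("${spark.version}", properties));
    }
}
```

Keeping the versions in one place means that moving every Spark module to another release only requires editing the property values, provided the Scala suffix and Spark version you choose were actually published together.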

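Step 3: Write the program

Once the dependencies have loaded, a Maven Dependencies node appears under the project.

Under src/main/java, create the sparkDemo package and SparkDemo.java, then paste in the code below. It is the π-estimation example from the official site; to keep things simple I removed the command-line argument for the number of slices, so it always uses 10. The program also sets the master to local, so you can run it directly in Eclipse on your own machine.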

    package sparkDemo;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;
    import org.apache.spark.api.java.function.Function2;

    import java.util.ArrayList;
    import java.util.List;

    public final class SparkDemo {

        public static void main(String[] args) throws Exception {
            // "local" runs Spark inside this JVM, so no cluster is needed
            SparkConf sparkConf = new SparkConf().setAppName("JavaSparkPi").setMaster("local");
            JavaSparkContext jsc = new JavaSparkContext(sparkConf);
            long start = System.currentTimeMillis();

            int slices = 10;
            int n = 100000 * slices;
            List<Integer> l = new ArrayList<Integer>(n);
            for (int i = 0; i < n; i++) {
                l.add(i);
            }

            /*
             * JavaSparkContext.parallelize turns a collection into an RDD:
             * - the first argument is a java.util.List
             * - the second argument is the number of partitions
             * - it returns a JavaRDD<T>
             */
            JavaRDD<Integer> dataSet = jsc.parallelize(l, slices);

            // Map each element to 1 if a random point falls inside the unit circle, else 0,
            // then sum the results
            int count = dataSet.map(new Function<Integer, Integer>() {
                private static final long serialVersionUID = 1L;

                @Override
                public Integer call(Integer integer) {
                    double x = Math.random() * 2 - 1;
                    double y = Math.random() * 2 - 1;
                    return (x * x + y * y < 1) ? 1 : 0;
                }
            }).reduce(new Function2<Integer, Integer, Integer>() {
                private static final long serialVersionUID = 1L;

                @Override
                public Integer call(Integer integer, Integer integer2) {
                    return integer + integer2;
                }
            });

            long end = System.currentTimeMillis();
            System.out.println("Pi is roughly " + 4.0 * count / n + ", use: " + (end - start) + "ms");

            // Shut down the local Spark context
            jsc.stop();
        }
    }

Run result:

Welcome to Spark!
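For readers curious about what the Spark job actually computes, here is a minimal plain-Java sketch of the same Monte Carlo estimate without Spark. Points are drawn uniformly from the square [-1, 1] × [-1, 1]; the fraction that lands inside the unit circle approaches π/4, so multiplying it by 4 approximates π. The class name `LocalPi` and the fixed seed are assumptions added for illustration; the Spark version uses `Math.random()` and spreads the loop across partitions.

```java
import java.util.Random;

/** Hypothetical single-threaded counterpart of the Spark π example. */
final class LocalPi {

    /** Estimates π from `samples` random points; the seed makes runs repeatable. */
    static double estimatePi(int samples, long seed) {
        Random random = new Random(seed);
        int inside = 0;
        for (int i = 0; i < samples; i++) {
            // Random point in the square [-1, 1] x [-1, 1]
            double x = random.nextDouble() * 2 - 1;
            double y = random.nextDouble() * 2 - 1;
            if (x * x + y * y < 1) {
                inside++; // the point fell inside the unit circle
            }
        }
        return 4.0 * inside / samples;
    }

    public static void main(String[] args) {
        // Same sample count as the Spark program: 100000 * 10 slices
        System.out.println("Pi is roughly " + estimatePi(100000 * 10, 42L));
    }
}
```

In the Spark program the map step plays the role of the `if` test and the reduce step plays the role of the `inside` counter, which is why the two versions should produce estimates of similar accuracy.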