View, Window, WindowManager: Reading the Choreographer Source Code

References

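Understanding the VSYNC Signal in Android

Android App Performance Optimization video series with bilingual subtitles, by Google

An Analysis of Android Project Butter

Android Choreographer Source Code Analysis

How the Choreographer Mechanism Is Implemented in Android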

Related source files

android_view_DisplayEventReceiver

DisplayEventDispatcher.h

DisplayEventDispatcher.cpp

looper.cpp

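I. Concepts

Key points:

- 1. After a vsync signal arrives, the UI thread runs input, animation, and traversal in that order, then waits for the next signal and runs the three again.

- 2. If all of this work, including draw, completes before the second signal arrives, the screen is updated to show the first frame's content when the second signal arrives.

- 3. If draw has not finished when the second signal arrives, the screen still shows the first frame's content during the third period: this is a dropped frame.

- 4. The UI draws at most one new frame per vsync interval (about 16 ms on a 60 Hz display), so two consecutive TextView.setText() calls trigger only one redraw.

The rest of this article traces how these conclusions follow from the source code.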

II. ViewRootImpl.requestLayout

2.1 ViewRootImpl.requestLayout

```java
public void requestLayout() {
    if (!mHandlingLayoutInLayoutRequest) {
        // 1. Thread check: only the thread that created the view hierarchy may update it
        checkThread();
        // 2. Mark that a layout pass has been requested
        mLayoutRequested = true;
        // 3. Schedule a traversal: measure, layout, draw
        scheduleTraversals();
    }
}
```

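- requestLayout() does not draw synchronously: it schedules a traversal, and the view's measure, layout, and draw passes run when that traversal executes on a later frame.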

2.2 ViewRootImpl.scheduleTraversals

```java
void scheduleTraversals() {
    /*
     * 1. mTraversalScheduled is false by default. The first call sets it to true;
     *    it is reset to false in unscheduleTraversals() and doTraversal(). Until then,
     *    further calls do nothing, so several requests in one frame cause one traversal.
     */
    if (!mTraversalScheduled) {
        mTraversalScheduled = true;
        /*
         * 2. Post a sync barrier so that synchronous messages in the UI thread's queue are
         *    held back, letting the vsync-driven work run as soon as possible. Without the
         *    barrier, synchronous messages queued ahead of it could delay the UI update.
         */
        mTraversalBarrier = mHandler.getLooper().getQueue().postSyncBarrier();
        // 3. Post mTraversalRunnable as a CALLBACK_TRAVERSAL callback; this requests a
        //    vsync, and the runnable is later invoked from CallbackRecord.run
        mChoreographer.postCallback(
                Choreographer.CALLBACK_TRAVERSAL, mTraversalRunnable, null);
        if (!mUnbufferedInputDispatch) {
            scheduleConsumeBatchedInput();
        }
        notifyRendererOfFramePending();
        pokeDrawLockIfNeeded();
    }
}
```
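A minimal sketch, in plain Java with no Android dependencies, of why two back-to-back requestLayout() calls (for example two setText() calls) lead to a single traversal: the mTraversalScheduled check lets only the first call post a traversal callback. TraversalScheduler and its methods are hypothetical names; the queue stands in for Choreographer's CALLBACK_TRAVERSAL queue, and the sync barrier and vsync request are left out.

```java
import java.util.ArrayDeque;
import java.util.Queue;

// Hypothetical model of ViewRootImpl's mTraversalScheduled logic (not the Android class).
class TraversalScheduler {
    private boolean traversalScheduled = false; // plays the role of mTraversalScheduled
    // Stands in for Choreographer's CALLBACK_TRAVERSAL queue.
    private final Queue<Runnable> traversalCallbacks = new ArrayDeque<>();
    int traversalsPerformed = 0;

    // Like scheduleTraversals(): only the first request before the next frame posts a callback.
    void requestLayout() {
        if (!traversalScheduled) {
            traversalScheduled = true;
            traversalCallbacks.add(this::doTraversal);
        }
    }

    // Like doTraversal(): clear the flag, then (in the real code) measure, layout and draw.
    private void doTraversal() {
        if (traversalScheduled) {
            traversalScheduled = false;
            traversalsPerformed++;
        }
    }

    // Simulates the next vsync: run every traversal callback posted so far.
    void onVsync() {
        Runnable r;
        while ((r = traversalCallbacks.poll()) != null) {
            r.run();
        }
    }

    int pendingCallbacks() {
        return traversalCallbacks.size();
    }

    public static void main(String[] args) {
        TraversalScheduler s = new TraversalScheduler();
        s.requestLayout(); // e.g. first setText()
        s.requestLayout(); // second setText() in the same frame: ignored
        System.out.println("pending callbacks: " + s.pendingCallbacks()); // 1
        s.onVsync();
        System.out.println("traversals: " + s.traversalsPerformed);      // 1
    }
}
```

Once the traversal has run, the flag is false again, so the next requestLayout() posts a fresh callback for the following frame.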

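2.3 postSyncBarrier: the sync barrier

The no-argument postSyncBarrier() called above forwards to this private overload with SystemClock.uptimeMillis():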

```java
private int postSyncBarrier(long when) {
    // Enqueue a new sync barrier token.
    // We don't need to wake the queue because the purpose of a barrier is to stall it.
    synchronized (this) {
        final int token = mNextBarrierToken++;
        final Message msg = Message.obtain();
        msg.markInUse();
        msg.when = when;
        msg.arg1 = token;

        Message prev = null;
        Message p = mMessages;
        if (when != 0) {
            while (p != null && p.when <= when) {
                prev = p;
                p = p.next;
            }
        }
        if (prev != null) { // invariant: p == prev.next
            msg.next = p;
            prev.next = msg;
        } else {
            msg.next = p;
            mMessages = msg;
        }
        return token;
    }
}
```

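- Note that msg.target is never assigned here, so a barrier is simply a Message whose target is null. Now look at how MessageQueue.next() treats such a message: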

```java
Message next() {
    // ...
    for (;;) {
        // ...
        synchronized (this) {
            // Try to retrieve the next message. Return if found.
            final long now = SystemClock.uptimeMillis();
            Message prevMsg = null;
            Message msg = mMessages;
            if (msg != null && msg.target == null) {
                /*
                 * 1. The head is a barrier (target == null): walk the list, skipping every
                 *    synchronous message until an asynchronous one is found.
                 */
                do {
                    prevMsg = msg;
                    msg = msg.next;
                } while (msg != null && !msg.isAsynchronous());
            }
            // ... (the rest of next() is omitted)
        }
    }
}
```

Messages sent with Handler.post and Handler.sendMessage are synchronous by default as well; that code is not covered here.
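To make the barrier behavior concrete, here is a small plain-Java model. BarrierQueue is a hypothetical class, not android.os.MessageQueue: it keeps messages sorted by `when`, treats a message without a target as a barrier, and reuses the skip loop from next(). It returns null instead of blocking and removes the first barrier instead of matching a token; both are simplifications.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified MessageQueue: a linked list sorted by `when`.
// A barrier is a message without a target, as in postSyncBarrier().
class BarrierQueue {
    static final class Msg {
        final String name;
        final long when;
        final boolean hasTarget; // false marks a sync barrier
        final boolean async;     // like Message.isAsynchronous()
        Msg next;

        Msg(String name, long when, boolean hasTarget, boolean async) {
            this.name = name;
            this.when = when;
            this.hasTarget = hasTarget;
            this.async = async;
        }
    }

    private Msg head;

    // Insert after every message whose `when` is <= m.when (FIFO for ties).
    private void enqueue(Msg m) {
        if (head == null || m.when < head.when) {
            m.next = head;
            head = m;
            return;
        }
        Msg p = head;
        while (p.next != null && p.next.when <= m.when) {
            p = p.next;
        }
        m.next = p.next;
        p.next = m;
    }

    void post(String name, long when, boolean async) {
        enqueue(new Msg(name, when, true, async));
    }

    void postSyncBarrier(long when) {
        enqueue(new Msg("barrier", when, false, false));
    }

    // Removes the first barrier (the real API removes it by token).
    void removeSyncBarrier() {
        Msg prev = null;
        Msg p = head;
        while (p != null && p.hasTarget) {
            prev = p;
            p = p.next;
        }
        if (p == null) {
            return;
        }
        if (prev == null) {
            head = p.next;
        } else {
            prev.next = p.next;
        }
    }

    // Same skip logic as the barrier branch of next(); returns null instead of blocking.
    Msg next(long now) {
        Msg prev = null;
        Msg msg = head;
        if (msg != null && !msg.hasTarget) {
            do {
                prev = msg;
                msg = msg.next;
            } while (msg != null && !msg.async);
        }
        if (msg == null || msg.when > now) {
            return null;
        }
        if (prev != null) {
            prev.next = msg.next;
        } else {
            head = msg.next;
        }
        msg.next = null;
        return msg;
    }

    // Runs everything that can run at `now` and returns the message names in order.
    List<String> drain(long now) {
        List<String> out = new ArrayList<>();
        for (Msg m = next(now); m != null; m = next(now)) {
            out.add(m.name);
        }
        return out;
    }
}
```

Posting sync-1, a barrier, sync-2, and an asynchronous vsync message (all at time 0) and draining yields sync-1 and then vsync; sync-2 only runs after removeSyncBarrier(), just as ordinary Handler messages wait until doTraversal removes the traversal barrier.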

2.4 Choreographer.postCallback

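```java
// postCallback(type, action, token) ends up here with delayMillis = 0
private void postCallbackDelayedInternal(int callbackType,
        Object action, Object token, long delayMillis) {
    synchronized (mLock) {
        final long now = SystemClock.uptimeMillis();
        final long dueTime = now + delayMillis;
        // Add the callback to the queue for its type, ordered by dueTime
        mCallbackQueues[callbackType].addCallbackLocked(dueTime, action, token);

        if (dueTime <= now) {
            // Due now: schedule a frame (request a vsync)
            scheduleFrameLocked(now);
        } else {
            // Delayed: let FrameHandler schedule the frame once dueTime is reached
            Message msg = mHandler.obtainMessage(MSG_DO_SCHEDULE_CALLBACK, action);
            msg.arg1 = callbackType;
            msg.setAsynchronous(true);
            mHandler.sendMessageAtTime(msg, dueTime);
        }
    }
}
```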
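The dueTime check can be modeled in plain Java. DueCallbackQueue below is a hypothetical simplification of Choreographer's internal CallbackQueue: callbacks are kept sorted by due time, and only those whose due time has passed are extracted when a frame runs. Actions are plain strings and times are in milliseconds, for brevity.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simplification of Choreographer.CallbackQueue (times in ms).
class DueCallbackQueue {
    private static final class Record {
        final long dueTime;
        final String action;
        Record next;

        Record(long dueTime, String action) {
            this.dueTime = dueTime;
            this.action = action;
        }
    }

    private Record head;

    // Keep the list sorted by dueTime; ties keep insertion order.
    void addCallback(long dueTime, String action) {
        Record r = new Record(dueTime, action);
        if (head == null || dueTime < head.dueTime) {
            r.next = head;
            head = r;
            return;
        }
        Record p = head;
        while (p.next != null && p.next.dueTime <= dueTime) {
            p = p.next;
        }
        r.next = p.next;
        p.next = r;
    }

    // Same decision as postCallbackDelayedInternal: true means "due now, schedule a frame
    // immediately"; false means the real code would post MSG_DO_SCHEDULE_CALLBACK at dueTime.
    boolean postDelayed(long now, long delayMillis, String action) {
        long dueTime = now + delayMillis;
        addCallback(dueTime, action);
        return dueTime <= now;
    }

    // Detach and return, in order, every callback whose dueTime <= now.
    List<String> extractDue(long now) {
        List<String> due = new ArrayList<>();
        while (head != null && head.dueTime <= now) {
            due.add(head.action);
            head = head.next;
        }
        return due;
    }
}
```

A callback posted with a 20 ms delay at time 100 is not returned by extractDue(100), while one posted with no delay is; the delayed one only comes out once the frame time reaches 120.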

2.5 Choreographer.scheduleFrameLocked

```java
private void scheduleFrameLocked(long now) {
    // 1. mFrameScheduled ensures no new vsync is requested while one is still pending
    if (!mFrameScheduled) {
        mFrameScheduled = true;
        if (USE_VSYNC) {
            // 2. Already on the Looper's thread: request the vsync right away
            if (isRunningOnLooperThreadLocked()) {
                scheduleVsyncLocked();
            } else {
                // 3. On another thread: post an asynchronous message to the front of
                //    mLooper's queue so the vsync is requested on that thread
                Message msg = mHandler.obtainMessage(MSG_DO_SCHEDULE_VSYNC);
                msg.setAsynchronous(true);
                mHandler.sendMessageAtFrontOfQueue(msg);
            }
        }
        // (the non-vsync fallback branch is omitted)
    }
}
```

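- Requesting two vsyncs within one interval (about 16 ms) is pointless, so mFrameScheduled ensures that no new vsync is requested while the current request is still pending.

- When the vsync callback arrives, doFrame resets the flag to false before drawing starts, so the next vsync can be requested.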
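The interplay between mFrameScheduled and the one-shot vsync request can be sketched with a hypothetical FrameScheduler class. A simulated displayTick() stands in for the display's periodic vsync, which is delivered only to a receiver that has an outstanding request. None of these names are Android APIs.

```java
// Hypothetical model of mFrameScheduled plus a one-shot vsync source; not Android code.
final class FrameScheduler {
    private boolean frameScheduled = false; // plays the role of mFrameScheduled
    private boolean vsyncRequested = false; // an outstanding one-shot request at the "display"
    int vsyncRequests = 0;                  // how many times a vsync was actually requested
    int framesDone = 0;                     // how many times doFrame() did work

    // Like scheduleFrameLocked(): at most one outstanding vsync request.
    void scheduleFrame() {
        if (!frameScheduled) {
            frameScheduled = true;
            vsyncRequested = true; // like scheduleVsyncLocked()
            vsyncRequests++;
        }
    }

    // Simulated periodic display vsync: delivered only if someone asked for it.
    void displayTick() {
        if (!vsyncRequested) {
            return; // no request, nothing is delivered
        }
        vsyncRequested = false; // the request is consumed (one-shot)
        doFrame();
    }

    // Like doFrame(): clear the flag first so the next vsync can be requested.
    private void doFrame() {
        if (!frameScheduled) {
            return;
        }
        frameScheduled = false;
        framesDone++;
    }
}
```

Three scheduleFrame() calls before a tick produce one vsync request and one frame; a second tick with nothing requested does nothing, which is the one-shot behavior described above.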

2.6 Choreographer.scheduleVsyncLocked

```
Choreographer.scheduleVsyncLocked()
  -> mDisplayEventReceiver.scheduleVsync()
    -> nativeScheduleVsync(mReceiverPtr)
```

```java
private static native void nativeScheduleVsync(long receiverPtr);
```

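- 1. receiverPtr points to the native NativeDisplayEventReceiver that DisplayEventReceiver obtained (via nativeInit) when it was initialized.

- 2. After requesting the vsync, the Java side simply waits for the native layer to deliver it. When the native layer reports the vsync, control comes back to FrameDisplayEventReceiver.dispatchVsync.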

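III. Choreographer

Role of Choreographer: it obtains the VSYNC signal (a periodic pulse) and makes the UI thread render frames in step with it.

When a VSYNC signal arrives, Choreographer runs the callbacks that clients registered through postCallback. The three main callback types are listed below (the doFrame code later also shows CALLBACK_INSETS_ANIMATION and CALLBACK_COMMIT):

- CALLBACK_INPUT: highest priority (runs first); handles input events

- CALLBACK_ANIMATION: second priority; handles animations

- CALLBACK_TRAVERSAL: lowest priority of the three; handles measuring, laying out, and drawing views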

3.1 Choreographer and threads

```java
public ViewRootImpl(Context context, Display display) {
    // ...
    mChoreographer = Choreographer.getInstance();
    // ...
}

public static Choreographer getInstance() {
    return sThreadInstance.get();
}
```

- Choreographer is a per-thread singleton backed by the ThreadLocal sThreadInstance: each thread has its own instance, and every ViewRootImpl on the same thread shares that instance.
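The per-thread singleton pattern can be reproduced with a plain ThreadLocal. PerThreadChoreographer below is hypothetical and uses ThreadLocal.withInitial; unlike the real initialValue(), it does not look up the thread's Looper (the real one throws an IllegalStateException when the current thread has no Looper).

```java
// Hypothetical stand-in for Choreographer's per-thread singleton (sThreadInstance).
final class PerThreadChoreographer {
    private static final ThreadLocal<PerThreadChoreographer> INSTANCE =
            ThreadLocal.withInitial(PerThreadChoreographer::new);

    // Name of the thread that created this instance.
    final String ownerThread = Thread.currentThread().getName();

    private PerThreadChoreographer() {}

    static PerThreadChoreographer getInstance() {
        return INSTANCE.get();
    }

    // Returns the instance that a newly started thread named "worker" sees.
    static PerThreadChoreographer getInstanceOnNewThread() {
        PerThreadChoreographer[] holder = new PerThreadChoreographer[1];
        Thread t = new Thread(() -> holder[0] = getInstance(), "worker");
        t.start();
        try {
            t.join(); // join() also makes holder[0] visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return holder[0];
    }
}
```

Repeated getInstance() calls on one thread return the same object, while another thread gets its own, which is why all ViewRootImpls created on the main thread share one Choreographer.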

3.2 Choreographer initialization

```java
private Choreographer(Looper looper, int vsyncSource) {
    mLooper = looper;
    // 1. Create the Handler that processes Choreographer's messages
    mHandler = new FrameHandler(looper);
    // 2. If vsync is enabled, create a FrameDisplayEventReceiver to receive it
    mDisplayEventReceiver = USE_VSYNC
            ? new FrameDisplayEventReceiver(looper, vsyncSource)
            : null;
    // 3. Time of the last rendered frame (none yet)
    mLastFrameTimeNanos = Long.MIN_VALUE;
    // 4. Frame interval in ns: about 16.7 ms on a 60 Hz display
    mFrameIntervalNanos = (long)(1000000000 / getRefreshRate());
    // 5. One callback queue per callback type
    mCallbackQueues = new CallbackQueue[CALLBACK_LAST + 1];
    // 6. Initialize the queues; they are drained when the next frame is rendered
    for (int i = 0; i <= CALLBACK_LAST; i++) {
        mCallbackQueues[i] = new CallbackQueue();
    }
    // b/68769804: For low FPS experiments.
    setFPSDivisor(SystemProperties.getInt(ThreadedRenderer.DEBUG_FPS_DIVISOR, 1));
}
```

- USE_VSYNC indicates whether the system uses VSYNC; it is read from the system property debug.choreographer.vsync (true by default). If VSYNC is used, a FrameDisplayEventReceiver is created to request and receive vsync events.
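A quick worked example of the mFrameIntervalNanos expression from 3.2, evaluated in plain Java. The float arithmetic mirrors the `(long)(1000000000 / getRefreshRate())` line; FrameInterval is a hypothetical helper and the refresh rates are example inputs, not values read from a device.

```java
// Evaluates the same expression as Choreographer's mFrameIntervalNanos for sample refresh rates.
final class FrameInterval {
    static long frameIntervalNanos(float refreshRate) {
        // int / float -> float division, then truncated to long, as in the constructor
        return (long) (1000000000 / refreshRate);
    }

    public static void main(String[] args) {
        for (float hz : new float[] {60f, 90f, 120f}) {
            long nanos = frameIntervalNanos(hz);
            System.out.printf("%.0f Hz -> %d ns (%.2f ms)%n", hz, nanos, nanos / 1_000_000.0);
        }
    }
}
```

At 60 Hz this gives roughly 16.67 ms, the "16 ms" budget used throughout this article; at 120 Hz the budget halves to about 8.33 ms.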

IV. FrameHandler

Choreographer creates a FrameHandler during initialization.

4.1 FrameHandler definition

```java
private final class FrameHandler extends Handler {
    public FrameHandler(Looper looper) {
        super(looper);
    }

    @Override
    public void handleMessage(Message msg) {
        switch (msg.what) {
            case MSG_DO_FRAME: // render the next frame
                doFrame(System.nanoTime(), 0);
                break;
            case MSG_DO_SCHEDULE_VSYNC: // request a vsync signal
                doScheduleVsync();
                break;
            case MSG_DO_SCHEDULE_CALLBACK: // a delayed callback is due; schedule a frame
                doScheduleCallback(msg.arg1);
                break;
        }
    }
}
```

- MSG_DO_FRAME: start rendering the next frame

- MSG_DO_SCHEDULE_VSYNC: request a vsync signal

- MSG_DO_SCHEDULE_CALLBACK: a delayed callback is due; schedule a frame for it

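V. FrameDisplayEventReceiver

5.1 What FrameDisplayEventReceiver does

The vsync signal is generated on the SurfaceFlinger side and delivered periodically; FrameDisplayEventReceiver is what receives these vsync pulses. It extends DisplayEventReceiver, whose constructor is: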

```java
public DisplayEventReceiver(Looper looper, int vsyncSource) {
    // 1. Get the message queue of the Looper's thread
    mMessageQueue = looper.getQueue();
    // 2. Create the native NativeDisplayEventReceiver and keep a pointer to it
    mReceiverPtr = nativeInit(new WeakReference<DisplayEventReceiver>(this), mMessageQueue,
            vsyncSource);
    mCloseGuard.open("dispose");
}
```

nativeInit enters native code. For native code, this article only skims the main path, partly as a way to get familiar with C/C++.

5.2 android_view_DisplayEventReceiver.nativeInit

```cpp
/**
 * 1. receiverWeak    -> weak reference to the Java DisplayEventReceiver
 * 2. messageQueueObj -> the Java MessageQueue of the receiver's thread
 */
static jlong nativeInit(JNIEnv* env, jclass clazz, jobject receiverWeak,
        jobject messageQueueObj, jint vsyncSource) {
    sp<MessageQueue> messageQueue = android_os_MessageQueue_getMessageQueue(env, messageQueueObj);
    if (messageQueue == NULL) {
        jniThrowRuntimeException(env, "MessageQueue is not initialized.");
        return 0;
    }

    // 1. Create the native NativeDisplayEventReceiver, which holds a reference to the
    //    Java FrameDisplayEventReceiver
    // 2. NativeDisplayEventReceiver ultimately derives from LooperCallback
    sp<NativeDisplayEventReceiver> receiver = new NativeDisplayEventReceiver(env,
            receiverWeak, messageQueue, vsyncSource);
    // 3. Register the receiver's file descriptor with the Looper; when data arrives, the
    //    Looper calls the handleEvent() override (implemented in DisplayEventDispatcher)
    status_t status = receiver->initialize();
    // (error handling for a non-zero status is omitted)

    receiver->incStrong(gDisplayEventReceiverClassInfo.clazz); // retain a reference for the object
    return reinterpret_cast<jlong>(receiver.get());
}
```

receiver->initialize() is where addFd is called:

5.3 NativeDisplayEventReceiver.initialize (implemented in DisplayEventDispatcher)

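```cpp
status_t DisplayEventDispatcher::initialize() {
    status_t result = mReceiver.initCheck();
    if (result) {
        return result; // the receiver failed to initialize
    }
    // 1. Register the receiver's fd with the Looper; EVENT_INPUT means "call this
    //    object's handleEvent() when the fd becomes readable"
    int rc = mLooper->addFd(mReceiver.getFd(), 0, Looper::EVENT_INPUT, this, NULL);
    if (rc < 0) {
        return UNKNOWN_ERROR;
    }
    return OK;
}
```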
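The addFd/EVENT_INPUT pattern (wait until a file descriptor is readable, then invoke the registered callback) has a rough analogue in Java NIO. This sketch is only an analogy, not how the native Looper is implemented: a Pipe plays the role of the display event channel, a Selector plays the role of the Looper's wait, and the Consumer attached to the key plays the role of handleEvent(). FdLooperDemo and pollOnce are hypothetical names.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.function.Consumer;

// Analogy only: Selector ~ native Looper, Pipe ~ display event channel,
// the key's attachment ~ the callback whose handleEvent() runs when data arrives.
final class FdLooperDemo {
    // Registers `onReadable` for the pipe's read end (like addFd(..., EVENT_INPUT, callback, ...)),
    // writes one event byte to the write end, waits once, and dispatches. Returns the ready-key count.
    static int pollOnce(byte event, Consumer<Byte> onReadable) {
        try {
            Pipe pipe = Pipe.open();
            try (Selector selector = Selector.open();
                 Pipe.SourceChannel source = pipe.source();
                 Pipe.SinkChannel sink = pipe.sink()) {
                source.configureBlocking(false);
                source.register(selector, SelectionKey.OP_READ, onReadable);
                sink.write(ByteBuffer.wrap(new byte[] {event}));

                int ready = selector.select(1000); // wait up to 1 s for a readable fd
                for (SelectionKey key : selector.selectedKeys()) {
                    ByteBuffer buf = ByteBuffer.allocate(1);
                    ((Pipe.SourceChannel) key.channel()).read(buf);
                    buf.flip();
                    @SuppressWarnings("unchecked")
                    Consumer<Byte> callback = (Consumer<Byte>) key.attachment();
                    callback.accept(buf.get()); // the "handleEvent" step
                }
                selector.selectedKeys().clear();
                return ready;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```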

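VI. The vsync callback flow

The following is quoted from the reference articles: the vsync signal is sent periodically by SurfaceFlinger; it wakes the DispSyncThread, then the EventThread, and is then passed through a BitTube directly to the target thread of the target process, where handleEvent runs.

The C++ code is skipped here; only the call chain is shown: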

```
android_view_DisplayEventReceiver.handleEvent
  -> android_view_DisplayEventReceiver.processPendingEvents
    -> android_view_DisplayEventReceiver.dispatchVsync
      -> FrameDisplayEventReceiver.dispatchVsync
```

So the call finally reaches the Java method FrameDisplayEventReceiver.dispatchVsync (declared in DisplayEventReceiver and called from native code).

6.1 FrameDisplayEventReceiver.dispatchVsync

```java
private void dispatchVsync(long timestampNanos, long physicalDisplayId, int frame) {
    onVsync(timestampNanos, physicalDisplayId, frame);
}
```

So whenever a vsync occurs, FrameDisplayEventReceiver.onVsync is called.

6.2 FrameDisplayEventReceiver.onVsync

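```java
@Override
public void onVsync(long timestampNanos, long physicalDisplayId, int frame) {
    /*
     * Post the vsync event to the MessageQueue through the Handler. If no message in the
     * queue has a timestamp earlier than the frame time, the vsync event is processed
     * immediately; otherwise, messages that predate the vsync event are handled first.
     * In short: when vsync arrives, messages that are already due run before it. If a
     * long-running non-UI message on this thread does not finish, the vsync work is
     * delayed, and the user perceives this as dropped frames.
     */
    long now = System.nanoTime();
    if (timestampNanos > now) {
        // A timestamp in the future is logged and clamped to now
        timestampNanos = now;
    }
    if (mHavePendingVsync) {
        // Only one pending vsync is expected at a time (a warning is logged here)
    } else {
        mHavePendingVsync = true;
    }
    mTimestampNanos = timestampNanos;
    mFrame = frame;
    // 1. Pass `this` as the message callback, so run() is invoked when it is handled
    Message msg = Message.obtain(mHandler, this);
    // 2. Asynchronous, so it is not held back by the traversal sync barrier
    msg.setAsynchronous(true);
    // 3. mHandler is the FrameHandler; the message is due at the vsync time (in ms)
    mHandler.sendMessageAtTime(msg, timestampNanos / TimeUtils.NANOS_PER_MS);
}
```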

6.3 FrameDisplayEventReceiver.run

```java
public void run() {
    mHavePendingVsync = false;
    doFrame(mTimestampNanos, mFrame);
}
```

6.4 Choreographer.doFrame

```java
// 1. frameTimeNanos: timestamp of the vsync signal from the native layer
void doFrame(long frameTimeNanos, int frame) {
    final long startNanos;
    synchronized (mLock) {
        if (!mFrameScheduled) {
            return; // no work to do
        }
        long intendedFrameTimeNanos = frameTimeNanos;
        // 2. Time this frame actually starts (ns)
        startNanos = System.nanoTime();
        /*
         * 3. jitterNanos: delay between the vsync timestamp and the start of this frame.
         *    Ideally it is 0. If jitterNanos > mFrameIntervalNanos, drawing started more
         *    than one vsync period late, so it will also finish more than one period late.
         *    Without correction, the next vsync would arrive before this draw finishes
         *    (a dropped frame), and frame times would stay out of phase with vsync.
         * 3.1 So after logging the skipped frames, the timestamp is shifted back so that
         *    it lines up with the vsync grid again.
         */
        final long jitterNanos = startNanos - frameTimeNanos;
        // 4. A delay of at least one frame interval (16.7 ms at 60 Hz) means the thread was busy
        if (jitterNanos >= mFrameIntervalNanos) {
            // 5. Skipped frames = delay / frame interval
            final long skippedFrames = jitterNanos / mFrameIntervalNanos;
            // 6. Log a warning once the count reaches the limit
            if (skippedFrames >= SKIPPED_FRAME_WARNING_LIMIT) {
                Log.i(TAG, "Skipped " + skippedFrames + " frames! "
                        + "The application may be doing too much work on its main thread.");
            }
            // 7. Remainder = how far past the most recent vsync tick this frame started
            final long lastFrameOffset = jitterNanos % mFrameIntervalNanos;
            // 8. Debug log with the delay and the number of skipped frames
            if (DEBUG_JANK) {
                Log.d(TAG, "Missed vsync by " + (jitterNanos * 0.000001f) + " ms "
                        + "which is more than the frame interval of "
                        + (mFrameIntervalNanos * 0.000001f) + " ms! "
                        + "Skipping " + skippedFrames + " frames and setting frame "
                        + "time to " + (lastFrameOffset * 0.000001f) + " ms in the past.");
            }
            // 9. Shift the frame time back to the most recent vsync tick so later
            //    frames stay in phase
            frameTimeNanos = startNanos - lastFrameOffset;
        }
        // 10. Time went backwards (e.g. after a skipped frame): do no work and wait
        //     for the next vsync
        if (frameTimeNanos < mLastFrameTimeNanos) {
            if (DEBUG_JANK) {
                Log.d(TAG, "Frame time appears to be going backwards. May be due to a "
                        + "previously skipped frame. Waiting for next vsync.");
            }
            // 11. Request the next vsync
            scheduleVsyncLocked();
            return;
        }
        // 12. Record timing information for this frame
        mFrameInfo.setVsync(intendedFrameTimeNanos, frameTimeNanos);
        // 13. Clear the flag. It is set when a vsync is requested and cleared here when
        //     the vsync arrives, so no new vsync is requested (and no CPU/GPU work for
        //     the next frame starts) before this frame begins.
        mFrameScheduled = false;
        mLastFrameTimeNanos = frameTimeNanos;
    }
    try {
        // 14. Run the callbacks, type by type
        AnimationUtils.lockAnimationClock(frameTimeNanos / TimeUtils.NANOS_PER_MS);
        // 14.1 input
        doCallbacks(Choreographer.CALLBACK_INPUT, frameTimeNanos);
        // 14.2 animation
        doCallbacks(Choreographer.CALLBACK_ANIMATION, frameTimeNanos);
        doCallbacks(Choreographer.CALLBACK_INSETS_ANIMATION, frameTimeNanos);
        // 14.3 traversal (measure, layout, draw), then commit
        doCallbacks(Choreographer.CALLBACK_TRAVERSAL, frameTimeNanos);
        doCallbacks(Choreographer.CALLBACK_COMMIT, frameTimeNanos);
    } finally {
        AnimationUtils.unlockAnimationClock();
    }
}
```

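- After SurfaceFlinger delivers a vsync, control eventually reaches Choreographer.doFrame, which runs the callbacks in order: CALLBACK_INPUT, CALLBACK_ANIMATION, CALLBACK_INSETS_ANIMATION, CALLBACK_TRAVERSAL, CALLBACK_COMMIT.

6.4.1 doFrame summary: setting the current frame's start time

- doFrame checks whether frames were skipped. If so, it corrects the frame's start time to the most recent vsync; if not, the frame time is simply the current vsync timestamp. Either way, after the correction the frame's start time stays in rhythm with vsync: it may lag by one or more periods, but it still lands on a vsync tick.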

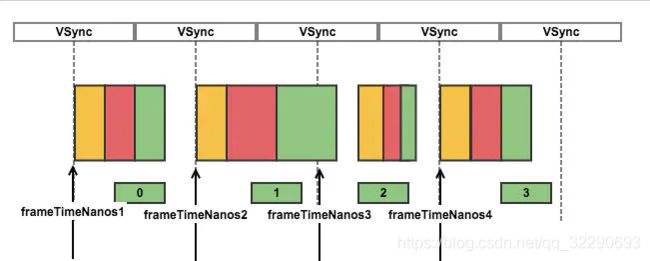

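- Case 1: Frame 3 starts after vsync 3, but by less than one vsync interval (Frame3 start - Vsync3 start < vsync interval), which is the jitterNanos < mFrameIntervalNanos case in doFrame. Because Frame 2 ran long, Frame 3 starts a little after the third vsync; since the delay is under one period, the system passes the original frameTimeNanos (the vsync 3 timestamp) to the callbacks unchanged, so the time they receive stays in sync with vsync.

- Case 2: Frame 3 starts after vsync 3 by more than one interval, which is the jitterNanos >= mFrameIntervalNanos case in doFrame. Here frameTimeNanos is shifted back to the start of the most recent vsync. Because Frame 2 took more than two periods, Frame 3 started more than one period late; the system treats this as significant, counts it as skipped frames, and corrects Frame 3's time to the vsync 4 timestamp, one period later than the vsync that actually triggered it.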

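This correction keeps doFrame in step with the vsync rhythm while keeping the recorded time close to the actual start time, which makes synchronization and later analysis easier.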
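The correction rule can be checked with a few numbers. FrameTimeCorrection below is a hypothetical plain-Java extraction of the jitter logic in doFrame: it leaves out logging and the "time went backwards" check, and uses a round 16 ms interval so the arithmetic is easy to follow (a real 60 Hz device uses about 16,666,667 ns).

```java
// Hypothetical extraction of the frame-time correction in Choreographer.doFrame().
final class FrameTimeCorrection {
    final long skippedFrames;   // whole vsync intervals that were missed
    final long frameTimeNanos;  // frame time handed to the callbacks

    private FrameTimeCorrection(long skippedFrames, long frameTimeNanos) {
        this.skippedFrames = skippedFrames;
        this.frameTimeNanos = frameTimeNanos;
    }

    static FrameTimeCorrection correct(long vsyncNanos, long startNanos, long intervalNanos) {
        long jitterNanos = startNanos - vsyncNanos;
        if (jitterNanos >= intervalNanos) {
            long skipped = jitterNanos / intervalNanos;
            long lastFrameOffset = jitterNanos % intervalNanos;
            // Snap back to the most recent vsync tick before startNanos.
            return new FrameTimeCorrection(skipped, startNanos - lastFrameOffset);
        }
        // Small delay: keep the original vsync timestamp.
        return new FrameTimeCorrection(0, vsyncNanos);
    }

    public static void main(String[] args) {
        long interval = 16_000_000L; // 16 ms, a round example value
        FrameTimeCorrection small = correct(0, 5_000_000L, interval);
        FrameTimeCorrection large = correct(0, 40_000_000L, interval);
        System.out.println(small.skippedFrames + " skipped, frame time " + small.frameTimeNanos); // 0 skipped, frame time 0
        System.out.println(large.skippedFrames + " skipped, frame time " + large.frameTimeNanos); // 2 skipped, frame time 32000000
    }
}
```

A 5 ms delay keeps the original vsync timestamp (case 1 above); a 40 ms delay counts 2 skipped frames and moves the frame time to 32 ms, the last vsync tick before the frame actually started (case 2).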

6.4.2 doCallbacks

doCallbacks is called once per callback type, in order: CALLBACK_INPUT, CALLBACK_ANIMATION, CALLBACK_INSETS_ANIMATION, CALLBACK_TRAVERSAL, CALLBACK_COMMIT.

```java
void doCallbacks(int callbackType, long frameTimeNanos) {
    CallbackRecord callbacks;
    synchronized (mLock) {
        final long now = System.nanoTime();
        // Detach every callback of this type whose due time has passed
        callbacks = mCallbackQueues[callbackType].extractDueCallbacksLocked(
                now / TimeUtils.NANOS_PER_MS);
        if (callbacks == null) {
            return; // nothing due for this type
        }
        mCallbacksRunning = true;

        if (callbackType == Choreographer.CALLBACK_COMMIT) {
            // If the commit phase is reached 2 or more intervals late, move the frame
            // time forward so that the next frame's time keeps increasing
            final long jitterNanos = now - frameTimeNanos;
            if (jitterNanos >= 2 * mFrameIntervalNanos) {
                final long lastFrameOffset = jitterNanos % mFrameIntervalNanos
                        + mFrameIntervalNanos;
                frameTimeNanos = now - lastFrameOffset;
                mLastFrameTimeNanos = frameTimeNanos;
            }
        }
    }
    try {
        for (CallbackRecord c = callbacks; c != null; c = c.next) {
            // Invoke CallbackRecord.run
            c.run(frameTimeNanos);
        }
    } finally {
        synchronized (mLock) {
            mCallbacksRunning = false;
            // Return the records to the pool
            do {
                final CallbackRecord next = callbacks.next;
                recycleCallbackLocked(callbacks);
                callbacks = next;
            } while (callbacks != null);
        }
    }
}
```

6.4.3 CallbackRecord.run

```java
public void run(long frameTimeNanos) {
    if (token == FRAME_CALLBACK_TOKEN) {
        ((FrameCallback)action).doFrame(frameTimeNanos);
    } else {
        ((Runnable)action).run();
    }
}
```

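- Combining this with 2.4 Choreographer.postCallback: ViewRootImpl posts mTraversalRunnable as the action with a null token, so the token is not FRAME_CALLBACK_TOKEN and the else branch calls mTraversalRunnable.run().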
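Putting 6.4.2 and 6.4.3 together, the hypothetical MiniChoreographer below runs callback types in the same order as doFrame and dispatches each record the way CallbackRecord.run does: records posted with the frame-callback token receive the frame time, the others are plain Runnables. LongConsumer stands in for Choreographer.FrameCallback; due times, locking, and record recycling are omitted. It imitates the fact that the real postFrameCallback posts to CALLBACK_ANIMATION with FRAME_CALLBACK_TOKEN.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.LongConsumer;

// Hypothetical model of Choreographer's callback dispatch; not the Android class.
final class MiniChoreographer {
    // Callback types in the order doFrame() runs them.
    static final int INPUT = 0, ANIMATION = 1, INSETS_ANIMATION = 2, TRAVERSAL = 3, COMMIT = 4;
    private static final Object FRAME_CALLBACK_TOKEN = new Object();

    private static final class Record {
        final Object action;
        final Object token;

        Record(Object action, Object token) {
            this.action = action;
            this.token = token;
        }

        // Same branching as CallbackRecord.run(frameTimeNanos).
        void run(long frameTimeNanos) {
            if (token == FRAME_CALLBACK_TOKEN) {
                ((LongConsumer) action).accept(frameTimeNanos);
            } else {
                ((Runnable) action).run();
            }
        }
    }

    private final List<List<Record>> queues = new ArrayList<>();

    MiniChoreographer() {
        for (int i = INPUT; i <= COMMIT; i++) {
            queues.add(new ArrayList<>());
        }
    }

    // Like postCallback(type, runnable, null): null token, so run() takes the Runnable branch.
    void postCallback(int type, Runnable action) {
        queues.get(type).add(new Record(action, null));
    }

    // Like postFrameCallback(): an ANIMATION callback tagged with FRAME_CALLBACK_TOKEN.
    void postFrameCallback(LongConsumer callback) {
        queues.get(ANIMATION).add(new Record(callback, FRAME_CALLBACK_TOKEN));
    }

    // Like doFrame() calling doCallbacks(type, ...) for each type in order.
    void doFrame(long frameTimeNanos) {
        for (int type = INPUT; type <= COMMIT; type++) {
            List<Record> due = new ArrayList<>(queues.get(type));
            queues.get(type).clear();
            for (Record r : due) {
                r.run(frameTimeNanos);
            }
        }
    }
}
```

Even if a traversal is posted before an input callback, the input callback still runs first within the frame, and only the frame callback sees the frame time.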

6.5 ViewRootImpl.doTraversal

TraversalRunnable.run() -> ViewRootImpl.doTraversal()

```java
void doTraversal() {
    if (mTraversalScheduled) {
        mTraversalScheduled = false;
        // 1. Remove the sync barrier so synchronous messages can run again
        mHandler.getLooper().getQueue().removeSyncBarrier(mTraversalBarrier);
        // 2. Measure, layout, and draw
        performTraversals();
    }
}
```

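- 1. doTraversal first removes the sync barrier, then calls performTraversals to measure, lay out, and draw, and the frame is handed to SurfaceFlinger for composition and display. The flags seen above (mTraversalScheduled, mFrameScheduled) ensure that the UI is redrawn at most once per vsync, i.e. at most once every ~16.7 ms on a 60 Hz display, which is why such a display caps an app at 60 FPS.

- 2. A vsync is delivered only when the client explicitly requests it, and each request is good for a single vsync.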