Docker (Part 2): Networking Basics

Docker networking

How Docker networking works

Docker Network

[root@mail /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

3f5d7624918a bridge bridge local

0d2af6ce6648 host host local

606c6ae1b257 none null local

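docker network ls #list the Docker networks (network modes)

- bridge: bridged network

- host: the host's own network

- none: closed network

Note: this is only a brief overview; each of the three modes is explained in detail below.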

Docker Network bridge

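After installation, Docker creates a network interface named docker0. docker0 is a NAT bridge and is used by the bridge network mode.

When a container starts, the Docker daemon creates a pair of virtual Ethernet (veth) interfaces for it. One end stays on the host, where ifconfig shows it. The other end is placed inside the container.

Containers started without an explicit network use the bridge mode by default. The host-side ends of their veth pairs are attached to docker0. The brctl command shows how the container interfaces are attached to docker0:

[root@mail /]# yum install bridge-utils -y #install the brctl command

[root@mail /]# brctl show #show the interfaces attached to docker0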

bridge name bridge id STP enabled interfaces

docker0 8000.0242601bccc1 no veth34f612f

veth545339c

vethc68cecf

#Note: brctl show lists the virtual interfaces veth34f612f, veth545339c and vethc68cecf attached to docker0

[root@mail /]# docker ps -a #and exactly three containers are running

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2e9d14fd164c nginx "/bin/bash" 11 days ago Up 11 days 80/tcp nginx

3985a490bf99 centos "/bin/bash" 11 days ago Up 11 days centos

234e58b7e8be tomcat "/bin/bash" 11 days ago Up 11 days 8080/tcp tomcat
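brctl comes from the separate bridge-utils package. The kernel also exposes bridge ports in sysfs: each interface attached to a Linux bridge appears as an entry under /sys/class/net/<bridge>/brif/.

The minimal sketch below lists those entries. The function name bridge_ports and the SYSFS_ROOT override are hypothetical. SYSFS_ROOT exists only so the function can be tested against a fake directory tree; neither name is part of Docker or bridge-utils.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the interfaces attached to a Linux bridge by
# reading sysfs. SYSFS_ROOT (hypothetical, for testing) defaults to /sys.
bridge_ports() {
  local br=${1:-docker0}
  local dir="${SYSFS_ROOT:-/sys}/class/net/$br/brif"
  if [[ ! -d $dir ]]; then
    echo "bridge_ports: $br is not a bridge (no $dir)" >&2
    return 1
  fi
  local port
  # Each entry in brif/ is named after one attached interface.
  for port in "$dir"/*; do
    [[ -e $port || -L $port ]] || continue   # skip the unexpanded glob when empty
    printf '%s\n' "${port##*/}"
  done
}
```

On the host above, bridge_ports docker0 should print the same three veth names as brctl show. iproute2 gives a similar view with ip link show master docker0.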

The ip link show command also shows that the container interfaces are attached to docker0 (note "master docker0"):

[root@mail /]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:c6:fb:66 brd ff:ff:ff:ff:ff:ff

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:60:1b:cc:c1 brd ff:ff:ff:ff:ff:ff

31: veth34f612f@if30: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether c2:52:ae:54:eb:33 brd ff:ff:ff:ff:ff:ff link-netnsid 2

35: veth545339c@if34: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 1e:bc:1c:e5:50:15 brd ff:ff:ff:ff:ff:ff link-netnsid 1

39: vethc68cecf@if38: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 16:dd:63:ad:7b:ed brd ff:ff:ff:ff:ff:ff link-netnsid 0

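Each veth interface name ends with an "@ifNN" suffix, and the names match those listed by brctl show. These are the host-side halves of the veth pairs described above. The number after "@if" is the interface index of the other half, which lives inside a container. For example, veth34f612f@if30 is paired with interface 30 in one container's network namespace.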
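The pairing can be read off mechanically. The sketch below is a hypothetical helper, not a Docker tool. It scans ip link show output for veth header lines and prints three fields: the host-side name, its interface index, and the peer index taken from the @ifNN suffix. It only recognizes header lines in the format shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ip link show` output on stdin and print
# "<host-veth> <host-ifindex> <peer-ifindex>" for each veth interface.
veth_peers() {
  local line
  # Header lines look like: "31: veth34f612f@if30: <BROADCAST,...> ..."
  local re='^([0-9]+):[[:space:]]+(veth[^@:]+)@if([0-9]+):'
  while IFS= read -r line; do
    if [[ $line =~ $re ]]; then
      printf '%s %s %s\n' "${BASH_REMATCH[2]}" "${BASH_REMATCH[1]}" "${BASH_REMATCH[3]}"
    fi
  done
}
```

Typical use: ip link show | veth_peers. Inside a container, cat /sys/class/net/eth0/ifindex prints that container's own index, which should match the @ifNN number seen on the host. cat /sys/class/net/eth0/iflink prints the host-side index. Together they match each veth to its container.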

Docker Network host

Namespaces

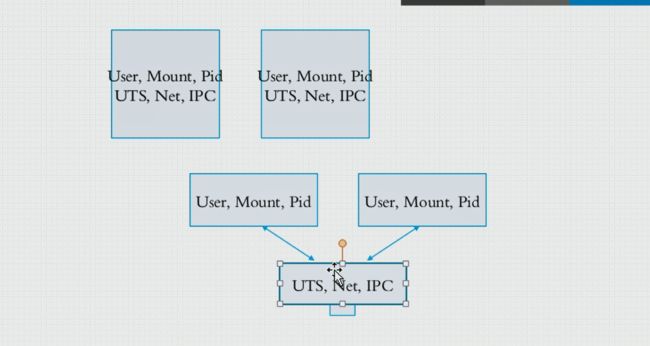

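User, Mount, PID, IPC, Network, UTS

- The figure shows containers that each have their own User, Mount and PID namespaces but share the UTS, Network and IPC namespaces.

- In other words, they have separate users and groups, separate mounted file systems and separate processes. They do share the same hostname and domain name, the same IP addresses and the same message queues. Sharing the host's network in this way is the idea behind Docker's second network mode, host.

- host refers to the Docker host itself. In host mode the container uses the host's Network namespace instead of getting its own. Namespaces such as Mount and PID stay separate.

- In host mode, containers can talk to each other through the lo interface because they share the same network. For example, if one container runs redis and another runs nginx, each application can be reached at 127.0.0.1:port from any of the containers and from the host.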

Docker Network none

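none means an empty network: the container has no network interface other than lo. It is like buying a server with no network card installed. Some machines only need to compute and do not need network access, for example servers that encode images or transcode video.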

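Docker network modes: summary

Four network models:

1. Closed container (none): only the lo interface, no network.

2. Bridged container (bridge): the container's virtual interface is attached to the docker0 bridge, which is a NAT bridge.

3. Joined container (--network container:NAME): the container joins the Network namespace of an existing container instead of getting its own. The joined containers share IP addresses and ports and can talk to each other over lo. Other namespaces, such as Mount and PID, stay separate. This is not the same as host mode.

4. Open container (host): the container shares the host's Network namespace directly. Its network interfaces are the host's physical interfaces, so it sees every network the host can see; hence the name open network.

Note: if no network is specified when a container is created, it always uses the second model, the bridge network.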

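Docker and iptables

When containers are started, Docker adds the corresponding rules to the host's iptables:

- iptables provides Docker with network isolation, NAT forwarding and port publishing

- Ports published with docker run -p and similar commands automatically get matching iptables rules, and the host's default iptables policies do not affect the ports that Docker publishes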

[root@mail /]# iptables -t nat -vnL | grep docker0

0 0 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

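The first rule matches packets with a source address in 172.17.0.0/16 and any destination. The packet may arrive on any interface, but it must leave through an interface other than docker0. Matching packets get MASQUERADE (address masquerading).

MASQUERADE is a form of SNAT that picks the source address automatically, using the address of the outgoing interface.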
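To make the rule's match conditions concrete, here is a minimal bash sketch. It models only this one POSTROUTING rule: source in 172.17.0.0/16 and outgoing interface not docker0. The function names ip_to_int, in_cidr and would_masquerade are hypothetical helpers, not part of iptables or Docker.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IPv4 address $1 lies inside CIDR $2 (e.g. 172.17.0.0/16).
in_cidr() {
  local net=${2%/*} len=${2#*/}
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Hypothetical model of "MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0":
# any input interface, output interface not docker0, source in the bridge subnet.
would_masquerade() {
  local src=$1 out_if=$2
  [[ $out_if != docker0 ]] && in_cidr "$src" 172.17.0.0/16
}
```

For instance, a packet from container 172.17.0.2 to the internet that leaves via eth0 is masqueraded. Traffic between two containers on docker0 is not, because it never leaves through another interface.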

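Docker container network information

If no network mode is specified when a container is created, it is attached to the default network, which is the bridge network.

[root@mail /]# docker network inspect bridge #show details of the bridge network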

[

{

"Name": "bridge",

"Id": "3f5d7624918a975e014b3364c288ec2c78742fd25416a5eaa5c61f9bbdf8a8ea",

"Created": "2018-10-13T10:06:10.573182391+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16", #the bridge network is 172.17.0.0/16; container addresses are allocated from this range

"Gateway": "172.17.0.1" #gateway address of the containers

}

]

},

"Internal": false,

"Attachable": false,

"Containers": {

"234e58b7e8be6a2ce8894dd883866d313f08b678692b7a6b5a6af28b17ee0123": {

"Name": "tomcat",

"EndpointID": "1095f0c4e719bc613be520dee3ff75827867538824d1681056eb986a95980eb7",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

},

"2e9d14fd164c3358a00fe50a2b264a358468679acfd2de30f9aafac388f76c1b": {

"Name": "nginx",

"EndpointID": "f0f71c635242ddb5cc3a518fa1e902c4dc4eab761dd338e3c7159807cca41244",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"3985a490bf998f5c35b9e794e0027c72cecacbdc267ac27727be044f5cab2874": {

"Name": "centos",

"EndpointID": "df97637c706cbddf1e47726f609f21f3fbb3350a0a8318e89a0aa89994520bf3",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0", #the bridge network uses the docker0 interface

"com.docker.network.driver.mtu": "1500"

},

"Labels": {

}

}

]

Show the container details

[root@mail /]# docker container inspect nginx | grep -C 15 Networks

"LinkLocalIPv6PrefixLen": 0,

"Ports": {

"80/tcp": null

},

"SandboxKey": "/var/run/docker/netns/7ac8fc7bf1d1",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "f0f71c635242ddb5cc3a518fa1e902c4dc4eab761dd338e3c7159807cca41244",

"Gateway": "172.17.0.1",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"MacAddress": "02:42:ac:11:00:02",

"Networks": {

"bridge": {

#the container uses the bridge network mode

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "3f5d7624918a975e014b3364c288ec2c78742fd25416a5eaa5c61f9bbdf8a8ea",

"EndpointID": "f0f71c635242ddb5cc3a518fa1e902c4dc4eab761dd338e3c7159807cca41244",

"Gateway": "172.17.0.1", #the gateway is 172.17.0.1

"IPAddress": "172.17.0.2", #the address is 172.17.0.2

"IPPrefixLen": 16, #netmask (prefix) length

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02" #MAC address of the virtual interface

}

}

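Docker networking examples

Container startup options

Note: when starting a container with docker run you can configure several of its settings. The main ones are:

1. The network mode, such as bridge, host or none. If not specified, bridge is used.

2. The container's hostname. If not specified, it is the container ID (the short, 12-character form).

3. The container's DNS servers. If not specified, the host's DNS servers are used.

4. The container's DNS search domains. If not specified, the host's search domains are used; if the host has none, neither does the container.

5. Entries in the container's hosts file. If not specified, the default hosts file is used.

1. Specify the network mode. Option: --network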

docker run --name busybox_network_bridge -itd --network bridge busybox:latest #use the bridge network mode

docker inspect busybox_network_bridge | grep NetworkMode #show the network mode used by the container

"NetworkMode": "bridge",

docker run --name busybox_network_host -itd --network host busybox:latest #use the host network mode

docker inspect busybox_network_host | grep NetworkMode #show the network mode used by the container

"NetworkMode": "host",

docker run --name busybox_network_none -itd --network none busybox:latest #use the none network mode

docker inspect busybox_network_none | grep NetworkMode #show the network mode used by the container

"NetworkMode": "none",

2. Specify the hostname. Option: -h hostname

#Without -h, the hostname is the container ID

[root@Docker-node1 ~]# docker run --name busybox_hostname -it busybox:latest

/ # hostname

75c204a638b1

/ # exit

[root@Docker-node1 ~]# docker ps -a | grep busybox_hostname

75c204a638b1 busybox:latest "sh" 23 seconds ago Exited (0) 12 seconds ago busybox_hostname
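The default hostname is the container ID truncated to 12 characters, the same short form that docker ps prints. The trivial helper below, hypothetically named short_id, makes the relationship explicit:

```bash
#!/usr/bin/env bash
# Hypothetical helper: shorten a full 64-character container ID to the
# 12-character form shown by `docker ps` and used as the default hostname.
short_id() {
  printf '%s\n' "${1:0:12}"
}
```

Consider short_id "$(docker inspect --format '{{.Id}}' busybox_hostname)". It should print the same value that hostname printed inside that container.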

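#Specify a hostname (first remove the earlier container with docker rm busybox_hostname, because container names must be unique)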

[root@Docker-node1 ~]# docker run --name busybox_hostname -it -h busybox_node1 busybox:latest

/ # hostname

busybox_node1

3. Specify DNS servers. Option: --dns

# Without --dns, the container uses the host's DNS servers

[root@Docker-node1 ~]# docker run --name busybox_dns -it busybox:latest

/ # cat /etc/resolv.conf

nameserver 8.8.8.8

nameserver 8.8.4.4

# Specify a DNS server (remove the earlier busybox_dns container first)

[root@Docker-node1 ~]# docker run --name busybox_dns -it --dns 114.114.114.114 busybox:latest

/ # cat /etc/resolv.conf

nameserver 114.114.114.114

4. Specify DNS search domains. Option: --dns-search

# Without --dns-search, the search domains match the host's

[root@Docker-node1 ~]# docker run --name busybox_dns_search -it busybox:latest

/ # cat /etc/resolv.conf

nameserver 8.8.8.8

nameserver 8.8.4.4

# Specify a search domain (remove the earlier busybox_dns_search container first)

[root@Docker-node1 ~]# docker run --name busybox_dns_search -it --dns-search linux.io busybox:latest

/ # cat /etc/resolv.conf

search linux.io

nameserver 8.8.8.8

nameserver 8.8.4.4

/ # nslookup -type=A xuwl.xyz

Server: 8.8.8.8

Address: 8.8.8.8:53

Non-authoritative answer:

Name: xuwl.xyz

Address: 111.230.237.127

5. Add entries to the hosts file. Option: --add-host hostname:IP

# Without --add-host

[root@Docker-node1 ~]# docker run --name busybox_hosts -it busybox:latest

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 074c94bd85e5

#Add a hosts entry (remove the earlier busybox_hosts container first)

[root@Docker-node1 ~]# docker run --name busybox_hosts -it --add-host xuwl.xyz:111.230.237.127 busybox:latest

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

111.230.237.127 xuwl.xyz

172.17.0.3 fef9738edefc
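Typing --add-host repeatedly gets tedious when many entries are needed. The sketch below converts each entry of a hosts-style input into an --add-host=name:IP argument. It assumes one IP followed by one or more names per line, with # comments allowed. The function hosts_to_flags and the file name extra_hosts.txt are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn hosts-file lines ("IP name [alias...]") read
# from stdin into one --add-host=name:IP argument per output line.
hosts_to_flags() {
  local -a fields
  local ip name
  while read -r -a fields; do
    ip=${fields[0]:-}
    # Skip blank lines and comment lines.
    [[ -z $ip || $ip == \#* ]] && continue
    for name in "${fields[@]:1}"; do
      [[ $name == \#* ]] && break   # stop at a trailing comment
      printf -- '--add-host=%s:%s\n' "$name" "$ip"
    done
  done
}
```

Usage: run mapfile -t flags < <(hosts_to_flags < extra_hosts.txt), then docker run --name busybox_hosts -it "${flags[@]}" busybox:latest. The --add-host=name:IP form keeps each flag and its value in a single array element.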

6. All options together

docker run --name busybox -itd --network bridge -h busybox --dns 114.114.114.114 --dns-search linux.io --add-host xuwl.xyz:111.230.237.127 busybox:latest

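Docker joined-container network

A joined container uses the network interfaces of an existing container, and those interfaces are shared by every container in the group. Joined containers share one Network namespace, but their other namespaces, such as Mount and PID, remain isolated.

Because joined containers share ports, they can conflict over port numbers. This model is therefore normally used only in two cases: when programs in several containers need to talk to each other over the loopback interface, or when you need to monitor the network properties of an existing container.

Example:

[root@Docker-node1 ~]# docker run --name docker_node1 -itd busybox:latest #first start a container named docker_node1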

4a996ae891c03eae21fbeb2709857bec4c14c320b603672c289a802699d80f16

[root@Docker-node1 ~]# docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' docker_node1 #show the IP address of docker_node1

172.17.0.4

[root@Docker-node1 ~]# docker run --name docker_node2 -it --network container:docker_node1 busybox:latest #option --network container:<container name>; start docker_node2 joined to docker_node1

/ # ifconfig #show the addresses seen by docker_node2

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:04

inet addr:172.17.0.4 Bcast:172.17.255.255 Mask:255.255.0.0 #same address as docker_node1: the two containers are joined

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:648 (648.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # exit

[root@Docker-node1 ~]# docker start docker_node2

docker_node2

[root@Docker-node1 ~]# docker ps -a | grep docker_node #both containers are running

98056b3f5652 busybox:latest "sh" 39 seconds ago Up 16 seconds docker_node2

4a996ae891c0 busybox:latest "sh" About a minute ago Up About a minute docker_node1

Test: isolated namespaces

# Mount namespace: the /tmp/test directory created in docker_node1 does not appear in docker_node2; the file systems stay isolated

[root@Docker-node1 ~]# docker exec -it docker_node1 /bin/sh

/ # mkdir /tmp/test

/ # exit

[root@Docker-node1 ~]# docker exec -it docker_node2 /bin/sh

/ # ls /tmp/

/ # exit

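Test: shared namespace

Network namespace (IP addresses and ports): start a web server in docker_node1 with a given document root and port. docker_node2 can still reach the service through the loopback interface.

[root@Docker-node1 ~]# docker exec -it docker_node1 /bin/sh

/ # mkdir /var/www/html

/ # echo '<h1>Welcome to docker_node1</h1>' > /var/www/html/index.html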

/ # httpd -h /var/www/html/ -p 8888

/ # wget -O - -q 127.0.0.1:8888

<h1>Welcome to docker_node1</h1>

/ # exit

[root@Docker-node1 ~]# docker exec -it docker_node2 /bin/sh

/ # wget -O - -q 127.0.0.1:8888

<h1>Welcome to docker_node1</h1>

/ # netstat -tul #although this is docker_node2, the listening port is shared

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 :::8888 :::* LISTEN

/ # ls /tmp/ #the local file system is still not shared
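The test above shows the sharing indirectly. A more direct check compares the network-namespace link in /proc/<pid>/ns/net for the two containers' main processes; equal links mean the same namespace. The sketch assumes you run it as root on the Docker host. netns_of and same_netns are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the network-namespace identity of a process,
# e.g. "net:[4026531992]".
netns_of() {
  readlink "/proc/$1/ns/net"
}

# Hypothetical helper: succeed if two PIDs share one network namespace;
# return 2 if either PID cannot be inspected.
same_netns() {
  local a b
  a=$(netns_of "$1") || return 2
  b=$(netns_of "$2") || return 2
  [[ $a == "$b" ]]
}
```

To use it:

1. Get the first PID with p1=$(docker inspect --format '{{.State.Pid}}' docker_node1).
2. Get p2 the same way for docker_node2.
3. Run same_netns "$p1" "$p2" && echo shared.

Comparing docker_node1 with an ordinary bridge container should fail, because that container has a namespace of its own.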

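Docker open network

A container in the open network model shares the host's Network namespace, so it sees the host's interfaces, addresses and listening ports. --network host does not share other namespaces such as Mount and PID; Docker has separate options, such as --pid=host and --ipc=host, for those.

Example:

# Host interfaces: the host has three network interfaces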

[root@Docker-node1 ~]# ifconfig

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:75ff:fe56:ea47 prefixlen 64 scopeid 0x20<link>

ether 02:42:75:56:ea:47 txqueuelen 0 (Ethernet)

RX packets 7 bytes 402 (402.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 15 bytes 1292 (1.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.56.5 netmask 255.255.255.0 broadcast 192.168.56.255

inet6 fe80::20c:29ff:fe50:fd19 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:50:fd:19 txqueuelen 1000 (Ethernet)

RX packets 8588 bytes 720296 (703.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 4524 bytes 501823 (490.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 32 bytes 2592 (2.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 32 bytes 2592 (2.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Create an open-network container

[root@Docker-node1 ~]# docker run --name docker_host -it --network host busybox:latest

/ # ifconfig #the container sees all of the host's network interfaces

docker0 Link encap:Ethernet HWaddr 02:42:75:56:EA:47

inet addr:172.17.0.1 Bcast:172.17.255.255 Mask:255.255.0.0

inet6 addr: fe80::42:75ff:fe56:ea47/64 Scope:Link

UP BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:7 errors:0 dropped:0 overruns:0 frame:0

TX packets:15 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:402 (402.0 B) TX bytes:1292 (1.2 KiB)

eth0 Link encap:Ethernet HWaddr 00:0C:29:50:FD:19

inet addr:192.168.56.5 Bcast:192.168.56.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe50:fd19/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:9217 errors:0 dropped:0 overruns:0 frame:0

TX packets:4825 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:771216 (753.1 KiB) TX bytes:546933 (534.1 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:32 errors:0 dropped:0 overruns:0 frame:0

TX packets:32 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2592 (2.5 KiB) TX bytes:2592 (2.5 KiB)

/ # netstat -anplt #and all of the host's ports

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN -

tcp 0 0 192.168.56.5:22 192.168.56.1:49162 ESTABLISHED -

tcp 0 0 :::22 :::* LISTEN -

tcp 0 0 ::1:25 :::* LISTEN -

Network test

/ # mkdir /var/www/html

/ # echo '<h1>Welcome to docker_host!</h1>' > /var/www/html/index.html

/ # httpd -h /var/www/html/ -p 12345

/ # netstat -anplt

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN -

tcp 0 0 192.168.56.5:22 192.168.56.1:49162 ESTABLISHED -

tcp 0 0 :::22 :::* LISTEN -

tcp 0 0 :::12345 :::* LISTEN 11/httpd

tcp 0 0 ::1:25 :::* LISTEN -

#Web test: the page is reachable through any interface address plus the port

/ # wget -O - -q 127.0.0.1:12345

<h1>Welcome to docker_host!</h1>

/ # wget -O - -q 172.17.0.1:12345

<h1>Welcome to docker_host!</h1>

/ # wget -O - -q 192.168.56.5:12345

<h1>Welcome to docker_host!</h1>

Docker closed network

This network mode is of limited use and is rarely needed in practice:

[root@Docker-node1 ~]# docker run --name docker_none -it --network none busybox:latest

/ # ifconfig

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # echo '<h1>Welcome to Docker_none!</h1>' > /var/www/index.html

/ # httpd -h /var/www/ -p 9040

/ # netstat -tul

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 :::9040 :::* LISTEN

/ # wget -O - -q 127.0.0.1:9040

<h1>Welcome to Docker_none!</h1>

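Changing the default bridge subnet

By default Docker's bridge uses the 172.17.0.0/16 network. This can clash with a company's internal network. The author ran into exactly this: it made NAS mounts fail.

Properties of a custom subnet:

To change the bridge subnet, edit the /etc/docker/daemon.json configuration file.

The example below explains the bridge-subnet options only; it does not list everything that can go into the file.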

{

"bip": "10.20.0.1/16",

"fixed-cidr": "10.20.0.0/16",

"fixed-cidr-v6": "2001:db8::/64",

"mtu": 1500,

"default-gateway": "10.20.1.1",

"default-gateway-v6": "2001:db8:abcd::89",

"dns": ["10.20.1.2","10.20.1.3"]

}

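Option reference:

bip: the IP address and netmask of the docker0 bridge itself. Docker derives the docker0 network from it and assigns each started container an address from that network. Assignment is done by Docker's built-in IP address management; the effect resembles DHCP, but no DHCP protocol is involved.

fixed-cidr: restricts the IPv4 range from which containers are assigned addresses; it must be a subset of the bridge network given by bip

fixed-cidr-v6: the IPv6 subnet from which containers are assigned addresses (when IPv6 is enabled)

mtu: the maximum transmission unit (MTU)

default-gateway: the default gateway; each container receives it together with its address when it starts

default-gateway-v6: the IPv6 default gateway

dns: the DNS servers that containers receive automatically when they start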

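Note: the core option is bip (short for bridge IP), which sets the IP address of the docker0 bridge itself. Once bip is changed, the other values can be derived from this address.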
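The derivation from bip can be checked with a little integer arithmetic. The sketch below uses hypothetical helper names and handles plain IPv4 only. bip_info computes the network and broadcast address from a bip value. cidr_within checks whether a range such as fixed-cidr lies inside the bridge network.

```bash
#!/usr/bin/env bash
# Dotted IPv4 address -> 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> dotted IPv4 address.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Netmask for a prefix length, as an integer.
prefix_mask() {
  local len=$1
  echo $(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
}

# Hypothetical helper: describe the network implied by a bip value.
# The bip address itself is docker0's address, i.e. the containers' gateway.
bip_info() {
  local addr=${1%/*} len=${1#*/}
  local ip mask net bcast
  ip=$(ip_to_int "$addr")
  mask=$(prefix_mask "$len")
  net=$(( ip & mask ))
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))
  echo "network=$(int_to_ip "$net")/$len broadcast=$(int_to_ip "$bcast") gateway=$addr"
}

# Hypothetical helper: succeed if CIDR $1 lies inside CIDR $2.
cidr_within() {
  local in_net=${1%/*} in_len=${1#*/} out_net=${2%/*} out_len=${2#*/}
  local mask
  (( in_len >= out_len )) || return 1
  mask=$(prefix_mask "$out_len")
  (( ($(ip_to_int "$in_net") & mask) == ($(ip_to_int "$out_net") & mask) ))
}
```

bip_info 10.0.0.1/16 reports broadcast 10.0.255.255, which matches the docker0 output after the change below.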

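The configuration actually needed is shown below. Only the bip option has to be added; the other values are derived from the docker0 address in that network. Note that daemon.json must be plain JSON, without comments.

[root@Docker-node1 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["http://hub-mirror.c.163.com"],

"bip": "10.0.0.1/16"

}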

[root@Docker-node1 ~]# systemctl restart docker

[root@Docker-node1 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 10.0.0.1 netmask 255.255.0.0 broadcast 10.0.255.255

inet6 fe80::42:75ff:fe56:ea47 prefixlen 64 scopeid 0x20<link>

ether 02:42:75:56:ea:47 txqueuelen 0 (Ethernet)

RX packets 7 bytes 402 (402.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 15 bytes 1292 (1.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@Docker-node1 ~]# docker start busybox

busybox

[root@Docker-node1 ~]# docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' busybox

10.0.0.2

[root@Docker-node1 ~]# docker start busybox_network_bridge

busybox_network_bridge

[root@Docker-node1 ~]# docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' busybox_network_bridge

10.0.0.3

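Docker remote access

Listening on a TCP port

Docker follows a client/server model. By default the dockerd daemon listens only on the Unix socket /var/run/docker.sock. For remote connections it must also listen on a TCP socket, in addition to the Unix socket. To do so, add the following to /etc/docker/daemon.json.

A plain TCP socket has no authentication or encryption. Anyone who can reach the port gets full, root-equivalent control of the Docker host. Outside a lab, restrict access to the port or protect it with TLS.

If the systemd unit already starts dockerd with a -H option, dockerd refuses to start when hosts is also set in daemon.json. In that case, remove -H from the unit.

#Add hosts: listen on TCP port 8484 on all host addresses (0.0.0.0), and keep the original /var/run/docker.sock socket as well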

[root@Docker-node1 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["http://hub-mirror.c.163.com"],

"bip": "10.0.0.1/16",

"hosts": ["tcp://0.0.0.0:8484", "unix:///var/run/docker.sock"]

}

[root@Docker-node1 ~]# systemctl restart docker

[root@Docker-node1 ~]# ss -anplt | grep 8484

LISTEN 0 128 :::8484 :::* users:(("dockerd",pid=6248,fd=5))

Connecting to Docker remotely

Once the daemon listens on TCP port 8484, the Docker client on another machine can manage it remotely.

#Managed host:

[root@Docker-node1 ~]# hostname

Docker-node1

#Managing host:

[root@docker-node2 ~]# hostname

docker-node2

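#If the connection fails here, a firewall on Docker-node1 is probably blocking port 8484. The author flushed the rules with iptables -F. That also removes Docker's own filter rules until dockerd is restarted, so opening only port 8484 is the safer fix.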

[root@docker-node2 ~]# docker -H 192.168.56.5:8484 image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

redis latest 1babb1dde7e1 10 days ago 94.9MB

tomcat latest 05af71dd9251 12 days ago 463MB

nginx latest dbfc48660aeb 13 days ago 109MB

busybox latest 59788edf1f3e 3 weeks ago 1.15MB

[root@docker-node2 ~]# docker -H 192.168.56.5:8484 ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

caa65e325009 busybox:latest "sh" 42 minutes ago Exited (0) 34 minutes ago docker_none

c34eb8b3c761 busybox:latest "sh" About an hour ago Exited (137) 10 minutes ago docker_host

98056b3f5652 busybox:latest "sh" About an hour ago Exited (137) About an hour ago docker_node2

4a996ae891c0 busybox:latest "sh" About an hour ago Exited (137) About an hour ago docker_node1

b17d30fdf398 busybox:latest "sh" 2 hours ago Exited (137) 10 minutes ago busybox

fef9738edefc busybox:latest "sh" 2 hours ago Exited (0) 2 hours ago busybox_hosts

fcffbd38a4aa busybox:latest "sh" 2 hours ago Exited (0) 2 hours ago busybox_dns_search

1729959c758f busybox:latest "sh" 2 hours ago Exited (0) 2 hours ago busybox_dns

40c540863ddc busybox:latest "sh" 2 hours ago Exited (0) 2 hours ago busybox_hostname

de59791b605e busybox:latest "sh" 2 hours ago Exited (137) About an hour ago busybox_network_none

9f8a445ceb4b busybox:latest "sh" 2 hours ago Exited (137) About an hour ago busybox_network_host

651943132a06 busybox:latest "sh" 2 hours ago Exited (137) 10 minutes ago busybox_network_bridge

[root@docker-node2 ~]# docker -H 192.168.56.5:8484 start busybox

busybox

[root@docker-node2 ~]# docker -H 192.168.56.5:8484 ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b17d30fdf398 busybox:latest "sh" 2 hours ago Up 3 seconds busybox
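Passing -H on every command is repetitive. The Docker client also reads the DOCKER_HOST environment variable, for example export DOCKER_HOST=tcp://192.168.56.5:8484.

Alternatively, a small wrapper function can supply the address. rdocker, DOCKER_BIN and REMOTE_DOCKER are hypothetical names. DOCKER_BIN exists only so the wrapper can be tested without a Docker daemon.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: run a docker CLI command against the remote daemon.
# REMOTE_DOCKER defaults to the host:port used in this article;
# DOCKER_BIN (hypothetical, for testing) defaults to the real docker CLI.
rdocker() {
  local bin=${DOCKER_BIN:-docker}
  local addr=${REMOTE_DOCKER:-192.168.56.5:8484}
  "$bin" -H "tcp://$addr" "$@"
}
```

With the wrapper, rdocker ps -a is equivalent to docker -H tcp://192.168.56.5:8484 ps -a.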

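Four ways to publish container ports

Docker publishes container ports on the host with the -p option. If no protocol is specified, TCP is used.

1. -p <containerPort>: map the container port to a dynamic port on all host addresses

2. -p <ip>::<containerPort>: map the container port to a dynamic port on the specified host address

3. -p <hostPort>:<containerPort>: map the container port to the specified port on all host addresses

4. -p <ip>:<hostPort>:<containerPort>: map the container port to the specified port on the specified host address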
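To make the four forms concrete, the helper below (hypothetically named parse_publish) splits a -p value into host address, host port and container port. When no address is given it uses 0.0.0.0 (all addresses), and it marks an empty host port as dynamic, as in the list above. It is only a sketch: it handles IPv4 addresses and an optional /tcp or /udp suffix, not port ranges or IPv6.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split a `docker run -p` value into its parts.
parse_publish() {
  local spec=$1 proto=tcp ip=0.0.0.0 hport=dynamic cport
  local -a parts
  # Optional protocol suffix, e.g. 53:53/udp; TCP is the default.
  if [[ $spec == */* ]]; then
    proto=${spec##*/}
    spec=${spec%/*}
  fi
  # "a::b" splits into "a", "" and "b", so an empty host port is preserved.
  IFS=: read -r -a parts <<< "$spec"
  case ${#parts[@]} in
    1) cport=${parts[0]} ;;                                          # <containerPort>
    2) hport=${parts[0]}; cport=${parts[1]} ;;                       # <hostPort>:<containerPort>
    3) ip=${parts[0]}; hport=${parts[1]:-dynamic}; cport=${parts[2]} ;;  # <ip>:[hostPort]:<containerPort>
    *) echo "parse_publish: unsupported spec: $1" >&2; return 1 ;;
  esac
  echo "host_ip=$ip host_port=$hport container_port=$cport/$proto"
}
```

For example, parse_publish 192.168.56.5::80 reports host_ip=192.168.56.5 host_port=dynamic container_port=80/tcp, which corresponds to the second form.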

1. -p <containerPort>: map the container port to a dynamic port on all host addresses

[root@Docker-node1 ~]# docker run --name nginx_node1 -itd -p 80 nginx:latest

c629d4de3ace27252e5444ae0b1bcf8a1cea8e9a936689a05a5a3b8e86f4cbc8

[root@Docker-node1 ~]# docker port nginx_node1

80/tcp -> 0.0.0.0:32768 #port 80 of nginx_node1 is mapped to port 32768 on all host interfaces

[root@Docker-node1 ~]# docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' nginx_node1

10.0.0.3

[root@Docker-node1 ~]# iptables -t nat -vnL | grep 10.0.0.3

0 0 MASQUERADE tcp -- * * 10.0.0.3 10.0.0.3 tcp dpt:80

0 0 DNAT tcp -- !docker0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:32768 to:10.0.0.3:80

Access test:

From docker-node2, access the mapped port on the Docker host:

[root@docker-node2 ~]# curl 192.168.56.5:32768 -I

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:12:50 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

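Note: this form maps the container port to a dynamic port on all host interfaces, so port 32768 should be reachable on every host address. Test:

On the Docker host itself, access port 32768 on the other two addresses: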

[root@Docker-node1 ~]# curl 10.0.0.1:32768 -I

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:15:10 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

[root@Docker-node1 ~]# curl 127.0.0.1:32768 -I

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:15:25 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

2. -p <ip>::<containerPort>: map the container port to a dynamic port on the specified host address

[root@Docker-node1 ~]# docker run --name nginx_node2 -itd -p 192.168.56.5::80 nginx:latest

14f33d431db5d11854be7883bcccb15f0a7fdc4ca51e02e6f136ff4c2f44e798

[root@Docker-node1 ~]# docker port nginx_node2

80/tcp -> 192.168.56.5:32772

[root@Docker-node1 ~]# docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' nginx_node2

10.0.0.4

[root@Docker-node1 ~]# iptables -t nat -vnL | grep 10.0.0.4

0 0 MASQUERADE tcp -- * * 10.0.0.4 10.0.0.4 tcp dpt:80

0 0 DNAT tcp -- !docker0 * 0.0.0.0/0 192.168.56.5 tcp dpt:32772 to:10.0.0.4:80

Access test:

[root@Docker-node1 ~]# curl http://192.168.56.5:32772 -I #access the mapped port on the published address

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:29:50 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

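Note: this form maps the container port to a dynamic port on the specified interface only. Only that address accepts connections on port 32772; the other addresses refuse them, as the test shows: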

[root@Docker-node1 ~]# curl http://10.0.0.1:32772 -I

curl: (7) Failed connect to 10.0.0.1:32772; Connection refused

[root@Docker-node1 ~]# curl http://127.0.0.1:32772 -I

curl: (7) Failed connect to 127.0.0.1:32772; Connection refused

[root@Docker-node1 ~]# curl http://10.0.0.4:80 -I #the container's own port is still reachable directly

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:30:17 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

3. -p <hostPort>:<containerPort>: map the container port to the specified host port

[root@Docker-node1 ~]# docker run --name nginx_node3 -itd -p 8888:80 nginx:latest

71a3314e4ad121cc34f7dc261e3ee3bdb8ccec18e3d49b7a3bb55aba241ab977

[root@Docker-node1 ~]# docker port nginx_node3

80/tcp -> 0.0.0.0:8888 #port 80 of the container is mapped to port 8888 on all host addresses

[root@Docker-node1 ~]# docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' nginx_node3

10.0.0.5

[root@Docker-node1 ~]# iptables -t nat -vnL | grep 10.0.0.5

0 0 MASQUERADE tcp -- * * 10.0.0.5 10.0.0.5 tcp dpt:80

0 0 DNAT tcp -- !docker0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:8888 to:10.0.0.5:80

Access test on all host addresses:

[root@Docker-node1 ~]# curl http://127.0.0.1:8888 -I

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:35:41 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

[root@Docker-node1 ~]# curl http://10.0.0.1:8888 -I

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:35:48 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

[root@Docker-node1 ~]# curl http://192.168.56.5:8888 -I

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:35:54 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

4. -p <ip>:<hostPort>:<containerPort>: map the container port to the specified port <hostPort> on the specified host address

[root@Docker-node1 ~]# docker run --name nginx_node4 -itd -p 192.168.56.5:8080:80 nginx:latest

00fd8fd1f7eb83ab37bc4cf71a9373da169d3ec4f31ca20318756f4538eb5b30

[root@Docker-node1 ~]# docker port nginx_node4

80/tcp -> 192.168.56.5:8080

[root@Docker-node1 ~]# docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' nginx_node4

10.0.0.6

[root@Docker-node1 ~]# iptables -t nat -vnL | grep 10.0.0.6

0 0 MASQUERADE tcp -- * * 10.0.0.6 10.0.0.6 tcp dpt:80

0 0 DNAT tcp -- !docker0 * 0.0.0.0/0 192.168.56.5 tcp dpt:8080 to:10.0.0.6:80

Access test:

[root@Docker-node1 ~]# curl http://192.168.56.5:8080 -I

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:40:10 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

[root@Docker-node1 ~]# curl http://127.0.0.1:8080 -I

curl: (7) Failed connect to 127.0.0.1:8080; Connection refused

[root@Docker-node1 ~]# curl http://10.0.0.1:8080 -I

curl: (7) Failed connect to 10.0.0.1:8080; Connection refused

[root@Docker-node1 ~]# curl http://10.0.0.6:80 -I

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Mon, 29 Oct 2018 12:40:32 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

iptables rules

The following iptables rules were generated automatically when ports were published (DNAT) with docker run -p:

[root@Docker-node1 ~]# iptables -t nat -vnL

Chain PREROUTING (policy ACCEPT 2 packets, 156 bytes)

pkts bytes target prot opt in out source destination

20 1224 DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 2 packets, 156 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 4 packets, 240 bytes)

pkts bytes target prot opt in out source destination

11 660 DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT 4 packets, 240 bytes)

pkts bytes target prot opt in out source destination

2 120 MASQUERADE all -- * !docker0 10.0.0.0/16 0.0.0.0/0

0 0 MASQUERADE tcp -- * * 10.0.0.3 10.0.0.3 tcp dpt:80

0 0 MASQUERADE tcp -- * * 10.0.0.4 10.0.0.4 tcp dpt:80

0 0 MASQUERADE tcp -- * * 10.0.0.5 10.0.0.5 tcp dpt:80

0 0 MASQUERADE tcp -- * * 10.0.0.6 10.0.0.6 tcp dpt:80

Chain DOCKER (2 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

3 180 DNAT tcp -- !docker0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:32768 to:10.0.0.3:80

2 120 DNAT tcp -- !docker0 * 0.0.0.0/0 192.168.56.5 tcp dpt:32772 to:10.0.0.4:80

2 120 DNAT tcp -- !docker0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:8888 to:10.0.0.5:80

1 60 DNAT tcp -- !docker0 * 0.0.0.0/0 192.168.56.5 tcp dpt:8080 to:10.0.0.6:80
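Each DNAT rule in the DOCKER chain encodes one published port. The sketch below is a hypothetical helper for reading iptables -t nat -vnL DOCKER output. It turns such lines into host-address:port -> container-address:port pairs. It assumes the column layout shown above and ignores all other rules.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarise Docker DNAT rules read from stdin as
# "<host-ip>:<host-port>/<proto> -> <container-ip>:<port>".
dnat_map() {
  local line
  # Captures: 1 = destination address, 2 = optional /prefix, 3 = protocol,
  #           4 = destination port, 5 = DNAT target address:port.
  local re='DNAT.*[[:space:]]([0-9.]+)(/[0-9]+)?[[:space:]]+(tcp|udp)[[:space:]]+dpt:([0-9]+)[[:space:]]+to:([0-9.]+:[0-9]+)'
  while IFS= read -r line; do
    if [[ $line =~ $re ]]; then
      printf '%s:%s/%s -> %s\n' "${BASH_REMATCH[1]}" "${BASH_REMATCH[4]}" \
        "${BASH_REMATCH[3]}" "${BASH_REMATCH[5]}"
    fi
  done
}
```

Piping iptables -t nat -vnL DOCKER into dnat_map should produce one line per published port. For the rules above these include 0.0.0.0:32768/tcp -> 10.0.0.3:80 and 192.168.56.5:8080/tcp -> 10.0.0.6:80. This mirrors the output of docker port.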
