Android Audio/Video Study Notes: Recording MP4 Files with MediaCodec

Overview

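Goals of this post

- Hardware-encode and hardware-decode audio

- Hardware-encode and hardware-decode video

- Record audio and video into an MP4 file

This post is just my own notes, so I don't forget this later.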

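Introduction to MediaCodec

Android 4.1 (API 16) introduced MediaCodec for accessing the device's codecs. It gives access to the hardware codecs (software codecs are exposed through it as well), so it is usually faster than software decoding.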

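MediaCodec workflow

The Clients on either side of the figure represent the input side and the output side.

- On the input side, the client requests an empty input ByteBuffer from MediaCodec, fills it with data, and passes it back to MediaCodec for processing

- MediaCodec writes the processed data into an empty output ByteBuffer

- The client gets the output ByteBuffer from MediaCodec, consumes its data, and, once it is done with the buffer, releases it back to MediaCodec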

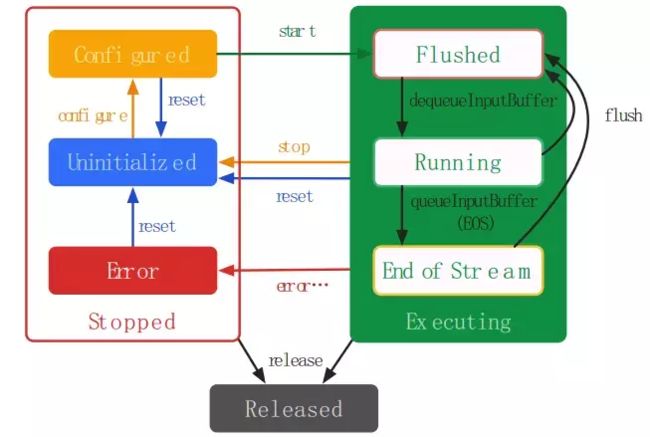

MediaCodec lifecycle

A MediaCodec has three states: Stopped, Executing, and Released.

Stopped has three sub-states: Uninitialized, Configured, and Error.

Executing has three sub-states: Flushed, Running, and End-of-Stream.

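The three sub-states of Stopped

- Uninitialized: a newly created MediaCodec is in the Uninitialized state. Calling reset() in any state returns it to Uninitialized.

- Configured: calling configure(…) moves the codec to the Configured state.

- Error: the codec enters the Error state when it encounters an error.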

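The three sub-states of Executing

- Flushed: right after start() the codec is in the Flushed state and owns all of its buffers. Calling flush() at any point during Executing returns it to Flushed.

- Running: as soon as the first input buffer is dequeued, the codec moves to Running, where it spends most of its life. Calling stop() returns it to Uninitialized.

- End-of-Stream: once an input buffer carrying the end-of-stream flag is queued, the codec enters End-of-Stream. It accepts no further input buffers but keeps producing output buffers until the end-of-stream flag appears on the output.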

Released

When you are done with the MediaCodec, call release() to free its resources; it then enters the final Released state.
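To make the transitions above concrete, here is a small plain-Java model of the lifecycle. It is not Android code: CodecLifecycle and its methods are hypothetical names that only mirror the state changes described in this section (configure, start, first dequeued input, end-of-stream, flush, stop, reset, release), throwing IllegalStateException for a call made in the wrong state, roughly as the real MediaCodec does.

```java
import java.util.EnumSet;

/** Hypothetical model of the MediaCodec lifecycle; not an Android API. */
final class CodecLifecycle {
    enum State { UNINITIALIZED, CONFIGURED, ERROR, FLUSHED, RUNNING, END_OF_STREAM, RELEASED }

    private static final EnumSet<State> EXECUTING =
            EnumSet.of(State.FLUSHED, State.RUNNING, State.END_OF_STREAM);

    private State state = State.UNINITIALIZED; // a freshly created codec

    State state() { return state; }

    void configure() { require(EnumSet.of(State.UNINITIALIZED)); state = State.CONFIGURED; }

    void start() { require(EnumSet.of(State.CONFIGURED)); state = State.FLUSHED; }

    // Dequeuing the first input buffer moves Flushed -> Running.
    void dequeueInput() { require(EnumSet.of(State.FLUSHED, State.RUNNING)); state = State.RUNNING; }

    // Queuing an input buffer flagged as end-of-stream.
    void queueEndOfStream() { require(EnumSet.of(State.RUNNING)); state = State.END_OF_STREAM; }

    // flush() is allowed anywhere inside Executing and goes back to Flushed.
    void flush() { require(EXECUTING); state = State.FLUSHED; }

    // stop() leaves Executing and returns to Uninitialized.
    void stop() { require(EXECUTING); state = State.UNINITIALIZED; }

    // reset() works from any state except Released.
    void reset() { require(EnumSet.complementOf(EnumSet.of(State.RELEASED))); state = State.UNINITIALIZED; }

    void release() { state = State.RELEASED; }

    private void require(EnumSet<State> allowed) {
        if (!allowed.contains(state)) {
            throw new IllegalStateException("illegal call in state " + state);
        }
    }
}
```

A typical recording session walks configure, start, dequeueInput, queueEndOfStream, stop, release; calling start() again without configure() is rejected.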

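MediaCodec API overview

createDecoderByType/createEncoderByType

Creates a MediaCodec for a specific MIME type, e.g. video/avc.

createByCodecName

If you know the exact component name (e.g. OMX.google.mp3.decoder), creates the MediaCodec by that name. Component names can be obtained from the MediaCodecList class.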

configure

```java
public void configure(MediaFormat format, Surface surface, MediaCrypto crypto, int flags);
```

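- MediaFormat format: the format of the data

- Surface surface: the surface the decoder renders its output to. Pass null if the codec does not produce raw video output, or if you want to read the decoder's output from ByteBuffers

- MediaCrypto crypto: a crypto object used to securely decrypt the media data; pass null for non-secure codecs

- int flags: pass CONFIGURE_FLAG_ENCODE when the component is an encoder, otherwise 0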

MediaFormat

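Encapsulates the information describing the format of media data, plus optional feature metadata.

The media format is stored as key/value pairs: keys are strings, and values can be Integer, Long, Float, String, or ByteBuffer.

Feature metadata is specified as string/boolean pairs.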

dequeueInputBuffer

```java
public final int dequeueInputBuffer(long timeoutUs)
```

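Returns the index of an input buffer to be filled with valid data, or -1 if no buffer is currently available.

- long timeoutUs: how long to wait for a buffer to become available

- timeoutUs == 0: return immediately

- timeoutUs < 0: wait indefinitely for a buffer

- timeoutUs > 0: wait up to timeoutUs microseconds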
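The three timeoutUs cases map naturally onto a blocking queue. The sketch below is only an analogy that runs on a plain JVM: FreeBufferPool is a hypothetical class holding free buffer indices, and -1 mirrors the value MediaCodec returns when no buffer is available (INFO_TRY_AGAIN_LATER).

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

/** Hypothetical analogue of dequeueInputBuffer's timeout rules; not an Android API. */
final class FreeBufferPool {
    static final int INFO_TRY_AGAIN_LATER = -1; // same value MediaCodec uses

    private final BlockingQueue<Integer> free = new LinkedBlockingQueue<>();

    /** The codec side hands a buffer index back once the buffer is free again. */
    void giveBack(int index) {
        free.add(index);
    }

    int dequeue(long timeoutUs) {
        try {
            Integer index;
            if (timeoutUs == 0) {
                index = free.poll();                                 // return immediately
            } else if (timeoutUs < 0) {
                index = free.take();                                 // wait indefinitely
            } else {
                index = free.poll(timeoutUs, TimeUnit.MICROSECONDS); // wait up to timeoutUs
            }
            return index == null ? INFO_TRY_AGAIN_LATER : index;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();                      // keep the interrupt visible
            return INFO_TRY_AGAIN_LATER;
        }
    }
}
```

In a recording loop, 0 keeps the thread responsive but spins when the codec is busy; a small positive timeout (the code below uses 10000 µs in places) is a common compromise.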

queueInputBuffer

Submits a filled input buffer to MediaCodec.

```java
public native final void queueInputBuffer(int index, int offset, int size, long presentationTimeUs, int flags)
```

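- int index: the index returned by dequeueInputBuffer

- int offset: the byte offset in the buffer where the input data starts

- int size: the number of valid input bytes

- long presentationTimeUs: the presentation timestamp (PTS) of this buffer, in microseconds

- int flags: I just pass 0 for ordinary data. Other values are BUFFER_FLAG_END_OF_STREAM, which marks the last input buffer, and BUFFER_FLAG_CODEC_CONFIG, which marks a buffer holding codec-specific data instead of media data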
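Since flags is a bit mask, it is combined with | and tested with &, the same pattern applied to bufferInfo.flags in the code later on. A minimal sketch: the constant values below are those of the MediaCodec BUFFER_FLAG_* constants, copied so the example runs without Android, and BufferFlags itself is a hypothetical helper.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper for decoding a MediaCodec flags bit mask on a plain JVM. */
final class BufferFlags {
    // Same values as the android.media.MediaCodec constants of the same name
    static final int BUFFER_FLAG_KEY_FRAME = 1;
    static final int BUFFER_FLAG_CODEC_CONFIG = 2;
    static final int BUFFER_FLAG_END_OF_STREAM = 4;

    /** Flags are individual bits, so test one bit with & rather than comparing with ==. */
    static boolean has(int flags, int flag) {
        return (flags & flag) != 0;
    }

    static List<String> describe(int flags) {
        List<String> names = new ArrayList<>();
        if (has(flags, BUFFER_FLAG_KEY_FRAME)) names.add("KEY_FRAME");
        if (has(flags, BUFFER_FLAG_CODEC_CONFIG)) names.add("CODEC_CONFIG");
        if (has(flags, BUFFER_FLAG_END_OF_STREAM)) names.add("END_OF_STREAM");
        return names;
    }
}
```

For example, a last buffer that is also a key frame carries 1 | 4 = 5, which a check like flags == BUFFER_FLAG_END_OF_STREAM would miss.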

dequeueOutputBuffer

Gets an output buffer from MediaCodec.

```java
public final int dequeueOutputBuffer(@NonNull BufferInfo info, long timeoutUs)
```

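Return value

- INFO_TRY_AGAIN_LATER: a non-negative timeoutUs was given and the call timed out

- INFO_OUTPUT_FORMAT_CHANGED: the output format has changed, and subsequent data follows the new format

- A value >= 0: the index of a filled output buffer

Parameters:

- BufferInfo info: receives the metadata of the output buffer (offset, size, presentationTimeUs, flags)

- long timeoutUs: the timeout, with the same meaning as for dequeueInputBuffer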
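A common mistake is to treat only positive return values as buffers, although index 0 is a valid output buffer. The sketch below classifies a dequeueOutputBuffer result using the values of the MediaCodec INFO_* constants (copied so it runs on a plain JVM). OutputResult is a hypothetical helper, and INFO_OUTPUT_BUFFERS_CHANGED only matters below API 21 when reading buffers through getOutputBuffers().

```java
/** Hypothetical helper that explains a dequeueOutputBuffer() return value. */
final class OutputResult {
    // Values of the android.media.MediaCodec constants of the same name
    static final int INFO_TRY_AGAIN_LATER = -1;
    static final int INFO_OUTPUT_FORMAT_CHANGED = -2;
    static final int INFO_OUTPUT_BUFFERS_CHANGED = -3; // deprecated since API 21

    static String describe(int result) {
        if (result >= 0) {
            return "buffer " + result; // includes index 0
        }
        switch (result) {
            case INFO_TRY_AGAIN_LATER:
                return "timed out, try again";
            case INFO_OUTPUT_FORMAT_CHANGED:
                return "format changed, call getOutputFormat()";
            case INFO_OUTPUT_BUFFERS_CHANGED:
                return "buffers changed, refetch getOutputBuffers()";
            default:
                return "unknown " + result;
        }
    }
}
```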

releaseOutputBuffer

Use this method to return an output buffer to the codec, or to render it to the surface.

```java
public void releaseOutputBuffer(int index, boolean render)
```

boolean render: if the codec was configured with a valid surface, passing true renders the buffer's data to that surface; once the surface no longer needs the buffer, it is returned to the codec.

Implementation

Straight to the code.

Hardware-encoding NV21 video frames to H.264

```java
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.media.MediaMuxer;
import android.util.Log;

import java.io.IOException;
import java.nio.ByteBuffer;
import java.util.concurrent.ArrayBlockingQueue;

public class CameraToH264 {

    public ArrayBlockingQueue<byte[]> yuv420Queue = new ArrayBlockingQueue<>(10);
    private volatile boolean isRunning;
    private int width;
    private int height;
    private MediaCodec mediaCodec;
    private MediaMuxer mediaMuxer;
    private int mVideoTrack = -1;
    private long nanoTime;

    public void init(int width, int height) {
        nanoTime = System.nanoTime();
        this.width = width;
        this.height = height;
        MediaFormat videoFormat = MediaFormat.createVideoFormat("video/avc", width, height);
        // COLOR_FormatYUV420SemiPlanar is NV12, so the camera's NV21 frames are converted first
        videoFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420SemiPlanar);
        videoFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 30);
        videoFormat.setInteger(MediaFormat.KEY_BIT_RATE, width * height * 5);
        videoFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1); // one key frame per second
        try {
            mediaCodec = MediaCodec.createEncoderByType("video/avc");
            mediaCodec.configure(videoFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
            mediaCodec.start();
            mediaMuxer = new MediaMuxer("/sdcard/aaapcm/camer1.mp4", MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Called from the camera preview callback with one NV21 frame
    public void putData(byte[] buffer) {
        while (!yuv420Queue.offer(buffer)) {
            yuv420Queue.poll(); // queue full: drop the oldest frame
        }
    }

    public void startEncoder() {
        new Thread(new Runnable() {
            @Override
            public void run() {
                isRunning = true;
                byte[] nv12 = new byte[width * height * 3 / 2];
                MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
                while (isRunning) {
                    byte[] input = yuv420Queue.poll();
                    if (input == null) {
                        continue;
                    }
                    // The conversion is required, otherwise the recording plays back as a green screen
                    NV21ToNV12(input, nv12, width, height);
                    int inputBufferIndex = mediaCodec.dequeueInputBuffer(0);
                    if (inputBufferIndex >= 0) { // 0 is a valid index
                        ByteBuffer inputBuffer = mediaCodec.getInputBuffer(inputBufferIndex); // API 21+
                        inputBuffer.clear();
                        inputBuffer.put(nv12);
                        mediaCodec.queueInputBuffer(inputBufferIndex, 0, nv12.length, (System.nanoTime() - nanoTime) / 1000, 0);
                    }
                    int outputBufferIndex = mediaCodec.dequeueOutputBuffer(bufferInfo, 0);
                    while (outputBufferIndex != MediaCodec.INFO_TRY_AGAIN_LATER) {
                        if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                            // The output format (including SPS/PPS) is only known now
                            mVideoTrack = mediaMuxer.addTrack(mediaCodec.getOutputFormat());
                            mediaMuxer.start();
                            Log.d("mmm", "format changed, muxer started");
                        } else if (outputBufferIndex >= 0) {
                            ByteBuffer outputBuffer = mediaCodec.getOutputBuffer(outputBufferIndex);
                            // Codec config (SPS/PPS) already reached the muxer through the output format
                            boolean isConfig = (bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0;
                            if (mVideoTrack >= 0 && !isConfig && bufferInfo.size > 0) {
                                mediaMuxer.writeSampleData(mVideoTrack, outputBuffer, bufferInfo);
                            }
                            mediaCodec.releaseOutputBuffer(outputBufferIndex, false);
                        }
                        outputBufferIndex = mediaCodec.dequeueOutputBuffer(bufferInfo, 0);
                    }
                }
                Log.d("mmm", "stop writing");
                if (mVideoTrack >= 0) {
                    mediaMuxer.stop(); // stop() throws if the muxer was never started
                }
                mediaMuxer.release();
                mediaCodec.stop();
                mediaCodec.release();
            }
        }).start();
    }

    public void stop() {
        isRunning = false;
    }

    // NV21 = Y plane + interleaved V/U; NV12 = Y plane + interleaved U/V
    private void NV21ToNV12(byte[] nv21, byte[] nv12, int width, int height) {
        if (nv21 == null || nv12 == null) return;
        int frameSize = width * height;
        System.arraycopy(nv21, 0, nv12, 0, frameSize); // Y is identical
        for (int i = frameSize; i < frameSize * 3 / 2; i += 2) {
            nv12[i] = nv21[i + 1];     // U
            nv12[i + 1] = nv21[i];     // V
        }
    }
}
```

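- putData: receives video frames from outside; here they come from the camera, in NV21 format

- init: sets up the MediaFormat with the encoder's key parameters (frame rate, bit rate, etc.), creates the MediaCodec encoder, and creates the MediaMuxer that writes the MP4 file

- NV21ToNV12: converts NV21 to NV12, the layout the encoder expects for COLOR_FormatYUV420SemiPlanar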
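The green-screen comment comes down to chroma order: NV21 stores the chroma plane as V,U pairs, while COLOR_FormatYUV420SemiPlanar normally means NV12, which stores U,V pairs. Below is a standalone sketch of the conversion that can be checked on a tiny frame without a device; YuvConvert is a hypothetical name, and it assumes even width and height.

```java
/** Hypothetical standalone NV21 -> NV12 conversion (assumes even width and height). */
final class YuvConvert {
    static byte[] nv21ToNv12(byte[] nv21, int width, int height) {
        int frameSize = width * height;              // size of the Y plane
        byte[] nv12 = new byte[frameSize * 3 / 2];   // Y + quarter-size U + quarter-size V
        System.arraycopy(nv21, 0, nv12, 0, frameSize);
        for (int i = frameSize; i < nv12.length; i += 2) {
            nv12[i] = nv21[i + 1]; // U comes second in NV21, first in NV12
            nv12[i + 1] = nv21[i]; // V comes first in NV21, second in NV12
        }
        return nv12;
    }
}
```

For a 2x2 frame, the 4 luma bytes are copied unchanged and the single chroma pair {V, U} becomes {U, V}.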

Encoding PCM audio to AAC

```java
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.media.MediaMuxer;
import android.util.Log;

import java.io.IOException;
import java.nio.ByteBuffer;
import java.util.Arrays;

public class PcmToAAC {

    private MediaCodec encoder;
    private MediaMuxer mediaMuxer;
    private volatile boolean isRun;
    private int mAudioTrack = -1; // -1 until the track has been added to the muxer
    private long prevOutputPTSUs;
    private Queue queue;          // the circular byte queue defined later in this post

    public void init() {
        queue = new Queue();
        queue.init(1024 * 100);
        try {
            // 16 kHz, mono
            MediaFormat audioFormat = MediaFormat.createAudioFormat(MediaFormat.MIMETYPE_AUDIO_AAC, 16000, 1);
            audioFormat.setInteger(MediaFormat.KEY_BIT_RATE, 64000);
            audioFormat.setInteger(MediaFormat.KEY_AAC_PROFILE, MediaCodecInfo.CodecProfileLevel.AACObjectLC);
            encoder = MediaCodec.createEncoderByType(MediaFormat.MIMETYPE_AUDIO_AAC);
            encoder.configure(audioFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
            encoder.start();
            // MUXER_OUTPUT_MPEG_4 writes an MP4 (M4A) container, not a raw .aac stream
            mediaMuxer = new MediaMuxer("/sdcard/pcm.m4a", MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
            Log.d("mmm", "aac init");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public void putData(byte[] buffer, int len) {
        queue.addAll(Arrays.copyOf(buffer, len)); // only the first len bytes are valid
    }

    public void start() {
        isRun = true;
        Log.d("mmm", "aac start");
        new Thread(new Runnable() {
            @Override
            public void run() {
                byte[] bytes = new byte[640]; // 20 ms of 16 kHz mono 16-bit PCM
                MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
                while (isRun) {
                    if (queue.getAll(bytes, bytes.length) < 0) {
                        try {
                            Thread.sleep(50);
                        } catch (InterruptedException e) {
                            e.printStackTrace();
                        }
                        continue; // not enough PCM yet: never encode a stale buffer
                    }
                    int inputBufferIndex = encoder.dequeueInputBuffer(0);
                    if (inputBufferIndex >= 0) {
                        ByteBuffer inputBuffer = encoder.getInputBuffer(inputBufferIndex);
                        inputBuffer.clear();
                        inputBuffer.put(bytes);
                        encoder.queueInputBuffer(inputBufferIndex, 0, bytes.length, getPTSUs(), 0);
                    }
                    int outputBufferIndex = encoder.dequeueOutputBuffer(bufferInfo, 0);
                    while (outputBufferIndex != MediaCodec.INFO_TRY_AGAIN_LATER) {
                        if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                            mAudioTrack = mediaMuxer.addTrack(encoder.getOutputFormat());
                            mediaMuxer.start();
                            Log.d("mmm", "format changed, muxer started");
                        } else if (outputBufferIndex >= 0) {
                            // The codec config (AudioSpecificConfig) is already part of the output format
                            if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
                                bufferInfo.size = 0;
                            }
                            if (mAudioTrack >= 0 && bufferInfo.size > 0) {
                                bufferInfo.presentationTimeUs = getPTSUs();
                                ByteBuffer outputBuffer = encoder.getOutputBuffer(outputBufferIndex);
                                mediaMuxer.writeSampleData(mAudioTrack, outputBuffer, bufferInfo);
                                prevOutputPTSUs = bufferInfo.presentationTimeUs;
                            }
                            encoder.releaseOutputBuffer(outputBufferIndex, false);
                        }
                        outputBufferIndex = encoder.dequeueOutputBuffer(bufferInfo, 0);
                    }
                }
                Log.d("mmm", "aac stop");
                encoder.stop();
                encoder.release();
                if (mAudioTrack >= 0) {
                    mediaMuxer.stop(); // throws if the muxer was never started
                }
                mediaMuxer.release();
            }
        }).start();
    }

    public void stop() {
        isRun = false;
    }

    // presentationTimeUs must not go backwards, otherwise the muxer fails to write
    private long getPTSUs() {
        return Math.max(System.nanoTime() / 1000L, prevOutputPTSUs);
    }
}
```

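- putData: receives PCM audio from outside; here the PCM comes from AudioRecord

- init: sets up the MediaFormat with the encoder's key parameters (channel count, sample rate, bit rate), creates the MediaCodec encoder, and creates the MediaMuxer, which writes the AAC track into an MP4 (M4A) container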
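The code above stamps each buffer with the wall clock (System.nanoTime()). An alternative, sketched here under the assumption of 16-bit PCM, is to derive presentationTimeUs from how many bytes have already been fed to the encoder, which is monotonic by construction and does not drift with thread scheduling. PcmClock is a hypothetical helper; with 16 kHz mono 16-bit audio, each 640-byte chunk is 320 samples, i.e. 20 ms.

```java
/** Hypothetical helper: presentationTimeUs derived from the amount of PCM already queued. */
final class PcmClock {
    private final int sampleRate;
    private final int bytesPerFrame; // channels * bytes per sample
    private long totalBytes;

    PcmClock(int sampleRate, int channels, int bytesPerSample) {
        this.sampleRate = sampleRate;
        this.bytesPerFrame = channels * bytesPerSample;
    }

    /** Returns the PTS of the chunk about to be queued, then advances past it. */
    long nextPtsUs(int chunkBytes) {
        long framesSoFar = totalBytes / bytesPerFrame;
        totalBytes += chunkBytes;
        return framesSoFar * 1_000_000L / sampleRate;
    }
}
```

In the encoder loop, getPTSUs() would be replaced by clock.nextPtsUs(bytes.length) when calling queueInputBuffer.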

Capturing audio and video and muxing them into an MP4 file

```java
import android.media.MediaCodec;
import android.media.MediaFormat;
import android.util.Log;

import java.io.IOException;
import java.nio.ByteBuffer;

public class AudioThread extends Thread {

    private static final int TIMEOUT_USEC = 10000;
    private static final String MIME_TYPE = "audio/mp4a-latm"; // AAC
    private static final int SAMPLE_RATE = 16000;
    private static final int BIT_RATE = 64000;

    private MediaCodec mMediaCodec;
    private final Queue queue;
    private volatile boolean isRun;
    private long prevOutputPTSUs;
    private final MuxerThread muxerThread;

    public AudioThread(MuxerThread muxerThread) {
        Log.d("mmm", "AudioThread");
        this.muxerThread = muxerThread;
        queue = new Queue();
        queue.init(1024 * 100);
        prepare();
    }

    private void prepare() {
        MediaFormat audioFormat = MediaFormat.createAudioFormat(MIME_TYPE, SAMPLE_RATE, 1);
        audioFormat.setInteger(MediaFormat.KEY_BIT_RATE, BIT_RATE);
        try {
            mMediaCodec = MediaCodec.createEncoderByType(MIME_TYPE);
            mMediaCodec.configure(audioFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
            mMediaCodec.start();
            Log.d("mmm", "prepare");
        } catch (IOException e) {
            e.printStackTrace();
        }
        isRun = true;
    }

    public void addAudioData(byte[] data) {
        if (!isRun) return;
        queue.addAll(data);
    }

    public void audioStop() {
        isRun = false;
    }

    @Override
    public void run() {
        byte[] bytes = new byte[640];
        MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
        while (isRun) {
            if (queue.getAll(bytes, bytes.length) < 0) {
                try {
                    Thread.sleep(50);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
                continue; // not enough PCM yet
            }
            int inputBufferIndex = mMediaCodec.dequeueInputBuffer(TIMEOUT_USEC);
            if (inputBufferIndex >= 0) {
                ByteBuffer inputBuffer = mMediaCodec.getInputBuffer(inputBufferIndex);
                inputBuffer.clear();
                inputBuffer.put(bytes);
                mMediaCodec.queueInputBuffer(inputBufferIndex, 0, bytes.length, getPTSUs(), 0);
            }
            int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_USEC);
            while (outputBufferIndex != MediaCodec.INFO_TRY_AGAIN_LATER) {
                if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                    MediaFormat format = mMediaCodec.getOutputFormat();
                    Log.e("mmm", "adding audio track " + format);
                    muxerThread.addTrackIndex(MuxerThread.TRACK_AUDIO, format);
                } else if (outputBufferIndex >= 0) {
                    ByteBuffer encodedData = mMediaCodec.getOutputBuffer(outputBufferIndex);
                    if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
                        bufferInfo.size = 0; // config data already travels in the output format
                    }
                    if (bufferInfo.size != 0 && muxerThread.isStart()) {
                        bufferInfo.presentationTimeUs = getPTSUs();
                        // encodedData goes back to the codec right below, so MuxerData must keep its own copy
                        muxerThread.addMuxerData(new MuxerData(MuxerThread.TRACK_AUDIO, encodedData, bufferInfo));
                        prevOutputPTSUs = bufferInfo.presentationTimeUs;
                    }
                    mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
                }
                outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_USEC);
            }
        }
        mMediaCodec.stop();
        mMediaCodec.release();
        Log.d("mmm", "audio codec released");
    }

    // presentationTimeUs must be monotonic, otherwise the muxer fails to write
    private long getPTSUs() {
        return Math.max(System.nanoTime() / 1000L, prevOutputPTSUs);
    }
}
```

```java
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.util.Log;

import java.io.IOException;
import java.nio.ByteBuffer;
import java.util.Vector;

public class VideoThread extends Thread {

    private final MuxerThread muxerThread;
    private final int mWidth;
    private final int mHeight;
    public static final int IMAGE_HEIGHT = 1080;
    public static final int IMAGE_WIDTH = 1920;

    // Encoder parameters
    private static final String MIME_TYPE = "video/avc"; // H.264 Advanced Video Coding
    private static final int FRAME_RATE = 25;             // frames per second
    private static final int IFRAME_INTERVAL = 10;        // key-frame interval (GOP) in seconds
    private static final int TIMEOUT_USEC = 10000;        // dequeue timeout in microseconds
    private static final int COMPRESS_RATIO = 256;
    // 1920 * 1080 * 3 * 8 * 25 / 256 = 4,860,000 bit/s
    private static final int BIT_RATE = IMAGE_HEIGHT * IMAGE_WIDTH * 3 * 8 * FRAME_RATE / COMPRESS_RATIO;

    private final Vector<byte[]> frameBytes;
    private final byte[] mFrameData;
    private MediaCodec mMediaCodec;
    private volatile boolean isRun;

    public VideoThread(int width, int height, MuxerThread muxerThread) {
        Log.d("mmm", "VideoThread");
        this.muxerThread = muxerThread;
        this.mWidth = width;
        this.mHeight = height;
        mFrameData = new byte[width * height * 3 / 2];
        frameBytes = new Vector<>();
        prepare();
    }

    private void prepare() {
        MediaFormat mediaFormat = MediaFormat.createVideoFormat(MIME_TYPE, mWidth, mHeight);
        mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, BIT_RATE);
        mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, FRAME_RATE);
        mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420SemiPlanar);
        mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, IFRAME_INTERVAL);
        try {
            mMediaCodec = MediaCodec.createEncoderByType(MIME_TYPE);
            mMediaCodec.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
            mMediaCodec.start();
            Log.d("mmm", "prepare");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public void add(byte[] data) {
        if (!isRun) return;
        if (frameBytes.size() > 10) {
            frameBytes.remove(0); // the encoder is behind: drop the oldest frame
        }
        frameBytes.add(data);
    }

    @Override
    public void run() {
        isRun = true;
        MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
        while (isRun) {
            if (frameBytes.isEmpty()) {
                continue;
            }
            NV21toI420SemiPlanar(frameBytes.remove(0), mFrameData, mWidth, mHeight);
            int inputBufferIndex = mMediaCodec.dequeueInputBuffer(TIMEOUT_USEC);
            if (inputBufferIndex >= 0) {
                ByteBuffer inputBuffer = mMediaCodec.getInputBuffer(inputBufferIndex);
                inputBuffer.clear();
                inputBuffer.put(mFrameData);
                mMediaCodec.queueInputBuffer(inputBufferIndex, 0, mFrameData.length, System.nanoTime() / 1000, 0);
            }
            int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_USEC);
            while (outputBufferIndex != MediaCodec.INFO_TRY_AGAIN_LATER) {
                if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                    Log.e("mmm", "video INFO_OUTPUT_FORMAT_CHANGED");
                    muxerThread.addTrackIndex(MuxerThread.TRACK_VIDEO, mMediaCodec.getOutputFormat());
                } else if (outputBufferIndex >= 0) {
                    ByteBuffer outputBuffer = mMediaCodec.getOutputBuffer(outputBufferIndex);
                    if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
                        Log.d("mmm", "ignoring BUFFER_FLAG_CODEC_CONFIG");
                        bufferInfo.size = 0;
                    }
                    if (bufferInfo.size != 0 && muxerThread.isStart()) {
                        // outputBuffer goes back to the codec right below, so MuxerData must keep its own copy
                        muxerThread.addMuxerData(new MuxerData(MuxerThread.TRACK_VIDEO, outputBuffer, bufferInfo));
                    }
                    mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
                }
                outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_USEC);
            }
        }
        mMediaCodec.stop();
        mMediaCodec.release();
        Log.d("mmm", "video codec released");
    }

    public void stopvideo() {
        isRun = false;
    }

    // Despite its name, this produces NV12 (Y plane + interleaved U/V), the layout
    // COLOR_FormatYUV420SemiPlanar normally means, by swapping each V/U pair of the NV21 input
    private static void NV21toI420SemiPlanar(byte[] nv21bytes, byte[] i420bytes, int width, int height) {
        System.arraycopy(nv21bytes, 0, i420bytes, 0, width * height);
        for (int i = width * height; i < nv21bytes.length; i += 2) {
            i420bytes[i] = nv21bytes[i + 1];
            i420bytes[i + 1] = nv21bytes[i];
        }
    }
}
```

```java
import android.media.MediaFormat;
import android.media.MediaMuxer;
import android.util.Log;

import java.io.IOException;
import java.util.Vector;

public class MuxerThread extends Thread {

    public static final String TRACK_AUDIO = "TRACK_AUDIO";
    public static final String TRACK_VIDEO = "TRACK_VIDEO";

    // A Thread can be started only once, so this singleton supports a single recording
    private static final MuxerThread muxerThread = new MuxerThread();

    private final Vector<MuxerData> muxerDatas = new Vector<>();
    private MediaMuxer mediaMuxer;
    private boolean isAddAudioTrack;
    private boolean isAddVideoTrack;
    private volatile boolean muxerStarted; // set only after mediaMuxer.start() has returned
    private int videoTrack = -1;
    private int audioTrack = -1;
    private VideoThread videoThread;
    private AudioThread audioThread;
    private volatile boolean isRun;

    private MuxerThread() {
        Log.d("mmm", "MuxerThread");
    }

    public static MuxerThread getInstance() {
        return muxerThread;
    }

    // Called by the encoder threads when they report INFO_OUTPUT_FORMAT_CHANGED
    public synchronized void addTrackIndex(String track, MediaFormat format) {
        if (muxerStarted) {
            return;
        }
        if (!isAddVideoTrack && track.equals(TRACK_VIDEO)) {
            Log.e("mmm", "adding video track");
            videoTrack = mediaMuxer.addTrack(format);
            isAddVideoTrack = videoTrack >= 0;
        }
        if (!isAddAudioTrack && track.equals(TRACK_AUDIO)) {
            Log.e("mmm", "adding audio track");
            audioTrack = mediaMuxer.addTrack(format);
            isAddAudioTrack = audioTrack >= 0;
        }
        // Every track must be added before start(), and start() may be called only once
        if (isAddVideoTrack && isAddAudioTrack) {
            mediaMuxer.start();
            muxerStarted = true;
        }
    }

    public boolean isStart() {
        return muxerStarted;
    }

    public void addMuxerData(MuxerData muxerData) {
        muxerDatas.add(muxerData);
    }

    public void addVideoData(byte[] data) {
        if (!isRun || videoThread == null) return;
        videoThread.add(data);
    }

    public void addAudioData(byte[] data) {
        if (!isRun || audioThread == null) return;
        audioThread.addAudioData(data);
    }

    public void startMuxer(int width, int height) {
        Log.d("mmm", "startMuxer");
        try {
            mediaMuxer = new MediaMuxer("/sdcard/camer111.mp4", MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
        } catch (IOException e) {
            e.printStackTrace();
            return;
        }
        isRun = true;
        videoThread = new VideoThread(width, height, this);
        videoThread.start();
        audioThread = new AudioThread(this);
        audioThread.start();
        start();
    }

    public void exit() {
        isRun = false;
        videoThread.stopvideo();
        audioThread.audioStop();
    }

    @Override
    public void run() {
        while (isRun) {
            if (muxerDatas.isEmpty() || !isStart()) {
                continue;
            }
            MuxerData muxerData = muxerDatas.remove(0);
            if (muxerData.trackIndex.equals(TRACK_VIDEO)) {
                Log.d("mmm", "writing video " + muxerData.bufferInfo.size);
                mediaMuxer.writeSampleData(videoTrack, muxerData.byteBuf, muxerData.bufferInfo);
            } else if (muxerData.trackIndex.equals(TRACK_AUDIO)) {
                Log.d("mmm", "writing audio " + muxerData.bufferInfo.size);
                mediaMuxer.writeSampleData(audioTrack, muxerData.byteBuf, muxerData.bufferInfo);
            }
        }
        if (isStart()) {
            mediaMuxer.stop(); // stop() throws if start() was never called
        }
        mediaMuxer.release();
        Log.d("mmm", "mediaMuxer stopped");
    }
}
```

```java
import android.util.Log;

// Fixed-capacity circular byte queue; when full, the oldest byte is overwritten
public class Queue {

    private byte[] buffer;
    private int head;  // next read position
    private int tail;  // next write position
    private int count; // bytes currently stored
    private int size;  // capacity

    public void init(int n) {
        buffer = new byte[n];
        size = n;
        head = 0;
        tail = 0;
        count = 0;
    }

    // Not thread-safe on its own: only called from the synchronized addAll()/getAll()
    private void add(byte data) {
        if (size == count) {
            get(); // queue full: drop the oldest byte
        }
        if (tail == size) {
            tail = 0;
        }
        buffer[tail] = data;
        tail++;
        count++;
    }

    private byte get() {
        if (count == 0) {
            Log.d("mmm", "queue is empty");
            return -1;
        }
        if (head == size) {
            head = 0;
        }
        byte data = buffer[head];
        head++;
        count--;
        return data;
    }

    public synchronized void addAll(byte[] data) {
        for (byte b : data) {
            add(b);
        }
    }

    // Copies exactly len bytes into data, or returns -1 without consuming anything if fewer are stored
    public synchronized int getAll(byte[] data, int len) {
        if (count < len) {
            return -1;
        }
        for (int i = 0; i < len; i++) {
            data[i] = get();
        }
        return len;
    }
}
```

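```java
import android.media.MediaCodec;

import java.nio.ByteBuffer;

public class MuxerData {

    String trackIndex;
    ByteBuffer byteBuf;
    MediaCodec.BufferInfo bufferInfo;

    // The encoder's output buffer is released back to the codec right after this object is
    // created, and the caller reuses its BufferInfo, so both are copied here.
    public MuxerData(String trackIndex, ByteBuffer byteBuf, MediaCodec.BufferInfo bufferInfo) {
        this.trackIndex = trackIndex;
        ByteBuffer src = byteBuf.duplicate(); // leaves the codec buffer's position/limit untouched
        src.limit(bufferInfo.offset + bufferInfo.size);
        src.position(bufferInfo.offset);
        this.byteBuf = ByteBuffer.allocateDirect(bufferInfo.size);
        this.byteBuf.put(src);
        this.byteBuf.flip();
        this.bufferInfo = new MediaCodec.BufferInfo();
        this.bufferInfo.set(0, bufferInfo.size, bufferInfo.presentationTimeUs, bufferInfo.flags);
    }
}
```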

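What each class does

- AudioThread: collects PCM audio, hardware-encodes it to AAC, and hands the encoded data to the muxer, MuxerThread

- VideoThread: collects YUV video frames, hardware-encodes them to H.264, and hands the encoded data to MuxerThread

- Queue: a plain circular queue that buffers the PCM audio data

- MuxerData: wraps one encoded AAC or H.264 sample

- MuxerThread: muxes the encoded AAC and H.264 into an MP4 file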
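One detail matters for MuxerData: the ByteBuffer returned by the encoder belongs to the codec and is reused as soon as releaseOutputBuffer() is called, so anything queued for another thread has to be a copy of the valid range [offset, offset + size). A plain-Java sketch of that copy; SampleCopy is a hypothetical name, and on Android the offset and size would come from MediaCodec.BufferInfo.

```java
import java.nio.ByteBuffer;

/** Hypothetical helper: copy the valid bytes out of a codec-owned buffer. */
final class SampleCopy {
    static ByteBuffer copy(ByteBuffer src, int offset, int size) {
        ByteBuffer view = src.duplicate(); // shares content, but has its own position/limit
        view.limit(offset + size);
        view.position(offset);
        ByteBuffer out = ByteBuffer.allocate(size);
        out.put(view);
        out.flip();                        // ready to be read from index 0
        return out;
    }
}
```

After the copy, overwriting the source (as the codec will do with a released buffer) no longer affects the queued sample.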

Hardware-decoding AAC

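```java
// Uses android.media.{AudioFormat, AudioManager, AudioTrack, MediaCodec, MediaExtractor, MediaFormat},
// android.util.Log, java.io.IOException and java.nio.ByteBuffer.
// Fields of the enclosing class (the original snippet used them without showing the declarations):
private MediaExtractor audioExtractor;
private MediaCodec audiodecoder;
private AudioTrack audioTrackplay;

public void init1() {
    // Audio
    audioExtractor = new MediaExtractor();
    try {
        audioExtractor.setDataSource("/sdcard/camer111.mp4");
        int audioTrack = -1;
        for (int i = 0; i < audioExtractor.getTrackCount(); i++) {
            String mime = audioExtractor.getTrackFormat(i).getString(MediaFormat.KEY_MIME);
            if (mime.startsWith("audio/")) {
                audioTrack = i;
                Log.d("mmm", "found track " + audioTrack);
                break;
            }
        }
        if (audioTrack < 0) {
            Log.d("mmm", "no audio track");
            return;
        }
        audioExtractor.selectTrack(audioTrack);
        MediaFormat trackFormat = audioExtractor.getTrackFormat(audioTrack);
        audiodecoder = MediaCodec.createDecoderByType(trackFormat.getString(MediaFormat.KEY_MIME));
        audiodecoder.configure(trackFormat, null, null, 0);
        audiodecoder.start();
        // Mono 16-bit output, matching the recording above
        int sampleRate = trackFormat.getInteger(MediaFormat.KEY_SAMPLE_RATE);
        int bufferSize = AudioTrack.getMinBufferSize(sampleRate, AudioFormat.CHANNEL_OUT_MONO, AudioFormat.ENCODING_PCM_16BIT);
        audioTrackplay = new AudioTrack(AudioManager.STREAM_MUSIC, sampleRate, AudioFormat.CHANNEL_OUT_MONO, AudioFormat.ENCODING_PCM_16BIT, bufferSize, AudioTrack.MODE_STREAM);
        audioTrackplay.play();
    } catch (IOException e) {
        e.printStackTrace();
    }
}

public void start1() {
    new Thread(new Runnable() {
        @Override
        public void run() {
            MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
            boolean inputDone = false;
            boolean outputDone = false;
            while (!outputDone) {
                if (!inputDone) {
                    int inputBufferIndex = audiodecoder.dequeueInputBuffer(10000);
                    if (inputBufferIndex >= 0) {
                        ByteBuffer inputBuffer = audiodecoder.getInputBuffer(inputBufferIndex);
                        int sampleSize = audioExtractor.readSampleData(inputBuffer, 0);
                        if (sampleSize >= 0) {
                            audiodecoder.queueInputBuffer(inputBufferIndex, 0, sampleSize, audioExtractor.getSampleTime(), 0);
                            audioExtractor.advance();
                        } else {
                            // No more samples: tell the decoder the stream has ended
                            audiodecoder.queueInputBuffer(inputBufferIndex, 0, 0, 0, MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                            inputDone = true;
                        }
                    }
                }
                int outputBufferIndex = audiodecoder.dequeueOutputBuffer(bufferInfo, 10000);
                while (outputBufferIndex >= 0) {
                    // getOutputBuffer() sets position/limit to the valid PCM data
                    ByteBuffer outputBuffer = audiodecoder.getOutputBuffer(outputBufferIndex);
                    byte[] pcm = new byte[bufferInfo.size];
                    outputBuffer.get(pcm);
                    audioTrackplay.write(pcm, 0, pcm.length); // blocks in MODE_STREAM, which paces playback
                    audiodecoder.releaseOutputBuffer(outputBufferIndex, false); // audio: no surface to render to
                    if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                        outputDone = true;
                        break;
                    }
                    outputBufferIndex = audiodecoder.dequeueOutputBuffer(bufferInfo, 0);
                }
            }
            audiodecoder.stop();
            audiodecoder.release();
            audioExtractor.release();
            audioTrackplay.stop();
            audioTrackplay.release();
        }
    }).start();
}
```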

The logic is simple: take the audio track out of the MP4 file, hardware-decode the AAC to PCM, and play the PCM with AudioTrack.

Hardware-decoding H.264 and displaying it on a Surface

```java
// Uses android.media.{MediaCodec, MediaExtractor, MediaFormat}, android.view.SurfaceHolder,
// android.util.Log, java.io.IOException and java.nio.ByteBuffer.
// Fields of the enclosing class (the original snippet used them without showing the declarations):
private MediaExtractor videoExtractor;
private MediaCodec videodecoder;
private volatile boolean isRunning;

public void init(SurfaceHolder surfaceHolder) {
    try {
        // Video
        videoExtractor = new MediaExtractor();
        videoExtractor.setDataSource("/sdcard/camer111.mp4");
        int videoTrack = -1;
        for (int i = 0; i < videoExtractor.getTrackCount(); i++) {
            String mime = videoExtractor.getTrackFormat(i).getString(MediaFormat.KEY_MIME);
            Log.d("mmm", "found track " + mime);
            if (mime.startsWith("video/")) {
                videoTrack = i;
                break;
            }
        }
        if (videoTrack < 0) {
            Log.d("mmm", "no video track");
            return;
        }
        videoExtractor.selectTrack(videoTrack);
        MediaFormat trackFormat = videoExtractor.getTrackFormat(videoTrack);
        videodecoder = MediaCodec.createDecoderByType(trackFormat.getString(MediaFormat.KEY_MIME));
        // Passing the Surface lets the decoder render frames straight onto it
        videodecoder.configure(trackFormat, surfaceHolder.getSurface(), null, 0);
        videodecoder.start();
    } catch (IOException e) {
        e.printStackTrace();
    }
}

public void start() {
    isRunning = true;
    new Thread(new Runnable() {
        @Override
        public void run() {
            MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
            boolean inputDone = false;
            while (isRunning) {
                if (!inputDone) {
                    int inputBufferIndex = videodecoder.dequeueInputBuffer(10000);
                    if (inputBufferIndex >= 0) {
                        ByteBuffer inputBuffer = videodecoder.getInputBuffer(inputBufferIndex);
                        int sampleSize = videoExtractor.readSampleData(inputBuffer, 0);
                        if (sampleSize >= 0) {
                            videodecoder.queueInputBuffer(inputBufferIndex, 0, sampleSize, videoExtractor.getSampleTime(), 0);
                            videoExtractor.advance();
                        } else {
                            videodecoder.queueInputBuffer(inputBufferIndex, 0, 0, 0, MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                            inputDone = true;
                        }
                    }
                }
                int outputBufferIndex = videodecoder.dequeueOutputBuffer(bufferInfo, 10000);
                if (outputBufferIndex >= 0) {
                    boolean eos = (bufferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0;
                    // render = true draws the frame on the Surface
                    videodecoder.releaseOutputBuffer(outputBufferIndex, bufferInfo.size > 0);
                    if (eos) {
                        break;
                    }
                    try {
                        Thread.sleep(30); // crude pacing (~33 fps) that ignores the real timestamps
                    } catch (InterruptedException e) {
                        break;
                    }
                }
            }
            videodecoder.stop();
            videodecoder.release();
            videoExtractor.release();
        }
    }).start();
}
```

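Again the logic is simple: separate the video track from the MP4, feed its H.264 samples to the decoder, and let the decoder render them directly onto the Surface.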
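The decoding loop above paces frames with a fixed sleep. A sketch of pacing from the timestamps instead: anchor the first frame's PTS to the wall clock, then wait until each later frame is due. FramePacer is hypothetical and assumes PTS values in microseconds, as returned by MediaExtractor.getSampleTime() and BufferInfo.presentationTimeUs.

```java
/** Hypothetical helper: how long to wait so frames are shown at their presentation time. */
final class FramePacer {
    private boolean started;
    private long startNanos;
    private long firstPtsUs;

    /** Milliseconds to sleep before rendering the frame with ptsUs (0 if it is already late). */
    long delayMs(long ptsUs, long nowNanos) {
        if (!started) { // the first frame anchors PTS to the wall clock
            started = true;
            startNanos = nowNanos;
            firstPtsUs = ptsUs;
            return 0L;
        }
        long dueNanos = startNanos + (ptsUs - firstPtsUs) * 1_000L;
        return Math.max(0L, (dueNanos - nowNanos) / 1_000_000L);
    }
}
```

On Android this would be used as Thread.sleep(pacer.delayMs(bufferInfo.presentationTimeUs, System.nanoTime())) just before releaseOutputBuffer(index, true), replacing the fixed 30 ms sleep.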

Converting H.264 frames to images

This part of the code was also taken from the web; I'm recording it here for reference.

```java
import android.graphics.ImageFormat;
import android.graphics.Rect;
import android.graphics.YuvImage;
import android.media.Image;
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaExtractor;
import android.media.MediaFormat;
import android.util.Log;

import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;

public class H264ToBitmap {

    // Flexible YUV lets getOutputImage() hand back a YUV_420_888 Image (API 21+)
    private final int decodeColorFormat = MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Flexible;
    public static final int FILE_TypeI420 = 1;
    public static final int FILE_TypeNV21 = 2;
    public static final int FILE_TypeJPEG = 3;
    private int outputImageFileType = FILE_TypeJPEG;
    private static final int COLOR_FormatI420 = 1;
    private static final int COLOR_FormatNV21 = 2;

    public void videoDecode() {
        MediaExtractor mediaExtractor = new MediaExtractor();
        try {
            mediaExtractor.setDataSource("/sdcard/eee.mp4");
            int videoTrack = selectTrack(mediaExtractor);
            if (videoTrack < 0) {
                Log.d("mmm", "no video track found");
                return;
            }
            mediaExtractor.selectTrack(videoTrack);
            MediaFormat mediaFormat = mediaExtractor.getTrackFormat(videoTrack);
            String mime = mediaFormat.getString(MediaFormat.KEY_MIME);
            MediaCodec decoder = MediaCodec.createDecoderByType(mime);
            MediaCodecInfo.CodecCapabilities caps = decoder.getCodecInfo().getCapabilitiesForType(mime);
            showSupportedColorFormat(caps);
            if (isColorFormatSupported(decodeColorFormat, caps)) {
                mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, decodeColorFormat);
            } else {
                Log.d("mmm", "color format not supported");
            }
            decodeFramesToImage(decoder, mediaExtractor, mediaFormat);
            decoder.stop();
            decoder.release();
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            mediaExtractor.release();
        }
    }

    private int selectTrack(MediaExtractor mediaExtractor) {
        for (int i = 0; i < mediaExtractor.getTrackCount(); i++) {
            String mime = mediaExtractor.getTrackFormat(i).getString(MediaFormat.KEY_MIME);
            if (mime.startsWith("video/")) {
                return i;
            }
        }
        return -1;
    }

    private void showSupportedColorFormat(MediaCodecInfo.CodecCapabilities caps) {
        for (int c : caps.colorFormats) {
            Log.d("mmm", "supported color format " + c);
        }
    }

    private boolean isColorFormatSupported(int colorFormat, MediaCodecInfo.CodecCapabilities caps) {
        for (int c : caps.colorFormats) {
            if (c == colorFormat) return true;
        }
        return false;
    }

    private void decodeFramesToImage(MediaCodec decoder, MediaExtractor mediaExtractor, MediaFormat mediaFormat) {
        MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
        boolean sawInputEOS = false;
        boolean sawOutputEOS = false;
        decoder.configure(mediaFormat, null, null, 0); // no Surface: frames stay in the output buffers
        decoder.start();
        final int width = mediaFormat.getInteger(MediaFormat.KEY_WIDTH);
        final int height = mediaFormat.getInteger(MediaFormat.KEY_HEIGHT);
        int outputFrameCount = 0;
        while (!sawOutputEOS) {
            if (!sawInputEOS) {
                int inputBufferId = decoder.dequeueInputBuffer(10000);
                if (inputBufferId >= 0) {
                    ByteBuffer inputBuffer = decoder.getInputBuffer(inputBufferId);
                    int sampleSize = mediaExtractor.readSampleData(inputBuffer, 0);
                    if (sampleSize >= 0) {
                        decoder.queueInputBuffer(inputBufferId, 0, sampleSize, mediaExtractor.getSampleTime(), 0);
                        mediaExtractor.advance();
                    } else {
                        decoder.queueInputBuffer(inputBufferId, 0, 0, 0L, MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                        sawInputEOS = true;
                    }
                }
            }
            int outputBufferId = decoder.dequeueOutputBuffer(bufferInfo, 10000);
            if (outputBufferId < 0) {
                continue;
            }
            if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                sawOutputEOS = true;
            }
            Image image = bufferInfo.size != 0 ? decoder.getOutputImage(outputBufferId) : null;
            if (image != null) {
                outputFrameCount++;
                Log.d("mmm", "image format " + image.getFormat());
                String fileName;
                switch (outputImageFileType) {
                    case FILE_TypeI420:
                        fileName = String.format("/sdcard/aaapcm/frame_%05d_I420_%dx%d.yuv", outputFrameCount, width, height);
                        dumpFile(fileName, getDataFromImage(image, COLOR_FormatI420));
                        break;
                    case FILE_TypeNV21:
                        fileName = String.format("/sdcard/aaapcm/frame_%05d_NV21_%dx%d.yuv", outputFrameCount, width, height);
                        dumpFile(fileName, getDataFromImage(image, COLOR_FormatNV21));
                        break;
                    case FILE_TypeJPEG:
                        fileName = String.format("/sdcard/aaapcm/frame_%05d.jpg", outputFrameCount);
                        compressToJpeg(fileName, image);
                        break;
                }
                image.close();
            }
            // Every dequeued buffer must be released, including an empty end-of-stream buffer
            decoder.releaseOutputBuffer(outputBufferId, false);
        }
    }

    private byte[] getDataFromImage(Image image, int colorFormat) {
        if (colorFormat != COLOR_FormatI420 && colorFormat != COLOR_FormatNV21) {
            throw new IllegalArgumentException("only support COLOR_FormatI420 and COLOR_FormatNV21");
        }
        if (!isImageFormatSupported(image)) {
            throw new RuntimeException("can't convert Image to byte array, format " + image.getFormat());
        }
        Rect crop = image.getCropRect();
        int format = image.getFormat();
        int width = crop.width();
        int height = crop.height();
        Image.Plane[] planes = image.getPlanes();
        byte[] data = new byte[width * height * ImageFormat.getBitsPerPixel(format) / 8];
        byte[] rowData = new byte[planes[0].getRowStride()];
        int channelOffset = 0;
        int outputStride = 1;
        for (int i = 0; i < planes.length; i++) {
            switch (i) {
                case 0: // Y
                    channelOffset = 0;
                    outputStride = 1;
                    break;
                case 1: // U: right after Y in I420; odd positions of the interleaved plane in NV21
                    channelOffset = colorFormat == COLOR_FormatI420 ? width * height : width * height + 1;
                    outputStride = colorFormat == COLOR_FormatI420 ? 1 : 2;
                    break;
                case 2: // V: after U in I420; even positions of the interleaved plane in NV21
                    channelOffset = colorFormat == COLOR_FormatI420 ? (int) (width * height * 1.25) : width * height;
                    outputStride = colorFormat == COLOR_FormatI420 ? 1 : 2;
                    break;
            }
            ByteBuffer buffer = planes[i].getBuffer();
            int rowStride = planes[i].getRowStride();
            int pixelStride = planes[i].getPixelStride();
            int shift = (i == 0) ? 0 : 1; // chroma is subsampled by 2 in both directions
            int w = width >> shift;
            int h = height >> shift;
            buffer.position(rowStride * (crop.top >> shift) + pixelStride * (crop.left >> shift));
            for (int row = 0; row < h; row++) {
                int length;
                if (pixelStride == 1 && outputStride == 1) {
                    length = w;
                    buffer.get(data, channelOffset, length);
                    channelOffset += length;
                } else {
                    length = (w - 1) * pixelStride + 1;
                    buffer.get(rowData, 0, length);
                    for (int col = 0; col < w; col++) {
                        data[channelOffset] = rowData[col * pixelStride];
                        channelOffset += outputStride;
                    }
                }
                if (row < h - 1) {
                    buffer.position(buffer.position() + rowStride - length); // skip row padding
                }
            }
        }
        return data;
    }

    private static boolean isImageFormatSupported(Image image) {
        switch (image.getFormat()) {
            case ImageFormat.YUV_420_888:
            case ImageFormat.NV21:
            case ImageFormat.YV12:
                return true;
        }
        return false;
    }

    private void dumpFile(String fileName, byte[] data) {
        try (FileOutputStream outStream = new FileOutputStream(fileName)) {
            outStream.write(data);
        } catch (IOException ioe) {
            throw new RuntimeException("failed writing data to file " + fileName, ioe);
        }
    }

    private void compressToJpeg(String fileName, Image image) {
        Rect crop = image.getCropRect();
        // getDataFromImage() already applied the crop, so the YuvImage starts at (0, 0)
        YuvImage yuvImage = new YuvImage(getDataFromImage(image, COLOR_FormatNV21), ImageFormat.NV21, crop.width(), crop.height(), null);
        try (FileOutputStream outStream = new FileOutputStream(fileName)) {
            yuvImage.compressToJpeg(new Rect(0, 0, crop.width(), crop.height()), 100, outStream);
        } catch (IOException ioe) {
            throw new RuntimeException("Unable to write output file " + fileName, ioe);
        }
        Log.d("mmm", "wrote image " + fileName);
    }
}
```
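The core of getDataFromImage() is walking a plane whose pixels are pixelStride bytes apart and whose rows are rowStride bytes apart; this is how YUV_420_888 describes both planar and semi-planar layouts. A reduced plain-Java sketch of that step for a single plane (PlaneCopy is a hypothetical name, and it ignores the crop rectangle):

```java
import java.nio.ByteBuffer;

/** Hypothetical helper: pack one strided image plane into a tight width x height array. */
final class PlaneCopy {
    static byte[] pack(ByteBuffer plane, int width, int height, int rowStride, int pixelStride) {
        byte[] out = new byte[width * height];
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                // absolute get(): the plane's position is left unchanged
                out[row * width + col] = plane.get(row * rowStride + col * pixelStride);
            }
        }
        return out;
    }
}
```

For a semi-planar chroma plane, pixelStride is 2 because U and V bytes alternate; rowStride can exceed width * pixelStride when the decoder pads rows for alignment.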

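Audio/video sync in the player

The code above can already play an MP4's audio and video separately. How to play them together and keep them in sync is something I haven't solved yet; I'll keep looking into it when I have time.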

References

https://www.jianshu.com/p/f5a1c9318524

https://glumes.com/post/android/mediacodec-encode-yuv-to-h264/

https://zhuanlan.zhihu.com/p/45224834

https://www.cnblogs.com/renhui/p/7478527.html