High-Availability Load Balancing with Haproxy + Pacemaker (Part 2)

Pacemaker

Install pacemaker and corosync on both server1 and server2, and configure both nodes identically.

```
[root@server1 ~]# yum install -y pacemaker corosync
[root@server1 ~]# cp /etc/corosync/corosync.conf.example /etc/corosync/corosync.conf
[root@server1 ~]# vim /etc/corosync/corosync.conf
```

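At the end of the file (lines 34 to 37 in vim), add a `service` block so that corosync loads Pacemaker as a plugin; with `ver: 0`, corosync starts the Pacemaker daemons itself. Also set `bindnetaddr` and `mcastaddr` for your own network. The complete file used here: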


```
# Please read the corosync.conf.5 manual page
compatibility: whitetank

totem {
    version: 2
    secauth: off
    threads: 0
    interface {
        ringnumber: 0
        bindnetaddr: 172.25.54.0
        mcastaddr: 226.94.1.54
        mcastport: 5405
        ttl: 1
    }
}

logging {
    fileline: off
    to_stderr: no
    to_logfile: yes
    to_syslog: yes
    logfile: /var/log/cluster/corosync.log
    debug: off
    timestamp: on
    logger_subsys {
        subsys: AMF
        debug: off
    }
}

amf {
    mode: disabled
}

service {
    name: pacemaker
    ver: 0
}
```

Install the management tools (download links: crmsh and pssh):

```
[root@server1 ~]# yum install -y crmsh-1.2.6-0.rc2.2.1.x86_64.rpm pssh-2.3.1-2.1.x86_64.rpm
```
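In the corosync.conf above, `bindnetaddr` is normally the network address of the ring's subnet (172.25.54.0 here) rather than a host address. The bash sketch below computes that value from an IPv4 address and a prefix length; the function names and the host address 172.25.54.1 in the usage line are hypothetical, since the post does not list the nodes' IPs.

```shell
#!/usr/bin/env bash
# Sketch: compute a corosync "bindnetaddr" (network address) from an IPv4
# host address and a prefix length, e.g. 172.25.54.1/24 -> 172.25.54.0.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
    local n=$1
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# network_addr IP PREFIX: print the network address of IP/PREFIX.
network_addr() {
    local ip=$1 prefix=$2
    local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    int_to_ip $(( $(ip_to_int "$ip") & mask ))
}
```

For example, `network_addr 172.25.54.1 24` prints `172.25.54.0`, the value used in the file above.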

```
[root@server1 ~]# crm                 # enter the crm management shell
crm(live)# configure
crm(live)configure# show              # view the default configuration
node server1
node server2
property $id="cib-bootstrap-options" \
    dc-version="1.1.10-14.el6-368c726" \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes="2"
crm(live)configure#
```

The cluster can also be monitored in real time from the other node.

Server2:

```
[root@server2 ~]# crm_mon             # start the monitor
Last updated: Sat Aug 4 15:07:13 2018
Last change: Sat Aug 4 15:00:04 2018 via crmd on server1
Stack: classic openais (with plugin)
Current DC: server1 - partition with quorum
Version: 1.1.10-14.el6-368c726
2 Nodes configured, 2 expected votes
0 Resources configured
```

(Press Ctrl+C to exit the monitor.)

server1:

```
crm(live)configure# property stonith-enabled=false    # disable fencing (STONITH)
crm(live)configure# commit                            # save
```

Note: every configuration change must be committed, otherwise it does not take effect.

server2:

```
Last updated: Sat Aug 4 15:09:55 2018
Last change: Sat Aug 4 15:09:27 2018 via cibadmin on server1
Stack: classic openais (with plugin)
Current DC: server1 - partition with quorum
Version: 1.1.10-14.el6-368c726
2 Nodes configured, 2 expected votes
0 Resources configured

Online: [ server1 server2 ]
```

Check the live configuration for errors:

```
[root@server2 rpmbuild]# crm_verify -VL
```

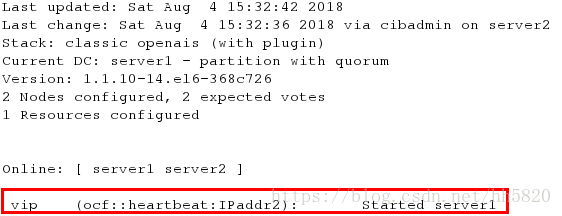

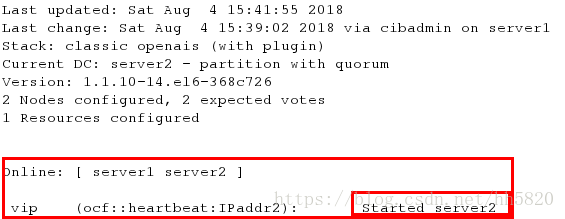
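The interactive steps above can also be run as one-shot `crm` commands, which is convenient for scripting. This is a minimal sketch, assuming crmsh 1.2.x commits a `configure` command given on the command line by itself (so no separate `commit`); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: lab-only cluster property, set non-interactively.
# Assumes crmsh is installed and corosync/pacemaker are running on this node.
disable_stonith_for_lab() {
    # Disable fencing until a fence device is configured (see the Fence section).
    crm configure property stonith-enabled=false || return 1
    # -L: check the live cluster configuration; -V: verbose error reporting.
    crm_verify -L -V
}
```

The cluster configuration (CIB) is shared, so running this on one node is enough.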

- Add the VIP

```
crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=172.25.54.100 cidr_netmask=24 op monitor interval=1min
crm(live)configure# commit
```

Then restart corosync on server1 and check the configuration again:

```
[root@server1 ~]# /etc/init.d/corosync stop
Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]
Waiting for corosync services to unload:.. [ OK ]
[root@server1 ~]# /etc/init.d/corosync start
Starting Corosync Cluster Engine (corosync): [ OK ]
[root@server1 ~]# crm
crm(live)# configure
crm(live)configure# show
node server1
node server2
primitive vip ocf:heartbeat:IPaddr2 \
    params ip="172.25.54.100" cidr_netmask="24" \
    op monitor interval="1min"
property $id="cib-bootstrap-options" \
    dc-version="1.1.10-14.el6-368c726" \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes="2" \
    stonith-enabled="false"
```

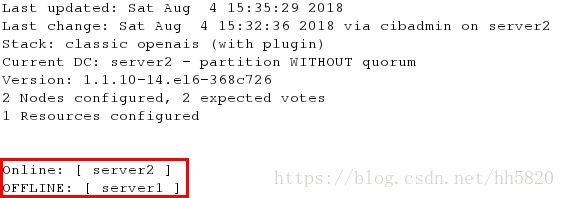

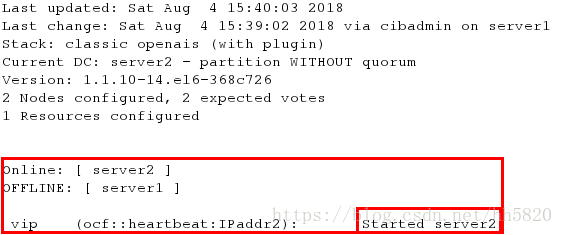
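To see which node currently holds the VIP from the shell, one option is to look for the address in `ip addr` output. This is a sketch; the function name is hypothetical, and it assumes the iproute2 `ip -o -4 addr show` one-line format.

```shell
#!/usr/bin/env bash
# Sketch: succeed if this node currently holds the given IPv4 address,
# e.g. the VIP 172.25.54.100 managed by the "vip" resource above.
has_vip() {
    local vip=$1
    # "ip -o -4 addr show" prints one line per IPv4 address, e.g.
    # "2: eth0    inet 172.25.54.100/24 ...".
    # Matching "inet <vip>/" avoids prefix hits (172.25.54.10 vs 172.25.54.100).
    ip -o -4 addr show | grep -qF "inet $vip/"
}
```

Usage: `has_vip 172.25.54.100 && echo "VIP is on $(hostname)"`, run on each node.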

```
crm(live)configure# property no-quorum-policy=ignore   ## lab setting: if one node dies, the other keeps working
crm(live)configure# commit
crm(live)configure# bye
bye
```
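Why `ignore` is needed in this two-node lab: quorum here is a simple majority, so a partition keeps quorum only while it holds more than half of the expected votes. With `expected-quorum-votes="2"`, a lone surviving node has 1 of 2 votes, which is not a majority, and under the default `no-quorum-policy=stop` it would stop its resources. The arithmetic as a small bash sketch (the function name is illustrative):

```shell
#!/usr/bin/env bash
# Sketch: majority quorum test.
# has_quorum VOTES EXPECTED -> success if VOTES is more than half of EXPECTED.
has_quorum() {
    local votes=$1 expected=$2
    (( votes * 2 > expected ))
}
```

`has_quorum 1 2` fails: one node out of two is not a majority, which is exactly the situation after one node of this cluster goes down.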

```
[root@server1 ~]# /etc/init.d/corosync stop
Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]
Waiting for corosync services to unload:. [ OK ]
[root@server1 ~]# /etc/init.d/corosync start
Starting Corosync Cluster Engine (corosync): [ OK ]
[root@server1 ~]# crm
crm(live)# configure
```

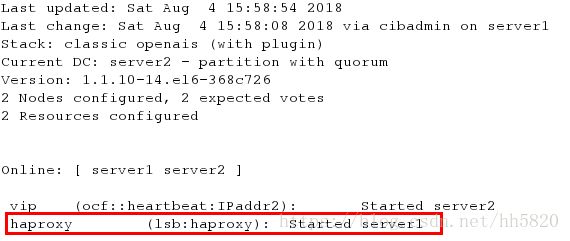

```
crm(live)configure# primitive haproxy lsb:haproxy op monitor interval=1min
crm(live)configure# commit
crm(live)configure# group hagroup vip haproxy    # create a resource group: vip and haproxy run together on one node
crm(live)configure# commit
```

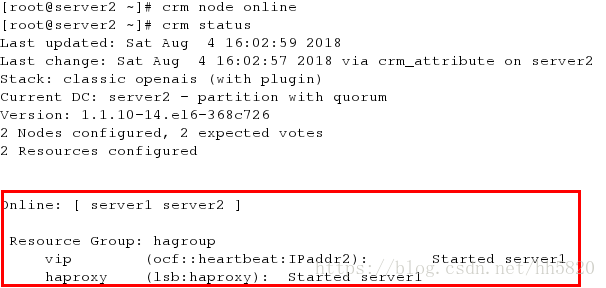
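The haproxy primitive and the group can also be created non-interactively. This sketch assumes, as before, that one-shot `crm configure` commands commit on their own, and that the init script `/etc/init.d/haproxy` exists (required by `lsb:haproxy`). Because Pacemaker now starts haproxy, the sketch also removes it from the boot sequence with `chkconfig`; that step is an addition, not part of the original walkthrough, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: put haproxy under Pacemaker control together with the VIP.
add_haproxy_group() {
    # Pacemaker, not init, should start haproxy (assumption: RHEL 6 chkconfig).
    chkconfig haproxy off || return 1
    # lsb:haproxy drives /etc/init.d/haproxy; monitor it once a minute.
    crm configure primitive haproxy lsb:haproxy op monitor interval=1min || return 1
    # A group keeps its members on the same node and starts them in order:
    # vip first, then haproxy (they are stopped in reverse order).
    crm configure group hagroup vip haproxy
}
```

The ordering matters for this setup: haproxy can only bind the VIP after the address is up.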

```
[root@server2 ~]# crm configure show
node server1
node server2 \
    attributes standby="off"
primitive haproxy lsb:haproxy \
    op monitor interval="1min"
primitive vip ocf:heartbeat:IPaddr2 \
    params ip="172.25.54.100" cidr_netmask="24" \
    op monitor interval="1min"
group hagroup vip haproxy
property $id="cib-bootstrap-options" \
    dc-version="1.1.10-14.el6-368c726" \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes="2" \
    stonith-enabled="false" \
    no-quorum-policy="ignore"
```

To delete a resource, first check at the `resource` level whether it is running; if it is, stop it, then delete it at the `configure` level.
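The delete procedure can be sketched as a shell helper. Assumptions: `crm resource stop` only sets the resource's target role and returns at once, so the helper polls `crm_resource --resource NAME --locate` until the Pacemaker 1.1 message "resource NAME is running on: NODE" disappears. The function name and the 30-second default timeout are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop a resource, wait until it is down, then delete it.
# Usage: delete_resource RESOURCE [TIMEOUT_SECONDS]
delete_resource() {
    local rsc=$1 tries=${2:-30}
    crm resource stop "$rsc" || return 1
    # Poll until crm_resource no longer reports the resource as running
    # (assumes the "is running on" wording of Pacemaker 1.1).
    while crm_resource --resource "$rsc" --locate 2>&1 | grep -q 'is running on'; do
        if (( tries-- <= 0 )); then
            echo "timed out waiting for $rsc to stop" >&2
            return 1
        fi
        sleep 1
    done
    crm configure delete "$rsc"
}
```

Usage: `delete_resource haproxy`.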

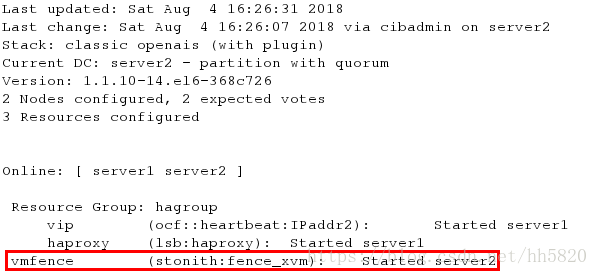

Fence

- On both server1 and server2:

```
yum install -y fence-virt-0.2.3-15.el6.x86_64
```

The fence_xvm key must be present in /etc/cluster/ on both nodes:

```
[root@server1 ~]# ll /etc/cluster/
total 4
-rw-r--r-- 1 root root 128 Aug 4 16:18 fence_xvm.key
[root@server2 ~]# ll /etc/cluster/
total 4
-rw-r--r-- 1 root root 128 Aug 4 16:20 fence_xvm.key
```
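The post shows the key already in place but not how it was created. A common approach with fence_virt is to generate a 128-byte random key once on the physical host that runs `fence_virtd`, then copy the same file to every node. The sketch below assumes that layout; the host-side configuration (`fence_virtd -c`) is not shown, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create a fence_xvm key and push it to the cluster nodes.
# Assumption: run on the physical host that runs fence_virtd.

# make_fence_key [PATH]: write 128 random bytes (same size as in the listing above).
make_fence_key() {
    local key=${1:-/etc/cluster/fence_xvm.key}
    mkdir -p "$(dirname "$key")" || return 1
    dd if=/dev/urandom of="$key" bs=128 count=1 2>/dev/null
}

# push_fence_key [PATH]: copy the key into /etc/cluster/ on each node.
push_fence_key() {
    local key=${1:-/etc/cluster/fence_xvm.key} node
    for node in server1 server2; do
        scp "$key" "root@$node:/etc/cluster/" || return 1
    done
}
```

If the key on the host changes, fence_virtd has to be restarted so it picks up the new key.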

```
[root@server2 ~]# crm
crm(live)# configure
crm(live)configure# property stonith-enabled=true    # enable fencing
crm(live)configure# primitive vmfence stonith:fence_xvm params pcmk_host_map="server1:vm1;server2:vm2" op monitor interval=1min
crm(live)configure# commit
crm(live)configure# bye
bye
[root@server2 ~]#
```

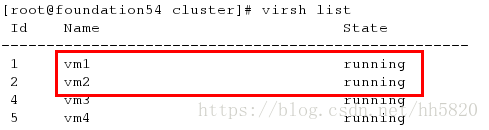
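`pcmk_host_map` tells Pacemaker which name to pass to the fence agent for each cluster node: it is a `;`-separated list of `node:port` pairs, and for fence_xvm the "port" is the libvirt domain name, so fencing server1 means rebooting domain vm1. The bash sketch below only mimics that lookup to make the mapping explicit; it is not Pacemaker's code, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: resolve a cluster node to the value pcmk_host_map assigns to it.
# lookup_domain MAP NODE -> prints the mapped value (the libvirt domain for fence_xvm).
lookup_domain() {
    local map=$1 node=$2 pair
    local IFS=';'                  # split MAP into node:port pairs
    for pair in $map; do
        if [[ ${pair%%:*} == "$node" ]]; then
            echo "${pair#*:}"      # everything after the first ':'
            return 0
        fi
    done
    return 1
}
```

To confirm that vm1 and vm2 are the real domain names, run `fence_xvm -o list` on a node; assuming fence_virtd on the host is reachable, it lists the host's domains.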

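Crash the kernel on server1 to test fencing:

```
[root@server1 ~]# echo c > /proc/sysrq-trigger    # trigger a kernel crash
```

server2 then automatically takes over server1's resources, while server1 is fenced and reboots automatically.

Once server1 has finished rebooting, start the corosync service on it again.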
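After corosync is running on server1 again, a one-shot `crm_mon -1` shows whether both nodes are back in the `Online:` list. A small sketch of that check, assuming the `Online: [ server1 server2 ]` format shown earlier; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if every given node appears in crm_mon's "Online:" line.
# Usage: nodes_online server1 server2
nodes_online() {
    local line node
    # -1: print the cluster status once and exit (no interactive refresh).
    line=$(crm_mon -1 | grep '^Online:') || return 1
    for node in "$@"; do
        # Names are space-delimited inside "[ ... ]", so match " name ".
        [[ $line == *" $node "* ]] || return 1
    done
}
```

Usage: `nodes_online server1 server2 && echo "both nodes are back"`.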