Hadoop fully distributed cluster deployment: adding and removing nodes

Lab environment:

(See the previous blog post for how server1 was configured.)

| Hostname | IP | Role |
|---|---|---|
| server1 | 172.25.254.1 | Master node |
| server2 | 172.25.254.2 | Slave node |
| server3 | 172.25.254.3 | Slave node |

[hadoop@server1 hadoop-2.7.3]$ sbin/stop-yarn.sh ## stop the services still running on server1

[hadoop@server1 hadoop-2.7.3]$ sbin/stop-dfs.sh

Setting up the Hadoop cluster nodes:

Master node server1:

[root@server1 ~]# yum install -y nfs-utils

[root@server1 ~]# vim /etc/exports

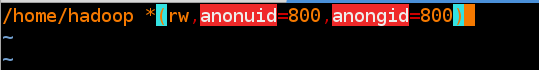

[root@server1 ~]# cat /etc/exports

/home/hadoop *(rw,anonuid=800,anongid=800)

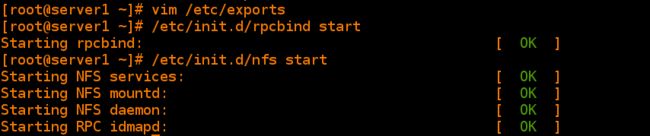

[root@server1 ~]# /etc/init.d/rpcbind start ## start rpcbind first, otherwise nfs fails to start

Starting rpcbind: [ OK ]

[root@server1 ~]# /etc/init.d/nfs start

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS mountd: [ OK ]

Starting NFS daemon: [ OK ]

[root@server1 ~]# showmount -e ## check the export list

Export list for server1:

/home/hadoop *
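If this setup is repeated, a small helper keeps `/etc/exports` from collecting duplicate lines. This is only a sketch: the function name `ensure_export` is hypothetical, and the export line is the one used above.

```shell
# Hypothetical helper: add the /home/hadoop export only if it is missing.
# Argument 1 is the exports file (defaults to /etc/exports).
ensure_export() {
    exports_file=${1:-/etc/exports}
    line='/home/hadoop *(rw,anonuid=800,anongid=800)'
    # -x: match the whole line, -F: treat '*' and '(' literally.
    # A missing file makes grep fail, so the line is appended and the file created.
    if ! grep -qxF "$line" "$exports_file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$exports_file"
    fi
}
```

As root on server1, running `ensure_export` and then `exportfs -r` re-reads `/etc/exports` without restarting the nfs service; `showmount -e` should then list `/home/hadoop` as above.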

Slave nodes server2 and server3 (the steps are identical on both):

[root@server2 ~]# yum install -y nfs-utils ## install NFS

[root@server2 ~]# /etc/init.d/rpcbind start ## start the services

[root@server2 ~]# /etc/init.d/nfs start

[root@server2 ~]# useradd -u 800 hadoop ## create the hadoop user with UID 800, matching anonuid/anongid in the export

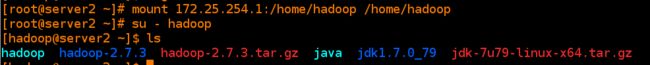

[root@server2 ~]# mount 172.25.254.1:/home/hadoop/ /home/hadoop/ ## mount server1's home directory so the files stay in sync

[root@server2 ~]# su - hadoop

[hadoop@server2 ~]$ ls ## the shared files from server1 are visible
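UID 800 matters because, with the default AUTH_SYS security, NFS identifies users by numeric UID, so the `hadoop` user should have the same UID on every node (800, which is also the `anonuid`/`anongid` in the export). The sketch below checks the UID before mounting and skips the mount when `/home/hadoop` is already mounted. `uid_matches` and `mount_hadoop_home` are hypothetical names, and it assumes the `mountpoint` utility is installed.

```shell
# Hypothetical helper: succeed only if USER exists and has UID EXPECTED.
uid_matches() {
    user=$1 expected=$2
    actual=$(id -u "$user" 2>/dev/null) || return 1
    [ "$actual" = "$expected" ]
}

# Hypothetical helper: mount server1's /home/hadoop on this slave (run as root).
mount_hadoop_home() {
    server=${1:-172.25.254.1}
    if ! uid_matches hadoop 800; then
        echo "hadoop user missing or its UID is not 800" >&2
        return 1
    fi
    # Nothing to do if the directory is already a mount point.
    if mountpoint -q /home/hadoop; then
        return 0
    fi
    mount "$server:/home/hadoop" /home/hadoop
}
```

On server2 and server3, `mount_hadoop_home` would replace the manual `mount` command above once `useradd -u 800 hadoop` has been run.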

SSH test (log in to each slave from server1 as the hadoop user):

[hadoop@server1 ~]$ ssh 172.25.254.2

[hadoop@server1 ~]$ ssh 172.25.254.3

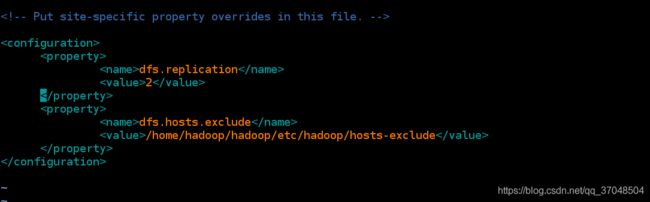

Edit the configuration files:

[hadoop@server1 ~]$ cd hadoop/etc/hadoop/

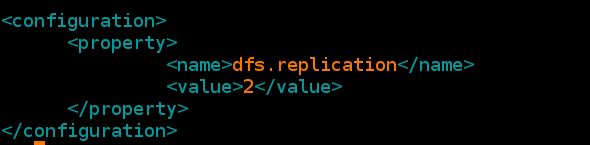

[hadoop@server1 hadoop]$ vim hdfs-site.xml

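Set the replication factor to 2 (one copy on each of the two DataNodes):

`<property><name>dfs.replication</name><value>2</value></property>`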
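In `hdfs-site.xml` each setting is a `<property>` element inside `<configuration>`. As a sketch, the hypothetical function `hdfs_site_xml` below prints a minimal file with `dfs.replication` and, if a second argument is given, `dfs.hosts.exclude` (used later when removing server3). It assumes these are the only properties the file needs; merge by hand if yours has more.

```shell
# Print one <property> element: $1 = name, $2 = value.
xml_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Hypothetical generator for a minimal hdfs-site.xml.
# $1 = dfs.replication, optional $2 = path for dfs.hosts.exclude.
hdfs_site_xml() {
    replication=$1
    exclude_file=${2:-}
    printf '%s\n' '<?xml version="1.0" encoding="UTF-8"?>' \
        '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>' \
        '<configuration>'
    xml_property dfs.replication "$replication"
    if [ -n "$exclude_file" ]; then
        xml_property dfs.hosts.exclude "$exclude_file"
    fi
    printf '%s\n' '</configuration>'
}
```

`hdfs_site_xml 2 > ~/hadoop/etc/hadoop/hdfs-site.xml` would write the two-replica version; passing `/home/hadoop/hadoop/etc/hadoop/hosts-exclude` as a second argument gives the configuration used in the decommissioning step.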

[hadoop@server1 hadoop]$ vim slaves

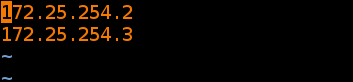

172.25.254.2

172.25.254.3

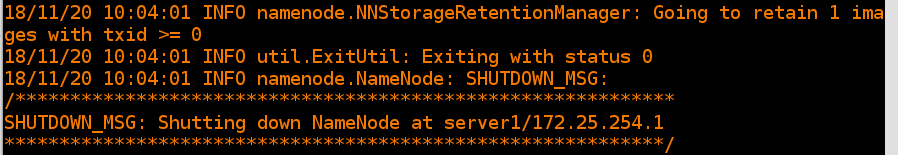
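`sbin/start-dfs.sh` reads `etc/hadoop/slaves` and starts a DataNode over SSH on each listed host, which is why SSH access from server1 was tested first. A sketch of rewriting the file non-interactively (`write_slaves` is a hypothetical helper):

```shell
# Hypothetical helper: overwrite the slaves file with one host per line.
# $1 = path to the slaves file, remaining arguments = DataNode hosts.
write_slaves() {
    slaves_file=$1
    shift
    [ "$#" -gt 0 ] || return 1   # refuse to write an empty host list
    printf '%s\n' "$@" > "$slaves_file"
}
```

For the cluster above: `write_slaves ~/hadoop/etc/hadoop/slaves 172.25.254.2 172.25.254.3`.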

Re-format the NameNode from the Hadoop directory (~/hadoop):

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

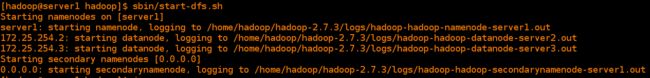

[hadoop@server1 hadoop]$ sbin/start-dfs.sh ## start HDFS

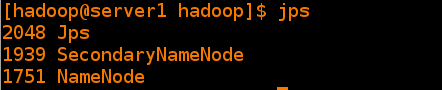

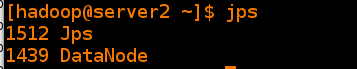

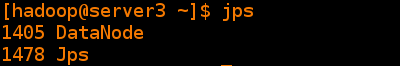

Check the Java processes with `jps`: server1 runs the NameNode, while server2 and server3 each run a DataNode.

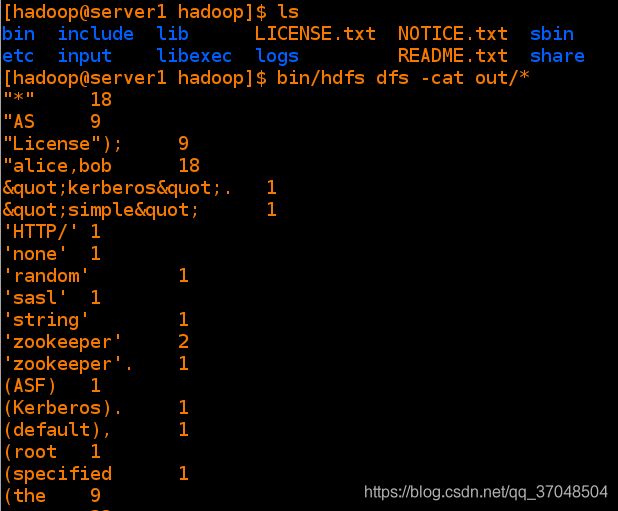

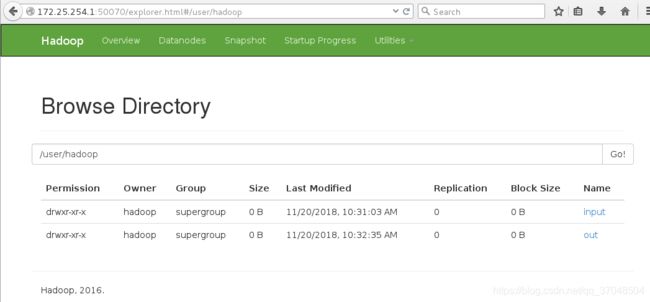

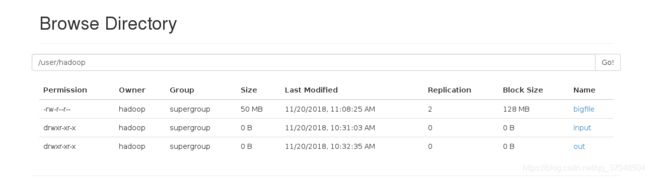

Test: after re-formatting, upload data from the master node and run a job:

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc libexec logs sbin

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ mkdir input

[hadoop@server1 hadoop]$ cp etc/hadoop/*.xml input

[hadoop@server1 hadoop]$ bin/hdfs dfs -put input

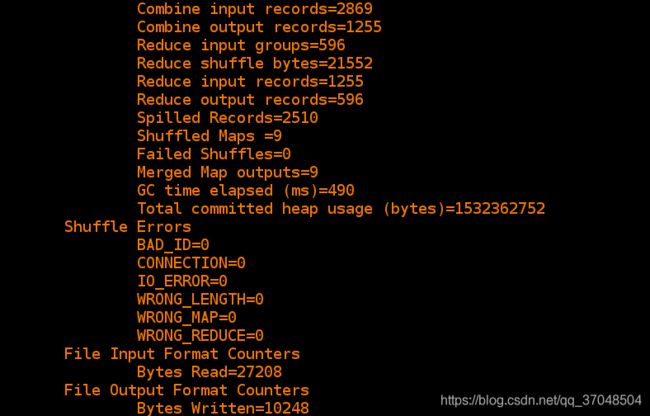

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount input out ## run the wordcount example from the examples jar

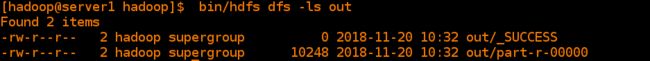

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls out ## list the job output

[hadoop@server1 hadoop]$ bin/hdfs dfs -get out ## copy the HDFS directory out to the local filesystem

[hadoop@server1 hadoop]$ ls ## out now exists locally

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc input libexec logs out sbin

[hadoop@server1 hadoop]$ cd out

[hadoop@server1 out]$ ls

part-r-00000 _SUCCESS

[hadoop@server1 out]$ cat part-r-00000 ## check that the counts are consistent with the input
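The consistency check can also be done mechanically by recomputing the counts locally. The WordCount example splits each line with `java.util.StringTokenizer`, whose default delimiters are space, tab, newline, carriage return and form feed, and Hadoop sorts the keys in byte order. The sketch below (hypothetical `local_wordcount`) mimics that with `tr`, `sort` and `uniq`, so for plain ASCII input it should reproduce `part-r-00000` line for line.

```shell
# Hypothetical helper: word counts for every file in directory $1,
# printed as "word<TAB>count" in byte order, like WordCount's output.
local_wordcount() {
    cat "$1"/* |
        tr ' \t\r\f' '\n\n\n\n' |   # same delimiters as StringTokenizer
        grep -v '^$' |              # drop empty tokens
        LC_ALL=C sort |             # byte-order sort, like Text keys
        LC_ALL=C uniq -c |
        awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

From `~/hadoop`, `local_wordcount input | diff - out/part-r-00000 && echo same` prints `same` when the two agree.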

Adding and removing DataNodes:

Adding slave node server4 (172.25.254.4):

[root@server4 ~]# yum install -y nfs-utils

[root@server4 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server4 ~]# /etc/init.d/nfs start

Starting NFS services: [ OK ]

Starting NFS mountd: [ OK ]

Starting NFS daemon: [ OK ]

Starting RPC idmapd: [ OK ]

[root@server4 ~]# useradd -u 800 hadoop

[root@server4 ~]# id hadoop

uid=800(hadoop) gid=800(hadoop) groups=800(hadoop)

[root@server4 ~]# mount 172.25.254.1:/home/hadoop /home/hadoop/

[root@server4 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 930516 17231836 6% /

tmpfs 510200 0 510200 0% /dev/shm

/dev/vda1 495844 33466 436778 8% /boot

172.25.254.1:/home/hadoop 19134336 1940224 16222080 11% /home/hadoop

[root@server4 ~]# su - hadoop

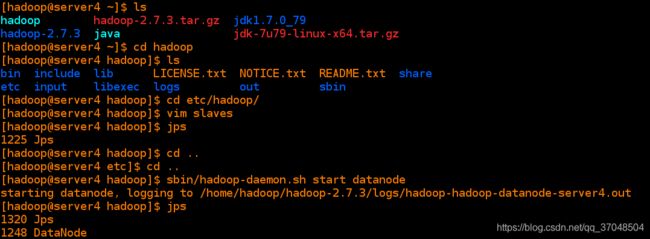

[hadoop@server4 ~]$ ls

hadoop hadoop-2.7.3.tar.gz jdk1.7.0_79

hadoop-2.7.3 java jdk-7u79-linux-x64.tar.gz

[hadoop@server4 ~]$ cd hadoop

[hadoop@server4 hadoop]$ ls

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc input libexec logs out sbin

[hadoop@server4 hadoop]$ cd etc/hadoop/

[hadoop@server4 hadoop]$ vim slaves ## /home/hadoop is shared over NFS, so this is the same slaves file server1 uses

[hadoop@server4 hadoop]$ cat slaves

172.25.254.2

172.25.254.3

172.25.254.4

[hadoop@server4 hadoop]$ jps

1225 Jps

[hadoop@server4 hadoop]$ cd ..

[hadoop@server4 etc]$ cd ..

[hadoop@server4 hadoop]$ sbin/hadoop-daemon.sh start datanode ## start the DataNode on server4

starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server4.out

[hadoop@server4 hadoop]$ jps ## check the processes

1320 Jps

1248 DataNode
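Once the DataNode on server4 is running it registers with the NameNode, so the "Live datanodes" line of `bin/hdfs dfsadmin -report` on server1 should go from 2 to 3. A small parser for that line, based on the report format shown at the end of this post (`live_datanodes` is a hypothetical name):

```shell
# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and
# print N from the first "Live datanodes (N):" line.
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1
}
```

Usage on server1: `bin/hdfs dfsadmin -report | live_datanodes`.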

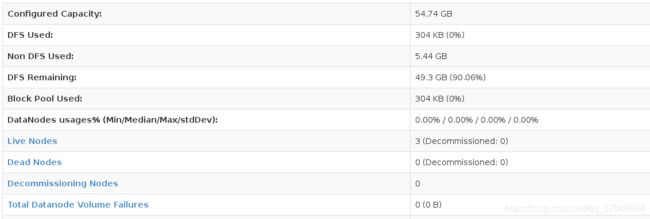

[hadoop@server1 hadoop]$ dd if=/dev/zero of=bigfile bs=1M count=50 ## create a 50 MB file of zeros for testing

50+0 records in

50+0 records out

52428800 bytes (52 MB) copied, 0.0478092 s, 1.1 GB/s

[hadoop@server1 hadoop]$ bin/hdfs dfs -put bigfile ## upload it to HDFS
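Where the 50 MB file lands can be predicted with a little arithmetic. Assuming the Hadoop 2.7.x default `dfs.blocksize` of 134217728 bytes (128 MB) is unchanged (check with `bin/hdfs getconf -confKey dfs.blocksize`), 52428800 bytes fit in one block, and with `dfs.replication` set to 2 that block is stored on two DataNodes. The hypothetical helper below does the ceiling division:

```shell
# Hypothetical helper: blocks = ceil(size / blocksize),
# replicas = blocks * replication.
expected_replicas() {
    size=$1 blocksize=$2 replication=$3
    blocks=$(( (size + blocksize - 1) / blocksize ))   # ceiling division
    echo "blocks=$blocks replicas=$(( blocks * replication ))"
}
```

`expected_replicas 52428800 134217728 2` prints `blocks=1 replicas=2`. `bin/hdfs fsck /user/hadoop/bigfile -files -blocks -locations` shows which DataNodes actually hold the replicas.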

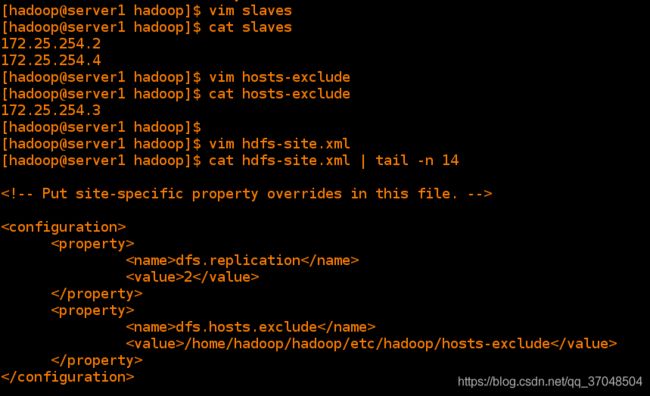

Removing (decommissioning) node server3:

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim slaves

[hadoop@server1 hadoop]$ cat slaves

172.25.254.2

172.25.254.4

[hadoop@server1 hadoop]$ vim hosts-exclude

[hadoop@server1 hadoop]$ cat hosts-exclude

172.25.254.3

[hadoop@server1 hadoop]$ vim hdfs-site.xml

[hadoop@server1 hadoop]$ cat hdfs-site.xml | tail -n 14

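`<property><name>dfs.replication</name><value>2</value></property>`

`<property><name>dfs.hosts.exclude</name><value>/home/hadoop/hadoop/etc/hadoop/hosts-exclude</value></property>`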

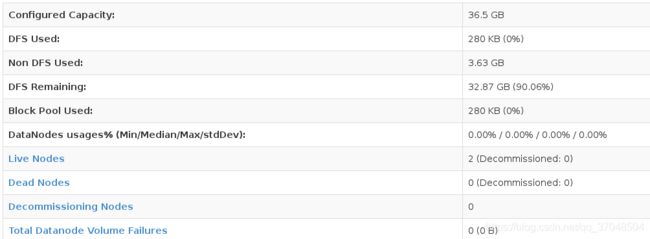

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -refreshNodes ## make the NameNode re-read the hosts and exclude files

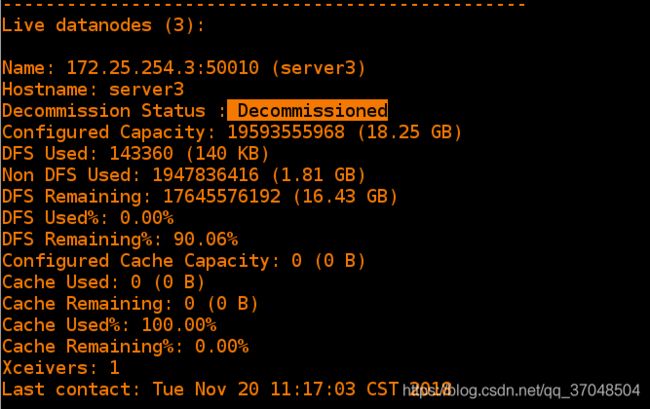

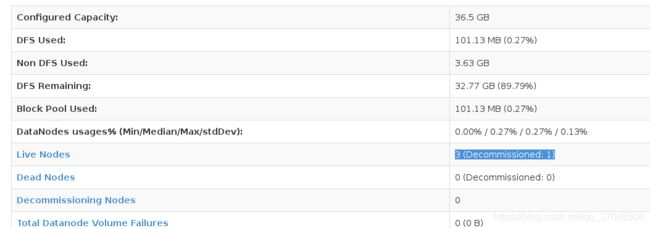

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -report ## view the cluster status

Live datanodes (3):

Name: 172.25.254.3:50010 (server3)

Hostname: server3

Decommission Status : Decommission in progress ## server3's blocks are being copied to the other DataNodes

Configured Capacity: 19593555968 (18.25 GB)

DFS Used: 143360 (140 KB)

Non DFS Used: 1947836416 (1.81 GB)

DFS Remaining: 17645576192 (16.43 GB)

DFS Used%: 0.00%

DFS Remaining%: 90.06%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Tue Nov 20 11:16:12 CST 2018
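Decommissioning is finished when server3's status changes from "Decommission in progress" to "Decommissioned", meaning its blocks have been re-replicated to the remaining nodes. A sketch that extracts one node's status from the report and polls until it is done (`decom_status` and `wait_decommissioned` are hypothetical names; run from `~/hadoop` on server1):

```shell
# Hypothetical helper: read `hdfs dfsadmin -report` on stdin and print the
# "Decommission Status" of the DataNode whose Name line starts with IP $1.
decom_status() {
    awk -v ip="$1" '
        index($0, "Name: " ip ":") == 1 { in_node = 1; next }  # target node
        /^Name: /                       { in_node = 0 }        # some other node
        in_node && /^Decommission Status/ {
            sub(/^Decommission Status *: */, "")
            print
            exit
        }'
}

# Hypothetical helper: poll every 10 seconds until the node is Decommissioned.
wait_decommissioned() {
    ip=$1
    until bin/hdfs dfsadmin -report | decom_status "$ip" | grep -qx 'Decommissioned'; do
        sleep 10
    done
}
```

After `wait_decommissioned 172.25.254.3` returns, the DataNode on server3 could be stopped with `sbin/hadoop-daemon.sh stop datanode` on server3.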