OpenCV (Part 5): Hough Transform, Histogram Calculation and Comparison

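Introduction to the Hough Line Transform

The Hough Line Transform is used to detect straight lines.

Prerequisite: edge detection must already have been performed on the image.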

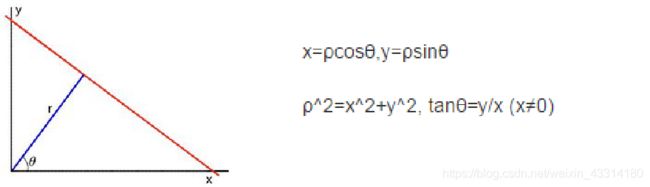

Converting from the image plane to polar-coordinate (Hough) space
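The conversion rests on the normal form of a line, ρ = x·cosθ + y·sinθ. Each edge pixel (x, y) votes for every (ρ, θ) pair describing a line that could pass through it, and accumulator cells that collect many votes correspond to real lines. Below is a minimal plain C++ sketch of that voting step (no OpenCV needed). It assumes a row-major binary edge mask. `strongestLine` and `PolarLine` are hypothetical names, and this is not OpenCV's implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One accumulator cell: a line in normal form rho = x*cos(theta) + y*sin(theta).
struct PolarLine {
    double rho;    // signed distance from the origin, in pixels
    double theta;  // angle of the line's normal, in radians, in [0, pi)
    int votes;     // number of edge pixels that voted for this cell
};

// Hypothetical helper: classical Hough voting over a binary edge mask stored
// row-major (nonzero = edge pixel); returns the most-voted (rho, theta) cell.
PolarLine strongestLine(const std::vector<unsigned char>& edges, int width, int height,
                        double rhoStep, double thetaStep) {
    assert(rhoStep > 0 && thetaStep > 0);
    const double pi = std::acos(-1.0);
    const double maxRho = std::ceil(std::hypot(width, height));  // |rho| never exceeds the diagonal
    const int numRho = static_cast<int>(2 * maxRho / rhoStep) + 2;
    const int numTheta = static_cast<int>(std::lround(pi / thetaStep));
    std::vector<int> acc(static_cast<std::size_t>(numRho) * numTheta, 0);

    // Voting: every edge pixel adds one vote to each (rho, theta) line through it.
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            if (!edges[static_cast<std::size_t>(y) * width + x]) continue;
            for (int t = 0; t < numTheta; ++t) {
                const double th = t * thetaStep;
                const double rho = x * std::cos(th) + y * std::sin(th);
                const int r = static_cast<int>(std::lround((rho + maxRho) / rhoStep));  // shift so the index is >= 0
                ++acc[static_cast<std::size_t>(r) * numTheta + t];
            }
        }

    // Peak search: the cell with the most votes is the strongest line.
    PolarLine best{0.0, 0.0, 0};
    for (int r = 0; r < numRho; ++r)
        for (int t = 0; t < numTheta; ++t) {
            const int v = acc[static_cast<std::size_t>(r) * numTheta + t];
            if (v > best.votes) best = {r * rhoStep - maxRho, t * thetaStep, v};
        }
    return best;
}
```

Take a row of 20 edge pixels at y = 5 in a 20×20 image, with a ρ step of 1 and a θ step of π/180. The peak has 20 votes at ρ = 5, with θ within one degree of π/2. Neighbouring θ cells tie at this coarse ρ resolution, so the first cell found wins.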

Related APIs:


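The standard Hough transform, cv::HoughLines, maps points from the image plane into Hough space. Its output is a set of (ρ, θ) pairs, so each line is expressed in polar coordinates.

The probabilistic Hough transform, cv::HoughLinesP, outputs each detected line segment as the coordinates of its two endpoints in the Cartesian image plane.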

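```cpp
void cv::HoughLines(
    InputArray image,        // input image: 8-bit, single-channel binary image (e.g. an edge map)
    OutputArray lines,       // output lines, each as (rho, theta) in polar coordinates
    double rho,              // distance resolution of the accumulator, in pixels
    double theta,            // angle resolution of the accumulator, in radians; usually CV_PI/180
    int threshold,           // accumulator threshold: only (rho, theta) cells with enough votes count as lines
    double srn = 0,          // multi-scale Hough transform: divisor for rho; srn = stn = 0 selects the classical transform
    double stn = 0,          // multi-scale Hough transform: divisor for theta
    double min_theta = 0,    // lower bound of the angle range, in radians (0 to CV_PI); the default is usually fine
    double max_theta = CV_PI // upper bound of the angle range, in radians
);
// Mostly used by experienced developers: you have to convert the (rho, theta) results back to the image plane yourself.
```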
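HoughLines returns only (ρ, θ), so drawing a result means converting it back to two points in the image plane. The sketch below uses a hypothetical helper, `polarToEndpoints`. The foot of the perpendicular, (ρcosθ, ρsinθ), lies on the line, and the line runs in direction (-sinθ, cosθ). Stepping a fixed distance each way along that direction gives two drawable endpoints.

```cpp
#include <cassert>
#include <cmath>

struct PointD { double x, y; };

// Hypothetical helper: turn one HoughLines result (rho, theta) into two points on
// that line. (x0, y0) = (rho*cos(theta), rho*sin(theta)) is the point of the line
// closest to the origin, and (-sin(theta), cos(theta)) is the line's direction,
// so stepping `extent` pixels each way along it gives two far-apart points.
void polarToEndpoints(double rho, double theta, double extent, PointD& p1, PointD& p2) {
    assert(extent > 0);
    const double a = std::cos(theta), b = std::sin(theta);
    const double x0 = a * rho, y0 = b * rho;
    p1 = {x0 - extent * b, y0 + extent * a};
    p2 = {x0 + extent * b, y0 - extent * a};
}
```

To draw with OpenCV, round the two points (for example with cvRound) and pass them to cv::line. The OpenCV Hough line tutorial uses the same construction with an extent of 1000 pixels.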

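```cpp
void cv::HoughLinesP(
    InputArray image,          // input image: 8-bit, single-channel binary image (e.g. an edge map)
    OutputArray lines,         // output segments, each as its endpoints (x1, y1, x2, y2)
    double rho,                // distance resolution of the accumulator, in pixels
    double theta,              // angle resolution of the accumulator, in radians; usually CV_PI/180
    int threshold,             // accumulator threshold: minimum number of votes for a line
    double minLineLength = 0,  // minimum segment length; shorter segments are rejected
    double maxLineGap = 0      // maximum gap between points on the same line for them to be joined
);
```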

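```cpp
// (The includes, the start of main(), image loading and edge detection are missing
//  from the source. The code assumes `using namespace cv; using namespace std;`,
//  src_gray holding the binary edge image, dst the image to draw on, and
//  OUTPUT_TITLE a window-name string.)
vector<Vec4f> plines;  // each element holds one segment: x1, y1, x2, y2
HoughLinesP(src_gray, plines, 1, CV_PI / 180.0, 10, 0, 10);
Scalar color = Scalar(0, 0, 255);  // red, in BGR order
for (size_t i = 0; i < plines.size(); i++) {
    Vec4f hline = plines[i];
    // draw each detected segment between its two endpoints
    line(dst, Point(cvRound(hline[0]), cvRound(hline[1])),
         Point(cvRound(hline[2]), cvRound(hline[3])), color, 3, LINE_AA);
}
imshow(OUTPUT_TITLE, dst);
waitKey(0);
return 0;
```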

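Hough Circle Transform

Hough circle detection is sensitive to noise, so the image should first be smoothed with a median filter.

For efficiency, OpenCV implements Hough circle detection with the Hough gradient method, which relies on image gradients. It works in two steps:

1. Detect edges and find possible circle centers.

2. Starting from each candidate center found in step 1, compute the best radius.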
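Step 2 can be pictured as a one-dimensional vote. Once a center is fixed, every edge point lies at some distance from it, and the most common distance is the best radius. The sketch below shows only that idea, in plain C++. `bestRadius` is a hypothetical helper. OpenCV's HOUGH_GRADIENT code is considerably more elaborate; for example, it also uses gradient directions to find the centers in step 1.

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Hypothetical helper sketching step 2 in simplified form: every edge point votes
// for the (rounded) radius at which it lies from a candidate center (cx, cy);
// the radius with the most votes in [minR, maxR] wins. Returns {radius, votes}.
std::pair<int, int> bestRadius(const std::vector<std::pair<int, int>>& edgePoints,
                               double cx, double cy, int minR, int maxR) {
    assert(0 <= minR && minR <= maxR);
    std::vector<int> votes(maxR + 1, 0);
    for (const auto& p : edgePoints) {
        const int r = static_cast<int>(std::lround(std::hypot(p.first - cx, p.second - cy)));
        if (r >= minR && r <= maxR) ++votes[r];  // ignore radii outside the search range
    }
    int best = minR;
    for (int r = minR + 1; r <= maxR; ++r)
        if (votes[r] > votes[best]) best = r;
    return {best, votes[best]};
}
```

Suppose there are four edge points at distance 10 from (50, 50) and one stray point about 2.2 away. With minR = 5 and maxR = 20 the call returns {10, 4}.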

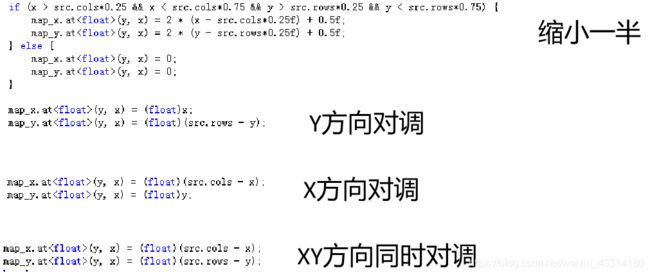

Pixel Remapping

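Simply put, remapping moves each pixel of the input image to a corresponding position in another image according to some rule, producing a new image.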

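API: cv::remap

```cpp
void cv::remap(
    InputArray src,                       // input image
    OutputArray dst,                      // output image: same size as map1, same type as src
    InputArray map1,                      // x map (CV_32FC1), or a map of (x, y) points (CV_32FC2 / CV_16SC2)
    InputArray map2,                      // y map (CV_32FC1); empty when map1 already holds (x, y) points
    int interpolation,                    // interpolation method: commonly INTER_LINEAR, or INTER_CUBIC etc.
    int borderMode = BORDER_CONSTANT,     // how pixels that map outside the source are handled
    const Scalar& borderValue = Scalar()  // fill color used with BORDER_CONSTANT
);
```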
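In other words, for every output pixel, remap reads the source at (map1(x, y), map2(x, y)). Below is a minimal plain C++ sketch of that lookup, using nearest-neighbour interpolation and a constant border. It assumes a single-channel image and maps the same size as the source. `Gray`, `remapNearest` and `mirrorMaps` are hypothetical names, and OpenCV's rounding details may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal 8-bit grayscale image, pixels stored row by row.
struct Gray {
    int width, height;
    std::vector<unsigned char> data;
    unsigned char at(int x, int y) const { return data[static_cast<std::size_t>(y) * width + x]; }
};

// Hypothetical helper: what remap computes with nearest-neighbour interpolation and a
// constant border, dst(x, y) = src(mapX(x, y), mapY(x, y)). For simplicity the maps
// (and therefore dst) are assumed to have the same size as src.
Gray remapNearest(const Gray& src, const std::vector<float>& mapX,
                  const std::vector<float>& mapY, unsigned char borderValue) {
    assert(mapX.size() == src.data.size() && mapY.size() == src.data.size());
    Gray dst{src.width, src.height, std::vector<unsigned char>(src.data.size())};
    for (int y = 0; y < src.height; ++y)
        for (int x = 0; x < src.width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * src.width + x;
            const int sx = static_cast<int>(std::lround(mapX[i]));  // source column to read
            const int sy = static_cast<int>(std::lround(mapY[i]));  // source row to read
            const bool inside = sx >= 0 && sx < src.width && sy >= 0 && sy < src.height;
            dst.data[i] = inside ? src.at(sx, sy) : borderValue;    // BORDER_CONSTANT-style fill
        }
    return dst;
}

// Example maps for a horizontal mirror: mapX(x, y) = width - 1 - x, mapY(x, y) = y.
void mirrorMaps(int width, int height, std::vector<float>& mapX, std::vector<float>& mapY) {
    mapX.assign(static_cast<std::size_t>(width) * height, 0.f);
    mapY.assign(mapX.size(), 0.f);
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            mapX[i] = static_cast<float>(width - 1 - x);
            mapY[i] = static_cast<float>(y);
        }
}
```

With mirrorMaps, the result is the image flipped left to right, so the row 1 2 3 becomes 3 2 1. Pointing a map entry outside the source yields the border value instead.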

Histograms

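An image histogram counts how often each pixel value in the gray range (0 to 255) occurs across the whole image. It shows how the gray levels of the image are distributed and is a statistical feature of the image.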
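Counting gray levels needs nothing more than one counter per level. Below is a minimal plain C++ sketch for an 8-bit grayscale image stored as a flat pixel array. The function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Count how often each gray level 0..255 occurs in an 8-bit grayscale image.
std::array<int, 256> grayHistogram(const std::vector<std::uint8_t>& pixels) {
    std::array<int, 256> hist{};  // value-initialised: every bin starts at 0
    for (std::uint8_t p : pixels) ++hist[p];
    return hist;
}

// The same counts divided by the number of pixels: the relative frequency of each level.
std::array<double, 256> grayFrequencies(const std::vector<std::uint8_t>& pixels) {
    assert(!pixels.empty());
    const std::array<int, 256> counts = grayHistogram(pixels);
    std::array<double, 256> freq{};
    for (int i = 0; i < 256; ++i)
        freq[i] = static_cast<double>(counts[i]) / static_cast<double>(pixels.size());
    return freq;
}
```

For the pixels {0, 0, 255, 128}, bin 0 holds 2 and bins 128 and 255 hold 1 each. The relative frequency of level 0 is 0.5.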

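API for histogram equalization, which spreads out the gray-level distribution to improve contrast:

```cpp
void cv::equalizeHist(
    InputArray src,  // input image: must be an 8-bit single-channel image
    OutputArray dst  // output image, same size and type as src
);
```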
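Equalization maps each gray level through the cumulative histogram, so that frequently occurring levels are spread over a wider output range. The sketch below uses the common textbook formula round((cdf(v) - cdf_min) * 255 / (N - cdf_min)) in plain C++. OpenCV's equalizeHist follows the same cumulative-histogram idea, but its exact rounding may differ. `equalize` is a hypothetical name.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Textbook histogram equalization for an 8-bit single-channel image: each gray
// level is mapped through the cumulative histogram (CDF), stretched to 0..255.
std::vector<std::uint8_t> equalize(const std::vector<std::uint8_t>& src) {
    assert(!src.empty());
    std::array<long, 256> hist{};
    for (std::uint8_t p : src) ++hist[p];

    std::array<long, 256> cdf{};
    long running = 0;
    for (int i = 0; i < 256; ++i) { running += hist[i]; cdf[i] = running; }

    long cdfMin = 0;  // CDF value at the darkest gray level that actually occurs
    for (int i = 0; i < 256; ++i)
        if (hist[i] != 0) { cdfMin = cdf[i]; break; }
    const long total = static_cast<long>(src.size());
    if (total == cdfMin) return src;  // only one gray level present: nothing to stretch

    std::array<std::uint8_t, 256> lut{};  // look-up table: old level -> new level
    for (int i = 0; i < 256; ++i) {
        const double v = std::round(static_cast<double>(cdf[i] - cdfMin) * 255.0 /
                                    static_cast<double>(total - cdfMin));
        lut[i] = static_cast<std::uint8_t>(std::max(0.0, v));  // levels below the minimum map to 0
    }
    std::vector<std::uint8_t> dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) dst[i] = lut[src[i]];
    return dst;
}
```

For the pixels {50, 50, 100, 200}, the output is {0, 0, 128, 255}: the three levels present are stretched over the full 0 to 255 range.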

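Histogram Calculation

The histogram described above is built from pixel values. In fact, a histogram can be built for any image property, such as gradients or the angle at each pixel. That is the real meaning of a histogram; the gray-level histogram is simply the most common case. The most commonly used histogram attributes are:

- dims: the number of dimensions. A grayscale image has only one channel, so dims=1

- bins: the number of sub-ranges each dimension is divided into; bins=256 gives 256 levels

- range: the range of values counted; for gray levels it is [0, 255]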
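The three attributes map directly onto code. dims is the number of values per sample, bins is the number of equal-width sub-ranges, and range is the interval they cover. Below is a one-dimensional sketch in plain C++. It assumes the half-open range [lo, hi) that calcHist uses for uniform histograms, which is why 8-bit gray levels are passed as {0, 256} rather than {0, 255}. `binnedHistogram` is a hypothetical name.

```cpp
#include <cassert>
#include <vector>

// 1-D uniform histogram: `bins` equal-width sub-ranges covering [lo, hi).
// Values outside [lo, hi) are ignored (the upper bound is exclusive).
std::vector<int> binnedHistogram(const std::vector<float>& values, int bins, float lo, float hi) {
    assert(bins > 0 && lo < hi);
    std::vector<int> hist(bins, 0);
    const float binWidth = (hi - lo) / bins;
    for (float v : values) {
        if (v < lo || v >= hi) continue;
        int b = static_cast<int>((v - lo) / binWidth);
        if (b >= bins) b = bins - 1;  // guard against floating-point rounding at the top edge
        ++hist[b];
    }
    return hist;
}
```

With 8 bins over [0, 256), each bin spans 32 gray levels. The values 0 and 31 land in bin 0, 32 lands in bin 1 and 255 in bin 7, while the out-of-range values 256 and -1 are skipped.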

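```cpp
void cv::split(      // splits a multi-channel image into several single-channel images
    const Mat& src,  // input image
    Mat* mvbegin     // output array of single-channel images, one per channel
);
```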
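split is typically used before calcHist so that each color channel gets its own histogram. OpenCV stores 8-bit color pixels interleaved in B, G, R order, so splitting is just a strided copy. Below is a plain C++ sketch; `splitChannels` is a hypothetical name.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Split an interleaved 3-channel image (B, G, R, B, G, R, ...: the pixel layout of an
// 8-bit color Mat) into three single-channel planes, as split() does.
std::array<std::vector<std::uint8_t>, 3> splitChannels(const std::vector<std::uint8_t>& interleaved) {
    assert(interleaved.size() % 3 == 0);
    const std::size_t n = interleaved.size() / 3;  // number of pixels
    std::array<std::vector<std::uint8_t>, 3> planes;
    for (auto& plane : planes) plane.resize(n);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t c = 0; c < 3; ++c) planes[c][i] = interleaved[3 * i + c];
    return planes;
}
```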

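```cpp
void cv::calcHist(
    const Mat* images,       // pointer to the input image(s)
    int nimages,             // number of input images
    const int* channels,     // indices of the channels used to compute the histogram
    InputArray mask,         // optional mask; pass Mat() to use the whole image
    OutputArray hist,        // output histogram
    int dims,                // histogram dimensionality
    const int* histSize,     // number of bins in each dimension
    const float** ranges,    // value range per dimension, e.g. {0, 256} for 8-bit gray (upper bound exclusive)
    bool uniform = true,     // whether all bins have equal width
    bool accumulate = false  // if true, add to hist instead of clearing it first
);
```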
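The raw counts from calcHist depend on the image size, so they are usually rescaled before being plotted or compared, typically with normalize and NORM_MINMAX. Below is a plain C++ sketch of that min-max rescaling; `normalizeMinMax` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Rescale histogram values linearly so the smallest bin becomes newMin and the
// largest becomes newMax (the idea behind normalize(..., NORM_MINMAX)).
std::vector<double> normalizeMinMax(const std::vector<double>& hist, double newMin, double newMax) {
    assert(!hist.empty());
    const auto mm = std::minmax_element(hist.begin(), hist.end());
    const double minV = *mm.first, maxV = *mm.second;
    std::vector<double> out(hist.size(), newMin);
    if (maxV == minV) return out;  // all bins equal: map everything to newMin
    for (std::size_t i = 0; i < hist.size(); ++i)
        out[i] = newMin + (hist[i] - minV) * (newMax - newMin) / (maxV - minV);
    return out;
}
```

Scaling {0, 5, 10} to [0, 400] (for example, a plot height in pixels) gives {0, 200, 400}.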

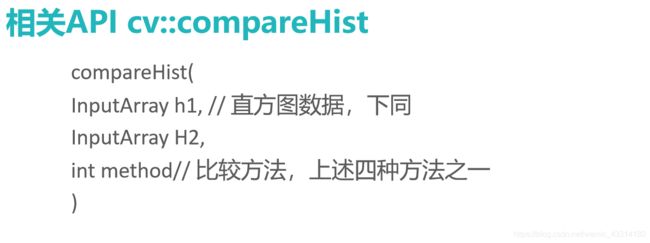

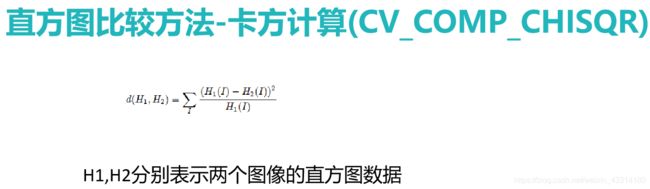

Histogram Comparison

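Compute histograms H1 and H2 for the two input images and normalize them to the same scale. The distance between H1 and H2 then measures how similar the two histograms are, and therefore how similar the images themselves are. OpenCV provides four comparison methods: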

Correlation (HISTCMP_CORREL)

Chi-Square (HISTCMP_CHISQR)

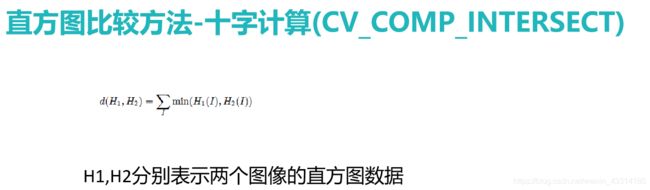

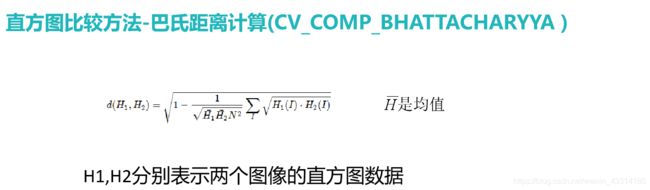

Intersection (HISTCMP_INTERSECT)

Bhattacharyya distance (HISTCMP_BHATTACHARYYA)
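Each of the four measures reduces to a short formula over the bins. The plain C++ sketch below follows the formulas in the OpenCV compareHist documentation as I understand them: chi-square divides by H1, and Bhattacharyya normalizes by the histogram sums. The function names are hypothetical, and edge-case handling may differ from OpenCV's.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <vector>

using Hist = std::vector<double>;

// Correlation: 1 for identical shapes, -1 for opposite ones; higher = more similar.
double compareCorrel(const Hist& h1, const Hist& h2) {
    assert(h1.size() == h2.size() && !h1.empty());
    const double n = static_cast<double>(h1.size());
    const double m1 = std::accumulate(h1.begin(), h1.end(), 0.0) / n;
    const double m2 = std::accumulate(h2.begin(), h2.end(), 0.0) / n;
    double num = 0, s1 = 0, s2 = 0;
    for (std::size_t i = 0; i < h1.size(); ++i) {
        num += (h1[i] - m1) * (h2[i] - m2);
        s1 += (h1[i] - m1) * (h1[i] - m1);
        s2 += (h2[i] - m2) * (h2[i] - m2);
    }
    return s1 * s2 > 0 ? num / std::sqrt(s1 * s2) : 1.0;  // flat histogram: avoid 0/0 (a choice made here)
}

// Chi-square: sum of (H1 - H2)^2 / H1 over bins where H1 is nonzero; 0 = identical.
double compareChiSqr(const Hist& h1, const Hist& h2) {
    assert(h1.size() == h2.size());
    double d = 0;
    for (std::size_t i = 0; i < h1.size(); ++i)
        if (h1[i] != 0) d += (h1[i] - h2[i]) * (h1[i] - h2[i]) / h1[i];
    return d;
}

// Intersection: sum of bin-wise minima; higher = more similar.
double compareIntersect(const Hist& h1, const Hist& h2) {
    assert(h1.size() == h2.size());
    double d = 0;
    for (std::size_t i = 0; i < h1.size(); ++i) d += std::min(h1[i], h2[i]);
    return d;
}

// Bhattacharyya distance: 0 = identical, 1 = no overlap; lower = more similar.
double compareBhattacharyya(const Hist& h1, const Hist& h2) {
    assert(h1.size() == h2.size());
    const double s1 = std::accumulate(h1.begin(), h1.end(), 0.0);
    const double s2 = std::accumulate(h2.begin(), h2.end(), 0.0);
    assert(s1 > 0 && s2 > 0);
    double bc = 0;  // sum of sqrt(H1 * H2), normalized below by sqrt(sum1 * sum2)
    for (std::size_t i = 0; i < h1.size(); ++i) bc += std::sqrt(h1[i] * h2[i]);
    return std::sqrt(std::max(0.0, 1.0 - bc / std::sqrt(s1 * s2)));
}
```

Note the directions. For correlation and intersection, a larger value means more similar. For chi-square and Bhattacharyya, a smaller value means more similar, with 0 for identical histograms.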

Steps:

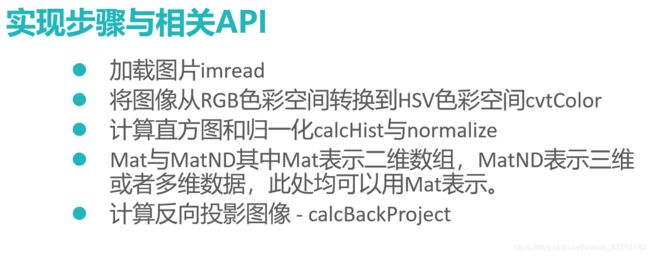

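First, convert the images from the RGB color space to HSV with cvtColor. OpenCV stores color images in BGR order, so the conversion code is COLOR_BGR2HSV.

Compute the histograms with calcHist, then normalize them to [0, 1] with normalize.

Compare them with one of the four methods above using compareHist.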
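For the first step, the 8-bit HSV convention matters. OpenCV stores hue as degrees divided by 2 so that it fits in a byte (0 to 179), with S and V in 0 to 255. Below is a plain C++ sketch of the per-pixel conversion using the standard formulas. `rgbToHsv8` is a hypothetical name, and its rounding may differ slightly from cvtColor.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

// Convert one 8-bit RGB pixel to HSV in the 8-bit convention:
// H = degrees / 2 in [0, 180), S and V in [0, 255].
std::array<int, 3> rgbToHsv8(int r, int g, int b) {
    assert(r >= 0 && r <= 255 && g >= 0 && g <= 255 && b >= 0 && b <= 255);
    const int v = std::max({r, g, b});
    const int mn = std::min({r, g, b});
    const double delta = v - mn;
    const double s = v == 0 ? 0.0 : 255.0 * delta / v;
    double h = 0.0;  // hue is undefined for grays; 0 is used here
    if (delta > 0) {
        if (v == r)      h = 60.0 * (g - b) / delta;
        else if (v == g) h = 120.0 + 60.0 * (b - r) / delta;
        else             h = 240.0 + 60.0 * (r - g) / delta;
        if (h < 0) h += 360.0;
    }
    const int hq = static_cast<int>(std::lround(h / 2.0)) % 180;  // wrap 180 back to 0
    return {hq, static_cast<int>(std::lround(s)), v};
}
```

Pure red, green and blue give hues 0, 60 and 120. A gray pixel such as (128, 128, 128) has saturation 0, and its hue is set to 0 here.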

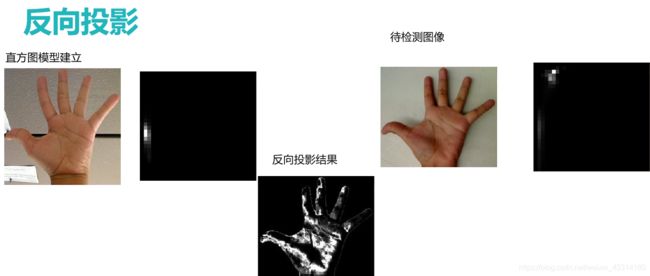

Histogram Back Projection

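Back projection shows how a histogram model is distributed over a target image.

Simply put, it uses a histogram model to search the target image for similar objects. The model is usually a histogram of the H and S channels of the HSV color space.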
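OpenCV's function for this is calcBackProject. To show what it computes, here is a plain C++ sketch that uses a one-dimensional hue histogram instead of the usual H-S model, to keep it short. Each target pixel is replaced by the model value of the bin its hue falls into, scaled so that the fullest bin becomes 255. All function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Bin index of an 8-bit hue (0..179) when [0, 180) is split into `bins` equal bins.
int hueBin(std::uint8_t hue, int bins) {
    const int h = std::min<int>(hue, 179);
    return h * bins / 180;
}

// Model: hue histogram of a sample region (e.g. a patch of the object being searched for).
std::vector<int> hueHistogram(const std::vector<std::uint8_t>& hue, int bins) {
    assert(bins > 0);
    std::vector<int> hist(bins, 0);
    for (std::uint8_t h : hue) ++hist[hueBin(h, bins)];
    return hist;
}

// Back projection: replace every target pixel by its bin's model value, scaled so
// the largest bin becomes 255. Bright output = the pixel's hue is common in the model.
std::vector<std::uint8_t> backProjectHue(const std::vector<int>& modelHist,
                                         const std::vector<std::uint8_t>& targetHue) {
    assert(!modelHist.empty());
    const int bins = static_cast<int>(modelHist.size());
    const int maxCount = *std::max_element(modelHist.begin(), modelHist.end());
    std::vector<std::uint8_t> out(targetHue.size(), 0);
    if (maxCount == 0) return out;  // empty model: nothing matches
    for (std::size_t i = 0; i < targetHue.size(); ++i)
        out[i] = static_cast<std::uint8_t>(255 * modelHist[hueBin(targetHue[i], bins)] / maxCount);
    return out;
}
```

Suppose the model is built from the hues {0, 10, 100} in 6 bins. The target hues {5, 40, 95, 179} then back-project to {255, 0, 127, 0}. Bright pixels are those whose hue is common in the model.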

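```cpp
// (Only the tail of the histogram-drawing code survives in the source; the lines
//  before this point, including the start of the function, are lost.)
    // ... (i) * (400 / 255)))),
    Point(i*bin_w, hist_h),
    Scalar(0, 0, 255), -1);  // red (BGR); a negative thickness draws a filled shape
}
imshow("Histogram", histImage);
return;
}
```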