paper reading archives

- I. benchmarks

- 1). The Kinetics Human Action Video Dataset

- 2). Large-scale Video Classification with Convolutional Neural Networks

- 3). others: UCF101 (13K videos of 101 categories) and HMDB51 (6K videos of 51 classes)

- II. Action Recognition

- 1). A Closer Look at Spatiotemporal Convolutions for Action Recognition

- 2). 3d convolutional neural networks for human action recognition. IEEE TPAMI, 35(1):221–231, 2013

- 3). Convolutional learning of spatio-temporal features. In ECCV, pages 140–153. Springer, 2010

- 4). Learning spatiotemporal features with 3d convolutional networks. In ICCV, 2015.

- 5). [SOTA] Quo vadis, action recognition? a new model and the kinetics dataset. In CVPR, 2017

- 6). A discriminative cnn video representation for event detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1798–1807, 2015

- 7). Actionvlad: Learning spatio-temporal aggregation for action classification. In CVPR, 2017

- 8). Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4694–4702, 2015.

- 9). Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2625–2634, 2015

- 10). Unsupervised learning of video representations using lstms. In International Conference on Machine Learning, pages 843–852, 2015.

- 11). Delving deeper into convolutional networks for learning video representations. arXiv preprint arXiv:1511.06432, 2015

- 12). 3d convolutional neural networks for human action recognition. IEEE TPAMI, 35(1):221–231, 2013.

- 13). [two-stream framework] Two-stream convolutional networks for action recognition in videos. In NIPS, 2014

- 14). [two-stream framework] Deep residual learning for image recognition. In CVPR, 2016.

- 15). [two-stream framework] Spatiotemporal residual networks for video action recognition. In NIPS, 2016.

- 16). [two-stream framework] Temporal segment networks: Towards good practices for deep action recognition. In ECCV, 2016.

- 17). [two-stream framework] Actions ~ Transformations. In CVPR, 2016.

- 18). [two-stream framework] Convolutional two-stream network fusion for video action recognition. In CVPR, 2016.

- 19). Human action recognition using factorized spatio-temporal convolutional networks. In ICCV, 2015.

- 20). Learning spatio-temporal representation with pseudo-3d residual networks. In ICCV, 2017.

- 21). Long-term Temporal Convolutions for Action Recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017.

- III. training skills

- 1). Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour

I. benchmarks

1). The Kinetics Human Action Video Dataset

The Kinetics-400/-600/-700 datasets contain human action videos and are used for classification. Similar benchmarks include HMDB-51 and UCF-101, which are much smaller than Kinetics. The clips are sourced from YouTube videos.

size (Kinetics-400): 300K videos of 400 human actions

2). Large-scale Video Classification with Convolutional Neural Networks

The YouTube Sports-1M Dataset

https://cs.stanford.edu/people/karpathy/deepvideo/

size: 1.1M videos of 487 fine-grained sport categories

3). others: UCF101 (13K videos of 101 categories) and HMDB51 (6K videos of 51 classes)

II. Action Recognition

1). A Closer Look at Spatiotemporal Convolutions for Action Recognition

In the model names, R stands for residual network (ResNet) and D for the dimensionality of the convolutions:

R2D: 2D convolutions over the entire clip. All frames are stacked along the channel dimension, so the temporal information is collapsed by the very first layer.

fR2D: 2D convolutions over frames. Each frame goes through the whole 2D network independently, and the per-frame outputs are fused only by the final global spatiotemporal average pooling.

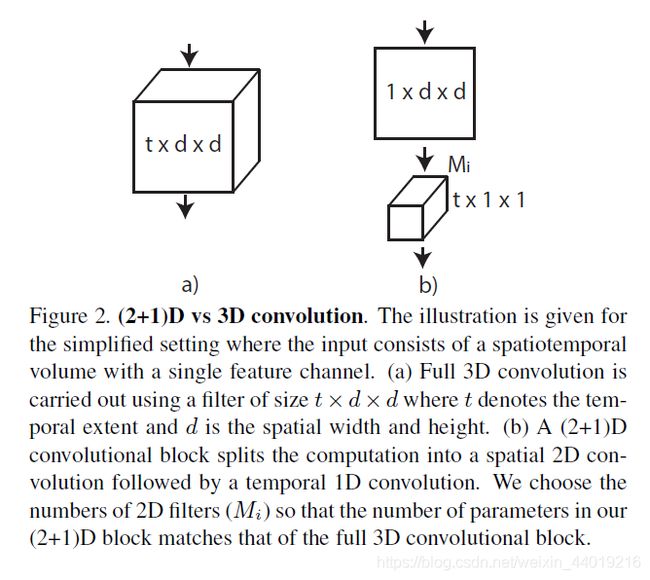

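R3D: 3D convolutions, using a t × d × d convolution kernel (t is the temporal extent, d the spatial width and height).

MCx and rMCx: mixed 3D-2D convolutions, a combination of fR2D and R3D. MCx uses 3D convolutions in the early layers and 2D convolutions in the later ones; rMCx is the reverse.

R(2+1)D: (2+1)D convolutions, where each 3D convolution is factorized into a 2D spatial convolution followed by a 1D temporal convolution. Found experimentally to be the most accurate of these variants.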
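
To make the difference between the variants concrete, here is a minimal sketch of what the first convolution sees for a clip of L RGB frames. The function name `first_layer_input` and the 16 × 112 × 112 clip size are illustrative assumptions, not code from the paper.

```python
def first_layer_input(variant, L, H, W, channels=3):
    """Return (number_of_tensors, shape_of_each) seen by the first convolution.

    Hypothetical helper for illustration only.
    """
    if variant == "R2D":
        # All L frames are stacked along the channel axis,
        # so the time axis no longer exists after the first layer.
        return 1, (channels * L, H, W)
    if variant == "fR2D":
        # Each frame is processed on its own by the same 2D network;
        # the L outputs only meet in the final pooling layer.
        return L, (channels, H, W)
    if variant in ("R3D", "R(2+1)D"):
        # Time is kept as a separate axis that the kernels slide over.
        return 1, (channels, L, H, W)
    raise ValueError(f"unknown variant: {variant}")


for name in ("R2D", "fR2D", "R3D"):
    print(name, first_layer_input(name, L=16, H=112, W=112))
```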

Why are (2+1)D convolutions better than 3D?

The first advantage is an additional nonlinear rectification between these two operations. This effectively doubles the number of nonlinearities compared to a network using full 3D convolutions for the same number of parameters, thus rendering the model capable of representing more complex functions. The second potential benefit is that the decomposition facilitates the optimization, yielding in practice both a lower training loss and a lower testing loss. In other words we find that, compared to full 3D filters where appearance and dynamics are jointly intertwined, the (2+1)D blocks (with factorized spatial and temporal components) are easier to optimize. Our experiments demonstrate that ResNets adopting (2+1)D blocks homogeneously in all layers achieve state-of-the-art performance on both Kinetics and Sports-1M.
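
The phrase "for the same number of parameters" can be checked with a little arithmetic. As far as I recall, the paper sets the number of intermediate channels between the 2D and 1D convolutions to M = floor(t·d²·N_in·N_out / (d²·N_in + t·N_out)) so that a (2+1)D block roughly matches the parameter count of the 3D convolution it replaces. The sketch below assumes that formula and ignores biases; the function names are my own.

```python
def params_3d(n_in, n_out, t=3, d=3):
    """Weights in a full t x d x d 3D convolution (biases ignored)."""
    return n_out * n_in * t * d * d


def mid_channels(n_in, n_out, t=3, d=3):
    """Intermediate channel count M that keeps the parameter budget matched."""
    return (t * d * d * n_in * n_out) // (d * d * n_in + t * n_out)


def params_2plus1d(n_in, n_out, t=3, d=3):
    """Weights in a 1 x d x d spatial conv followed by a t x 1 x 1 temporal conv."""
    m = mid_channels(n_in, n_out, t, d)
    spatial = m * n_in * d * d   # n_in -> M channels, 2D kernel
    temporal = n_out * m * t     # M -> n_out channels, 1D kernel
    return spatial + temporal


print(mid_channels(64, 64))                        # 144
print(params_3d(64, 64), params_2plus1d(64, 64))   # 110592 110592
```

Because M is rounded down, the (2+1)D count never exceeds the 3D count; for 64 to 64 channels the two happen to match exactly.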

2). 3d convolutional neural networks for human action recognition. IEEE TPAMI, 35(1):221–231, 2013

3). Convolutional learning of spatio-temporal features. In ECCV, pages 140–153. Springer, 2010

4). Learning spatiotemporal features with 3d convolutional networks. In ICCV, 2015.

5). [SOTA] Quo vadis, action recognition? a new model and the kinetics dataset. In CVPR, 2017

6). A discriminative cnn video representation for event detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1798–1807, 2015

7). Actionvlad: Learning spatio-temporal aggregation for action classification. In CVPR, 2017

8). Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4694–4702, 2015.

9). Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2625–2634, 2015

10). Unsupervised learning of video representations using lstms. In International Conference on Machine Learning, pages 843–852, 2015.

11). Delving deeper into convolutional networks for learning video representations. arXiv preprint arXiv:1511.06432, 2015

12). 3d convolutional neural networks for human action recognition. IEEE TPAMI, 35(1):221–231, 2013.

Arguably the first 3D CNN to use temporal convolutions for recognizing human actions in video.

13). [two-stream framework] Two-stream convolutional networks for action recognition in videos. In NIPS, 2014

Fuses deep features extracted from optical flow with the more traditional deep CNN activations computed from RGB input.
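
One simple way to combine the two streams is late fusion: average the class probabilities predicted by the RGB stream and the optical-flow stream. The sketch below shows only that idea; the logits are made-up numbers, `flow_weight` is a hypothetical parameter, and the paper's own fusion details may differ.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(rgb_logits, flow_logits, flow_weight=0.5):
    """Weighted average of the per-class probabilities of the two streams."""
    p_rgb = softmax(rgb_logits)
    p_flow = softmax(flow_logits)
    return [(1 - flow_weight) * a + flow_weight * b
            for a, b in zip(p_rgb, p_flow)]


# Made-up logits for three classes: the streams disagree on the top class.
rgb_logits = [2.0, 1.0, 0.1]
flow_logits = [0.5, 2.5, 0.3]
fused = late_fusion(rgb_logits, flow_logits)
print(fused.index(max(fused)))  # index of the class chosen after fusion
```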

14). [two-stream framework] Deep residual learning for image recognition. In CVPR, 2016.

The two-stream framework enhanced with ResNet architectures.

15). [two-stream framework] Spatiotemporal residual networks for video action recognition. In NIPS, 2016.

The two-stream framework enhanced with additional connections between the streams.

16). [two-stream framework] Temporal segment networks: Towards good practices for deep action recognition. In ECCV, 2016.

17). [two-stream framework] Actions ~ Transformations. In CVPR, 2016.

18). [two-stream framework] Convolutional two-stream network fusion for video action recognition. In CVPR, 2016.

19). Human action recognition using factorized spatio-temporal convolutional networks. In ICCV, 2015.

20). Learning spatio-temporal representation with pseudo-3d residual networks. In ICCV, 2017.

21). Long-term Temporal Convolutions for Action Recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017.

III. training skills

1). Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour

The goal: to demonstrate the feasibility of, and to communicate a practical guide to, large-scale training with distributed synchronous stochastic gradient descent (SGD).

Linear Scaling Rule: When the minibatch size is multiplied by k, multiply the learning rate by k.
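
As a quick illustration of the rule, the sketch below scales a reference learning rate with the minibatch size. The defaults (0.1 for a minibatch of 256) are the reference setting I recall from the paper; treat them, and the function name `scaled_lr`, as assumptions.

```python
def scaled_lr(batch_size, base_lr=0.1, base_batch_size=256):
    """Linear scaling rule: a minibatch k times larger gets a k times larger LR."""
    k = batch_size / base_batch_size
    return base_lr * k


for bs in (256, 1024, 8192):
    print(bs, scaled_lr(bs))
```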

Gradual warm-up: In practice, with a large minibatch of size kn, we start from a learning rate of $\eta$ and increment it by a constant amount at each iteration such that it reaches $\hat{\eta} = k\eta$ after 5 epochs (results are robust to the exact duration of warmup). After the warmup, we go back to the original learning rate schedule.
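
The warm-up can be sketched as a linear ramp from the base learning rate to k times the base learning rate over the first 5 epochs. The function below is a minimal sketch under that reading; `warmup_lr` and its parameters are hypothetical names, and the regular schedule (for example step decay) that takes over afterwards is left to the caller.

```python
def warmup_lr(iteration, iters_per_epoch, base_lr, k, warmup_epochs=5):
    """Learning rate during gradual warm-up, increased by a constant step per iteration."""
    warmup_iters = warmup_epochs * iters_per_epoch
    target_lr = k * base_lr
    if iteration >= warmup_iters:
        # Warm-up is over: the caller's normal schedule starts from target_lr.
        return target_lr
    # Linear interpolation between base_lr (iteration 0) and target_lr.
    return base_lr + (target_lr - base_lr) * iteration / warmup_iters


# Example: k = 32 (minibatch 8192 vs. 256), 100 iterations per epoch.
for it in (0, 250, 500):
    print(it, warmup_lr(it, iters_per_epoch=100, base_lr=0.1, k=32))
```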

Batch Normalization: BN statistics should be computed per worker rather than across all workers, both to reduce communication and to keep the underlying loss function being optimized unchanged.
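
A toy example of what "per worker" means: each worker normalizes with the mean and variance of its own n samples, so the statistics (and therefore the loss) depend only on n, not on the total minibatch size kn. The numbers and helper names below are made up for illustration.

```python
from statistics import fmean, pvariance


def bn_stats(values):
    """Mean and (biased) variance of one batch of activations for a single channel."""
    return fmean(values), pvariance(values)


def per_worker_bn_stats(worker_batches):
    """Each worker computes BN statistics over its own local samples only."""
    return [bn_stats(batch) for batch in worker_batches]


# Two workers, n = 4 samples each (made-up activations).
worker_batches = [[1.0, 2.0, 3.0, 4.0], [10.0, 12.0, 14.0, 16.0]]

local_stats = per_worker_bn_stats(worker_batches)
# What synchronizing across workers would give instead (not recommended here).
global_stats = bn_stats([x for batch in worker_batches for x in batch])

print(local_stats)   # [(2.5, 1.25), (13.0, 5.0)]
print(global_stats)
```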