Writing Data to a MySQL Database with MapReduce


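The DBInputFormat and DBOutputFormat components introduced in Hadoop 0.19 finally made it easy to import and export data between Hadoop and many relational databases. This makes it much simpler to bring relational data into your data-processing pipeline. To move data between Hadoop and MySQL, you naturally need both Hadoop and MySQL installed. The code in this article uses the new-API versions of these classes from the `org.apache.hadoop.mapreduce.lib.db` package.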

1. Software Versions

- jdk 1.7.0_79-b15

- hadoop 2.7.1

- mysql 5.0.22

2. Environment Setup

- Copy mysql-connector-java-5.1.39-bin.jar into the $HADOOP_HOME/lib directory to make the MySQL JDBC driver available to Hadoop.

- Create the MySQL database and tables:

```
mysql> create database testDb;
mysql> use testDb;
mysql> create table studentinfo ( id integer, name varchar(32) );
mysql> insert into studentinfo values(1,'archana');
mysql> insert into studentinfo values(2,'XYZ');
mysql> insert into studentinfo values(3,'archana');
mysql> create table output ( name varchar(32), count integer );
```

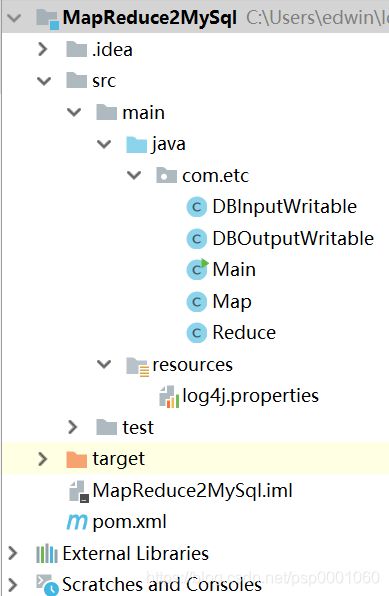

3. Project Structure

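To access data in the database, we need classes that describe the records read from the DB and the records written back to it. In this project, two classes serve this purpose: DBInputWritable.java and DBOutputWritable.java.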

4. Key Code

DBInputWritable.java

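```java
package com.etc;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.mapreduce.lib.db.DBWritable;

/** One row of the studentinfo table (id, name), as read by DBInputFormat. */
public class DBInputWritable implements Writable, DBWritable {

    private int id;
    private String name;

    /** Hadoop serialization: write the fields in a fixed order. */
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeInt(id);
        Text.writeString(out, name);
    }

    /** Hadoop deserialization: read the fields in the same order they were written. */
    @Override
    public void readFields(DataInput in) throws IOException {
        id = in.readInt();
        name = Text.readString(in);
    }

    /** JDBC: the ResultSet holds one row returned by the SELECT; columns are 1-based. */
    @Override
    public void readFields(ResultSet rs) throws SQLException {
        id = rs.getInt(1);
        name = rs.getString(2);
    }

    /** JDBC: bind the fields to the statement's placeholders, also 1-based. */
    @Override
    public void write(PreparedStatement ps) throws SQLException {
        ps.setInt(1, id);
        ps.setString(2, name);
    }

    public int getId() {
        return id;
    }

    public String getName() {
        return name;
    }
}
```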
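
`Writable` and `DBWritable` are two separate contracts: `readFields(ResultSet)` and `write(PreparedStatement)` talk to JDBC, while `write(DataOutput)` and `readFields(DataInput)` are Hadoop's own binary serialization, where fields must be read back in exactly the order they were written. The stdlib-only sketch below illustrates that second contract with a hypothetical `StudentRecord` class holding the same `id`/`name` fields. It uses `writeUTF`/`readUTF` for the string so it runs without Hadoop on the classpath; Hadoop code commonly uses `Text.writeString`/`Text.readString` instead.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

/** Hypothetical stand-in for the Hadoop-serialization half of DBInputWritable. */
public class StudentRecord {
    private int id;
    private String name;

    /** No-arg constructor, the form Hadoop uses when it instantiates Writables. */
    public StudentRecord() {
    }

    public StudentRecord(int id, String name) {
        this.id = id;
        this.name = name;
    }

    /** Write the fields in a fixed order: id, then name. */
    public void write(DataOutput out) throws IOException {
        out.writeInt(id);
        out.writeUTF(name);
    }

    /** Read the fields back in exactly the order write() produced them. */
    public void readFields(DataInput in) throws IOException {
        id = in.readInt();
        name = in.readUTF();
    }

    public int getId() {
        return id;
    }

    public String getName() {
        return name;
    }

    /** Serialize a record to bytes, then deserialize it into a fresh instance. */
    public static StudentRecord roundTrip(StudentRecord r) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        r.write(new DataOutputStream(bytes));
        StudentRecord copy = new StudentRecord();
        copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
        return copy;
    }

    public static void main(String[] args) throws IOException {
        StudentRecord copy = roundTrip(new StudentRecord(1, "archana"));
        System.out.println(copy.getId() + " " + copy.getName()); // prints "1 archana"
    }
}
```

If the two reads in `readFields` were swapped, the record would misread its own bytes, which is why the read order has to mirror the write order.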

DBOutputWritable.java

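```java
package com.etc;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.mapreduce.lib.db.DBWritable;

/** One row of the output table (name, count), written by DBOutputFormat. */
public class DBOutputWritable implements Writable, DBWritable {

    private String name;
    private int count;

    /** No-arg constructor so Hadoop can instantiate the class reflectively. */
    public DBOutputWritable() {
    }

    public DBOutputWritable(String name, int count) {
        this.name = name;
        this.count = count;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        Text.writeString(out, name);
        out.writeInt(count);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        name = Text.readString(in);
        count = in.readInt();
    }

    @Override
    public void readFields(ResultSet rs) throws SQLException {
        name = rs.getString(1);
        count = rs.getInt(2);
    }

    /** Placeholder order must match the column order passed to DBOutputFormat.setOutput. */
    @Override
    public void write(PreparedStatement ps) throws SQLException {
        ps.setString(1, name);
        ps.setInt(2, count);
    }
}
```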
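
`write(PreparedStatement)` binds values by 1-based placeholder position, so its `setString`/`setInt` calls must follow the same column order that `DBOutputFormat.setOutput` receives. As far as I can tell from Hadoop's source, DBOutputFormat prepares an INSERT of roughly the form printed below. `InsertSketch.insertQuery` is a hypothetical helper that only rebuilds that string for illustration; it is not Hadoop's actual code.

```java
/** Hypothetical helper mirroring the shape of the INSERT that DBOutputFormat prepares. */
public class InsertSketch {

    /** Build "INSERT INTO table (c1,c2,...) VALUES (?,?,...);" for the given columns. */
    static String insertQuery(String table, String... columns) {
        StringBuilder q = new StringBuilder("INSERT INTO ").append(table).append(" (");
        for (int i = 0; i < columns.length; i++) {
            if (i > 0) {
                q.append(',');
            }
            q.append(columns[i]);
        }
        q.append(") VALUES (");
        for (int i = 0; i < columns.length; i++) {
            if (i > 0) {
                q.append(',');
            }
            q.append('?'); // placeholder i + 1 is filled by ps.setXxx(i + 1, ...)
        }
        return q.append(");").toString();
    }

    public static void main(String[] args) {
        // Same column order as DBOutputFormat.setOutput(job, "output", "name", "count")
        System.out.println(insertQuery("output", "name", "count"));
        // Placeholder 1 -> name  (ps.setString(1, name))
        // Placeholder 2 -> count (ps.setInt(2, count))
    }
}
```

Running it prints `INSERT INTO output (name,count) VALUES (?,?);`, which makes the pairing between the column list and the `ps.setXxx` indices easy to check by eye.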

Main

```java
package com.etc;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.db.DBConfiguration;
import org.apache.hadoop.mapreduce.lib.db.DBInputFormat;
import org.apache.hadoop.mapreduce.lib.db.DBOutputFormat;

public class Main {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Configure JDBC before creating the Job: the Job works on a copy of conf.
        DBConfiguration.configureDB(conf,
                "com.mysql.jdbc.Driver",                  // driver class
                "jdbc:mysql://192.168.191.1:3306/testDb", // db url (testDb, as in the setup above)
                "root",                                   // user name
                "root");                                  // password

        // Job.getInstance replaces the deprecated new Job(conf) constructor.
        Job job = Job.getInstance(conf, "MapReduce2MySql");
        job.setJarByClass(Main.class);
        job.setMapperClass(Map.class);
        job.setReducerClass(Reduce.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(DBOutputWritable.class);
        job.setOutputValueClass(NullWritable.class);

        job.setInputFormatClass(DBInputFormat.class);
        job.setOutputFormatClass(DBOutputFormat.class);

        DBInputFormat.setInput(
                job,
                DBInputWritable.class,
                "studentinfo", // input table name
                null,          // conditions (WHERE clause): none
                "id",          // orderBy: a stable row order for LIMIT/OFFSET splits
                "id", "name"); // table columns

        DBOutputFormat.setOutput(
                job,
                "output",          // output table name
                "name", "count");  // table columns

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
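
On the read side, my understanding is that DBInputFormat (in its default code path, which MySQL uses) counts the rows of the input table, divides them into one contiguous chunk per map task, and has each task fetch its chunk with `LIMIT`/`OFFSET`. Because the chunks are positional, an `ORDER BY` column keeps the row order the same across the separate chunk queries. The sketch below models that arithmetic with hypothetical names (`SplitSketch`, `Chunk`, `selectFor`); it is loosely based on Hadoop's behaviour, and the exact SQL Hadoop emits may differ.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of LIMIT/OFFSET table splitting; not Hadoop's source code. */
public class SplitSketch {

    /** A half-open row range [start, start + length). */
    static final class Chunk {
        final long start;
        final long length;

        Chunk(long start, long length) {
            this.start = start;
            this.length = length;
        }
    }

    /** Split rowCount rows into numMaps contiguous chunks; the last chunk takes the remainder. */
    static List<Chunk> split(long rowCount, int numMaps) {
        List<Chunk> chunks = new ArrayList<Chunk>();
        long chunkSize = rowCount / numMaps;
        for (int i = 0; i < numMaps; i++) {
            long start = i * chunkSize;
            long end = (i == numMaps - 1) ? rowCount : start + chunkSize;
            chunks.add(new Chunk(start, end - start));
        }
        return chunks;
    }

    /** Build the per-chunk SELECT, assuming a MySQL-style LIMIT/OFFSET query. */
    static String selectFor(String table, String orderBy, Chunk c, String... fields) {
        StringBuilder q = new StringBuilder("SELECT ");
        for (int i = 0; i < fields.length; i++) {
            if (i > 0) {
                q.append(", ");
            }
            q.append(fields[i]);
        }
        q.append(" FROM ").append(table);
        if (orderBy != null && !orderBy.isEmpty()) {
            q.append(" ORDER BY ").append(orderBy); // same row order in every chunk query
        }
        return q.append(" LIMIT ").append(c.length).append(" OFFSET ").append(c.start).toString();
    }

    public static void main(String[] args) {
        // Example: the 3 rows of studentinfo split across 2 hypothetical map tasks.
        for (Chunk c : split(3, 2)) {
            System.out.println(selectFor("studentinfo", "id", c, "id", "name"));
        }
    }
}
```

With 3 rows and 2 tasks, the first chunk covers row 0 and the second covers rows 1 and 2. Without an `ORDER BY`, nothing obliges the database to return rows in the same order for both queries, so a row could in principle be read twice or skipped.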

Map

```java
package com.etc;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

/** Emits (token, 1) for every space-separated token in a student's name. */
public class Map extends Mapper<LongWritable, DBInputWritable, Text, IntWritable> {

    private final IntWritable one = new IntWritable(1);

    // The input key is the record's position assigned by DBInputFormat, not the id column.
    @Override
    protected void map(LongWritable rowNum, DBInputWritable value, Context ctx)
            throws IOException, InterruptedException {
        String name = value.getName();
        if (name == null) {
            return; // skip rows whose name column is NULL
        }
        // Exceptions propagate so Hadoop can fail and retry the task
        // instead of silently dropping records.
        for (String key : name.split(" ")) {
            ctx.write(new Text(key), one);
        }
    }
}
```

Reduce

```java
package com.etc;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

/** Sums the counts for each name and emits one row for the output table. */
public class Reduce extends Reducer<Text, IntWritable, DBOutputWritable, NullWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context ctx)
            throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable value : values) {
            sum += value.get();
        }
        // DBOutputFormat stores only the key (via DBOutputWritable.write(PreparedStatement));
        // the NullWritable value is ignored.
        ctx.write(new DBOutputWritable(key.toString(), sum), NullWritable.get());
    }
}
```
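
Together, Map and Reduce amount to a word count over the `name` column: the mapper emits `(token, 1)` for each space-separated token, the shuffle groups equal keys, and the reducer sums each group into one `output` row. The local, stdlib-only simulation below runs that flow over the three sample rows with no Hadoop involved; the class name `WordCountSimulation` is hypothetical, and a `TreeMap` stands in for Hadoop's sorted shuffle.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Local simulation of the Map -> shuffle -> Reduce flow; no Hadoop classes involved. */
public class WordCountSimulation {

    static Map<String, Integer> run(List<String> names) {
        // Map + shuffle: emit (token, 1) per space-separated token and group equal keys.
        // TreeMap keeps keys sorted, similar to the sorted input a Hadoop reducer sees.
        Map<String, List<Integer>> grouped = new TreeMap<String, List<Integer>>();
        for (String name : names) {
            for (String token : name.split(" ")) {
                List<Integer> ones = grouped.get(token);
                if (ones == null) {
                    ones = new ArrayList<Integer>();
                    grouped.put(token, ones);
                }
                ones.add(1);
            }
        }
        // Reduce: sum the values of each key into one (name, count) row.
        Map<String, Integer> counts = new TreeMap<String, Integer>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            int sum = 0;
            for (int v : e.getValue()) {
                sum += v;
            }
            counts.put(e.getKey(), sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> names = new ArrayList<String>();
        names.add("archana");
        names.add("XYZ");
        names.add("archana");
        System.out.println(run(names)); // prints {XYZ=1, archana=2}
    }
}
```

`XYZ` sorts before `archana` because uppercase letters come before lowercase ones in byte order; the totals match the rows the job writes to the `output` table.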


pom.xml

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.etc</groupId>
  <artifactId>MapReduce2MySql</artifactId>
  <version>1.0-SNAPSHOT</version>

  <name>MapReduce2MySql</name>
  <url>http://www.example.com</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <maven.compiler.source>1.7</maven.compiler.source>
    <maven.compiler.target>1.7</maven.compiler.target>
  </properties>

  <dependencies>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-common</artifactId>
      <version>2.7.1</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-hdfs</artifactId>
      <version>2.7.1</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-mapreduce-client-core</artifactId>
      <version>2.7.1</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.11</version>
    </dependency>
  </dependencies>

  <build>
    <pluginManagement>
      <plugins>
        <plugin>
          <artifactId>maven-clean-plugin</artifactId>
          <version>3.1.0</version>
        </plugin>
        <plugin>
          <artifactId>maven-resources-plugin</artifactId>
          <version>3.0.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-compiler-plugin</artifactId>
          <version>3.8.0</version>
        </plugin>
        <plugin>
          <artifactId>maven-surefire-plugin</artifactId>
          <version>2.22.1</version>
        </plugin>
        <plugin>
          <artifactId>maven-jar-plugin</artifactId>
          <version>3.0.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-install-plugin</artifactId>
          <version>2.5.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-deploy-plugin</artifactId>
          <version>2.8.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-site-plugin</artifactId>
          <version>3.7.1</version>
        </plugin>
        <plugin>
          <artifactId>maven-project-info-reports-plugin</artifactId>
          <version>3.0.0</version>
        </plugin>
      </plugins>
    </pluginManagement>
  </build>
</project>
```

5. Running the JAR

```
$ hadoop jar MapReduce2MySql-1.0-SNAPSHOT.jar com.etc.Main
```

6. Query Results in MySQL

```
mysql> select * from output;
+---------+-------+
| name    | count |
+---------+-------+
| archana |     2 |
| XYZ     |     1 |
+---------+-------+
```