Audio mixing with the ffmpeg amix filter

I. Approach

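ffmpeg's filter library includes amix, an audio mixer, which we can use to mix several audio streams into one. First we need to build and install ffmpeg with encoding support. An mp3 or aac encoding library can be added if needed, but the simplest route is to encode to PCM and write it straight to a file, which VLC can also play.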
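To make the idea of mixing concrete before bringing in ffmpeg, here is a minimal sketch of what combining two signed 16-bit PCM buffers means: add the samples and clip the result. The names `clip_s16` and `mix_s16` are made up for this illustration. This is not how amix is implemented; as far as I know amix also rescales its inputs, which this sketch does not attempt to reproduce.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Clamp a 32-bit intermediate sum into the signed 16-bit range. */
static int16_t clip_s16(int32_t v)
{
    if (v > INT16_MAX) return INT16_MAX;
    if (v < INT16_MIN) return INT16_MIN;
    return (int16_t)v;
}

/* Mix two s16 buffers of equal length by summing and clipping.
 * Hypothetical helper, for illustration only. */
void mix_s16(const int16_t *a, const int16_t *b, int16_t *out, size_t n)
{
    assert(a != NULL && b != NULL && out != NULL);
    for (size_t i = 0; i < n; i++)
        out[i] = clip_s16((int32_t)a[i] + (int32_t)b[i]);
}
```

Summing in 32 bits before clipping avoids the integer overflow that a direct 16-bit addition would hit on loud passages.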

II. Building ffmpeg

$: git clone https://git.ffmpeg.org/ffmpeg.git

$: cd ffmpeg

$: git tag // lists the tags in the repository; we pick n2.9-dev

$: git checkout n2.9-dev -b n2.9-dev // create a new branch at n2.9-dev and switch to it

$: ./configure --disable-yasm --disable-ffplay --disable-ffprobe --disable-debug --enable-avdevice --disable-devices --disable-zlib --disable-bzlib --enable-avfilter --enable-encoders --enable-ffmpeg --enable-static --enable-gpl --enable-small --enable-nonfree --prefix=/home/xxx/ffmpegInstall_linux // builds static libraries only (shared libraries are not built; add the options yourself if you need them)

$: make clean

$: make -j4

$: make install

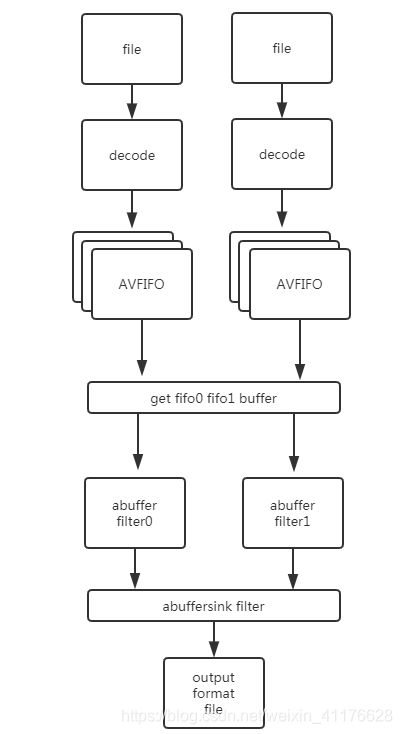

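III. Simple framework

As shown in the figure, two mp3 files are mixed into a single audio output by the amix filter.

IV. Writing audioMix.c

1. Build the input format context (input avformat)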

cd ~/ffmpegInstall_linux

mkdir src

Create audioMix.c there and copy three mp3 files into the directory.

Compile command:

$: g++ -I../include -L../lib audioMix.c -o audioMix -lavfilter -lavformat -lavcodec -lavutil -lmp3lame -lswresample -lswscale -lavdevice -lpostproc -lpthread -lm -ldl

The inputs can be MP3 files; files in other encodings work as well.

int OpenNetworkInput(char* inputFormat, const char* url)

{

AVInputFormat* ifmt = av_find_input_format(inputFormat);

AVDictionary* opt1 = NULL;

av_dict_set(&opt1, "rtbufsize", "10M", 0);

int ret = 0;

ret = avformat_open_input(&_fmt_ctx_net, url, ifmt, &opt1);

av_dict_free(&opt1); // release any options the demuxer did not consume

if (ret < 0)

{

printf("Speaker: failed to call avformat_open_input\n");

return -1;

}

ret = avformat_find_stream_info(_fmt_ctx_net, NULL);

if (ret < 0)

{

printf("Speaker: failed to call avformat_find_stream_info\n");

return -1;

}

for (int i = 0; i < _fmt_ctx_net->nb_streams; i++)

{

if (_fmt_ctx_net->streams[i]->codec->codec_type == AVMEDIA_TYPE_AUDIO)

{

_index_net = i;

break;

}

}

if (_index_net < 0)

{

printf("Speaker: negative audio index\n");

return -1;

}

AVCodecContext* codec_ctx = _fmt_ctx_net->streams[_index_net]->codec;

AVCodec* codec = avcodec_find_decoder(codec_ctx->codec_id);

if (codec == NULL)

{

printf("Speaker: null audio decoder\n");

return -1;

}

ret = avcodec_open2(codec_ctx, codec, NULL);

if (ret < 0)

{

printf("Speaker: failed to call avcodec_open2\n");

return -1;

}

av_dump_format(_fmt_ctx_net, _index_net, url, 0);

return 0;

}

2. Build the output format context

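The output format here is 44100 Hz, s16, little-endian PCM audio. For any other output format, change AV_CODEC_ID_PCM_S16LE and add the matching encoding library: MP3 encoding needs an external library such as libmp3lame, and for AAC an external encoder is the usual choice with this version of ffmpeg.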
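For readers unsure what "s16 little-endian" means on disk, the following sketch serializes 16-bit samples low byte first and computes the raw data rate. `write_s16le` and `pcm_bytes_per_second` are hypothetical helpers written for this note, not ffmpeg functions; in the real program the PCM encoder and muxer do this work.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Serialize samples as little-endian bytes; works on any host byte order.
 * dst must have room for 2 * n bytes. */
void write_s16le(const int16_t *samples, size_t n, uint8_t *dst)
{
    assert(samples != NULL && dst != NULL);
    for (size_t i = 0; i < n; i++) {
        uint16_t u = (uint16_t)samples[i];
        dst[2 * i]     = (uint8_t)(u & 0xFF); /* low byte first */
        dst[2 * i + 1] = (uint8_t)(u >> 8);   /* high byte second */
    }
}

/* Bytes per second of raw PCM: rate * channels * bytes per sample. */
size_t pcm_bytes_per_second(int sample_rate, int channels, int bytes_per_sample)
{
    return (size_t)sample_rate * (size_t)channels * (size_t)bytes_per_sample;
}
```

With the settings used in this article (44100 Hz, 2 channels, 2 bytes per sample) the second helper gives 176400 bytes per second, which is handy when sanity-checking the size of the output file.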

Several FIFOs are also created here, one per input, to hold the decoded raw input data.
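The role of these FIFOs can be pictured with a small ring buffer. The sketch below is a simplified, fixed-capacity, mono stand-in whose function names loosely mirror the size/space/write/read calls used later in the article; `SampleFifo` and its functions are invented for illustration. One difference worth noting: as I understand it, the real av_audio_fifo_write can grow the buffer when space runs out, while this sketch simply writes as much as fits.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

typedef struct SampleFifo {  /* hypothetical, not an ffmpeg type */
    int16_t *buf;
    int capacity;            /* total samples the buffer can hold */
    int size;                /* samples currently stored */
    int rpos;                /* index of the oldest sample */
} SampleFifo;

SampleFifo *fifo_alloc(int capacity)
{
    assert(capacity > 0);
    SampleFifo *f = (SampleFifo *)malloc(sizeof(*f));
    if (!f) return NULL;
    f->buf = (int16_t *)malloc(sizeof(int16_t) * (size_t)capacity);
    if (!f->buf) { free(f); return NULL; }
    f->capacity = capacity;
    f->size = 0;
    f->rpos = 0;
    return f;
}

void fifo_free(SampleFifo *f) { if (f) { free(f->buf); free(f); } }
int fifo_size(const SampleFifo *f)  { return f->size; }
int fifo_space(const SampleFifo *f) { return f->capacity - f->size; }

/* Write up to n samples; returns how many were actually stored. */
int fifo_write(SampleFifo *f, const int16_t *src, int n)
{
    if (n > fifo_space(f)) n = fifo_space(f);
    int wpos = (f->rpos + f->size) % f->capacity;
    for (int i = 0; i < n; i++) {
        f->buf[wpos] = src[i];
        wpos = (wpos + 1) % f->capacity;
    }
    f->size += n;
    return n;
}

/* Read up to n samples in arrival order; returns how many were read. */
int fifo_read(SampleFifo *f, int16_t *dst, int n)
{
    if (n > f->size) n = f->size;
    for (int i = 0; i < n; i++) {
        dst[i] = f->buf[f->rpos];
        f->rpos = (f->rpos + 1) % f->capacity;
    }
    f->size -= n;
    return n;
}
```

The decoder threads play the writer role and the mixing loop plays the reader role, which is why the article guards each FIFO with its own mutex.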

int OpenFileOutput(char* fileName)

{

int ret = 0;

ret = avformat_alloc_output_context2(&_fmt_ctx_out, NULL, NULL, fileName);

if (ret < 0)

{

printf("Mixer: failed to call avformat_alloc_output_context2\n");

return -1;

}

AVStream* stream_a = NULL;

stream_a = avformat_new_stream(_fmt_ctx_out, NULL);

if (stream_a == NULL)

{

printf("Mixer: failed to call avformat_new_stream\n");

return -1;

}

_index_a_out = 0;

stream_a->codec->codec_type = AVMEDIA_TYPE_AUDIO;

AVCodec* codec_mp3 = NULL;

codec_mp3 = avcodec_find_encoder(AV_CODEC_ID_PCM_S16LE);

if (codec_mp3 == NULL)

{

printf("Mixer: failed to call avcodec_find_encoder ++++\n");

return -1;

}

stream_a->codec->codec = codec_mp3;

stream_a->codec->sample_rate = 44100;

stream_a->codec->channels = 2;

stream_a->codec->channel_layout = av_get_default_channel_layout(2);

stream_a->codec->sample_fmt = codec_mp3->sample_fmts[0];

stream_a->codec->bit_rate = 320000;

stream_a->codec->time_base.num = 1;

stream_a->codec->time_base.den = stream_a->codec->sample_rate;

stream_a->codec->codec_tag = 0;

if (_fmt_ctx_out->oformat->flags & AVFMT_GLOBALHEADER)

stream_a->codec->flags |= CODEC_FLAG_GLOBAL_HEADER;

if (avcodec_open2(stream_a->codec, stream_a->codec->codec, NULL) < 0)

{

printf("Mixer: failed to call avcodec_open2\n");

return -1;

}

if (!(_fmt_ctx_out->oformat->flags & AVFMT_NOFILE))

{

if (avio_open(&_fmt_ctx_out->pb, fileName, AVIO_FLAG_WRITE) < 0)

{

printf("Mixer: failed to call avio_open\n");

return -1;

}

}

if (avformat_write_header(_fmt_ctx_out, NULL) < 0)

{

printf("Mixer: failed to call avformat_write_header\n");

return -1;

}

bool b = (!_fmt_ctx_out->streams[0]->time_base.num && _fmt_ctx_out->streams[0]->codec->time_base.num);

av_dump_format(_fmt_ctx_out, _index_a_out, fileName, 1);

_fifo_net = av_audio_fifo_alloc(_fmt_ctx_net->streams[_index_net]->codec->sample_fmt, _fmt_ctx_net->streams[_index_net]->codec->channels, 30*_fmt_ctx_net->streams[_index_net]->codec->frame_size);

_fifo_spk = av_audio_fifo_alloc(_fmt_ctx_spk->streams[_index_spk]->codec->sample_fmt, _fmt_ctx_spk->streams[_index_spk]->codec->channels, 30*_fmt_ctx_spk->streams[_index_spk]->codec->frame_size);

_fifo_mic = av_audio_fifo_alloc(_fmt_ctx_mic->streams[_index_mic]->codec->sample_fmt, _fmt_ctx_mic->streams[_index_mic]->codec->channels, 30*_fmt_ctx_mic->streams[_index_mic]->codec->frame_size);

return 0;

}

3. Build the in filters, the out filter, and the filter graph context (the filtering pipeline)

int InitFilter(const char* filter_desc)

{

char args_spk[512];

const char* pad_name_spk = "in0";

char args_mic[512];

const char* pad_name_mic = "in1";

char args_net[512];

const char* pad_name_net = "in2";

char args_vol[512] = "-3dB";

const char* pad_name_vol = "vol";

AVFilter* filter_src_spk = NULL;

filter_src_spk = avfilter_get_by_name("abuffer");

if(filter_src_spk == NULL)

{

printf("fail to get avfilter ..\n");

return -1;

}

AVFilter* filter_src_mic = NULL;

filter_src_mic = avfilter_get_by_name("abuffer");

if(filter_src_mic == NULL)

{

printf("fail to get avfilter ..\n");

return -1;

}

AVFilter* filter_src_net = NULL;

filter_src_net = avfilter_get_by_name("abuffer");

if(filter_src_net == NULL)

{

printf("fail to get avfilter ..\n");

return -1;

}

AVFilter* filter_src_vol = NULL;

filter_src_vol = avfilter_get_by_name("volume");

if(filter_src_vol == NULL)

{

printf("fail to get avfilter ..\n");

return -1;

}

AVFilter* filter_sink = avfilter_get_by_name("abuffersink");

AVFilterInOut* filter_output_spk = avfilter_inout_alloc();

AVFilterInOut* filter_output_mic = avfilter_inout_alloc();

AVFilterInOut* filter_output_net = avfilter_inout_alloc();

AVFilterInOut* filter_output_vol = avfilter_inout_alloc();

AVFilterInOut* filter_input = avfilter_inout_alloc();

_filter_graph = avfilter_graph_alloc();

//snprintf(args_spk, sizeof(args_spk), "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%I64x",

snprintf(args_spk, sizeof(args_spk), "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%" PRIx64,

_fmt_ctx_spk->streams[_index_spk]->codec->time_base.num,

_fmt_ctx_spk->streams[_index_spk]->codec->time_base.den,

_fmt_ctx_spk->streams[_index_spk]->codec->sample_rate,

av_get_sample_fmt_name(_fmt_ctx_spk->streams[_index_spk]->codec->sample_fmt),

_fmt_ctx_spk->streams[_index_spk]->codec->channel_layout);

printf("args spk : %s channel_layout : %" PRIu64 "\n", args_spk, (uint64_t)_fmt_ctx_spk->streams[_index_spk]->codec->channel_layout);

//snprintf(args_mic, sizeof(args_mic), "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%I64x",

snprintf(args_mic, sizeof(args_mic), "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%" PRIx64,

_fmt_ctx_mic->streams[_index_mic]->codec->time_base.num,

_fmt_ctx_mic->streams[_index_mic]->codec->time_base.den,

_fmt_ctx_mic->streams[_index_mic]->codec->sample_rate,

av_get_sample_fmt_name(_fmt_ctx_mic->streams[_index_mic]->codec->sample_fmt),

_fmt_ctx_mic->streams[_index_mic]->codec->channel_layout);

printf("args mic : %s channel_layout : %" PRIu64 "\n", args_mic, (uint64_t)_fmt_ctx_mic->streams[_index_mic]->codec->channel_layout);

//snprintf(args_mic, sizeof(args_mic), "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%I64x",

snprintf(args_net, sizeof(args_net), "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%" PRIx64,

_fmt_ctx_net->streams[_index_net]->codec->time_base.num,

_fmt_ctx_net->streams[_index_net]->codec->time_base.den,

_fmt_ctx_net->streams[_index_net]->codec->sample_rate,

av_get_sample_fmt_name(_fmt_ctx_net->streams[_index_net]->codec->sample_fmt),

_fmt_ctx_net->streams[_index_net]->codec->channel_layout);

printf("args net : %s channel_layout : %" PRIu64 "\n", args_net, (uint64_t)_fmt_ctx_net->streams[_index_net]->codec->channel_layout);

//sprintf_s(args_spk, sizeof(args_spk), "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%I64x", _fmt_ctx_out->streams[_index_a_out]->codec->time_base.num, _fmt_ctx_out->streams[_index_a_out]->codec->time_base.den, _fmt_ctx_out->streams[_index_a_out]->codec->sample_rate, av_get_sample_fmt_name(_fmt_ctx_out->streams[_index_a_out]->codec->sample_fmt), _fmt_ctx_out->streams[_index_a_out]->codec->channel_layout);

//sprintf_s(args_mic, sizeof(args_mic), "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%I64x", _fmt_ctx_out->streams[_index_a_out]->codec->time_base.num, _fmt_ctx_out->streams[_index_a_out]->codec->time_base.den, _fmt_ctx_out->streams[_index_a_out]->codec->sample_rate, av_get_sample_fmt_name(_fmt_ctx_out->streams[_index_a_out]->codec->sample_fmt), _fmt_ctx_out->streams[_index_a_out]->codec->channel_layout);

int ret = 0;

ret = avfilter_graph_create_filter(&_filter_ctx_src_spk, filter_src_spk, pad_name_spk, args_spk, NULL, _filter_graph);

if (ret < 0)

{

printf("Filter: failed to call avfilter_graph_create_filter -- src spk\n");

return -1;

}

ret = avfilter_graph_create_filter(&_filter_ctx_src_mic, filter_src_mic, pad_name_mic, args_mic, NULL, _filter_graph);

if (ret < 0)

{

printf("Filter: failed to call avfilter_graph_create_filter -- src mic\n");

return -1;

}

ret = avfilter_graph_create_filter(&_filter_ctx_src_net, filter_src_net, pad_name_net, args_net, NULL, _filter_graph);

if (ret < 0)

{

printf("Filter: failed to call avfilter_graph_create_filter -- src net\n");

return -1;

}

ret = avfilter_graph_create_filter(&_filter_ctx_sink, filter_sink, "out", NULL, NULL, _filter_graph);

if (ret < 0)

{

printf("Filter: failed to call avfilter_graph_create_filter -- sink\n");

return -1;

}

AVCodecContext* encodec_ctx = _fmt_ctx_out->streams[_index_a_out]->codec;

ret = av_opt_set_bin(_filter_ctx_sink, "sample_fmts", (uint8_t*)&encodec_ctx->sample_fmt, sizeof(encodec_ctx->sample_fmt), AV_OPT_SEARCH_CHILDREN);

if (ret < 0)

{

printf("Filter: failed to call av_opt_set_bin -- sample_fmts\n");

return -1;

}

ret = av_opt_set_bin(_filter_ctx_sink, "channel_layouts", (uint8_t*)&encodec_ctx->channel_layout, sizeof(encodec_ctx->channel_layout), AV_OPT_SEARCH_CHILDREN);

if (ret < 0)

{

printf("Filter: failed to call av_opt_set_bin -- channel_layouts\n");

return -1;

}

ret = av_opt_set_bin(_filter_ctx_sink, "sample_rates", (uint8_t*)&encodec_ctx->sample_rate, sizeof(encodec_ctx->sample_rate), AV_OPT_SEARCH_CHILDREN);

if (ret < 0)

{

printf("Filter: failed to call av_opt_set_bin -- sample_rates\n");

return -1;

}

filter_output_spk->name = av_strdup(pad_name_spk);

filter_output_spk->filter_ctx = _filter_ctx_src_spk;

filter_output_spk->pad_idx = 0;

filter_output_spk->next = filter_output_mic;

filter_output_mic->name = av_strdup(pad_name_mic);

filter_output_mic->filter_ctx = _filter_ctx_src_mic;

filter_output_mic->pad_idx = 0;

filter_output_mic->next = filter_output_net; // Key point: the sources are chained through next

filter_output_net->name = av_strdup(pad_name_net);

filter_output_net->filter_ctx = _filter_ctx_src_net;

filter_output_net->pad_idx = 0;

filter_output_net->next = NULL;

filter_output_vol->name = av_strdup(pad_name_vol);

filter_output_vol->filter_ctx = _filter_ctx_src_vol;

filter_output_vol->pad_idx = 0;

filter_output_vol->next = filter_input;

filter_input->name = av_strdup("out");

filter_input->filter_ctx = _filter_ctx_sink;

filter_input->pad_idx = 0;

filter_input->next = NULL;

AVFilterInOut* filter_outputs[3];

filter_outputs[0] = filter_output_spk;

filter_outputs[1] = filter_output_mic;

filter_outputs[2] = filter_output_net;

AVFilterInOut* filter_volinputs[2];

filter_volinputs[0] = filter_output_vol;

filter_volinputs[1] = filter_input;

ret = avfilter_graph_parse_ptr(_filter_graph, filter_desc, &filter_input, filter_outputs, NULL);

if (ret < 0)

{

printf("Filter: failed to call avfilter_graph_parse_ptr\n");

return -1;

}

ret = avfilter_graph_config(_filter_graph, NULL);

if (ret < 0)

{

printf("Filter: failed to call avfilter_graph_config\n");

return -1;

}

avfilter_inout_free(&filter_input);

//av_free(filter_src_spk);

//av_free(filter_src_mic);

//av_free(filter_src_net);

avfilter_inout_free(filter_outputs);

//av_free(filter_outputs);

char* temp = avfilter_graph_dump(_filter_graph, NULL);

printf("%s\n", temp);

av_free(temp); // the dump string is allocated by ffmpeg and must be released

return 0;

}

Pay attention to the filter relationship description passed in as const char* filter_desc:

const char* filter_desc = "[in0][in1][in2]amix=inputs=3[out]";

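To explain: [in0] corresponds to const char* pad_name_spk = "in0"; above and names one source filter. [in0][in1][in2] are the input nodes, amix is the mixing filter, inputs=3 is its parameter saying there are three inputs, and [out] is the output. For a more detailed explanation, see the blog post "[ffmpeg] 滤波", which covers this clearly.

Pay attention to this piece of code: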
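If the number of inputs varies, the description string can be generated instead of hard-coded. The helper below is a sketch under the assumption that the source pads are always named in0, in1, ... and the sink pad is named out, as in this article; `build_amix_desc` is a made-up name, not part of ffmpeg.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Build "[in0][in1]...amix=inputs=N[out]" into buf.
 * Returns 0 on success, -1 if buf is too small. Hypothetical helper. */
int build_amix_desc(char *buf, size_t size, int inputs)
{
    assert(buf != NULL && inputs > 0);
    size_t used = 0;
    for (int i = 0; i < inputs; i++) {
        /* one label per source pad, in the order the sources are chained */
        int n = snprintf(buf + used, size - used, "[in%d]", i);
        if (n < 0 || (size_t)n >= size - used) return -1;
        used += (size_t)n;
    }
    int n = snprintf(buf + used, size - used, "amix=inputs=%d[out]", inputs);
    if (n < 0 || (size_t)n >= size - used) return -1;
    return 0;
}
```

Calling it with inputs set to 3 yields the same string as the hard-coded filter_desc above, which can then be passed to InitFilter.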

filter_output_spk->name = av_strdup(pad_name_spk);

filter_output_spk->filter_ctx = _filter_ctx_src_spk;

filter_output_spk->pad_idx = 0;

filter_output_spk->next = filter_output_mic;

filter_output_mic->name = av_strdup(pad_name_mic);

filter_output_mic->filter_ctx = _filter_ctx_src_mic;

filter_output_mic->pad_idx = 0;

filter_output_mic->next = filter_output_net; // Key point: the sources are chained through next

filter_output_net->name = av_strdup(pad_name_net);

filter_output_net->filter_ctx = _filter_ctx_src_net;

filter_output_net->pad_idx = 0;

filter_output_net->next = NULL;

filter_output_vol->name = av_strdup(pad_name_vol);

filter_output_vol->filter_ctx = _filter_ctx_src_vol;

filter_output_vol->pad_idx = 0;

filter_output_vol->next = filter_input;

filter_input->name = av_strdup("out");

filter_input->filter_ctx = _filter_ctx_sink;

filter_input->pad_idx = 0;

filter_input->next = NULL;

AVFilterInOut* filter_outputs[3];

filter_outputs[0] = filter_output_spk;

filter_outputs[1] = filter_output_mic;

filter_outputs[2] = filter_output_net;

The next fields are filled in here, which chains the three inputs together into one linked list.

4. Build the input threads
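Why the chaining matters can be shown with a stripped-down list. The parser is handed only the head of the list, so any node not reachable through next is invisible to it. `InOut` below is a hypothetical stand-in for AVFilterInOut that keeps just the two fields relevant here.

```c
#include <assert.h>
#include <stddef.h>

typedef struct InOut {       /* hypothetical stand-in for AVFilterInOut */
    const char *name;
    struct InOut *next;
} InOut;

/* Link nodes[0] -> nodes[1] -> ... -> NULL and return the head. */
InOut *chain(InOut **nodes, int n)
{
    assert(nodes != NULL && n > 0);
    for (int i = 0; i < n; i++)
        nodes[i]->next = (i + 1 < n) ? nodes[i + 1] : NULL;
    return nodes[0];
}

/* Walk the list the way a consumer that only holds the head would. */
int count_from_head(const InOut *head)
{
    int n = 0;
    for (const InOut *p = head; p != NULL; p = p->next)
        n++;
    return n;
}
```

If the mic node's next were left NULL, a walk from the head would see only two sources and the three-input amix description could not be matched up.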

void * NetworkCapThreadProc(void *lpParam)

{

AVFrame* pFrame = av_frame_alloc();

AVPacket packet;

av_init_packet(&packet);

int got_sound;

while (_state == RUNNING)

{

packet.data = NULL;

packet.size = 0;

if (av_read_frame(_fmt_ctx_net, &packet) < 0)

{

printf("there is no more data from the net input\n");

break;

}

if (packet.stream_index == _index_net)

{

if (avcodec_decode_audio4(_fmt_ctx_net->streams[_index_net]->codec, pFrame, &got_sound, &packet) < 0)

{

break;

}

av_free_packet(&packet);

if (!got_sound)

{

continue;

}

int fifo_net_space = av_audio_fifo_space(_fifo_net);

while(fifo_net_space < pFrame->nb_samples && _state == RUNNING)

{

usleep(10*1000);

printf("_fifo_net full !\n");

fifo_net_space = av_audio_fifo_space(_fifo_net);

}

if (fifo_net_space >= pFrame->nb_samples)

{

//EnterCriticalSection(&_section_spk);

pthread_mutex_lock(&mutex_net);

int nWritten = av_audio_fifo_write(_fifo_net, (void**)pFrame->data, pFrame->nb_samples);

//LeaveCriticalSection(&_section_spk);

pthread_mutex_unlock(&mutex_net);

}

}

else

{

av_free_packet(&packet); // packets from other streams must be released too

}

}

av_frame_free(&pFrame);

return NULL;

}

5. Process the data

while (_state != FINISHED)

{

if (kbhit())

{

_state = STOPPED;

break;

}

else

{

int ret = 0;

AVFrame* pFrame_spk = av_frame_alloc();

AVFrame* pFrame_mic = av_frame_alloc();

AVFrame* pFrame_net = av_frame_alloc();

AVPacket packet_out;

int got_packet_ptr = 0;

int fifo_spk_size = av_audio_fifo_size(_fifo_spk);

int fifo_mic_size = av_audio_fifo_size(_fifo_mic);

int fifo_net_size = av_audio_fifo_size(_fifo_net);

int frame_spk_min_size = _fmt_ctx_spk->streams[_index_spk]->codec->frame_size;

int frame_mic_min_size = _fmt_ctx_mic->streams[_index_mic]->codec->frame_size;

int frame_net_min_size = _fmt_ctx_net->streams[_index_net]->codec->frame_size;

printf("get mini frame size : %d ###################\n",frame_spk_min_size);

if (fifo_spk_size >= frame_spk_min_size && fifo_mic_size >= frame_mic_min_size && fifo_net_size >= frame_net_min_size)

{

tmpFifoFailed = 0;

pFrame_spk->nb_samples = frame_spk_min_size;

pFrame_spk->channel_layout = _fmt_ctx_spk->streams[_index_spk]->codec->channel_layout;

pFrame_spk->format = _fmt_ctx_spk->streams[_index_spk]->codec->sample_fmt;

pFrame_spk->sample_rate = _fmt_ctx_spk->streams[_index_spk]->codec->sample_rate;

av_frame_get_buffer(pFrame_spk, 0);

pFrame_mic->nb_samples = frame_mic_min_size;

pFrame_mic->channel_layout = _fmt_ctx_mic->streams[_index_mic]->codec->channel_layout;

pFrame_mic->format = _fmt_ctx_mic->streams[_index_mic]->codec->sample_fmt;

pFrame_mic->sample_rate = _fmt_ctx_mic->streams[_index_mic]->codec->sample_rate;

av_frame_get_buffer(pFrame_mic, 0);

pFrame_net->nb_samples = frame_net_min_size;

pFrame_net->channel_layout = _fmt_ctx_net->streams[_index_net]->codec->channel_layout;

pFrame_net->format = _fmt_ctx_net->streams[_index_net]->codec->sample_fmt;

pFrame_net->sample_rate = _fmt_ctx_net->streams[_index_net]->codec->sample_rate;

av_frame_get_buffer(pFrame_net, 0);

pthread_mutex_lock(&mutex_spk);

//EnterCriticalSection(&_section_spk);

ret = av_audio_fifo_read(_fifo_spk, (void**)pFrame_spk->data, frame_spk_min_size);

//LeaveCriticalSection(&_section_spk);

pthread_mutex_unlock(&mutex_spk);

pthread_mutex_lock(&mutex_mic);

//EnterCriticalSection(&_section_mic);

ret = av_audio_fifo_read(_fifo_mic, (void**)pFrame_mic->data, frame_mic_min_size);

//LeaveCriticalSection(&_section_mic);

pthread_mutex_unlock(&mutex_mic);

pthread_mutex_lock(&mutex_net);

//EnterCriticalSection(&_section_net);

ret = av_audio_fifo_read(_fifo_net, (void**)pFrame_net->data, frame_net_min_size);

//LeaveCriticalSection(&_section_net);

pthread_mutex_unlock(&mutex_net);

pFrame_spk->pts = av_frame_get_best_effort_timestamp(pFrame_spk);

pFrame_mic->pts = av_frame_get_best_effort_timestamp(pFrame_mic);

pFrame_net->pts = av_frame_get_best_effort_timestamp(pFrame_net);

BufferSourceContext* s = (BufferSourceContext*)_filter_ctx_src_spk->priv;

bool b1 = (s->sample_fmt != pFrame_spk->format);

bool b2 = (s->sample_rate != pFrame_spk->sample_rate);

bool b3 = (s->channel_layout != pFrame_spk->channel_layout);

bool b4 = (s->channels != pFrame_spk->channels);

ret = av_buffersrc_add_frame(_filter_ctx_src_spk, pFrame_spk);

if (ret < 0)

{

printf("Mixer: failed to call av_buffersrc_add_frame (speaker)\n");

break;

}

ret = av_buffersrc_add_frame(_filter_ctx_src_mic, pFrame_mic);

if (ret < 0)

{

printf("Mixer: failed to call av_buffersrc_add_frame (microphone)\n");

break;

}

ret = av_buffersrc_add_frame(_filter_ctx_src_net, pFrame_net);

if (ret < 0)

{

printf("Mixer: failed to call av_buffersrc_add_frame (network)\n");

break;

}

while (1)

{

AVFrame* pFrame_out = av_frame_alloc();

// AVERROR(EAGAIN) means the filter graph has no output frame available yet

ret = av_buffersink_get_frame_flags(_filter_ctx_sink, pFrame_out, 0 );

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)

{

// no frame available yet (EAGAIN) or end of stream (EOF): not an error

av_frame_free(&pFrame_out);

break;

}

if (ret < 0)

{

printf("Mixer: failed to call av_buffersink_get_frame_flags ret : %d \n",ret);

av_frame_free(&pFrame_out);

break;

}

if (pFrame_out->data[0] != NULL)

{

av_init_packet(&packet_out);

packet_out.data = NULL;

packet_out.size = 0;

ret = avcodec_encode_audio2(_fmt_ctx_out->streams[_index_a_out]->codec, &packet_out, pFrame_out, &got_packet_ptr);

if (ret < 0)

{

printf("Mixer: failed to call avcodec_encode_audio2\n");

av_frame_free(&pFrame_out);

break;

}

if (got_packet_ptr)

{

packet_out.stream_index = _index_a_out;

packet_out.pts = frame_count * _fmt_ctx_out->streams[_index_a_out]->codec->frame_size;

packet_out.dts = packet_out.pts;

packet_out.duration = _fmt_ctx_out->streams[_index_a_out]->codec->frame_size;

packet_out.pts = av_rescale_q_rnd(packet_out.pts,

_fmt_ctx_out->streams[_index_a_out]->codec->time_base,

_fmt_ctx_out->streams[_index_a_out]->time_base,

(AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));

packet_out.dts = packet_out.pts;

packet_out.duration = av_rescale_q_rnd(packet_out.duration,

_fmt_ctx_out->streams[_index_a_out]->codec->time_base,

_fmt_ctx_out->streams[_index_a_out]->time_base,

(AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));

frame_count++;

ret = av_interleaved_write_frame(_fmt_ctx_out, &packet_out);

if (ret < 0)

{

printf("Mixer: failed to call av_interleaved_write_frame\n");

}

//printf("Mixer: write frame to file\n");

}

av_free_packet(&packet_out);

}

av_frame_free(&pFrame_out);

}

}

else

{

tmpFifoFailed++;

usleep(20*1000);

if (tmpFifoFailed > 300)

{

_state = STOPPED;

usleep(30*1000);

break;

}

}

av_frame_free(&pFrame_spk);

av_frame_free(&pFrame_mic);

av_frame_free(&pFrame_net);

}

}
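The timestamp handling in the loop above boils down to one piece of arithmetic: count samples in the codec time base (1/sample_rate), then convert to the stream time base. The sketch below reproduces that arithmetic with a hypothetical `Rational` type mirroring AVRational. It assumes non-negative timestamps and a fixed number of samples per frame, and it does not guard against overflow the way av_rescale_q_rnd does. Note also that for PCM encoders the codec's frame_size field may be 0, in which case counting the samples actually written is the safer basis.

```c
#include <assert.h>
#include <stdint.h>

typedef struct Rational { int num; int den; } Rational; /* hypothetical, mirrors AVRational */

/* Convert ts from time base `from` to time base `to`, rounding to nearest.
 * Assumes ts >= 0 and strictly positive numerators and denominators. */
int64_t rescale_ts(int64_t ts, Rational from, Rational to)
{
    assert(ts >= 0 && from.num > 0 && from.den > 0 && to.num > 0 && to.den > 0);
    int64_t num = (int64_t)from.num * to.den;
    int64_t den = (int64_t)from.den * to.num;
    return (ts * num + den / 2) / den;
}

/* pts of frame number frame_index when every frame carries frame_size samples. */
int64_t frame_pts(int64_t frame_index, int frame_size, Rational codec_tb, Rational stream_tb)
{
    return rescale_ts(frame_index * frame_size, codec_tb, stream_tb);
}
```

For example, 44100 samples in a 1/44100 time base correspond to 1000 ticks in a 1/1000 time base, that is, exactly one second.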

For the complete code, download audioMix.c.