Notes on a simple integration of Ceph and k8s

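Final environment and what you will learn:

Use kubeadm with the Aliyun mirrors to build a single-node k8s environment.

Use ceph-deploy to build a Ceph cluster with 1 mon, 1 mgr, and 3 OSDs.

Have k8s use the Ceph cluster's RBD and CephFS as storage backends.

Have Docker Harbor store its images in Ceph object storage.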

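Machine plan for the lab environment:

Note: the laptop needs at least 8 GB of RAM; with less than that, things run sluggishly.

| Host (roles) | IP | Spec |
| --- | --- | --- |
| k8s single node, ceph (admin, node1, ceph-client) | 192.168.8.138 | CentOS 7.x, 2c/6G, one extra disk (at least 20G); k8s: v1.18.6; ceph: 12.2.13 luminous (stable) |
| node2 | 192.168.8.139 | CentOS 7.x, 2c/700M, one extra disk (at least 20G); ceph: 12.2.13 luminous (stable) |
| node3 | 192.168.8.140 | CentOS 7.x, 2c/700M, one extra disk (at least 20G); ceph: 12.2.13 luminous (stable) |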

Installing single-node k8s with kubeadm (run on machine 138)

Set the time zone and hostname, sync the clock, and disable the firewall, swap, and SELinux.

timedatectl set-timezone 'Asia/Shanghai'

ntpdate ntp1.aliyun.com

hostnamectl set-hostname node1 && echo "192.168.8.138 node1" >> /etc/hosts

systemctl stop firewalld.service && systemctl disable firewalld.service

setenforce 0 && swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

Add the yum repositories

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

Remove any old Docker packages and install Docker's prerequisites

yum remove docker \
docker-client \
docker-client-latest \
docker-common \
docker-latest \
docker-latest-logrotate \
docker-logrotate \
docker-engine -y

yum install yum-utils device-mapper-persistent-data lvm2 -y

Install Docker

# Add the Docker repository.

yum-config-manager \
--add-repo \
https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install Docker CE. This step can take a long time; if it fails, run it again.

yum update -y && yum install -y \
containerd.io-1.2.13 \
docker-ce-19.03.11 \
docker-ce-cli-19.03.11

# Create /etc/docker directory.

mkdir /etc/docker
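The `cat > /etc/docker/daemon.json` command that follows is cut off in these notes, so the original file contents are unknown. As a sketch only, assuming the settings that the Kubernetes container-runtime guide suggested for Docker around v1.18 (systemd cgroup driver, json-file logging, overlay2), a helper could write the file like this. The function name `write_docker_daemon_json` and its optional path argument are illustrative, not from the notes.

```shell
# Assumption: these settings follow the Kubernetes v1.18-era guidance for Docker,
# not the (lost) contents of the original heredoc.
write_docker_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": { "max-size": "100m" },
  "storage-driver": "overlay2"
}
EOF
}

# Usage (as root), then reload Docker so the settings take effect:
#   write_docker_daemon_json
#   systemctl daemon-reload && systemctl enable docker && systemctl restart docker
```

Using the systemd cgroup driver keeps Docker and the kubelet on the same cgroup manager, which is what kubeadm expects on CentOS 7.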

# Setup daemon.

cat > /etc/docker/daemon.json <

Install kubeadm

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

yum install -y kubelet-1.18.6 kubeadm-1.18.6 kubectl-1.18.6

systemctl enable kubelet && systemctl start kubelet

Initialize k8s

kubeadm init --image-repository registry.aliyuncs.com/google_containers --apiserver-advertise-address=192.168.8.138 --kubernetes-version 1.18.6 --pod-network-cidr=10.244.0.0/16 -v 5

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Add the network plugin

curl https://docs.projectcalico.org/manifests/calico.yaml -O

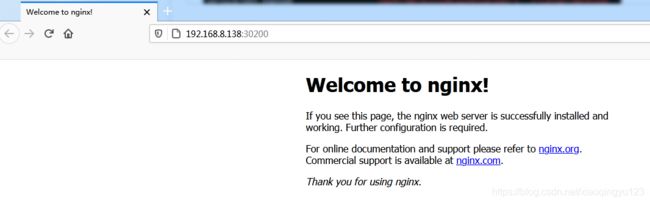

kubectl apply -f calico.yaml

Test that k8s works

kubectl taint node node1 node-role.kubernetes.io/master-

kubectl run test --image=nginx -l test=test

kubectl expose pod test --port=80 --target-port=80 --type=NodePort
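Once the pod is exposed, the assigned NodePort can be read with a jsonpath query and probed with curl, rather than read off the service table by eye. A minimal sketch, assuming the service is named `test` as above and the node IP is 192.168.8.138; the helper names are illustrative.

```shell
# Print the NodePort assigned to a service (default: the "test" service above).
service_node_port() {
  local svc="${1:-test}"
  kubectl get service "$svc" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Fetch the nginx welcome page through the NodePort; non-zero exit on failure.
probe_node_port() {
  local node_ip="${1:-192.168.8.138}" svc="${2:-test}" port
  port="$(service_node_port "$svc")" || return 1
  curl -fsS --max-time 5 "http://${node_ip}:${port}/" > /dev/null
}

# Usage:
#   probe_node_port && echo "nginx reachable through the NodePort"
```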

kubectl get service

Installing Ceph

Set the hostname and time zone, sync the clock, and disable the firewall (on all machines)

#138

hostnamectl set-hostname node1

#139

hostnamectl set-hostname node2

#140

hostnamectl set-hostname node3

# everything below applies to all machines

# edit /etc/hosts and add:

192.168.8.138 admin node1 ceph-client

192.168.8.139 node2

192.168.8.140 node3
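Adding these entries by hand on three machines is easy to get wrong, and re-running a plain `echo >>` duplicates lines. A small sketch of an idempotent helper, assuming the target is /etc/hosts unless another path is given (the function name is illustrative):

```shell
# Append a hosts line only if that exact line is not already present.
add_hosts_entry() {
  local line="$1" file="${2:-/etc/hosts}"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root, on every machine):
#   add_hosts_entry "192.168.8.138 admin node1 ceph-client"
#   add_hosts_entry "192.168.8.139 node2"
#   add_hosts_entry "192.168.8.140 node3"
```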

# all

timedatectl set-timezone 'Asia/Shanghai'

ntpdate ntp1.aliyun.com

systemctl stop firewalld.service && systemctl disable firewalld.service

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

Create the service user, grant it sudo rights, and configure the yum repositories

# all machines

useradd cephu

passwd cephu

# all machines

visudo    # below the line "root ALL=(ALL) ALL", add:

cephu ALL=(root) NOPASSWD:ALL

# all machines

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

# machine 138 (admin)

# vim /etc/profile

export CEPH_DEPLOY_REPO_URL=http://mirrors.aliyun.com/ceph/rpm-luminous/el7

export CEPH_DEPLOY_GPG_URL=http://mirrors.aliyun.com/ceph/keys/release.asc

# vim /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for $basearch

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

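priority=1

On the admin machine, let the service user log in to the other machines without a password, and install ceph-deploy

# machine 138 (admin)

yum install ceph-deploy -y

# machine 138 (admin)

su - cephu

# generate a key pair first if cephu does not have one yet
ssh-keygen

ssh-copy-id cephu@node1

ssh-copy-id cephu@node2

ssh-copy-id cephu@node3

Initialize the Ceph cluster

# machine 138: run all ceph-deploy commands below on the admin node, inside the my-cluster directory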

su - cephu

mkdir my-cluster

cd my-cluster/

# edit the SSH config that ceph-deploy relies on

vim ~/.ssh/config

# add the following

Host node1

Hostname node1

User cephu

Host node2

Hostname node2

User cephu

Host node3

Hostname node3

User cephu

chmod 644 ~/.ssh/config

wget https://files.pythonhosted.org/packages/5f/ad/1fde06877a8d7d5c9b60eff7de2d452f639916ae1d48f0b8f97bf97e570a/distribute-0.7.3.zip

unzip distribute-0.7.3.zip

cd distribute-0.7.3/

sudo python setup.py install

# go back to the cluster directory before running ceph-deploy
cd ~/my-cluster

ceph-deploy new node1

ceph-deploy install --release luminous node1 node2 node3

ceph-deploy mon create-initial

ceph-deploy admin node1 node2 node3

ceph-deploy mgr create node1

ceph-deploy osd create --data /dev/sdb node1

ceph-deploy osd create --data /dev/sdb node2

ceph-deploy osd create --data /dev/sdb node3

sudo ceph auth get-or-create mgr.node1 mon 'allow profile mgr' osd 'allow *' mds 'allow *'

sudo ceph-mgr -i node1

sudo ceph status

sudo ceph mgr module enable dashboard

sudo ceph config-key set mgr/dashboard/node1/server_addr 192.168.8.138

sudo netstat -nltp | grep 7000

Verify the Ceph dashboard by opening it in a browser (http://192.168.8.138:7000, per the settings above).
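The same check can be scripted. A sketch, assuming the luminous dashboard answers plain HTTP on port 7000 at the address configured above; `dashboard_up` is a hypothetical helper name.

```shell
# Return 0 if an HTTP server answers at the dashboard address.
dashboard_up() {
  local host="${1:-192.168.8.138}" port="${2:-7000}"
  curl -fsS --max-time 5 -o /dev/null "http://${host}:${port}/"
}

# Usage:
#   dashboard_up && echo "dashboard reachable" || echo "dashboard not reachable"
```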

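Using Ceph from k8s

Reference: https://blog.51cto.com/leejia/2501080?hmsr=joyk.com&utm_source=joyk.com&utm_medium=referral

Static persistent volumes

Each time storage is needed, a storage administrator must first create the image on the storage cluster by hand; only then can k8s use it.

Create the Ceph secret

k8s needs a secret for accessing Ceph; it is used mainly when k8s maps RBD images.

1. On the Ceph master node, run the following to get the admin key, base64-encoded (in production, create a dedicated user for k8s instead):

# ceph auth get-key client.admin | base64

QVFCd3BOQmVNMCs5RXhBQWx3aVc3blpXTmh2ZjBFMUtQSHUxbWc9PQ==

2. Create the secret in k8s from a manifest: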

# vim ceph-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
data:
  key: QVFCd3BOQmVNMCs5RXhBQWx3aVc3blpXTmh2ZjBFMUtQSHUxbWc9PQ==
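The `key` field is simply the base64 encoding of the raw Ceph key, so the manifest can be generated instead of pasted. A sketch under that assumption; `render_ceph_secret` is an illustrative name, and the optional third argument sets the namespace.

```shell
# Render a Secret manifest from a raw (not yet encoded) Ceph key.
render_ceph_secret() {
  local name="$1" raw_key="$2" namespace="${3:-default}" encoded
  # printf avoids the trailing newline that echo would add to the encoded value.
  encoded="$(printf '%s' "$raw_key" | base64 | tr -d '\n')"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: ${namespace}
data:
  key: ${encoded}
EOF
}

# Usage:
#   render_ceph_secret ceph-secret "$(ceph auth get-key client.admin)" | kubectl apply -f -
```

Piping the output straight into `kubectl apply -f -` keeps the key out of a file on disk.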

# kubectl apply -f ceph-secret.yaml

Create the storage pool

List the existing pools

ceph osd lspools

Create the pool

ceph osd pool create mypool 128 128
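The value 128 is consistent with the rule of thumb in the Ceph documentation: roughly (number of OSDs × 100) / replica count, rounded up to the next power of two. A sketch of that arithmetic, assuming the default replica count of 3; the function name is illustrative, and the result is a starting point rather than a tuned value, since the PG budget is shared by every pool on the cluster.

```shell
# Suggest a PG count: (osds * 100) / replicas, rounded up to a power of two.
suggest_pg_num() {
  local osds="$1" replicas="${2:-3}" target pg=1
  target=$(( osds * 100 / replicas ))
  while [ "$pg" -lt "$target" ]; do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}

suggest_pg_num 3 3   # prints 128 for this 3-OSD cluster
```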

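Initialize it for RBD

rbd pool init mypool

Create the image

The image goes into the mypool pool created above. Run the following on a machine with the Ceph client installed, or directly on the Ceph master node: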

# rbd create mypool/image1 --size 1024 --image-feature layering

# rbd info mypool/image1

rbd image 'image1':
	size 1GiB in 256 objects
	order 22 (4MiB objects)
	block_name_prefix: rbd_data.12c56b8b4567
	format: 2
	features: layering
	flags:
	create_timestamp: Tue Sep 1 14:53:13 2020

Create the PV

# vim pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: ceph-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  - ReadOnlyMany
  rbd:
    monitors:
    - 192.168.8.138:6789
    - 192.168.8.139:6789
    - 192.168.8.140:6789
    pool: mypool
    image: image1
    user: admin
    secretRef:
      name: ceph-secret
    fsType: ext4
  persistentVolumeReclaimPolicy: Retain

# kubectl apply -f pv.yaml

persistentvolume/ceph-pv created

# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

ceph-pv 1Gi RWO,ROX Retain Available 76s

Create the PVC

# vim pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-claim
spec:
  accessModes:
  - ReadWriteOnce
  - ReadOnlyMany
  resources:
    requests:
      storage: 1Gi

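# kubectl apply -f pvc.yaml

Once the claim is created, k8s binds it to the best-matching PV. Two conditions must hold: the PV's capacity must cover the claim's request, and the PV's access modes must include every mode the claim asks for. The PVC above therefore binds to the PV we just created.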
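The two matching rules can be written down as a small check. This is a deliberately simplified model, not the real binder: it assumes sizes are whole numbers of Gi and ignores storage class, label selectors, and volume mode, which k8s also takes into account. The function name is illustrative.

```shell
# Return 0 if a PV could satisfy a claim under the two rules described above.
# Sizes are plain integers in Gi; modes are space-separated (e.g. "RWO ROX").
pv_satisfies_claim() {
  local pv_gi="$1" pv_modes="$2" claim_gi="$3" claim_modes="$4" mode
  # Rule 1: the PV must be at least as large as the request.
  [ "$pv_gi" -ge "$claim_gi" ] || return 1
  # Rule 2: every access mode the claim asks for must be offered by the PV.
  for mode in $claim_modes; do
    case " $pv_modes " in
      *" $mode "*) ;;
      *) return 1 ;;
    esac
  done
  return 0
}

# Usage: ceph-pv (1Gi, RWO+ROX) against ceph-claim (1Gi, RWO+ROX)
#   pv_satisfies_claim 1 "RWO ROX" 1 "RWO ROX" && echo "would bind"
```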

Check the PVC binding:

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

ceph-claim Bound ceph-pv 1Gi RWO,ROX 13m

# vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: ceph-pod
spec:
  volumes:
  - name: ceph-volume
    persistentVolumeClaim:
      claimName: ceph-claim
  containers:
  - name: ceph-busybox
    image: busybox
    command: ["/bin/sleep", "60000000000"]
    volumeMounts:
    - name: ceph-volume
      mountPath: /usr/share/busybox

# kubectl apply -f pod.yaml

# write a file

# kubectl exec -it ceph-pod -- "/bin/touch" "/usr/share/busybox/test.txt"

Verify

# map and mount the image with the kernel RBD client

# rbd map mypool/image1

/dev/rbd1

# mkdir /mnt/ceph-rbd && mount /dev/rbd1 /mnt/ceph-rbd/ && ls /mnt/ceph-rbd/

Dynamic persistent volumes

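When k8s uses a StorageClass to request Ceph storage dynamically, the controller-manager has to talk to the Ceph cluster through the rbd command, but the default k8s.gcr.io/kube-controller-manager image does not ship the Ceph rbd client. The k8s project recommends solving this with an external provisioner: a standalone program that follows the specification k8s defines.

Following that recommendation, we use the external rbd-provisioner. Run the following on the k8s master: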

# git clone https://github.com/kubernetes-incubator/external-storage.git

# cd external-storage/ceph/rbd/deploy

# sed -r -i "s/namespace: [^ ]+/namespace: kube-system/g" ./rbac/clusterrolebinding.yaml ./rbac/rolebinding.yaml

# kubectl -n kube-system apply -f ./rbac

# kubectl get pods -n kube-system -l app=rbd-provisioner

NAME READY STATUS RESTARTS AGE

rbd-provisioner-c968dcb4b-fklhw 1/1 Running 0 7m16s

Create a regular user for k8s to map RBD images

Create a dedicated pool and user for k8s in the Ceph cluster:

# ceph osd pool create kube 60

# rbd pool init kube

# ceph auth get-or-create client.kube mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=kube' -o ceph.client.kube.keyring

Create the secrets for the admin and kube users in the k8s cluster:

# the secret for the admin account must be in the kube-system namespace

# ceph auth get-key client.admin | base64

QVFDYjZFMWZkUEpNQkJBQWsxMmI1bE5keFV5M0NsVjFtSitYeEE9PQ==

# vim ceph-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
  namespace: kube-system
data:
  key: QVFDYjZFMWZkUEpNQkJBQWsxMmI1bE5keFV5M0NsVjFtSitYeEE9PQ==

# kubectl apply -f ceph-secret.yaml

secret/ceph-secret created

# kubectl get secret ceph-secret -n kube-system

NAME TYPE DATA AGE

ceph-secret Opaque 1 33s

# the regular user's secret goes in the namespace where the PVCs are created

# ceph auth get-key client.kube | base64

QVFCKytVMWZQT040SVJBQUFXS0JCNXZuTk94VFp1eU5UVVV0cHc9PQ==

# vim ceph-kube-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-kube-secret
data:
  key: QVFCKytVMWZQT040SVJBQUFXS0JCNXZuTk94VFp1eU5UVVV0cHc9PQ==
type: kubernetes.io/rbd

# kubectl apply -f ceph-kube-secret.yaml

# kubectl get secret ceph-kube-secret

NAME TYPE DATA AGE

ceph-kube-secret kubernetes.io/rbd 1 2m27s

Point the StorageClass's provisioner at the provisioner we just added:

# write the StorageClass manifest

# vim sc.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-rbd
  annotations:
    storageclass.beta.kubernetes.io/is-default-class: "true"
provisioner: ceph.com/rbd
parameters:
  monitors: 192.168.8.138:6789,192.168.8.139:6789,192.168.8.140:6789
  adminId: admin
  adminSecretName: ceph-secret
  adminSecretNamespace: kube-system
  pool: kube
  userId: kube
  userSecretName: ceph-kube-secret
  userSecretNamespace: default
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"

# kubectl apply -f sc.yaml

storageclass.storage.k8s.io/ceph-rbd created

[root@node1 storage]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

ceph-rbd (default) ceph.com/rbd Delete Immediate false 10s
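Scripts that depend on a default class being present can check for it by parsing the same table. A sketch, assuming kubectl prints the `(default)` marker as a separate second column, as in the output above; `default_storageclass` is an illustrative name.

```shell
# Print the name of the StorageClass marked "(default)" in kubectl's table output.
default_storageclass() {
  kubectl get storageclass --no-headers | awk '$2 == "(default)" { print $1 }'
}

# Usage:
#   [ "$(default_storageclass)" = "ceph-rbd" ] && echo "ceph-rbd is the default class"
```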

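Create the PVC

Because a default StorageClass is now set, the PVC can be created directly, without naming a class. It stays in the Pending state until the provisioner has created the backing image and PV:

# create the PVC

# vim pvc.yaml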

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-claim-sc
spec:
  accessModes:
  - ReadWriteOnce
  - ReadOnlyMany
  resources:
    requests:
      storage: 1Gi

# before creating the PVC

# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

ceph-pv 1Gi RWO,ROX Retain Bound default/ceph-claim 77m

# rbd ls kube

# kubectl apply -f pvc.yaml

persistentvolumeclaim/ceph-claim-sc created

# after creating the PVC: an image has been provisioned automatically for it

# rbd ls kube

kubernetes-dynamic-pvc-10cbb787-ec2b-11ea-a4fe-7e5ddf4fa4e9

Verify:

# kubectl exec -it ceph-pod-sc -- "/bin/touch" "/usr/share/busybox/test-sc.txt"

# rbd map kube/kubernetes-dynamic-pvc-10cbb787-ec2b-11ea-a4fe-7e5ddf4fa4e9

/dev/rbd3

# mkdir /mnt/ceph-rbd-sc && mount /dev/rbd3 /mnt/ceph-rbd-sc

# ls /mnt/ceph-rbd-sc/

lost+found test-sc.txt
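The verification above runs inside a pod named ceph-pod-sc, whose manifest does not appear in these notes. A hypothetical sketch, modeled on the static ceph-pod earlier and assuming the pod simply mounts the ceph-claim-sc PVC at the same path; `render_ceph_pod_sc` is an illustrative name.

```shell
# Hypothetical manifest for ceph-pod-sc. Only the pod name, the PVC name, and the
# mount path come from the notes; the rest is copied from the static example.
render_ceph_pod_sc() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: ceph-pod-sc
spec:
  volumes:
  - name: ceph-volume
    persistentVolumeClaim:
      claimName: ceph-claim-sc
  containers:
  - name: ceph-busybox
    image: busybox
    command: ["/bin/sleep", "60000000000"]
    volumeMounts:
    - name: ceph-volume
      mountPath: /usr/share/busybox
EOF
}

# Usage:
#   render_ceph_pod_sc | kubectl apply -f -
```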

Using CephFS statically:

Enable the CephFS service:

# su - cephu

# cd /home/cephu/my-cluster

# ceph-deploy mds create node1

# sudo netstat -nltp | grep mds

tcp 0 0 0.0.0.0:6805 0.0.0.0:* LISTEN 306115/ceph-mds

Create the CephFS pools:

# create the pools

sudo ceph osd pool create fs_data 32

sudo ceph osd pool create fs_metadata 24

# create the filesystem

sudo ceph fs new cephfs fs_metadata fs_data

# check the CephFS status

sudo ceph fs ls

sudo ceph mds stat

Further exercise: create a dedicated user and a new fs space

Try mounting locally

# install the client package

yum -y install ceph-common

# get the admin key

sudo ceph auth get-key client.admin

# mount

sudo mkdir /mnt/cephfs

sudo mount -t ceph 192.168.8.138:6789:/ /mnt/cephfs -o name=admin,secret=AQCb6E1fdPJMBBAAk12b5lNdxUy3ClV1mJ+XxA==

Mounting as a k8s volume

Authenticate with a secret

# ceph auth get-key client.admin | base64

QVFDYjZFMWZkUEpNQkJBQWsxMmI1bE5keFV5M0NsVjFtSitYeEE9PQ==

# vim ceph-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
data:
  key: QVFDYjZFMWZkUEpNQkJBQWsxMmI1bE5keFV5M0NsVjFtSitYeEE9PQ==

# vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cephfs
spec:
  volumes:
  - name: cephfs
    cephfs:
      monitors:
      - 192.168.8.138:6789
      path: /
      secretRef:
        name: ceph-secret
      user: admin
  containers:
  - name: cephfs
    image: nginx
    volumeMounts:
    - name: cephfs
      mountPath: /cephfs
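The notes stop at the manifest. A sketch of applying it and checking the mount, assuming the two manifests are saved as ceph-secret.yaml and pod.yaml in the current directory; `verify_cephfs_pod` is an illustrative name.

```shell
# Apply the secret and pod, wait for the pod, then write and list a test file.
verify_cephfs_pod() {
  local pod="${1:-cephfs}" mount_path="${2:-/cephfs}"
  kubectl apply -f ceph-secret.yaml &&
    kubectl apply -f pod.yaml &&
    kubectl wait --for=condition=Ready "pod/${pod}" --timeout=120s &&
    kubectl exec "$pod" -- sh -c "touch '${mount_path}/test.txt' && ls '${mount_path}'"
}

# Usage:
#   verify_cephfs_pod
```

If test.txt also shows up under /mnt/cephfs on the machine where CephFS was mounted locally, the pod and the host are looking at the same filesystem.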