Compiling Hadoop

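When working through a Hadoop learning series, compiling Hadoop yourself at least once is essential. It also lays the groundwork for studying file compression support later on.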

The version compiled here is hadoop-2.6.0-cdh5.7.0. Download the source code and the required tools from the official site first.

1. Prepare the tools and upload them to the software directory on Linux

# First install rz, the command used to upload files

yum install lrzsz

-rw-r--r-- 1 root root 8491533 Jul 11 13:57 apache-maven-3.3.9-bin.tar.gz

-rw-r--r-- 1 root root 42610549 Jul 11 13:38 hadoop-2.6.0-cdh5.7.0-src.tar.gz

-rw-r--r-- 1 root root 153530841 Aug 2 11:28 jdk-7u80-linux-x64.tar.gz

-rw-r--r-- 1 root root 2401901 Jul 11 13:54 protobuf-2.5.0.tar.gz

For better compatibility, use JDK 1.7 where possible. The Maven version should be 3.3.9 or later.
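The Maven version requirement can be checked from the shell before going further. The following is only a minimal sketch and not part of the original setup: `version_ge` is a hypothetical helper name, and it assumes `sort -V` from GNU coreutils is available.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when version $1 is greater than or equal to $2.
# Relies on `sort -V` (GNU coreutils) to order version strings.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Compare the installed Maven version (if any) against the 3.3.9 minimum.
if command -v mvn >/dev/null 2>&1; then
    # Pull the version number out of the "Apache Maven x.y.z" line of `mvn -v`.
    mvn_version=$(mvn -v 2>/dev/null | sed -n 's/.*Apache Maven \([0-9][0-9.]*\).*/\1/p' | head -n 1)
    if [ -n "$mvn_version" ] && version_ge "$mvn_version" "3.3.9"; then
        echo "Maven $mvn_version is new enough"
    else
        echo "Maven version '$mvn_version' is older than 3.3.9 or could not be read"
    fi
else
    echo "mvn is not on PATH yet"
fi
```

Before Maven is installed the script simply reports that `mvn` is not on PATH, so it can be re-run after the environment variables are configured.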

2. Install the required dependency libraries

yum install git

yum install -y svn ncurses-devel

yum install -y gcc gcc-c++ make cmake

yum install -y openssl openssl-devel svn ncurses-devel zlib-devel libtool

yum install -y snappy snappy-devel bzip2 bzip2-devel lzo lzo-devel lzop autoconf automake cmake
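After the packages are installed, a quick check that the build commands are actually on PATH can save a failed build later. This is a rough sketch: `missing_tools` is a hypothetical helper, and the command list is an assumption derived from the packages above (the `-devel` library packages cannot be checked this way; `rpm -q` would be needed for those).

```shell
#!/bin/sh
# Hypothetical helper: prints every command from its arguments that is not on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Commands expected from the packages installed above (assumed list).
missing=$(missing_tools git svn gcc g++ make cmake libtool autoconf automake lzop)
if [ -n "$missing" ]; then
    echo "Still missing:" $missing
else
    echo "All build tools found"
fi
```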

3. Set up the tools

① Create the required folders in the user's home directory

mkdir app mvn_repo source

② Extract the JDK, Maven, and protobuf archives from software into the app directory

tar -zxvf /home/hadoop/soft/jdk-7u80-linux-x64.tar.gz -C ~/app/

tar -zxvf /home/hadoop/soft/apache-maven-3.3.9-bin.tar.gz -C ~/app/

tar -zxvf /home/hadoop/soft/protobuf-2.5.0.tar.gz -C ~/app/

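③ Configure the environment variables for the JDK, Maven, and protobuf in /etc/profile (adjust the paths if the tools were extracted somewhere else)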

export JAVA_HOME=/home/app/jdk1.7.0_80

export PATH=$JAVA_HOME/bin/:$PATH

export MAVEN_HOME=/home/app/apache-maven-3.3.9

export MAVEN_OPTS="-Xms1024m -Xmx1024m"

export PATH=$MAVEN_HOME/bin:$PATH

export PROTOBUF_HOME=/home/app/protobuf-2.5.0

export PATH=$PROTOBUF_HOME/bin:$PATH

Then reload the configuration file

source /etc/profile
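Once the profile has been reloaded, it is worth confirming that each variable points at a real directory. The snippet below is a minimal sketch; `check_home` is a hypothetical helper, and it only tests that the directory exists, not that the tool inside it works.

```shell
#!/bin/sh
# Hypothetical helper: reports whether the directory stored in variable $1 exists.
check_home() {
    name=$1
    # Read the value of the variable whose name was passed in.
    eval "value=\${$name:-}"
    if [ -n "$value" ] && [ -d "$value" ]; then
        echo "$name ok: $value"
    else
        echo "$name missing or not a directory: $value"
        return 1
    fi
}

# Check the three variables exported above; keep going even if one fails.
for var in JAVA_HOME MAVEN_HOME PROTOBUF_HOME; do
    check_home "$var" || true
done
```

Note that `$PROTOBUF_HOME/bin` only appears after protobuf has been built and installed, so `protoc` is not expected to be available at this point.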

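④ Edit the settings.xml configuration file in Maven's conf directory

# Note: point the local repository at the folder created for it earlier (named mvn_repo in step ①; the path here spells it maven_repo, so use whichever name you actually created)

<localRepository>/home/hadoop/maven_repo/</localRepository>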

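# Add the Aliyun mirror of the central repository

# Note: it must be written inside the <mirrors> element

<mirror>
  <id>nexus-aliyun</id>
  <mirrorOf>central</mirrorOf>
  <name>Nexus aliyun</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public</url>
</mirror>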
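To show where these two settings sit relative to each other, here is a sketch that writes a minimal settings.xml to a scratch file. The output path and the `SETTINGS_OUT` variable are assumptions made for illustration; the values come from this step. Review the result before copying it over Maven's real settings.xml.

```shell
#!/bin/sh
# Writes a minimal settings.xml sketch. The output path is an assumption:
# a scratch file in the current directory unless SETTINGS_OUT is set.
out=${SETTINGS_OUT:-./settings-sketch.xml}

cat > "$out" <<'EOF'
<settings>
  <!-- Local repository directory used in this guide -->
  <localRepository>/home/hadoop/maven_repo/</localRepository>
  <mirrors>
    <!-- Aliyun mirror of Maven Central; mirror entries belong inside mirrors -->
    <mirror>
      <id>nexus-aliyun</id>
      <mirrorOf>central</mirrorOf>
      <name>Nexus aliyun</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public</url>
    </mirror>
  </mirrors>
</settings>
EOF

echo "Wrote $out"
```

Because `mirrorOf` is `central`, only requests for Maven Central are redirected to Aliyun. Artifacts hosted in the Cloudera repositories are still fetched from Cloudera directly.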

⑤ Go into the app/protobuf-2.5.0 folder and run the following commands

# --prefix= sets the path where the compiled package will be installed

[root@izj6c7pikf0xtmft4f protobuf-2.5.0]$ ./configure --prefix=/home/app/protobuf-2.5.0

[root@izj6c7pikf0xtmft4f protobuf-2.5.0]$ make

[root@izj6c7pikf0xtmft4f protobuf-2.5.0]$ make install

# Check that it took effect: if libprotoc 2.5.0 is printed, the installation worked

[root@izj6c7pikf0xtmft4f protobuf-2.5.0]$ protoc --version
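The same check can be scripted so that a wrong protoc version is caught before the long Hadoop build starts. This is a small sketch under the assumption that the build needs exactly the 2.5.0 release used in this guide; `is_protoc_250` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only when the given `protoc --version` output
# is exactly the 2.5.0 release that this guide installs.
is_protoc_250() {
    [ "$1" = "libprotoc 2.5.0" ]
}

if command -v protoc >/dev/null 2>&1; then
    if is_protoc_250 "$(protoc --version 2>&1)"; then
        echo "protoc 2.5.0 is active"
    else
        echo "unexpected protoc: $(protoc --version 2>&1)"
    fi
else
    echo "protoc not found; check that \$PROTOBUF_HOME/bin is on PATH"
fi
```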

4. Compile

① Extract the source code

[root@izj6c7pikf0xtmft4f software]$ tar -zxvf hadoop-2.6.0-cdh5.7.0-src.tar.gz -C ~/source/

② Go into the Hadoop source directory under source and run the build command

[root@izj6c7pikf0xtmft4f hadoop-2.6.0-cdh5.7.0]$ mvn clean package -Pdist,native -DskipTests -Dtar

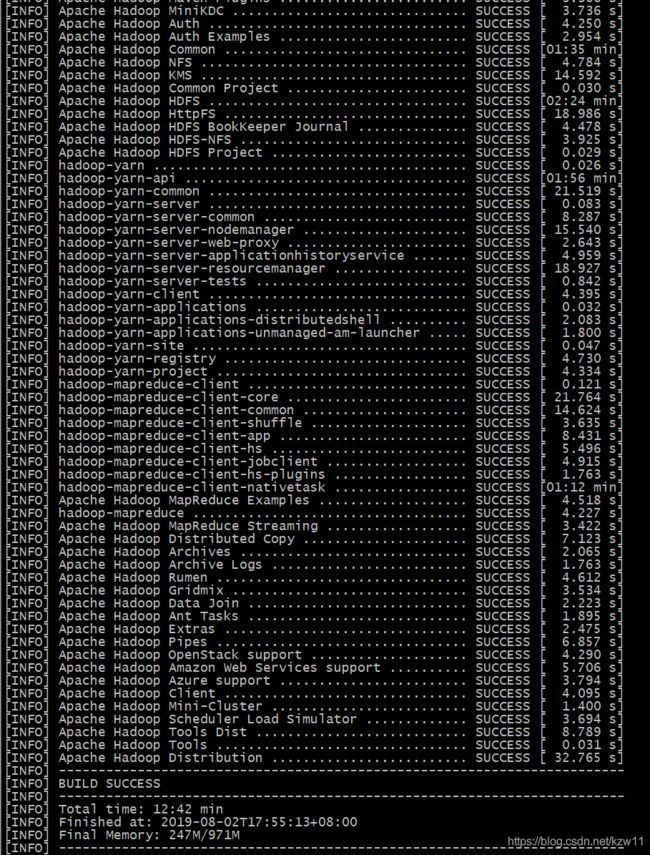

Now wait patiently; the build takes a while.

Once BUILD SUCCESS appears, the compilation has succeeded.
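Since the build runs for a long time, saving its output to a file makes the result easier to check afterwards. The following is a sketch only: the log file name `build.log`, the `BUILD_LOG` variable, and the `build_succeeded` helper are all assumptions introduced here.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when a saved Maven log contains BUILD SUCCESS.
build_succeeded() {
    grep -q "BUILD SUCCESS" "$1"
}

# Assumed usage: save the build output first, for example
#   mvn clean package -Pdist,native -DskipTests -Dtar 2>&1 | tee build.log
log=${BUILD_LOG:-build.log}
if [ -f "$log" ]; then
    if build_succeeded "$log"; then
        echo "Build finished successfully"
    else
        # Show the first few error lines as a starting point for troubleshooting.
        grep -E "^\[(ERROR|FATAL)\]" "$log" | head -n 5
    fi
else
    echo "No log found at $log"
fi
```

In Apache Hadoop 2.x builds the packaged tarball normally ends up under hadoop-dist/target; that location is stated here from general experience and was not confirmed for this particular CDH source tree.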

5. Problems encountered

[FATAL] Non-resolvable parent POM for org.apache.hadoop:hadoop-main:2.6.0-cdh5.15.1: Could not transfer artifact com.cloudera.cdh:cdh-root:pom:5.15.1 from/to cdh.repo (https://repository.cloudera.com/artifactory/cloudera-repos): Remote host closed connectio

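# Analysis: the file https://repository.cloudera.com/artifactory/cloudera-repos/com/cloudera/cdh/cdh-root/5.15.1/cdh-root-5.15.1.pom could not be downloaded, even though the virtual machine can ping the remote repository. The exact cause was unclear; a successful ping only shows basic reachability, so a failure during the HTTPS handshake is still possible.

# Solution: go to the matching directory in the local repository, fetch the file there with wget, then re-run the build command. Any other missing jar can be placed directly in the local repository in the same way.

cd /home/hadoop/maven_repo/repo/com/cloudera/cdh/cdh-root/5.15.1/

wget https://repository.cloudera.com/artifactory/cloudera-repos/com/cloudera/cdh/cdh-root/5.15.1/cdh-root-5.15.1.pom

If a file such as cdh-root-5.7.0.pom was not downloaded, it can also be downloaded manually and uploaded to the server.

To find the pom file (com.cloudera.cdh:cdh-root:pom:5.15.1), open https://repository.cloudera.com/artifactory/cloudera-repos/, locate the matching pom file, download it, and place it in the Maven repository folder.

Maven repository folder: look under /home/hadoop/maven_repo/repo to see which directory is missing its pom or jar files.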

Then re-run mvn clean package -Pdist,native -DskipTests -Dtar
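The directory for a missing file follows directly from the Maven coordinates in the error message: dots in the group ID become slashes, followed by the artifact ID and the version. The sketch below derives that path and prints the matching commands instead of running them. `pom_path` is a hypothetical helper; the repository root and base URL are the ones used in this section.

```shell
#!/bin/sh
# Hypothetical helper: turns "groupId:artifactId:pom:version" into the
# relative path Maven uses inside a repository.
pom_path() {
    group=$(echo "$1" | cut -d: -f1 | tr . /)
    artifact=$(echo "$1" | cut -d: -f2)
    version=$(echo "$1" | cut -d: -f4)
    echo "$group/$artifact/$version/$artifact-$version.pom"
}

repo=/home/hadoop/maven_repo/repo
base=https://repository.cloudera.com/artifactory/cloudera-repos
rel=$(pom_path "com.cloudera.cdh:cdh-root:pom:5.15.1")

# Print the commands instead of running them, so they can be reviewed first.
echo "mkdir -p $repo/$(dirname "$rel")"
echo "wget -P $repo/$(dirname "$rel") $base/$rel"
```

Printing rather than executing keeps the sketch free of side effects; paste the two printed commands into the shell once the paths look right.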

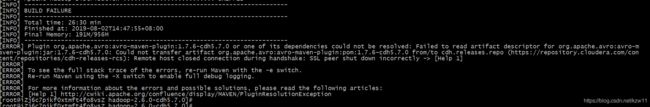

[ERROR] Plugin org.apache.avro:avro-maven-plugin:1.7.6-cdh5.15.1 or one of its dependencies could not be resolved: Failed to read artifact descriptor for org.apache.avro:avro-maven-plugin:jar:1.7.6-cdh5.15.1: Could not transfer artifact org.apache.avro:avro-parent:pom:1.7.6-cdh5.15.1 from/to cdh.releases.repo (https://repository.cloudera.com/content/repositories/cdh-releases-rcs): Remote host closed connection during handshake: SSL peer shut down incorrectly -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/PluginResolutionException

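The org.apache.avro:avro-parent:pom part of the error above shows that the pom file is missing under /home/hadoop/maven_repo/repo/org/apache/avro/avro-parent/. Run the following in the matching version subdirectory (1.7.6-cdh5.15.1) of that directory: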

wget https://repository.cloudera.com/cloudera/cdh-releases-rcs/org/apache/avro/avro-parent/1.7.6-cdh5.15.1/avro-parent-1.7.6-cdh5.15.1.pom

3. SSL peer shut down

Solution: in the pom.xml of the current directory, change https to http in the URL that the error reports.
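The https to http change can also be made with sed instead of editing by hand. This is a sketch on a scratch file, not on the real pom.xml: `use_http_for_host` is a hypothetical helper, and whether the server still answers over plain http is an assumption that has to be verified separately.

```shell
#!/bin/sh
# Hypothetical helper: rewrites https:// to http:// for one host in a POM file
# and keeps a backup copy with a .bak suffix.
use_http_for_host() {
    pom=$1
    host=$2
    sed -i.bak "s#https://$host#http://$host#g" "$pom"
}

# Demonstration on a scratch file; point it at the real pom.xml once reviewed.
demo=$(mktemp)
echo '<url>https://repository.cloudera.com/artifactory/cloudera-repos</url>' > "$demo"
use_http_for_host "$demo" "repository.cloudera.com"
cat "$demo"
rm -f "$demo" "$demo.bak"
```

Restricting the replacement to a single host leaves every other https URL in the file untouched, and the .bak copy makes the change easy to revert.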