Hadoop Cluster Setup and High Availability with ZooKeeper

I. Installing and configuring Hadoop

- Add the hadoop user and set its password

[root@server1 ~]# useradd -u 800 hadoop

[root@server1 ~]# ls

hadoop-2.7.3.tar.gz jdk-7u79-linux-x64.tar.gz

[root@server1 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: it is based on a dictionary word

BAD PASSWORD: is too simple

Retype new password:

passwd: all authentication tokens updated successfully.

- Extract the JDK archive and create a symlink

[root@server1 ~]# mv * /home/hadoop/

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ ls

hadoop-2.7.3.tar.gz jdk-7u79-linux-x64.tar.gz

[hadoop@server1 ~]$ tar zxf jdk-7u79-linux-x64.tar.gz

[hadoop@server1 ~]$ ls

hadoop-2.7.3.tar.gz jdk1.7.0_79 jdk-7u79-linux-x64.tar.gz

[hadoop@server1 ~]$ ln -s jdk1.7.0_79/ java

[hadoop@server1 ~]$ ls

hadoop-2.7.3.tar.gz java jdk1.7.0_79 jdk-7u79-linux-x64.tar.gz

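- Configure the Java environment variables. Because PATH points at the java symlink, a later JDK upgrade only requires re-pointing the link.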

[hadoop@server1 ~]$ vim ~/.bash_profile

PATH=$PATH:$HOME/bin:/home/hadoop/java/bin

[hadoop@server1 ~]$ source ~/.bash_profile
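The PATH line above can also be appended from a script instead of editing the file by hand. The following is a minimal sketch, assuming the same /home/hadoop/java symlink; the function name `add_java_to_profile` and the `PROFILE` variable are illustrative and not part of the original setup.

```shell
# Illustrative helper: append the JDK symlink to PATH exactly once.
# PROFILE defaults to the file edited above; override it to try the helper safely.
PROFILE="${PROFILE:-$HOME/.bash_profile}"

add_java_to_profile() {
    line='PATH=$PATH:$HOME/bin:/home/hadoop/java/bin'
    # -x matches the whole line, -F treats the pattern as a fixed string.
    grep -qxF "$line" "$PROFILE" 2>/dev/null || echo "$line" >> "$PROFILE"
}
```

Calling `add_java_to_profile` more than once leaves a single entry, so the step is safe to repeat on every node.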

- Set JAVA_HOME in Hadoop's environment script

[hadoop@server1 ~]$ tar zxf hadoop-2.7.3.tar.gz

[hadoop@server1 ~]$ cd hadoop-2.7.3/etc/hadoop/

[hadoop@server1 hadoop]$ vim hadoop-env.sh

export JAVA_HOME=/home/hadoop/java

- Test Hadoop

[hadoop@server1 hadoop-2.7.3]$ pwd

/home/hadoop/hadoop-2.7.3

[hadoop@server1 hadoop-2.7.3]$ bin/hadoop

[hadoop@server1 hadoop-2.7.3]$ mkdir input

[hadoop@server1 hadoop-2.7.3]$ cp etc/hadoop/*.xml input/

[hadoop@server1 hadoop-2.7.3]$ pwd

/home/hadoop/hadoop-2.7.3

[hadoop@server1 hadoop-2.7.3]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar grep input output 'dfs[a-z.]+'

For this test to work, server1 needs a local name-resolution entry in /etc/hosts:

[hadoop@server1 hadoop-2.7.3]$ su

Password:

[root@server1 hadoop-2.7.3]# vim /etc/hosts # run as root

172.25.66.1 server1

II. Data operations

1. Configure Hadoop

[hadoop@server1 hadoop-2.7.3]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://172.25.66.1:9000</value>
    </property>
</configuration>

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
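To confirm that Hadoop actually picks up the two files edited above, the effective values can be read back with `hdfs getconf -confKey`. This is a minimal sketch; the helper name `check_conf` and the `HDFS_BIN` variable are illustrative assumptions, not part of the original walkthrough.

```shell
# HDFS_BIN is an assumption: the hdfs launcher, relative to the hadoop-2.7.3 directory.
HDFS_BIN="${HDFS_BIN:-bin/hdfs}"

# Succeeds only if the effective value of a configuration key equals the expected value.
check_conf() {
    actual=$("$HDFS_BIN" getconf -confKey "$1") || return 1
    [ "$actual" = "$2" ]
}

# Example (run from the hadoop-2.7.3 directory):
#   check_conf fs.defaultFS hdfs://172.25.66.1:9000 && echo "core-site.xml is picked up"
#   check_conf dfs.replication 1 && echo "hdfs-site.xml is picked up"
```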

2. Set up passwordless SSH

[hadoop@server1 hadoop]$ ssh-keygen

[hadoop@server1 hadoop]$ ssh-copy-id 172.25.66.1

[hadoop@server1 hadoop]$ ssh 172.25.66.1

[hadoop@server1 ~]$ logout

Connection to 172.25.66.1 closed.

[hadoop@server1 hadoop]$ ssh localhost

[hadoop@server1 ~]$ logout

Connection to localhost closed.

[hadoop@server1 hadoop]$ ssh 0.0.0.0

[hadoop@server1 ~]$ logout

Connection to 0.0.0.0 closed.

[hadoop@server1 hadoop]$ ssh server1

Last login: Tue Nov 20 19:07:02 2018 from server1

[hadoop@server1 ~]$ logout

Connection to server1 closed.
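The manual logins above can be repeated non-interactively once each host key has been accepted. The sketch below assumes the key was already copied with ssh-copy-id; the function name `check_passwordless_ssh` is illustrative.

```shell
# Returns 0 only if every host accepts key-based login without prompting.
# Hosts must already be in known_hosts, because BatchMode also refuses to
# answer the host-key confirmation question.
check_passwordless_ssh() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of asking for a password.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "ok: $host"
        else
            echo "FAILED: $host"
            failed=1
        fi
    done
    return "$failed"
}

# Example: check_passwordless_ssh 172.25.66.1 localhost 0.0.0.0 server1
```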

3. Start DFS

- Format the NameNode

[hadoop@server1 ~]$ cd hadoop-2.7.3/etc/hadoop/

[hadoop@server1 hadoop]$ cat slaves

172.25.66.1

[hadoop@server1 hadoop]$ cd ..

[hadoop@server1 etc]$ cd ..

[hadoop@server1 hadoop-2.7.3]$ bin/hdfs namenode -format

- Start DFS

[hadoop@server1 hadoop-2.7.3]$ sbin/start-dfs.sh

- Work with the file system

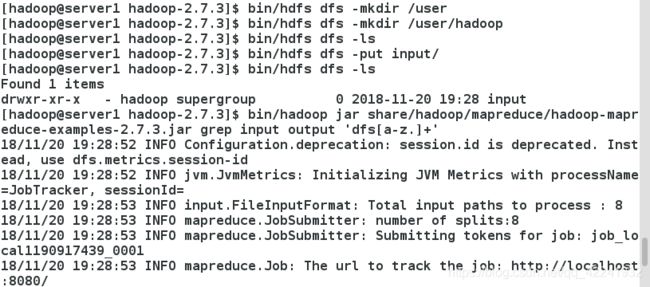

[hadoop@server1 hadoop-2.7.3]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop-2.7.3]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop-2.7.3]$ bin/hdfs dfs -ls

[hadoop@server1 hadoop-2.7.3]$ bin/hdfs dfs -put input/

[hadoop@server1 hadoop-2.7.3]$ bin/hdfs dfs -ls

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2018-11-20 19:28 input

[hadoop@server1 hadoop-2.7.3]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar grep input output 'dfs[a-z.]+'

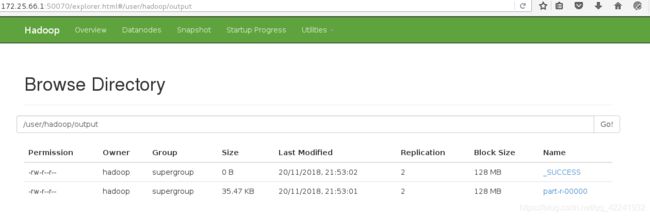

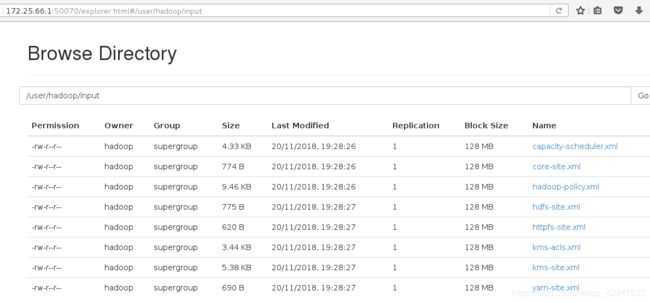

Open 172.25.66.1:50070 in a browser; the page shows one live node, server1.

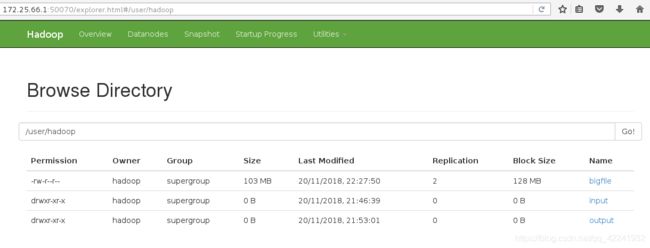

Files uploaded to Hadoop are also visible in the browser: click Utilities > Browse the file system.

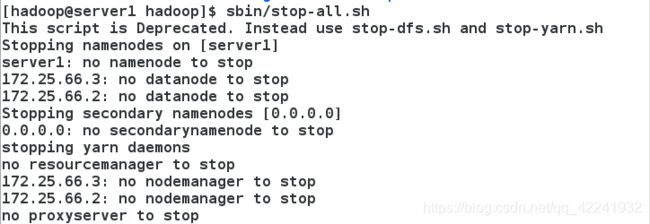

III. Distributed file storage

1. NameNode

[hadoop@server1 ~]$ cd hadoop-2.7.3

[hadoop@server1 hadoop-2.7.3]$ sbin/stop-dfs.sh

[hadoop@server1 hadoop-2.7.3]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
</configuration>

[hadoop@server1 hadoop]$ vim slaves

172.25.66.2

172.25.66.3

[hadoop@server1 hadoop]$ jps

3727 Jps

[hadoop@server1 hadoop]$ cd /tmp/

[hadoop@server1 tmp]$ ls

hadoop-hadoop Jetty_0_0_0_0_50070_hdfs____w2cu08 yum.log

hsperfdata_hadoop Jetty_0_0_0_0_50090_secondary____y6aanv

hsperfdata_root Jetty_localhost_55086_datanode____.vo0c5n

[hadoop@server1 tmp]$ rm -fr *
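Running `rm -fr *` in /tmp also deletes unrelated files such as yum.log. A narrower cleanup is sketched below; the patterns are taken from the directory listing above, and the function name `clean_hadoop_tmp` is illustrative.

```shell
# Remove only the Hadoop-related entries seen in the listing above.
# Adjust the patterns if your /tmp looks different.
clean_hadoop_tmp() {
    dir="${1:-/tmp}"
    rm -rf "$dir"/hadoop-* "$dir"/hsperfdata_hadoop "$dir"/Jetty_*
}

# Example: clean_hadoop_tmp /tmp
```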

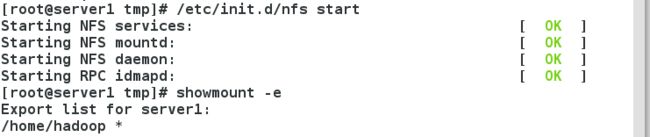

[root@server1 ~]# yum install -y nfs-utils

[root@server1 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server1 ~]# vim /etc/exports

/home/hadoop *(rw,anonuid=800,anongid=800)

[root@server1 ~]# /etc/init.d/nfs start

Starting NFS services: [ OK ]

Starting NFS mountd: [ OK ]

Starting NFS daemon: [ OK ]

Starting RPC idmapd: [ OK ]

[root@server1 ~]# exportfs -v

/home/hadoop (rw,wdelay,root_squash,no_subtree_check,anonuid=800,anongid=800)

[root@server1 ~]# exportfs -rv

exporting *:/home/hadoop

2. DataNodes (172.25.66.2 and 172.25.66.3 are configured identically)

[root@server2 ~]# yum install -y nfs-utils

[root@server2 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server2 ~]# useradd -u 800 hadoop

[root@server2 hadoop]# mount 172.25.66.1:/home/hadoop/ /home/hadoop/

[root@server2 hadoop]# showmount -e 172.25.66.1

Export list for 172.25.66.1:

/home/hadoop *

[root@server2 hadoop]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1660020 16502332 10% /

tmpfs 510188 0 510188 0% /dev/shm

/dev/vda1 495844 33490 436754 8% /boot

172.25.66.1:/home/hadoop/ 19134336 1949248 16213120 11% /home/hadoop

3. Test the SSH configuration

[hadoop@server1 tmp]$ ssh server2

[hadoop@server2 ~]$ logout

[hadoop@server1 tmp]$ ssh server3

[hadoop@server3 ~]$ logout

[hadoop@server1 tmp]$ ssh 172.25.66.2

[hadoop@server2 ~]$ logout

[hadoop@server1 tmp]$ ssh 172.25.66.3

[hadoop@server3 ~]$ logout

4. Reformat the NameNode

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ ls /tmp/

hadoop-hadoop hsperfdata_hadoop

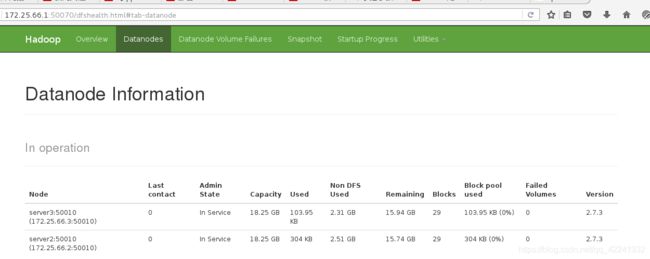

5. Start DFS: the NameNode and the DataNodes now run on separate hosts

[hadoop@server1 hadoop-2.7.3]$ sbin/start-dfs.sh

[hadoop@server2 ~]$ jps

1425 DataNode

1498 Jps
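The jps check above can be scripted so that each host reports whether the expected daemon is running. A minimal sketch, assuming jps is on PATH as configured earlier; the helper name `has_java_proc` is illustrative.

```shell
# Succeeds if jps lists a JVM whose main class name equals the argument.
has_java_proc() {
    jps | awk -v want="$1" '$2 == want { found = 1 } END { exit !found }'
}

# Example on a DataNode host: has_java_proc DataNode && echo "DataNode is up"
```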

6. Work with files (changes appear on the DataNodes in real time)

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -put etc/hadoop/ input

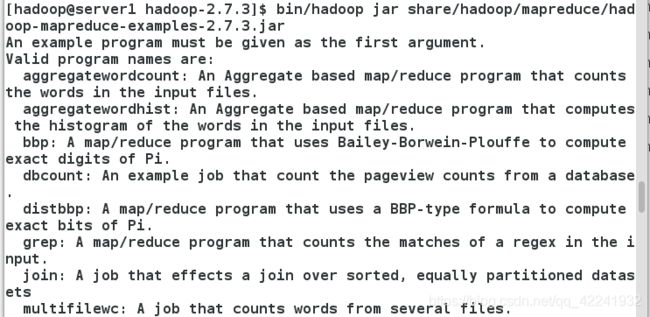

7. Test access in a browser (172.25.66.1:50070)

[hadoop@server1 hadoop-2.7.3]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar

[hadoop@server1 hadoop-2.7.3]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount input output

[hadoop@server1 hadoop-2.7.3]$ rm -fr output/

[hadoop@server1 hadoop-2.7.3]$ bin/hdfs dfs -get output

IV. Adding and removing nodes

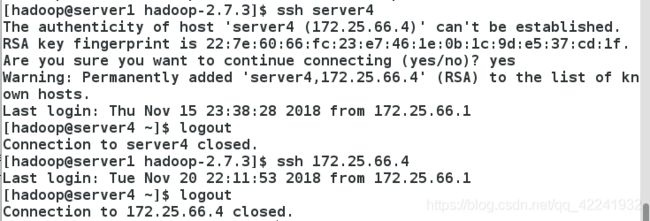

1. Add server4 (172.25.66.4) online

[root@server4 ~]# yum install -y nfs-utils

[root@server4 ~]# useradd -u 800 hadoop

[root@server4 ~]# mount 172.25.66.1:/home/hadoop/ /home/hadoop/

[root@server4 ~]# su - hadoop

[hadoop@server4 ~]$ vim hadoop/etc/hadoop/slaves

172.25.66.2

172.25.66.3

172.25.66.4

Test:

[hadoop@server1 ~]$ ssh server4

[hadoop@server4 ~]$ logout

[hadoop@server1 ~]$ ssh 172.25.66.4

[hadoop@server4 ~]$ logout

[hadoop@server4 ~]$ cd hadoop

[hadoop@server4 hadoop]$ sbin/hadoop-daemon.sh start datanode

starting datanode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-datanode-server4.out

[hadoop@server4 hadoop]$ jps

1250 Jps

1177 DataNode

[hadoop@server4 hadoop-2.7.3]$ dd if=/dev/zero of=bigfile bs=1M count=500

103+0 records in

103+0 records out

108003328 bytes (108 MB) copied, 61.2318 s, 1.8 MB/s

[hadoop@server4 hadoop-2.7.3]$ bin/hdfs dfs -put bigfile

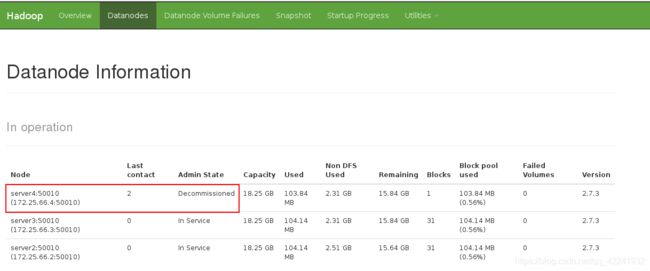
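How many blocks a file like bigfile occupies follows from the block size. The arithmetic is sketched below, assuming the cluster still uses the stock Hadoop 2.x block size of 128 MiB (dfs.blocksize = 134217728), which this walkthrough never changes; the function name `hdfs_block_count` is illustrative.

```shell
# Number of HDFS blocks needed for a file: ceil(size / block_size).
# 134217728 bytes (128 MiB) is the assumed, unchanged default block size.
hdfs_block_count() {
    size=$1
    block=${2:-134217728}
    echo $(( (size + block - 1) / block ))
}

hdfs_block_count 108003328    # the 108 MB actually written by dd above
hdfs_block_count 524288000    # a complete 500 MiB file (bs=1M count=500)
```

With dfs.replication set to 2, each of these blocks is stored on two DataNodes.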

2. Remove a node online (the steps below exclude server4, 172.25.66.4)

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop/etc/hadoop

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<property>
    <name>dfs.hosts.exclude</name>
    <value>/home/hadoop/hadoop/etc/hadoop/hosts-exclude</value>
</property>

[hadoop@server1 hadoop]$ vim hosts-exclude

172.25.66.4 ## IP of the node being removed

[hadoop@server1 hadoop]$ vim slaves

172.25.66.2

172.25.66.3

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -refreshNodes

Refresh nodes successful

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -report

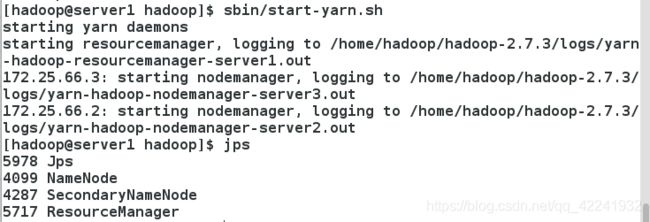
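Decommissioning is complete only when the report shows the node as Decommissioned. The sketch below extracts that field for one node; it assumes each node section of the report starts with a "Name: ip:port" line and contains a "Decommission Status : state" line, and the function name `decommission_status` is illustrative.

```shell
# Print the decommission status of one DataNode from `hdfs dfsadmin -report` text on stdin.
decommission_status() {
    awk -v ip="$1" '
        /^Name: /                         { in_node = (index($2, ip ":") == 1) }
        in_node && /^Decommission Status/ { sub(/^[^:]*: */, ""); print; exit }
    '
}

# Example: bin/hdfs dfsadmin -report | decommission_status 172.25.66.4
```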

3. YARN mode

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop/etc/hadoop

[hadoop@server1 hadoop]$ cp mapred-site.xml.template mapred-site.xml

[hadoop@server1 hadoop]$ vim mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

[hadoop@server1 hadoop]$ sbin/start-yarn.sh

[hadoop@server2 ~]$ jps

1761 Jps

1425 DataNode

1654 NodeManager

V. Building the ZooKeeper cluster

Clear /tmp on all nodes.

1. server5 host

[root@server5 ~]# yum install -y nfs-utils

[root@server5 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server5 ~]# useradd -u 800 hadoop

[root@server5 ~]# mount 172.25.66.1:/home/hadoop/ /home/hadoop/

[root@server5 ~]# su - hadoop

[hadoop@server5 ~]$ ls

hadoop java zookeeper-3.4.9.tar.gz

hadoop-2.7.3 jdk1.7.0_79

hadoop-2.7.3.tar.gz jdk-7u79-linux-x64.tar.gz

2. server2 host

[hadoop@server2 ~]$ tar zxf zookeeper-3.4.9.tar.gz

[hadoop@server2 ~]$ cd zookeeper-3.4.9

[hadoop@server2 zookeeper-3.4.9]$ cd conf/

[hadoop@server2 conf]$ cp zoo_sample.cfg zoo.cfg

[hadoop@server2 conf]$ vim zoo.cfg

server.1=172.25.66.2:2888:3888

server.2=172.25.66.3:2888:3888

server.3=172.25.66.4:2888:3888

3. Configure server2, server3, and server4

[root@server2 zookeeper-3.4.9]# cd /tmp/zookeeper/

[root@server2 zookeeper]# echo 1 >myid

[root@server2 zookeeper-3.4.9]# bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /root/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@server3 zookeeper-3.4.9]# cd /tmp/zookeeper/

[root@server3 zookeeper]# echo 2 >myid

[root@server3 zookeeper-3.4.9]# bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /root/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@server4 zookeeper-3.4.9]# cd /tmp/zookeeper/

[root@server4 zookeeper]# echo 3 >myid

[root@server4 zookeeper-3.4.9]# bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /root/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED
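Each myid value above must equal the N of that host's server.N line in zoo.cfg. The lookup can be derived from the file instead of typed by hand, as in this sketch; the function name `myid_for` is illustrative.

```shell
# Print the N of the "server.N=<ip>:..." line in zoo.cfg that matches the given IP.
myid_for() {
    awk -F'[=:]' -v ip="$2" '
        $1 ~ /^server\.[0-9]+$/ && $2 == ip { sub(/^server\./, "", $1); print $1 }
    ' "$1"
}

# Example on server3: myid_for conf/zoo.cfg 172.25.66.3 > /tmp/zookeeper/myid
```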

4. Check the status of every node

[root@server2 zookeeper-3.4.9]# bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /root/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower

[root@server3 zookeeper-3.4.9]# bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /root/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: leader

[root@server4 zookeeper-3.4.9]# bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /root/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower
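One leader and two followers is the expected result for three servers. ZooKeeper stays available while a majority of the ensemble is up, and the arithmetic is sketched below; the function names `zk_quorum` and `zk_tolerated` are illustrative.

```shell
# Majority quorum for an ensemble of n voting servers, and how many failures it survives.
zk_quorum()    { echo $(( $1 / 2 + 1 )); }
zk_tolerated() { echo $(( $1 - ($1 / 2 + 1) )); }

zk_quorum 3      # servers that must agree
zk_tolerated 3   # servers that may fail
```

For this three-node ensemble the quorum is 2, so the cluster survives the loss of one server. A fourth server would raise the quorum to 3 without tolerating more failures, which is why odd ensemble sizes are the usual choice.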

5. Test on the leader (server3)

[root@server3 zookeeper-3.4.9]# bin/zkCli.sh

Connecting to localhost:2181

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls

[zk: localhost:2181(CONNECTED) 1] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 2] quit

VI. Hadoop high availability with ZooKeeper

1. Configure Hadoop

- Configure slaves

[hadoop@server1 ~]$ cd hadoop/etc/

[hadoop@server1 etc]$ vim hadoop/slaves

172.25.66.2

172.25.66.3

172.25.66.4

- Configure core-site.xml

[hadoop@server1 etc]$ vim hadoop/core-site.xml

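<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://masters</value>
    </property>
    <property>
        <name>ha.zookeeper.quorum</name>
        <value>172.25.66.2:2181,172.25.66.3:2181,172.25.66.4:2181</value>
    </property>
</configuration>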

- Configure hdfs-site.xml

[hadoop@server1 hadoop]$ vim hdfs-site.xml

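<property>
    <name>fs.defaultFS</name>
    <value>hdfs://masters</value>
</property>
<property>
    <name>ha.zookeeper.quorum</name>
    <value>172.25.66.2:2181,172.25.66.3:2181,172.25.66.4:2181</value>
</property>
<property>
    <name>dfs.namenode.rpc-address.masters.h1</name>
    <value>172.25.66.1:9000</value>
</property>
<property>
    <name>dfs.namenode.http-address.masters.h1</name>
    <value>172.25.66.1:50070</value>
</property>
<property>
    <name>dfs.namenode.rpc-address.masters.h2</name>
    <value>172.25.66.5:9000</value>
</property>
<property>
    <name>dfs.namenode.http-address.masters.h2</name>
    <value>172.25.66.5:50070</value>
</property>
<property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://172.25.66.2:8485;172.25.66.3:8485;172.25.66.4:8485/masters</value>
</property>
<property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/tmp/journaldata</value>
</property>
<property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
</property>
<property>
    <name>dfs.client.failover.proxy.provider.masters</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
<property>
    <name>dfs.ha.fencing.methods</name>
    <value>
    sshfence
    shell(/bin/true)
    </value>
</property>
<property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/hadoop/.ssh/id_rsa</value>
</property>
<property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
</property>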
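An HDFS HA configuration normally also declares the nameservice ID and the NameNode IDs that belong to it. The listing above does not show those two properties, so the fragment below is an assumption inferred from the masters.h1 and masters.h2 suffixes used in the address keys. The sketch writes them to a scratch file for review; `OUT` is an illustrative path.

```shell
# Write the two assumed properties to a scratch file; merge them into
# hdfs-site.xml by hand after checking them against your own setup.
OUT="${OUT:-${TMPDIR:-/tmp}/ha-nameservice-fragment.xml}"

cat > "$OUT" <<'EOF'
<property>
    <name>dfs.nameservices</name>
    <value>masters</value>
</property>
<property>
    <name>dfs.ha.namenodes.masters</name>
    <value>h1,h2</value>
</property>
EOF
```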

- Format the HDFS cluster

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ scp -r /tmp/hadoop-hadoop 172.25.66.5:/tmp/

## Check on server5

[root@server5 ~]# ls /tmp/

hadoop-hadoop

2. Start the JournalNode on the three DN hosts

[hadoop@server3 zookeeper-3.4.9]$ cd ~/hadoop

[hadoop@server3 hadoop]$ sbin/hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-journalnode-server3.out

Check the running processes on the three DN hosts

[hadoop@server3 hadoop]$ jps

1881 DataNode

1698 QuorumPeerMain

1983 Jps

1790 JournalNode

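3. Format ZooKeeper on the NN host

- After formatting, start HDFS (with automatic failover enabled, start-dfs.sh also starts the ZKFC daemons)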

[hadoop@server1 hadoop]$ bin/hdfs zkfc -formatZK

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

- Check the running processes on both NameNode hosts

[hadoop@server1 hadoop]$ jps

6694 Jps

6646 DFSZKFailoverController

6352 NameNode

[hadoop@server5 ~]$ jps

1396 DFSZKFailoverController

1298 NameNode

1484 Jps

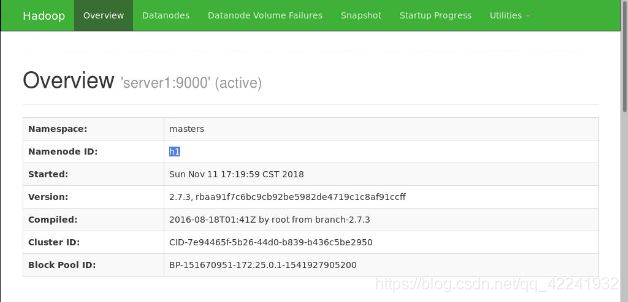

4. Test high availability

Visit http://172.25.66.1:50070

The screenshot above shows server1 as active and server5 as standby.

[hadoop@server1 ~]$ jps

1396 DFSZKFailoverController

1298 NameNode

1484 Jps

[hadoop@server1 ~]$ kill -9 1298

[hadoop@server1 ~]$ jps

1396 DFSZKFailoverController

1515 Jps

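After killing the NameNode process, refresh the browser: server5 has switched to active.

When the NameNode on server1 is started again, it comes up in the standby state.

[hadoop@server1 hadoop]$ sbin/hadoop-daemon.sh start namenode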
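Besides the web page, the state of each NameNode can be queried with `hdfs haadmin -getServiceState`. A minimal sketch follows; the IDs h1 and h2 come from the hdfs-site.xml keys above, while the helper name `active_namenode` and the `HDFS_BIN` variable are illustrative assumptions.

```shell
# HDFS_BIN is an assumption: the hdfs launcher, relative to the Hadoop directory.
HDFS_BIN="${HDFS_BIN:-bin/hdfs}"

# Print the ID of the first NameNode that reports itself as active.
active_namenode() {
    for id in "$@"; do
        # A stopped NameNode makes the query fail, which leaves state empty.
        state=$("$HDFS_BIN" haadmin -getServiceState "$id" 2>/dev/null)
        if [ "$state" = "active" ]; then
            echo "$id"
            return 0
        fi
    done
    return 1
}

# Example: active_namenode h1 h2
```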

5. Inspect the election state from a DN host

[hadoop@server2 hadoop]$ cd ~/zookeeper-3.4.9

[hadoop@server2 zookeeper-3.4.9]$ bin/zkCli.sh

Connecting to localhost:2181

[zk: localhost:2181(CONNECTED) 4] ls /hadoop-ha/masters

[ActiveBreadCrumb, ActiveStandbyElectorLock]

[zk: localhost:2181(CONNECTED) 5] get /hadoop-ha/masters/Active

ActiveBreadCrumb ActiveStandbyElectorLock

[zk: localhost:2181(CONNECTED) 5] get /hadoop-ha/masters/ActiveBreadCrumb