Prometheus, the Monitoring Powerhouse: Service Monitoring (Part 3)


This post continues from Part 2 (Prometheus component installation) and covers how to monitor commonly used services with Prometheus.

Monitoring a MySQL database

1. Download the exporter

wget https://github.com/prometheus/mysqld_exporter/releases/download/v0.12.1/mysqld_exporter-0.12.1.linux-amd64.tar.gz

2. Extract

cd /home/monitor

tar -xf mysqld_exporter-0.12.1.linux-amd64.tar.gz

mv mysqld_exporter-0.12.1.linux-amd64 mysqld_exporter

chown -R root:root /home/monitor/mysqld_exporter

3. Configure the MySQL monitoring account

cd /home/monitor/mysqld_exporter

vi .my.cnf

[client]

user=root

password=123456

host=localhost

port=3306

#socket="/tmp/mysql_3306.sock" # only needed if the database is reached through a socket file

4. Start mysqld_exporter (listens on port 9104 by default)

cd /home/monitor/mysqld_exporter

nohup ./mysqld_exporter --config.my-cnf="/home/monitor/mysqld_exporter/.my.cnf" &

5. Edit the Prometheus configuration file, reload the service, and verify the data

1) Edit the Prometheus configuration file

  - job_name: mysql_monitor
    static_configs:
      - targets: ['<mysqld_exporter IP address>:9104']

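The targets list may contain several exporter endpoints; giving each one its own entry is easier to read.

2) Reload the service and verify the data (the reload endpoint only responds when Prometheus was started with --web.enable-lifecycle)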

curl -XPOST http://192.168.16.115:9090/-/reload

Verify the exporter endpoint

curl http://192.168.16.115:9104/metrics
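The curl output above is the Prometheus text exposition format: one sample per line, with comment lines starting with `#`. As a rough sketch of how that output could be checked in a script, the snippet below parses a small hand-written sample. The sample text is illustrative, not captured from a real exporter, and the parser assumes samples carry no trailing timestamp.

```python
def parse_metrics(text):
    """Parse exposition-format lines into {series: value}; skips comments."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Assumption: the value is the last space-separated field (no timestamp).
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


# Illustrative sample only; real output from mysqld_exporter is much longer.
SAMPLE = """\
# HELP mysql_up Whether the MySQL server is up.
# TYPE mysql_up gauge
mysql_up 1
mysql_global_status_threads_connected 3
"""

metrics = parse_metrics(SAMPLE)
print("MySQL reachable:", metrics.get("mysql_up") == 1.0)
```

In practice the text would come from the `/metrics` URL rather than a constant; `mysql_up` equal to 1 means the exporter could log in to MySQL with the account from `.my.cnf`.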

Monitoring a ZooKeeper cluster

1. Download the exporter

Git project: https://github.com/danielqsj/kafka_exporter

cd /usr/local/src

wget https://github.com/danielqsj/kafka_exporter/releases/download/v1.2.0/kafka_exporter-1.2.0.linux-amd64.tar.gz

2. Extract

tar -xf kafka_exporter-1.2.0.linux-amd64.tar.gz

mv kafka_exporter-1.2.0.linux-amd64 kafka_exporter

chown -R root:root kafka_exporter

3. Start and verify

cd /usr/local/src/kafka_exporter

nohup ./kafka_exporter --web.listen-address="192.168.16.16:4222" --kafka.server=192.168.16.16:9092 >> ./nohup.out 2>&1 &

curl http://192.168.16.16:4222/metrics # check that metrics are returned

4. Edit the Prometheus configuration and add the ZooKeeper service via file_sd_config

1) Create the file_sd_config file for ZooKeeper

cd /home/monitor/prometheus/conf.d/

cat zk_node.json

[

{

"labels": {

"desc": "zk_16_16",

"group": "zookeeper",

"host_ip": "192.168.16.16",

"hostname": "wg-16-16"

},

"targets": [

"192.168.16.16:4221"

]

},

{

"labels": {

"desc": "zk_16_17",

"group": "zookeeper",

"host_ip": "192.168.16.17",

"hostname": "wg-16-17"

},

"targets": [

"192.168.16.17:4221"

]

},

{

"labels": {

"desc": "zk_16_18",

"group": "zookeeper",

"host_ip": "192.168.16.18",

"hostname": "wg-16-18"

},

"targets": [

"192.168.16.18:4221"

]

}

]
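Target files like this one follow a regular pattern, so they can be generated rather than typed by hand. The sketch below is one possible way to do it; the helper name `build_file_sd` and its parameters are hypothetical, and it assumes the `desc` and `hostname` naming convention visible in the listing above (last two octets of the IP).

```python
import json


def build_file_sd(hosts, group, port, prefix):
    """Return a file_sd target list with one entry per host IP."""
    entries = []
    for ip in hosts:
        third, fourth = ip.split(".")[2:]  # e.g. "16" and "16"
        entries.append({
            "labels": {
                "desc": f"{prefix}_{third}_{fourth}",
                "group": group,
                "host_ip": ip,
                "hostname": f"wg-{third}-{fourth}",
            },
            "targets": [f"{ip}:{port}"],
        })
    return entries


zk_hosts = ["192.168.16.16", "192.168.16.17", "192.168.16.18"]
zk_targets = build_file_sd(zk_hosts, group="zookeeper", port=4221, prefix="zk")
print(json.dumps(zk_targets, indent=2))
```

Redirecting the printed JSON into `zk_node.json` would give a file with the same labels and targets as the one shown above.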

2) Add the following to the Prometheus configuration file

cat prometheus.yml

...

...

  - job_name: 'zk_node'
    scrape_interval: 2m
    scrape_timeout: 120s
    file_sd_configs:
      - files:
          - /home/monitor/prometheus/conf.d/zk_node.json
    honor_labels: true

...

...

5. Reload the Prometheus service and verify in the web UI

Reload the Prometheus service

curl -XPOST http://192.168.16.115:9090/-/reload
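Besides looking at the Targets page in the web UI, the same check can be scripted against the Prometheus HTTP API, whose `/api/v1/targets` endpoint returns the active targets with a `health` field. The sketch below works on a hand-written sample payload (not real output) so that it runs without a server; the helper name `down_targets` is hypothetical.

```python
def down_targets(payload):
    """Return (job, scrape URL, last error) for each active target that is not up."""
    problems = []
    for target in payload["data"]["activeTargets"]:
        if target["health"] != "up":
            problems.append((
                target["labels"].get("job"),
                target["scrapeUrl"],
                target.get("lastError", ""),
            ))
    return problems


# Illustrative payload in the shape returned by /api/v1/targets.
sample = {
    "status": "success",
    "data": {
        "activeTargets": [
            {
                "labels": {"job": "zk_node", "instance": "192.168.16.16:4221"},
                "scrapeUrl": "http://192.168.16.16:4221/metrics",
                "health": "up",
                "lastError": "",
            },
            {
                "labels": {"job": "zk_node", "instance": "192.168.16.17:4221"},
                "scrapeUrl": "http://192.168.16.17:4221/metrics",
                "health": "down",
                "lastError": "context deadline exceeded",
            },
        ]
    },
}

for job, url, error in down_targets(sample):
    print(f"{job}: {url} is down ({error})")
```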

Monitoring a Kafka cluster

1. Download the exporter

Git project: https://github.com/danielqsj/kafka_exporter

cd /usr/local/src

wget https://github.com/danielqsj/kafka_exporter/releases/download/v1.2.0/kafka_exporter-1.2.0.linux-amd64.tar.gz

2. Extract

tar -xf kafka_exporter-1.2.0.linux-amd64.tar.gz

mv kafka_exporter-1.2.0.linux-amd64 kafka_exporter

chown -R root:root kafka_exporter

3. Start and verify

cd /usr/local/src/kafka_exporter

nohup ./kafka_exporter --web.listen-address="192.168.16.16:4222" --kafka.server=192.168.16.16:9092 >> ./nohup.out 2>&1 &

curl http://192.168.16.16:4222/metrics # check that metrics are returned

4. Add the Kafka service on the Prometheus server via file_sd_config

1) Create the file_sd_config file for Kafka

cd /home/monitor/prometheus/conf.d/

cat kafka_node.json

[

{

"labels": {

"desc": "kafka_16_16",

"group": "kafka",

"host_ip": "192.168.16.16",

"hostname": "wg-16-16"

},

"targets": [

"192.168.16.16:4222"

]

},

{

"labels": {

"desc": "kafka_16_17",

"group": "kafka",

"host_ip": "192.168.16.17",

"hostname": "wg-16-17"

},

"targets": [

"192.168.16.17:4222"

]

},

{

"labels": {

"desc": "kafka_16_18",

"group": "kafka",

"host_ip": "192.168.16.18",

"hostname": "wg-16-18"

},

"targets": [

"192.168.16.18:4222"

]

}

]
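A typo in a target file only shows up later as a missing target, so it can help to lint the file before reloading. The following is a minimal sketch with checks chosen for illustration: the top level is a list, each group has a non-empty `targets` list of `host:port` strings without a scheme, and every label value is a string. The helper name is hypothetical and the pattern deliberately ignores IPv6 literals.

```python
import json
import re

# host:port only; a scheme such as http:// or a path is rejected.
TARGET_RE = re.compile(r"^[^\s:/]+:\d{1,5}$")


def validate_file_sd(text):
    """Return a list of problems found in a file_sd JSON document (empty if none)."""
    groups = json.loads(text)
    if not isinstance(groups, list):
        return ["top level must be a JSON list"]
    problems = []
    for i, group in enumerate(groups):
        if not isinstance(group, dict):
            problems.append(f"group {i}: must be a JSON object")
            continue
        targets = group.get("targets")
        if not isinstance(targets, list) or not targets:
            problems.append(f"group {i}: 'targets' must be a non-empty list")
            continue
        for target in targets:
            if not isinstance(target, str) or not TARGET_RE.match(target):
                problems.append(f"group {i}: bad target {target!r}")
        for key, value in group.get("labels", {}).items():
            if not isinstance(value, str):
                problems.append(f"group {i}: label {key!r} must be a string")
    return problems


good = '[{"labels": {"group": "kafka"}, "targets": ["192.168.16.16:4222"]}]'
bad = '[{"labels": {"port": 4222}, "targets": ["http://192.168.16.16:4222"]}]'
print(validate_file_sd(good))
print(validate_file_sd(bad))
```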

2) Add the following to the Prometheus configuration file


cat prometheus.yml

...

...

  - job_name: 'kafka_node'
    scrape_interval: 2m
    scrape_timeout: 120s
    file_sd_configs:
      - files:
          - /home/monitor/prometheus/conf.d/kafka_node.json
    honor_labels: true

...

...

5. Reload the Prometheus service and verify in the web UI

Reload the Prometheus service

curl -XPOST http://192.168.16.115:9090/-/reload

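Monitoring nginx

1. Overview

Monitoring nginx with Prometheus automatically covers each server_name and upstream. You can also define custom Prometheus labels to monitor nginx instances across different data centers and projects.

Monitoring Nginx mainly relies on the following three components: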

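nginx-module-vts: Nginx virtual host traffic status module. This is the Nginx monitoring module, and it can output its data as JSON.

nginx-vts-exporter: a simple server that scrapes Nginx vts stats and exports them via HTTP for Prometheus consumption. It collects the Nginx monitoring data and exposes a metrics endpoint for Prometheus; the default port is 9913.

Prometheus: scrapes the Nginx data exposed by nginx-vts-exporter and stores it in its time-series database, where it can be queried and aggregated with PromQL.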

2. Install the nginx-module-vts module

Git project: https://github.com/vozlt/nginx-module-vts

1) First check which modules nginx was built with

/usr/local/nginx/sbin/nginx -V

2) Download the nginx-module-vts module and add it to nginx

cd /root

git clone https://github.com/vozlt/nginx-module-vts.git

cd /root/nginx-1.14.1

./configure --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module --with-http_flv_module --with-http_gzip_static_module --with-stream --add-module=/root/nginx-module-vts

make # compile only; do not run make install, or it will overwrite the existing installation

3) Replace the nginx binary

mv /usr/local/nginx/sbin/nginx /usr/local/nginx/sbin/nginx.bak

cp objs/nginx /usr/local/nginx/sbin/

4) Edit nginx.conf and check that the module was installed successfully

http {
    ...
    vhost_traffic_status_zone;
    vhost_traffic_status_filter_by_host on;
    ...
    server {
        ...
        location /status {
            vhost_traffic_status_display;
            vhost_traffic_status_display_format html;
        }
    }
}

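Configuration notes:

1> Enable per-vhost filtering

vhost_traffic_status_filter_by_host on;

With this enabled, when Nginx is configured with multiple server_name values, traffic is counted separately for each server_name. Otherwise all traffic is attributed to the first server_name by default.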

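2> Disable vhost_traffic_status in any server block whose traffic you do not want counted, for example:

server {
    ...
    vhost_traffic_status off;
    ...
}

If a server block has no properly configured server_name, or simply does not need monitoring, it is best to disable traffic statistics for that vhost. Otherwise entries such as "127.0.0.1" or the hostname will show up as monitored domains.

5) Verify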

http://192.168.16.115:8080/status
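The same status page is also available as JSON under `/status/format/json`, which is the URL the exporter scrapes in the next step. As a rough sketch of what can be done with that JSON, the snippet below computes the share of 5xx responses per server zone. The sample dictionary is hand-written, and the field names (`serverZones`, `requestCounter`, `responses`) reflect my understanding of the module's output, so check them against your own `/status/format/json` response.

```python
def error_ratio_by_zone(status):
    """Map each server zone to the share of its requests answered with 5xx."""
    ratios = {}
    for zone, stats in status.get("serverZones", {}).items():
        total = stats.get("requestCounter", 0)
        errors = stats.get("responses", {}).get("5xx", 0)
        ratios[zone] = errors / total if total else 0.0
    return ratios


# Illustrative data only; the zone names are placeholders.
sample_status = {
    "serverZones": {
        "www.example.com": {
            "requestCounter": 200,
            "responses": {"2xx": 190, "5xx": 10},
        },
        "api.example.com": {
            "requestCounter": 0,
            "responses": {},
        },
    }
}

for zone, ratio in error_ratio_by_zone(sample_status).items():
    print(f"{zone}: {ratio:.1%} server errors")
```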

3. Install nginx-vts-exporter

1) Download the exporter

cd /usr/local/src

wget https://github.com/hnlq715/nginx-vts-exporter/releases/download/v0.10.3/nginx-vts-exporter-0.10.3.linux-amd64.tar.gz

2) Extract

tar -xf nginx-vts-exporter-0.10.3.linux-amd64.tar.gz

mv nginx-vts-exporter-0.10.3.linux-amd64 nginx-vts-exporter

chown -R root:root nginx-vts-exporter

3) Start and verify

cd /usr/local/src/nginx-vts-exporter

nohup ./nginx-vts-exporter -nginx.scrape_uri=http://192.168.16.115:8080/status/format/json -telemetry.address="192.168.16.115:9913" >> ./nohup.out 2>&1 &

curl http://192.168.16.115:9913/metrics # check that metrics are returned


4. Add the nginx service on the Prometheus server via file_sd_config

1) Create the file_sd_config file for nginx_node

cd /home/monitor/prometheus/conf.d/

cat nginx_node.json

[

{

"labels": {

"desc": "nginx_16_115",

"group": "nginx",

"host_ip": "192.168.16.115",

"hostname": "wg-16-115"

},

"targets": [

"192.168.16.115:9913"

]

}

]

2) Add the following to the Prometheus configuration file

cat prometheus.yml

...

...

  - job_name: 'nginx_node'
    scrape_interval: 2m
    scrape_timeout: 120s
    file_sd_configs:
      - files:
          - /home/monitor/prometheus/conf.d/nginx_node.json
    honor_labels: true

...

...

5. Reload the Prometheus service and verify in the web UI

Reload the Prometheus service

curl -XPOST http://192.168.16.115:9090/-/reload

6. Reference

https://blog.csdn.net/qq_25934401/article/details/82968632

Monitoring Tomcat

1. Download the jmx_exporter jar

cd /usr/local/src

wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.12.0/jmx_prometheus_javaagent-0.12.0.jar

2. Download the jmx_exporter configuration file for Tomcat

cd /usr/local/src

wget https://github.com/prometheus/jmx_exporter/raw/master/example_configs/tomcat.yml

3. Configure jmx_exporter

cd /usr/local/src

mkdir jmx_exporter

mv jmx_prometheus_javaagent-0.12.0.jar tomcat.yml jmx_exporter

cd jmx_exporter

Inspect the Tomcat yml configuration file

cat tomcat.yml

---
lowercaseOutputLabelNames: true
lowercaseOutputName: true
rules:
  - pattern: 'Catalina<>(\w+):'
    name: tomcat_$3_total
    labels:
      port: "$2"
      protocol: "$1"
    help: Tomcat global $3
    type: COUNTER
  - pattern: 'Catalina<>(requestCount|maxTime|processingTime|errorCount):'
    name: tomcat_servlet_$3_total
    labels:
      module: "$1"
      servlet: "$2"
    help: Tomcat servlet $3 total
    type: COUNTER
  - pattern: 'Catalina<>(currentThreadCount|currentThreadsBusy|keepAliveCount|pollerThreadCount|connectionCount):'
    name: tomcat_threadpool_$3
    labels:
      port: "$2"
      protocol: "$1"
    help: Tomcat threadpool $3
    type: GAUGE
  - pattern: 'Catalina<>(processingTime|sessionCounter|rejectedSessions|expiredSessions):'
    name: tomcat_session_$3_total
    labels:
      context: "$2"
      host: "$1"
    help: Tomcat session $3 total
    type: COUNTER
  - pattern: ".*" # expose all JMX metrics
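Each rule in this file is a regular expression whose capture groups feed the `$1`, `$2`, `$3` placeholders in `name` and `labels`. To make that substitution concrete, here is a small Python imitation of the idea. It is not jmx_exporter code: the pattern and the bean line are hypothetical, written in the style of the thread-pool rule, and only the `$n` expansion and the effect of `lowercaseOutputName: true` are modelled.

```python
import re

# Hypothetical rule in the style of the thread-pool entry above.
PATTERN = re.compile(
    r'Catalina<type=ThreadPool, name="(\w+-\w+)-(\d+)"><>'
    r'(currentThreadCount|currentThreadsBusy):'
)
NAME_TEMPLATE = "tomcat_threadpool_$3"
LABEL_TEMPLATES = {"port": "$2", "protocol": "$1"}


def expand(template, match):
    """Replace $1, $2, ... in template with the matching capture group."""
    return re.sub(r"\$(\d+)", lambda m: match.group(int(m.group(1))), template)


def apply_rule(bean_line):
    """Return (metric name, labels) if the rule matches, else None."""
    match = PATTERN.match(bean_line)
    if match is None:
        return None
    name = expand(NAME_TEMPLATE, match).lower()  # lowercaseOutputName: true
    labels = {key: expand(value, match) for key, value in LABEL_TEMPLATES.items()}
    return name, labels


# Hypothetical input line for illustration.
line = 'Catalina<type=ThreadPool, name="http-nio-8080"><>currentThreadCount: 12'
print(apply_rule(line))
```

Here group 1 captures the protocol part of the connector name, group 2 the port, and group 3 the attribute, which is why the rule can reference up to `$3`.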

4. Configure the Tomcat startup script, then start and verify

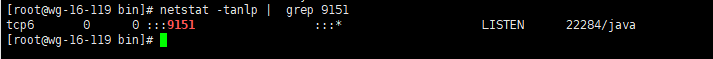

Copy jmx_prometheus_javaagent-0.12.0.jar and the tomcat.yml configuration file from the jmx_exporter directory into Tomcat's bin directory (/usr/local/apache-tomcat/bin). In the catalina.sh startup script (around line 256), add JAVA_OPTS="-javaagent:./jmx_prometheus_javaagent-0.12.0.jar=9151:./tomcat.yml", then restart Tomcat and verify.

cd /usr/local/apache-tomcat/bin

./shutdown.sh

./startup.sh

curl http://192.168.16.119:9151/metrics

netstat -tanlp | grep 9151

5. Add the jmx_exporter service on the Prometheus server via file_sd_config

1) Create the file_sd_config file for Tomcat

cd /home/monitor/prometheus/conf.d/

cat tomcat_node.json

[

{

"labels": {

"desc": "tomcat_16_119",

"group": "web",

"host_ip": "192.168.16.119",

"hostname": "wg-16-119"

},

"targets": [

"192.168.16.119:9151"

]

}

]

2) Add the following to the Prometheus configuration file

cat prometheus.yml

...

...

  - job_name: 'tomcat_node'
    scrape_interval: 2m
    scrape_timeout: 120s
    file_sd_configs:
      - files:
          - /home/monitor/prometheus/conf.d/tomcat_node.json
    honor_labels: true

....

....

6. Reload the Prometheus service and verify in the web UI

curl -XPOST http://192.168.16.115:9090/-/reload

7. Reference

https://www.jianshu.com/p/4c38dbfb139b

Monitoring Redis

1. Download the exporter

Git project: https://github.com/oliver006/redis_exporter

cd /usr/local/src

wget https://github.com/oliver006/redis_exporter/releases/download/v1.3.4/redis_exporter-v1.3.4.linux-amd64.tar.gz

2. Extract

tar -xf redis_exporter-v1.3.4.linux-amd64.tar.gz

mv redis_exporter-v1.3.4.linux-amd64 redis_exporter

chown -R root:root redis_exporter

3. Start

cd /usr/local/src/redis_exporter

nohup ./redis_exporter -redis.addr=192.168.16.9:7104 -web.listen-address=':4222' >> ./nohup_7104.out 2>&1 &

nohup ./redis_exporter -redis.addr=192.168.16.9:7105 -web.listen-address=':4223' >> ./nohup_7105.out 2>&1 &

nohup ./redis_exporter -redis.addr=192.168.16.9:7106 -web.listen-address=':4224' >> ./nohup_7106.out 2>&1 &

nohup ./redis_exporter -redis.addr=192.168.16.9:6379 -web.listen-address=':4225' >> ./nohup_6379.out 2>&1 &

4. Troubleshooting: the web UI shows no data

Symptom: redis_exporter starts normally and curl returns data, but the Prometheus page shows Get http://192.168.16.9:4223/metrics: context deadline exceeded


Fix: set the scrape_timeout parameter to something longer than its 10s default. Its allowed range depends on scrape_interval: scrape_timeout must be less than or equal to scrape_interval.
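The constraint between the two settings is easy to check mechanically. The sketch below converts single-unit durations such as `2m` or `120s` to seconds and compares them. It is a simplification: compound durations like `1m30s` are not handled, and the helper names are hypothetical.

```python
import re

# Seconds per unit for the single-unit durations handled here.
UNITS = {"ms": 0.001, "s": 1, "m": 60, "h": 3600, "d": 86400}


def to_seconds(duration):
    """Convert a single-unit duration such as '120s' or '2m' to seconds."""
    match = re.fullmatch(r"(\d+)(ms|s|m|h|d)", duration)
    if match is None:
        raise ValueError(f"unsupported duration: {duration!r}")
    return int(match.group(1)) * UNITS[match.group(2)]


def timeout_is_valid(scrape_interval, scrape_timeout):
    """True when scrape_timeout does not exceed scrape_interval."""
    return to_seconds(scrape_timeout) <= to_seconds(scrape_interval)


print(timeout_is_valid("2m", "120s"))  # the values used throughout this post
print(timeout_is_valid("1m", "120s"))  # timeout longer than the interval
```

With the values used in this post (an interval of 2m and a timeout of 120s) the two are exactly equal, which is the largest timeout the interval allows.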

5. Add the Redis service on the Prometheus server via file_sd_config

1) Create the file_sd_config file for Redis

cd /home/monitor/prometheus/conf.d/

cat redis_cluster.json # excerpt only

[

{

"labels": {

"desc": "redis-16-8",

"group": "redis_node",

"host_ip": "192.168.16.8",

"hostname": "wg-16-8"

},

"targets": [

"192.168.16.8:4222"

]

},

{

"labels": {

"desc": "redis-16-8",

"group": "redis_node",

"host_ip": "192.168.16.8",

"hostname": "wg-16-8"

},

"targets": [

"192.168.16.8:4223"

]

},

{

"labels": {

"desc": "redis-16-8",

"group": "redis_node",

"host_ip": "192.168.16.8",

"hostname": "wg-16-8"

},

"targets": [

"192.168.16.8:4224"

]

},

{

"labels": {

"desc": "redis-16-9",

"group": "redis_node",

"host_ip": "192.168.16.9",

"hostname": "wg-16-9"

},

"targets": [

"192.168.16.9:4222"

]

},

{

"labels": {

"desc": "redis-16-9",

"group": "redis_node",

"host_ip": "192.168.16.9",

"hostname": "wg-16-9"

},

"targets": [

"192.168.16.9:4223"

]

},

{

"labels": {

"desc": "redis-16-9",

"group": "redis_node",

"host_ip": "192.168.16.9",

"hostname": "wg-16-9"

},

"targets": [

"192.168.16.9:4224"

]

},

{

"labels": {

"desc": "redis-16-9",

"group": "redis_node",

"host_ip": "192.168.16.9",

"hostname": "wg-16-9"

},

"targets": [

"192.168.16.9:4225"

]

}

]
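Several entries in this file carry identical labels and differ only in the port. Since every target inside one file_sd group shares that group's labels, such entries can be folded into a single group with several targets. The following is a minimal sketch of that merge; the helper name `merge_groups` is hypothetical and the input is a shortened version of the file above.

```python
import json


def merge_groups(groups):
    """Combine file_sd entries whose label sets are identical into one entry."""
    merged = {}
    for group in groups:
        key = tuple(sorted(group.get("labels", {}).items()))
        merged.setdefault(key, []).extend(group["targets"])
    return [{"labels": dict(key), "targets": targets} for key, targets in merged.items()]


# Shortened input: two exporters on 192.168.16.8, one on 192.168.16.9.
redis_groups = [
    {"labels": {"desc": "redis-16-8", "group": "redis_node"}, "targets": ["192.168.16.8:4222"]},
    {"labels": {"desc": "redis-16-8", "group": "redis_node"}, "targets": ["192.168.16.8:4223"]},
    {"labels": {"desc": "redis-16-9", "group": "redis_node"}, "targets": ["192.168.16.9:4222"]},
]

compact = merge_groups(redis_groups)
print(json.dumps(compact, indent=2))
```

Whether to merge is a matter of taste: one entry per target, as in the file above, is longer but makes it easy to give a single instance its own labels later.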

2) Add the following to the Prometheus configuration file

cat prometheus.yml

...

...

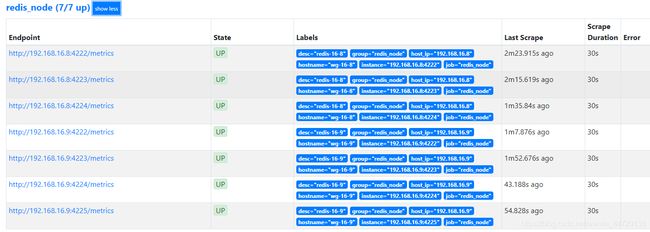

  - job_name: 'redis_node'
    scrape_interval: 2m
    scrape_timeout: 120s
    file_sd_configs:
      - files:
          - /home/monitor/prometheus/conf.d/redis_cluster.json
    honor_labels: true

...

...

6. Reload the Prometheus service and verify in the web UI

curl -XPOST http://192.168.16.115:9090/-/reload

Monitoring an Elasticsearch cluster

1. Download the exporter

cd /u01/isi

wget https://github.com/justwatchcom/elasticsearch_exporter/releases/download/v1.0.4rc1/elasticsearch_exporter-1.0.4rc1.linux-amd64.tar.gz

2. Extract

tar -xf elasticsearch_exporter-1.0.4rc1.linux-amd64.tar.gz

mv elasticsearch_exporter-1.0.4rc1.linux-amd64 elasticsearch_exporter

chown -R isi:isi elasticsearch_exporter # match ownership to the user that runs Elasticsearch

3. Start

su isi

cd /u01/isi/elasticsearch_exporter

nohup ./elasticsearch_exporter --web.listen-address=":4221" --es.uri http://localhost:9200 &

4. Add the configuration to Prometheus so that it can collect the Elasticsearch data

1) Create the file_sd_config file for es_cluster

cd /home/monitor/prometheus/conf.d/

cat es_cluster.json

[

{

"labels": {

"desc": "es-21",

"group": "es_cluster",

"host_ip": "192.168.16.21",

"hostname": "wg-16-21"

},

"targets": [

"192.168.16.21:4221"

]

},

{

"labels": {

"desc": "es-22",

"group": "es_cluster",

"host_ip": "192.168.16.22",

"hostname": "wg-16-22"

},

"targets": [

"192.168.16.22:4221"

]

},

{

"labels": {

"desc": "es-23",

"group": "es_cluster",

"host_ip": "192.168.16.23",

"hostname": "wg-16-23"

},

"targets": [

"192.168.16.23:4221"

]

},

{

"labels": {

"desc": "es-24",

"group": "es_cluster",

"host_ip": "192.168.16.24",

"hostname": "wg-16-24"

},

"targets": [

"192.168.16.24:4221"

]

},

{

"labels": {

"desc": "es-25",

"group": "es_cluster",

"host_ip": "192.168.16.25",

"hostname": "wg-16-25"

},

"targets": [

"192.168.16.25:4221"

]

},

{

"labels": {

"desc": "es-26",

"group": "es_cluster",

"host_ip": "192.168.16.26",

"hostname": "wg-16-26"

},

"targets": [

"192.168.16.26:4221"

]

},

{

"labels": {

"desc": "es-27",

"group": "es_cluster",

"host_ip": "192.168.16.27",

"hostname": "wg-16-27"

},

"targets": [

"192.168.16.27:4221"

]

},

{

"labels": {

"desc": "es-28",

"group": "es_cluster",

"host_ip": "192.168.16.28",

"hostname": "wg-16-28"

},

"targets": [

"192.168.16.28:4221"

]

},

{

"labels": {

"desc": "es-30",

"group": "es_cluster",

"host_ip": "192.168.16.30",

"hostname": "wg-16-30"

},

"targets": [

"192.168.16.30:4221"

]

}

]

2) Add the following to the Prometheus configuration file

cat prometheus.yml

...

...

  - job_name: 'es_cluster'
    scrape_interval: 1m
    file_sd_configs:
      - files:
          - /home/monitor/prometheus/conf.d/es_cluster.json
    honor_labels: true

...

...

5. Reload the Prometheus service and verify in the web UI

Reload the Prometheus service

curl -XPOST http://192.168.16.115:9090/-/reload