GMM / MoG Clustering: A MATLAB Visualization Implementation

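- Introduction to GMM

- Solving for the parameters with EM

- Dynamic visualization of GMM

- MATLAB code for the dynamic GMM visualization

Reference book: "Computer Vision: Models, Learning, and Inference"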

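Introduction to GMM

An ordinary normal distribution (Gaussian) has a single peak, so it fits complex data, such as bimodal or multimodal data, poorly. Suppose instead that we give several normal distributions different weights λ_k and add them together linearly: could the resulting distribution fit more complex data?

The Gaussian mixture model, abbreviated GMM (Gaussian Mixture Model) or MoG (Mixture of Gaussians), is exactly such a model: it fits the data by superimposing several Gaussians.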

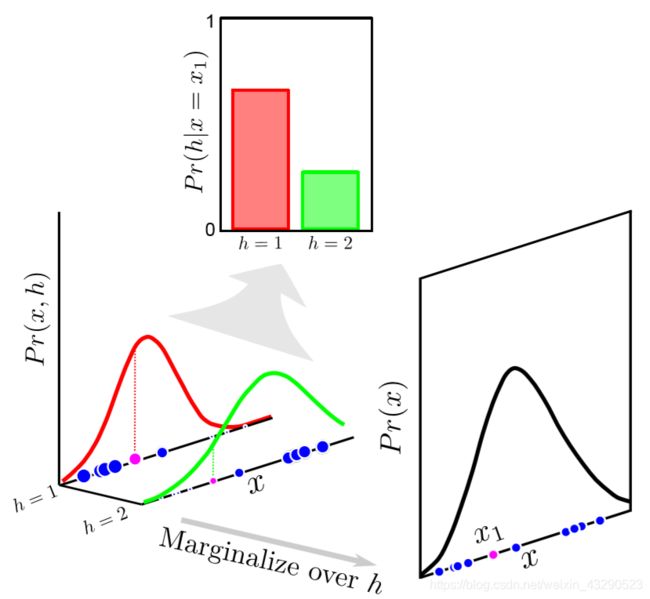

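We model the choice of component as a hidden variable (also called a latent variable) h_i that follows a categorical distribution Cat over {1…K} with probabilities λ, where K is the number of Gaussians. The distribution of the observed data x is then the marginal of the joint distribution of x and h_i.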

$$
\begin{aligned}
Pr(x|h,\theta) &= Norm_x[\mu_h,\Sigma_h] \\
Pr(h|\theta) &= Cat_h[\lambda]
\end{aligned}
$$

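These two formulas say the following:

- Given h_i = k, that is, given the parameters {μ_k, Σ_k} of the k-th Gaussian, the observed variable follows a normal distribution.

- Given the parameters θ, the hidden variable h_i follows a categorical distribution.

The following figure (taken from the reference book cited at the top) illustrates these two formulas:

Summing the joint distribution of x and h over all values of h (marginalization), and factoring the joint with the product rule, recovers the density of the observed data: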

$$
\begin{aligned}
Pr(\bm x|\bm\theta) &= \sum_{k=1}^K Pr(\bm x,h=k|\bm\theta) \\
&= \sum_{k=1}^K Pr(\bm x|h=k,\bm\theta)Pr(h=k|\bm\theta) \\
&= \sum_{k=1}^K \lambda_k Norm_x[\bm\mu_k,\bm\Sigma_k]
\end{aligned}
$$
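As a quick numerical check of the mixture formula, the sketch below evaluates a 1-D mixture of two Gaussians on a grid and confirms that it integrates to roughly one. The weights, means, and variances are arbitrary illustrative values and the helper `normpdf1` is a hypothetical name; none of them come from the post.

```matlab
% Illustrative 1-D mixture (all parameter values are assumptions).
lambda = [0.3 0.7];          % mixture weights, must sum to 1
mu     = [-2 3];             % component means
sigma2 = [1 2.25];           % component variances

% Univariate normal density written out explicitly (no toolbox needed).
normpdf1 = @(x, m, s2) exp(-(x - m).^2 ./ (2*s2)) ./ sqrt(2*pi*s2);

x  = linspace(-15, 20, 7001);
px = zeros(size(x));
for k = 1:numel(lambda)
    % Accumulate lambda_k * Norm_x[mu_k, sigma2_k]
    px = px + lambda(k) * normpdf1(x, mu(k), sigma2(k));
end

area = trapz(x, px);         % numerical integral of the mixture density
fprintf('Integral of the mixture density: %.6f\n', area);
```

Because the weights sum to one and each component is a proper density, the weighted sum is again a proper density, which is what makes λ interpretable as the categorical distribution over components.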

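As a first step (initialization), K sample points are picked at random as the means mu of the K Gaussians, the covariance of the whole dataset is used to initialize each Gaussian's covariance sigma, and every weight is initialized to 1/K.

Solving for the parameters with EM

Applying maximum likelihood estimation (MLE) directly to this sum of Gaussians generally does not yield a closed-form solution: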

$$
\begin{aligned}
&Pr(\bm x) = \int Pr(\bm x,\bm h)\, d\bm h. \\
&\hat{\theta} = \operatorname*{argmax}_\theta\left[\sum_{i=1}^I \log\left[Pr(x_i|\bm\theta)\right]\right]
\end{aligned}
$$

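So we introduce a hidden variable h_i with a simple distribution, write down the joint distribution of the data and the hidden variable, and then integrate the hidden variable out, as in the following formula: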

$$
Pr(\bm x|\bm\theta) = \int Pr(\bm x,\bm h|\bm\theta)\, d\bm h.
$$

Solving for the parameters θ by maximizing L, where L is the log-likelihood below, then achieves the same goal:

$$
\hat{\theta} = \operatorname*{argmax}_\theta\left[\sum_{i=1}^I \log\left[\int Pr(\bm x_i,\bm h_i|\bm\theta)\, d\bm h_i\right]\right]
$$

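Can we construct a lower-bound function, one that is less than or equal to the log-likelihood L at every point, and use it to approach L?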

$$
\begin{aligned}
B[\{q_i(\bm h_i)\},\bm\theta] &= \sum_{i=1}^I\int q_i(\bm h_i) \log\left[\frac{Pr(x_i,\bm h_i|\bm\theta)}{q_i(\bm h_i)}\right] d\bm h_i \\
&\leq \sum_{i=1}^I\log\left[\int Pr(x_i,\bm h_i|\bm\theta)\, d\bm h_i\right].
\end{aligned}
$$

Proof:

Because the logarithm is a concave function, Jensen's inequality gives E[log(x)] ≤ log(E[x]).

$$
\begin{aligned}
B[\{q_i(h_i)\},\theta] &= \sum_{i=1}^I\int q_i(h_i)\log\left[\frac{Pr(x_i,h_i|\theta)}{q_i(h_i)}\right]dh_i\\
&\leq \sum_{i=1}^I\log\left[\int q_i(h_i)\frac{Pr(x_i,h_i|\theta)}{q_i(h_i)}\,dh_i\right] \\
&= \sum_{i=1}^I\log\left[\int Pr(x_i,h_i|\theta)\,dh_i\right]
\end{aligned}
$$

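The function B[…] above is also called the ELBO (evidence lower bound). The bound holds for any distributions q_i(h_i), and it becomes tight when q_i(h_i) is the posterior categorical distribution of h_i given x_i and θ: q_i(h_i) = Pr(h_i|x_i, θ).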
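The bound can be checked numerically. In the sketch below the hidden variable is discrete, so the integral over h_i becomes a sum over k. The toy observations, the parameter values, and the helper names (`normpdf1`, `elbo`) are assumptions made for illustration only.

```matlab
% Toy 1-D observations and mixture parameters (assumed values).
x = [-2.1; -1.7; 0.2; 2.9; 3.4];
lambda = [0.4 0.6]; mu = [-1 3]; sigma2 = [1 1.5];

normpdf1 = @(x, m, s2) exp(-(x - m).^2 ./ (2*s2)) ./ sqrt(2*pi*s2);

% joint(i,k) = Pr(x_i, h_i = k | theta) = lambda_k * Norm_{x_i}[mu_k, sigma2_k]
joint = zeros(numel(x), 2);
for k = 1:2
    joint(:,k) = lambda(k) * normpdf1(x, mu(k), sigma2(k));
end

logLik = sum(log(sum(joint, 2)));            % log-likelihood L

% ELBO for a given q, with the integral over h_i replaced by a sum over k
elbo = @(q) sum(sum(q .* log(joint ./ q)));

qUniform   = repmat(0.5, numel(x), 2);       % an arbitrary valid choice of q
qPosterior = joint ./ sum(joint, 2);         % q_i(h_i) = Pr(h_i | x_i, theta)

B_uniform   = elbo(qUniform);
B_posterior = elbo(qPosterior);
fprintf('L = %.6f, B(uniform q) = %.6f, B(posterior q) = %.6f\n', ...
        logLik, B_uniform, B_posterior);
```

With the uniform q the bound sits below L, while the posterior q closes the gap, which is the property the E-step exploits.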

Here we use the EM (Expectation Maximization) algorithm to solve for the parameters.

- E-step (fix θ and update q_i(h_i) to raise the ELBO):

Compute the posterior distribution Pr(h_i|x_i, θ) of each hidden variable h_i with Bayes' rule and set q_i(h_i) to it, which maximizes the bound with respect to q_i(h_i):

$$
\begin{aligned}
q_i(h_i) = Pr(h_i = k|\bm x_i,\bm\theta^{[t]}) &= \frac{Pr(x_i|h_i=k,\theta^{[t]})Pr(h_i=k|\theta^{[t]})}{\sum_{j=1}^K Pr(x_i|h_i=j,\theta^{[t]})Pr(h_i=j|\theta^{[t]})} \\
&= \frac{\lambda_k Norm_{x_i}[\mu_k,\Sigma_k]}{\sum_{j=1}^K \lambda_j Norm_{x_i}[\mu_j,\Sigma_j]} \\
&= r_{ik}.
\end{aligned}
$$

Here r_ik is called the responsibility of the k-th Gaussian for the i-th data point.
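The E-step formula can be sketched as follows for 1-D data. The second half shows a log-sum-exp variant, which is a common numerical safeguard and not something the post's code does: when a point lies far from every component, all densities underflow to zero and the naive ratio becomes 0/0. The data and parameters are assumed values chosen to trigger that case.

```matlab
% Toy 1-D data; the last point is deliberately far from both components.
x = [-0.3; 0.8; 4.6; 60];
lambda = [0.5 0.5]; mu = [0 5]; sigma2 = [1 1];

% logJoint(i,k) = log(lambda_k) + log Norm_{x_i}[mu_k, sigma2_k]
logJoint = log(lambda) - 0.5*log(2*pi*sigma2) - (x - mu).^2 ./ (2*sigma2);

% Naive E-step: normalise the joint directly, as in the formula.
joint  = exp(logJoint);
rNaive = joint ./ sum(joint, 2);          % 0/0 = NaN once every term underflows

% Log-sum-exp variant: subtract each row's maximum before exponentiating.
shifted = logJoint - max(logJoint, [], 2);
r = exp(shifted) ./ sum(exp(shifted), 2);

disp(rNaive);
disp(r);
```

Both versions agree wherever the naive one is finite; only the far-away point differs, where the shifted version still assigns the point to the nearer component.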

- M-step (fix q_i(h_i) and update θ to maximize the ELBO):

In the maximization step, the bound is maximized with respect to the model parameters θ = {λ_k, μ_k, Σ_k}:

$$
\begin{aligned}
\hat\theta &= \operatorname*{argmax}_\theta\left[\sum_{i=1}^I\sum_{k=1}^K \hat q_i(h_i=k)\log\left[Pr(x_i,h_i=k|\theta)\right]\right] \\
&= \operatorname*{argmax}_\theta\left[\sum_{i=1}^I\sum_{k=1}^K r_{ik}\log\left[\lambda_k Norm_{x_i}[\mu_k,\Sigma_k]\right]\right].
\end{aligned}
$$

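Finally, setting the derivatives to zero, with a Lagrange multiplier enforcing the constraint that the weights λ_k sum to one, gives the update rules: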

$$
\begin{aligned}
\lambda_k^{[t+1]} &= \frac{\sum_{i=1}^I r_{ik}}{\sum_{j=1}^K\sum_{i=1}^I r_{ij}} \\
\mu_k^{[t+1]} &= \frac{\sum_{i=1}^I r_{ik}x_i}{\sum_{i=1}^I r_{ik}} \\
\Sigma_k^{[t+1]} &= \frac{\sum_{i=1}^I r_{ik}(x_i-\mu_k^{[t+1]})(x_i-\mu_k^{[t+1]})^T}{\sum_{i=1}^I r_{ik}}
\end{aligned}
$$
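The three update rules map onto a few matrix operations. The sketch below is a vectorized version under assumed toy data; it uses hard 0/1 responsibilities so the result is easy to verify by hand, since each update then reduces to the ordinary mean and (1/N-normalized) covariance of the points assigned to that component.

```matlab
% Toy 2-D data with hard 0/1 responsibilities (assumed values).
data = [0 0; 1 0; 0 1; 5 5; 6 5; 5 7];
r    = [1 0; 1 0; 1 0; 0 1; 0 1; 0 1];    % r(i,k): responsibility of component k for point i

K  = size(r, 2);
Nk = sum(r, 1);                 % sum_i r_ik, the effective count per component

lambda = Nk / sum(Nk);          % weight update
mu     = (r' * data) ./ Nk';    % K-by-dim matrix, one updated mean per row
Sigma  = cell(1, K);
for k = 1:K
    d = data - mu(k,:);                         % deviations from the updated mean
    Sigma{k} = (d' * (d .* r(:,k))) / Nk(k);    % responsibility-weighted outer products
end

disp(lambda); disp(mu); disp(Sigma{1}); disp(Sigma{2});
```

Note that the covariance update uses the already updated mean, exactly as the superscript [t+1] in the formula indicates.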

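Dynamic visualization of GMM

Figure 1: fitting 2 Gaussians to a set of sample points randomly generated from 2 Gaussians.

Figure 2: fitting 3 Gaussians to a set of sample points randomly generated from 2 Gaussians.

Figure 3: fitting 3 Gaussians to a set of sample points randomly generated from 3 Gaussians.

Figure 4: fitting 2 Gaussians to a set of sample points randomly generated from 2 Gaussians. Here we can see that the randomly initialized means affect how the fit converges.

Figure 5: fitting 3 Gaussians to a set of sample points randomly generated from 3 Gaussians. Again, the randomly initialized means affect how the fit converges.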
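A common way to reduce this sensitivity to initialization, not used in the post's own code, is to run EM from several random starts and keep the run with the highest log-likelihood. The following is a minimal 1-D sketch under assumed toy data; the names `nRestarts`, `nIter`, `allL`, and `best` are hypothetical.

```matlab
rng(0);                                     % fixed seed so the run is repeatable
x = [randn(150,1) - 3; randn(150,1) + 3];   % toy 1-D data from two Gaussians (assumed)
K = 2; nRestarts = 5; nIter = 50;

bestL = -Inf;
allL  = nan(1, nRestarts);
for s = 1:nRestarts
    % Same initialization scheme as in the post: random samples as means,
    % whole-data variance for every component, uniform weights.
    idx    = randperm(numel(x), K);
    mu     = x(idx)';
    sigma2 = repmat(var(x, 1), 1, K);
    lambda = repmat(1/K, 1, K);
    for t = 1:nIter
        joint = lambda .* exp(-(x - mu).^2 ./ (2*sigma2)) ./ sqrt(2*pi*sigma2);
        r  = joint ./ sum(joint, 2);              % E-step
        Nk = sum(r, 1);                           % M-step
        lambda = Nk / numel(x);
        mu     = sum(r .* x, 1) ./ Nk;
        sigma2 = sum(r .* (x - mu).^2, 1) ./ Nk;
    end
    joint = lambda .* exp(-(x - mu).^2 ./ (2*sigma2)) ./ sqrt(2*pi*sigma2);
    L = sum(log(sum(joint, 2)));                  % log-likelihood of this run
    allL(s) = L;
    if L > bestL                                  % keep the best run seen so far
        bestL = L;
        best  = struct('lambda', lambda, 'mu', mu, 'sigma2', sigma2);
    end
end
fprintf('Log-likelihood per restart: %s\n', mat2str(allL, 6));
```

Restarts only make a poor local optimum less likely; they do not guarantee the global one.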

MATLAB code for the dynamic GMM visualization

    % Author      : Weichen GU
    % Date        : 3/10th/2020
    % Description : Achieve dynamic visualization for GMM/MOG
    % Reference   : Computer Vision Models, Learning and Inference

    % Initialization
    clc;
    clear;
    clf;

    % Generate two Gaussians
    % data 1
    mu1 = [0 0]; sigma1 = [1 -0.9; -0.9 2];
    r1 = mvnrnd(mu1, sigma1, 100);
    % data 2
    mu2 = [5 5]; sigma2 = [3 -2; -2 2];
    r2 = mvnrnd(mu2, sigma2, 100);
    % data 3 (uncomment these and the other r3 lines below for a third cluster)
    %mu3 = [2 3]; sigma3 = [3 2; 2 2];
    %r3 = mvnrnd(mu3, sigma3, 100);

    % Plot the original data in panels 1 and 2 (panel 2 later receives the EM contours)
    figure(1)
    for i = 1:2
        subplot(2,2,i);
        plot(r1(:,1), r1(:,2), 'r+');
        hold on;
        plot(r2(:,1), r2(:,2), 'b+');
        %plot(r3(:,1), r3(:,2), 'g+');
        title('Original data');
        %axis([-10 15 -10 15])
        hold off;
    end

    data = [r1; r2]; % Our dataset
    %data = [r1; r2; r3]; % Our dataset

    % Do gmm fitting process
    fit_gmm(data, 2, 0.1);

    %% Using Gaussian Mixture Model to do clustering
    % Input:  data      - data, one sample per row
    %         k         - the number of Gaussians
    %         precision - stopping threshold on the change in log-likelihood
    % Output: lambda    - the weights of the Gaussians
    %         mu        - the means of the Gaussians
    %         sigma     - the covariance matrices of the Gaussians
    function [lambda, mu, sigma] = fit_gmm(data, k, precision)
        num = size(data, 1);            % Number of samples
        lambda = repmat(1/k, k, 1);     % Initialize every weight to 1/k
        randIdx = randperm(num);        % Random permutation of the sample indices
        mu = data(randIdx(1:k), :);     % Use k random samples as the initial means
        dataVariance = cov(data, 1);    % Covariance of the whole dataset, normalized by num
        sigma = cell(1, k);             % Store covariance matrices
        % sigma is initialized as the covariance of the whole dataset
        for i = 1 : k
            sigma{i} = dataVariance;
        end

        % x, y define the grid used to draw the pdf of the Gaussians
        x = -5:0.05:10;
        y = -5:0.05:10;
        [X, Y] = meshgrid(x, y);

        iter = 0; previousL = 100000;
        while iter < 100
            % E-step (Expectation)
            % gauss(i,idx) = lambda(idx) * N(data(i,:); mu(idx,:), sigma{idx})
            gauss = zeros(num, k);
            for idx = 1 : k
                gauss(:,idx) = lambda(idx) * mvnpdf(data, mu(idx,:), sigma{idx});
            end
            respons = gauss ./ sum(gauss, 2);   % responsibilities, each row sums to 1

            % M-step (Maximization)
            responsSumedRow = sum(respons, 1);
            responsSumedAll = sum(responsSumedRow, 2);
            for i = 1 : k
                % Update lambda
                lambda(i) = responsSumedRow(i) / responsSumedAll;
                % Update mu
                mu(i,:) = (respons(:,i)' * data) ./ responsSumedRow(i);
                % Update sigma (accumulated per sample so the result stays exactly symmetric)
                newSigma = zeros(size(dataVariance));
                for j = 1 : num
                    d = data(j,:) - mu(i,:);
                    newSigma = newSigma + respons(j,i) * (d' * d);
                end
                sigma{i} = newSigma ./ responsSumedRow(i);
            end

            % Draw the current weighted components as contours in image 2
            subplot(2,2,2)
            title('Expectation Maximization');
            hold on
            stepHandles = cell(1, k);
            ZTot = zeros(size(X));
            for idx = 1 : k
                Z = lambda(idx) * getGauss(mu(idx,:), sigma{idx}, X, Y);
                [~, stepHandles{idx}] = contour(X, Y, Z);
                ZTot = ZTot + Z;
            end
            hold off

            subplot(2,2,3) % image 3 - PDF for 2D MOG/GMM
            mesh(X, Y, ZTot), title('PDF for 2D MOG/GMM');
            subplot(2,2,4) % image 4 - Projection of MOG/GMM
            surf(X, Y, ZTot), view(2), title('Projection of MOG/GMM')
            shading interp
            %colorbar
            drawnow();

            % Compute the log likelihood L
            temp = zeros(num, k);
            for idx = 1 : k
                temp(:,idx) = lambda(idx) * mvnpdf(data, mu(idx,:), sigma{idx});
            end
            L = sum(log(sum(temp, 2)));

            iter = iter + 1;
            deltaL = abs(L - previousL);    % change in log-likelihood since the last iteration
            fprintf('Iteration %d: |dL| = %g\n', iter, deltaL);
            if deltaL < precision
                break;
            else
                % delete the contour handles in image 2 before the next redraw
                for idx = 1 : k
                    delete(stepHandles{idx});
                end
            end
            previousL = L;
        end
    end

    % Evaluate the 2-D Gaussian N(mu, sigma) at every point of the grid (X, Y)
    function [Z] = getGauss(mu, sigma, X, Y)
        dim = length(mu);
        weight = 1 / sqrt((2*pi).^dim * det(sigma));    % normalization constant
        points = [X(:), Y(:)];                          % one grid point per row
        sampleDiff = points - mu;
        % Row-wise quadratic form -0.5 * (p - mu) * inv(sigma) * (p - mu)'
        inner = -0.5 * sum((sampleDiff / sigma) .* sampleDiff, 2);
        Z = reshape(weight * exp(inner), size(X));
    end

Summary:

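- The EM algorithm is not guaranteed to find the global optimum of this non-convex optimization problem.

- The number of Gaussians in the GMM has to be specified in advance.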
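One common way to soften both limitations, which the post itself does not cover, is to fit several candidate values of K with multiple random restarts each and compare them with an information criterion such as BIC. The sketch below assumes the Statistics and Machine Learning Toolbox is available (the post's code already needs it for `mvnrnd` and `mvnpdf`) and uses its `fitgmdist` function; the candidate range `maxK` and the option values are illustrative choices.

```matlab
rng(1);                                   % fixed seed so the run is repeatable
% Same two generating Gaussians as in the visualization script.
data = [mvnrnd([0 0], [1 -0.9; -0.9 2], 100);
        mvnrnd([5 5], [3 -2; -2 2], 100)];

maxK = 4;                                 % largest number of components to try (assumed)
bic  = zeros(1, maxK);
for k = 1:maxK
    % 'Replicates' reruns EM from several initializations and keeps the best fit;
    % 'RegularizationValue' guards against singular covariance estimates.
    gm = fitgmdist(data, k, 'Replicates', 5, 'RegularizationValue', 1e-6, ...
                   'Options', statset('MaxIter', 500));
    bic(k) = gm.BIC;
end

[~, bestK] = min(bic);                    % lower BIC is better
fprintf('BIC per K: %s, chosen K = %d\n', mat2str(bic, 5), bestK);
```

BIC penalizes the extra parameters that each additional Gaussian brings, so it tends to stop adding components once they no longer improve the likelihood enough; it is a heuristic, not a guarantee of the true number of clusters.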

Finally, a clean MATLAB program for GMM (without the visualization) is provided for download: