Distributed Cluster Setup (5): Setting Up Flume

Environment:

1. Version selection

Reference: Hadoop cluster version compatibility issues

hadoop-2.5.0

hive-0.13.1

spark-1.2.0

hbase-0.98.6

flume-ng-1.7.0

jdk-8u161-linux-x64.tar.gz

Download: http://flume.apache.org/download.html
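On a host with Internet access, the tarball can also be fetched from the command line. The helper below is a hypothetical sketch that assumes the Apache archive keeps old releases under https://archive.apache.org/dist/flume/<version>/; check the URL against the download page before relying on it.

```shell
#!/usr/bin/env bash
# Build the download URL of a Flume binary release.
# Assumption: old releases are kept at archive.apache.org/dist/flume/<version>/.
flume_archive_url() {
  local version=$1
  echo "https://archive.apache.org/dist/flume/${version}/apache-flume-${version}-bin.tar.gz"
}

# Usage (needs network access):
#   wget "$(flume_archive_url 1.7.0)"
```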

Component layout:

| | qy01 | qy02 | qy03 |
|---|---|---|---|
| HDFS | NameNode, DataNode | DataNode | DataNode |
| YARN | ResourceManager, NodeManager | NodeManager | NodeManager |
| Zookeeper | Zookeeper | Zookeeper | Zookeeper |
| Kafka | Kafka | Kafka | Kafka |
| Flume | Flume | Flume | Flume |
| Spark | Spark | | |
| Hive | Hive | | |
| Mysql | Mysql | | |

(1) Change the file permissions

chmod u+x apache-flume-1.7.0-bin.tar.gz

Extract the archive:

tar -zxvf apache-flume-1.7.0-bin.tar.gz -C /opt/modules/

Rename the extracted directory (run in /opt/modules/):

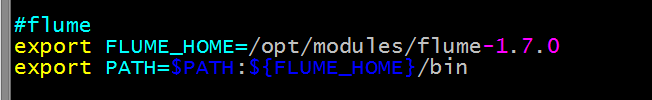

mv apache-flume-1.7.0-bin/ flume-1.7.0

2. Configure the environment variables

sudo vim ~/.bashrc

#flume

export FLUME_HOME=/opt/modules/flume-1.7.0

export PATH=$PATH:${FLUME_HOME}/bin
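Changes to ~/.bashrc only apply to new shells, so run source ~/.bashrc afterwards. As a minimal sketch (add_flume_env is a hypothetical helper name), the same lines can be appended from a script in a way that is safe to re-run, which helps when this step is repeated on every host:

```shell
#!/usr/bin/env bash
# Append the Flume variables to an rc file only if FLUME_HOME is not
# already exported there, so running the setup twice adds nothing new.
add_flume_env() {
  local rc=$1 flume_home=$2
  if ! grep -q '^export FLUME_HOME=' "$rc" 2>/dev/null; then
    {
      echo '#flume'
      echo "export FLUME_HOME=${flume_home}"
      # Single quotes: write $PATH and ${FLUME_HOME} literally into the file
      echo 'export PATH=$PATH:${FLUME_HOME}/bin'
    } >> "$rc"
  fi
}

# Usage:
#   add_flume_env ~/.bashrc /opt/modules/flume-1.7.0
#   source ~/.bashrc     # load the variables into the current shell
#   flume-ng version     # should now be found on the PATH
```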

5. Prerequisites for installing Flume:

System Requirements

- Java Runtime Environment - Java 1.8 or later

- Memory - Sufficient memory for configurations used by sources, channels or sinks

- Disk Space - Sufficient disk space for configurations used by channels or sinks

- Directory Permissions - Read/Write permissions for directories used by agent

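The Java requirement can be checked before going further. The sketch below (function names are hypothetical) parses the version string printed by java -version and handles both the old 1.x scheme, where 1.8.0_161 means Java 8, and the newer scheme such as 11.0.2:

```shell
#!/usr/bin/env bash
# Print the Java major version for a version string:
#   "1.8.0_161" (pre-Java-9 scheme) -> 8
#   "11.0.2"    (Java 9+ scheme)    -> 11
java_major() {
  local v=$1
  case $v in
    1.*) v=${v#1.} ;;   # old scheme: drop the leading "1."
  esac
  echo "${v%%[._]*}"    # keep what comes before the first "." or "_"
}

# Print the version string of the installed JVM, e.g. 1.8.0_161.
# java -version writes to stderr, hence the 2>&1.
installed_java_version() {
  java -version 2>&1 | awk -F '"' '/version/ { print $2; exit }'
}

# Usage:
#   if [ "$(java_major "$(installed_java_version)")" -ge 8 ]; then
#     echo "Java version OK for Flume"
#   fi
```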

6. Edit the configuration files

Official configuration reference: http://flume.apache.org/releases/content/1.9.0/FlumeUserGuide.html (note: this is the 1.9.0 guide, while this article installs 1.7.0)

Connect to the virtual machines with Notepad++.

Copy the extracted flume directory to the second host:

scp -r flume-1.7.0/ hadoop02:/opt/modules/

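Architecture: the second and third hosts take data from the output of a Linux command (tail -F on a log file), buffer it in a channel, and push it to the first host.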

1. Configure the second host first

Change to the flume-1.7.0/conf directory:

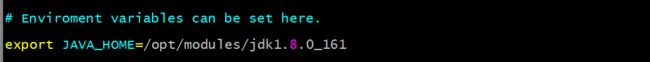

Configure flume-env.sh

mv flume-env.sh.template flume-env.sh

Open flume-env.sh and set the Java path (JAVA_HOME).
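The JAVA_HOME line can also be set without opening an editor. This is a sketch assuming GNU sed (for -i) and a JDK unpacked to /opt/modules/jdk1.8.0_161; that directory is an assumption, so substitute the real JDK path. The template normally contains a commented-out export JAVA_HOME line, which the hypothetical set_java_home function replaces; if the file has none, it appends one.

```shell
#!/usr/bin/env bash
# Set JAVA_HOME in flume-env.sh: replace an existing (possibly commented-out)
# "export JAVA_HOME=" line, or append a new one if the file has none.
set_java_home() {
  local env_file=$1 jdk_dir=$2
  if grep -qE '^[[:space:]]*#?[[:space:]]*export JAVA_HOME=' "$env_file"; then
    sed -i -E "s|^[[:space:]]*#?[[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=${jdk_dir}|" "$env_file"
  else
    echo "export JAVA_HOME=${jdk_dir}" >> "$env_file"
  fi
}

# Usage (JDK path is an assumption):
#   set_java_home /opt/modules/flume-1.7.0/conf/flume-env.sh /opt/modules/jdk1.8.0_161
```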

Configure flume-conf.properties

mv flume-conf.properties.template flume-conf.properties

Open flume-conf.properties, delete all of its contents, and add the following:

agent2.sources = r1

agent2.channels = c1

agent2.sinks = s1

agent2.sources.r1.type = exec

agent2.sources.r1.command = tail -F /opt/datas/weblog-flume.log

agent2.sources.r1.channels = c1

agent2.channels.c1.type = memory

agent2.channels.c1.capacity = 10000

agent2.channels.c1.transactionCapacity = 10000

agent2.channels.c1.keep-alive = 5

agent2.sinks.s1.type = avro

agent2.sinks.s1.channel = c1

agent2.sinks.s1.hostname = hadoop01

agent2.sinks.s1.port = 5555

2. Configure the third host

Copy the entire configured flume directory from the second host to the third host:

scp -r flume-1.7.0/ hadoop03:/opt/modules/

Configure flume-conf.properties

Open flume-conf.properties, delete all of its contents, and add the following (the main change is renaming the agent from agent2 to agent3):

agent3.sources = r1

agent3.channels = c1

agent3.sinks = s1

agent3.sources.r1.type = exec

agent3.sources.r1.command = tail -F /opt/datas/weblog-flume.log

agent3.sources.r1.channels = c1

agent3.channels.c1.type = memory

agent3.channels.c1.capacity = 10000

agent3.channels.c1.transactionCapacity = 10000

agent3.channels.c1.keep-alive = 5

agent3.sinks.s1.type = avro

agent3.sinks.s1.channel = c1

agent3.sinks.s1.hostname = hadoop01

agent3.sinks.s1.port = 5555
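Because agent3's configuration differs from agent2's only in the agent-name prefix, it can also be generated instead of retyped. A minimal sketch with sed (rename_agent is a hypothetical helper name); the new prefix has to match the agent name passed to flume-ng with --name:

```shell
#!/usr/bin/env bash
# Copy a Flume config while renaming the property prefix "<old>." to "<new>.".
# Only the start of each line is rewritten, so values such as the sink
# hostname hadoop01 are left untouched.
rename_agent() {
  local src=$1 dst=$2 old=$3 new=$4
  sed "s/^${old}\./${new}./" "$src" > "$dst"
}

# Usage on hadoop03, after copying the directory from hadoop02:
#   cd /opt/modules/flume-1.7.0/conf
#   rename_agent flume-conf.properties flume-conf.properties.new agent2 agent3
#   mv flume-conf.properties.new flume-conf.properties
```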

3. Configure the first host

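A single source feeds two sinks, one for Kafka and one for HBase, each through its own channel. With Flume's default (replicating) channel selector, every event from r1 is copied into both kafkaC and hbaseC: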

agent1.sources = r1

agent1.channels = kafkaC hbaseC

agent1.sinks = kafkaSink hbaseSink

#************************flume+hbase*************************

agent1.sources.r1.type = avro

agent1.sources.r1.channels = kafkaC hbaseC

agent1.sources.r1.bind = hadoop01

agent1.sources.r1.port = 5555

agent1.sources.r1.threads = 5

agent1.channels.hbaseC.type = memory

agent1.channels.hbaseC.capacity = 100000

agent1.channels.hbaseC.transactionCapacity = 100000

agent1.channels.hbaseC.keep-alive = 20

agent1.sinks.hbaseSink.type = asynchbase

agent1.sinks.hbaseSink.table = weblogs

agent1.sinks.hbaseSink.columnFamily = info

agent1.sinks.hbaseSink.serializer = org.apache.flume.sink.hbase.KfkAsyncHbaseEventSerializer

agent1.sinks.hbaseSink.channel = hbaseC

agent1.sinks.hbaseSink.serializer.payloadColumn = datatime,userid,searchname,retorder,cliorder,cliurl

#************************flume+kafka*************************

agent1.channels.kafkaC.type = memory

agent1.channels.kafkaC.capacity = 100000

agent1.channels.kafkaC.transactionCapacity = 100000

agent1.channels.kafkaC.keep-alive = 20

agent1.sinks.kafkaSink.channel = kafkaC

agent1.sinks.kafkaSink.type = org.apache.flume.sink.kafka.KafkaSink

agent1.sinks.kafkaSink.brokerList = hadoop01:9092,hadoop02:9092,hadoop03:9092

agent1.sinks.kafkaSink.topic = weblogs

agent1.sinks.kafkaSink.zookeeperConnect = hadoop01:2181,hadoop02:2181,hadoop03:2181

agent1.sinks.kafkaSink.requiredAcks = 1

agent1.sinks.kafkaSink.batchSize = 1

agent1.sinks.kafkaSink.serializer.class = kafka.serializer.StringEncoder
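Once all three hosts are configured, the agents can be started. agent1 on hadoop01 should come up first, because the avro sinks of agent2 and agent3 connect to its avro source on hadoop01:5555. A few prerequisites are assumed here rather than covered above: the HBase table weblogs with column family info should exist, the Kafka topic weblogs should exist (unless the brokers auto-create topics), and org.apache.flume.sink.hbase.KfkAsyncHbaseEventSerializer is a custom class rather than one shipped with Flume, so its jar presumably has to be placed in $FLUME_HOME/lib. The sketch below wraps the standard flume-ng agent invocation; start_agent is a hypothetical helper name, and the prerequisite commands in the comments assume an older Kafka whose kafka-topics.sh still accepts --zookeeper, with partition and replication counts picked only for illustration.

```shell
#!/usr/bin/env bash
# Start one Flume agent in the foreground, logging to the console.
# The agent name must match the property prefix used in
# flume-conf.properties (agent1, agent2 or agent3 in this setup).
start_agent() {
  local agent=$1
  local home=${FLUME_HOME:?FLUME_HOME is not set}
  "${home}/bin/flume-ng" agent \
    --conf "${home}/conf" \
    --conf-file "${home}/conf/flume-conf.properties" \
    --name "$agent" \
    -Dflume.root.logger=INFO,console
}

# Prerequisites (assumed, run once):
#   echo "create 'weblogs','info'" | hbase shell
#   kafka-topics.sh --create --zookeeper hadoop01:2181,hadoop02:2181,hadoop03:2181 \
#     --replication-factor 3 --partitions 3 --topic weblogs
#
# Start order:
#   on hadoop01: start_agent agent1   # avro source listens on hadoop01:5555
#   on hadoop02: start_agent agent2
#   on hadoop03: start_agent agent3
```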