Spark Streaming Source Code Analysis 2: How JobGenerator Generates and Executes Jobs

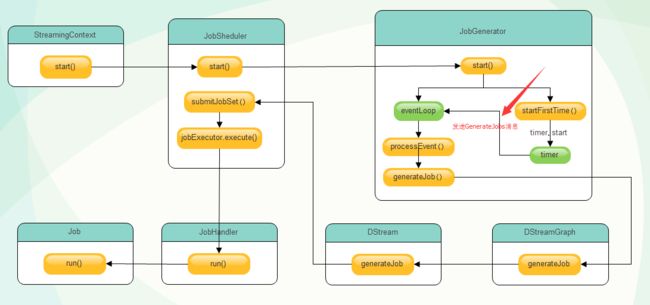

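In the previous part, StreamingContext.start() mainly calls scheduler.start() to start the JobScheduler, and scheduler.start() in turn creates and starts its two most important components: ReceiverTracker and JobGenerator. The previous article analyzed how ReceiverTracker produces and receives streaming data; this one looks at how JobGenerator works.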

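- JobGenerator: generates the Job for each batch and submits the generated jobs to the cluster for execution. It wraps the RDD operations derived from the DStreams: on a fixed schedule it calls DStreamGraph's generateJobs method to generate jobs, and it also cleans up DStream metadata. DStreamGraph holds every DStream object in the DStream graph and calls generateJob on each output DStream to produce the concrete Job objects. The resulting jobs are handed to JobScheduler for scheduling and execution.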
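The schedule is aligned to multiples of the batch interval. The sketch below models that alignment, assuming the start-time rule used by Spark's RecurringTimer (the first trigger is the next multiple of the period after the current clock time). `BatchTimes` and its methods are hypothetical helpers for illustration, not Spark API.

```scala
// Hypothetical helpers illustrating how batch times line up with the batch interval.
object BatchTimes {
  // First trigger strictly after nowMs, rounded up to a multiple of periodMs
  // (assumed to mirror the rule RecurringTimer uses for its start time)
  def firstTrigger(nowMs: Long, periodMs: Long): Long =
    (math.floor(nowMs.toDouble / periodMs) + 1).toLong * periodMs

  // The first n batch times a timer started at that trigger would fire at
  def nextBatches(nowMs: Long, periodMs: Long, n: Int): Seq[Long] = {
    val start = firstTrigger(nowMs, periodMs)
    (0 until n).map(i => start + i * periodMs)
  }

  // Zero time for the DStream graph: one interval before the first batch,
  // matching graph.start(startTime - graph.batchDuration) in startFirstTime()
  def zeroTime(nowMs: Long, periodMs: Long): Long =
    firstTrigger(nowMs, periodMs) - periodMs
}
```

For example, with a 1000 ms batch interval and a clock reading of 12345 ms, the batches fire at 13000, 14000, 15000 ms, and the graph's zero time is 12000 ms.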

Let's walk through the generation process step by step in the source code:

1. JobGenerator

JobGenerator's basic internal fields are defined as follows:

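```scala
// Event loop that handles job-generation messages
private var eventLoop: EventLoop[JobGeneratorEvent] = null

// Timer that posts a GenerateJobs message to eventLoop once per batch interval
private val timer = new RecurringTimer(
  clock, ssc.graph.batchDuration.milliseconds,
  longTime => eventLoop.post(GenerateJobs(new Time(longTime))), "JobGenerator")
```

JobGenerator.start() starts eventLoop and then calls startFirstTime() (or restart() when recovering from a checkpoint). Once started, eventLoop runs a thread that keeps receiving messages and acts on each one. startFirstTime() starts the DStreamGraph and the timer that periodically posts the job-generation messages: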

```scala
/** Start generation of jobs */
def start(): Unit = synchronized {
  // ......

  // eventLoop's onReceive callback hands every event to processEvent(event)
  eventLoop = new EventLoop[JobGeneratorEvent]("JobGenerator") {
    override protected def onReceive(event: JobGeneratorEvent): Unit = processEvent(event)

    override protected def onError(e: Throwable): Unit = {
      jobScheduler.reportError("Error in job generator", e)
    }
  }
  eventLoop.start()

  if (ssc.isCheckpointPresent) {
    restart()
  } else {
    startFirstTime()
  }
}

/** Starts the generator for the first time */
private def startFirstTime() {
  val startTime = new Time(timer.getStartTime())
  graph.start(startTime - graph.batchDuration)
  timer.start(startTime.milliseconds)
  logInfo("Started JobGenerator at " + startTime)
}
```

Once started, the timer posts a GenerateJobs(new Time(longTime)) message at every batch interval. When eventLoop receives it, it calls processEvent(), which calls generateJobs() to generate the jobs for that batch time:

```scala
// Generate the jobs for the given batch time
private def generateJobs(time: Time) {
  ssc.sparkContext.setLocalProperty(RDD.CHECKPOINT_ALL_MARKED_ANCESTORS, "true")
  Try {
    jobScheduler.receiverTracker.allocateBlocksToBatch(time) // allocate received blocks to batch
    graph.generateJobs(time) // generate jobs using allocated block
  } match {
    case Success(jobs) => // jobs generated successfully: submit them with jobScheduler.submitJobSet
      val streamIdToInputInfos = jobScheduler.inputInfoTracker.getInfo(time)
      jobScheduler.submitJobSet(JobSet(time, jobs, streamIdToInputInfos))
    case Failure(e) =>
      jobScheduler.reportError("Error generating jobs for time " + time, e)
      PythonDStream.stopStreamingContextIfPythonProcessIsDead(e)
  }
  // Afterwards, request a checkpoint for this batch
  eventLoop.post(DoCheckpoint(time, clearCheckpointDataLater = false))
}
```

The basic flow is:

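- receiverTracker assigns the received blocks to the current batch according to the batch time `time`

- DStreamGraph uses the allocated blocks to build the jobs of the current batch

- If the jobs are built successfully, jobScheduler.submitJobSet is called to submit them; otherwise the error is reported through jobScheduler.reportError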
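To make that control flow concrete, here is a small Spark-free model. Everything in it (`MiniGenerator`, `GenJob`, a single input stream, jobs as plain case classes) is hypothetical; it only mirrors the order of operations described above: allocate the pending blocks, build one job per output stream inside a `Try`, then submit or report the error, and request a checkpoint either way.

```scala
import scala.collection.mutable
import scala.util.{Failure, Success, Try}

// Hypothetical job record: batch time, output operation index, blocks it reads
final case class GenJob(time: Long, outputOpId: Int, blocks: List[String])

// Hypothetical, Spark-free model of JobGenerator.generateJobs(time) with one input stream
class MiniGenerator(numOutputStreams: Int, failAt: Set[Long]) {
  val received = mutable.Queue.empty[String]            // blocks not yet assigned to a batch
  val allocated = mutable.Map.empty[Long, List[String]] // batch time -> its blocks
  val submitted = mutable.ArrayBuffer.empty[(Long, Seq[GenJob])]
  val errors = mutable.ArrayBuffer.empty[String]
  val checkpointRequests = mutable.ArrayBuffer.empty[Long]

  // Step 1: hand every pending block to this batch (cf. allocateBlocksToBatch)
  private def allocateBlocksToBatch(time: Long): Unit = {
    allocated(time) = received.toList
    received.clear()
  }

  // Step 2: one job per output stream (cf. DStreamGraph.generateJobs)
  private def graphGenerateJobs(time: Long): Seq[GenJob] = {
    if (failAt.contains(time)) throw new IllegalStateException(s"cannot build jobs for $time")
    (0 until numOutputStreams).map(op => GenJob(time, op, allocated(time)))
  }

  def generateJobs(time: Long): Unit = {
    Try {
      allocateBlocksToBatch(time)
      graphGenerateJobs(time)
    } match {
      case Success(jobs) => submitted += ((time, jobs)) // Step 3: submit the JobSet
      case Failure(e)    => errors += s"Error generating jobs for time $time: ${e.getMessage}"
    }
    checkpointRequests += time // requested after every batch, success or failure
  }
}
```

Note that in a failed batch the blocks are still allocated first, because allocation happens inside the same `Try` before job construction.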

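1. First, receiverTracker.allocateBlocksToBatch() assigns the data this batch has to process: every block that has been received but not yet allocated is assigned to the batch at `time`. ReceiverTracker delegates the actual work to ReceivedBlockTracker.allocateBlocksToBatch(), which looks like this: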

```scala
def allocateBlocksToBatch(batchTime: Time): Unit = synchronized {
  if (lastAllocatedBatchTime == null || batchTime > lastAllocatedBatchTime) {
    val streamIdToBlocks = streamIds.map { streamId =>
      // This queue is where the block metadata ends up after ReceiverTracker
      // receives an AddBlock(blockInfo) message; take all of it for this batch
      (streamId, getReceivedBlockQueue(streamId).dequeueAll(x => true))
    }.toMap
    val allocatedBlocks = AllocatedBlocks(streamIdToBlocks)
    // Write the allocation to the write-ahead log (if enabled) before recording it
    if (writeToLog(BatchAllocationEvent(batchTime, allocatedBlocks))) {
      timeToAllocatedBlocks.put(batchTime, allocatedBlocks)
      lastAllocatedBatchTime = batchTime
    } else {
      logInfo(s"Possibly processed batch $batchTime needs to be processed again in WAL recovery")
    }
  } else {
    // ......
  }
}
```

2. Next, DStreamGraph.generateJobs() builds the jobs from the dependencies between the DStreams we defined and their operators:

```scala
def generateJobs(time: Time): Seq[Job] = {
  logDebug("Generating jobs for time " + time)
  val jobs = this.synchronized {
    // Generate (at most) one job per output stream
    outputStreams.flatMap { outputStream =>
      val jobOption = outputStream.generateJob(time)
      jobOption.foreach(_.setCallSite(outputStream.creationSite))
      jobOption
    }
  }
  logDebug("Generated " + jobs.length + " jobs for time " + time)
  jobs
}
```

This iterates over outputStreams to generate jobs. outputStreams holds the DStreams created by the output operations we called. In the socket WordCount example from the beginning, print() adds a ForEachDStream to outputStreams, so ForEachDStream.generateJob() is what gets called here:

```scala
// ForEachDStream#generateJob
override def generateJob(time: Time): Option[Job] = {
  parent.getOrCompute(time) match {
    case Some(rdd) =>
      val jobFunc = () => createRDDWithLocalProperties(time, displayInnerRDDOps) {
        foreachFunc(rdd, time)
      }
      Some(new Job(time, jobFunc))
    case None => None
  }
}
```

generateJob() calls parent.getOrCompute() to produce the RDD. If that succeeds, it builds a Job from the RDD and our processing function and returns it. Here parent is a reference to the upstream DStream this one depends on. The example is:

```scala
lines.flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _).print()
```

In this example, parent is the ShuffledDStream produced by reduceByKey; the ShuffledDStream's parent is the MappedDStream produced by map; the MappedDStream's parent is the FlatMappedDStream produced by flatMap; and the FlatMappedDStream's parent is the SocketInputDStream. Now let's look at parent.getOrCompute(), where parent is currently the ShuffledDStream:

```scala
private[streaming] final def getOrCompute(time: Time): Option[RDD[T]] = {
  // generatedRDDs: the RDDs generated so far, a HashMap from batch time to RDD
  generatedRDDs.get(time).orElse {
    if (isTimeValid(time)) {
      val rddOption = createRDDWithLocalProperties(time, displayInnerRDDOps = false) {
        // Call compute() to generate the RDD; almost every DStream subclass implements it
        SparkHadoopWriterUtils.disableOutputSpecValidation.withValue(true) {
          compute(time)
        }
      }
      // Persist or checkpoint the new RDD as configured, then add it to generatedRDDs
      rddOption.foreach { case newRDD =>
        if (storageLevel != StorageLevel.NONE) {
          newRDD.persist(storageLevel)
        }
        if (checkpointDuration != null && (time - zeroTime).isMultipleOf(checkpointDuration)) {
          newRDD.checkpoint()
        }
        generatedRDDs.put(time, newRDD)
      }
      rddOption
    } else {
      None
    }
  }
}
```

Each DStream keeps a generatedRDDs map of the RDDs it has already generated. getOrCompute() first looks up the current batch's RDD there and calls compute() only if it is missing (and the time is valid). In the example, the call lands in ShuffledDStream's compute method:

```scala
override def compute(validTime: Time): Option[RDD[(K, C)]] = {
  parent.getOrCompute(validTime) match {
    case Some(rdd) => Some(rdd.combineByKey[C](
      createCombiner, mergeValue, mergeCombiner, partitioner, mapSideCombine))
    case None => None
  }
}
```

ShuffledDStream.compute calls parent.getOrCompute again. Following the dependencies, getOrCompute and compute call each other recursively until they reach the first DStream in the chain. In the example that is SocketInputDStream, whose compute method is the one inherited from its parent class ReceiverInputDStream:

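```scala
override def compute(validTime: Time): Option[RDD[T]] = {
  val blockRDD = {
    if (validTime < graph.startTime) {
      // Batch time earlier than the context's start time: return an empty RDD
      new BlockRDD[T](ssc.sc, Array.empty)
    } else {
      // Get the blocks allocated to this stream for the current batch
      val receiverTracker = ssc.scheduler.receiverTracker
      val blockInfos = receiverTracker.getBlocksOfBatch(validTime).getOrElse(id, Seq.empty)
      val inputInfo = StreamInputInfo(id, blockInfos.flatMap(_.numRecords).sum)
      ssc.scheduler.inputInfoTracker.reportInfo(validTime, inputInfo)
      // Create the RDD from the batch time and block info, and return it
      createBlockRDD(validTime, blockInfos)
    }
  }
  Some(blockRDD)
}
```

Once the recursive getOrCompute/compute calls along the dependency chain have produced the final RDD, the generated RDD and the user's DStream processing function are wrapped in jobFunc, jobFunc is passed into a Job object, and that Job is returned (through DStreamGraph.generateJobs()) to JobGenerator.generateJobs(). This completes job generation.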
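The recursion can be modeled without Spark. In this sketch all class names are hypothetical; a `Vector` stands in for an RDD and is computed eagerly, unlike a real lazy RDD, and persistence, checkpointing and the `isTimeValid` check are left out. It shows the two properties discussed above: each node memoizes its per-batch result in `generatedRDDs`, and the output node only wraps the result in a function that runs later.

```scala
import scala.collection.mutable

// Hypothetical model of a DStream node: a Vector stands in for an RDD (eager here).
abstract class MiniDStream[T] {
  // Like DStream.generatedRDDs: batch time -> result already produced
  val generatedRDDs = mutable.HashMap.empty[Long, Vector[T]]
  var computeCalls = 0

  protected def compute(time: Long): Option[Vector[T]]

  // Like DStream.getOrCompute: reuse the cached result, else compute and cache it
  final def getOrCompute(time: Long): Option[Vector[T]] =
    generatedRDDs.get(time).orElse {
      computeCalls += 1
      val result = compute(time)
      result.foreach(rdd => generatedRDDs.put(time, rdd))
      result
    }
}

// Source node, standing in for ReceiverInputDStream: the data allocated to each batch
class MiniInput(batches: Map[Long, Vector[String]]) extends MiniDStream[String] {
  protected def compute(time: Long): Option[Vector[String]] =
    Some(batches.getOrElse(time, Vector.empty))
}

class MiniFlatMapped[A, B](parent: MiniDStream[A], f: A => Seq[B]) extends MiniDStream[B] {
  protected def compute(time: Long): Option[Vector[B]] =
    parent.getOrCompute(time).map(_.flatMap(f))
}

class MiniMapped[A, B](parent: MiniDStream[A], f: A => B) extends MiniDStream[B] {
  protected def compute(time: Long): Option[Vector[B]] =
    parent.getOrCompute(time).map(_.map(f))
}

// Stands in for the ShuffledDStream created by reduceByKey
class MiniReduced[K, V](parent: MiniDStream[(K, V)], op: (V, V) => V)
    extends MiniDStream[(K, V)] {
  protected def compute(time: Long): Option[Vector[(K, V)]] =
    parent.getOrCompute(time).map { rdd =>
      rdd.groupBy(_._1).map { case (k, kvs) => (k, kvs.map(_._2).reduce(op)) }.toVector
    }
}

// Output node, like ForEachDStream.generateJob: wrap the result in a job function
class MiniForEach[T](parent: MiniDStream[T], foreachFunc: (Vector[T], Long) => Unit) {
  def generateJob(time: Long): Option[() => Unit] =
    parent.getOrCompute(time).map(rdd => () => foreachFunc(rdd, time))
}
```

Wiring `MiniInput -> MiniFlatMapped -> MiniMapped -> MiniReduced -> MiniForEach` reproduces the shape of the WordCount chain: asking the output node for a job triggers one compute per node, and a second request for the same batch time is served from `generatedRDDs`.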

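3. Once the jobs are generated successfully, jobScheduler.submitJobSet(JobSet(time, jobs, streamIdToInputInfos)) is called to submit the jobs of this batch. Next comes the submission process: JobScheduler is responsible for submitting jobs, and the core code is in its submitJobSet method: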

```scala
def submitJobSet(jobSet: JobSet) {
  if (jobSet.jobs.isEmpty) {
    logInfo("No jobs added for time " + jobSet.time)
  } else {
    listenerBus.post(StreamingListenerBatchSubmitted(jobSet.toBatchInfo))
    jobSets.put(jobSet.time, jobSet)
    jobSet.jobs.foreach(job => jobExecutor.execute(new JobHandler(job)))
    logInfo("Added jobs for time " + jobSet.time)
  }
}
```

Each job is wrapped in a JobHandler, and the JobHandler is handed to jobExecutor for execution. jobExecutor is a thread pool; the points worth noting are:

```scala
private val numConcurrentJobs = ssc.conf.getInt("spark.streaming.concurrentJobs", 1)
private val jobExecutor =
  ThreadUtils.newDaemonFixedThreadPool(numConcurrentJobs, "streaming-job-executor")
```

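- This pool still runs on the driver. When a Spark Streaming application builds up a backlog, the backlog consists of JobHandlers the pool has not yet got to; they wait in the pool's internal queue.

- spark.streaming.concurrentJobs sets both the corePoolSize and the maximumPoolSize of the pool (default 1), so setting it above 1 makes the pool run several JobHandlers on multiple threads at once.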
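The backlog behavior can be reproduced with a plain `ThreadPoolExecutor`. This sketch assumes the pool is a fixed-size pool backed by an unbounded `LinkedBlockingQueue`, which is what `java.util.concurrent.Executors.newFixedThreadPool` builds; Spark's `ThreadUtils.newDaemonFixedThreadPool` additionally makes its threads daemons, which is omitted here. `BacklogDemo` is a hypothetical name.

```scala
import java.util.concurrent.{CountDownLatch, LinkedBlockingQueue, ThreadPoolExecutor, TimeUnit}

object BacklogDemo {
  // Same shape as Executors.newFixedThreadPool: core == max, unbounded queue
  def makePool(concurrentJobs: Int): ThreadPoolExecutor =
    new ThreadPoolExecutor(concurrentJobs, concurrentJobs, 0L, TimeUnit.MILLISECONDS,
      new LinkedBlockingQueue[Runnable]())

  // Submit `batches` slow jobs that block until released, and return how many
  // are waiting in the queue once every worker thread is busy.
  def queuedWhileBlocked(concurrentJobs: Int, batches: Int): Int = {
    require(batches >= concurrentJobs, "need at least one job per worker")
    val pool = makePool(concurrentJobs)
    val release = new CountDownLatch(1)
    val started = new CountDownLatch(concurrentJobs)
    (1 to batches).foreach { _ =>
      pool.execute(new Runnable {
        def run(): Unit = { started.countDown(); release.await() }
      })
    }
    started.await()                     // every worker is now stuck on a "slow batch"
    val queued = pool.getQueue().size() // the backlog
    release.countDown()
    pool.shutdown()
    pool.awaitTermination(10, TimeUnit.SECONDS)
    queued
  }
}
```

With one worker and five slow batches, four JobHandler-like tasks sit in the queue; with two workers, three do. Nothing rejects new submissions, so the queue simply keeps growing while processing is slower than the batch interval.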

Finally, jobExecutor runs the JobHandler's run() method on one of its threads:

```scala
private class JobHandler(job: Job) extends Runnable with Logging {
  import JobScheduler._

  def run() {
    val oldProps = ssc.sparkContext.getLocalProperties
    try {
      ssc.sparkContext.setLocalProperties(SerializationUtils.clone(ssc.savedProperties.get()))
      val formattedTime = UIUtils.formatBatchTime(
        job.time.milliseconds, ssc.graph.batchDuration.milliseconds, showYYYYMMSS = false)
      val batchUrl = s"/streaming/batch/?id=${job.time.milliseconds}"
      val batchLinkText = s"[output operation ${job.outputOpId}, batch time ${formattedTime}]"

      ssc.sc.setJobDescription(
        s"""Streaming job from <a href="$batchUrl">$batchLinkText</a>""")
      ssc.sc.setLocalProperty(BATCH_TIME_PROPERTY_KEY, job.time.milliseconds.toString)
      ssc.sc.setLocalProperty(OUTPUT_OP_ID_PROPERTY_KEY, job.outputOpId.toString)
      // Checkpoint all RDDs marked for checkpointing to ensure their lineages are
      // truncated periodically. Otherwise, we may run into stack overflows (SPARK-6847).
      ssc.sparkContext.setLocalProperty(RDD.CHECKPOINT_ALL_MARKED_ANCESTORS, "true")

      // We need to assign `eventLoop` to a temp variable. Otherwise, because
      // `JobScheduler.stop(false)` may set `eventLoop` to null when this method is running, then
      // it's possible that when `post` is called, `eventLoop` happens to be null.
      var _eventLoop = eventLoop
      if (_eventLoop != null) {
        _eventLoop.post(JobStarted(job, clock.getTimeMillis()))
        // Disable checks for existing output directories in jobs launched by the streaming
        // scheduler, since we may need to write output to an existing directory during checkpoint
        // recovery; see SPARK-4835 for more details.
        PairRDDFunctions.disableOutputSpecValidation.withValue(true) {
          job.run()
        }
        _eventLoop = eventLoop
        if (_eventLoop != null) {
          _eventLoop.post(JobCompleted(job, clock.getTimeMillis()))
        }
      } else {
        // JobScheduler has been stopped.
      }
    } finally {
      ssc.sparkContext.setLocalProperties(oldProps)
    }
  }
}
```

The basic flow of JobHandler.run() is:

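- Wrap the job in a JobStarted event (a JobSchedulerEvent) and put it into the eventQueue, where JobScheduler's eventLoop will process it

- Run the job: job.run()

- Wrap the job in a JobCompleted event (a JobSchedulerEvent) and put it into the eventQueue, where JobScheduler's eventLoop will process it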
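A minimal sketch of that shape, assuming a `ThreadLocal` as a stand-in for SparkContext's local properties and a string queue as a stand-in for JobScheduler's event queue. `LocalProps` and `SketchJobHandler` are hypothetical, and `body` is assumed not to throw.

```scala
import java.util.concurrent.LinkedBlockingQueue

// Hypothetical stand-in for SparkContext local properties (per-thread state)
object LocalProps {
  val batchTime = new ThreadLocal[String]()
}

// Hypothetical model of JobHandler.run(): tag the thread, post JobStarted,
// run the job, post JobCompleted, and always restore the previous value.
class SketchJobHandler(time: Long, body: () => Unit,
                       events: LinkedBlockingQueue[String]) extends Runnable {
  def run(): Unit = {
    val old = LocalProps.batchTime.get()      // like getLocalProperties
    try {
      LocalProps.batchTime.set(time.toString) // tag this thread with the batch time
      events.put(s"JobStarted($time)")
      body()                                  // like job.run()
      events.put(s"JobCompleted($time)")
    } finally {
      LocalProps.batchTime.set(old)           // like setLocalProperties(oldProps)
    }
  }
}
```

Restoring the old value in `finally` matters because pool threads are reused: without it, the next JobHandler on the same thread would inherit the previous batch's properties.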

job.run(), which is what finally executes, looks like this:

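```scala
def run() {
  _result = Try(func())
}
```

func() is the function supplied when the Job was created. In this example it is the jobFunc built in ForEachDStream, which calls foreachFunc(rdd, time), i.e. the processing logic we passed in through wordCounts.print(). Inside print(), SparkContext.runJob() is eventually called, which enters Spark's ordinary job execution path. This is where Spark Streaming and core Spark come together again.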
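Because `run()` wraps `func()` in a `Try`, an exception thrown by the output operation is stored rather than propagated to the JobHandler thread, so JobHandler still goes on to post JobCompleted. A hypothetical `TryJob` that reproduces just that behavior:

```scala
import scala.util.Try

// Hypothetical model of a streaming Job: run() never throws; the outcome of
// func() is kept as a Try for the scheduler to inspect afterwards.
class TryJob[T](val time: Long, func: () => T) {
  private var _result: Try[T] = null
  def run(): Unit = { _result = Try(func()) }
  def result: Try[T] = _result
}
```

A failing output operation therefore surfaces as a `Failure` in `result`, to be examined after the job completes, rather than as an exception on the pool thread.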

Finally, here is a summary of JobGenerator's overall call and execution flow:

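- Start JobScheduler, which starts an EventLoop to handle JobSchedulerEvent events

- Start JobGenerator, which starts an EventLoop to handle JobGeneratorEvent events

- Start the RecurringTimer, which periodically adds a GenerateJobs event (a JobGeneratorEvent) to the eventQueue

- A separate thread keeps taking GenerateJobs events from the eventQueue and has JobGenerator's EventLoop handle them

- Generate jobs using DStreamGraph and the processing chain built earlier

- Wrap each Job in a JobHandler and submit it to a thread pool (on the driver), where it waits in line to be scheduled

- In JobHandler.run(), wrap a JobStarted event (a JobSchedulerEvent) and add it to the eventQueue to mark the job as started

- In JobHandler.run(), execute job.run(), which calls the jobFunc supplied when the Job was constructed and in turn calls SparkContext.runJob to submit the work (this is where it meets core Spark)

- In JobHandler.run(), wrap a JobCompleted event (a JobSchedulerEvent) and add it to the eventQueue to mark the job as completed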
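The pipeline above can be shrunk into a deterministic toy: a single event-loop thread consumes generation events and submits one task per output operation to a fixed pool. Assumptions: `tick()` replaces RecurringTimer, so batch times are posted by hand; jobs just record a string; `MiniStreaming`, `MiniGenerateJobs` and `MiniStop` are hypothetical names, not Spark API.

```scala
import java.util.concurrent.{Executors, LinkedBlockingQueue, TimeUnit}
import scala.collection.mutable.ArrayBuffer

// Hypothetical events for the toy generator (not Spark's classes)
sealed trait MiniEvent
final case class MiniGenerateJobs(time: Long) extends MiniEvent
case object MiniStop extends MiniEvent

// Toy pipeline: tick -> event queue -> event-loop thread -> jobs -> fixed pool
class MiniStreaming(concurrentJobs: Int, outputOps: Int) {
  private val eventQueue = new LinkedBlockingQueue[MiniEvent]()
  private val jobExecutor = Executors.newFixedThreadPool(concurrentJobs)
  private val completed = ArrayBuffer.empty[String]

  // Single consumer thread, like the thread an EventLoop starts
  private val loopThread = new Thread(new Runnable {
    def run(): Unit = {
      var running = true
      while (running) {
        eventQueue.take() match {
          case MiniGenerateJobs(t) => generateJobs(t)
          case MiniStop            => running = false
        }
      }
    }
  }, "mini-job-generator")

  // One job per output operation, queued on the pool like a JobHandler
  private def generateJobs(time: Long): Unit =
    (0 until outputOps).foreach { op =>
      jobExecutor.execute(new Runnable {
        def run(): Unit = completed.synchronized { completed += s"batch $time, op $op" }
      })
    }

  def start(): Unit = loopThread.start()

  // Stands in for RecurringTimer firing at batch time `time`
  def tick(time: Long): Unit = eventQueue.put(MiniGenerateJobs(time))

  // Process remaining events, then let queued jobs finish
  def stop(): Unit = {
    eventQueue.put(MiniStop)
    loopThread.join()
    jobExecutor.shutdown()
    jobExecutor.awaitTermination(10, TimeUnit.SECONDS)
  }

  def completedJobs: List[String] = completed.synchronized { completed.toList }
}
```

With `concurrentJobs = 1` the jobs finish strictly in submission order; with a larger value, jobs from different batches can overlap on different threads.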

2. Summary

To wrap up, here is how Spark Streaming's Receiver data-receiving flow and JobGenerator job-generation flow run and connect as a whole:

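- Start the streaming engine
  - Create and start the StreamingContext, which holds the DStreamGraph and JobScheduler instances.
  - DStreamGraph holds the defined DStreams and manages their dependencies.
  - JobScheduler generates and schedules jobs; it holds the ReceiverTracker and JobGenerator instances.
  - ReceiverTracker is the driver-side manager of receivers: it starts ReceiverSupervisors on the executors and communicates with them, and each ReceiverSupervisor starts a Receiver to receive data.
  - JobGenerator generates the jobs.

- Receive and store data
  - Once started on an executor, the Receiver keeps receiving data and calls its store() method to store it.
  - store() eventually calls ReceiverSupervisorImpl.pushAndReportBlock() to store the data and report it to the driver-side ReceiverTrackerEndpoint.
  - An important class here is BlockGenerator. It buffers individual records in an ArrayBuffer, and its timer fires once per block interval (spark.streaming.blockInterval, 200 ms by default, not the batch interval), wrapping the buffered data into a Block and putting it on an ArrayBlockingQueue. BlockGenerator also starts a thread that takes Blocks from the ArrayBlockingQueue and calls ReceiverSupervisorImpl.pushAndReportBlock() to store them and report them to the driver.

- Process data, i.e. generate and run jobs
  - JobGenerator maintains a timer; each time a batch interval elapses, it posts a GenerateJobs message, which leads to generateJobs generating and running the jobs.
  - generateJobs has ReceiverTracker allocate this batch's data, then has DStreamGraph generate jobs from the DStream dependencies; if generation succeeds, submitJobSet submits the jobs, which are then executed.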
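BlockGenerator's buffering can be sketched single-threaded. Assumptions: the block-interval timer and the pushing thread are replaced by explicit calls to `onBlockInterval` and `pushOne`; `MiniBlockGenerator` and `Block` are hypothetical; "storing" a block just appends it to a list instead of calling pushAndReportBlock().

```scala
import java.util.concurrent.ArrayBlockingQueue
import scala.collection.mutable.ArrayBuffer

// Hypothetical block: the records cut from the buffer at one block-interval tick
final case class Block(timeMs: Long, records: List[String])

class MiniBlockGenerator(queueCapacity: Int) {
  private var currentBuffer = ArrayBuffer.empty[String]
  private val blocksForPushing = new ArrayBlockingQueue[Block](queueCapacity)
  // What pushAndReportBlock would store and report to the driver
  val stored = ArrayBuffer.empty[Block]

  // Receiver.store(record) ends up appending to the current buffer
  def addData(record: String): Unit = synchronized { currentBuffer += record }

  // Timer callback, fired once per block interval (not per batch interval)
  def onBlockInterval(timeMs: Long): Unit = {
    val full = synchronized {
      val b = currentBuffer
      currentBuffer = ArrayBuffer.empty[String]
      b
    }
    // put() blocks when the queue is full, so a slow pusher holds up the timer
    if (full.nonEmpty) blocksForPushing.put(Block(timeMs, full.toList))
  }

  // One iteration of the pushing thread: take a block, then "store and report" it
  def pushOne(): Boolean = Option(blocksForPushing.poll()) match {
    case Some(block) => stored += block; true
    case None        => false
  }
}
```

Swapping in a fresh buffer under the lock keeps the critical section short: the receiver can keep appending records while the previous buffer is turned into a block.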