MySQL Database: Building MySQL MHA for Database High Availability (MySQL MHA Overview, Building MySQL MHA, MySQL MHA Failover)

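Contents

- I. MySQL MHA Overview

- 1.1 What Is MySQL MHA

- 1.2 Advantages of MHA

- 1.3 Components of MHA

- II. Building the MHA Environment

- Lab Objective

- Lab Approach

- Lab Parameters

- Packages Required for the Lab

- Lab Procedure

- 1. Install the MySQL Database

- 2. Configure MySQL with One Master and Two Slaves

- 3. Install the MHA Software

- 4. Configure Passwordless Authentication

- 5. Configure MySQL MHA High Availability

- 6. Simulate a Master Failover

- 7. When the Failed Master Rejoins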

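I. MySQL MHA Overview

MySQL has become one of the mainstream databases on the market. In the master-slave replication and read/write splitting setups covered earlier, having only one master server is a single point of failure and the biggest hidden risk in an enterprise site architecture. MHA was created to solve this MySQL single point of failure, while multi-master, multi-slave MySQL architectures can relieve the load on MySQL itself.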

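1.1 What Is MySQL MHA

MHA is currently a relatively mature solution for MySQL high availability. It was developed by Yoshinori Matsunobu at DeNA in Japan (now at Facebook) and is a solid piece of software for failover and master promotion in MySQL high-availability environments. During a MySQL failure, MHA can complete the database failover automatically within 0 to 30 seconds, and while doing so it preserves data consistency as far as possible, which is what makes the setup highly available in practice.

MHA also supports online master switching: it can safely move the currently running master to a new master (by promoting a slave), which takes roughly 0.5 to 2 seconds.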

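1.2 Advantages of MHA

1) Master failover and slave promotion can be done very quickly

Automatic failover is fast.

2) Master crash does not result in data inconsistency

A master crash does not cause data consistency problems.

3) No need to modify current MySQL settings (MHA works with regular MySQL)

No major changes to the existing MySQL environment are required.

4) No need to increase lots of servers

No extra servers are needed (a single manager can manage hundreds of replication groups).

5) No performance penalty

Performance is good: MHA works with both semi-synchronous and asynchronous replication. To monitor MySQL it only sends a ping packet to the master every N seconds (3 by default), so there is no performance impact. MHA's performance can be regarded as the same as that of a plain master-slave replication setup.

6) Works with any storage engine

MHA supports any storage engine that replication supports; it is not limited to InnoDB.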

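1.3 Components of MHA

The software has two parts: MHA Manager (the management node) and MHA Node (the data node). MHA Manager can be deployed on a dedicated machine to manage multiple master-slave clusters, or it can be deployed on one of the slave nodes. MHA Node runs on every MySQL server. MHA Manager periodically probes the master node of the cluster. When the master fails, it automatically promotes the slave with the most recent data to be the new master and then repoints all other slaves to the new master. The whole failover process is transparent to applications.

At present MHA mainly supports a one-master, multi-slave architecture. Building MHA requires at least three database servers in a replication cluster: one master, one standby master, and one slave. The lab below uses four machines (three database servers plus one manager) to implement the MHA architecture.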

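II. Building the MHA Environment

Lab Objective

Use MHA to monitor MySQL and switch over automatically when the master server fails, without affecting the business.

Lab Approach

- Install the MySQL database;

- Configure MySQL with one master and two slaves;

- Install the MHA software;

- Configure passwordless authentication;

- Configure MySQL MHA high availability;

- Simulate a master failover.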

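Lab Parameters

| Host | Role | Installed packages |
|---|---|---|
| 14.0.0.7 | Manager node (manager) | manager component, node component |
| 14.0.0.17 | MySQL master database (master) | node component |
| 14.0.0.27 | MySQL standby master / slave database (slave1) | node component |
| 14.0.0.37 | MySQL slave database (slave2) | node component |

The operating system here is CentOS 7, so MHA version 0.57 is used.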

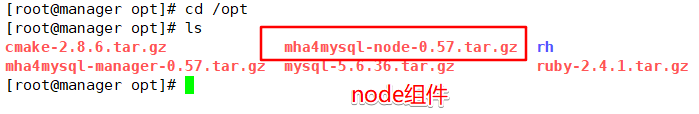

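Packages Required for the Lab

The packages listed here apply only to the versions used in this lab; other versions may have compatibility problems that cause the lab to fail.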

mysql-5.6.36.tar.gz

cmake-2.8.6.tar.gz

mha4mysql-manager-0.57.tar.gz

mha4mysql-node-0.57.tar.gz

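Lab Procedure

1. Install the MySQL Database

This lab installs MySQL 5.6.36 and cmake 2.8.6. MySQL is installed only on the master and slave servers; the manager node used for monitoring does not need MySQL. The installation steps below use the master server as the example.

Compile and install cmake manually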

[root@localhost ~]# systemctl stop firewalld.service

[root@localhost ~]# setenforce 0

[root@localhost opt]# hostnamectl set-hostname master

[root@localhost opt]# su

[root@localhost ~]# cd /opt

Copy the packages into the current directory

[root@localhost opt]# ls

cmake-2.8.6.tar.gz mha4mysql-node-0.57.tar.gz rh ruby安装.png

mha4mysql-manager-0.57.tar.gz mysql-5.6.36.tar.gz ruby-2.4.1.tar.gz

[root@master opt]# yum -y install ncurses-devel gcc-c++ perl-

[root@master opt]# tar xzvf cmake-2.8.6.tar.gz

[root@master opt]# cd cmake-2.8.6/

[root@master cmake-2.8.6]# ./configure

[root@master cmake-2.8.6]# gmake && gmake install ##compile and install

Install MySQL manually

[root@master cmake-2.8.6]# cd ..

[root@master opt]# tar xzvf mysql-5.6.36.tar.gz

[root@master opt]# cd mysql-5.6.36/

[root@master mysql-5.6.36]# cmake \

-DCMAKE_INSTALL_PREFIX=/usr/local/mysql \

-DDEFAULT_CHARSET=utf8 \

-DDEFAULT_COLLATION=utf8_general_ci \

-DWITH_EXTRA_CHARSETS=all \

-DSYSCONFDIR=/etc

[root@master mysql-5.6.36]# make && make install

Tune the configuration and initialize the database

[root@slave1 mysql-5.6.36]# cp support-files/my-default.cnf /etc/my.cnf

cp: overwrite "/etc/my.cnf"? yes

[root@slave1 mysql-5.6.36]# cp support-files/mysql.server /etc/rc.d/init.d/mysqld

[root@slave1 mysql-5.6.36]# chmod +x /etc/rc.d/init.d/mysqld

[root@slave1 mysql-5.6.36]# chkconfig --add mysqld

[root@slave1 mysql-5.6.36]# echo "PATH=$PATH:/usr/local/mysql/bin">>/etc/profile

[root@slave1 mysql-5.6.36]# source /etc/profile

[root@slave1 mysql-5.6.36]# groupadd mysql

[root@slave1 mysql-5.6.36]# useradd -M -s /sbin/nologin mysql -g mysql

[root@slave1 mysql-5.6.36]# chown -R mysql:mysql /usr/local/mysql/

[root@slave1 mysql-5.6.36]# mkdir -p /data/mysql

[root@slave1 mysql-5.6.36]# /usr/local/mysql/scripts/mysql_install_db \

--user=mysql \

--basedir=/usr/local/mysql/ \

--datadir=/usr/local/mysql/data

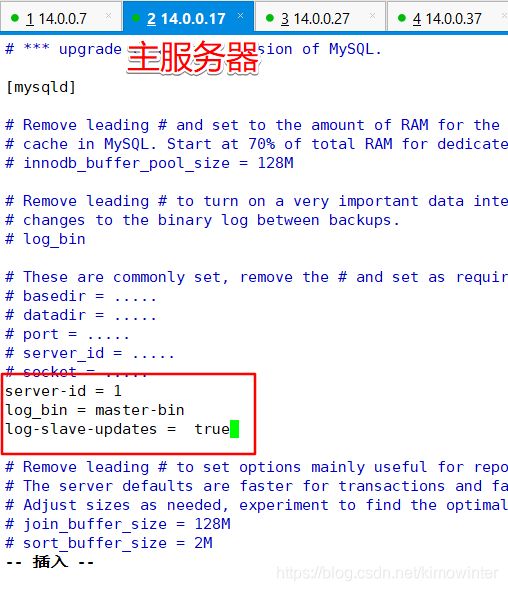

2. Configure MySQL with One Master and Two Slaves

Edit the main configuration file on each of the three servers

master:

vim /etc/my.cnf

server-id = 1

log_bin = master-bin

log-slave-updates = true

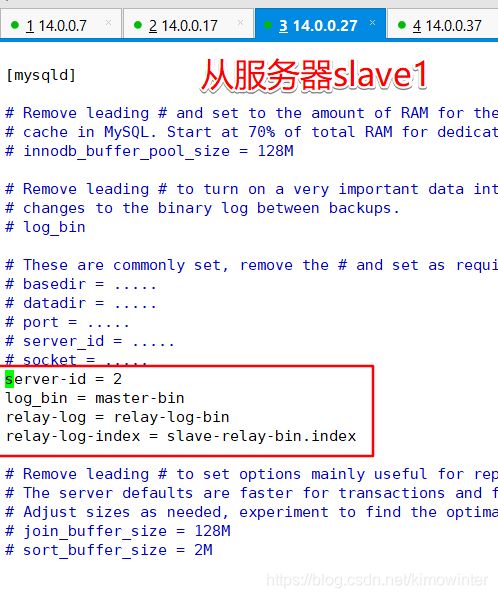

slave1:

vim /etc/my.cnf

server-id = 2

log_bin = master-bin

relay-log = relay-log-bin

relay-log-index = slave-relay-bin.index

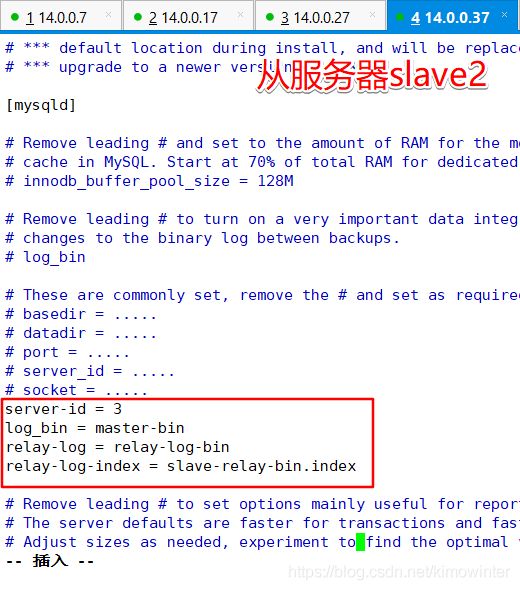

slave2:

vim /etc/my.cnf

server-id = 3

log_bin = master-bin

relay-log = relay-log-bin

relay-log-index = slave-relay-bin.index

[root@slave2 mysql-5.6.36]# ln -s /usr/local/mysql/bin/mysql /usr/sbin/

[root@slave2 mysql-5.6.36]# ln -s /usr/local/mysql/bin/mysqlbinlog /usr/sbin/

Start MySQL on all three servers

[root@master mysql-5.6.36]# /usr/local/mysql/bin/mysqld_safe --user=mysql &

[1] 37383

[root@master mysql-5.6.36]# jobs

[1]+ Running /usr/local/mysql/bin/mysqld_safe --user=mysql &

[root@master mysql-5.6.36]# netstat -antp | grep 3306

tcp6 0 0 :::3306 :::* LISTEN 37510/mysqld

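On all database nodes, grant privileges to two users: one used by the slaves for replication, and one used by the manager for monitoring.

[root@master mysql-5.6.36]# mysql -uroot -p ##log in to the database

Enter password: ##the password is empty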

mysql> grant replication slave on *.* to 'my slave'@'14.0.0.%' identified by '123';

Query OK, 0 rows affected (0.01 sec)

mysql> grant all privileges on *.* to 'mha'@'14.0.0.%' identified by 'manager';

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

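In theory the following three grants should not be needed, but in this lab the MHA replication check reported that the two slaves could not connect to the master by hostname, so the grants below were added on all three databases.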

mysql> grant all privileges on *.* to 'mha'@'master' identified by 'manager';

mysql> grant all privileges on *.* to 'mha'@'slave1' identified by 'manager';

mysql> grant all privileges on *.* to 'mha'@'slave2' identified by 'manager';

mysql> flush privileges;

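On the master, check the binary log position. Leave the master idle and do not run any database operations, so that the position does not move and cause replication setup to fail.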

mysql> show master status;

+-------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-------------------+----------+--------------+------------------+-------------------+

| master-bin.000001 | 1285 | | | |

+-------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

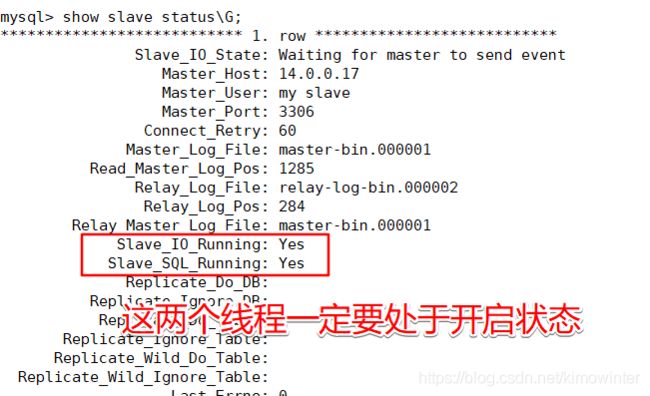

Run the synchronization steps on both slave servers

mysql> change master to master_host='14.0.0.17',master_user='my slave',master_password='123',master_log_file='master-bin.000001',master_log_pos=1285;

Query OK, 0 rows affected, 2 warnings (0.02 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> start slave; ##start slave replication

Query OK, 0 rows affected (0.01 sec)

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 14.0.0.17

Master_User: my slave

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: master-bin.000001

Read_Master_Log_Pos: 1285

Relay_Log_File: relay-log-bin.000002

Relay_Log_Pos: 284

Relay_Master_Log_File: master-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

mysql> set global read_only=1;

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.01 sec)

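Verify replication: create a database on the master, then check on the slaves that it has been replicated. Here replication started successfully.

Master server (14.0.0.17)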

mysql> create database home;

Query OK, 1 row affected (0.00 sec)
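The replication check above relies on reading `Slave_IO_Running` and `Slave_SQL_Running` by eye. A minimal sketch of the same check as a shell function follows; it assumes the `SHOW SLAVE STATUS\G` text is piped in on stdin, and the function name `replication_ok` is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if both replication threads report "Yes".
# Input: the text output of `SHOW SLAVE STATUS\G` on stdin.
# replication_ok is a hypothetical helper name, not part of MySQL or MHA.
replication_ok() {
    local status io sql
    status=$(cat)
    # The trailing colon in each pattern keeps Slave_SQL_Running_State from matching.
    io=$(printf '%s\n' "$status" | awk -F': ' '/Slave_IO_Running:/ {print $2}')
    sql=$(printf '%s\n' "$status" | awk -F': ' '/Slave_SQL_Running:/ {print $2}')
    [ "$io" = "Yes" ] && [ "$sql" = "Yes" ]
}
```

On a slave it could be used as `mysql -uroot -p -e 'SHOW SLAVE STATUS\G' | replication_ok && echo "replication is running"`.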

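3. Install the MHA Software

Install the environment MHA depends on on all servers: first the EPEL repository, then the Perl environment, because MHA is written in Perl.

[root@manager ~]# yum install epel-release --nogpgcheck -y

Install the Perl environment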

[root@manager ~]# yum install -y perl-DBD-MySQL \

perl-Config-Tiny \

perl-Log-Dispatch \

perl-Parallel-ForkManager \

perl-ExtUtils-CBuilder \

perl-ExtUtils-MakeMaker \

perl-CPAN

The node component must be installed on all servers first; the manager component is installed last, on the MHA manager node only, because manager depends on node.

[root@manager opt]# cd /opt

[root@manager opt]# ls

cmake-2.8.6.tar.gz mha4mysql-node-0.57.tar.gz rh ruby安装.png

mha4mysql-manager-0.57.tar.gz mysql-5.6.36.tar.gz ruby-2.4.1.tar.gz

[root@manager opt]# tar zxvf mha4mysql-node-0.57.tar.gz

[root@manager opt]# cd mha4mysql-node-0.57/

[root@manager mha4mysql-node-0.57]# yum install perl-Module-Install -y

[root@manager mha4mysql-node-0.57]# perl Makefile.PL ##run the Perl build script

*** Module::AutoInstall version 1.06

*** Checking for Perl dependencies...

[Core Features]

- DBI ...loaded. (1.627)

- DBD::mysql ...loaded. (4.023)

*** Module::AutoInstall configuration finished.

Writing Makefile for mha4mysql::node

Writing MYMETA.yml and MYMETA.json

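[root@manager mha4mysql-node-0.57]# make && make install ##compile and install

Then install the manager component on the manager host (14.0.0.7). Only the MHA manager server needs the manager component.

[root@manager mha4mysql-node-0.57]# cd /opt

[root@manager opt]# tar zxvf mha4mysql-manager-0.57.tar.gz

[root@manager opt]# cd mha4mysql-manager-0.57/

[root@manager mha4mysql-manager-0.57]# perl Makefile.PL ##run the Perl build script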

*** Module::AutoInstall version 1.06

*** Checking for Perl dependencies...

[Core Features]

- DBI ...loaded. (1.627)

- DBD::mysql ...loaded. (4.023)

- Time::HiRes ...loaded. (1.9725)

- Config::Tiny ...loaded. (2.14)

- Log::Dispatch ...loaded. (2.41)

- Parallel::ForkManager ...loaded. (1.18)

- MHA::NodeConst ...loaded. (0.57)

*** Module::AutoInstall configuration finished.

Checking if your kit is complete...

Looks good

Writing Makefile for mha4mysql::manager

Writing MYMETA.yml and MYMETA.json

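[root@manager mha4mysql-manager-0.57]# make && make install ##compile and install

After manager is installed, several tools are generated under /usr/local/bin, mainly:

- masterha_check_ssh: checks the SSH configuration for MHA.

- masterha_check_repl: checks the MySQL replication status.

- masterha_manager: starts MHA.

- masterha_check_status: checks the current MHA running state.

- masterha_master_monitor: checks whether the master is down.

- masterha_master_switch: controls failover (automatic or manual).

- masterha_conf_host: adds or removes configured server entries.

After node is installed, several scripts are also generated under /usr/local/bin (these are normally triggered by the MHA Manager scripts and need no manual operation):

- save_binary_logs: saves and copies the master's binary logs.

- apply_diff_relay_logs: identifies differing relay log events and applies them to the other slaves.

- filter_mysqlbinlog: strips unnecessary ROLLBACK events (MHA no longer uses this tool).

- purge_relay_logs: purges relay logs (without blocking the SQL thread).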

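4. Configure Passwordless Authentication

1. On the manager, configure passwordless authentication to all database nodes

[root@MHA-manager ~]# ssh-keygen -t rsa ##press Enter at every prompt

[root@MHA-manager ~]# ssh-copy-id 14.0.0.17 ##the procedure is the same for the other hosts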

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '14.0.0.17 (14.0.0.17)' can't be established.

ECDSA key fingerprint is SHA256:2+bFMzk0Bg4agHkffqTtuLwKjxHeZZiYzlFrlmGFdTo.

ECDSA key fingerprint is MD5:62:8f:74:dd:68:28:12:5b:87:dc:81:d2:0c:47:91:43.

Are you sure you want to continue connecting (yes/no)? yes ##answer yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@14.0.0.17's password: ##enter the root account's login password

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '14.0.0.17'"

and check to make sure that only the key(s) you wanted were added.

[root@MHA-manager ~]# ssh-copy-id 14.0.0.27

[root@MHA-manager ~]# ssh-copy-id 14.0.0.37

2. On the MySQL master (14.0.0.17), configure passwordless authentication to the database nodes slave1 and slave2

[root@master ~]# ssh-keygen -t rsa

[root@master ~]# ssh-copy-id 14.0.0.27

[root@master ~]# ssh-copy-id 14.0.0.37

3. On MySQL slave1 (14.0.0.27), configure passwordless authentication to the database nodes master and slave2

[root@slave1 ~]# ssh-keygen -t rsa

[root@slave1 ~]# ssh-copy-id 14.0.0.17

[root@slave1 ~]# ssh-copy-id 14.0.0.37

4. On MySQL slave2 (14.0.0.37), configure passwordless authentication to the database nodes master and slave1

[root@slave2 ~]# ssh-keygen -t rsa

[root@slave2 ~]# ssh-copy-id 14.0.0.17

[root@slave2 ~]# ssh-copy-id 14.0.0.27
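The pattern in steps 2 to 4 is that every database node copies its key to the other two. The sketch below prints (rather than runs) the `ssh-copy-id` commands a given node needs; the helper names `peers_of` and `print_copy_commands` are invented for this example, and the node list is taken from the lab parameters table.

```shell
#!/usr/bin/env bash
# Database nodes from the lab parameters table (master, slave1, slave2).
DB_NODES="14.0.0.17 14.0.0.27 14.0.0.37"

# Print every database node except the one passed as $1.
# (peers_of is a hypothetical helper name.)
peers_of() {
    local self=$1 node
    for node in $DB_NODES; do
        [ "$node" = "$self" ] || echo "$node"
    done
}

# Print the ssh-copy-id commands that node $1 would run as root.
# Nothing is executed; the output is meant to be reviewed first.
print_copy_commands() {
    local peer
    for peer in $(peers_of "$1"); do
        echo "ssh-copy-id root@$peer"
    done
}
```

For example, `print_copy_commands 14.0.0.27` lists the two commands slave1 runs after `ssh-keygen -t rsa`.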

5. Configure MySQL MHA High Availability

On the manager node, copy the sample scripts into /usr/local/bin

[root@manager opt]# cp -ra /opt/mha4mysql-manager-0.57/samples/scripts /usr/local/bin

[root@manager opt]# ll /usr/local/bin/scripts/

total 32

-rwxr-xr-x. 1 1001 1001 3648 May 31 2015 master_ip_failover ##manages the VIP during automatic failover

-rwxr-xr-x. 1 1001 1001 9870 May 31 2015 master_ip_online_change ##manages the VIP during an online switch

-rwxr-xr-x. 1 1001 1001 11867 May 31 2015 power_manager ##shuts down the host after a failure

-rwxr-xr-x. 1 1001 1001 1360 May 31 2015 send_report ##sends an alert after a failover

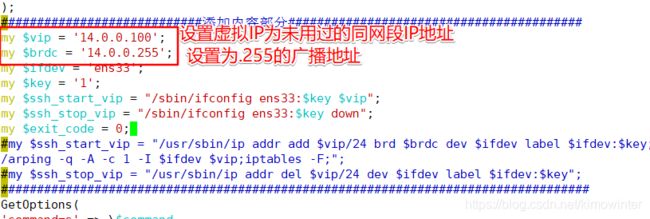

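Copy the automatic-failover VIP management script listed above into /usr/local/bin; this lab manages the VIP with a script.

[root@manager opt]# cp /usr/local/bin/scripts/master_ip_failover /usr/local/bin/

Replace its contents with the following (delete the original content and paste this in directly):

[root@manager opt]# vim /usr/local/bin/master_ip_failover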

#!/usr/bin/env perl

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

my $vip = '14.0.0.100';

my $brdc = '14.0.0.255';

my $ifdev = 'ens33';

my $key = '1';

my $ssh_start_vip = "/sbin/ifconfig ens33:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig ens33:$key down";

my $exit_code = 0;

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

# A simple system call that disable the VIP on the old_master

sub stop_vip() {

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

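Note: when this code is pasted in through the shell, a # may be added at the start of every line by default. Those # characters must be removed, otherwise the whole script is commented out.

:% s/^#//g //run in vim's last-line (command) mode

Change the VIP and broadcast addresses in the script ($vip and $brdc) to your own addresses.

Create the MHA directory and copy the configuration file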

[root@manager opt]# cd /usr/local/bin/scripts/

[root@manager scripts]# mkdir /etc/masterha ##directory for the MHA configuration file

[root@manager scripts]# cp /opt/mha4mysql-manager-0.57/samples/conf/app1.cnf /etc/masterha/

[root@manager scripts]# vim /etc/masterha/app1.cnf

[server default]

manager_log=/var/log/masterha/app1/manager.log

manager_workdir=/var/log/masterha/app1.log

master_binlog_dir=/usr/local/mysql/data

master_ip_failover_script=/usr/local/bin/master_ip_failover

master_ip_online_change_script=/usr/local/bin/master_ip_online_change

password=manager

ping_interval=1

remote_workdir=/tmp

repl_password=123

repl_user=my slave

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 14.0.0.27 -s 14.0.0.37

shutdown_script=""

ssh_user=root

user=mha

[server1]

hostname=14.0.0.17

port=3306

[server2]

candidate_master=1

check_repl_delay=0

hostname=14.0.0.27

port=3306

[server3]

hostname=14.0.0.37

port=3306

[root@manager scripts]# cp master_ip_online_change ../ ##copy the script to the path named in the configuration file

[root@manager scripts]# cp send_report /usr/local/ ##copy the script to the path named in the configuration file
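MHA needs a `[serverN]` section for every database node, including the current master, so it is worth confirming which hosts the file actually lists before running the checks. The following is a small sketch, not an MHA tool: `list_mha_hosts` is a made-up helper that prints each `[serverN]` section together with its `hostname` value.

```shell
#!/usr/bin/env bash
# Sketch: list "[serverN] hostname" pairs from an MHA application config.
# list_mha_hosts is a hypothetical helper, not shipped with MHA.
list_mha_hosts() {
    awk -F'=' '
        /^\[server[0-9]+\]/   { section = $0 }   # entering a [serverN] block
        /^\[server default\]/ { section = "" }   # global block: no host here
        section != "" && $1 ~ /^[ \t]*hostname[ \t]*$/ {
            gsub(/[ \t]/, "", $2)                # trim spaces around the value
            print section, $2
        }
    ' "$1"
}
```

Running `list_mha_hosts /etc/masterha/app1.cnf` in this lab should show three entries: 14.0.0.17, 14.0.0.27 and 14.0.0.37.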

Test SSH passwordless authentication. If everything is fine, the output ends with "successfully".

[root@manager scripts]# masterha_check_ssh -conf=/etc/masterha/app1.cnf

Sat Aug 29 21:32:36 2020 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Sat Aug 29 21:32:36 2020 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Sat Aug 29 21:32:36 2020 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Sat Aug 29 21:32:36 2020 - [info] Starting SSH connection tests..

Sat Aug 29 21:32:44 2020 - [debug]

Sat Aug 29 21:32:37 2020 - [debug] Connecting via SSH from root@14.0.0.37(14.0.0.37:22) to root@14.0.0.17(14.0.0.17:22)..

Sat Aug 29 21:32:38 2020 - [debug] ok.

Sat Aug 29 21:32:38 2020 - [debug] Connecting via SSH from root@14.0.0.37(14.0.0.37:22) to root@14.0.0.27(14.0.0.27:22)..

Sat Aug 29 21:32:44 2020 - [debug] ok.

Sat Aug 29 21:32:45 2020 - [debug]

Sat Aug 29 21:32:36 2020 - [debug] Connecting via SSH from root@14.0.0.27(14.0.0.27:22) to root@14.0.0.17(14.0.0.17:22)..

Sat Aug 29 21:32:43 2020 - [debug] ok.

Sat Aug 29 21:32:43 2020 - [debug] Connecting via SSH from root@14.0.0.27(14.0.0.27:22) to root@14.0.0.37(14.0.0.37:22)..

Sat Aug 29 21:32:44 2020 - [debug] ok.

Sat Aug 29 21:32:46 2020 - [debug]

Sat Aug 29 21:32:36 2020 - [debug] Connecting via SSH from root@14.0.0.17(14.0.0.17:22) to root@14.0.0.27(14.0.0.27:22)..

Sat Aug 29 21:32:45 2020 - [debug] ok.

Sat Aug 29 21:32:45 2020 - [debug] Connecting via SSH from root@14.0.0.17(14.0.0.17:22) to root@14.0.0.37(14.0.0.37:22)..

Sat Aug 29 21:32:46 2020 - [debug] ok.

Sat Aug 29 21:32:46 2020 - [info] All SSH connection tests passed successfully.

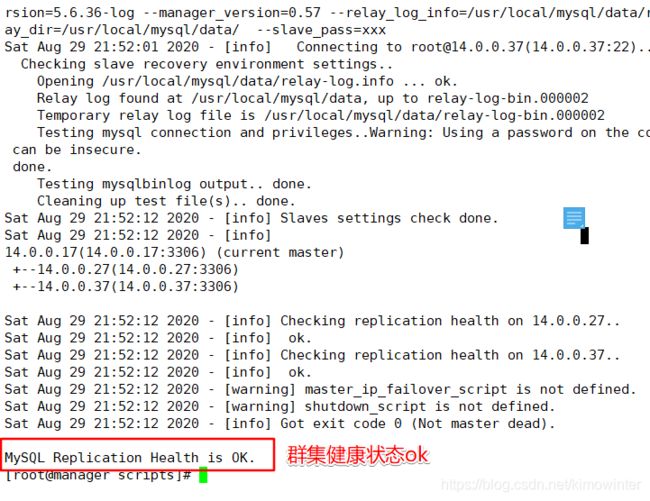

Test the MySQL master-slave replication setup. If the output ends with "MySQL Replication Health is OK", replication is healthy.

[root@manager scripts]# masterha_check_repl -conf=/etc/masterha/app1.cnf

...

(part of the check output omitted)

Sat Aug 29 21:52:12 2020 - [info] Checking replication health on 14.0.0.27..

Sat Aug 29 21:52:12 2020 - [info] ok.

Sat Aug 29 21:52:12 2020 - [info] Checking replication health on 14.0.0.37..

Sat Aug 29 21:52:12 2020 - [info] ok.

Sat Aug 29 21:52:12 2020 - [warning] master_ip_failover_script is not defined.

Sat Aug 29 21:52:12 2020 - [warning] shutdown_script is not defined.

Sat Aug 29 21:52:12 2020 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

Bring up the virtual IP 14.0.0.100 on the master. The VIP does not disappear when the MHA service on the manager node is stopped.

[root@master ~]# /sbin/ifconfig ens33:1 14.0.0.100/24

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 14.0.0.17 netmask 255.255.255.0 broadcast 14.0.0.255

inet6 fe80::7aeb:170a:a65:1b5b prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:23:02:28 txqueuelen 1000 (Ethernet)

RX packets 52323 bytes 56591994 (53.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 30919 bytes 6048437 (5.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 14.0.0.100 netmask 255.255.255.0 broadcast 14.0.0.255

ether 00:0c:29:23:02:28 txqueuelen 1000 (Ethernet)

Start MHA

[root@manager masterha]# nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

[1] 17498

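- Stop MHA: masterha_stop --conf=/etc/masterha/app1.cnf

- --remove_dead_master_conf: after a master failover, the old master's entry is removed from the configuration file.

- --ignore_last_failover: by default, if MHA detects consecutive outages less than 8 hours apart, it does not fail over; this limit exists to avoid a ping-pong effect. After a failover MHA records it in a file in its working directory, and the next failover is refused while that file exists unless the file is deleted manually after the first failover. This flag tells MHA to ignore the file left by the previous failover; it is set here for convenience.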

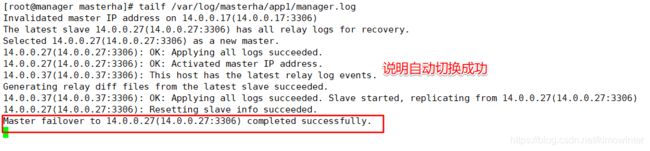

6. Simulate a Master Failover

Kill MySQL on the master with pkill, then check whether the virtual IP floats over automatically.

[root@master ~]# pkill -9 mysqld

Check the log file on the monitoring (manager) server

[root@manager masterha]# tailf /var/log/masterha/app1/manager.log

Invalidated master IP address on 14.0.0.17(14.0.0.17:3306)

The latest slave 14.0.0.27(14.0.0.27:3306) has all relay logs for recovery.

Selected 14.0.0.27(14.0.0.27:3306) as a new master.

14.0.0.27(14.0.0.27:3306): OK: Applying all logs succeeded.

14.0.0.27(14.0.0.27:3306): OK: Activated master IP address.

14.0.0.37(14.0.0.37:3306): This host has the latest relay log events.

Generating relay diff files from the latest slave succeeded.

14.0.0.37(14.0.0.37:3306): OK: Applying all logs succeeded. Slave started, replicating from 14.0.0.27(14.0.0.27:3306)

14.0.0.27(14.0.0.27:3306): Resetting slave info succeeded.

Master failover to 14.0.0.27(14.0.0.27:3306) completed successfully.

Check on the standby master (14.0.0.27): it has now become the master server, that is, the new master.

[root@slave1 ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 14.0.0.27 netmask 255.255.255.0 broadcast 14.0.0.255

inet6 fe80::5ac2:5088:a2a4:302e prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:50:5c:5c txqueuelen 1000 (Ethernet)

RX packets 71164 bytes 80235747 (76.5 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 37632 bytes 7216270 (6.8 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 14.0.0.100 netmask 255.0.0.0 broadcast 14.255.255.255

ether 00:0c:29:50:5c:5c txqueuelen 1000 (Ethernet)
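Whether the VIP has moved can also be checked without reading the whole `ifconfig` listing. The sketch below assumes the CentOS 7 `ifconfig` output format shown above (`inet <address> netmask ...`); `has_vip` is a name invented for this example. Note that the VIP here shows netmask 255.0.0.0 because the failover script brings the alias up without an explicit netmask.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the address given as $1 appears as an "inet" address
# in ifconfig-style output read from stdin.
# has_vip is a hypothetical helper name.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" && $2 == vip { found = 1 }
        END { exit !found }     # exit status 0 only when the VIP was seen
    '
}
```

On the new master this could be run as `ifconfig | has_vip 14.0.0.100 && echo "VIP is here"`.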

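7. When the Failed Master Rejoins

If the previous master is to rejoin the cluster, it can only join as a slave and does not preempt the current master. It can become the master again through a manual switch.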
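Rejoining as a slave means pointing the old master at the new one, in the same way the slaves were configured in step 2. The sketch below only builds the statement text; `change_master_sql` is a made-up helper, and the binary log file and position in the usage example are placeholders that would have to be read from `show master status` on the new master (14.0.0.27). Because the manager was started with `--remove_dead_master_conf`, the old master's section would also have to be added back to /etc/masterha/app1.cnf before MHA manages it again.

```shell
#!/usr/bin/env bash
# Sketch: print a CHANGE MASTER TO statement for a node rejoining as a slave.
# Arguments: $1 new master host, $2 replication user, $3 replication password,
#            $4 binary log file, $5 binary log position.
# change_master_sql is a hypothetical helper name.
change_master_sql() {
    printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_LOG_FILE='%s', MASTER_LOG_POS=%d;\n" \
        "$1" "$2" "$3" "$4" "$5"
}
```

A call such as `change_master_sql 14.0.0.27 'my slave' 123 master-bin.000001 120` (file name and position are illustrative only) prints the statement to run on the old master, followed by `start slave;`.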