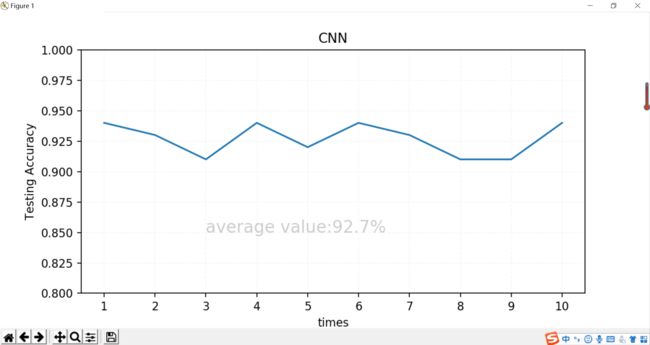

Plotting line charts directly from one (or several) groups of data with matplotlib

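While working on my graduation project, I had put all my experimental results into tables, and then my advisor asked me to present them as figures instead. I did not want to retrain and retest everything, so I used matplotlib's plotting functions to turn the recorded numbers directly into images.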

1. Only one group of data (no comparison needed)

from matplotlib import pyplot as plt

# Set the figure size (width, height in inches) and resolution
fig = plt.figure(figsize=(8, 6), dpi=160)

# ResNet18 results without freezing
a1 = [0.94, 0.93, 0.91, 0.94, 0.92, 0.94, 0.93, 0.91, 0.91, 0.94]
x = range(1, 11)  # x values; the count must match the number of values in a1
plt.ylim([0.8, 1])  # show the y-axis from 0.8 to 1

# Draw the line chart
plt.plot(x, a1)
plt.xticks(x[::1])  # one tick per x value

# Axis labels, title and annotation
plt.xlabel("times")  # x-axis
plt.ylabel("Testing Accuracy")  # y-axis
plt.title("CNN")
plt.text(3, 0.85, "average value:92.7%", size=15, alpha=0.2)

# Draw the grid
plt.grid(alpha=0.1, linestyle="--")
plt.savefig("1.png")  # saved in the current directory
plt.show()
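The x range and the average label in the script above are typed in by hand, so both have to be updated whenever the data changes. A minimal sketch of deriving them from the list itself, assuming the same `a1` values; the helper name `average_label` is hypothetical and not part of the original script:

```python
from statistics import mean

# Same accuracy values as in the script above
a1 = [0.94, 0.93, 0.91, 0.94, 0.92, 0.94, 0.93, 0.91, 0.91, 0.94]


def average_label(values):
    """Hypothetical helper: format the mean of `values` as a percentage label."""
    return f"average value:{mean(values) * 100:.1f}%"


# One x position per data point, starting at 1
x = range(1, len(a1) + 1)
label = average_label(a1)

print(list(x))
print(label)
```

The resulting `x` and `label` can then be passed to `plt.plot(x, a1)` and `plt.text(3, 0.85, label, size=15, alpha=0.2)` exactly as before.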

2. Several groups of data (shown together for comparison)

from matplotlib import pyplot as plt

# Set the figure size (width, height in inches) and resolution
fig = plt.figure(figsize=(4, 1), dpi=160)
ax1 = fig.add_subplot(1, 2, 1)
ax2 = fig.add_subplot(1, 2, 2)

# AlexNet (kept for reference; not plotted below)
y1 = [0.4089, 0.2671, 0.2384, 0.2055, 0.1778, 0.1675, 0.1521, 0.0938, 0.0840, 0.0695]
y2 = [0.89, 0.94, 0.94, 0.96, 0.97, 0.95, 0.96, 0.98, 0.98, 0.98]

# ResNet18 without freezing

a1 = [0.5501, 0.3886, 0.3187, 0.2769, 0.2404, 0.2337, 0.1988, 0.1409, 0.1348, 0.1252]  # training loss
b1 = [0.95, 0.95, 0.96, 0.96, 0.96, 0.96, 0.97, 0.97, 0.97, 0.97]  # validation accuracy

# ResNet18 with layers 1, 2 and 3 frozen
a2 = [0.9331, 0.5890, 0.4850, 0.4495, 0.4443, 0.3916, 0.3967, 0.3804, 0.3778, 0.3526]
b2 = [0.89, 0.91, 0.93, 0.94, 0.94, 0.94, 0.95, 0.95, 0.95, 0.95]

# ResNet18 with layers 1 and 2 frozen
a3 = [0.8567, 0.5485, 0.4637, 0.4139, 0.3618, 0.3437, 0.3300, 0.3236, 0.3085, 0.2991]
b3 = [0.91, 0.93, 0.94, 0.95, 0.95, 0.95, 0.95, 0.95, 0.95, 0.95]

# ResNet18 with layers 3 and 4 frozen
a4 = [1.3148, 1.0882, 0.9862, 0.9135, 0.8579, 0.8226, 0.7740, 0.7660, 0.7624, 0.7595]
b4 = [0.48, 0.60, 0.74, 0.81, 0.80, 0.84, 0.83, 0.88, 0.85, 0.84]

# ResNet18 with layers 1 and 4 frozen
a5 = [1.1822, 0.9105, 0.8020, 0.7475, 0.7015, 0.6664, 0.6264, 0.6080, 0.6046, 0.5929]
b5 = [0.78, 0.86, 0.90, 0.90, 0.92, 0.93, 0.94, 0.93, 0.93, 0.93]

x = range(1, 11)
ax2.set_ylim([0, 1])  # plt.ylim acted on the current axes (ax2), so set it there explicitly

# Draw the line charts
ax1.plot(x, a1, color='green', label="unfreezing")
ax1.plot(x, a2, color='blue', label="freeze layer1&2&3")
ax1.plot(x, a3, color='pink', label="freeze layer1&2")
ax1.plot(x, a4, color='red', label="freeze layer3&4")
ax1.plot(x, a5, color='skyblue', label="freeze layer1&4")
ax2.plot(x, b1, color='green', label="unfreezing")
ax2.plot(x, b2, color='blue', label="freeze layer1&2&3")
ax2.plot(x, b3, color='pink', label="freeze layer1&2")
ax2.plot(x, b4, color='red', label="freeze layer3&4")
ax2.plot(x, b5, color='skyblue', label="freeze layer1&4")
ax1.set_xticks(x[::1])  # one tick per x value
ax2.set_xticks(x[::1])

# Axis labels and titles
ax1.set_xlabel("Epochs")  # x-axis
ax1.set_ylabel("Training Loss")  # y-axis
ax2.set_xlabel("Epochs")  # x-axis
ax2.set_ylabel("Validing Accuracy")  # y-axis
ax1.set_title("ResNet18(training loss)")
ax2.set_title("ResNet18(validing accuracy)")

# Draw the grids
ax1.grid(alpha=0.1, linestyle="--")
ax2.grid(alpha=0.1, linestyle="--")
ax2.legend()  # show the label of each line (plt.legend acted on ax2, the current axes)
plt.savefig("2.png")  # saved in the current directory
plt.show()

Result:

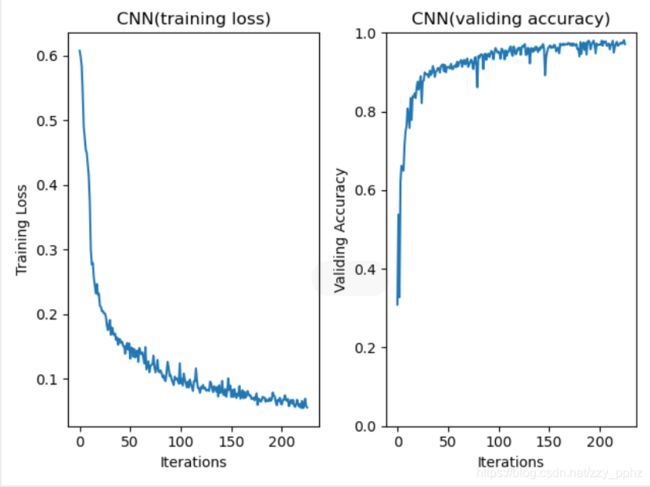
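The ten near-identical `plot` calls above can be collapsed into a loop. A minimal sketch, assuming the curves are collected in a dictionary keyed by label; the names `curves`, `colors` and `plot_curves` are hypothetical, only two of the five settings are included to keep it short, the figure size is my own choice, and the non-interactive Agg backend is selected so the function also runs without a display:

```python
# (training loss, validation accuracy) per setting, copied from the data above
curves = {
    "unfreezing": (
        [0.5501, 0.3886, 0.3187, 0.2769, 0.2404, 0.2337, 0.1988, 0.1409, 0.1348, 0.1252],
        [0.95, 0.95, 0.96, 0.96, 0.96, 0.96, 0.97, 0.97, 0.97, 0.97],
    ),
    "freeze layer3&4": (
        [1.3148, 1.0882, 0.9862, 0.9135, 0.8579, 0.8226, 0.7740, 0.7660, 0.7624, 0.7595],
        [0.48, 0.60, 0.74, 0.81, 0.80, 0.84, 0.83, 0.88, 0.85, 0.84],
    ),
}
colors = {"unfreezing": "green", "freeze layer3&4": "red"}


def plot_curves(curves, colors, filename):
    """Hypothetical helper: draw every setting on both subplots and save the figure."""
    # Imported here so the data above can be inspected without matplotlib
    import matplotlib
    matplotlib.use("Agg")
    from matplotlib import pyplot as plt

    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4), dpi=160)
    for label, (loss, acc) in curves.items():
        x = range(1, len(loss) + 1)  # one x position per epoch
        ax1.plot(x, loss, color=colors[label], label=label)
        ax2.plot(x, acc, color=colors[label], label=label)
    ax1.set_xlabel("Epochs")
    ax1.set_ylabel("Training Loss")
    ax2.set_xlabel("Epochs")
    ax2.set_ylabel("Validing Accuracy")
    ax2.set_ylim(0, 1)
    ax2.legend()
    fig.savefig(filename)
    plt.close(fig)
```

Calling `plot_curves(curves, colors, "2.png")` writes the figure to the current directory; adding another setting only requires one more entry in each dictionary.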

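3. One of my training plots contains several hundred data points, so I did not use the method above for it; I had to train the model again instead.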

from __future__ import print_function, division
import torch
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from matplotlib import pyplot as plt

train_transform = transforms.Compose([
    transforms.RandomResizedCrop(224),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])

valid_transform = transforms.Compose([
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])

train_dataset = torchvision.datasets.ImageFolder(root='maize/train', transform=train_transform)
# Batch size: the number of samples used in one training step.
# It affects both how well and how fast the model is optimized.
train_loader = DataLoader(train_dataset, batch_size=50, shuffle=True, num_workers=0)
valid_dataset = torchvision.datasets.ImageFolder(root='maize/valid', transform=valid_transform)
valid_loader = DataLoader(valid_dataset, batch_size=50, shuffle=True, num_workers=0)
classes = ('0', '1', '2', '3')

########################################################################
# Define a neural network
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 12, 5, 1, 2)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(12, 24, 5, 1, 2)
        self.conv3 = nn.Conv2d(24, 36, 5, 1, 2)
        self.conv4 = nn.Conv2d(36, 36, 5, 1, 2)
        self.fc1 = nn.Linear(7056, 120)  # 36 channels * 14 * 14 after four poolings of a 224x224 input
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 4)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = self.pool(F.relu(self.conv3(x)))
        x = self.pool(F.relu(self.conv4(x)))
        # Flatten the feature maps before the fully connected layers
        x = x.view(-1, 7056)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

model = Net()
print(model)

# Print the network parameters to inspect them
params = list(model.parameters())
print(len(params))
for i in range(len(params)):
    print(i, ' : ', params[i].size())

# Define the loss function and the optimizer
criterion = nn.CrossEntropyLoss()  # cross-entropy loss
optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)  # SGD (stochastic gradient descent)

########################################################################
# Train the network
epochs = 100
steps = 0
running_loss = 0
print_every = 50
train_losses, valid_losses = [], []
valid_accuracy = []
device = torch.device("cpu")
model.to(device)

for epoch in range(epochs):
    for inputs, labels in train_loader:
        steps += 1
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()
        logps = model(inputs)
        loss = criterion(logps, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()

        # Every print_every steps, evaluate on the validation set
        if steps % print_every == 0:
            test_loss = 0
            accuracy = 0
            model.eval()
            with torch.no_grad():
                for inputs, labels in valid_loader:
                    inputs, labels = inputs.to(device), labels.to(device)
                    logps = model(inputs)
                    batch_loss = criterion(logps, labels)
                    test_loss += batch_loss.item()
                    # The network outputs raw scores; exp() is monotonic, so the top class is unchanged
                    ps = torch.exp(logps)
                    top_p, top_class = ps.topk(1, dim=1)
                    equals = top_class == labels.view(*top_class.shape)
                    accuracy += torch.mean(equals.type(torch.FloatTensor)).item()

            # running_loss covers the last print_every batches, so average over print_every
            # (the original divided by len(train_loader) here but by print_every in the print below)
            train_losses.append(running_loss / print_every)
            valid_losses.append(test_loss / len(valid_loader))
            valid_accuracy.append(accuracy / len(valid_loader))
            print(f"Epoch {epoch + 1}/{epochs}.. "
                  f"Train loss: {running_loss / print_every:.3f}.. "
                  f"Valid loss: {test_loss / len(valid_loader):.3f}.. "
                  f"Valid accuracy: {accuracy / len(valid_loader):.3f}")
            running_loss = 0
            model.train()

torch.save(model.state_dict(), "model_params.pkl")
torch.save(model.state_dict(), "model_params35(sjy30j3).pkl")
print('Finished Training')

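def text_save(filename, data):  # filename: path of the file to write; data: list of values to write
    with open(filename, 'a') as file:  # append mode: existing content is kept
        for i in range(len(data)):
            s = str(data[i]).replace('[', '').replace(']', '')  # strip [ and ]; optional, depending on the data
            s = s.replace("'", '').replace(',', '') + '\n'  # strip single quotes and commas, then end the line
            file.write(s)
    print("File saved successfully")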

# Plot the training loss and the validation accuracy
fig = plt.figure()  # the earlier plt.figure(figsize=(4, 1), dpi=160) was immediately overwritten and left an empty figure
ax1 = fig.add_subplot(1, 2, 1)
ax2 = fig.add_subplot(1, 2, 2)
ax2.set_ylim([0, 1])  # plt.ylim acted on the current axes (ax2), so set it there explicitly
ax1.plot(train_losses)
ax2.plot(valid_accuracy)
ax1.set_xlabel("Iterations")  # x-axis
ax1.set_ylabel("Training Loss")  # y-axis
ax2.set_xlabel("Iterations")  # x-axis
ax2.set_ylabel("Validing Accuracy")  # y-axis
ax1.set_title("CNN(training loss)")
ax2.set_title("CNN(validing accuracy)")
plt.show()

text_save('CNN(training loss).txt', train_losses)
text_save('CNN(validing accuracy).txt', valid_accuracy)
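The `7056` passed to `nn.Linear` and `x.view` in the script above is the flattened size of the last feature map. A small sketch of where that number comes from, using the standard output-size formula for convolution and pooling layers; the function names `conv_out` and `flattened_size` are hypothetical:

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def flattened_size(input_size=224, channels=36, blocks=4):
    """Flattened feature count after `blocks` rounds of conv (5, 1, 2) + max-pool (2, 2)."""
    size = input_size
    for _ in range(blocks):
        size = conv_out(size, kernel=5, stride=1, padding=2)  # kernel 5 with padding 2 keeps the size
        size = conv_out(size, kernel=2, stride=2)             # 2x2 pooling with stride 2 halves it
    return channels * size * size


print(flattened_size())  # 224 -> 112 -> 56 -> 28 -> 14, and 36 * 14 * 14 = 7056
```

If the crop size in the transforms or the number of pooling steps changes, this value and both places that use it in `Net` have to change with it.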

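I ran this training program once more, which took a long time, and in the end got the figure. I also wrote the experimental data to txt files; see the code for details.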
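Because `text_save` writes one value per line, the curves can later be reloaded and replotted without training again. A minimal sketch under that assumption; `text_load` is a hypothetical counterpart that is not part of the original script, and the demo uses a temporary file with made-up values rather than real results:

```python
import os
import tempfile


def text_load(filename):
    """Hypothetical counterpart to text_save: read one float per non-empty line."""
    with open(filename) as file:
        return [float(line) for line in file if line.strip()]


# Round-trip demo with illustrative values (not real training results)
demo_values = [0.5, 0.25, 0.125]
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo.txt")
    with open(path, "w") as file:
        for value in demo_values:
            file.write(str(value) + "\n")
    loaded = text_load(path)

print(loaded)
```

A list loaded this way, for example `text_load('CNN(training loss).txt')`, can be passed straight to `ax1.plot`. Since `text_save` opens its file in append mode, a file written by several runs will contain the values of all of them back to back.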

Result:

Contents of one of the txt files:

I hope this helps!