Kubernetes 1.10 Single-Node Installation


I. Environment Preparation

This time Kubernetes is not installed as a multi-master cluster; instead we do a single-master installation.

The environment is as follows:

| IP | Hostname | Role | Services |
|---|---|---|---|
| 192.168.1.113 | master | master | etcd, kube-apiserver, kube-controller-manager, kube-scheduler (docker and kubelet are only needed if the master also runs as a node) |
| 192.168.1.116 | node | node | docker, kubelet, kube-proxy |

Check the OS release and kernel version:

[root@master ~]#cat /etc/redhat-release

CentOS Linux release 7.4.1708 (Core)

[root@master ~]#uname -a

3.10.0-327.22.2.el7.x86_64 #1 SMP Thu Jun 23 17:05:11 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

Note: perform the following steps on both machines, except where a step names a specific host!

Set the hostnames:

[root@master ~]# hostnamectl set-hostname master

[root@node ~]#hostnamectl set-hostname node

Set up SSH trust (passwordless login) from the master:

[root@master ~]# yum install expect wget -y

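Write the passwordless-login script:

[root@master ~]# cat ssh.sh
# generate the key pair once, before the loop (skipped if it already exists)
[ -f /root/.ssh/id_rsa ] || ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa
# 123456 is the root password of the target host; replace it with your own
for i in 192.168.1.116;do
expect -c "
spawn ssh-copy-id -i /root/.ssh/id_rsa.pub root@$i
expect {
\"yes/no\" {send \"yes\r\"; exp_continue}
\"password\" {send \"123456\r\"; exp_continue}
\"Password\" {send \"123456\r\";}
} "
done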

Configure /etc/hosts:

[root@node ~]# echo "192.168.1.116 node1" >>/etc/hosts

[root@node ~]# echo "192.168.1.113 master" >>/etc/hosts

[root@master ~]# echo "192.168.1.116 node1" >>/etc/hosts

[root@master ~]# echo "192.168.1.113 master" >>/etc/hosts
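Running these echo commands more than once appends duplicate lines. Below is a minimal sketch of an idempotent alternative, assuming bash and awk. The function name `add_host_entry` and its optional third argument (the file to edit) are illustrative and not part of the original procedure:

```bash
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless a line already maps IP to NAME.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk succeeds only if some line has IP as its first field and NAME among the other fields
  if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit (found ? 0 : 1) }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, on each machine run `add_host_entry 192.168.1.113 master` and `add_host_entry 192.168.1.116 node1`. A second run leaves the file unchanged.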

Configure time synchronization:

yum -y install ntp

systemctl enable ntpd

systemctl start ntpd

ntpdate -u cn.pool.ntp.org

hwclock --systohc

timedatectl set-timezone Asia/Shanghai

Disable the swap partition:

swapoff -a # disable swap temporarily (until the next reboot)

yum install wget vim lsof net-tools lrzsz -y # install a few commonly used packages
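`swapoff -a` only lasts until the next reboot. Since 1.8, kubelet also refuses to start while swap is enabled unless told otherwise. Below is a sketch that additionally comments out the swap entries in /etc/fstab, so the change survives a reboot. It assumes GNU sed and the usual whitespace-separated fstab layout; `comment_out_swap` is a hypothetical helper name:

```bash
# comment_out_swap [FSTAB]
# Prefixes every active swap entry in FSTAB (default /etc/fstab) with "#", keeping FSTAB.bak.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # A line is a swap entry when its third field (the filesystem type) is exactly "swap";
  # lines that already start with "#" are not matched, so the function is idempotent.
  sed -i.bak -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' "$fstab"
}
```

Run `comment_out_swap` once on each machine. `free -m` should then report 0 swap after a reboot.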

Disable the firewall and SELinux:

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config

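Upgrade the kernel (install the mainline kernel from ELRepo, then set GRUB_DEFAULT=0 so the first boot entry, the new kernel, is used):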

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

yum --enablerepo=elrepo-kernel install kernel-ml -y&&

sed -i s/saved/0/g /etc/default/grub&&

grub2-mkconfig -o /boot/grub2/grub.cfg && reboot

After the upgrade, check the kernel:

[root@master ~]# uname -a

Linux master 4.17.9-1.el7.elrepo.x86_64 #1 SMP Sun Jul 22 11:57:51 EDT 2018 x86_64 x86_64 x86_64 GNU/Linux
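A script can check that the reboot really came up on the new kernel by comparing the running release against a minimum version. Below is a sketch assuming GNU `sort -V`. The helper name is illustrative, and the 4.0 threshold in the usage line is an assumption chosen for illustration:

```bash
# kernel_at_least REQUIRED [RELEASE]
# Succeeds if RELEASE (default: `uname -r`) is >= REQUIRED. Only the part before the
# first "-" is compared, e.g. "4.17.9" from "4.17.9-1.el7.elrepo.x86_64".
kernel_at_least() {
  local required="$1" release="${2:-$(uname -r)}"
  local current="${release%%-*}"
  # sort -V orders version strings numerically; if REQUIRED sorts first (or ties),
  # the current version is at least REQUIRED
  [ "$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n 1)" = "$required" ]
}
```

Example: `kernel_at_least 4.0 || echo "still running $(uname -r)"`.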

Set resource limits and kernel parameters:

echo "* soft nofile 190000" >> /etc/security/limits.conf

echo "* hard nofile 200000" >> /etc/security/limits.conf

echo "* soft nproc 252144" >> /etc/security/limits.conf

echo "* hard nproc 262144" >> /etc/security/limits.conf

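tee /etc/sysctl.conf <<-'EOF'

# net.ipv4.tcp_tw_recycle = 0 (this key was removed in Linux 4.12, so on the 4.17 kernel installed above sysctl -p would report an error for it)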

net.ipv4.ip_local_port_range = 10000 61000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_fin_timeout = 30

net.ipv4.ip_forward = 1

net.core.netdev_max_backlog = 2000

net.ipv4.tcp_mem = 131072 262144 524288

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 2048

net.ipv4.tcp_low_latency = 0

net.core.rmem_default = 256960

net.core.rmem_max = 513920

net.core.wmem_default = 256960

net.core.wmem_max = 513920

net.core.somaxconn = 2048

net.core.optmem_max = 81920

net.ipv4.tcp_mem = 131072 262144 524288

net.ipv4.tcp_rmem = 8760 256960 4088000

net.ipv4.tcp_wmem = 8760 256960 4088000

net.ipv4.tcp_keepalive_time = 1800

net.ipv4.tcp_sack = 1

net.ipv4.tcp_fack = 1

net.ipv4.tcp_timestamps = 1

net.ipv4.tcp_syn_retries = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

EOF

echo "options nf_conntrack hashsize=819200" >> /etc/modprobe.d/mlx4.conf

modprobe br_netfilter

sysctl -p
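Because the kernel was upgraded, some keys in a copied sysctl file may not exist on the running kernel (net.ipv4.tcp_tw_recycle, for example, was removed in Linux 4.12). The net.bridge.* keys also only appear after br_netfilter is loaded. `sysctl -p` reports an error for each missing key. Below is a sketch that lists such keys up front. `sysctl_missing_keys` and its optional /proc/sys root argument are hypothetical; the argument exists only so the check can be tested against a fake tree:

```bash
# sysctl_missing_keys CONF [PROC_ROOT]
# Prints each key in CONF that has no matching entry under PROC_ROOT (default /proc/sys),
# i.e. the keys "sysctl -p CONF" would complain about on the running kernel.
sysctl_missing_keys() {
  local conf="$1" root="${2:-/proc/sys}"
  # drop blank and comment lines, then keep only the trimmed key left of "="
  grep -Ev '^[[:space:]]*([#;]|$)' "$conf" |
    sed -E 's/[[:space:]]*=.*$//; s/^[[:space:]]*//' |
    while read -r key; do
      # net.ipv4.ip_forward -> <root>/net/ipv4/ip_forward
      path="$root/$(printf '%s' "$key" | tr . /)"
      [ -e "$path" ] || printf '%s\n' "$key"
    done
}
```

Example: `sysctl_missing_keys /etc/sysctl.conf` prints nothing when every key is known to the running kernel.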

II. Installing Kubernetes

Master configuration

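1. Install the CFSSL tools

About the certificate types:

client certificate: used by a server to authenticate clients, e.g. etcdctl, etcd proxy, fleetctl, the docker client

server certificate: used by a server; clients use it to verify the server's identity, e.g. the docker daemon, kube-apiserver

peer certificate: a two-way (client and server) certificate used for communication between etcd cluster members

Install CFSSL: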

[root@master ~]#wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@master ~]#chmod +x cfssl_linux-amd64

[root@master ~]#mv cfssl_linux-amd64 /usr/bin/cfssl

[root@master ~]#wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@master ~]#chmod +x cfssljson_linux-amd64

[root@master ~]#mv cfssljson_linux-amd64 /usr/bin/cfssljson

[root@master ~]#wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

[root@master ~]#chmod +x cfssl-certinfo_linux-amd64

[root@master ~]#mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

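2. Generate the etcd certificates

etcd is the primary datastore of the Kubernetes cluster, so it must be installed and started before the Kubernetes services.

Create the CA certificate signing request.

Create a directory in which to generate the etcd certificates:

mkdir /root/etcd_ssl && cd /root/etcd_ssl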

cat > etcd-root-ca-csr.json << EOF
{
  "key": {
    "algo": "rsa",
    "size": 4096
  },
  "names": [
    {
      "O": "etcd",
      "OU": "etcd Security",
      "L": "beijing",
      "ST": "beijing",
      "C": "CN"
    }
  ],
  "CN": "etcd-root-ca"
}
EOF

Signing configuration (profiles) for the etcd certificates:

cat > etcd-gencert.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "etcd": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

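Note: the expiry is set to 87600h (10 years).

etcd-gencert.json (the signing configuration, called ca-config.json in many guides) can define several profiles, each with its own expiry, usages and other parameters; a specific profile is selected later when a certificate is signed;

signing: the certificate can be used to sign other certificates (the generated CA certificate has CA=TRUE);

server auth: a client can use this CA to verify the certificate presented by a server;

client auth: a server can use this CA to verify the certificate presented by a client;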

etcd certificate signing request:

cat > etcd-csr.json << EOF
{
  "key": {
    "algo": "rsa",
    "size": 4096
  },
  "names": [
    {
      "O": "etcd",
      "OU": "etcd Security",
      "L": "beijing",
      "ST": "beijing",
      "C": "CN"
    }
  ],
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "192.168.1.113"
  ]
}
EOF
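Curly quotes (“ ”) picked up when copying commands from a web page turn these files into invalid JSON, and cfssl then refuses them. Below is a sketch that validates every *.json file in a directory before `cfssl gencert` is run. It assumes python3 is installed and uses its standard `json.tool` module purely as a parser. `check_json_files` is an illustrative name:

```bash
# check_json_files [DIR]
# Prints every *.json file in DIR (default: current directory) that fails to parse;
# returns non-zero if at least one file is invalid.
check_json_files() {
  local dir="${1:-.}" f rc=0
  for f in "$dir"/*.json; do
    # skip the literal pattern when the glob matches nothing
    [ -e "$f" ] || continue
    if ! python3 -m json.tool "$f" > /dev/null 2>&1; then
      printf 'invalid JSON: %s\n' "$f"
      rc=1
    fi
  done
  return "$rc"
}
```

Example: `check_json_files /root/etcd_ssl` prints nothing and returns 0 when all files parse.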

Generate the certificates. First create the root CA:

cfssl gencert --initca=true etcd-root-ca-csr.json \
| cfssljson --bare etcd-root-ca

Then sign the etcd certificate with the root CA:

cfssl gencert --ca etcd-root-ca.pem \
--ca-key etcd-root-ca-key.pem \
--config etcd-gencert.json \
-profile=etcd etcd-csr.json | cfssljson --bare etcd

After the certificates are created, the directory looks like this:

[root@master etcd_ssl]# ll
total 36
-rw-r--r-- 1 root root 1765 Jul 24 11:27 etcd.csr
-rw-r--r-- 1 root root 282 Jul 24 11:20 etcd-csr.json
-rw-r--r-- 1 root root 469 Jul 24 11:13 etcd-gencert.json
-rw------- 1 root root 3243 Jul 24 11:27 etcd-key.pem
-rw-r--r-- 1 root root 2151 Jul 24 11:27 etcd.pem
-rw-r--r-- 1 root root 1708 Jul 24 11:24 etcd-root-ca.csr
-rw-r--r-- 1 root root 218 Jul 24 11:12 etcd-root-ca-csr.json
-rw------- 1 root root 3243 Jul 24 11:24 etcd-root-ca-key.pem
-rw-r--r-- 1 root root 2078 Jul 24 11:24 etcd-root-ca.pem
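You can inspect etcd.pem to confirm that it covers the addresses listed in etcd-csr.json and carries the 87600h lifetime. Below is a sketch using openssl, which is assumed to be installed; `cfssl-certinfo -cert etcd.pem` prints similar information as JSON. The helper names are illustrative:

```bash
# cert_sans CERT: print the Subject Alternative Name entries of a PEM certificate, one per line
cert_sans() {
  # the SAN list sits on the line after the "Subject Alternative Name" header;
  # split it on commas and strip the leading indentation
  openssl x509 -in "$1" -noout -text |
    grep -A1 'Subject Alternative Name' | tail -n 1 |
    tr ',' '\n' | sed 's/^[[:space:]]*//'
}

# cert_expiry CERT: print the notAfter date of a PEM certificate
cert_expiry() {
  openssl x509 -in "$1" -noout -enddate | cut -d= -f2
}
```

`cert_sans /root/etcd_ssl/etcd.pem` should list 127.0.0.1, localhost and 192.168.1.113. `cert_expiry` should show a date about ten years ahead.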

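3. Install and start etcd

Of the Kubernetes components, only kube-apiserver connects to etcd directly; the controller manager and the scheduler talk to the API server.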

[root@master etcd_ssl]# yum install etcd -y

Distribute the etcd certificates:

[root@master etcd_ssl]# mkdir -p /etc/etcd/ssl && cd /root/etcd_ssl

Copy the certificates to the etcd directory:

[root@master etcd_ssl]# pwd

/root/etcd_ssl

[root@master etcd_ssl]# cp *.pem /etc/etcd/ssl/

[root@master etcd_ssl]# chown -R etcd:etcd /etc/etcd/ssl

[root@master etcd_ssl]# chown -R etcd:etcd /var/lib/etcd

[root@master etcd_ssl]# chmod -R 644 /etc/etcd/ssl/

[root@master etcd_ssl]# chmod 755 /etc/etcd/ssl/

Modify the etcd configuration on the master:

[root@master etcd_ssl]# cp /etc/etcd/etcd.conf{,.bak} && >/etc/etcd/etcd.conf

cat >/etc/etcd/etcd.conf <<EOF
# [member]

ETCD_NAME=etcd

ETCD_DATA_DIR="/var/lib/etcd/etcd.etcd"

ETCD_WAL_DIR="/var/lib/etcd/wal"

ETCD_SNAPSHOT_COUNT="100"

ETCD_HEARTBEAT_INTERVAL="100"

ETCD_ELECTION_TIMEOUT="1000"

ETCD_LISTEN_PEER_URLS="https://192.168.1.113:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.113:2379,http://127.0.0.1:2379"

ETCD_MAX_SNAPSHOTS="5"

ETCD_MAX_WALS="5"

#ETCD_CORS=""

# [cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.113:2380"

# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

ETCD_INITIAL_CLUSTER="etcd=https://192.168.1.113:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.113:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_SRV=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_STRICT_RECONFIG_CHECK="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

# [proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

# [security]

ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-root-ca.pem"

ETCD_AUTO_TLS="true"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/etcd-root-ca.pem"

ETCD_PEER_AUTO_TLS="true"

# [logging]

#ETCD_DEBUG="false"

# examples for -log-package-levels etcdserver=WARNING,security=DEBUG

#ETCD_LOG_PACKAGE_LEVELS=""

EOF

Start etcd:

[root@master etcd]#systemctl daemon-reload

[root@master etcd]#systemctl start etcd

[root@master etcd]#systemctl enable etcd

Check the listening ports:

[root@master etcd]# netstat -lntup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 192.168.1.113:2379 0.0.0.0:* LISTEN 11922/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 11922/etcd

tcp 0 0 192.168.1.113:2380 0.0.0.0:* LISTEN 11922/etcd

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 935/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1020/master

tcp6 0 0 :::22 :::* LISTEN 935/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1020/master

udp 0 0 0.0.0.0:24066 0.0.0.0:* 757/dhclient

udp 0 0 0.0.0.0:68 0.0.0.0:* 757/dhclient

udp 0 0 192.168.1.113:123 0.0.0.0:* 722/ntpd

udp 0 0 127.0.0.1:123 0.0.0.0:* 722/ntpd

udp 0 0 0.0.0.0:123 0.0.0.0:* 722/ntpd

udp6 0 0 fe80::1f0a:9ae7:9f2:123 :::* 722/ntpd

udp6 0 0 ::1:123 :::* 722/ntpd

udp6 0 0 :::123 :::* 722/ntpd

udp6 0 0 :::42620 :::* 757/dhclient

Test whether etcd is usable:

[root@master ~]# export ETCDCTL_API=3

[root@master ~]# etcdctl --cacert=/etc/etcd/ssl/etcd-root-ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints=https://192.168.1.113:2379 endpoint health
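Typing the three TLS flags for every etcdctl call is error-prone. Below is a small wrapper sketch that assumes the certificate paths above. `etcdctl_tls`, `ETCD_CLIENT_BIN` and `ETCD_CLIENT_ENDPOINTS` are local conventions invented for this example, not etcdctl settings:

```bash
# etcdctl_tls ARGS...
# Runs etcdctl with the v3 API and this guide's TLS flags, followed by ARGS.
# ETCD_CLIENT_BIN (default etcdctl) and ETCD_CLIENT_ENDPOINTS can be overridden.
etcdctl_tls() {
  ETCDCTL_API=3 "${ETCD_CLIENT_BIN:-etcdctl}" \
    --cacert=/etc/etcd/ssl/etcd-root-ca.pem \
    --cert=/etc/etcd/ssl/etcd.pem \
    --key=/etc/etcd/ssl/etcd-key.pem \
    --endpoints="${ETCD_CLIENT_ENDPOINTS:-https://192.168.1.113:2379}" \
    "$@"
}
```

Example: `etcdctl_tls endpoint health`. For a write/read round trip: `etcdctl_tls put /smoke-test ok && etcdctl_tls get /smoke-test && etcdctl_tls del /smoke-test`.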


4. Install Docker

Download the Docker packages:

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

Install Docker:

[root@master ~]# yum localinstall *.rpm -y

Enable Docker at boot and start it:

[root@master ~]# systemctl enable docker

[root@master ~]# systemctl start docker

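Adjust the Docker service configuration (accept all forwarded traffic in the iptables FORWARD chain once Docker has started, and use the overlay2 storage driver):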

sed -i '/ExecStart=\/usr\/bin\/dockerd/i\ExecStartPost=\/sbin/iptables -I FORWARD -s 0.0.0.0\/0 -d 0.0.0.0\/0 -j ACCEPT' /usr/lib/systemd/system/docker.service

sed -i '/dockerd/s/$/ \-\-storage\-driver\=overlay2/g' /usr/lib/systemd/system/docker.service
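Editing /usr/lib/systemd/system/docker.service in place works, but that file belongs to the docker-ce package and can be replaced on upgrade. Below is a sketch of the same two changes as a systemd drop-in. It assumes the packaged unit starts a bare /usr/bin/dockerd; the file name 10-k8s.conf and the function name are arbitrary. Use either this or the two sed commands above, not both:

```bash
# write_docker_dropin [DIR]
# Writes a drop-in that (1) accepts forwarded traffic after dockerd starts and
# (2) starts dockerd with the overlay2 storage driver.
write_docker_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  cat > "$dir/10-k8s.conf" <<'EOF'
[Service]
ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -d 0.0.0.0/0 -j ACCEPT
# an empty ExecStart= clears the packaged command before it is redefined
ExecStart=
ExecStart=/usr/bin/dockerd --storage-driver=overlay2
EOF
}
```

Usage: `write_docker_dropin && systemctl daemon-reload && systemctl restart docker`. Afterwards `docker info | grep 'Storage Driver'` should report overlay2.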

Restart Docker:

systemctl daemon-reload

systemctl restart docker

5. Install Kubernetes

Download the server binaries (kubernetes-server-linux-amd64.tar.gz) from:

https://github.com/kubernetes/kubernetes/

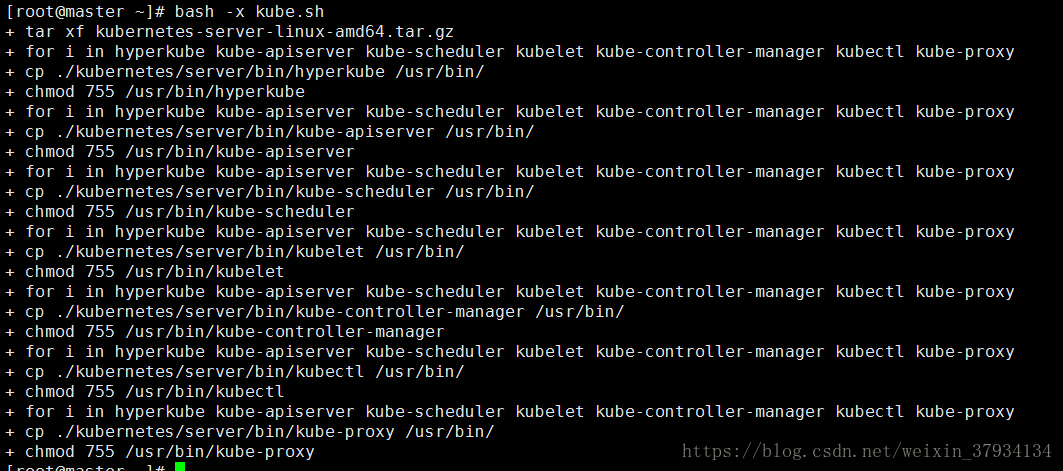

[root@master ~]# cat kube.sh

tar xf kubernetes-server-linux-amd64.tar.gz

for i in hyperkube kube-apiserver kube-scheduler kubelet kube-controller-manager kubectl kube-proxy;do

cp ./kubernetes/server/bin/$i /usr/bin/

chmod 755 /usr/bin/$i

done

[root@master ~]# bash -x kube.sh

6. Generate and distribute the Kubernetes certificates

Create the certificate directory:

[root@master ~]# mkdir /root/kubernets_ssl && cd /root/kubernets_ssl

[root@master kubernets_ssl]#

k8s-root-ca-csr.json (root CA signing request):

cat > k8s-root-ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 4096
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

k8s-gencert.json (signing profiles):

cat > k8s-gencert.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

kubernetes-csr.json (kube-apiserver server certificate):

cat > kubernetes-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "192.168.1.113",
    "localhost",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

kube-proxy-csr.json (kube-proxy client certificate):

cat > kube-proxy-csr.json << EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

admin-csr.json (admin client certificate, used by kubectl):

cat > admin-csr.json << EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

Generate the Kubernetes certificates:

cfssl gencert --initca=true k8s-root-ca-csr.json | cfssljson --bare k8s-root-ca

for targetName in kubernetes admin kube-proxy; do

cfssl gencert --ca k8s-root-ca.pem --ca-key k8s-root-ca-key.pem --config k8s-gencert.json --profile kubernetes $targetName-csr.json | cfssljson --bare $targetName

done

# generate the bootstrap configuration

export KUBE_APISERVER="https://127.0.0.1:6443"

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

echo "Token: ${BOOTSTRAP_TOKEN}"

cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

Configure the kubeconfig files (certificate and credential information):

[root@master kubernets_ssl]# export KUBE_APISERVER="https://127.0.0.1:6443"

# set the cluster parameters
kubectl config set-cluster kubernetes \
--certificate-authority=k8s-root-ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig

# set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
--token=${BOOTSTRAP_TOKEN} \
--kubeconfig=bootstrap.kubeconfig

# set the context parameters
kubectl config set-context default \
--cluster=kubernetes \
--user=kubelet-bootstrap \
--kubeconfig=bootstrap.kubeconfig

# switch to the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# echo "Create kube-proxy kubeconfig..."
kubectl config set-cluster kubernetes \
--certificate-authority=k8s-root-ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig

# kube-proxy
kubectl config set-credentials kube-proxy \
--client-certificate=kube-proxy.pem \
--client-key=kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig

# kube-proxy_config
kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
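kube-apiserver accepts the bootstrap token only if the value embedded in bootstrap.kubeconfig equals the first column of token.csv. Both come from ${BOOTSTRAP_TOKEN}, so they drift apart if that variable is regenerated in between (for example, after reopening the shell). Below is a sketch of a consistency check. It assumes the layout that `kubectl config set-credentials --token` writes: a `token:` field under the user entry. The function name is illustrative:

```bash
# bootstrap_token_matches TOKEN_CSV KUBECONFIG
# Succeeds if the first field of TOKEN_CSV equals the "token:" value in KUBECONFIG.
bootstrap_token_matches() {
  local csv_token cfg_token
  csv_token=$(head -n 1 "$1" | cut -d, -f1)
  # first "token:" field in the kubeconfig, indentation ignored
  cfg_token=$(awk '$1 == "token:" { print $2; exit }' "$2")
  [ -n "$csv_token" ] && [ "$csv_token" = "$cfg_token" ]
}
```

Example: `bootstrap_token_matches token.csv bootstrap.kubeconfig || echo "token mismatch: regenerate one of the files"`.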

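# generate the advanced audit policy
cat > audit-policy.yaml <<EOF
# Log all requests at the Metadata level.
apiVersion: audit.k8s.io/v1beta1
kind: Policy
rules:
- level: Metadata
EOF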

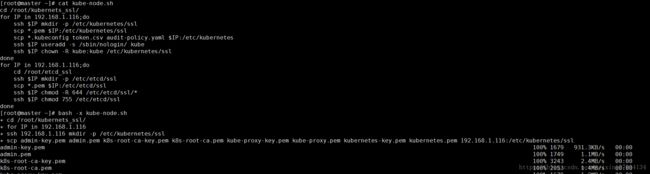

# distribute the Kubernetes certificates

cd /root/kubernets_ssl

mkdir -p /etc/kubernetes/ssl

cp *.pem /etc/kubernetes/ssl

cp *.kubeconfig token.csv audit-policy.yaml /etc/kubernetes

useradd -s /sbin/nologin -M kube

chown -R kube:kube /etc/kubernetes/ssl

# generate the kubectl configuration
cd /root/kubernets_ssl
kubectl config set-cluster kubernetes \
--certificate-authority=/etc/kubernetes/ssl/k8s-root-ca.pem \
--embed-certs=true \
--server=https://127.0.0.1:6443

kubectl config set-credentials admin \
--client-certificate=/etc/kubernetes/ssl/admin.pem \
--embed-certs=true \
--client-key=/etc/kubernetes/ssl/admin-key.pem

kubectl config set-context kubernetes \
--cluster=kubernetes \
--user=admin

kubectl config use-context kubernetes

# set log directory permissions

mkdir -p /var/log/kube-audit /usr/libexec/kubernetes

chown -R kube:kube /var/log/kube-audit /usr/libexec/kubernetes

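chmod -R 755 /var/log/kube-audit /usr/libexec/kubernetes

7. Master node configuration

Once the certificates and binaries are in place, all that remains is to edit the configuration files (located in /etc/kubernetes) and start the related components.

cd /etc/kubernetes

*config (common configuration)*

cat > /etc/kubernetes/config <<EOF
###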

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=2"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://127.0.0.1:8080"

EOF

*apiserver configuration*

cat > /etc/kubernetes/apiserver <<EOF
…
--endpoint-reconciler-type=lease \
--runtime-config=batch/v2alpha1=true \
--anonymous-auth=false \
--kubelet-https=true \
--enable-bootstrap-token-auth \
--token-auth-file=/etc/kubernetes/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
--tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
--client-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
--service-account-key-file=/etc/kubernetes/ssl/k8s-root-ca-key.pem \
--etcd-quorum-read=true \
--storage-backend=etcd3 \
--etcd-cafile=/etc/etcd/ssl/etcd-root-ca.pem \
--etcd-certfile=/etc/etcd/ssl/etcd.pem \
--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
--enable-swagger-ui=true \
--apiserver-count=3 \
--audit-policy-file=/etc/kubernetes/audit-policy.yaml \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/var/log/kube-audit/audit.log \
--event-ttl=1h "
EOF

# The address to change here is the etcd one (KUBE_ETCD_SERVERS); for an etcd cluster, list the endpoints separated by commas

*controller-manager configuration*

cat > /etc/kubernetes/controller-manager <<EOF
…
--service-cluster-ip-range=10.254.0.0/16 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
--cluster-signing-key-file=/etc/kubernetes/ssl/k8s-root-ca-key.pem \
--service-account-private-key-file=/etc/kubernetes/ssl/k8s-root-ca-key.pem \
--root-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
--leader-elect=true \
--node-monitor-grace-period=40s \
--node-monitor-period=5s \
--pod-eviction-timeout=60s"
EOF

*scheduler configuration*

cat >scheduler <<EOF
###

# kubernetes scheduler config

# default config should be adequate

# Add your own!

KUBE_SCHEDULER_ARGS="--leader-elect=true --address=0.0.0.0"

EOF

Create the systemd unit files

The component configuration files are ready; next, create the systemd unit files for the components.

###kube-apiserver.service unit file###

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/apiserver

User=root

ExecStart=/usr/bin/kube-apiserver \
$KUBE_LOGTOSTDERR \
$KUBE_LOG_LEVEL \
$KUBE_ETCD_SERVERS \
$KUBE_API_ADDRESS \
$KUBE_API_PORT \
$KUBELET_PORT \
$KUBE_ALLOW_PRIV \
$KUBE_SERVICE_ADDRESSES \
$KUBE_ADMISSION_CONTROL \
$KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

###kube-controller-manager.service unit file###

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/controller-manager

User=root

ExecStart=/usr/bin/kube-controller-manager \
$KUBE_LOGTOSTDERR \
$KUBE_LOG_LEVEL \
$KUBE_MASTER \
$KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

###kube-scheduler.service unit file###

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler Plugin

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/scheduler

User=root

ExecStart=/usr/bin/kube-scheduler \
$KUBE_LOGTOSTDERR \
$KUBE_LOG_LEVEL \
$KUBE_MASTER \
$KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Start kube-apiserver, kube-controller-manager and kube-scheduler:

systemctl daemon-reload

systemctl start kube-apiserver

systemctl start kube-controller-manager

systemctl start kube-scheduler

Enable them at boot:

systemctl enable kube-apiserver

systemctl enable kube-controller-manager

systemctl enable kube-scheduler

Verify:

[root@master kubernetes]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

# Create the ClusterRoleBinding

Because kubelet uses TLS bootstrapping, and under the RBAC policy the user it authenticates as (kubelet-bootstrap) has no permission to access the API at all, a ClusterRoleBinding that grants this user the system:node-bootstrapper ClusterRole must be created in the cluster beforehand:

kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap
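The same binding can also be kept as a manifest and applied declaratively, which makes it easy to re-apply or keep under version control. Below is a sketch that prints the equivalent ClusterRoleBinding (rbac.authorization.k8s.io/v1, available in Kubernetes 1.10). The function name is illustrative:

```bash
# kubelet_bootstrap_crb: print a ClusterRoleBinding manifest equivalent to the
# "kubectl create clusterrolebinding kubelet-bootstrap ..." command
kubelet_bootstrap_crb() {
  cat <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: kubelet-bootstrap
EOF
}
```

Usage: `kubelet_bootstrap_crb | kubectl apply -f -`.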

To delete it:

kubectl delete clusterrolebinding kubelet-bootstrap

Node configuration

1. Install Docker

Download the Docker packages:

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

Install Docker:

[root@node ~]# yum localinstall *.rpm -y

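Enable Docker at boot and start it:

[root@node ~]# systemctl enable docker

[root@node ~]# systemctl start docker

Adjust the Docker service configuration (accept all forwarded traffic in the iptables FORWARD chain once Docker has started, and use the overlay2 storage driver):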

sed -i '/ExecStart=\/usr\/bin\/dockerd/i\ExecStartPost=\/sbin/iptables -I FORWARD -s 0.0.0.0\/0 -d 0.0.0.0\/0 -j ACCEPT' /usr/lib/systemd/system/docker.service

sed -i '/dockerd/s/$/ \-\-storage\-driver\=overlay2/g' /usr/lib/systemd/system/docker.service

Restart Docker:

systemctl daemon-reload

systemctl restart docker

2. Distribute the certificates

From the master, distribute the Kubernetes and etcd certificates to the node.

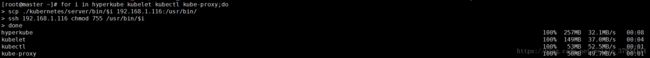
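Below is a scripted sketch of copying the node's files from the master, assuming the root SSH access set up by ssh.sh and the paths used in this guide. For simplicity it copies the whole /etc/kubernetes and /etc/etcd/ssl directories, which also puts master-only files such as the CA keys on the node; trim the list in practice. `distribute_to_node`, `run_or_echo` and the `DRY_RUN` switch are hypothetical helpers that let you preview the commands:

```bash
# run_or_echo CMD...: print CMD when DRY_RUN is set, otherwise execute it
run_or_echo() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# distribute_to_node NODE_IP: copy the node binaries and configuration from this master
distribute_to_node() {
  local node="$1" bin
  for bin in hyperkube kubelet kubectl kube-proxy; do
    run_or_echo scp "/usr/bin/$bin" "root@$node:/usr/bin/$bin"
  done
  # /etc/etcd does not exist on the node unless etcd is installed there
  run_or_echo ssh "root@$node" mkdir -p /etc/etcd
  run_or_echo scp -r /etc/kubernetes "root@$node:/etc/"
  run_or_echo scp -r /etc/etcd/ssl "root@$node:/etc/etcd/"
}
```

Preview with `DRY_RUN=1 distribute_to_node 192.168.1.116`, then run `distribute_to_node 192.168.1.116` to copy.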

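From the master, copy hyperkube, kubelet, kubectl and kube-proxy to the node, then prepare the log directories there (the kube user must exist on the node as well, otherwise the chown fails):

ssh root@192.168.1.116 'id kube >/dev/null 2>&1 || useradd -s /sbin/nologin -M kube' &&

ssh root@192.168.1.116 mkdir -p /var/log/kube-audit /usr/libexec/kubernetes &&

ssh root@192.168.1.116 chown -R kube:kube /var/log/kube-audit /usr/libexec/kubernetes &&

ssh root@192.168.1.116 chmod -R 755 /var/log/kube-audit /usr/libexec/kubernetes

3. Node configuration

On the node, the configuration files also live in the /etc/kubernetes directory.

The node only needs three configuration files (config, kubelet and proxy), modified as follows:

*config:*

cat > /etc/kubernetes/config <<EOF
###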

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=2"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

# KUBE_MASTER="--master=http://127.0.0.1:8080"

EOF

*# kubelet configuration*

cat >/etc/kubernetes/kubelet <<EOF
…
--cluster-dns=10.254.0.2 \
--resolv-conf=/etc/resolv.conf \
--experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \
--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
--cert-dir=/etc/kubernetes/ssl \
--cluster-domain=cluster.local. \
--hairpin-mode promiscuous-bridge \
--serialize-image-pulls=false \
--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0"
EOF

# The IP address and hostname in this file are the node's own

Unit file:

vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet \
$KUBE_LOGTOSTDERR \
$KUBE_LOG_LEVEL \
$KUBELET_API_SERVER \
$KUBELET_ADDRESS \
$KUBELET_PORT \
$KUBELET_HOSTNAME \
$KUBE_ALLOW_PRIV \
$KUBELET_ARGS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

[root@node log]# mkdir /var/lib/kubelet -p

Start kubelet:

sed -i 's#127.0.0.1#192.168.1.113#g' /etc/kubernetes/bootstrap.kubeconfig

# the address here is the master's IP
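The sed above rewrites every occurrence of 127.0.0.1 in the file. Below is a narrower sketch that replaces only the value of the `server:` field, whatever address it currently holds, assuming GNU sed. `set_kubeconfig_server` is an illustrative name. The kubectl route (kubectl was copied to the node earlier) is `kubectl config set-cluster kubernetes --server=https://192.168.1.113:6443 --kubeconfig=/etc/kubernetes/bootstrap.kubeconfig`:

```bash
# set_kubeconfig_server FILE URL
# Replaces the value of every "server:" field in FILE with URL, then prints the result line(s).
set_kubeconfig_server() {
  local file="$1" url="$2"
  # keep the original indentation and key (\1), swap only the value after "server:"
  sed -i -E "s#^([[:space:]]*server:).*#\1 ${url}#" "$file"
  grep -E '^[[:space:]]*server:' "$file"
}
```

Example: `set_kubeconfig_server /etc/kubernetes/bootstrap.kubeconfig https://192.168.1.113:6443`.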

systemctl daemon-reload

systemctl restart kubelet

systemctl enable kubelet

*# modify the kube-proxy configuration*

cat >/etc/kubernetes/proxy <<EOF
###

# kubernetes proxy config

# default config should be adequate

# Add your own!

KUBE_PROXY_ARGS="--bind-address=192.168.1.116 \
--hostname-override=node1 \
--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \
--cluster-cidr=10.254.0.0/16"

EOF

*kube-proxy unit file*

Path: /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy \
$KUBE_LOGTOSTDERR \
$KUBE_LOG_LEVEL \
$KUBE_MASTER \
$KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

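WantedBy=multi-user.target

4. Create the nginx proxy

At this point every node should connect to its local nginx proxy, and nginx load-balances the requests across all API servers. The nginx proxy configuration follows.

The nginx proxy can also be skipped; in that case, just change the API server address in bootstrap.kubeconfig and kube-proxy.kubeconfig.

Note: a kubelet running on the master node itself does not need nginx load balancing; skip this step and set the apiserver address in kubelet.kubeconfig and kube-proxy.kubeconfig to the current master IP on port 6443.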

[root@node log]# mkdir -p /etc/nginx

cat > /etc/nginx/nginx.conf <<EOF

error_log stderr notice;

worker_processes auto;

events {

multi_accept on;

use epoll;

worker_connections 1024;

}

stream {

upstream kube_apiserver {

least_conn;

server 192.168.1.113:6443 weight=20 max_fails=1 fail_timeout=10s;

# the server lines above list the IPs of the masters being proxied

}

server {

listen 0.0.0.0:6443;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

EOF

[root@node log]# chmod +r /etc/nginx/nginx.conf

For reliability and convenience, nginx on the node runs in Docker and is supervised by systemd. First create the container; with --net=host, nginx listens directly on the host's port 6443 as set in nginx.conf. The systemd unit follows:

[root@node ~]# docker run -it -d -v /etc/nginx:/etc/nginx --name nginx-proxy --net=host --restart=on-failure:5 --memory=512M nginx:1.13.5-alpine

cat >/etc/systemd/system/nginx-proxy.service <<EOF

[Unit]

Description=kubernetes apiserver docker wrapper

Wants=docker.socket

After=docker.service

[Service]

User=root

PermissionsStartOnly=true

ExecStart=/usr/bin/docker start nginx-proxy

Restart=always

RestartSec=15s

TimeoutStartSec=30s

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl start nginx-proxy

systemctl enable nginx-proxy

[root@node ~]# sed -i 's#192.168.1.113#127.0.0.1#g' /etc/kubernetes/bootstrap.kubeconfig

Start kubelet

Before starting kubelet, it is best to restart kube-proxy first.

systemctl daemon-reload

systemctl restart kube-proxy

systemctl enable kube-proxy

systemctl restart kubelet

systemctl enable kubelet
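With TLS bootstrapping, the kubelet first submits a certificate signing request (CSR). The node only registers after that CSR is approved, unless automatic approval has been configured, which this guide does not do. On the master, `kubectl get csr` lists pending requests and `kubectl certificate approve` approves them. Below is a sketch of a filter that picks out the pending names, assuming the default table layout whose last column is the condition. `pending_csrs` is an illustrative name:

```bash
# pending_csrs
# Reads "kubectl get csr --no-headers" output on stdin and prints the name (first column)
# of every request whose last column, the condition, is exactly "Pending".
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}
```

Usage on the master: `kubectl get csr --no-headers | pending_csrs | xargs -r kubectl certificate approve`. The node should then appear in `kubectl get nodes`.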