ElasticSearch 7.1.1 Cluster Setup (Part 2)


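1) Write the ES cluster startup script

The script calls `bin/elasticsearch` by a relative path, so create and run it from the Elasticsearch installation directory:

```bash
vim es-start.sh
```

```bash
#!/bin/bash
# Start three nodes of the cluster "zhangchi" on this host.
# Each node gets its own node.name and data directory; -d runs it as a daemon.
bin/elasticsearch -E node.name=node-1 -E cluster.name=zhangchi -E path.data=node01_data -d
bin/elasticsearch -E node.name=node-2 -E cluster.name=zhangchi -E path.data=node02_data -d
bin/elasticsearch -E node.name=node-3 -E cluster.name=zhangchi -E path.data=node03_data -d
echo "es-cluster started..."
```

Save and quit vim with `:wq`, then make the script executable:

```bash
chmod u+x es-start.sh
```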
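The three `bin/elasticsearch` lines in es-start.sh differ only in the node index. The Python sketch below is not part of the original setup. It builds the same argument lists for any node count. `node_commands` is a hypothetical helper, and it assumes you run it from the Elasticsearch installation directory, like the shell script.

```python
import shlex
from typing import List


def node_commands(count: int, cluster: str = "zhangchi") -> List[List[str]]:
    """Build one bin/elasticsearch argv per node, mirroring es-start.sh.

    Hypothetical helper: every node shares cluster.name but gets its own
    node.name and path.data, and -d starts it as a daemon.
    """
    commands = []
    for i in range(1, count + 1):
        commands.append([
            "bin/elasticsearch",
            "-E", f"node.name=node-{i}",
            "-E", f"cluster.name={cluster}",
            "-E", f"path.data=node{i:02d}_data",  # node01_data, node02_data, ...
            "-d",
        ])
    return commands


if __name__ == "__main__":
    # Only prints the commands. Launching them (e.g. subprocess.run(argv, check=True))
    # would have to happen as a non-root user inside the Elasticsearch directory.
    for argv in node_commands(3):
        print(shlex.join(argv))
```

Printing the commands first lets you compare them with es-start.sh before you add a fourth node.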

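2) Install a local JDK

Switch to the czhang user, extract the JDK (here into /soft/jdk1.8.0_221), and set the environment variables in ~/.bashrc:

```bash
su - czhang
vim ~/.bashrc
```

```bash
export JAVA_HOME=/soft/jdk1.8.0_221/
export PATH=$PATH:$JAVA_HOME/bin
```

Run `source ~/.bashrc` (or log in again) so the variables take effect in the current shell.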
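After `source ~/.bashrc`, a quick sanity check is whether JAVA_HOME is set and $JAVA_HOME/bin is on PATH. The sketch below only inspects the variables, not the JDK files themselves, and `java_env_problems` is a hypothetical name. Running `java -version` is still the direct check.

```python
import os
from typing import List, Mapping, Optional


def java_env_problems(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return problems with JAVA_HOME/PATH; an empty list means they look consistent.

    Only inspects the variables; it does not check that the JDK files exist.
    """
    env = os.environ if env is None else env
    problems: List[str] = []
    java_home = env.get("JAVA_HOME", "")
    if not java_home:
        problems.append("JAVA_HOME is not set")
        return problems
    expected = os.path.normpath(os.path.join(java_home, "bin"))
    # normpath folds the double slash produced by JAVA_HOME=/soft/jdk1.8.0_221/ plus /bin
    entries = [os.path.normpath(p) for p in env.get("PATH", "").split(os.pathsep) if p]
    if expected not in entries:
        problems.append(f"{expected} is not on PATH")
    return problems
```

Calling `java_env_problems()` with no argument checks the current process environment.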

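3) Configure Kibana

In Kibana's config/kibana.yml, change the bind address from the default localhost so that the host machine can reach Kibana, and switch the UI to Chinese:

```yaml
# Listen on all network interfaces
server.host: "0.0.0.0"
# Use the Chinese UI
i18n.locale: "zh-CN"
```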

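Supplement: the nvm steps missing from the previous article

These steps do four things:

- point nvm at a Node.js download mirror
- install Node.js (version 12.10.0 here)
- switch the npm registry to the taobao mirror with nrm
- install the `json` CLI globally, which makes JSON returned by curl easier to read

```bash
export NVM_NODEJS_ORG_MIRROR=https://npm.taobao.org/mirrors/node/
nvm install 12.10.0
npm i -g nrm
nrm use taobao
npm i -g json
```

Either of the following pretty-prints the JSON returned by Elasticsearch:

```bash
curl 127.0.0.1:9200 | json
curl 127.0.0.1:9200 | python -m json.tool
```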
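If neither `json` nor a shell pipe is handy, you can do the same formatting in a few lines of Python. This sketch uses only the standard library. `pretty` and `fetch` are hypothetical names, and `fetch` assumes a node is listening on 127.0.0.1:9200.

```python
import json
import urllib.request


def pretty(raw: str, indent: int = 2) -> str:
    """Re-indent a JSON string, like `| json` or `| python -m json.tool`."""
    # ensure_ascii=False keeps non-ASCII text (e.g. Chinese) readable instead of \u escapes
    return json.dumps(json.loads(raw), indent=indent, ensure_ascii=False)


def fetch(url: str = "http://127.0.0.1:9200") -> str:
    """GET a URL and return the body as text (needs a running node)."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")
```

With the cluster running, `print(pretty(fetch()))` shows the same node banner as the curl commands above.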

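4) Configure the ES JVM parameters

In config/jvm.options, set the initial and maximum heap size to 512 MB. Keep the two values equal: Elasticsearch recommends it, and its heap size bootstrap check requires it in production mode.

```
-Xms512m
-Xmx512m
```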
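All three local nodes start from the same installation directory, so they all read this one config/jvm.options file, and a mismatch between -Xms and -Xmx affects every node. Below is a minimal Python check. It assumes the file contains plain -Xms/-Xmx lines, optionally with the `8:` or `9-:` version prefixes that jvm.options allows. The function names are hypothetical.

```python
import re

# Heap lines such as "-Xms512m", optionally prefixed with a JVM version range like "8:" or "9-:"
_HEAP_LINE = re.compile(r"^(?:\d+(?:-\d*)?:)?-Xm([sx])(\d+)([kKmMgG]?)$")
_UNIT = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def heap_sizes(jvm_options: str) -> dict:
    """Return {"Xms": bytes, "Xmx": bytes} for the heap flags found in jvm.options text."""
    sizes = {}
    for line in jvm_options.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = _HEAP_LINE.match(line)
        if match:
            flag, number, unit = match.groups()
            sizes["Xm" + flag] = int(number) * _UNIT[unit.lower()]
    return sizes


def heap_is_consistent(jvm_options: str) -> bool:
    """True when both flags are present and initial heap == maximum heap."""
    sizes = heap_sizes(jvm_options)
    return "Xms" in sizes and sizes.get("Xms") == sizes.get("Xmx")
```

Comparing byte counts rather than strings means `-Xms1g` and `-Xmx1024m` count as equal.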

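5) Configure Logstash to import the movies data for ES to query

```bash
cd logstash
vim config/logstash.conf
```

```
input {
  file {
    # movies.csv from the cloud-drive download, extracted into /soft
    path => "/soft/movies.csv"
    # Read from the top; with sincedb_path set to /dev/null the read position
    # is not persisted, so every run re-reads the whole file
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}

filter {
  csv {
    separator => ","
    columns => ["id","content","genre"]
  }
  mutate {
    # "Adventure|Animation" -> ["Adventure", "Animation"]
    split => { "genre" => "|" }
    remove_field => ["path", "host","@timestamp","message"]
  }
  mutate {
    # "Toy Story (1995)" -> ["Toy Story ", "1995)"]
    split => ["content", "("]
    add_field => { "title" => "%{[content][0]}"}
    add_field => { "year" => "%{[content][1]}"}
  }
  mutate {
    convert => {
      "year" => "integer"
    }
    strip => ["title"]
    remove_field => ["path", "host","@timestamp","message","content"]
  }
}

output {
  elasticsearch {
    hosts => "http://localhost:9200"
    index => "movies"
    # Use the movie id as the document id, so re-importing overwrites instead of duplicating
    document_id => "%{id}"
  }
  stdout {}
}
```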
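To see what the filter chain does to a single row, here is a Python sketch that mimics it with the standard library. It is an approximation, not Logstash itself. `transform` and `ruby_to_i` are hypothetical names. The sketch assumes that the integer conversion keeps the leading digits of a value like "1995)", the way Ruby's `String#to_i` does.

```python
import csv
import io
import re


def ruby_to_i(text: str) -> int:
    """Approximate Ruby's String#to_i: leading whitespace, optional sign, leading digits; else 0."""
    match = re.match(r"\s*([+-]?\d+)", text)
    return int(match.group(1)) if match else 0


def transform(line: str) -> dict:
    """Mimic the csv + mutate filters above for one line of movies.csv."""
    movie_id, content, genre = next(csv.reader(io.StringIO(line)))[:3]
    parts = content.split("(")            # split => ["content", "("]
    title = parts[0].strip()              # %{[content][0]} followed by strip => ["title"]
    # %{[content][1]} plus convert to integer; None when there is no "(" (a simplification)
    year = ruby_to_i(parts[1]) if len(parts) > 1 else None
    return {
        "id": movie_id,                   # also used as document_id
        "title": title,
        "year": year,
        "genre": genre.split("|"),        # split => { "genre" => "|" }
    }
```

The sketch also exposes a limitation of the config. When a title itself contains "(", `[content][1]` is not the year segment. For example, `transform('29,"City of Lost Children, The (Cité des enfants perdus, La) (1995)",Adventure')` yields a year of 0.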

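Start the ES cluster

Run the startup script as a non-root user, because Elasticsearch refuses to start as root:

```bash
./es-start.sh
```

Start Kibana. `nohup ... &` runs it in the background and keeps it alive after you log out:

```bash
nohup bin/kibana &
```

Start Logstash:

```bash
bin/logstash -f config/logstash.conf
```

ES listens on port 9200 and Kibana on port 5601. Once Logstash has finished importing the data, stop it with Ctrl+C. The file input keeps watching the file and does not exit on its own.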
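Right after es-start.sh returns, the three nodes may not have formed a cluster yet. Here is a sketch of a readiness check in Python. It polls the `_cluster/health` API until three nodes have joined. The helper names, the 60 s timeout, and treating a yellow status as ready are all assumptions.

```python
import json
import time
import urllib.request


def cluster_ready(health: dict, expected_nodes: int = 3) -> bool:
    """True once all expected nodes have joined and the status is not red."""
    return (health.get("number_of_nodes", 0) >= expected_nodes
            and health.get("status") in ("green", "yellow"))


def wait_for_cluster(base_url: str = "http://127.0.0.1:9200", expected_nodes: int = 3,
                     timeout: float = 60.0, interval: float = 2.0) -> dict:
    """Poll _cluster/health until cluster_ready() is true or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=5) as resp:
                health = json.load(resp)
            if cluster_ready(health, expected_nodes):
                return health
        except OSError:
            # Connection refused or timed out (urllib.error.URLError is an OSError): keep waiting
            pass
        if time.monotonic() >= deadline:
            raise TimeoutError(f"cluster at {base_url} not ready after {timeout}s")
        time.sleep(interval)
```

`_cluster/health` also accepts `wait_for_nodes` and `timeout` parameters, e.g. `curl '127.0.0.1:9200/_cluster/health?wait_for_nodes=3&timeout=30s'`, which lets Elasticsearch do the waiting itself.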

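Check the cluster state:

```
[root@test ~]# curl 127.0.0.1:9200/_cat/nodes
192.168.200.56 33 73 25 2.37 1.37 0.54 mdi - node-2
192.168.200.56 38 73 26 2.37 1.37 0.54 mdi * node-3
192.168.200.56 40 73 25 2.37 1.37 0.54 mdi - node-1
[root@test ~]#
```

All three nodes have joined the cluster. The columns are ip, heap.percent, ram.percent, cpu, load_1m, load_5m, load_15m, node.role, master and name; append `?v` to print this header. `mdi` means a node is master-eligible, data and ingest. `*` marks the elected master, node-3 here.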
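When you script this check, you can parse the same text output to confirm the node count and find the elected master. Here is a Python sketch for the default column set shown above. `parse_cat_nodes` and `CatNode` are hypothetical names. For scripts, requesting `?format=json` is usually easier than parsing text.

```python
from typing import List, NamedTuple


class CatNode(NamedTuple):
    ip: str
    heap_percent: int
    ram_percent: int
    cpu: int
    roles: str
    is_master: bool
    name: str


def parse_cat_nodes(text: str) -> List[CatNode]:
    """Parse the default output of GET _cat/nodes (with or without the ?v header)."""
    nodes = []
    for line in text.strip().splitlines():
        cols = line.split()
        if len(cols) < 10 or cols[0] == "ip":
            continue  # skip prompts, blank lines and the header row
        # Columns: ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name
        nodes.append(CatNode(
            ip=cols[0],
            heap_percent=int(cols[1]),
            ram_percent=int(cols[2]),
            cpu=int(cols[3]),
            roles=cols[7],
            is_master=cols[8] == "*",
            name=" ".join(cols[9:]),
        ))
    return nodes
```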

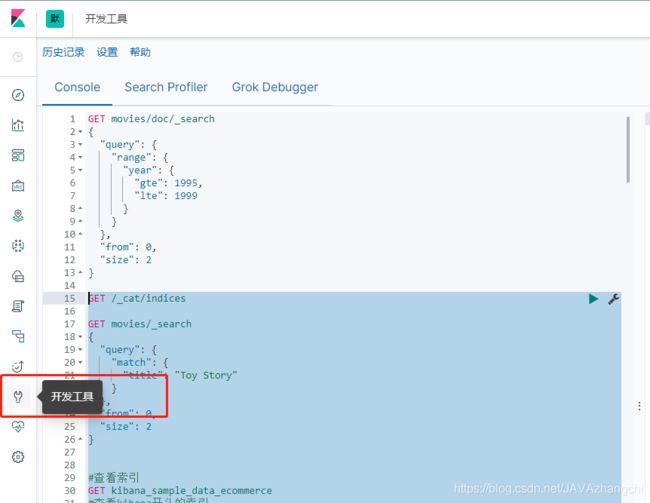

The screenshot above shows the Kibana landing page. You can run all of the REST API requests below in Dev Tools.

```
GET /_cat/indices

GET movies/_search
{
  "query": {
    "match": {
      "title": "Toy Story"
    }
  },
  "from": 0,
  "size": 2
}
```
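Outside Kibana, the same search is an HTTP request with a JSON body. Here is a standard-library Python sketch. The helper names are hypothetical, and the sketch assumes a node on 127.0.0.1:9200 with the movies index imported by Logstash.

```python
import json
import urllib.request


def match_query(field: str, text: str, from_: int = 0, size: int = 2) -> dict:
    """Build the same body as the Dev Tools search request above."""
    return {"query": {"match": {field: text}}, "from": from_, "size": size}


def search(index: str, body: dict, base_url: str = "http://127.0.0.1:9200") -> dict:
    """POST the body to <index>/_search; Elasticsearch 7 requires the JSON Content-Type header."""
    request = urllib.request.Request(
        f"{base_url}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as resp:
        return json.load(resp)


def hit_titles(response: dict) -> list:
    """Pull the title field out of each hit's _source."""
    return [hit["_source"].get("title") for hit in response["hits"]["hits"]]
```

`hit_titles(search("movies", match_query("title", "Toy Story")))` returns at most two titles. `match` analyzes the query text and combines the terms with OR by default, so documents containing only "toy" or only "story" can also match.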

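```
# View an index (settings, mappings and aliases); requires the Kibana sample eCommerce data
GET kibana_sample_data_ecommerce

# List indices whose names start with kibana, with a header row, sorted by index name
GET /_cat/indices/kibana*?v&s=index

# List indices whose health is green
GET /_cat/indices?v&health=green

# List indices sorted by document count, ascending
GET /_cat/indices?v&s=docs.count:asc

# View node information
GET /_cat/nodes
```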
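The _cat requests above combine a few query parameters:

- `v` prints a header row.
- `s` sorts by a column; append `:asc` or `:desc` to set the direction.
- `health` filters indices by status.

Here is a small Python sketch that assembles such paths, e.g. for use with curl. `cat_url` is a hypothetical helper.

```python
from urllib.parse import quote


def cat_url(endpoint: str, pattern: str = "", verbose: bool = True, **params: str) -> str:
    """Build a _cat path such as /_cat/indices/kibana*?v&s=index.

    verbose adds the bare `v` flag (header row); other keyword arguments become
    key=value pairs, e.g. s="docs.count:asc" (sort) or health="green" (filter).
    """
    path = f"/_cat/{endpoint}" + (f"/{pattern}" if pattern else "")
    query = ["v"] if verbose else []
    query += [f"{key}={quote(str(value), safe=':,*')}" for key, value in params.items()]
    return path + ("?" + "&".join(query) if query else "")
```

For example, `cat_url("indices", s="docs.count:asc")` reproduces the sort request above, and `cat_url("nodes", verbose=False)` gives the plain `/_cat/nodes`.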

Starting the ES cluster with Docker

1) Write the docker-compose file

```yaml
version: '2.2'
services:
  # Web UI for managing the cluster
  cerebro:
    image: lmenezes/cerebro
    container_name: cerebro
    ports:
      - "9000:9000"
    command:
      - -Dhosts.0.host=http://elasticsearch:9200
    networks:
      - elk2
  kibana:
    image: kibana:7.1.1
    container_name: kibana7
    environment:
      - I18N_LOCALE=zh-CN
      - XPACK_GRAPH_ENABLED=true
      - TIMELION_ENABLED=true
      - XPACK_MONITORING_COLLECTION_ENABLED="true"
    ports:
      - "5601:5601"
    networks:
      - elk2
  elasticsearch:
    image: elasticsearch:7.1.1
    container_name: es7_01
    environment:
      - cluster.name=geektime
      - node.name=es7_01
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      # Every node lists all three container names for discovery and the initial master election
      - discovery.seed_hosts=es7_01,es7_02,es7_03
      - cluster.initial_master_nodes=es7_01,es7_02,es7_03
    # Unlimited memlock lets bootstrap.memory_lock succeed
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - es7data1:/usr/share/elasticsearch/data
    # Only this node publishes 9200 to the host
    ports:
      - 9200:9200
    networks:
      - elk2
  elasticsearch2:
    image: elasticsearch:7.1.1
    container_name: es7_02
    environment:
      - cluster.name=geektime
      - node.name=es7_02
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - discovery.seed_hosts=es7_01,es7_02,es7_03
      - cluster.initial_master_nodes=es7_01,es7_02,es7_03
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - es7data2:/usr/share/elasticsearch/data
    networks:
      - elk2
  elasticsearch3:
    image: elasticsearch:7.1.1
    container_name: es7_03
    environment:
      - cluster.name=geektime
      - node.name=es7_03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - discovery.seed_hosts=es7_01,es7_02,es7_03
      - cluster.initial_master_nodes=es7_01,es7_02,es7_03
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - es7data3:/usr/share/elasticsearch/data
    networks:
      - elk2
volumes:
  es7data1:
    driver: local
  es7data2:
    driver: local
  es7data3:
    driver: local
networks:
  elk2:
    driver: bridge
```
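The three Elasticsearch services differ only in container and node name, in their volume, and in whether they publish port 9200. The Python sketch below makes that pattern explicit by generating the same service definitions as dictionaries. `es_service` and `es_services` are hypothetical helpers. The sketch only prints JSON for comparison; it does not write the compose file.

```python
import json


def es_service(index: int, names: list, cluster: str = "geektime", host_port: int = 0) -> dict:
    """One Elasticsearch service as in the compose file; index is 0-based."""
    service = {
        "image": "elasticsearch:7.1.1",
        "container_name": names[index],
        "environment": [
            f"cluster.name={cluster}",
            f"node.name={names[index]}",
            "bootstrap.memory_lock=true",
            "ES_JAVA_OPTS=-Xms512m -Xmx512m",
            # Every node gets the full list of container names
            "discovery.seed_hosts=" + ",".join(names),
            "cluster.initial_master_nodes=" + ",".join(names),
        ],
        "ulimits": {"memlock": {"soft": -1, "hard": -1}},
        "volumes": [f"es7data{index + 1}:/usr/share/elasticsearch/data"],
        "networks": ["elk2"],
    }
    if host_port:
        service["ports"] = [f"{host_port}:9200"]
    return service


def es_services(names: list) -> dict:
    """Service keys follow the compose file: elasticsearch, elasticsearch2, elasticsearch3, ..."""
    services = {}
    for i in range(len(names)):
        key = "elasticsearch" if i == 0 else f"elasticsearch{i + 1}"
        # Only the first node publishes 9200 to the host
        services[key] = es_service(i, names, host_port=9200 if i == 0 else 0)
    return services


if __name__ == "__main__":
    print(json.dumps(es_services(["es7_01", "es7_02", "es7_03"]), indent=2))
```

The seed hosts can be given as container names because Docker's embedded DNS resolves container names on a user-defined network such as elk2.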

2) Install docker-compose

```bash
sudo curl -L "https://github.com/docker/compose/releases/download/1.24.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose
sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose
docker-compose --version
```

The last command prints:

```
docker-compose version 1.24.1, build 1110ad01
```
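The download URL is built from the `uname -s` and `uname -m` values, e.g. `Linux` and `x86_64` on a 64-bit Linux host. Below is a Python sketch of the same construction, plus a check of the version string printed afterwards. Both helpers are hypothetical. On Linux, `platform.system()` and `platform.machine()` serve as the equivalents of `uname -s` and `uname -m`.

```python
import platform
import re


def compose_download_url(version: str = "1.24.1", system: str = "", machine: str = "") -> str:
    """Same URL as the curl command; platform.system()/machine() stand in for uname -s/-m."""
    system = system or platform.system()
    machine = machine or platform.machine()
    return (f"https://github.com/docker/compose/releases/download/"
            f"{version}/docker-compose-{system}-{machine}")


def installed_version(version_output: str) -> str:
    """Extract "1.24.1" from `docker-compose --version` output; "" if not found."""
    match = re.search(r"version (\d+\.\d+\.\d+)", version_output)
    return match.group(1) if match else ""
```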

3) Run the docker-compose.yml file

```
[root@test elk7.1.1]# ll
total 669740
-rw-rw-r-- 1 czhang czhang 2099 Sep 17 09:30 docker-compose.yml
drwxr-xr-x 13 czhang czhang 242 Sep 17 09:49 elasticsearch-7.1.1
-rw-r--r-- 1 czhang czhang 346794062 Sep 16 20:56 elasticsearch-7.1.1-linux-x86_64.tar.gz
drwxr-xr-x 13 czhang czhang 263 Sep 17 08:55 kibana-7.1.1-linux-x86_64
-rw-r--r-- 1 czhang czhang 167785446 Sep 16 20:57 kibana-7.1.1-linux-x86_64.tar.gz
drwxr-xr-x 13 czhang czhang 267 Sep 17 09:47 logstash-7.1.1
-rw-r--r-- 1 czhang czhang 171222976 Sep 16 20:57 logstash-7.1.1.tar.gz
```

From this directory, start the stack:

```bash
docker-compose up
```

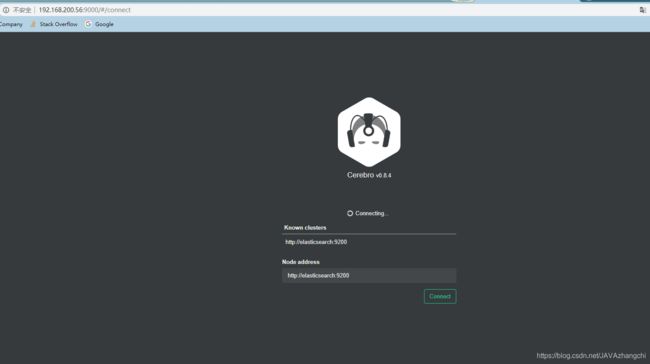

cerebro is a tool similar to the head plugin. Its port, 9000, is defined in the compose file.

Open cerebro at 192.168.200.56:9000.

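4) Access cerebro

The compose file publishes ports 9200 and 5601, which the locally installed cluster and Kibana are still using, so stop those first. Stop the three Elasticsearch nodes:

```
[root@test elk7.1.1]# jps
3061 Elasticsearch
3126 Elasticsearch
3191 Elasticsearch
3566 Jps
[root@test elk7.1.1]# kill 3061
[root@test elk7.1.1]# kill 3126
[root@test elk7.1.1]# kill 3191
[root@test elk7.1.1]# jps
3638 Jps
```

Kibana runs as a node process, so find it by its port and stop it. lsof shows port 5601 under its /etc/services name, esmagent:

```
[root@test elk7.1.1]# lsof -i:5601
COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME
node 3410 czhang 18u IPv4 28017 0t0 TCP *:esmagent (LISTEN)
[root@test elk7.1.1]# kill 3410
```

Then switch to root, remove the existing containers, and start the stack again. Be aware of two things:

- `docker rm -f` with `docker ps -qa` force-removes every container on the host, not only those from this compose file.
- `su - root` starts in root's home directory, so run `docker-compose up` from the directory that holds docker-compose.yml.

```bash
su - root
docker rm -f `docker ps -qa`
docker-compose up
```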
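You can script the jps/kill sequence above. Pick the Elasticsearch PIDs out of the `jps` output, send each one the signal that a plain `kill` sends, then confirm that the ports the compose file publishes (9200, 5601, 9000) are free. This is a Python sketch with hypothetical helper names. Getting the `jps` output, for example via `subprocess.run(["jps"], capture_output=True, text=True).stdout`, is left to the caller.

```python
import os
import signal
import socket


def elasticsearch_pids(jps_output: str) -> list:
    """PIDs of the Elasticsearch JVMs in `jps` output ("<pid> <main class>" per line)."""
    pids = []
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[1] == "Elasticsearch" and parts[0].isdigit():
            pids.append(int(parts[0]))
    return pids


def stop(pids: list) -> None:
    """Send SIGTERM, which is what a plain `kill <pid>` sends."""
    for pid in pids:
        os.kill(pid, signal.SIGTERM)


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """True if something accepts TCP connections on host:port (e.g. the old Kibana on 5601)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1.0)
        return sock.connect_ex((host, port)) == 0
```

After `stop(elasticsearch_pids(output))`, `[p for p in (9200, 5601, 9000) if port_in_use(p)]` should be empty before you run `docker-compose up`.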

Shut down the Docker cluster. If you are not in the same directory as the compose file, pass its path with `-f`:

```bash
docker-compose -f /soft/elk7.1.1/docker-compose.yml down
```