NoSQL--Redis Cluster (how Redis clusters are implemented, how to deploy a Redis cluster)

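Contents

- I. Redis cluster implementations
  - 1.1 Client-side sharding
  - 1.2 Proxy-based sharding
  - 1.3 Server-side sharding
- II. Deploying a Redis cluster
  - Objective
  - Environment
  - Procedure
    - 1. Compile and install Redis from source
    - 2. Edit the configuration file to enable cluster mode
    - 3. Install RVM and Ruby (cluster management tooling) on one master (14.0.0.47)
    - 4. Create the cluster
    - 5. Test the cluster
    - 6. A slave goes down (14.0.0.110 shut down manually)
    - 7. A master goes down (14.0.0.47 shut down manually)
    - 8. A master and its slave go down together (14.0.0.47 and 14.0.0.111)
    - 9. All three masters or all three slaves go down together
- III. Summary
  - The cluster went down in only two cases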

I. Redis Cluster Implementations

There are generally three ways to implement a Redis cluster today: client-side sharding, proxy-based sharding, and server-side sharding.

1.1 Client-side sharding

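Client-side sharding puts the sharding logic in the application. The application code applies a predefined routing rule and accesses the Redis instances directly.

- Pros: the cluster does not depend on any third-party distributed middleware. The implementation and the code are fully under your control and can be adjusted at any time, so there are no surprises from someone else's software.

- Cons: upgrades are cumbersome, and the approach depends heavily on individual developers, so it needs strong in-house development skills behind it. Operability is poor: when something fails, developers and operations staff have to work together to locate and fix the problem, which lengthens outages. This makes the approach hard to run with standardized operations, and it is not a good fit for small and medium-sized companies.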
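As a rough illustration of client-side sharding, the routing rule can be as simple as hashing the key and taking the result modulo the number of instances. The sketch below is a hypothetical bash function (pick_node, with an assumed NODES list that reuses this article's master IPs as independent instances); it uses cksum as the hash, which is an arbitrary choice and not what any particular client library does.

```bash
#!/usr/bin/env bash
# Hypothetical client-side routing rule: node = hash(key) mod N.
# NODES is an assumed list of independent (non-cluster) Redis instances.
NODES=(14.0.0.47:6379 14.0.0.48:6379 14.0.0.49:6379)

pick_node() {
  local key=$1 hash
  # cksum prints "<crc> <byte count>"; keep the CRC (an unsigned 32-bit value).
  hash=$(printf '%s' "$key" | cksum | cut -d' ' -f1)
  echo "${NODES[$(( hash % ${#NODES[@]} ))]}"
}

# The application would then talk to that instance directly, e.g.:
#   redis-cli -h "$(pick_node name | cut -d: -f1)" set name zhangsan
pick_node name
```

Because the target depends on N, adding a fourth instance changes the target of most keys (about three quarters of them for uniformly distributed hashes), which is one concrete reason upgrades are painful under this scheme.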

1.2 Proxy-based sharding

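Proxy-based sharding hands the sharding work to a dedicated proxy. The proxy receives data requests from the application, forwards each one to the correct Redis instance according to its routing rules, and returns the result to the application. A third-party proxy is usually used rather than an in-house one. Because there are several Redis instances behind it, this kind of program is also called distributed middleware. The advantage is that the application does not need to know about the back-end Redis instances, and maintenance is easy. The proxy adds some performance overhead, but for an in-memory read/write store such as Redis this is relatively tolerable. This is a commonly recommended way to build a cluster; Twemproxy and Codis are well-known open-source products based on this approach and are widely used.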

1.3 Server-side sharding

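Redis-Cluster is the official sharding technology of Redis. It maps every key to one of 16384 slots, and each Redis instance in the cluster is responsible for a subset of those slots. Applications access it through a Redis-Cluster-aware client. A client can send a request to any instance; if the data is not on that instance, the instance redirects the client to the instance that owns it. Cluster membership information, including node names, IPs, ports, states and roles, is exchanged periodically through pairwise communication between nodes, and each node updates its view accordingly.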
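The key-to-slot mapping is HASH_SLOT = CRC16(key) mod 16384, where CRC16 is the XMODEM variant (polynomial 0x1021, initial value 0). If the key contains a non-empty {...} section, only that hash tag is hashed, which lets related keys share a slot. The bash functions below reimplement that rule for illustration only; crc16 and key_slot are names chosen for this sketch, and only ASCII keys are handled. On a live cluster, the CLUSTER KEYSLOT command gives the authoritative answer.

```bash
#!/usr/bin/env bash
# CRC16-XMODEM (polynomial 0x1021, initial value 0), computed bit by bit.
# Assumes ASCII keys: each character is treated as one byte.
crc16() {
  local s=$1 crc=0 i j byte
  for (( i = 0; i < ${#s}; i++ )); do
    printf -v byte '%d' "'${s:i:1}"        # character -> byte value
    crc=$(( crc ^ (byte << 8) ))
    for (( j = 0; j < 8; j++ )); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

# Slot = CRC16(key) mod 16384, honouring {hash tags}.
key_slot() {
  local key=$1 rest tag
  if [[ $key == *\{* ]]; then
    rest=${key#*\{}                        # text after the first '{'
    if [[ $rest == *\}* ]]; then
      tag=${rest%%\}*}                     # text before the next '}'
      if [[ -n $tag ]]; then key=$tag; fi  # empty "{}" -> hash whole key
    fi
  fi
  echo $(( $(crc16 "$key") & 16383 ))
}

key_slot name    # prints 5798, the slot redis-cli reports for this key in step 5
```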

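Redis-Cluster was introduced in Redis 3.0. It supports distributed cluster deployment with a decentralized (masterless) architecture: all Redis nodes are interconnected (PING-PONG mechanism) and use a binary protocol internally to save transfer time and bandwidth. A node is only considered failed once more than half of the master nodes in the cluster have detected the failure. Clients connect to Redis nodes directly, without a proxy layer, and they do not need to connect to every node: any available node in the cluster will do. Removing the proxy layer this way also greatly improves cluster performance.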
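Failure detection and slave promotion in Redis Cluster both rely on a strict majority of master nodes (slaves do not vote). A minimal sketch of that threshold; majority and is_marked_fail are hypothetical helpers, and the second one simplifies the real protocol, which also requires the failure reports to be recent.

```bash
#!/usr/bin/env bash
# Smallest number of masters that forms a strict majority.
majority() {
  echo $(( $1 / 2 + 1 ))
}

# Simplified check: does a failure report reach the FAIL threshold?
# $1 = total masters, $2 = masters currently reporting the node as failing.
is_marked_fail() {
  (( $2 >= $(majority "$1") ))
}

majority 3                                 # 3 masters -> 2 must agree
is_marked_fail 3 2 && echo "3 masters, 2 reports: FAIL"
is_marked_fail 3 1 || echo "3 masters, 1 report: not yet FAIL"
```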

II. Deploying a Redis Cluster

Objective

Build a Redis cluster and verify that the cluster features work.

Environment

Six CentOS 7.6 virtual machines in VMware: three masters and three slaves, with the following addresses:

masters:

14.0.0.47

14.0.0.48

14.0.0.49

slaves:

14.0.0.110

14.0.0.111

14.0.0.112
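The address plan can be kept in two bash arrays and turned into the node list that redis-cli --cluster create takes in step 4. A small sketch; the array names are illustrative, and the script only prints the command so it can be reviewed before running. Masters are listed first because, in this lab, the first three nodes given to redis-cli became masters.

```bash
#!/usr/bin/env bash
# Address plan from this lab: masters first, then slaves.
MASTERS=(14.0.0.47 14.0.0.48 14.0.0.49)
SLAVES=(14.0.0.110 14.0.0.111 14.0.0.112)
PORT=6379

# Build the "ip:port" argument list for redis-cli --cluster create.
nodes=()
for ip in "${MASTERS[@]}" "${SLAVES[@]}"; do
  nodes+=("$ip:$PORT")
done

# Print the command instead of executing it.
echo redis-cli --cluster create "${nodes[@]}" --cluster-replicas 1
```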

Procedure

Redis must be installed on all six VMs; the steps below use one master and one slave as examples. Redis is compiled and installed from source.

1. Compile and install Redis from source

[root@localhost ~]# iptables -F

[root@localhost ~]# setenforce 0

[root@localhost ~]# yum install gcc gcc-c++ make -y

[root@localhost ~]# tar zvxf redis-5.0.7.tar.gz -C /opt

[root@localhost ~]# cd /opt/redis-5.0.7/

[root@localhost redis-5.0.7]# make

[root@localhost redis-5.0.7]# make PREFIX=/usr/local/redis install

[root@localhost redis-5.0.7]# ln -s /usr/local/redis/bin/* /usr/local/bin/

[root@localhost redis-5.0.7]# cd utils/

[root@localhost utils]# ./install_server.sh

Welcome to the redis service installer

This script will help you easily set up a running redis server

Please select the redis port for this instance: [6379]

Selecting default: 6379

Please select the redis config file name [/etc/redis/6379.conf]

Selected default - /etc/redis/6379.conf

Please select the redis log file name [/var/log/redis_6379.log]

Selected default - /var/log/redis_6379.log

Please select the data directory for this instance [/var/lib/redis/6379]

Selected default - /var/lib/redis/6379

Please select the redis executable path [/usr/local/bin/redis-server]

Selected config:

Port : 6379

Config file : /etc/redis/6379.conf

Log file : /var/log/redis_6379.log

Data dir : /var/lib/redis/6379

Executable : /usr/local/bin/redis-server

Cli Executable : /usr/local/bin/redis-cli

Is this ok? Then press ENTER to go on or Ctrl-C to abort.

Copied /tmp/6379.conf => /etc/init.d/redis_6379

Installing service...

Successfully added to chkconfig!

Successfully added to runlevels 345!

Starting Redis server...

Installation successful!

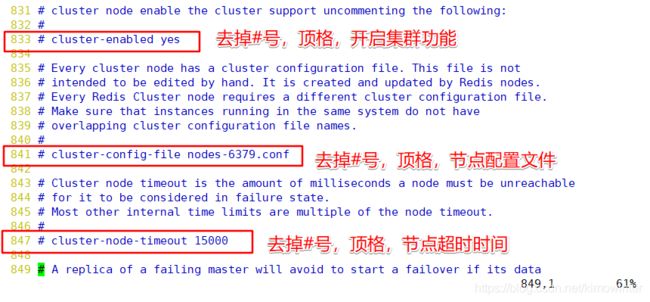

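2. Edit the configuration file to enable cluster mode

Make the following cluster settings in /etc/redis/6379.conf (line numbers refer to that file):

Line 89: protected-mode no (disable protected mode)

Line 93: port 6379

Line 137: daemonize yes (run as a background daemon)

Line 700: appendonly yes (enable AOF persistence)

Line 833: cluster-enabled yes (enable cluster mode)

Line 841: cluster-config-file nodes-6379.conf (the cluster node configuration file, which Redis creates and updates itself)

Line 847: cluster-node-timeout 15000 (cluster node timeout, in milliseconds)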
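The same settings can be applied non-interactively with sed. A minimal sketch, assuming GNU sed (as on CentOS 7) and the stock redis.conf layout, where the cluster-* directives ship commented out as "# directive value"; enable_cluster is a hypothetical helper, demonstrated on a small sample file rather than the real /etc/redis/6379.conf.

```bash
#!/usr/bin/env bash
# Apply the cluster settings listed above to a redis.conf-style file.
enable_cluster() {
  local conf=$1
  sed -i -E \
    -e 's/^protected-mode .*/protected-mode no/' \
    -e 's/^port .*/port 6379/' \
    -e 's/^daemonize .*/daemonize yes/' \
    -e 's/^appendonly .*/appendonly yes/' \
    -e 's/^(# *)?cluster-enabled .*/cluster-enabled yes/' \
    -e 's/^(# *)?cluster-config-file .*/cluster-config-file nodes-6379.conf/' \
    -e 's/^(# *)?cluster-node-timeout .*/cluster-node-timeout 15000/' \
    "$conf"
}

# Demo on a throwaway sample file.
sample=$(mktemp)
trap 'rm -f "$sample"' EXIT
cat > "$sample" <<'EOF'
protected-mode yes
port 6379
daemonize no
appendonly no
# cluster-enabled yes
# cluster-config-file nodes-6379.conf
# cluster-node-timeout 15000
EOF
enable_cluster "$sample"
cat "$sample"
```

Restart the service afterwards, as below, so the changes take effect.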

[root@localhost utils]# /etc/init.d/redis_6379 stop ##stop the service

Stopping ...

Waiting for Redis to shutdown ...

Redis stopped

[root@localhost utils]# /etc/init.d/redis_6379 start ##start the service

Starting Redis server...

[root@localhost utils]# netstat -lnutp | grep 6379 ##check which ports redis-server listens on

tcp 0 0 127.0.0.1:6379 0.0.0.0:* LISTEN 17968/redis-server

tcp 0 0 127.0.0.1:16379 0.0.0.0:* LISTEN 17968/redis-server

[root@localhost utils]# ls /var/lib/redis/6379/ ##the files named in the settings above have been created

appendonly.aof dump.rdb nodes-6379.conf
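The second listener, 16379, is the cluster bus: Redis Cluster nodes talk to each other on the client port plus 10000, so both ports must be reachable between all six machines (one reason the firewall rules are flushed). A hedged sketch of a check; check_cluster_ports is a hypothetical helper that only parses netstat output.

```bash
#!/usr/bin/env bash
# Read `netstat -lnutp` output on stdin and check that something listens
# on both the client port ($1) and the cluster bus port ($1 + 10000).
check_cluster_ports() {
  local port=$1 bus=$(( $1 + 10000 )) out p
  out=$(cat)
  for p in "$port" "$bus"; do
    if ! grep -Eq ":$p[[:space:]].*LISTEN" <<< "$out"; then
      echo "port $p is not listening"
      return 1
    fi
  done
  echo "ports $port and $bus are listening"
}

# On a node:  netstat -lnutp | check_cluster_ports 6379
# Offline demo with the two lines shown above:
printf '%s\n' \
  'tcp 0 0 127.0.0.1:6379 0.0.0.0:* LISTEN 17968/redis-server' \
  'tcp 0 0 127.0.0.1:16379 0.0.0.0:* LISTEN 17968/redis-server' |
  check_cluster_ports 6379
```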

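3. Install RVM and Ruby (cluster management tooling) on one master (14.0.0.47)

Import the RVM signing key so that the RVM installer can be verified; RVM is then used to install a newer Ruby version. Note that Ruby (for the redis-trib.rb script) was only required for cluster management before Redis 5.0. Starting with Redis 5.0, the same functionality is built into redis-cli --cluster, which is what step 4 uses, so with redis-5.0.7 this step is optional.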

[root@localhost utils]# gpg --keyserver hkp://keys.gnupg.net --recv-keys 409B6B1796C275462A1703113804BB82D39DC0E3

gpg: directory `/root/.gnupg' created

gpg: new configuration file `/root/.gnupg/gpg.conf' created

gpg: WARNING: options in `/root/.gnupg/gpg.conf' are not yet active during this run

gpg: keyring `/root/.gnupg/secring.gpg' created

gpg: keyring `/root/.gnupg/pubring.gpg' created

gpg: requesting key D39DC0E3 from hkp server keys.gnupg.net

gpg: /root/.gnupg/trustdb.gpg: trustdb created

gpg: key D39DC0E3: public key "Michal Papis (RVM signing) <mpapis@gmail.com>" imported

gpg: no ultimately trusted keys found

gpg: Total number processed: 1

gpg: imported: 1 (RSA: 1)

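After importing the key, RVM can normally be installed with the command below. From the author's network it could only be reached through a proxy, so the installer script was downloaded that way and run locally:

curl -sSL https://get.rvm.io | bash -s stable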

[root@localhost utils]# chmod +x rvm-installer.sh ##make it executable

[root@localhost utils]# ./rvm-installer.sh ##run the installer

...

(output partially omitted)

Thanks for installing RVM

Please consider donating to our open collective to help us maintain RVM.

Donate: https://opencollective.com/rvm/donate

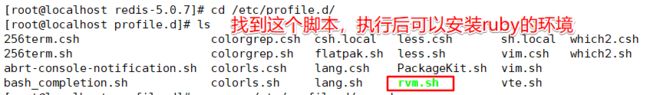

[root@localhost redis-5.0.7]# cd /etc/profile.d/

[root@localhost profile.d]# ls

[root@localhost profile.d]# source /etc/profile.d/rvm.sh ##load RVM into the current shell so the rvm command can be used

[root@localhost profile.d]# rvm list known ##list the Ruby versions RVM can install

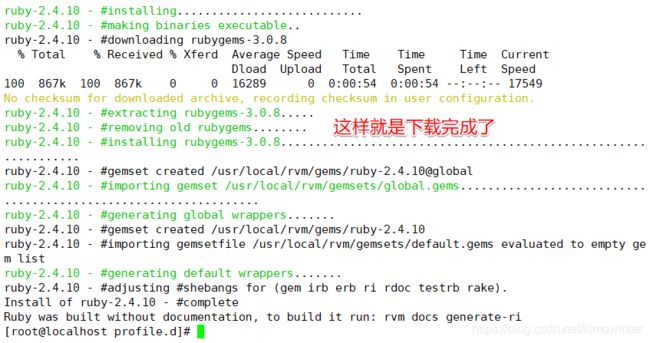

[root@localhost profile.d]# rvm install 2.4.10 ##be patient; the download time depends entirely on your network speed

[root@localhost profile.d]# rvm use 2.4.10 ##switch to this Ruby

Using /usr/local/rvm/gems/ruby-2.4.10

[root@localhost profile.d]# ruby -v ##check the version

ruby 2.0.0p648 (2015-12-16) [x86_64-linux]

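Then install the redis gem (the Ruby client library for Redis). If ruby -v still reports the system Ruby, as in the 2.0.0p648 output above, make sure rvm use 2.4.10 took effect in the current shell first.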

[root@localhost profile.d]# gem install redis

Fetching redis-4.2.2.gem

Successfully installed redis-4.2.2

Parsing documentation for redis-4.2.2

Installing ri documentation for redis-4.2.2

Done installing documentation for redis after 3 seconds

1 gem installed

4. 创建集群

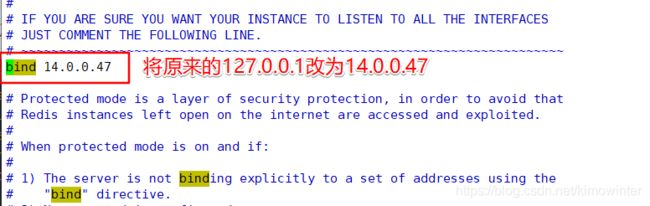

On each server, set the bind address to that server's own IP (14.0.0.47 shown as an example):

[root@localhost profile.d]# vim /etc/redis/6379.conf

bind 14.0.0.47

[root@localhost profile.d]# /etc/init.d/redis_6379 restart

[root@localhost profile.d]# redis-cli --cluster create 14.0.0.47:6379 14.0.0.48:6379 14.0.0.49:6379 14.0.0.110:6379 14.0.0.111:6379 14.0.0.112:6379 --cluster-replicas 1 ##create the cluster from all six nodes

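Could not connect to Redis at 14.0.0.48:6379: Connection refused

The connection to 14.0.0.48 was refused, which means Redis on that node was not yet listening on 14.0.0.48:6379. Check its bind setting, restart Redis there, and run the create command again: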

[root@localhost profile.d]# vim /etc/redis/6379.conf

[root@localhost profile.d]# redis-cli --cluster create 14.0.0.47:6379 14.0.0.48:6379 14.0.0.49:6379 14.0.0.110:6379 14.0.0.111:6379 14.0.0.112:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 14.0.0.111:6379 to 14.0.0.47:6379

Adding replica 14.0.0.112:6379 to 14.0.0.48:6379

Adding replica 14.0.0.110:6379 to 14.0.0.49:6379

M: 824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379

slots:[0-5460] (5461 slots) master

M: 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379

slots:[5461-10922] (5462 slots) master

M: 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379

slots:[10923-16383] (5461 slots) master

S: 3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379

replicates 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf

S: 28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379

replicates 824461e1aa29a7790f6f5331b25ee961900461b7

S: 31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379

replicates 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b

Can I set the above configuration? (type 'yes' to accept): yes ##type yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

........

>>> Performing Cluster Check (using node 14.0.0.47:6379)

M: 824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379

slots: (0 slots) slave

replicates 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf

M: 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379

slots: (0 slots) slave

replicates 824461e1aa29a7790f6f5331b25ee961900461b7

S: 31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379

slots: (0 slots) slave

replicates 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b

M: 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

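With this method you do not choose the master/slave pairing yourself; redis-cli assigns it automatically. Here the first three nodes listed became masters, and each of 14.0.0.110 to 14.0.0.112 was made a slave of one of them.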
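The three "Master[i] -> Slots" lines above come from dividing the 16384 slots as evenly as possible among the masters. The bash sketch below approximates that division with integer arithmetic (each range end is rounded to the nearest integer, and the last master takes everything left); split_slots is a hypothetical helper that reproduces the ranges above, not redis-cli's actual code.

```bash
#!/usr/bin/env bash
# Split 16384 slots across $1 masters; print "first-last (count slots)".
split_slots() {
  local n=$1 first=0 last i
  for (( i = 0; i < n; i++ )); do
    if (( i == n - 1 )); then
      last=16383                         # the last master takes the remainder
    else
      # last = round((i + 1) * 16384 / n - 1), computed without floats
      last=$(( (2 * ((i + 1) * 16384 - n) + n) / (2 * n) ))
    fi
    echo "$first-$last ($(( last - first + 1 )) slots)"
    first=$(( last + 1 ))
  done
}

split_slots 3
```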

5. Test the cluster

Check that data can be written to the cluster: pick any instance, here 14.0.0.48, and insert a key name with the value zhangsan.

[root@my utils]# redis-cli -c -h 14.0.0.48

14.0.0.48:6379> set name zhangsan

OK

14.0.0.48:6379> get name

"zhangsan"

Now check on each of the other master and slave servers whether the key can be read.

On 14.0.0.47:

[root@localhost profile.d]# redis-cli -c -h 14.0.0.47

14.0.0.47:6379> get name

-> Redirected to slot [5798] located at 14.0.0.48:6379

"zhangsan"

14.0.0.48:6379>

On 14.0.0.49:

[root@localhost utils]# redis-cli -c -h 14.0.0.49

14.0.0.49:6379> get name

-> Redirected to slot [5798] located at 14.0.0.48:6379

"zhangsan"

14.0.0.48:6379>

On 14.0.0.110:

[root@localhost utils]# redis-cli -c -h 14.0.0.110

14.0.0.110:6379> get name

-> Redirected to slot [5798] located at 14.0.0.48:6379

"zhangsan"

14.0.0.48:6379>

On 14.0.0.111:

[root@localhost utils]# redis-cli -c -h 14.0.0.111

14.0.0.111:6379> get name

-> Redirected to slot [5798] located at 14.0.0.48:6379

"zhangsan"

14.0.0.48:6379>

On 14.0.0.112:

[root@localhost utils]# redis-cli -c -h 14.0.0.112

14.0.0.112:6379> get name

-> Redirected to slot [5798] located at 14.0.0.48:6379

"zhangsan"

14.0.0.48:6379>

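The output shows every instance redirecting the request to 14.0.0.48. Redis Cluster does not place data by type: it hashes each key to a slot (slot 5798 for name here), and the request is served by the master that owns that slot (14.0.0.48 holds slots 5461-10922). The -c option starts redis-cli in cluster mode, so it follows these redirections automatically.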
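Under the hood, a node that does not own a key's slot answers with an error of the form MOVED <slot> <ip>:<port>, and a cluster-aware client such as redis-cli -c retries against that address; the "-> Redirected to slot [5798] located at 14.0.0.48:6379" lines are redis-cli reporting such a retry. A sketch of the parsing step only; parse_moved is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Parse a redirection error such as "MOVED 5798 14.0.0.48:6379" and
# print "<slot> <host> <port>". Returns 1 for any other reply.
parse_moved() {
  local kind slot addr
  read -r kind slot addr <<< "$1"
  [[ $kind == MOVED && -n $addr ]] || return 1
  echo "$slot ${addr%:*} ${addr##*:}"
}

# The reply a node other than 14.0.0.48 would give for "get name" without -c:
parse_moved "MOVED 5798 14.0.0.48:6379"   # prints: 5798 14.0.0.48 6379
```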

14.0.0.48:6379> expire name 5 ##make the key name expire after 5 seconds

(integer) 1

After 5 seconds:

14.0.0.48:6379> keys * ##the key has been deleted

(empty list or set)

The key is also gone when checked from the other nodes.

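6. A slave goes down (14.0.0.110 shut down manually)

The node configuration file (viewed on 14.0.0.47) shows that the master and slave roles are unchanged; 14.0.0.110 is only flagged as fail: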

[root@localhost profile.d]# vim /var/lib/redis/6379/nodes-6379.conf

3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379@16379 slave,fail 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 1599668718349 1599668716848 4 connected

5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379@16379 master - 0 1599668732000 3 connected 10923-16383

28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379@16379 slave 824461e1aa29a7790f6f5331b25ee961900461b7 0 1599668731000 5 connected

824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379@16379 myself,master - 0 1599668733000 1 connected 0-5460

31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379@16379 slave 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 0 1599668734020 6 connected

99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379@16379 master - 0 1599668733008 2 connected 5461-10922

vars currentEpoch 6 lastVoteEpoch 0
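Each line of nodes-6379.conf has the same columns as CLUSTER NODES output: node ID, ip:port@bus-port, flags, master ID, ping-sent, pong-received, config epoch, link state, then any slot ranges. A small awk-based sketch (summarize_nodes is a hypothetical helper) that condenses the file to address, role, failure state and slots, run here on the lines shown above:

```bash
#!/usr/bin/env bash
# Summarise nodes.conf / CLUSTER NODES lines: address, role, state, slots.
summarize_nodes() {
  awk '$1 != "vars" {
    split($2, addr, "@")                            # drop the @bus-port part
    role  = ($3 ~ /master/) ? "master" : "slave"
    state = ($3 ~ /(^|,)fail(,|$)/) ? "FAIL" : "ok"  # suspected "fail?" stays ok
    slots = ""
    for (i = 9; i <= NF; i++) slots = slots " " $i
    printf "%-16s %-6s %-4s%s\n", addr[1], role, state, slots
  }' "$@"
}

conf=$(mktemp)
trap 'rm -f "$conf"' EXIT
cat > "$conf" <<'EOF'
3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379@16379 slave,fail 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 1599668718349 1599668716848 4 connected
5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379@16379 master - 0 1599668732000 3 connected 10923-16383
28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379@16379 slave 824461e1aa29a7790f6f5331b25ee961900461b7 0 1599668731000 5 connected
824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379@16379 myself,master - 0 1599668733000 1 connected 0-5460
31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379@16379 slave 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 0 1599668734020 6 connected
99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379@16379 master - 0 1599668733008 2 connected 5461-10922
vars currentEpoch 6 lastVoteEpoch 0
EOF
summarize_nodes "$conf"
```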

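Then power 14.0.0.110 back on and start Redis: it rejoins the cluster automatically. Make sure to flush the firewall rules and switch SELinux to permissive mode first: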

[root@localhost ~]# iptables -F

[root@localhost ~]# setenforce 0

Check the node configuration file on 14.0.0.47 again; 14.0.0.110 is back as a connected slave:

[root@localhost profile.d]# vim /var/lib/redis/6379/nodes-6379.conf

3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379@16379 slave 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 0 1599669151595 4 connected

5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379@16379 master - 0 1599669150000 3 connected 10923-16383

28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379@16379 slave 824461e1aa29a7790f6f5331b25ee961900461b7 0 1599669151400 5 connected

824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379@16379 myself,master - 0 1599669149000 1 connected 0-5460

31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379@16379 slave 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 0 1599669149375 6 connected

99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379@16379 master - 0 1599669150388 2 connected 5461-10922

vars currentEpoch 6 lastVoteEpoch 0

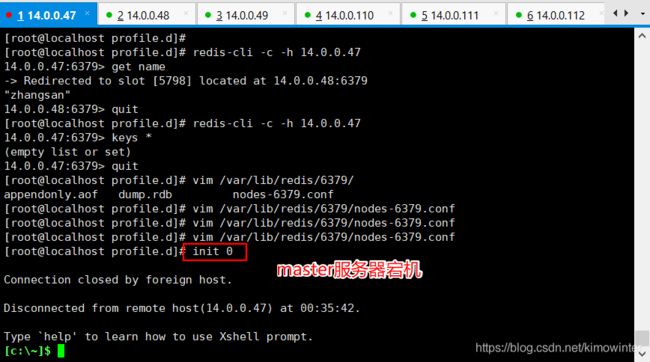

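7. A master goes down (14.0.0.47 shut down manually)

14.0.0.47 is the node the cluster was created from.

The node configuration file shows that 14.0.0.47 is down. It was paired with 14.0.0.111, which has been promoted to master and now holds slots 0-5460: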

[root@my utils]# vim /var/lib/redis/6379/nodes-6379.conf

5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379@16379 master - 0 1599669418164 3 connected 10923-16383

824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379@16379 master,fail - 1599669343684 1599669343000 1 connected

31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379@16379 slave 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 0 1599669419000 6 connected

99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379@16379 myself,master - 0 1599669420000 2 connected 5461-10922

28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379@16379 master - 0 1599669419175 8 connected 0-5460

3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379@16379 slave 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 0 1599669420186 4 connected

vars currentEpoch 8 lastVoteEpoch 8
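To see who serves a given slot after the failover, look for the live (non-fail) master whose slot ranges include it. A sketch with a hypothetical slot_owner helper, run on the node file shown above; it ignores the bracketed migration markers that can appear in this file during resharding.

```bash
#!/usr/bin/env bash
# Print ip:port of the non-failed master whose ranges include slot $1,
# reading nodes.conf / CLUSTER NODES lines from file $2.
slot_owner() {
  awk -v s="$1" '
    $1 != "vars" && $3 ~ /master/ && $3 !~ /(^|,)fail(,|$)/ {
      for (i = 9; i <= NF; i++) {
        if ($i ~ /^\[/) continue             # skip [slot->-id] markers
        n = split($i, r, "-")                # "0-5460" or a single slot
        lo = r[1] + 0; hi = (n == 2 ? r[2] : r[1]) + 0
        if (s + 0 >= lo && s + 0 <= hi) {
          split($2, a, "@"); print a[1]; exit
        }
      }
    }' "$2"
}

conf=$(mktemp)
trap 'rm -f "$conf"' EXIT
cat > "$conf" <<'EOF'
5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379@16379 master - 0 1599669418164 3 connected 10923-16383
824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379@16379 master,fail - 1599669343684 1599669343000 1 connected
31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379@16379 slave 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 0 1599669419000 6 connected
99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379@16379 myself,master - 0 1599669420000 2 connected 5461-10922
28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379@16379 master - 0 1599669419175 8 connected 0-5460
3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379@16379 slave 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 0 1599669420186 4 connected
vars currentEpoch 8 lastVoteEpoch 8
EOF

slot_owner 0 "$conf"      # 14.0.0.111:6379, the promoted slave
slot_owner 5798 "$conf"   # 14.0.0.48:6379, the slot of key "name"
```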

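Now restart 14.0.0.47 (again flushing the firewall rules and disabling SELinux enforcement): it rejoins as a slave of 14.0.0.111 and does not take back the master role.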

[root@localhost ~]# iptables -F

[root@localhost ~]# setenforce 0

[root@localhost ~]# vim /var/lib/redis/6379/nodes-6379.conf

31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379@16379 slave 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 0 1599669793512 6 connected

3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379@16379 slave 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 0 1599669793512 4 connected

5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379@16379 master - 0 1599669793513 3 connected 10923-16383

28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379@16379 master - 0 1599669793513 8 connected 0-5460

99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379@16379 master - 0 1599669793513 2 connected 5461-10922

824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379@16379 myself,slave 28242fb022633872bbbdf2ddcef323cd59115b21 0 1599669793505 1 connected

vars currentEpoch 8 lastVoteEpoch 0

8. A master and its slave go down together (14.0.0.47 and 14.0.0.111)

The cluster goes down immediately:

14.0.0.112:6379> get name

(error) CLUSTERDOWN The cluster is down
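The cluster refuses queries because slots 0-5460 no longer have any reachable master: both nodes of that pair are down, so there is nothing left to promote. With the default setting cluster-require-full-coverage yes, a single uncovered slot is enough to put the whole cluster into the down state. A sketch (covered_slots is a hypothetical helper) that counts the slots held by live masters; the three-line input is a hypothetical, shortened node file constructed for illustration, not captured from the lab.

```bash
#!/usr/bin/env bash
# Count slots owned by masters that are not flagged "fail".
covered_slots() {
  awk '$1 != "vars" && $3 ~ /master/ && $3 !~ /(^|,)fail(,|$)/ {
    for (i = 9; i <= NF; i++) {
      if ($i ~ /^\[/) continue               # skip migration markers
      n = split($i, r, "-")
      total += (n == 2 ? r[2] - r[1] + 1 : 1)
    }
  } END { print total + 0 }' "$1"
}

# Hypothetical node file: the 0-5460 master is down with no slave left.
conf=$(mktemp)
trap 'rm -f "$conf"' EXIT
cat > "$conf" <<'EOF'
a 14.0.0.111:6379@16379 master,fail - 0 0 8 connected 0-5460
b 14.0.0.48:6379@16379 myself,master - 0 0 2 connected 5461-10922
c 14.0.0.49:6379@16379 master - 0 0 3 connected 10923-16383
EOF

n=$(covered_slots "$conf")
if (( n < 16384 )); then
  echo "$n/16384 slots covered: cluster down"
else
  echo "all 16384 slots covered"
fi
```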

9. All three masters or all three slaves go down together

First simulate all three masters going down. Writes now fail, which shows that the cluster is down:

14.0.0.48:6379> set ppp xiaohei

Error: Connection timed out

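Restart the three masters and flush their firewall rules. The slaves did not take over as masters; the original three masters hold the slots again: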

[root@localhost ~]# vim /var/lib/redis/6379/nodes-6379.conf

99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379@16379 master - 0 1599671178000 2 connected 5461-10922

5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379@16379 master - 0 1599671179468 3 connected 10923-16383

824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379@16379 master - 0 1599671177000 9 connected 0-5460

28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379@16379 myself,slave 824461e1aa29a7790f6f5331b25ee961900461b7 0 1599671177000 8 connected

31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379@16379 slave 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 0 1599671178969 6 connected

3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379@16379 slave 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 0 1599671176000 4 connected

vars currentEpoch 13 lastVoteEpoch 0

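Next, take all three slaves down. Data can still be read and written normally.

However, the node configuration file shows only one slave as failed: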

[root@localhost ~]# vim /var/lib/redis/6379/nodes-6379.conf

28242fb022633872bbbdf2ddcef323cd59115b21 14.0.0.111:6379@16379 slave 824461e1aa29a7790f6f5331b25ee961900461b7 0 1599671372650 9 connected

31ffaaf34a21fad0091dccde078db079c716932a 14.0.0.112:6379@16379 slave,fail 99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 1599671357509 1599671356000 6 connected

3dd9b7671445967e9653c74c603e15d5afb62268 14.0.0.110:6379@16379 slave 5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 0 1599671369000 4 connected

99b0f22b1d0e3f0248c32eb8bfa21b25abbd4c8b 14.0.0.48:6379@16379 master - 0 1599671371000 2 connected 5461-10922

5e8506dfcf4f57dbc7e7fb4fad986c6f2b9f5daf 14.0.0.49:6379@16379 myself,master - 0 1599671371000 3 connected 10923-16383

824461e1aa29a7790f6f5331b25ee961900461b7 14.0.0.47:6379@16379 master - 0 1599671371639 9 connected 0-5460

vars currentEpoch 13 lastVoteEpoch 9

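III. Summary

In these tests, the cluster went down in only two cases:

- All three masters went down at the same time.

- A master and its corresponding slave went down at the same time.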
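Both cases follow from two rules: every slot range needs a live master (the original or a promoted slave), and promoting a slave needs votes from a majority of masters. The sketch below applies those two rules to this lab's three master/slave pairs; cluster_state is a hypothetical, simplified model that ignores timing and assumes the failures happen together.

```bash
#!/usr/bin/env bash
# Simplified model of this lab: three master/slave pairs.
# $1: which masters are down, $2: which slaves are down, one 0/1 digit
# per pair, e.g. "100" means the node of the first pair is down.
cluster_state() {
  local masters=$1 slaves=$2 n=${#1} i live=0
  for (( i = 0; i < n; i++ )); do
    if [[ ${masters:i:1} == 0 ]]; then
      live=$(( live + 1 ))
    fi
  done
  for (( i = 0; i < n; i++ )); do
    [[ ${masters:i:1} == 1 ]] || continue
    # A failed master's slots survive only if its slave is up and a
    # majority of masters is still alive to vote for the promotion.
    if [[ ${slaves:i:1} == 1 ]] || (( live < n / 2 + 1 )); then
      echo down
      return
    fi
  done
  echo ok
}

cluster_state 100 100    # step 8, a master and its slave: down
cluster_state 111 000    # step 9, all three masters: down
cluster_state 000 111    # step 9, all three slaves: ok
```

Under the same model, losing any two masters at once would also take the cluster down, because the single surviving master cannot form a majority; the lab did not test that case.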