Integrating Kafka into SSM: Two Approaches, Annotations and XML Configuration

Introduction

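While exchanging data with a client recently, the client required that the processed data be sent back through Kafka, so we had to integrate Kafka into our project. The project was built on the SSM framework (Spring, Spring MVC, MyBatis) and had never used Kafka before, so I am sharing the process here.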

I. Implementation with XML configuration files

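1. Add the jar dependencies. Two points need attention here:

1) The kafka-clients version should match the version used by the Kafka server. To find the server's version, look in the libs directory under the Kafka installation directory for the jar file whose name starts with kafka-clients.

2) spring-kafka 2.0 and other 2.x releases require Spring 5.0 or later.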

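<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>1.0.1</version>
</dependency>
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
    <version>1.3.5.RELEASE</version>
    <exclusions>
        <exclusion>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka-clients</artifactId>
        </exclusion>
    </exclusions>
</dependency>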
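The first note above amounts to reading a version number out of a jar file name. As a minimal sketch of automating that check (the class name KafkaClientsVersion, its method names, and the /opt/kafka/libs path are illustrative assumptions, not part of the original project):

```java
import java.io.File;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class KafkaClientsVersion {

    // Matches names such as "kafka-clients-1.0.1.jar" and captures "1.0.1".
    private static final Pattern JAR_NAME =
            Pattern.compile("^kafka-clients-(\\d+(?:\\.\\d+)*)\\.jar$");

    /** Returns the version embedded in a kafka-clients jar file name, if any. */
    public static Optional<String> extractVersion(String fileName) {
        Matcher m = JAR_NAME.matcher(fileName);
        return m.matches() ? Optional.of(m.group(1)) : Optional.empty();
    }

    /** Scans a libs directory and returns the first kafka-clients version found. */
    public static Optional<String> findInLibs(File libsDir) {
        String[] names = libsDir.list();
        if (names == null) {
            return Optional.empty(); // not a directory, or not readable
        }
        for (String name : names) {
            Optional<String> version = extractVersion(name);
            if (version.isPresent()) {
                return version;
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Hypothetical install path; pass the real libs directory as the first argument.
        File libs = new File(args.length > 0 ? args[0] : "/opt/kafka/libs");
        System.out.println(findInLibs(libs).orElse("kafka-clients jar not found"));
    }
}
```

The value it prints is the one to put in the version element of the kafka-clients dependency.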

2. Contents of kafka.properties

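# Broker cluster
kafka.producer.bootstrap.servers = ip1:9092,ip2:9092,ip3:9092
kafka.producer.acks = all
# Number of retries when a send fails
kafka.producer.retries = 3
kafka.producer.linger.ms = 10
# Total memory, in bytes, for buffering records waiting to be sent (the Kafka default is 33554432, i.e. 32 MB; this file sets 40960)
kafka.producer.buffer.memory = 40960
# Batch size in bytes, not a record count: when several records go to the same partition, the producer tries to combine them into fewer requests, which helps both client and server performance
kafka.producer.batch.size = 4096
kafka.producer.defaultTopic = nwbs-eval-task
kafka.producer.key.serializer = org.apache.kafka.common.serialization.StringSerializer
kafka.producer.value.serializer = org.apache.kafka.common.serialization.StringSerializer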

################# kafka consumer ##################
kafka.consumer.bootstrap.servers = ip1:9092,ip2:9092,ip3:9092
# If true, the consumer's offsets are committed periodically in the background
kafka.consumer.enable.auto.commit = true
# Auto-commit interval in milliseconds, used when enable.auto.commit=true
kafka.consumer.auto.commit.interval.ms = 1000
# Consumer group ID. For publish-subscribe, where several consumers must each receive every message from one producer, give each consumer its own group; within a single group only one consumer receives a given message
kafka.consumer.group.id = sccl-nwbs
# Timeout used to detect consumer failures when using Kafka's group management
kafka.consumer.session.timeout.ms = 30000
kafka.consumer.key.deserializer = org.apache.kafka.common.serialization.StringDeserializer
kafka.consumer.value.deserializer = org.apache.kafka.common.serialization.StringDeserializer

3. consumer-kafka.xml configuration is as follows

${kafka.task.eval.topic}

${kafka.task.optimizeNetwork.topic}

${kafka.task.business.topic}
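The ${...} entries above are placeholders that Spring fills in from a properties file when it loads the XML, so keys such as kafka.task.eval.topic have to be defined there. The following is only a plain-Java sketch of that substitution idea, not Spring's implementation; the class name PlaceholderDemo and the topic value assigned to each key are assumptions made for illustration.

```java
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class PlaceholderDemo {

    // Matches ${some.key} and captures the key between the braces.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    /** Replaces every ${key} in text with its value from props; fails on unknown keys. */
    public static String resolve(String text, Properties props) {
        Matcher m = PLACEHOLDER.matcher(text);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String key = m.group(1);
            String value = props.getProperty(key);
            if (value == null) {
                throw new IllegalArgumentException("No value for placeholder: " + key);
            }
            // quoteReplacement keeps '$' and '\' in the value from being treated specially.
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        // Assumed values; the original post does not show these keys being defined.
        props.setProperty("kafka.task.eval.topic", "nwbs-eval-task");
        props.setProperty("kafka.task.optimizeNetwork.topic", "nwbs-optimizeNetwork-task");
        props.setProperty("kafka.task.business.topic", "nwbs-business-task");

        System.out.println(resolve("${kafka.task.eval.topic}", props));
    }
}
```

Failing loudly on an undefined key is a deliberate choice in this sketch: a topic name that silently stays as a literal ${...} string is much harder to diagnose once the consumer is running.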

4. producer-kafka.xml configuration is as follows

5. The calling Controller. Here messages are sent to three Kafka topics.

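import com.alibaba.fastjson.JSONObject; // assumed: toJSONString() suggests fastjson
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.util.concurrent.FailureCallback;
import org.springframework.util.concurrent.ListenableFuture;
import org.springframework.util.concurrent.SuccessCallback;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestMethod;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping(value = "/kafka")
public class KafkaController {

    @Autowired
    KafkaTemplate<String, String> kafkaTemplate;

    // The literal strings are injected as-is (no ${...} placeholder is used here)
    @Value("nwbs-optimizeNetwork-task")
    private String optimizeTopic;

    @Value("nwbs-business-task")
    private String businessTopic;

    @RequestMapping(value = "/producer", method = RequestMethod.POST)
    public void producer(@RequestBody JSONObject params) {
        // Send to the two explicitly named topics
        kafkaTemplate.send(optimizeTopic, params.toJSONString() + "optimizeTopic");
        kafkaTemplate.send(businessTopic, params.toJSONString() + "businessTopic");
        // Send to the template's default topic and keep the future for callbacks
        ListenableFuture<SendResult<String, String>> listenableFuture =
                kafkaTemplate.sendDefault(params.toJSONString());

        // Callback on successful send
        SuccessCallback<SendResult<String, String>> successCallback =
                new SuccessCallback<SendResult<String, String>>() {
            @Override
            public void onSuccess(SendResult<String, String> result) {
                // Business logic on success
                System.out.println("onSuccess");
            }
        };

        // Callback on failed send
        FailureCallback failureCallback = new FailureCallback() {
            @Override
            public void onFailure(Throwable ex) {
                // Business logic on failure
                System.out.println("onFailure");
            }
        };

        listenableFuture.addCallback(successCallback, failureCallback);
    }
}

II. Implementation with annotations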

See the official spring-kafka documentation: https://docs.spring.io/spring-kafka/reference/htmlsingle/

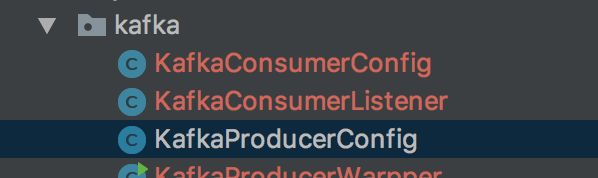

1. 文件整体结构如图
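The configuration classes in this section read their settings through PropertiesUtil.getInstance(), a project helper that the post does not show. A minimal sketch of what such a helper might look like follows; everything about it (the singleton layout, the kafka.properties classpath location, the load method, and the behaviour on a missing key) is an assumption inferred from how the helper is called, not the author's actual class.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.StringReader;
import java.util.Properties;

public class PropertiesUtil {

    private static final PropertiesUtil INSTANCE = new PropertiesUtil();
    private final Properties props = new Properties();

    private PropertiesUtil() {
        // Assumed location: kafka.properties at the classpath root.
        try (InputStream in = PropertiesUtil.class.getClassLoader()
                .getResourceAsStream("kafka.properties")) {
            if (in != null) {
                props.load(in);
            }
        } catch (IOException e) {
            throw new IllegalStateException("Cannot read kafka.properties", e);
        }
    }

    public static PropertiesUtil getInstance() {
        return INSTANCE;
    }

    /** Hypothetical hook for adding entries from text, e.g. in tests. */
    public void load(String text) {
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new IllegalStateException("Cannot parse properties text", e);
        }
    }

    /** Returns the trimmed value, or null when the key is absent. */
    public String getString(String key) {
        String value = props.getProperty(key);
        return value == null ? null : value.trim();
    }

    public String getString(String key, String defaultValue) {
        String value = getString(key);
        return value == null ? defaultValue : value;
    }

    /** Fails fast when the key is absent, since no default was supplied. */
    public int getInt(String key) {
        String value = getString(key);
        if (value == null) {
            throw new IllegalArgumentException("Missing property: " + key);
        }
        return Integer.parseInt(value);
    }

    public int getInt(String key, int defaultValue) {
        String value = getString(key);
        return value == null ? defaultValue : Integer.parseInt(value);
    }

    public long getLong(String key, long defaultValue) {
        String value = getString(key);
        return value == null ? defaultValue : Long.parseLong(value);
    }
}
```

With the kafka.properties shown earlier on the classpath, PropertiesUtil.getInstance().getInt("kafka.producer.retries") would return the configured retry count, and the two-argument overloads fall back to their default when a key is absent.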

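2. KafkaConsumerConfig.java code

import java.util.HashMap;
import java.util.Map;
import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.annotation.EnableKafka;
import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
import org.springframework.kafka.config.KafkaListenerContainerFactory;
import org.springframework.kafka.core.ConsumerFactory;
import org.springframework.kafka.core.DefaultKafkaConsumerFactory;
import org.springframework.kafka.listener.ConcurrentMessageListenerContainer;
// PropertiesUtil is a project helper; import it from wherever it lives in your project

/**
 * @author: hsc
 * @date: 2018/6/21 15:58
 * @description Kafka consumer configuration
 */
@Configuration
@EnableKafka
public class KafkaConsumerConfig {

    public KafkaConsumerConfig() {
        System.out.println("Loading Kafka consumer configuration...");
    }

    @Bean
    KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>>
            kafkaListenerContainerFactory() {
        ConcurrentKafkaListenerContainerFactory<String, String> factory =
                new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory());
        // Three listener threads per container
        factory.setConcurrency(3);
        factory.getContainerProperties().setPollTimeout(3000);
        return factory;
    }

    @Bean
    public ConsumerFactory<String, String> consumerFactory() {
        return new DefaultKafkaConsumerFactory<>(consumerProperties());
    }

    @Bean
    public Map<String, Object> consumerProperties() {
        Map<String, Object> props = new HashMap<>();
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, PropertiesUtil.getInstance().getString("kafka.consumer.bootstrap.servers"));
        props.put(ConsumerConfig.GROUP_ID_CONFIG, PropertiesUtil.getInstance().getString("kafka.consumer.group.id"));
        props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, PropertiesUtil.getInstance().getString("kafka.consumer.enable.auto.commit"));
        props.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, PropertiesUtil.getInstance().getString("kafka.consumer.auto.commit.interval.ms"));
        props.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, PropertiesUtil.getInstance().getString("kafka.consumer.session.timeout.ms"));
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, PropertiesUtil.getInstance().getString("kafka.consumer.key.deserializer"));
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, PropertiesUtil.getInstance().getString("kafka.consumer.value.deserializer"));
        return props;
    }

    // Registers the class that carries the @KafkaListener method
    @Bean
    public KafkaConsumerListener kafkaConsumerListener() {
        return new KafkaConsumerListener();
    }
}

3. KafkaProducerConfig.java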

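import java.util.HashMap;
import java.util.Map;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.annotation.EnableKafka;
import org.springframework.kafka.core.DefaultKafkaProducerFactory;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.core.ProducerFactory;
// PropertiesUtil is a project helper; import it from wherever it lives in your project

/**
 * @author: hsc
 * @date: 2018/6/21 21:30
 * @description Kafka producer configuration
 */
@Configuration
@EnableKafka
public class KafkaProducerConfig {

    public KafkaProducerConfig() {
        System.out.println("Loading Kafka producer configuration...");
    }

    @Bean
    public ProducerFactory<String, String> producerFactory() {
        return new DefaultKafkaProducerFactory<>(producerProperties());
    }

    @Bean
    public Map<String, Object> producerProperties() {
        Map<String, Object> props = new HashMap<>();
        props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, PropertiesUtil.getInstance().getString("kafka.producer.bootstrap.servers"));
        props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, PropertiesUtil.getInstance().getString("kafka.producer.key.serializer"));
        props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, PropertiesUtil.getInstance().getString("kafka.producer.value.serializer"));
        props.put(ProducerConfig.RETRIES_CONFIG, PropertiesUtil.getInstance().getInt("kafka.producer.retries"));
        // The second argument is the fallback used when the key is missing from kafka.properties
        props.put(ProducerConfig.BATCH_SIZE_CONFIG, PropertiesUtil.getInstance().getInt("kafka.producer.batch.size", 1048576));
        props.put(ProducerConfig.LINGER_MS_CONFIG, PropertiesUtil.getInstance().getInt("kafka.producer.linger.ms"));
        props.put(ProducerConfig.BUFFER_MEMORY_CONFIG, PropertiesUtil.getInstance().getLong("kafka.producer.buffer.memory", 33554432L));
        props.put(ProducerConfig.ACKS_CONFIG, PropertiesUtil.getInstance().getString("kafka.producer.acks", "all"));
        return props;
    }

    @Bean
    public KafkaTemplate<String, String> kafkaTemplate() {
        // true enables autoFlush: the template flushes the producer after every send
        KafkaTemplate<String, String> kafkaTemplate = new KafkaTemplate<>(producerFactory(), true);
        kafkaTemplate.setDefaultTopic(PropertiesUtil.getInstance().getString("kafka.producer.defaultTopic", "default"));
        return kafkaTemplate;
    }
}

4. KafkaConsumerListener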

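import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;

/**
 * @author: hsc
 * @date: 2018/6/21 16:33
 * @description Consumer listener
 */
public class KafkaConsumerListener {

    /**
     * @param data the record received from Kafka
     */
    @KafkaListener(groupId = "xxx", topics = "xxx")
    void listener(ConsumerRecord<String, String> data) {
        System.out.println("Consumer thread: " + Thread.currentThread().getName()
                + " [message from Kafka topic: " + data.topic() + ", partition: " + data.partition()
                + ", timestamp: " + data.timestamp() + "] message content:");
        System.out.println(data.value());
    }
}

Reference article (read it alongside the official documentation)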

http://www.cnblogs.com/dennyzhangdd/p/7759875.html