Android CameraX OpenCV Image Processing

In this tutorial, we’ll integrate OpenCV into an Android application. We have already covered the basics of CameraX in an earlier tutorial, so today we’ll see how to run OpenCV image processing on a live camera feed.

Android CameraX

CameraX, which ships with Android Jetpack, is organized around use cases. A camera app can be built from three core use cases: Preview, Analysis, and Capture.

Having covered preview and capture previously, our main focus today is on analysis.

Hence, we’ll be using OpenCV to analyze frames and process them in real-time.

What is OpenCV?

OpenCV is a computer vision library that contains more than 2000 algorithms related to image processing. It is written in C++.

Thankfully, a lot of high-level stuff in OpenCV can be done in Java. Besides, we can always use the JNI interface to communicate between Java and C++.

We can download and import the OpenCV SDK from their official GitHub repository. It’s a pretty big module. Due to size and project space constraints, we’ll be using a Gradle dependency library shown below:

implementation 'com.quickbirdstudios:opencv:3.4.1'

Android applications that use OpenCV modules have a large APK size.

In the following section, we’ll use CameraX and OpenCV to convert between color spaces and give the camera feed a completely different look.

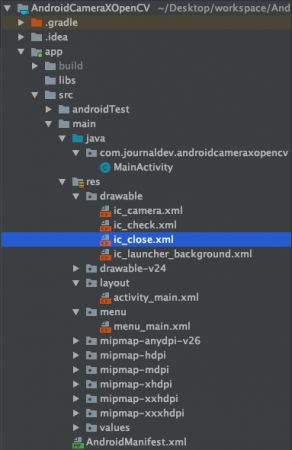

Android OpenCV Project Structure

Android OpenCV Code

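The activity_main.xml layout defines the views that the activity looks up by ID: a TextureView (textureView), an ImageView (ivBitmap), a LinearLayout (llBottom), and three FloatingActionButtons (btnCapture, btnAccept, btnReject).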

The code for the MainActivity.java is given below:

package com.journaldev.androidcameraxopencv;

import androidx.annotation.NonNull;
import androidx.annotation.Nullable;
import androidx.appcompat.app.AppCompatActivity;
import androidx.camera.core.CameraX;
import androidx.camera.core.ImageAnalysis;
import androidx.camera.core.ImageAnalysisConfig;
import androidx.camera.core.ImageCapture;
import androidx.camera.core.ImageCaptureConfig;
import androidx.camera.core.ImageProxy;
import androidx.camera.core.Preview;
import androidx.camera.core.PreviewConfig;
import androidx.core.app.ActivityCompat;
import androidx.core.content.ContextCompat;

import android.content.pm.PackageManager;
import android.graphics.Bitmap;
import android.graphics.Matrix;
import android.os.Bundle;
import android.os.Environment;
import android.os.Handler;
import android.os.HandlerThread;
import android.util.Log;
import android.util.Rational;
import android.util.Size;
import android.view.Menu;
import android.view.MenuItem;
import android.view.Surface;
import android.view.TextureView;
import android.view.View;
import android.view.ViewGroup;
import android.widget.ImageView;
import android.widget.LinearLayout;
import android.widget.Toast;

import com.google.android.material.floatingactionbutton.FloatingActionButton;

import org.opencv.android.OpenCVLoader;
import org.opencv.android.Utils;
import org.opencv.core.Mat;
import org.opencv.imgproc.Imgproc;

import java.io.File;

public class MainActivity extends AppCompatActivity implements View.OnClickListener {

    private static final int REQUEST_CODE_PERMISSIONS = 101;
    private final String[] REQUIRED_PERMISSIONS = new String[]{"android.permission.CAMERA", "android.permission.WRITE_EXTERNAL_STORAGE"};

    TextureView textureView;
    ImageView ivBitmap;
    LinearLayout llBottom;

    // Color conversion applied to every analyzed frame; changed from the options menu.
    int currentImageType = Imgproc.COLOR_RGB2GRAY;

    ImageCapture imageCapture;
    ImageAnalysis imageAnalysis;
    Preview preview;

    FloatingActionButton btnCapture, btnOk, btnCancel;

    // Load the OpenCV native library once, when the class is loaded.
    static {
        if (!OpenCVLoader.initDebug()) {
            Log.d("ERROR", "Unable to load OpenCV");
        } else {
            Log.d("SUCCESS", "OpenCV loaded");
        }
    }

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        btnCapture = findViewById(R.id.btnCapture);
        btnOk = findViewById(R.id.btnAccept);
        btnCancel = findViewById(R.id.btnReject);

        btnOk.setOnClickListener(this);
        btnCancel.setOnClickListener(this);

        llBottom = findViewById(R.id.llBottom);
        textureView = findViewById(R.id.textureView);
        ivBitmap = findViewById(R.id.ivBitmap);

        if (allPermissionsGranted()) {
            startCamera();
        } else {
            ActivityCompat.requestPermissions(this, REQUIRED_PERMISSIONS, REQUEST_CODE_PERMISSIONS);
        }
    }

    private void startCamera() {
        CameraX.unbindAll();
        preview = setPreview();
        imageCapture = setImageCapture();
        imageAnalysis = setImageAnalysis();

        // Bind all three use cases to the activity lifecycle.
        CameraX.bindToLifecycle(this, preview, imageCapture, imageAnalysis);
    }

    private Preview setPreview() {
        Rational aspectRatio = new Rational(textureView.getWidth(), textureView.getHeight());
        Size screen = new Size(textureView.getWidth(), textureView.getHeight()); // size of the screen

        PreviewConfig pConfig = new PreviewConfig.Builder().setTargetAspectRatio(aspectRatio).setTargetResolution(screen).build();
        Preview preview = new Preview(pConfig);

        preview.setOnPreviewOutputUpdateListener(
                new Preview.OnPreviewOutputUpdateListener() {
                    @Override
                    public void onUpdated(Preview.PreviewOutput output) {
                        // Re-attach the TextureView so that it picks up the new SurfaceTexture.
                        ViewGroup parent = (ViewGroup) textureView.getParent();
                        parent.removeView(textureView);
                        parent.addView(textureView, 0);

                        textureView.setSurfaceTexture(output.getSurfaceTexture());
                        updateTransform();
                    }
                });

        return preview;
    }

    private ImageCapture setImageCapture() {
        ImageCaptureConfig imageCaptureConfig = new ImageCaptureConfig.Builder().setCaptureMode(ImageCapture.CaptureMode.MIN_LATENCY)
                .setTargetRotation(getWindowManager().getDefaultDisplay().getRotation()).build();
        final ImageCapture imgCapture = new ImageCapture(imageCaptureConfig);

        btnCapture.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                imgCapture.takePicture(new ImageCapture.OnImageCapturedListener() {
                    @Override
                    public void onCaptureSuccess(ImageProxy image, int rotationDegrees) {
                        // Show the frame currently on screen and ask the user to accept or reject it.
                        Bitmap bitmap = textureView.getBitmap();
                        showAcceptedRejectedButton(true);
                        ivBitmap.setImageBitmap(bitmap);
                    }

                    @Override
                    public void onError(ImageCapture.UseCaseError useCaseError, String message, @Nullable Throwable cause) {
                        super.onError(useCaseError, message, cause);
                    }
                });

                /*File file = new File(
                        Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES), "" + System.currentTimeMillis() + "_JDCameraX.jpg");
                imgCapture.takePicture(file, new ImageCapture.OnImageSavedListener() {
                    @Override
                    public void onImageSaved(@NonNull File file) {
                        Bitmap bitmap = textureView.getBitmap();
                        showAcceptedRejectedButton(true);
                        ivBitmap.setImageBitmap(bitmap);
                    }

                    @Override
                    public void onError(@NonNull ImageCapture.UseCaseError useCaseError, @NonNull String message, @Nullable Throwable cause) {
                    }
                });*/
            }
        });

        return imgCapture;
    }

    private ImageAnalysis setImageAnalysis() {
        // Set up an image analysis pipeline that converts each frame's color space with OpenCV.
        // The analyzer callback runs on its own background thread.
        HandlerThread analyzerThread = new HandlerThread("OpenCVAnalysis");
        analyzerThread.start();

        ImageAnalysisConfig imageAnalysisConfig = new ImageAnalysisConfig.Builder()
                .setImageReaderMode(ImageAnalysis.ImageReaderMode.ACQUIRE_LATEST_IMAGE)
                .setCallbackHandler(new Handler(analyzerThread.getLooper()))
                .setImageQueueDepth(1).build();

        ImageAnalysis imageAnalysis = new ImageAnalysis(imageAnalysisConfig);

        imageAnalysis.setAnalyzer(
                new ImageAnalysis.Analyzer() {
                    @Override
                    public void analyze(ImageProxy image, int rotationDegrees) {
                        // Analyzing the live camera feed begins.
                        final Bitmap bitmap = textureView.getBitmap();
                        if (bitmap == null) {
                            return;
                        }

                        // Bitmap -> Mat, convert the color space, then Mat -> Bitmap.
                        Mat mat = new Mat();
                        Utils.bitmapToMat(bitmap, mat);
                        Imgproc.cvtColor(mat, mat, currentImageType);
                        Utils.matToBitmap(mat, bitmap);
                        mat.release(); // free the native pixel buffer once the bitmap is updated

                        runOnUiThread(new Runnable() {
                            @Override
                            public void run() {
                                ivBitmap.setImageBitmap(bitmap);
                            }
                        });
                    }
                });

        return imageAnalysis;
    }

    private void showAcceptedRejectedButton(boolean acceptedRejected) {
        if (acceptedRejected) {
            // Freeze the feed and show the accept/reject buttons.
            CameraX.unbind(preview, imageAnalysis);
            llBottom.setVisibility(View.VISIBLE);
            btnCapture.hide();
            textureView.setVisibility(View.GONE);
        } else {
            // Return to the live feed.
            btnCapture.show();
            llBottom.setVisibility(View.GONE);
            textureView.setVisibility(View.VISIBLE);
            textureView.post(new Runnable() {
                @Override
                public void run() {
                    startCamera();
                }
            });
        }
    }

    private void updateTransform() {
        Matrix mx = new Matrix();
        float w = textureView.getMeasuredWidth();
        float h = textureView.getMeasuredHeight();

        float cX = w / 2f;
        float cY = h / 2f;

        int rotationDgr;
        // Note: View.getRotation() returns the view's own rotation in degrees (0 unless it
        // has been changed), which is then matched against the Surface.ROTATION_* constants.
        int rotation = (int) textureView.getRotation();

        switch (rotation) {
            case Surface.ROTATION_0:
                rotationDgr = 0;
                break;
            case Surface.ROTATION_90:
                rotationDgr = 90;
                break;
            case Surface.ROTATION_180:
                rotationDgr = 180;
                break;
            case Surface.ROTATION_270:
                rotationDgr = 270;
                break;
            default:
                return;
        }

        // Rotate the preview around the center of the TextureView.
        mx.postRotate((float) rotationDgr, cX, cY);
        textureView.setTransform(mx);
    }

    @Override
    public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {
        if (requestCode == REQUEST_CODE_PERMISSIONS) {
            if (allPermissionsGranted()) {
                startCamera();
            } else {
                Toast.makeText(this, "Permissions not granted by the user.", Toast.LENGTH_SHORT).show();
                finish();
            }
        }
    }

    private boolean allPermissionsGranted() {
        for (String permission : REQUIRED_PERMISSIONS) {
            if (ContextCompat.checkSelfPermission(this, permission) != PackageManager.PERMISSION_GRANTED) {
                return false;
            }
        }
        return true;
    }

    @Override
    public boolean onCreateOptionsMenu(Menu menu) {
        getMenuInflater().inflate(R.menu.menu_main, menu);
        return true;
    }

    @Override
    public boolean onOptionsItemSelected(MenuItem item) {
        // Each menu item selects a target color space and restarts the camera.
        switch (item.getItemId()) {
            case R.id.black_white:
                currentImageType = Imgproc.COLOR_RGB2GRAY;
                startCamera();
                return true;
            case R.id.hsv:
                currentImageType = Imgproc.COLOR_RGB2HSV;
                startCamera();
                return true;
            case R.id.lab:
                currentImageType = Imgproc.COLOR_RGB2Lab;
                startCamera();
                return true;
        }
        return super.onOptionsItemSelected(item);
    }

    @Override
    public void onClick(View v) {
        switch (v.getId()) {
            case R.id.btnReject:
                showAcceptedRejectedButton(false);
                break;
            case R.id.btnAccept:
                // Save the capture to the public Pictures directory.
                File file = new File(
                        Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES), "" + System.currentTimeMillis() + "_JDCameraX.jpg");
                imageCapture.takePicture(file, new ImageCapture.OnImageSavedListener() {
                    @Override
                    public void onImageSaved(@NonNull File file) {
                        showAcceptedRejectedButton(false);
                        Toast.makeText(getApplicationContext(), "Image saved successfully in Pictures Folder", Toast.LENGTH_LONG).show();
                    }

                    @Override
                    public void onError(@NonNull ImageCapture.UseCaseError useCaseError, @NonNull String message, @Nullable Throwable cause) {
                        Log.e("ERROR", "Image capture failed: " + message, cause);
                    }
                });
                break;
        }
    }
}

In the above code, inside the Image Analysis use case, we retrieve the Bitmap from the TextureView.
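The analysis configuration above uses ACQUIRE_LATEST_IMAGE with a queue depth of 1, so the analyzer sees the most recent frame instead of working through a backlog. The following is only a plain-Java sketch of that "latest frame wins" idea, not how CameraX implements it; the class and method names (LatestFrameSlot, publish, take) are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicReference;

public class LatestFrameSketch {

    /** Hypothetical single-slot hand-off: a new frame replaces any frame not yet consumed. */
    public static final class LatestFrameSlot<T> {
        private final AtomicReference<T> slot = new AtomicReference<>();
        private final AtomicInteger dropped = new AtomicInteger();

        /** Called by the producer (the camera); overwrites an unconsumed frame. */
        public void publish(T frame) {
            if (slot.getAndSet(frame) != null) {
                dropped.incrementAndGet(); // the previous frame was never analyzed
            }
        }

        /** Called by the consumer (the analyzer); returns null when no new frame is waiting. */
        public T take() {
            return slot.getAndSet(null);
        }

        public int droppedCount() {
            return dropped.get();
        }
    }

    public static void main(String[] args) {
        LatestFrameSlot<String> slot = new LatestFrameSlot<>();
        // The camera delivers three frames while the analyzer is still busy.
        slot.publish("frame-1");
        slot.publish("frame-2");
        slot.publish("frame-3");
        System.out.println("analyzing " + slot.take() + ", dropped " + slot.droppedCount());
    }
}
```

Dropping stale frames keeps the displayed result close to real time even when the OpenCV conversion takes longer than one frame interval.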

Utils.bitmapToMat converts the Bitmap to a Mat object. This method is part of the OpenCV Android API (org.opencv.android.Utils).

The Mat class is used to hold the image. It consists of a matrix header and a pointer to the matrix that contains the pixel values.
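To make the header-plus-pointer idea concrete, here is a minimal plain-Java sketch. SimpleMat is a hypothetical stand-in, not OpenCV's Mat; it only illustrates how several headers can refer to the same pixel buffer, while a clone gets an independent copy of the data.

```java
public class MatSketch {

    /** Hypothetical stand-in for a Mat: a small header plus a reference to the pixel data. */
    public static final class SimpleMat {
        // Header: describes how to interpret the buffer.
        public final int rows, cols, channels;
        // Pixel buffer: may be shared between several headers.
        private final byte[] data;

        public SimpleMat(int rows, int cols, int channels) {
            this(rows, cols, channels, new byte[rows * cols * channels]);
        }

        private SimpleMat(int rows, int cols, int channels, byte[] data) {
            this.rows = rows;
            this.cols = cols;
            this.channels = channels;
            this.data = data;
        }

        /** New header, same pixel buffer: cheap, and writes are visible through both. */
        public SimpleMat shareData() {
            return new SimpleMat(rows, cols, channels, data);
        }

        /** New header and a copied pixel buffer: fully independent. */
        public SimpleMat deepCopy() {
            return new SimpleMat(rows, cols, channels, data.clone());
        }

        public int get(int row, int col, int ch) {
            return data[(row * cols + col) * channels + ch] & 0xFF;
        }

        public void put(int row, int col, int ch, int value) {
            data[(row * cols + col) * channels + ch] = (byte) value;
        }
    }

    public static void main(String[] args) {
        SimpleMat original = new SimpleMat(2, 2, 4); // 2x2 image with 4 channels
        SimpleMat shared = original.shareData();
        SimpleMat copy = original.deepCopy();

        original.put(0, 0, 0, 200);
        System.out.println("shared sees " + shared.get(0, 0, 0) + ", copy sees " + copy.get(0, 0, 0));
    }
}
```

Because the header is small, passing an image around is cheap; only an explicit copy duplicates the pixels.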

In our image analysis, we convert the Mat from one color space to another using Imgproc.cvtColor.
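As an illustration of what a conversion such as COLOR_RGB2GRAY computes, the sketch below applies the standard luma weighting Y = 0.299 R + 0.587 G + 0.114 B to packed ARGB pixels in plain Java. It is a hand-written approximation for explanation only; OpenCV's own routine is native and its rounding may differ slightly. The class and method names are hypothetical.

```java
import java.util.Arrays;

public class GrayscaleSketch {

    /** Weighted sum of the three channels, rounded to the nearest integer in 0..255. */
    public static int toGray(int r, int g, int b) {
        return (int) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
    }

    /** Converts packed 0xAARRGGBB pixels into one gray value per pixel. */
    public static int[] toGray(int[] argbPixels) {
        int[] gray = new int[argbPixels.length];
        for (int i = 0; i < argbPixels.length; i++) {
            int p = argbPixels[i];
            int r = (p >> 16) & 0xFF;
            int g = (p >> 8) & 0xFF;
            int b = p & 0xFF;
            gray[i] = toGray(r, g, b);
        }
        return gray;
    }

    public static void main(String[] args) {
        // Pure red, green, blue, and white pixels.
        int[] pixels = {0xFFFF0000, 0xFF00FF00, 0xFF0000FF, 0xFFFFFFFF};
        System.out.println(Arrays.toString(toGray(pixels)));
    }
}
```

Green contributes most to the result and blue least, which is why a pure green pixel comes out much brighter than a pure blue one.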

Having converted the Mat to a different color space, we convert it back to a Bitmap and show it on the screen in an ImageView.

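The Bitmap read from the TextureView holds RGB color data. Using the menu options, we can convert it to grayscale, Lab, or HSV; the activity starts with the grayscale conversion (Imgproc.COLOR_RGB2GRAY) selected.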
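For the HSV option, the sketch below shows the usual RGB-to-HSV formula in plain Java, scaled the way OpenCV documents it for 8-bit images (hue halved to fit 0..179, saturation and value scaled to 0..255). It is a hand-written illustration under those assumptions, not OpenCV's code, and rgbToHsv8 is a hypothetical name.

```java
import java.util.Arrays;

public class HsvSketch {

    /** Returns {H, S, V} with H in 0..179 and S, V in 0..255 for 8-bit RGB input. */
    public static int[] rgbToHsv8(int r, int g, int b) {
        double rf = r / 255.0, gf = g / 255.0, bf = b / 255.0;
        double max = Math.max(rf, Math.max(gf, bf));
        double min = Math.min(rf, Math.min(gf, bf));
        double delta = max - min;

        // Hue in degrees, chosen by which channel is the largest.
        double h;
        if (delta == 0) {
            h = 0; // gray pixel: hue is undefined, use 0
        } else if (max == rf) {
            h = 60 * (gf - bf) / delta;
        } else if (max == gf) {
            h = 120 + 60 * (bf - rf) / delta;
        } else {
            h = 240 + 60 * (rf - gf) / delta;
        }
        if (h < 0) {
            h += 360;
        }

        double s = (max == 0) ? 0 : delta / max;

        int h8 = (int) Math.round(h / 2) % 180; // halve so the hue fits in one byte
        int s8 = (int) Math.round(s * 255);
        int v8 = (int) Math.round(max * 255);
        return new int[]{h8, s8, v8};
    }

    public static void main(String[] args) {
        System.out.println("red   -> " + Arrays.toString(rgbToHsv8(255, 0, 0)));
        System.out.println("green -> " + Arrays.toString(rgbToHsv8(0, 255, 0)));
        System.out.println("blue  -> " + Arrays.toString(rgbToHsv8(0, 0, 255)));
    }
}
```

When the converted Mat is turned back into a Bitmap, these H, S, and V values are displayed as if they were color channels, which is what produces the unusual colors in the HSV and Lab modes.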

Let’s look at the output of the application in action.

Figure: Android CameraX OpenCV output

So we were able to analyze the live feed, view the captured frame, and optionally save it to the device's Pictures directory.

That brings us to the end of this tutorial. You can download the project from the link below or view the full source code in our GitHub repository.

Translated from: https://www.journaldev.com/31693/android-camerax-opencv-image-processing
