Author: 桂.

Date: 2017-05-23 15:52:51

Link: http://www.cnblogs.com/xingshansi/p/6895710.html

I. Theory

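Kernel ridge regression (KRR) extends ridge regression. Start from the ridge regression objective, where $X$ is the $n \times d$ data matrix, $y$ is the target vector, and $\lambda > 0$ is the regularization weight:

$$J(w) = \|y - Xw\|^2 + \lambda \|w\|^2$$

Setting the gradient to zero and solving gives

$$w = (X^T X + \lambda I)^{-1} X^T y$$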
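As a quick numerical check of the ridge solution $w = (X^T X + \lambda I)^{-1} X^T y$, the sketch below solves the linear system with NumPy and confirms that the gradient of the objective vanishes there. The data, the seed, and the names `lam`, `A`, and `grad` are illustrative assumptions, not part of the original post.

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 50 samples, d = 3 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=50)
lam = 0.5  # regularization weight lambda

# Solve (X^T X + lam I) w = X^T y without forming an explicit inverse.
A = X.T @ X + lam * np.eye(X.shape[1])
w = np.linalg.solve(A, X.T @ y)

# At the minimizer, the gradient 2 X^T (X w - y) + 2 lam w should be ~0.
grad = 2 * X.T @ (X @ w - y) + 2 * lam * w
print(np.abs(grad).max())
```

Solving the system rather than calling an inverse routine gives the same solution and is generally the numerically safer choice.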

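Some articles write the solution with a matrix inverse; the inverse is only a notational convenience, and the solution can also be computed directly. For the KRR derivation, note that

$$(X^T X + \lambda I) X^T = X^T (X X^T + \lambda I)$$

Left-multiplying by $(X^T X + \lambda I)^{-1}$ and right-multiplying by $(X X^T + \lambda I)^{-1}$ gives

$$X^T (X X^T + \lambda I)^{-1} = (X^T X + \lambda I)^{-1} X^T$$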
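The identity $X^T (X X^T + \lambda I)^{-1} = (X^T X + \lambda I)^{-1} X^T$ is easy to verify numerically. The following is a minimal sketch on a small random matrix; the sizes, the seed, and the variable names are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(6, 3))  # n = 6 samples, d = 3 features
lam = 0.5

# Left side: X^T (X X^T + lam I_n)^{-1}, built from the n x n Gram matrix.
lhs = X.T @ np.linalg.inv(X @ X.T + lam * np.eye(6))

# Right side: (X^T X + lam I_d)^{-1} X^T, built from the d x d matrix.
rhs = np.linalg.solve(X.T @ X + lam * np.eye(3), X.T)

print(np.allclose(lhs, rhs))
```

The left side works with an $n \times n$ matrix and the right side with a $d \times d$ matrix, which is why the two forms have different costs depending on whether there are more samples or more features.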

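Combining $X^T (X X^T + \lambda I)^{-1} = (X^T X + \lambda I)^{-1} X^T$ with the optimal solution of ridge regression gives

$$w = X^T (X X^T + \lambda I)^{-1} y$$

The $X X^T$ term contains only inner products between samples, so the kernel idea applies: replace it with the kernel matrix $K$, where $K_{ij} = k(x_i, x_j)$. The prediction for a new point $x$ becomes

$$f(x) = \sum_{i=1}^{n} \alpha_i \, k(x_i, x), \qquad \alpha = (K + \lambda I)^{-1} y$$

Only inner products need to be computed. For the choice of kernel function and its properties, see the separate article on kernels.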
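To make the dual form concrete, here is a small sketch showing that with a linear kernel the dual (kernel) predictions match the primal ridge predictions. The helper names `krr_fit` and `linear_kernel` and the random data are hypothetical and introduced only for this example.

```python
import numpy as np

def krr_fit(K, y, lam):
    """Return dual coefficients alpha = (K + lam I)^{-1} y."""
    return np.linalg.solve(K + lam * np.eye(K.shape[0]), y)

def linear_kernel(A, B):
    """Inner products between the rows of A and the rows of B."""
    return A @ B.T

rng = np.random.default_rng(2)
X = rng.normal(size=(40, 2))      # training inputs
y = rng.normal(size=40)           # training targets
X_new = rng.normal(size=(5, 2))   # points to predict
lam = 0.5

# Dual form: only kernel evaluations between samples are needed.
alpha = krr_fit(linear_kernel(X, X), y, lam)
pred_dual = linear_kernel(X_new, X) @ alpha

# Primal ridge solution for comparison.
w = np.linalg.solve(X.T @ X + lam * np.eye(2), X.T @ y)
pred_primal = X_new @ w

print(np.allclose(pred_dual, pred_primal))
```

Swapping `linear_kernel` for any other valid kernel function changes the model without touching `krr_fit`, which is the point of the kernel formulation.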

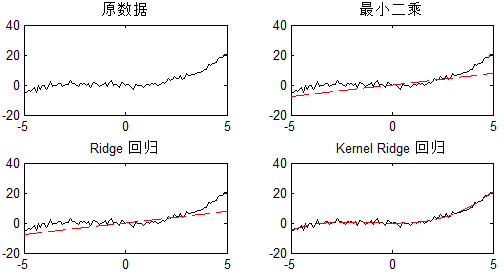

To check the theory, here is a MATLAB simulation:

clc;clear all;close all;

x = [-5:.1:5]';

y = 0.1*x.^3 + 0.3*x.^2 + randn(length(x),1);

subplot (2,2,1)

plot(x,y,'k');hold on;

title('Original data')

subplot (2,2,2)

w1 = (x'*x)\(x'*y); % least squares (no intercept term)

plot(x,y,'k');hold on;

plot(x,w1*x,'r--');

title('Least squares')

subplot (2,2,3)

w2 = (x'*x + 0.5)\(x'*y); % ridge regression, lambda = 0.5 (x'*x is a scalar here)

plot(x,y,'k');hold on;

plot(x,w2*x,'r--');

title('Ridge regression')

subplot (2,2,4)

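K = (1+x*x').^3; % kernel ridge regression, cubic polynomial kernel, lambda = 0.5

z = K*((K + 0.5*eye(length(x)))\y); % lambda multiplies the identity; K + 0.5 alone would add 0.5 to every entry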

plot(x,y,'k');hold on;

plot(x,z,'r--');

title('Kernel ridge regression')

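The resulting plot shows that the kernel takes effect: the kernel ridge fit follows the nonlinear trend of the data, which the two linear fits cannot capture.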
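For readers without MATLAB, the kernel ridge step of the simulation can be sketched in Python and cross-checked against scikit-learn. This is a rough port under stated assumptions: the noise comes from NumPy's generator (so the numbers differ from a MATLAB run), and the regularization term is written as $\lambda I$ added to the kernel matrix, which is what `KernelRidge` uses for its `alpha` parameter.

```python
import numpy as np
from sklearn.kernel_ridge import KernelRidge

rng = np.random.default_rng(0)
x = np.linspace(-5, 5, 101).reshape(-1, 1)   # same grid as -5:.1:5
y = 0.1 * x[:, 0] ** 3 + 0.3 * x[:, 0] ** 2 + rng.normal(size=101)
lam = 0.5

# Cubic polynomial kernel K = (1 + x x^T)^3 and fitted values K (K + lam I)^{-1} y.
K = (1 + x @ x.T) ** 3
z = K @ np.linalg.solve(K + lam * np.eye(len(x)), y)

# Same model through scikit-learn: the polynomial kernel is (gamma <a, b> + coef0)^degree.
model = KernelRidge(kernel="polynomial", degree=3, gamma=1.0, coef0=1.0, alpha=lam)
z_sklearn = model.fit(x, y).predict(x)

print(np.allclose(z, z_sklearn, atol=1e-5))
```

The agreement between the hand-written formula and the library output is a useful sanity check on both the derivation and the placement of the regularization term.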

II. Basic sklearn usage

Basic usage (with cross-validation):

kr = GridSearchCV(KernelRidge(kernel='rbf', gamma=0.1), cv=5,

param_grid={"alpha": [1e0, 0.1, 1e-2, 1e-3],

"gamma": np.logspace(-2, 2, 5)})

kr.fit(X[:train_size], y[:train_size])

y_kr = kr.predict(X_plot)
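After fitting, the grid search object exposes which hyperparameters were selected. The sketch below is a self-contained variant of the snippet above on a small noise-free sine data set (an assumption made for brevity); `best_params_`, `best_score_`, and `best_estimator_` are standard `GridSearchCV` attributes.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.kernel_ridge import KernelRidge

# Small illustrative data set: 100 points on a sine curve.
rng = np.random.RandomState(0)
X = 5 * rng.rand(100, 1)
y = np.sin(X).ravel()

param_grid = {"alpha": [1e0, 0.1, 1e-2, 1e-3],
              "gamma": np.logspace(-2, 2, 5)}
kr = GridSearchCV(KernelRidge(kernel="rbf"), cv=5, param_grid=param_grid)
kr.fit(X, y)

print(kr.best_params_)   # selected alpha (regularization) and gamma (RBF width)
print(kr.best_score_)    # mean cross-validated score (R^2 for regressors)

# predict() on the search object delegates to the refitted best estimator.
y_hat = kr.best_estimator_.predict(X[:3])
```

Here `alpha` plays the role of $\lambda$ in the theory section, and `gamma` controls the width of the RBF kernel.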

Application example:

from __future__ import division

import time

import numpy as np

from sklearn.model_selection import GridSearchCV

from sklearn.kernel_ridge import KernelRidge

import matplotlib.pyplot as plt

rng = np.random.RandomState(0)

#############################################################################

# Generate sample data

X = 5 * rng.rand(10000, 1)

y = np.sin(X).ravel()

# Add noise to targets

y[::5] += 3 * (0.5 - rng.rand(int(X.shape[0]/5)))

X_plot = np.linspace(0, 5, 100000)[:, None]

#############################################################################

# Fit regression model

train_size = 100

kr = GridSearchCV(KernelRidge(kernel='rbf', gamma=0.1), cv=5,

param_grid={"alpha": [1e0, 0.1, 1e-2, 1e-3],

"gamma": np.logspace(-2, 2, 5)})

t0 = time.time()

kr.fit(X[:train_size], y[:train_size])

kr_fit = time.time() - t0

print("KRR complexity and bandwidth selected and model fitted in %.3f s"

% kr_fit)

t0 = time.time()

y_kr = kr.predict(X_plot)

kr_predict = time.time() - t0

print("KRR prediction for %d inputs in %.3f s"

% (X_plot.shape[0], kr_predict))

#############################################################################

# look at the results

plt.scatter(X[:100], y[:100], c='k', label='data', zorder=1)

# plt.hold was removed in Matplotlib 3.0; later plot calls draw on the same axes by default

plt.plot(X_plot, y_kr, c='g',

label='KRR (fit: %.3fs, predict: %.3fs)' % (kr_fit, kr_predict))

plt.xlabel('data')

plt.ylabel('target')

plt.title('Kernel Ridge')

plt.legend()

plt.show()

References

- http://scikit-learn.org/stable/modules/generated/sklearn.kernel_ridge.KernelRidge.html#sklearn.kernel_ridge.KernelRidge