MongoDB Replica Sets: Introduction and Setup

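Contents

- Introduction to master-slave replication and replica sets

  - 1. Master-slave replication and replica sets

  - 2. How a replica set works

- Setting up a MongoDB replica set

  - Recommended steps

  - Failover

  - Elections and replication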

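Introduction to master-slave replication and replica sets

1. Master-slave replication and replica sets

MongoDB has offered two replication deployment models: master-slave replication and replica sets. Master-slave replication is the legacy model (support for it was removed in MongoDB 4.0), so new deployments use replica sets.

●1. Master-slave replication

- One master node and several slave nodes. Every slave pulls the latest data from the master so that master and slaves stay consistent.

Drawback:

- If the master goes down, the cluster cannot operate normally. An administrator has to promote one of the slaves to master by hand, which requires downtime, so the service is unavailable for a while.

●2. Replica sets

- Designed to solve the disaster-recovery problem of master-slave replication.

- There is no fixed primary. The set elects a primary on its own, and when that primary stops working, another member is elected in its place. A replica set always has one active node and one or more backup nodes; when the active node fails, a backup node is promoted to active.

- A replica set can include an arbiter, a member that only votes in elections and does not replicate data. It breaks ties when the vote would otherwise be split. The official recommendation is an odd number of members.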
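The recommendation for an odd member count follows from simple arithmetic: a primary needs votes from a majority of the voting members. The sketch below is only an illustration of that arithmetic; the function names `majority` and `faultTolerance` are made up for this example and are not MongoDB APIs.

```javascript
// Votes needed to elect a primary in a set with `votingMembers` voters.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Number of voters that can be down while a primary can still be elected.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [3, 4, 5]) {
  console.log(`${n} voters: majority=${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
```

Going from three voters to four raises the majority from 2 to 3 but still tolerates only one failure, which is why an even count buys nothing and an arbiter is often added to make the count odd.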

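2. How a replica set works

●1. oplog (operations log)

- Records every operation that changes data (inserts, updates, deletes). The oplog is a capped collection located in the local database of every replicating member. New operations overwrite the oldest ones so that the oplog never exceeds its configured size. Each document in the oplog represents one operation executed on the primary.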
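To make the "new operations replace old ones" behaviour concrete, here is a toy model of a capped log. It is a sketch under a simplifying assumption: the class `CappedLog` is hypothetical and caps by entry count, whereas the real oplog is capped by size in bytes.

```javascript
// Toy model of a capped collection: fixed capacity, oldest entries go first.
class CappedLog {
  constructor(maxEntries) {
    this.maxEntries = maxEntries;
    this.entries = [];
  }

  append(entry) {
    this.entries.push(entry);
    if (this.entries.length > this.maxEntries) {
      this.entries.shift(); // discard the oldest operation
    }
  }
}

const toyOplog = new CappedLog(3);
for (let ts = 1; ts <= 5; ts++) {
  toyOplog.append({ ts, op: "i" }); // "i" stands for an insert operation
}
console.log(toyOplog.entries.map((e) => e.ts)); // only the 3 newest timestamps remain
```

A secondary that falls behind by more than the log still holds can no longer catch up from the oplog alone, which is why the oplog size matters later in this article.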

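●2. Data synchronization

- Every oplog entry carries a timestamp, and each secondary uses it to track the last write it applied. When a secondary is ready to update itself, it does three things: first, it looks up the timestamp of the last entry in its own oplog; second, it queries the primary's oplog for all documents with a greater timestamp; finally, it applies those documents to its own data and appends them to its own oplog.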
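The three steps above can be sketched as follows. This is a simplified in-memory model, not MongoDB's implementation: the function `syncFromPrimary`, the entry shape `{ ts, key, value }`, and the plain-object "database" are all assumptions made for illustration.

```javascript
// Pull and apply every primary oplog entry newer than the secondary's last one.
function syncFromPrimary(primaryOplog, secondary) {
  // Step 1: timestamp of the last operation this secondary applied.
  const log = secondary.oplog;
  const lastTs = log.length > 0 ? log[log.length - 1].ts : 0;

  // Step 2: all primary entries with a greater timestamp.
  const missing = primaryOplog.filter((entry) => entry.ts > lastTs);

  // Step 3: apply each entry, then record it in the secondary's own oplog.
  for (const entry of missing) {
    secondary.data[entry.key] = entry.value;
    secondary.oplog.push(entry);
  }
  return missing.length;
}

const primaryLog = [
  { ts: 1, key: "a", value: 1 },
  { ts: 2, key: "b", value: 2 },
  { ts: 3, key: "a", value: 10 },
];
const lagging = { data: { a: 1 }, oplog: [primaryLog[0]] };

const applied = syncFromPrimary(primaryLog, lagging);
console.log(applied, lagging.data);
```

Running the sync a second time applies nothing, because the secondary's last timestamp now matches the primary's.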

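●3. Replication state and the local database

- The documents that describe replication state are stored in the local database. The contents of the primary's local database are never replicated to the secondaries, so any document you do not want replicated can be placed there.

●4. Blocking replication

- When the primary takes writes too quickly, the secondaries may not be able to keep up.

To avoid this:

- Make the primary's oplog large enough.

- Block on replication: on the primary, use the getLastError command with the "w" parameter (in current versions, the "w" write concern option) so that a write is acknowledged only after it has reached w members. The larger w is, the slower writes become.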
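The effect of "w" can be pictured with a small model: a write counts as acknowledged once at least w members have applied it. This is only a sketch; `isAcknowledged` and the snapshot of per-member timestamps are hypothetical and say nothing about how the server tracks progress internally.

```javascript
// True once at least `w` members (the primary included) have applied
// the operation with timestamp `opTs`.
function isAcknowledged(opTs, appliedTsByMember, w) {
  const caughtUp = Object.values(appliedTsByMember).filter((ts) => ts >= opTs).length;
  return caughtUp >= w;
}

// Hypothetical snapshot: the primary and one secondary are at ts 10, one lags at ts 7.
const appliedTs = { "27017": 10, "27018": 10, "27019": 7 };

console.log(isAcknowledged(10, appliedTs, 2)); // two members already hold the write
console.log(isAcknowledged(10, appliedTs, 3)); // must wait for the lagging member
```

With w set to 3 the client waits for the slowest member, which is the cost the paragraph above describes.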

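●5. Heartbeats

- Heartbeats let the set detect failures and trigger automatic elections and failover. By default each member pings the other members every two seconds to learn their health.

- If a secondary fails, the set simply waits for it to come back online. If the primary fails, the set starts an election and chooses a new primary; the old primary is demoted to a secondary.

●6. Elections

- The primary is elected according to priority and the Bully algorithm (which judges whose data is newest). This describes the older election protocol; since MongoDB 3.2 the default protocol is Raft-based. Until a primary has been elected, the whole set is read-only and cannot accept writes.

- Every non-arbiter member has a priority setting in the range 0 to 1000. A higher value makes the member more likely to become primary. The default is 1, and a member with priority 0 can never become primary.

- An eligible secondary sends a request to the other members. On receiving the proposal, each member checks three conditions:

  - whether another member of the set is already primary

  - whether its own data is newer than the data on the requesting member

  - whether any other member's data is newer than the data on the requesting member

- If any one of these conditions holds, the member considers the proposal unacceptable. The requester withdraws from the election as soon as any member replies that it is unsuitable.

- The election mechanism steers the highest-priority member toward becoming primary. Even if a lower-priority member is elected first, it only runs as primary for a short time; the set keeps holding elections until the highest-priority member is primary. (An example follows later.)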
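The three checks listed above can be modelled in a few lines. This is a toy model of the description in this article only, not of MongoDB's actual election protocol; the names `acceptsProposal`, `winsElection`, and the `lastOpTs` field are hypothetical.

```javascript
// A voter rejects the proposal if any of the three conditions holds.
function acceptsProposal(candidate, voter, members) {
  const primaryExists = members.some((m) => m.state === "PRIMARY");
  const voterIsNewer = voter.lastOpTs > candidate.lastOpTs;
  const someoneIsNewer = members.some(
    (m) => m !== candidate && m.lastOpTs > candidate.lastOpTs
  );
  return !(primaryExists || voterIsNewer || someoneIsNewer);
}

// A priority-0 member never stands; otherwise a single rejection ends the attempt.
function winsElection(candidate, members) {
  if (candidate.priority === 0) return false;
  return members
    .filter((m) => m !== candidate)
    .every((voter) => acceptsProposal(candidate, voter, members));
}

// Hypothetical reachable members after the old primary has failed.
const reachable = [
  { name: "27018", state: "SECONDARY", priority: 100, lastOpTs: 12 },
  { name: "27019", state: "SECONDARY", priority: 0, lastOpTs: 12 },
  { name: "27021", state: "SECONDARY", priority: 1, lastOpTs: 9 },
];

for (const m of reachable) {
  console.log(m.name, winsElection(m, reachable));
}
```

In this snapshot only 27018 can win: 27019 has priority 0 and 27021 is behind the others.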

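●7. Rollback

- Once a secondary becomes primary, its data is treated as the most recent in the set. Operations on other members that the new primary does not have are rolled back, that is, all members connect to the new primary and resynchronize. Each of these members examines its own oplog, finds the operations that were never executed on the new primary, requests the affected documents from it, and replaces its own divergent copies.

Setting up a MongoDB replica set

Recommended steps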

[root@localhost shcool]# mkdir -p /data/mongodb/mongodb{2,3,4} ## create the data directories

[root@localhost yum.repos.d]# mkdir -p /data/mongodb/logs ## create the log directory

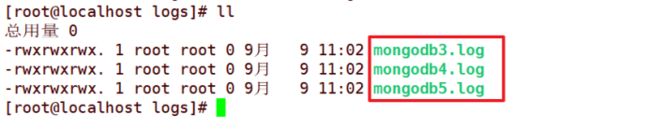

[root@localhost yum.repos.d]# touch /data/mongodb/logs/mongodb{2,3,4}.log ## create the log files

[root@localhost yum.repos.d]# chmod +777 /data/mongodb/logs/*.log ## open up permissions on the log files

[root@localhost yum.repos.d]# cd /data/mongodb/

[root@localhost mongodb]# ls

logs mongodb2 mongodb3 mongodb4

[root@localhost mongodb]# cd logs/

[root@localhost logs]# ls

mongodb2.log mongodb3.log mongodb4.log

[root@localhost logs]# vim /etc/mongod.conf

replication:

  replSetName: kgcrs ## name of the replica set

#sharding:

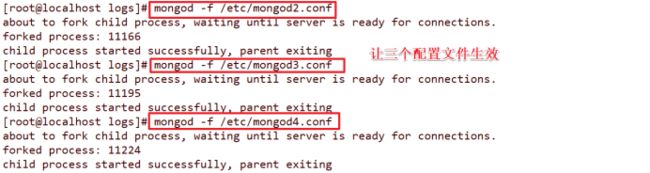

改过配置文件需要让其生效

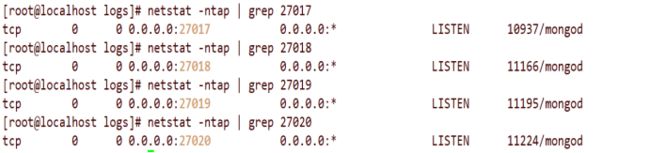

[root@localhost logs]# mongod -f /etc/mongod.conf --shutdown ##先关闭

killing process with pid: 10576

[root@localhost logs]# mongod -f /etc/mongod.conf ##在启动

about to fork child process, waiting until server is ready for connections.

forked process: 10937

child process started successfully, parent exiting

[root@localhost logs]# cp -p /etc/mongod.conf /etc/mongod2.conf

[root@localhost logs]# vim /etc/mongod2.conf

# where to write logging data.

systemLog:

  destination: file

  logAppend: true

  path: /data/mongodb/logs/mongodb2.log ## log file for instance 2

.....

storage:

  dbPath: /data/mongodb/mongodb2 ## data directory for instance 2

....

net:

  port: 27018 ## change the port to 27018

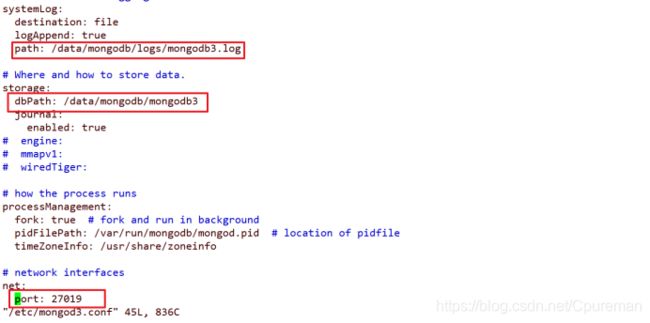

[root@localhost logs]# cp -p /etc/mongod2.conf /etc/mongod3.conf

[root@localhost logs]# vim /etc/mongod3.conf ## edit the configuration file for instance 3

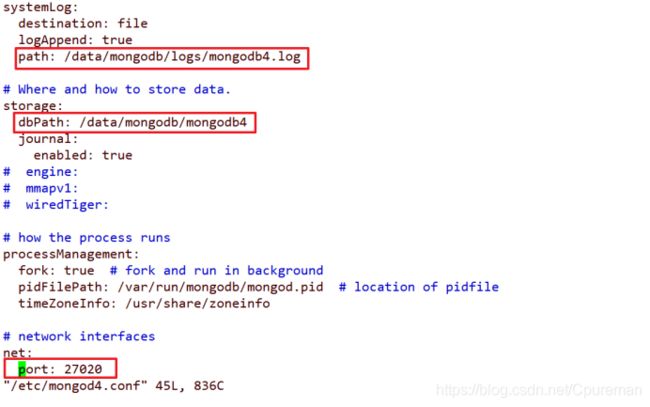

[root@localhost logs]# cp -p /etc/mongod2.conf /etc/mongod4.conf

[root@localhost logs]# vim /etc/mongod4.conf

[root@localhost logs]# mongo ##登录017的数据库

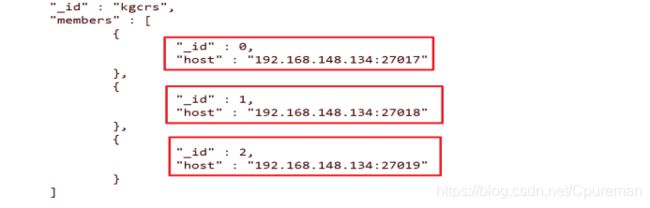

先将前三个实例做成复制集

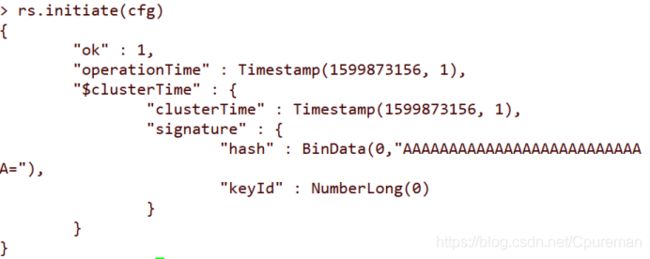

> cfg={"_id":"kgcrs","members":[{"_id":0,"host":"192.168.148.134:27017"},{"_id":1,"host":"192.168.148.134:27018"},{"_id":2,"host":"192.168.148.134:27019"}]}

> rs.initiate(cfg) ## initiate the replica set
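Typing the configuration document by hand is error-prone once there are several members. As a sketch, the same document can be generated from a list of ports; `buildReplSetConfig` is a hypothetical helper written for this article, and the result would still be passed to rs.initiate() in the mongo shell.

```javascript
// Build a replica set configuration document for instances on one host.
function buildReplSetConfig(setName, host, ports) {
  return {
    _id: setName,
    members: ports.map((port, index) => ({ _id: index, host: `${host}:${port}` })),
  };
}

const generatedCfg = buildReplSetConfig("kgcrs", "192.168.148.134", [27017, 27018, 27019]);
console.log(JSON.stringify(generatedCfg, null, 2));
```

The set name must match the replSetName value in every instance's configuration file, which is "kgcrs" in this walkthrough.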

kgcrs:PRIMARY> ## this instance has become the primary

[root@localhost logs]# mongo --port 27018 ## connect to 27018

kgcrs:SECONDARY> ## 27018 is a secondary

[root@localhost logs]# mongo --port 27019 ## connect to 27019

kgcrs:SECONDARY> ## 27019 is a secondary

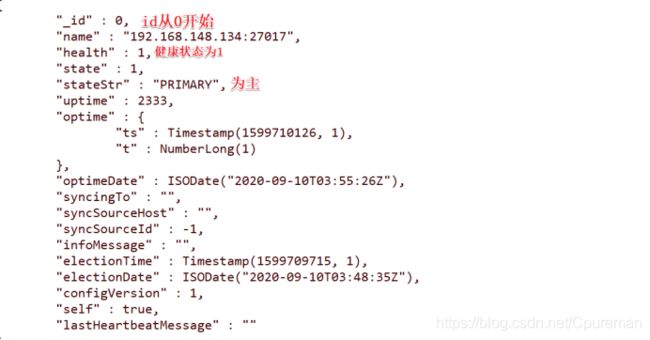

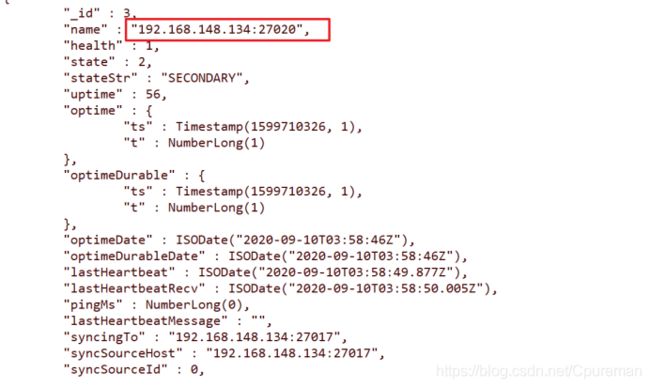

kgcrs:PRIMARY> rs.status() ##查看复制级状态

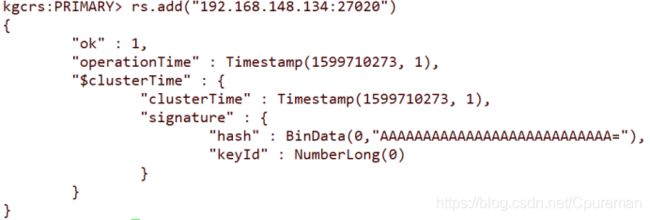

kgcrs:PRIMARY> rs.add("192.168.148.134:27020") ##添加27020的节点

kgcrs:PRIMARY> rs.status() ##查看节点是否添加

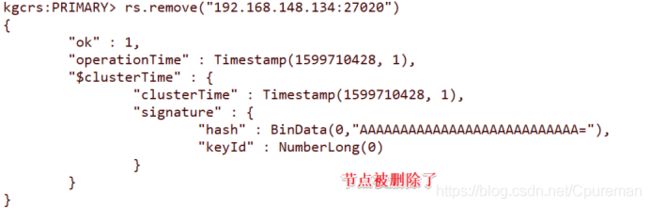

kgcrs:PRIMARY> rs.remove("192.168.148.134:27020")

故障转移切换

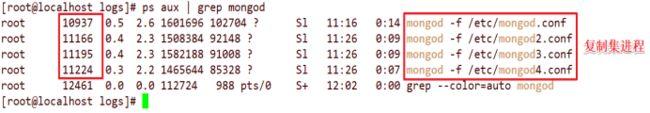

1.先查看进程

[root@localhost logs]# ps aux | grep mongod

模拟故障,复制集完成自动切换

2.将主数据库的进程删除,查看选举情况,哪台变为主键

[root@localhost logs]# kill -9 10937 ##将主键的PID号删除

[root@localhost logs]# mongo --port 27019 ##登录到27019的数据库

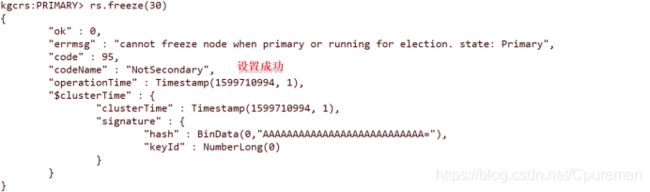

kgcrs:PRIMARY> rs.freeze(30) ##暂停30s不参与选举

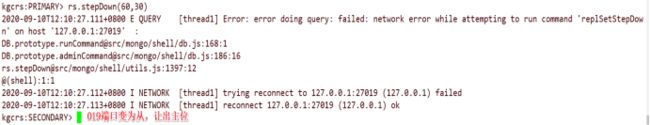

kgcrs:PRIMARY> rs.stepDown(60,30) ##交出主节点位置,维持从节点状态不少于60s,等待30s使主节点和从节点日志同步(交换主从)

[root@localhost logs]# mongo --port 27018 ##登录到018

kgcrs:PRIMARY> ##018变为主位

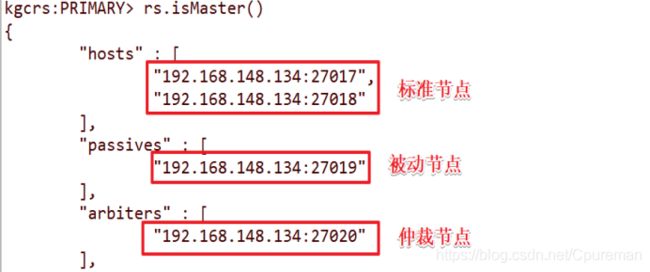

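Elections and replication

Member types: 1. standard node 2. arbiter node 3. passive node

Roles are assigned through priority: members with a high priority are standard nodes, members with a low priority are passive nodes, and the arbiter only votes

[root@localhost logs]# mongo ## set the priorities from instance 1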

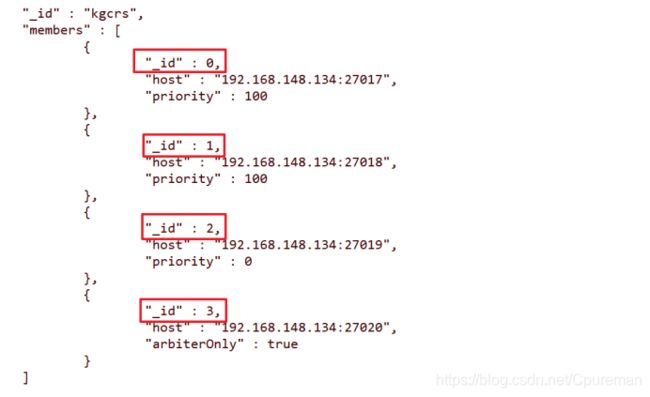

> cfg={"_id":"kgcrs","members":[{"_id":0,"host":"192.168.148.134:27017","priority":100},{"_id":1,"host":"192.168.148.134:27018","priority":100},{"_id":2,"host":"192.168.148.134:27019","priority":0},{"_id":3,"host":"192.168.148.134:27020","arbiterOnly":true}]}

> rs.initiate(cfg) ## initialize the set with cfg; 27017 becomes the primary

kgcrs:PRIMARY> rs.isMaster() ## show the role of each member
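To see how the configuration above maps onto the three member types, the sketch below classifies each member from its settings. The function `classifyMember` is hypothetical and follows this article's terminology, where a priority-0 member is the passive node.

```javascript
// Classify a member of a replica set configuration by its settings.
function classifyMember(member) {
  if (member.arbiterOnly === true) return "arbiter";
  // priority defaults to 1 when it is not set
  const priority = member.priority === undefined ? 1 : member.priority;
  return priority === 0 ? "passive" : "standard";
}

const electionCfg = {
  _id: "kgcrs",
  members: [
    { _id: 0, host: "192.168.148.134:27017", priority: 100 },
    { _id: 1, host: "192.168.148.134:27018", priority: 100 },
    { _id: 2, host: "192.168.148.134:27019", priority: 0 },
    { _id: 3, host: "192.168.148.134:27020", arbiterOnly: true },
  ],
};

const roles = electionCfg.members.map((m) => `${m.host} -> ${classifyMember(m)}`);
console.log(roles.join("\n"));
```

Only the two standard nodes can become primary; the passive node holds data and votes, and the arbiter votes without holding data.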

Inserting data

kgcrs:PRIMARY> use test ## switch to the test database

switched to db test

kgcrs:PRIMARY> db.t1.insert({"id":1,"name":"jack"}) ## insert a document with id 1 and name "jack"

WriteResult({ "nInserted" : 1 })

kgcrs:PRIMARY> db.t1.insert({"id":2,"name":"tom"}) ## insert a document with id 2 and name "tom"

WriteResult({ "nInserted" : 1 })

kgcrs:PRIMARY> db.t1.find()

{ "_id" : ObjectId("5f5c2ac07a3ce48c6ccb1dd4"), "id" : 1, "name" : "jack" }

{ "_id" : ObjectId("5f5c2ac97a3ce48c6ccb1dd5"), "id" : 2, "name" : "tom" }

Updating data

kgcrs:PRIMARY> db.t1.update({"id":1},{$set:{"name":"jerrt"}})

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

kgcrs:PRIMARY> db.t1.find()

{ "_id" : ObjectId("5f5c2ac07a3ce48c6ccb1dd4"), "id" : 1, "name" : "jerrt" }

{ "_id" : ObjectId("5f5c2ac97a3ce48c6ccb1dd5"), "id" : 2, "name" : "tom" }

kgcrs:PRIMARY>

Deleting data:

kgcrs:PRIMARY> db.t1.remove({"id":2})

WriteResult({ "nRemoved" : 1 })

kgcrs:PRIMARY> db.t1.find()

{ "_id" : ObjectId("5f5c2ac07a3ce48c6ccb1dd4"), "id" : 1, "name" : "jerrt" }

Viewing the oplog

kgcrs:PRIMARY> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB ## the local database holds the oplog

test 0.000GB

kgcrs:PRIMARY> use local

kgcrs:PRIMARY> show collections ## list the collections

me

oplog.rs ## the oplog itself

replset.election

replset.minvalid

startup_log

system.replset

system.rollback.id

kgcrs:PRIMARY> db.oplog.rs.find() ## list the oplog entries

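Simulating node failures and observing elections

[root@localhost logs]# mongod -f /etc/mongod.conf --shutdown ## take the primary (27017) down

[root@localhost logs]# mongo --port 27018 ## connect to 27018, the other standard node

kgcrs:PRIMARY> ## 27018 has become the primary

Now take 27018 down as well and check whether 27019 stands for election

[root@localhost logs]# mongod -f /etc/mongod2.conf --shutdown ## take 27018 down

[root@localhost logs]# mongo --port 27019

kgcrs:SECONDARY> ## 27019 (priority 0) does not stand for election and stays a secondary

Bring 27017 back up; it becomes the primary again

[root@localhost logs]# mongod -f /etc/mongod.conf ## restart the instance on port 27017

kgcrs:PRIMARY> ## it is the primary once more

Allowing reads on a secondary

[root@localhost logs]# mongo --port 27018 ## connect to the 27018 member

kgcrs:SECONDARY> show dbs ## database information is not shown

kgcrs:SECONDARY> rs.slaveOk() ## allow reads on this secondary (rs.secondaryOk() in newer shells)

kgcrs:SECONDARY> show dbs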

admin 0.000GB

config 0.000GB

local 0.000GB

test 0.000GB ## the databases are now listed

Viewing replication status

kgcrs:SECONDARY> rs.printReplicationInfo() ## show the oplog size and time range

configured oplog size: 1830.852294921875MB ## the oplog is about 1830 MB

log length start to end: 1842secs (0.51hrs)

oplog first event time: Sat Sep 12 2020 09:54:46 GMT+0800 (CST)

oplog last event time: Sat Sep 12 2020 10:25:28 GMT+0800 (CST)

now: Sat Sep 12 2020 10:25:33 GMT+0800 (CST)

kgcrs:SECONDARY> rs.printSlaveReplicationInfo() ## show how far each syncing member is behind the primary

source: 192.168.148.134:27018 ##27018

syncedTo: Sat Sep 12 2020 10:30:58 GMT+0800 (CST)

0 secs (0 hrs) behind the primary

source: 192.168.148.134:27019 ##27019

syncedTo: Sat Sep 12 2020 10:30:58 GMT+0800 (CST)

0 secs (0 hrs) behind the primary

Note that the arbiter is not listed: it does not sync data from the primary
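The "secs behind the primary" figure is just the difference between two timestamps. The sketch below reproduces that arithmetic with the times from the output above; `replicationLagSeconds` is a hypothetical helper, and the primary's last write time is assumed here to equal the reported syncedTo time.

```javascript
// Seconds by which a secondary trails the primary, never negative.
function replicationLagSeconds(primaryLastWrite, secondarySyncedTo) {
  const diffMs = primaryLastWrite.getTime() - secondarySyncedTo.getTime();
  return Math.max(0, diffMs / 1000);
}

// Times taken from the output above (GMT+0800); the primary time is an assumption.
const primaryLastWrite = new Date("2020-09-12T10:30:58+08:00");
const syncedTo = new Date("2020-09-12T10:30:58+08:00");

console.log(replicationLagSeconds(primaryLastWrite, syncedTo)); // caught up, so no lag

// A hypothetical secondary that last synced 45 seconds earlier.
const staleSyncedTo = new Date("2020-09-12T10:30:13+08:00");
console.log(replicationLagSeconds(primaryLastWrite, staleSyncedTo));
```

A lag that keeps growing toward the oplog's time window is the signal that the oplog needs to be larger.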

Changing the oplog size

1. Shut down the 27018 instance

kgcrs:SECONDARY> use admin

switched to db admin

kgcrs:SECONDARY> db.shutdownServer()

2. Comment out the replication settings in the configuration file

[root@localhost logs]# vim /etc/mongod2.conf

net:

  port: 27028 ## change the port to 27028

  bindIp: 0.0.0.0 # Listen to local interface only, comment to listen on all interfaces.

#replication: ## comment out the replication settings

# replSetName: kgcrs

[root@localhost logs]# mongod -f /etc/mongod2.conf ## restart the former 27018 instance; it now runs as a standalone on port 27028

[root@localhost logs]# mongodump --port 27028 --db local --collection 'oplog.rs' ## back up the oplog

[root@localhost logs]# mongo --port 27028

> use local ## switch to the local database

switched to db local

> show tables

me

oplog.rs

replset.election

replset.minvalid

replset.oplogTruncateAfterPoint

startup_log

system.replset

system.rollback.id

> db.oplog.rs.drop() ## drop the oplog collection

true


> db.runCommand( { create: "oplog.rs", capped: true, size: (2 * 1024 * 1024) } ) ## recreate the oplog collection; size is in bytes, so this is 2 MB

{ "ok" : 1 }
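The size argument of the create command is given in bytes, while the oplogSizeMB setting is given in megabytes, so it is easy to mix the two up. A small conversion sketch (the helper names `mbToBytes` and `bytesToMb` are hypothetical) shows what each value amounts to:

```javascript
const BYTES_PER_MB = 1024 * 1024;

// Convert megabytes to bytes, the unit the create command's size expects.
function mbToBytes(megabytes) {
  return megabytes * BYTES_PER_MB;
}

// Convert bytes back to megabytes, the unit rs.printReplicationInfo() reports.
function bytesToMb(bytes) {
  return bytes / BYTES_PER_MB;
}

console.log(bytesToMb(2 * 1024 * 1024)); // the size used in the command above, in MB
console.log(mbToBytes(2048)); // bytes needed for a 2048 MB oplog
```

The command above therefore creates a 2 MB oplog; a 2048 MB oplog would need a size of 2 * 1024 * 1024 * 1024 bytes.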

> use admin ## once that is done, stop the server

switched to db admin

> db.shutdownServer()

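3. Edit the configuration file: uncomment the replication settings and restore the port

net:

  port: 27018 ## change the port back to 27018

......

replication:

  replSetName: kgcrs

  oplogSizeMB: 2048 ## set the oplog size to 2048 MB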

[root@localhost logs]# mongod -f /etc/mongod2.conf

[root@localhost logs]# mongo --port 27018

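kgcrs:SECONDARY> rs.printReplicationInfo() ## check the oplog size; it reports 2 MB because the collection was recreated with size 2 * 1024 * 1024 bytes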

configured oplog size: 2MB

log length start to end: 240secs (0.07hrs)

oplog first event time: Sat Sep 12 2020 11:02:59 GMT+0800 (CST)

oplog last event time: Sat Sep 12 2020 11:06:59 GMT+0800 (CST)

now: Sat Sep 12 2020 11:07:04 GMT+0800 (CST)

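[root@localhost logs]# mongo ## connect to the primary

kgcrs:PRIMARY> rs.stepDown() ## give up the primary role

[root@localhost logs]# mongo --port 27018 ## connect to the 27018 member again

kgcrs:PRIMARY> ## 27018 has become the primary

Deploying a replica set with authentication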

kgcrs:PRIMARY> use admin

switched to db admin

kgcrs:PRIMARY> db.createUser({"user":"root","pwd":"123","roles":["root"]}) ## create a user

Successfully added user: { "user" : "root", "roles" : [ "root" ] }

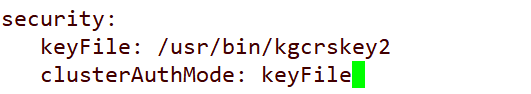

[root@localhost logs]# vim /etc/mongod.conf

security:

  keyFile: /usr/bin/kgcrskey1 ## path to the key file

  clusterAuthMode: keyFile ## authenticate members with the key file

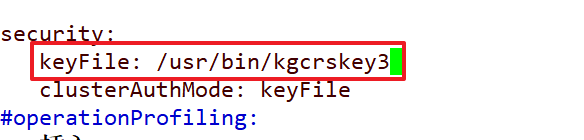

[root@localhost logs]# vim /etc/mongod2.conf

[root@localhost logs]# vim /etc/mongod3.conf

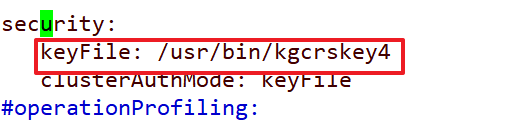

[root@localhost logs]# vim /etc/mongod4.conf

[root@localhost logs]# cd /usr/bin/

[root@localhost bin]# echo "kgcrs key" > kgcrskey1

[root@localhost bin]# echo "kgcrs key" > kgcrskey2

[root@localhost bin]# echo "kgcrs key" > kgcrskey3

[root@localhost bin]# echo "kgcrs key" > kgcrskey4

[root@localhost bin]# chmod 600 kgc* ## set the key file permissions to 600
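The short fixed string used above is fine for a lab, but a key file normally holds random content. As a sketch, assuming Node.js is available on the host, random key material can be generated like this; `generateKeyFileContent` is a hypothetical helper, and 756 bytes is chosen so that the base64 text stays within the 1024-character limit for key files.

```javascript
const crypto = require("crypto");

// Produce random base64 text suitable as key file content.
// 756 random bytes encode to 1008 base64 characters.
function generateKeyFileContent(numBytes = 756) {
  return crypto.randomBytes(numBytes).toString("base64");
}

const keyContent = generateKeyFileContent();
console.log(keyContent.length);
```

The same content has to be written to the key file of every member, for example with fs.writeFileSync(path, keyContent, { mode: 0o600 }), because members authenticate to each other by comparing this shared secret.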

Restart every instance so the change takes effect; the 27017 member is shown here as the example

[root@localhost bin]# mongod -f /etc/mongod.conf --shutdown

killing process with pid: 11907

[root@localhost bin]# mongod -f /etc/mongod.conf

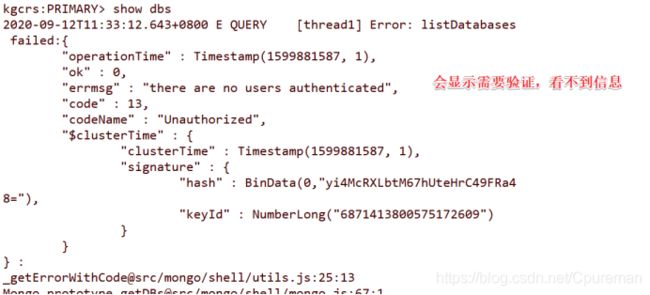

Connect to the primary and verify that authentication works

[root@localhost bin]# mongo --port 27018

kgcrs:PRIMARY> show dbs

kgcrs:PRIMARY> use admin

switched to db admin

kgcrs:PRIMARY> db.auth("root","123") ## authenticate as root

1

kgcrs:PRIMARY> show dbs ## database information is now visible

admin 0.000GB

config 0.000GB

local 0.000GB

test 0.000GB

kgcrs:PRIMARY>

On the secondaries, too, you must authenticate as root before database information can be viewed