python常用模块(三) —— pandas高级处理(2)

视频见:Python教程4天快速入手Python数据挖掘

1 缺失值处理

import pandas as pd

# 读取数据

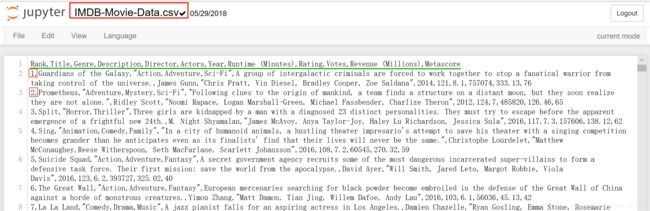

movie = pd.read_csv("./IMDB/IMDB-Movie-Data.csv")

In[1] : movie.head()1.1 处理nan

判断数据是否为NaN:pd.isnull(df),pd.notnull(df)

处理方式:

- 存在缺失值nan,并且是

np.nan:- 删除存在缺失值的:

.dropna(axis='rows', inplace=False),默认删除行- 注:不会修改原数据,需要接受返回值

- 替换缺失值:

.filna(value, inplace=False)- value:替换成的值

- inplace:

- True:会修改原数据

- False:不替换修改原数据,生成新的对象,默认

- 删除存在缺失值的:

- 不是缺失值nan,有默认标记的,比如“ ?”

1)判断是否存在NaN类型的缺失值

import numpy as np

In[1] : np.any(pd.isnull(movie)) # 返回True,说明数据中存在缺失值

Out[1]: True

In[1] : np.all(pd.notnull(movie)) # 返回False,说明数据中存在缺失值

Out[1]: False

In[1] : pd.isnull(movie).any()

Out[1]: Rank False

Title False

Genre False

Description False

Director False

Actors False

Year False

Runtime (Minutes) False

Rating False

Votes False

Revenue (Millions) True

Metascore True

dtype: bool

In[1] : pd.notnull(movie).all()

Out[1]: Rank True

Title True

Genre True

Description True

Director True

Actors True

Year True

Runtime (Minutes) True

Rating True

Votes True

Revenue (Millions) False

Metascore False

dtype: bool2)缺失值处理

# 方法1:删除含有缺失值的样本

data1 = movie.dropna()

In[1] : pd.notnull(data1).all()

Out[1]: Rank True

Title True

Genre True

Description True

Director True

Actors True

Year True

Runtime (Minutes) True

Rating True

Votes True

Revenue (Millions) True

Metascore True

dtype: bool

# 方法2:替换

# 含有缺失值的字段:Revenue(Millions),Metascore

movie["Revenue (Millions)"].fillna(movie["Revenue (Millions)"].mean(), inplace=True)

movie["Metascore"].fillna(movie["Metascore"].mean(), inplace=True)

In[1] : pd.notnull(movie).all() # 缺失值已经处理完毕,不存在缺失值

Out[1]: Rank True

Title True

Genre True

Description True

Director True

Actors True

Year True

Runtime (Minutes) True

Rating True

Votes True

Revenue (Millions) True

Metascore True

dtype: bool1.2 不是缺失值nan,有默认标记的

# 读取数据

path = "https://archive.ics.uci.edu/ml/machine-learning-databases/breast-cancer-wisconsin/breast-cancer-wisconsin.data"

data = pd.read_csv(path)

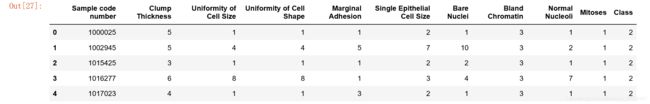

In[1] : data.head()name = ["Sample code number", "Clump Thickness", "Uniformity of Cell Size", "Uniformity of Cell Shape", "Marginal Adhesion", "Single Epithelial Cell Size", "Bare Nuclei", "Bland Chromatin", "Normal Nucleoli", "Mitoses", "Class"]

data = pd.read_csv(path, names=name)

In[1] : data.head()- 1)先替换

?为np.nantf.replace(to_replace=, value=)- to_ replace:替换前的值

- value:替换后的值

# 把一些其它值标记的缺失值,替换成np.nan

data_new = data.replace(to_replace="?", value=np.nan)

In[1] : data_new.head()- 2)在进行缺失值的处理

# 删除缺失值

data_new.dropna(inplace=True)

In[1] : data_new.isnull().any() # 全部返回False说明不存在缺失值了

Out[1]: Sample code number False

Clump Thickness False

Uniformity of Cell Size False

Uniformity of Cell Shape False

Marginal Adhesion False

Single Epithelial Cell Size False

Bare Nuclei False

Bland Chromatin False

Normal Nucleoli False

Mitoses False

Class False

dtype: bool

In[1] : type(np.nan)

Out[1]: float2 数据离散化

2.1 什么是数据离散化

连续属性的离散化就是将连续属性的值域上,将值域划分为若干个离散的区间,最后用不同的符号或整数值代表落在每个子区间中的属性值。

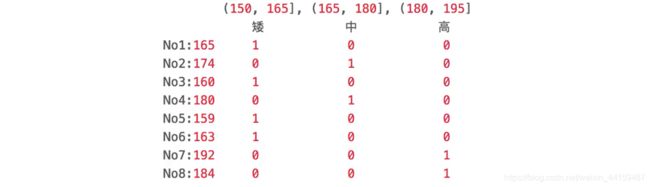

离散化有很多种方法,这使用一种最简单的方式:

- 原始的身高数据:165,174,160,180,159,163,192,184

- 假设按照身高分三个区间段,对应的标记为矮、中、高三个类别:(150,165],(165,180],(180,195]

2.2 为什么要进行数据离散化

连续属性离散化的目的是为了简化数据结构,数据离散化技术可以用来减少给定连续属性值的个数。离散化方法经常作为数据挖掘的工具。

2.3 如何实现数据的离散化

流程:

- 对数据进行分组

- 自动分组:

pd.qcut(data, bins),bin,组数 - 自定义分组:

pd.cut(data,bins) - 将数据分组一般会与

value_counts搭配使用,统计每组的个数series.value_counts():统计分组次数

- 自动分组:

- 对分好组的数据求哑变量

pandas.get_dummies(data, prefix=None)- data:array-like, Series, or DataFrame

- prefix:分组名字

# 1)准备数据

data = pd.Series([165,174,160,180,159,163,192,184], index=['No1:165', 'No2:174','No3:160', 'No4:180', 'No5:159', 'No6:163', 'No7:192', 'No8:184'])

In[1] : data

Out[1]: No1:165 165

No2:174 174

No3:160 160

No4:180 180

No5:159 159

No6:163 163

No7:192 192

No8:184 184

dtype: int64

# 2)分组

# 自动分组

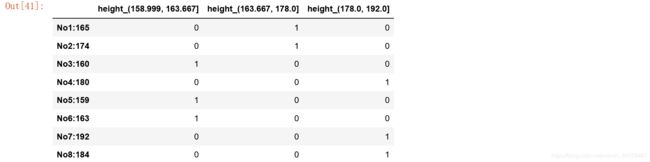

sr = pd.qcut(data, 3)

In[1] : sr

Out[1]: No1:165 (163.667, 178.0]

No2:174 (163.667, 178.0]

No3:160 (158.999, 163.667]

No4:180 (178.0, 192.0]

No5:159 (158.999, 163.667]

No6:163 (158.999, 163.667]

No7:192 (178.0, 192.0]

No8:184 (178.0, 192.0]

dtype: category

Categories (3, interval[float64]): [(158.999, 163.667] < (163.667, 178.0] < (178.0, 192.0]]

In[1] : type(sr)

Out[1]: pandas.core.series.Series

# 统计每组的个数

In[1] : sr.value_counts()

Out[1]: (178.0, 192.0] 3

(158.999, 163.667] 3

(163.667, 178.0] 2

dtype: int64

# 3)转换成one-hot编码

In[1] : pd.get_dummies(sr, prefix="height")# 自定义分组

bins = [150, 165, 180, 195]

sr = pd.cut(data, bins) # 对数据进行分组(相当于进行分类)

In[1] : sr

Out[1]: No1:165 (150, 165]

No2:174 (165, 180]

No3:160 (150, 165]

No4:180 (165, 180]

No5:159 (150, 165]

No6:163 (150, 165]

No7:192 (180, 195]

No8:184 (180, 195]

dtype: category

Categories (3, interval[int64]): [(150, 165] < (165, 180] < (180, 195]]

In[1] : sr.value_counts()

Out[1]: (150, 165] 4

(180, 195] 2

(165, 180] 2

dtype: int64

# get_dummies

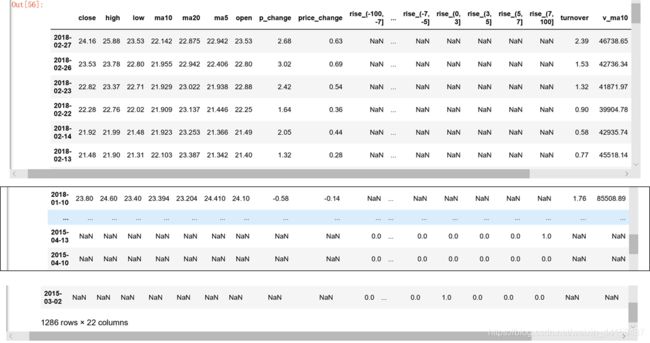

In[1] : pd.get_dummies(sr, prefix="身高")2.4 案例:股票的涨跌幅离散化

对股票每日的" p_change"进行离散化

2.4.1 读取股票的数据

先读取股票的数据,筛选出p_change数据

stock = pd.read_csv("./stock_day/stock_day.csv")

p_change = stock["p_change"]

In[1] : p_change.head()

Out[1]: 2018-02-27 2.68

2018-02-26 3.02

2018-02-23 2.42

2018-02-22 1.64

2018-02-14 2.05

Name: p_change, dtype: float642.4.2 将股票涨跌幅数据进行分组

# 1)自动分组

sr = pd.qcut(p_change, 10)

# 2)计算每组的数据个数

In[1] : sr.value_counts()

Out[1]: (5.27, 10.03] 65

(0.26, 0.94] 65

(-0.462, 0.26] 65

(-10.030999999999999, -4.836] 65

(2.938, 5.27] 64

(1.738, 2.938] 64

(-1.352, -0.462] 64

(-2.444, -1.352] 64

(-4.836, -2.444] 64

(0.94, 1.738] 63

Name: p_change, dtype: int64

# 3)离散化

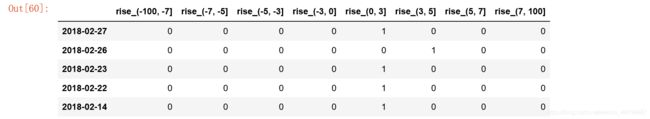

In[1] : pd.get_dummies(sr, prefix="涨跌幅")# 1)自定义分组

bins = [-100, -7, -5, -3, 0, 3, 5, 7, 100]

sr = pd.cut(p_change, bins)

# 2)计算每组的数据个数

In[1] : sr.value_counts()

Out[1]: (0, 3] 215

(-3, 0] 188

(3, 5] 57

(-5, -3] 51

(7, 100] 35

(5, 7] 35

(-100, -7] 34

(-7, -5] 28

Name: p_change, dtype: int64

# one-hot

In[1] : pd.get_dummies(sr, prefix="rise").head()3 合并

3.1 pd.concat( )实现合并

pd.concat([data1, data2], axis=0)- 按照行或列进行合并,axis=0为列索引(默认),axis=1为行索引

# 处理好的one-hot编码与原数据合并

# 按行拼接

In[1] : pd.concat([stock, stock_change], axis=1)# 按列拼接

In[1] : pd.concat([stock, stock_change], axis=0).head()3.2 pd.merge( )合并

pd_merge(left, right, how='inner', on=None, left_on=None, right_on=None, left_index=False, right_index=False, sort=True, suffixes=('_x','_y'), copy=True, indicator=False, validate=None)- 可以指定按照两组数据的共同键值对合并或者左右各自

left:A Data Frame object. 左表right:Another dataFrame object. 右表on:Columns(names) to join on. Must be found in both the left and right DataFrame objects. 索引- left_on=None,right_on=None:指定左右键

how:如何拼接,默认’inner’(应用最多)

| Merge method | SQL Join Name | Description |

|---|---|---|

| left | LEFT OUTER JOIN(左连接) | Use keys from left frame only |

| right | RIGHT OUTER JOIN(右连接) | Use keys from right frame only |

| outer | FULL OUTER JOIN(外连接) | Use union of keys from both frames |

| inner | INNER JOIN(内连接) | Use intersection of keys from both frames |

left = pd.DataFrame({

'key1': ['K0', 'K0', 'K1', 'K2'],

'key2': ['K0', 'K1', 'K0', 'K1'],

'A': ['A0', 'A1', 'A2', 'A3'],

'B': ['B0', 'B1', 'B2', 'B3']})

right = pd.DataFrame({

'key1': ['K0', 'K1', 'K1', 'K2'],

'key2': ['K0', 'K0', 'K0', 'K0'],

'C': ['C0', 'C1', 'C2', 'C3'],

'D': ['D0', 'D1', 'D2', 'D3']})

In[1]: left

In[2]: right# 内连接合并,保留"key1", "key2"共有键

In[1] : pd.merge(left, right, how="inner", on=["key1", "key2"])

# 左连接合并,仅保留左表"key1", "key2"键

In[2] : pd.merge(left, right, how="left", on=["key1", "key2"])

# 外连接合并,保留所有"key1", "key2"键

In[3] : pd.merge(left, right, how="outer", on=["key1", "key2"])

# 右连接合并,仅保留右表"key1", "key2"键

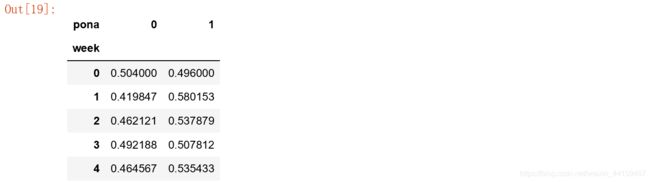

In[4] : pd.merge(left, right, how="right", on=["key1", "key2"])4 交叉表与透视表

- 找到、探索两个变量之间的关系

4.1 交叉表与透视表的作用

探究股票的涨跌与星期几有关:以下图当中表示,week代表星期几,1,0代表这一天股票的涨跌幅是好还是坏,里面的数据代表涨跌幅好坏的比例

| week \ posi_neg | 0 | 1 |

|---|---|---|

| 0 | 0.504000 | 0.49600 |

| 1 | 0.419847 | 0.580153 |

| 2 | 0.462121 | 0.537879 |

| 3 | 0.492188 | 0.507812 |

| 4 | 0.464567 | 0.535433 |

4.2 使用crosstab(交叉表)实现

- 交叉表:用于计算一列数据对于另外一列数据的分组个数(寻找两个列之间的关系)

pd_crosstab(value1, value2)

4.2.1 数据准备

- 准备两列数据,星期数据以及涨跌幅是好是坏数据

- 进行交叉表计算

# pd.crosstab(星期数据列, 涨跌幅数据列)

# 准备星期数据列

In[1] : stock.index

Out[1]: Index(['2018-02-27', '2018-02-26', '2018-02-23', '2018-02-22', '2018-02-14',

'2018-02-13', '2018-02-12', '2018-02-09', '2018-02-08', '2018-02-07',

...

'2015-03-13', '2015-03-12', '2015-03-11', '2015-03-10', '2015-03-09',

'2015-03-06', '2015-03-05', '2015-03-04', '2015-03-03', '2015-03-02'],

dtype='object', length=643)

# pandas日期类型

# 1、先根据对应的日期找到星期几

date = pd.to_datetime(stock.index)

In[1] : date

Out[1]: DatetimeIndex(['2018-02-27', '2018-02-26', '2018-02-23', '2018-02-22',

'2018-02-14', '2018-02-13', '2018-02-12', '2018-02-09',

'2018-02-08', '2018-02-07',

...

'2015-03-13', '2015-03-12', '2015-03-11', '2015-03-10',

'2015-03-09', '2015-03-06', '2015-03-05', '2015-03-04',

'2015-03-03', '2015-03-02'],

dtype='datetime64[ns]', length=643, freq=None)

In[1] : date.weekday # 星期

Out[1]: Int64Index([1, 0, 4, 3, 2, 1, 0, 4, 3, 2,

...

4, 3, 2, 1, 0, 4, 3, 2, 1, 0],

dtype='int64', length=643)

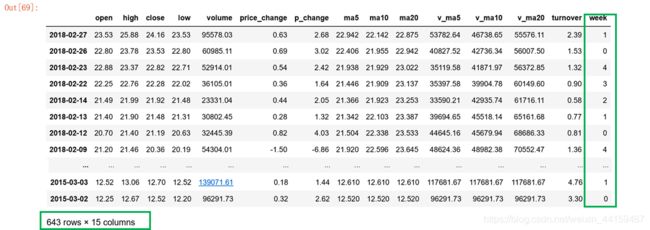

stock["week"] = date.weekday

In[1] : stock# 准备涨跌幅数据列

stock["pona"] = np.where(stock["p_change"] > 0, 1, 0)

In[1] : stock.head()4.2.2 交叉表计算

data = pd.crosstab(stock["week"], stock["pona"])

In[1] : dataIn[1] : data.sum(axis=1) # 按行求和

Out[1]: week

0 125

1 131

2 132

3 128

4 127

dtype: int64

In[1] : data.div(data.sum(axis=1), axis=0)In[1] : data.div(data.sum(axis=1), axis=0).plot(kind="bar", stacked=True)

Out[1]: <matplotlib.axes._subplots.AxesSubplot at 0x10badf7f0>4.3 pivot_table( )

DataFrame.pivot_ table([ ], index=[])- 使用透视表,刚才的过程更加简单

# 透视表操作

In[1] : stock.pivot_table(["pona"], index=["week"])5 分组与聚合

分组与聚合通常是分析数据的一种方式,通常与一些统计函数一起使用,查看数据的分组情况

想一想其实刚才的交叉表与透视表也有分组的功能,所以算是分组的一种形式,只不过他们主要是计算次数或者计算比例

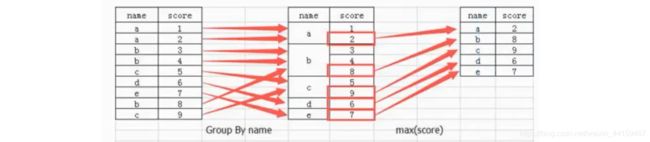

5.1 什么是分组与聚合

5.2 分组与聚合API

DataFrame.groupby(key, as_index=False)- key:分组的列数据,可以多个

- 案例不同颜色的不同笔的价格数据

col =pd.DataFrame({

'color': ['white','red','green','red','green'], 'object': ['pen','pencil','pencil','ashtray','pen'],'price1':[5.56,4.20,1.30,0.56,2.75],'price2':[4.75,4.12,1.60,0.75,3.15]})

In[1] : col# 进行分组,对颜色分组,price1进行聚合

# 用dataframe的方法进行分组聚合

In[1] : col.groupby(by="color")["price1"].max()

Out[1]: color

green 2.75

red 4.20

white 5.56

Name: price1, dtype: float64

# 用series的方法进行分组聚合

In[1] : col["price1"].groupby(col["color"]).max()

Out[1]: color

green 2.75

red 4.20

white 5.56

Name: price1, dtype: float64

col.groupby(by="color")不会输出结果,分组聚合同时使用才会输出

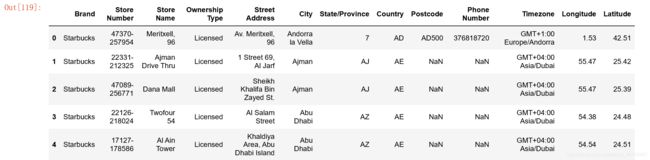

5.3 星巴克零售店铺数据案例

案例:统计全球星巴克的数据,比较美国和中国哪个国家店铺多,或者中国每个省份星巴克的数量的情况。

数据来源https://www.kaggle.com/starbucks/store-locations/data

5.3.1 数据获取

starbucks = pd.read_csv("directory.csv")

In[1] : starbucks.head()5.3.2 进行分组聚合

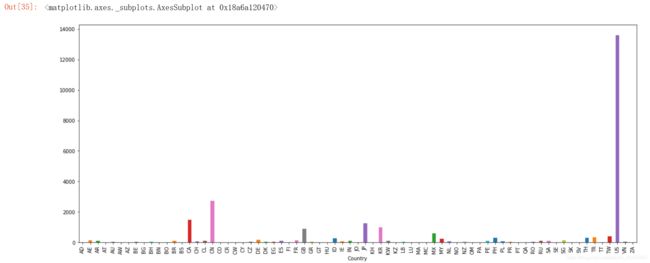

# 按照国家分组,求出每个国家的星巴克零售店数量

count = starbucks.groupby(['Country']).count()

In[1] : count# 画图显示结果

count['Brand].plot(kind='bar', figsize=(20, 8))

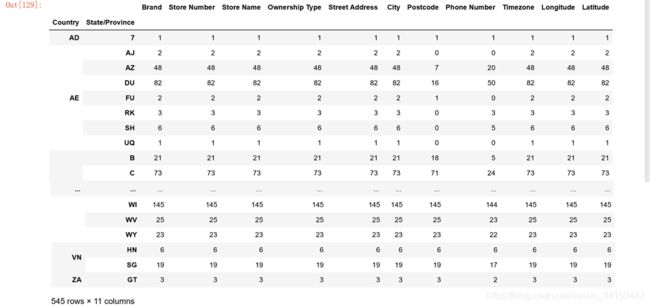

plt.show()# 按照国家分组,求出每个国家的星巴克零售店数量

In[1] : starbucks.groupby("Country").count()["Brand"].sort_values(ascending=False)[:10].plot(kind="bar", figsize=(20, 8), fontsize=40)

Out[1]: <matplotlib.axes._subplots.AxesSubplot at 0x10c7da668># 假设加入省市一起进行分组

In[1] : starbucks.groupby(by=["Country", "State/Province"]).count()6 综合案例

6.1 读取数据

数据来源于2006年到2016年1000部最流行的电影数据:https://www.kaggle.com/damianpanek/sunday-eda/data

# 1、准备数据

movie = pd.read_csv("./IMDB/IMDB-Movie-Data.csv")

In[1] : movie6.2 数据处理

问题1: 获取电影数据中评分(Rating)的平均分,导演(Director)的人数

# 评分的平均分

In[1] : movie["Rating"].mean()

Out[1]: 6.723199999999999

# 导演的人数

In[1] : np.unique(movie["Director"]).size

Out[1]: 644

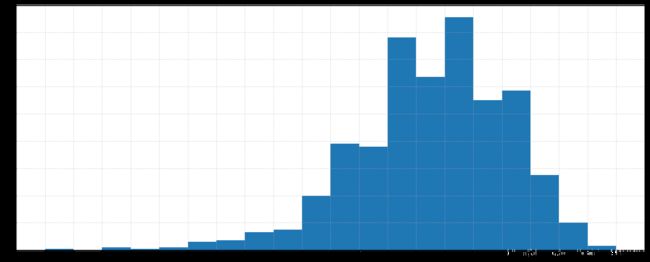

问题2: 获取rating,runtime的分布情况,并呈现数据

In[1] : movie["Rating"].plot(kind="hist", figsize=(20, 8))

Out[1]:<matplotlib.axes._subplots.AxesSubplot at 0x10c85a390>import matplotlib.pyplot as plt

# 1、创建画布

plt.figure(figsize=(20, 8), dpi=80)

# 2、绘制直方图

plt.hist(movie["Rating"], 20)

# 修改刻度

plt.xticks(np.linspace(movie["Rating"].min(), movie["Rating"].max(), 21))

# 添加网格

plt.grid(linestyle="--", alpha=0.5)

# 3、显示图像

plt.show()- 思路分析

- 创建一个全为0的 dataframe,列索引置为电影的分类,temp_df

- 遍历每一部电影,temp_df中把分类出现的列的值置为1

- 求和

先统计电影类别都有哪些

movie_genre = [i.split(",") for i in movie["Genre"]]

In[1] : movie_genreIn[1] : [j for i in movie_genre for j in i]movie_class = np.unique([j for i in movie_genre for j in i])

In[1] : len(movie_class)

Out[1]: 20

In[1] : movie

创建一个全为0的dataframe,列索引置为电影的分类,统计每个类别有几个电影

count = pd.DataFrame(np.zeros(shape=[1000, 20], dtype="int32"), columns=movie_class)

In[1] : count.head()for i in range(1000):

count.ix[i, movie_genre[i]] = 1

In[1] : count.head()count.sum(axis=0).sort_values(ascending=False).plot(kind="bar", figsize=(20, 9), fontsize=40, colormap="cool")