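Hive source code reading -- CliDriver

Note: these are notes from my own tinkering. This is a draft and will be revised later.

Version: hive-1.2.1

The code is read by stepping through a query in the debugger.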

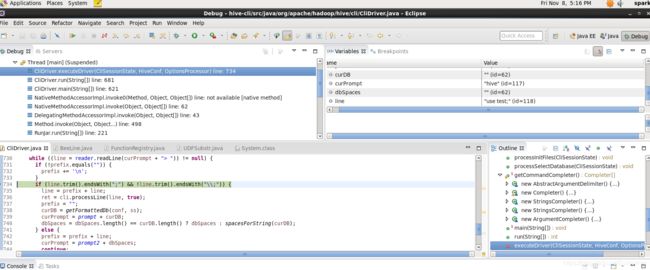

Start hive-cli in debug mode and enter `use test;`. The statement is read by the prompt loop in `CliDriver.executeDriver`:

```java
private int executeDriver(CliSessionState ss, HiveConf conf, OptionsProcessor oproc)
    throws Exception {

  CliDriver cli = new CliDriver();
  cli.setHiveVariables(oproc.getHiveVariables());

  // use the specified database if specified
  cli.processSelectDatabase(ss);

  // Execute -i init files (always in silent mode)
  cli.processInitFiles(ss);

  if (ss.execString != null) {
    int cmdProcessStatus = cli.processLine(ss.execString);
    return cmdProcessStatus;
  }

  try {
    if (ss.fileName != null) {
      return cli.processFile(ss.fileName);
    }
  } catch (FileNotFoundException e) {
    System.err.println("Could not open input file for reading. (" + e.getMessage() + ")");
    return 3;
  }

  setupConsoleReader();

  String line;
  int ret = 0;
  String prefix = "";
  String curDB = getFormattedDb(conf, ss);
  String curPrompt = prompt + curDB;
  String dbSpaces = spacesForString(curDB);

  while ((line = reader.readLine(curPrompt + "> ")) != null) { // interactive prompt loop: step through from here
    if (!prefix.equals("")) {
      prefix += '\n';
    }
    if (line.trim().endsWith(";") && !line.trim().endsWith("\\;")) {
      line = prefix + line;
      ret = cli.processLine(line, true);
      prefix = "";
      curDB = getFormattedDb(conf, ss);
      curPrompt = prompt + curDB;
      dbSpaces = dbSpaces.length() == curDB.length() ? dbSpaces : spacesForString(curDB);
    } else {
      prefix = prefix + line;
      curPrompt = prompt2 + dbSpaces;
      continue;
    }
  }

  return ret;
}
```
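The buffering logic of the prompt loop can be isolated into a small, side-effect-free sketch. `StatementAccumulator` and `accumulate` are hypothetical names, not Hive APIs; the body copies the `prefix` handling from the loop above (a line ending in `;` but not in `\;` completes a statement, anything else is buffered with a `\n` separator) and returns the strings that would be passed to `cli.processLine(line, true)` instead of calling it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper (not part of Hive): replays the prefix buffering of the
// prompt loop in CliDriver.executeDriver (hive-1.2.1) over a list of input lines.
public class StatementAccumulator {

    /** Returns the statements the prompt loop would hand to cli.processLine(...). */
    public static List<String> accumulate(List<String> inputLines) {
        List<String> dispatched = new ArrayList<>();
        String prefix = "";
        for (String line : inputLines) {
            if (!prefix.equals("")) {
                prefix += '\n'; // continuation lines are joined with a newline
            }
            String trimmed = line.trim();
            if (trimmed.endsWith(";") && !trimmed.endsWith("\\;")) {
                dispatched.add(prefix + line); // statement complete: dispatch it
                prefix = "";
            } else {
                prefix = prefix + line; // no terminating ';' yet: keep buffering
            }
        }
        return dispatched; // anything still in prefix is an unfinished statement
    }

    public static void main(String[] args) {
        System.out.println(accumulate(Arrays.asList("use test;")));
        System.out.println(accumulate(Arrays.asList("select *", "from t;")));
    }
}
```

A trailing `\;` only postpones dispatch at this stage; the escape itself is resolved later, when `processLine` splits the line on `;`.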

For this input `line = "use test;"` and `prefix = ""`, so the loop calls `ret = cli.processLine(line, true);`.

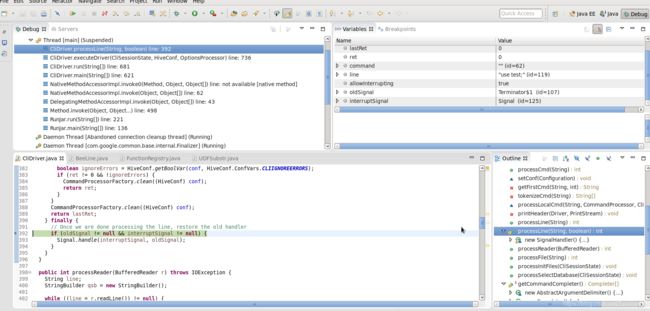

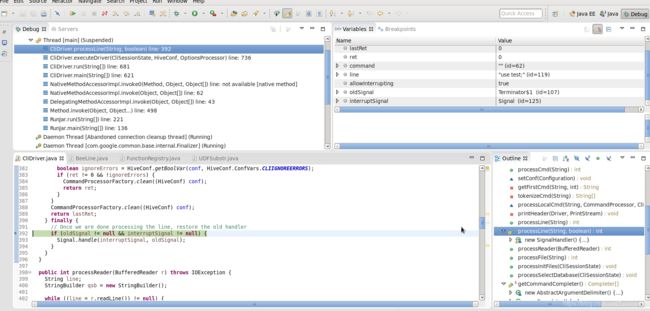

```java
public int processLine(String line, boolean allowInterrupting) {
  SignalHandler oldSignal = null;
  Signal interruptSignal = null;

  if (allowInterrupting) {
    // Remember all threads that were running at the time we started line processing.
    // Hook up the custom Ctrl+C handler while processing this line
    interruptSignal = new Signal("INT");
    oldSignal = Signal.handle(interruptSignal, new SignalHandler() {
      private final Thread cliThread = Thread.currentThread();
      private boolean interruptRequested;

      @Override
      public void handle(Signal signal) {
        boolean initialRequest = !interruptRequested;
        interruptRequested = true;

        // Kill the VM on second ctrl+c
        if (!initialRequest) {
          console.printInfo("Exiting the JVM");
          System.exit(127);
        }

        // Interrupt the CLI thread to stop the current statement and return
        // to prompt
        console.printInfo("Interrupting... Be patient, this might take some time.");
        console.printInfo("Press Ctrl+C again to kill JVM");

        // First, kill any running MR jobs
        HadoopJobExecHelper.killRunningJobs();
        TezJobExecHelper.killRunningJobs();
        HiveInterruptUtils.interrupt();
      }
    });
  }

  try {
    int lastRet = 0, ret = 0;

    String command = "";
    for (String oneCmd : line.split(";")) {
      if (StringUtils.endsWith(oneCmd, "\\")) {
        command += StringUtils.chop(oneCmd) + ";";
        continue;
      } else {
        command += oneCmd;
      }
      if (StringUtils.isBlank(command)) {
        continue;
      }

      ret = processCmd(command); // hand each complete command to processCmd
      // wipe cli query state
      SessionState ss = SessionState.get();
      ss.setCommandType(null);
      command = "";
      lastRet = ret;
      boolean ignoreErrors = HiveConf.getBoolVar(conf, HiveConf.ConfVars.CLIIGNOREERRORS);
      if (ret != 0 && !ignoreErrors) {
        CommandProcessorFactory.clean((HiveConf) conf);
        return ret;
      }
    }
    CommandProcessorFactory.clean((HiveConf) conf);
    return lastRet;
  } finally {
    // Once we are done processing the line, restore the old handler
    if (oldSignal != null && interruptSignal != null) {
      Signal.handle(interruptSignal, oldSignal);
    }
  }
}
```
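The splitting step of `processLine` can be reproduced with plain JDK calls. `LineSplitter.split` is a hypothetical name; it assumes `StringUtils.chop` (drop the last character) and `StringUtils.isBlank` behave like `substring(0, len - 1)` and `trim().isEmpty()` for these inputs, and it collects the commands instead of calling `processCmd`, leaving out the error handling.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch (not a Hive API) of how processLine cuts a line into
// commands: split on ';', then re-join pieces whose ';' was escaped as "\;".
public class LineSplitter {

    public static List<String> split(String line) {
        List<String> commands = new ArrayList<>();
        String command = "";
        for (String oneCmd : line.split(";")) {
            if (oneCmd.endsWith("\\")) {
                // "\;" was escaped: drop the backslash, restore the ';', keep going
                command += oneCmd.substring(0, oneCmd.length() - 1) + ";";
                continue;
            }
            command += oneCmd;
            if (command.trim().isEmpty()) {
                continue; // skip empty statements such as ";;"
            }
            commands.add(command); // processLine would call processCmd(command) here
            command = "";
        }
        return commands;
    }

    public static void main(String[] args) {
        System.out.println(split("use test;"));
        System.out.println(split("use test; show tables;"));
        System.out.println(split("set a=x\\;y;"));
    }
}
```

Because the split happens before any SQL parsing, a `;` inside a string literal is also treated as a separator unless it is written as `\;`.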

Each non-blank command is passed to `processCmd`:

```java
public int processCmd(String cmd) {
  CliSessionState ss = (CliSessionState) SessionState.get();
  ss.setLastCommand(cmd);
  // Flush the print stream, so it doesn't include output from the last command
  ss.err.flush();
  String cmd_trimmed = cmd.trim();
  String[] tokens = tokenizeCmd(cmd_trimmed);
  int ret = 0;

  if (cmd_trimmed.toLowerCase().equals("quit") || cmd_trimmed.toLowerCase().equals("exit")) {

    // if we have come this far - either the previous commands
    // are all successful or this is command line. in either case
    // this counts as a successful run
    ss.close();
    System.exit(0);

  } else if (tokens[0].equalsIgnoreCase("source")) {
    String cmd_1 = getFirstCmd(cmd_trimmed, tokens[0].length());
    cmd_1 = new VariableSubstitution().substitute(ss.getConf(), cmd_1);

    File sourceFile = new File(cmd_1);
    if (! sourceFile.isFile()){
      console.printError("File: "+ cmd_1 + " is not a file.");
      ret = 1;
    } else {
      try {
        ret = processFile(cmd_1);
      } catch (IOException e) {
        console.printError("Failed processing file "+ cmd_1 +" "+ e.getLocalizedMessage(),
          stringifyException(e));
        ret = 1;
      }
    }
  } else if (cmd_trimmed.startsWith("!")) {

    String shell_cmd = cmd_trimmed.substring(1);
    shell_cmd = new VariableSubstitution().substitute(ss.getConf(), shell_cmd);

    // shell_cmd = "/bin/bash -c \'" + shell_cmd + "\'";
    try {
      ShellCmdExecutor executor = new ShellCmdExecutor(shell_cmd, ss.out, ss.err);
      ret = executor.execute();
      if (ret != 0) {
        console.printError("Command failed with exit code = " + ret);
      }
    } catch (Exception e) {
      console.printError("Exception raised from Shell command " + e.getLocalizedMessage(),
          stringifyException(e));
      ret = 1;
    }
  } else { // local mode
    try {
      CommandProcessor proc = CommandProcessorFactory.get(tokens, (HiveConf) conf);
      ret = processLocalCmd(cmd, proc, ss);
    } catch (SQLException e) {
      console.printError("Failed processing command " + tokens[0] + " " + e.getLocalizedMessage(),
        org.apache.hadoop.util.StringUtils.stringifyException(e));
      ret = 1;
    }
  }

  return ret;
}
```

Since `use test` is not `quit`/`exit`, is not a `source` command, and does not start with `!`, it falls through to the final `else` (local mode) branch.
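The branch order in `processCmd` is easiest to see as a classifier. `CmdRouter`, `Kind` and `classify` are hypothetical names; `tokenizeCmd` is assumed to split on whitespace, `equalsIgnoreCase` is used in place of `toLowerCase().equals(...)`, and the sketch only reports which branch would run instead of running it.

```java
// Hypothetical sketch (not a Hive API) of the dispatch order in processCmd.
public class CmdRouter {

    enum Kind { EXIT, SOURCE, SHELL, LOCAL }

    static Kind classify(String cmd) {
        String trimmed = cmd.trim();
        String[] tokens = trimmed.split("\\s+"); // assumed equivalent of tokenizeCmd
        if (trimmed.equalsIgnoreCase("quit") || trimmed.equalsIgnoreCase("exit")) {
            return Kind.EXIT;   // ss.close(); System.exit(0)
        } else if (tokens[0].equalsIgnoreCase("source")) {
            return Kind.SOURCE; // processFile(<path after "source">)
        } else if (trimmed.startsWith("!")) {
            return Kind.SHELL;  // ShellCmdExecutor runs the rest of the line
        }
        return Kind.LOCAL;      // CommandProcessorFactory.get(...) + processLocalCmd
    }

    public static void main(String[] args) {
        for (String c : new String[] {"use test", " quit ", "source /tmp/a.sql", "!ls -l"}) {
            System.out.println(c.trim() + " -> " + classify(c));
        }
    }
}
```

The order matters: `quit`/`exit` must match the whole trimmed command, so an input such as `quit now` is not treated as an exit and falls through to the local branch.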

The local-mode `else` branch of `processCmd` obtains a `CommandProcessor` from `CommandProcessorFactory.get(tokens, (HiveConf) conf)` and passes it to `processLocalCmd`. (`use` is not one of the special commands such as `set` or `dfs`, so a `Driver` is expected here and the `Driver` branch runs.)

```java
int processLocalCmd(String cmd, CommandProcessor proc, CliSessionState ss) {
  int tryCount = 0;
  boolean needRetry;
  int ret = 0;

  do {
    try {
      needRetry = false;
      if (proc != null) {
        if (proc instanceof Driver) {
          Driver qp = (Driver) proc;
          PrintStream out = ss.out;
          long start = System.currentTimeMillis();
          if (ss.getIsVerbose()) {
            out.println(cmd);
          }

          qp.setTryCount(tryCount);
          ret = qp.run(cmd).getResponseCode();
          if (ret != 0) {
            qp.close();
            return ret;
          }

          // query has run capture the time
          long end = System.currentTimeMillis();
          double timeTaken = (end - start) / 1000.0;

          ArrayList<String> res = new ArrayList<String>();

          printHeader(qp, out);

          // print the results
          int counter = 0;
          try {
            if (out instanceof FetchConverter) {
              ((FetchConverter) out).fetchStarted();
            }
            while (qp.getResults(res)) {
              for (String r : res) {
                out.println(r);
              }
              counter += res.size();
              res.clear();
              if (out.checkError()) {
                break;
              }
            }
          } catch (IOException e) {
            console.printError("Failed with exception " + e.getClass().getName() + ":"
                + e.getMessage(), "\n"
                + org.apache.hadoop.util.StringUtils.stringifyException(e));
            ret = 1;
          }

          int cret = qp.close();
          if (ret == 0) {
            ret = cret;
          }

          if (out instanceof FetchConverter) {
            ((FetchConverter) out).fetchFinished();
          }

          console.printInfo("Time taken: " + timeTaken + " seconds" +
              (counter == 0 ? "" : ", Fetched: " + counter + " row(s)"));
        } else {
          String firstToken = tokenizeCmd(cmd.trim())[0];
          String cmd_1 = getFirstCmd(cmd.trim(), firstToken.length());

          if (ss.getIsVerbose()) {
            ss.out.println(firstToken + " " + cmd_1);
          }
          CommandProcessorResponse res = proc.run(cmd_1);
          if (res.getResponseCode() != 0) {
            ss.out.println("Query returned non-zero code: " + res.getResponseCode() +
                ", cause: " + res.getErrorMessage());
          }
          ret = res.getResponseCode();
        }
      }
    } catch (CommandNeedRetryException e) {
      console.printInfo("Retry query with a different approach...");
      tryCount++;
      needRetry = true;
    }
  } while (needRetry);

  return ret;
}
```

For `use test;` this returns `ret = 0`.
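The `do ... while (needRetry)` loop in `processLocalCmd` is a retry-on-exception pattern. The sketch below keeps only that control flow; `RetryLoop`, `NeedRetryException` (a stand-in for Hive's `CommandNeedRetryException`) and `Attempt` (a stand-in for `qp.setTryCount(tryCount); qp.run(cmd)`) are all hypothetical.

```java
// Hypothetical sketch (not a Hive API) of the retry loop in processLocalCmd.
public class RetryLoop {

    /** Stand-in for Hive's CommandNeedRetryException. */
    static class NeedRetryException extends Exception {}

    /** Stand-in for "qp.setTryCount(tryCount); return qp.run(cmd).getResponseCode();". */
    interface Attempt {
        int run(int tryCount) throws NeedRetryException;
    }

    static int runWithRetry(Attempt attempt) {
        int tryCount = 0;
        boolean needRetry;
        int ret = 0;
        do {
            try {
                needRetry = false;
                ret = attempt.run(tryCount); // the attempt sees how many retries came before
            } catch (NeedRetryException e) {
                System.out.println("Retry query with a different approach...");
                tryCount++;
                needRetry = true; // loop again with a higher tryCount
            }
        } while (needRetry);
        return ret;
    }

    public static void main(String[] args) {
        // Asks for a retry twice, then succeeds with response code 0.
        int ret = runWithRetry(tryCount -> {
            if (tryCount < 2) {
                throw new NeedRetryException();
            }
            return 0;
        });
        System.out.println("ret = " + ret);
    }
}
```

Only the exception triggers another pass; in the original, a non-zero response code from `qp.run(cmd)` is returned immediately after `qp.close()`.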

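The call then unwinds back to the prompt loop: `processCmd` returns 0, `processLine` stores it in `lastRet`, and `return lastRet;` runs the `finally` block, which restores the previous SIGINT handler with `Signal.handle(interruptSignal, oldSignal);`. Control is then back at `ret = cli.processLine(line, true);` in `executeDriver` (line 736 of `CliDriver.java`).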
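The Ctrl+C handler that `processLine` installs, and that its `finally` block restores, follows a two-strike rule: the first Ctrl+C kills running MR/Tez jobs and interrupts the statement, the second calls `System.exit(127)`. Because a new handler object is created on every `processLine` call, the count restarts for each line. The sketch models only that decision; `TwoStrikeInterrupt` and `Action` are hypothetical, and it deliberately avoids `sun.misc.Signal`.

```java
// Hypothetical model (not a Hive API) of the decision made by the SIGINT
// handler that CliDriver.processLine installs for each line.
public class TwoStrikeInterrupt {

    enum Action { INTERRUPT_STATEMENT, EXIT_JVM }

    private boolean interruptRequested; // false for a freshly installed handler

    Action onCtrlC() {
        boolean initialRequest = !interruptRequested;
        interruptRequested = true;
        // First press: kill jobs and interrupt; any later press: System.exit(127)
        return initialRequest ? Action.INTERRUPT_STATEMENT : Action.EXIT_JVM;
    }

    public static void main(String[] args) {
        TwoStrikeInterrupt handler = new TwoStrikeInterrupt();
        System.out.println(handler.onCtrlC());
        System.out.println(handler.onCtrlC());
    }
}
```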