Building a Docker Swarm Cluster on Docker CentOS 7 Containers

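I. Common Docker commands

Remove all containers at once

docker rm -f $(docker ps -aq)

Remove all images at once

docker rmi -f $(docker image ls -q)

Remove all images except selected ones

docker rmi -f $(docker image ls | grep -v centos7-bigdata1 | grep -v registry | awk 'NR>1 {print $3}')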
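The selection step can also be done in a single awk pass that reads the `docker image ls` table and prints only the IMAGE ID column. This is a minimal sketch: `select_image_ids` is a hypothetical helper name, and the keep pattern is matched against the REPOSITORY column only.

```shell
# Read `docker image ls` output on stdin and print the IMAGE ID (3rd column)
# of every image whose repository does NOT match the keep pattern.
# select_image_ids is a hypothetical helper, not a docker command.
select_image_ids() {
    # $1: extended regex of repository names to keep
    awk -v keep="$1" 'NR > 1 && $1 !~ keep { print $3 }'
}

# Intended use (not executed here):
#   docker image ls | select_image_ids 'centos7-bigdata1|registry' | xargs -r docker rmi -f
```

The header row is skipped with `NR > 1`, so the column title never reaches `docker rmi`.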

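Remove networks (the predefined bridge, host, and none networks cannot be removed, so errors for them are expected)

docker network rm $(docker network ls -q)

Create the network bigdata

docker network create --driver bridge --subnet 172.19.100.0/24 --gateway 172.19.100.1 bigdata

Start the container mycentos1 in privileged mode, attached to the network bigdata. Its IP is 172.19.100.2 (the first free address in the subnet), and the host directory /mycentos1 is mounted at /moudle in the container.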

docker run --privileged=true -itd -p 33031:22 -v /mycentos1:/moudle --name mycentos1 -h Master --network=bigdata centos7-bigdata:latest /usr/sbin/init

Start the container

docker start mycentos1

Enter the container

docker exec -it mycentos1 /bin/bash

II. Time synchronization across the three CentOS 7 containers

1. Create the network bigdata

docker network create --driver bridge --subnet 172.19.100.0/24 --gateway 172.19.100.1 bigdata

2. Start the three CentOS 7 containers

# Start container mycentos1 (IP 172.19.100.2, hostname Master)

docker run --privileged=true -itd -p 33031:22 -v /mycentos1:/moudle --name mycentos1 -h Master --network=bigdata mycentos1:latest /usr/sbin/init

# Start container mycentos2 (IP 172.19.100.3, hostname Slave1)

docker run --privileged=true -itd -p 33032:22 -v /mycentos2:/moudle --name mycentos2 -h Slave1 --network=bigdata mycentos2:latest /usr/sbin/init

# Start container mycentos3 (IP 172.19.100.4, hostname Slave2)

docker run --privileged=true -itd -p 33033:22 -v /mycentos3:/moudle --name mycentos3 -h Slave2 --network=bigdata mycentos3:latest /usr/sbin/init
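The three commands differ only in the node number, so they can be generated in a loop. The sketch below prints the commands instead of running them. `run_cmd_for` is a hypothetical helper, and the `--ip` option is an addition that is not in the commands above: it pins each address explicitly instead of relying on the order in which the containers start.

```shell
# Print (do not run) the `docker run` command for node number $1 (1, 2 or 3).
# run_cmd_for is a hypothetical helper; --ip is an assumption added here
# so that the addresses 172.19.100.2-4 do not depend on start order.
run_cmd_for() {
    i="$1"
    case "$i" in
        1) host=Master ;;
        *) host="Slave$((i - 1))" ;;
    esac
    printf 'docker run --privileged=true -itd -p %d:22 -v /mycentos%d:/moudle --name mycentos%d -h %s --network=bigdata --ip 172.19.100.%d mycentos%d:latest /usr/sbin/init\n' \
        "$((33030 + i))" "$i" "$i" "$host" "$((i + 1))" "$i"
}

for i in 1 2 3; do
    run_cmd_for "$i"    # pipe the output to `sh` to actually start the containers
done
```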

3. Set the time zone in the CentOS 7 containers

Set the time zone in all three CentOS 7 containers

cd /usr/share/zoneinfo/Asia

# Copy the Shanghai zone file to /etc/localtime

cp -i Shanghai /etc/localtime

cp: overwrite ‘/etc/localtime’? yes

# Check the time

date

Tue Aug 18 15:55:08 CST 2020

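4. Synchronize time in the CentOS 7 containers

Install the ntpdate package in all three CentOS 7 containers and synchronize the time

# Install the ntpdate package

yum install -y ntpdate

# Synchronize the time

ntpdate -u ntp.sjtu.edu.cn

# Add a crontab entry to synchronize every minute

vim /etc/crontab

* * * * * root /usr/sbin/ntpdate -u ntp.sjtu.edu.cn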
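Since the same entry has to be added on three containers, it may be convenient to append it from a script rather than with an editor. This is a minimal sketch; `add_cron_line` is a hypothetical helper that appends the line only if an identical line is not already in the file.

```shell
# Append a line to a crontab-style file unless the exact line is already there.
# add_cron_line is a hypothetical helper name.
add_cron_line() {
    file="$1"; line="$2"
    # -x: match the whole line, -F: treat the pattern as a fixed string
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Intended use on each container (not executed here):
#   add_cron_line /etc/crontab '* * * * * root /usr/sbin/ntpdate -u ntp.sjtu.edu.cn'
```

Running it twice leaves a single entry, so the script is safe to repeat.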

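III. Passwordless SSH login between the three CentOS 7 containers

1. Map hostnames to IP addresses

On all three CentOS 7 containers (Master, Slave1, Slave2), add the following entries to /etc/hosts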

vim /etc/hosts

172.19.100.2 Master

172.19.100.3 Slave1

172.19.100.4 Slave2

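2. Set a new root password on each of the three CentOS 7 containers

passwd

123456!a

3. Set up passwordless login between the three CentOS 7 containers

For the detailed steps, see the blog post "Passwordless login on CentOS 7 servers".

4. Test passwordless login

From the Master node, log in to Slave1 and Slave2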

ssh Slave1

exit

ssh Slave2

exit

ssh Master

ssh Slave2
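The manual logins above can be turned into a quick non-interactive check. This is a minimal sketch: `check_passwordless` and the `SSH_CMD` override are hypothetical names. `BatchMode=yes` makes ssh fail instead of prompting for a password, so a node that still needs a password shows up as FAILED.

```shell
# Report whether each host accepts key-based login without prompting.
# check_passwordless and SSH_CMD are hypothetical names used for this sketch.
check_passwordless() {
    for h in "$@"; do
        if "${SSH_CMD:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$h" true; then
            echo "$h: OK"
        else
            echo "$h: FAILED"
        fi
    done
}

# Intended use on any node (not executed here):
#   check_passwordless Master Slave1 Slave2
```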

5. Test passwordless file transfer

vim scp_test.txt

scp scp_test.txt Slave1:/moudle/

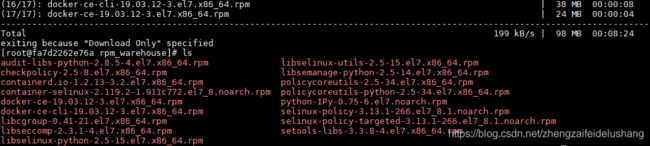

IV. Install Docker in the CentOS 7 containers

Download the dependency packages online, then install them

yum install -y --downloadonly --downloaddir=/tmp/rpm_warehouse docker-ce

rpm -ivh /tmp/rpm_warehouse/*.rpm

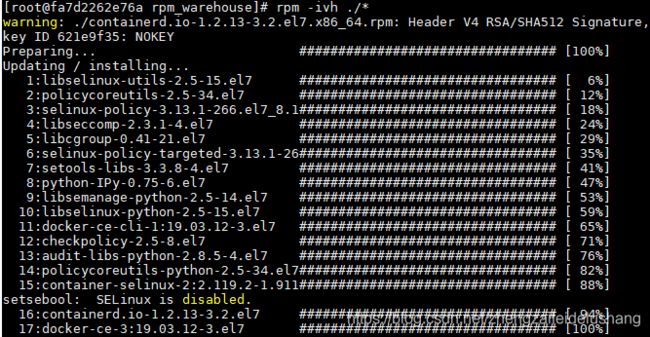

docker version

systemctl enable docker

systemctl start docker

systemctl status docker

Check the Docker version again: both the client and the server are now reported

docker version

V. Fixing /etc/hosts settings being lost when a CentOS 7 container restarts

The hostname-to-IP mappings set in /etc/hosts of the CentOS 7 containers are as follows:

vim /etc/hosts

172.19.100.2 Master

172.19.100.3 Slave1

172.19.100.4 Slave2

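Restarting a CentOS 7 container resets /etc/hosts, because Docker regenerates the file each time the container starts. The workaround is a script that runs automatically and writes the hostname-to-IP mappings back into /etc/hosts.

1. Back up /etc/hosts as /etc/hosts-temp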

cp /etc/hosts /etc/hosts-temp

cat /etc/hosts-temp

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.19.100.2 Master

172.19.100.3 Slave1

172.19.100.4 Slave2

2. The startup script below restores /etc/hosts from the backup /etc/hosts-temp

vim /tmp/run.sh

#!/bin/bash

cat /etc/hosts-temp > /etc/hosts

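3. Run the script automatically after a restart

# Make the script executable

chmod +x /tmp/run.sh

Add the following lines to /root/.bash_profile. They run the next time root opens a login shell (for example over SSH), not at boot itself.

#vim /root/.bashrc

vim /root/.bash_profile

/tmp/run.sh

source /etc/profile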
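As an alternative to overwriting /etc/hosts from the backup, the script could append only the entries that are missing, which keeps whatever lines Docker wrote for the container itself. This is a minimal sketch, not the method used above; `ensure_host_entry` is a hypothetical helper name.

```shell
# Add "ip name" to a hosts file unless a line for that name already exists.
# ensure_host_entry is a hypothetical helper name.
ensure_host_entry() {
    file="$1"; ip="$2"; name="$3"
    # The name must appear as a whole word after whitespace
    grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null \
        || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Intended use inside /tmp/run.sh (not executed here):
#   ensure_host_entry /etc/hosts 172.19.100.2 Master
#   ensure_host_entry /etc/hosts 172.19.100.3 Slave1
#   ensure_host_entry /etc/hosts 172.19.100.4 Slave2
```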

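VI. Fixing Docker inside a CentOS 7 container failing after the container restarts

1. After the CentOS 7 container restarts, the Docker service deployed inside it fails to start with the following error.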

docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: failed (Result: start-limit) since Wed 2020-08-19 10:48:54 CST; 8min ago

Docs: https://docs.docker.com

Process: 372 ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock (code=exited, status=1/FAILURE)

Main PID: 372 (code=exited, status=1/FAILURE)

Aug 19 10:48:52 Master systemd[1]: Failed to start Docker Application Container Engine.

Aug 19 10:48:52 Master systemd[1]: Unit docker.service entered failed state.

Aug 19 10:48:52 Master systemd[1]: docker.service failed.

Aug 19 10:48:54 Master systemd[1]: docker.service holdoff time over, scheduling restart.

Aug 19 10:48:54 Master systemd[1]: Stopped Docker Application Container Engine.

Aug 19 10:48:54 Master systemd[1]: start request repeated too quickly for docker.service

Aug 19 10:48:54 Master systemd[1]: Failed to start Docker Application Container Engine.

Aug 19 10:48:54 Master systemd[1]: Unit docker.service entered failed state.

Aug 19 10:48:54 Master systemd[1]: docker.service failed.

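The fix is as follows:

Docker stores images and containers under /var/lib/docker/ by default. Deleting /var/lib/docker/ and restarting the service resolved the startup failure. Note that this also deletes every image and container stored by the Docker instance inside the container.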

rm -rf /var/lib/docker/

vim /etc/docker/daemon.json

{

"graph": "/mnt/docker-data",

"storage-driver": "overlay"

}

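Restart the service again; Docker now runs normally. (On newer Docker releases the graph key has been replaced by data-root.)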

systemctl restart docker

systemctl status docker

For fixes to other startup errors, see the blog post "Docker fails to start after a CentOS 7 server restart".

2. Run a script automatically after a container restart to work around the Docker failure

The script is as follows

vim /tmp/run.sh

#!/bin/bash

cat /etc/hosts-temp > /etc/hosts

rm -rf /var/lib/docker/

Have /tmp/run.sh run automatically after the CentOS 7 container restarts

#vim /root/.bashrc

vim /root/.bash_profile

/tmp/run.sh

source /etc/profile

yum install -y openssh-clients openssh-server

The environment for building a Docker cluster on Docker CentOS 7 containers is now ready. The next step is to build the cluster.

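VII. Create the Docker Swarm cluster

1. Create the cluster

Run the following command on the manager node to create a new Swarm cluster. On success, it prints instructions for adding more nodes to the cluster.

The returned token is the credential that every node needs in order to join the cluster.

The default management port is 2377, which must be reachable from the worker nodes. To support cluster member discovery and external service mapping, all nodes also need port 7946 (TCP and UDP) and port 4789 (UDP) open.

Initialize Docker Swarm on the Master node, which becomes the manager node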

[root@Master ~]# docker swarm init

Swarm initialized: current node (uk8nc226p14t8dsuz0f68dwf0) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-4k7v30u07984bz4mjw684lpr7c97f60l7xk4yt1p26smzs1x5b-byjs0v09b4yt8ol6xhz188z5x 172.19.100.2:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

2. Join the cluster

Run the swarm join command on every ordinary node that should join; it adds that machine to the specified cluster.

Slave1 joins as a worker node

[root@Slave1 ~]# docker swarm join --token SWMTKN-1-4k7v30u07984bz4mjw684lpr7c97f60l7xk4yt1p26smzs1x5b-byjs0v09b4yt8ol6xhz188z5x 172.19.100.2:2377

This node joined a swarm as a worker.

Slave2 joins as a worker node

[root@Slave2 ~]# docker swarm join --token SWMTKN-1-4k7v30u07984bz4mjw684lpr7c97f60l7xk4yt1p26smzs1x5b-byjs0v09b4yt8ol6xhz188z5x 172.19.100.2:2377

This node joined a swarm as a worker.
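Rather than copying the token out of the `docker swarm init` output by hand, the join command can be rebuilt on the manager at any time. This is a minimal sketch: `join_cmd` is a hypothetical helper, while `docker swarm join-token -q worker` is the standard way to print only the worker token.

```shell
# Build the worker join command from a token and the manager address.
# join_cmd is a hypothetical helper name.
join_cmd() {
    token="$1"; manager="$2"
    printf 'docker swarm join --token %s %s\n' "$token" "$manager"
}

# On the manager (not executed here):
#   join_cmd "$(docker swarm join-token -q worker)" 172.19.100.2:2377
# The printed line can then be run on Slave1 and Slave2, for example over ssh.
```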

3. View cluster information

The docker info command shows the cluster information, and docker node ls lists the nodes that have joined the cluster

docker node ls

ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION

uk8nc226p14t8dsuz0f68dwf0 *   Master     Ready    Active         Leader           19.03.12

jfngupaws23987plwvpjf4eep     Slave1     Ready    Active                          19.03.12

cp1qcwiytqmdkymqay9xowp5r     Slave2     Ready    Active                          19.03.12

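4. Promote a worker node to a manager node for manager high availability

To make the swarm cluster highly available and avoid a single point of failure, worker nodes can be promoted to manager nodes so that the cluster has several managers.

docker node promote <node name>

On the Master node, promote Slave1 from worker to manager.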

[root@Master ~]# docker node promote Slave1

Node Slave1 promoted to a manager in the swarm.

Viewing the cluster information again shows that Slave1 has become a manager node

docker node ls

ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION

uk8nc226p14t8dsuz0f68dwf0 *   Master     Ready    Active         Leader           19.03.12

jfngupaws23987plwvpjf4eep     Slave1     Ready    Active         Reachable        19.03.12

cp1qcwiytqmdkymqay9xowp5r     Slave2     Ready    Active                          19.03.12
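How much availability extra managers buy depends on their count, because Swarm managers agree on cluster state through Raft and need a majority to stay available. The arithmetic can be sketched as follows; `tolerated_failures` is a hypothetical helper name.

```shell
# For N managers, Raft needs a majority of N/2 + 1 (integer division),
# so the cluster tolerates (N - 1) / 2 failed managers.
# tolerated_failures is a hypothetical helper name.
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 5; do
    echo "managers=$n quorum=$(( n / 2 + 1 )) tolerated_failures=$(tolerated_failures "$n")"
done
```

With the two managers shown above (Master and Slave1), the tolerated number is still 0. Promoting Slave2 as well would give three managers and tolerate the loss of one.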

The Docker cluster is now set up successfully

八、设置Master、Slave1、Slave2三个节点指向私有镜像仓库

Docker私有镜像仓库的设置可以参考下面这篇博客:

Docker修改国内镜像源,同时搭建本地私有镜像仓库,配置其他docker服务器从私有镜像仓库拉取镜像,实现删除私有镜像仓库镜像

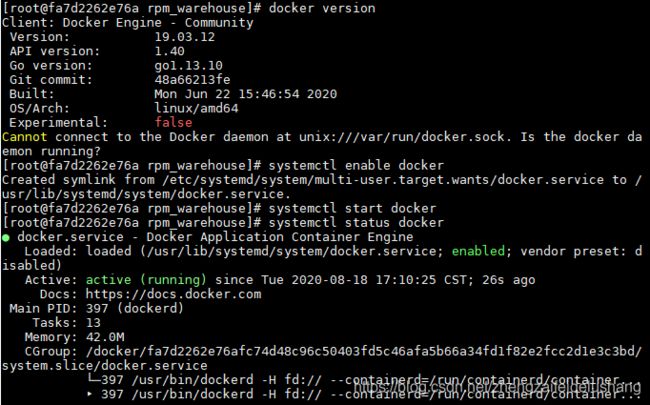

1.修改docker服务器配置文件,指向docker私有镜像仓库

vim /usr/lib/systemd/system/docker.service

[Service]

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --insecure-registry 10.176.233.24:5000

2. Restart Docker

systemctl daemon-reload

systemctl restart docker
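Another way to express the same setting, instead of editing the unit file, is the `insecure-registries` key in /etc/docker/daemon.json. This is a minimal sketch and not the approach used above. `write_insecure_registry_config` is a hypothetical helper that overwrites its target file, so an existing daemon.json with other keys would have to be merged by hand. The option should be set in only one place, since dockerd refuses to start when the same option appears both as a flag and in the configuration file.

```shell
# Write a daemon.json that marks one registry as insecure (plain HTTP).
# write_insecure_registry_config is a hypothetical helper; it OVERWRITES the target.
write_insecure_registry_config() {
    target="$1"; registry="$2"
    cat > "$target" <<EOF
{
  "insecure-registries": ["$registry"]
}
EOF
}

# Intended use (not executed here), followed by `systemctl restart docker`:
#   write_insecure_registry_config /etc/docker/daemon.json 10.176.233.24:5000
```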

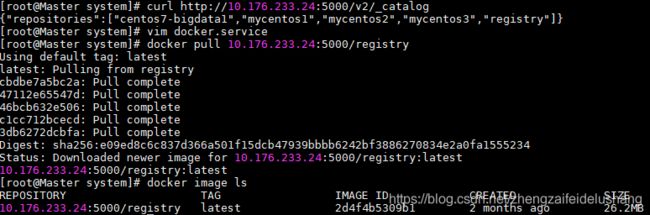

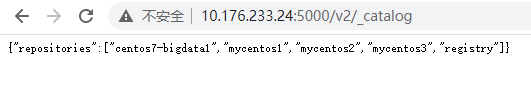

3. List the images in the registry on server 10.176.233.24

curl http://10.176.233.24:5000/v2/_catalog

{"repositories":["centos7-bigdata1","mycentos1","mycentos2","mycentos3","registry"]}

4. Pull the registry image from the private registry and list the images

docker pull 10.176.233.24:5000/registry

docker image ls

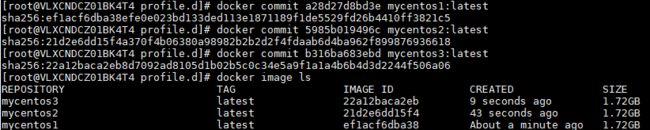
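The catalog response from step 3 is a single JSON object, which is awkward to scan once the registry holds many images. The sketch below turns it into one repository name per line using only tr and sed. `list_repos` is a hypothetical helper, and it assumes the simple response shape shown in step 3 rather than parsing JSON in general.

```shell
# Read a /v2/_catalog response on stdin and print one repository per line.
# list_repos is a hypothetical helper; it assumes the flat
# {"repositories":[...]} shape and is not a general JSON parser.
list_repos() {
    tr -d ' \n' \
        | sed -e 's/^{"repositories":\[//' -e 's/\]}$//' \
        | tr -d '"' \
        | tr ',' '\n'
}

# Intended use (not executed here):
#   curl -s http://10.176.233.24:5000/v2/_catalog | list_repos
```

The tags of a single repository can be inspected in a similar way through the registry's `/v2/<name>/tags/list` endpoint.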

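IX. On the host, save the three CentOS 7 containers as images and push them to the private image registry

1. Save the three CentOS 7 containers as images

# Save as images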

docker commit a28d27d8bd3e mycentos1:latest

docker commit 5985b019496c mycentos2:latest

docker commit b316ba683ebd mycentos3:latest

# List the images

docker image ls

2. Tag the images for the private image registry

docker tag mycentos1 127.0.0.1:5000/mycentos1:latest

docker tag mycentos2 127.0.0.1:5000/mycentos2:latest

docker tag mycentos3 127.0.0.1:5000/mycentos3:latest

3. Push the three CentOS 7 images to the private image registry at 10.176.233.24

docker push 127.0.0.1:5000/mycentos1:latest

docker push 127.0.0.1:5000/mycentos2:latest

docker push 127.0.0.1:5000/mycentos3:latest
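The tag and push commands follow one pattern per image, so they can be generated in a loop. This is a minimal sketch that only prints the commands; `registry_cmds` is a hypothetical helper name.

```shell
# Print the tag and push commands for one image.
# registry_cmds is a hypothetical helper name.
registry_cmds() {
    registry="$1"; name="$2"
    echo "docker tag $name $registry/$name:latest"
    echo "docker push $registry/$name:latest"
}

for name in mycentos1 mycentos2 mycentos3; do
    registry_cmds 127.0.0.1:5000 "$name"    # pipe the output to `sh` to execute
done
```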

4. Check the private image registry at 10.176.233.24

The private image registry now contains the three images mycentos1, mycentos2, and mycentos3