flink table api 自定义数据格式解析

flink table api目前支持的数据格式(https://ci.apache.org/projects/flink/flink-docs-release-1.7/dev/table/tableApi.html )除基础数据格式外还支持pojo,但毕竟支持有限,我们希望通过固定的解析,就能直接从kafka直接消费数据,并封装成register table,以供sql查询。本文主要说明如何实现flink对binlog的支持。

1、flink的数据解析入口

以下是flink table api接入消费kafka json格式数据的代码片段,可以看到withFormat函数传入的是一个new Json()对象,我们查看一下下flink-Json的源码

StreamExecutionEnvironment env = StreamExecutionEnvironment.createLocalEnvironment();

StreamTableEnvironment tableEnv = TableEnvironment.getTableEnvironment(env);

tableEnv.connect(

new Kafka()

.version("0.10")

.topic("binlog.movieorderera01_movie_u_order_sharding")

.startFromEarliest()

.property("zookeeper.connect", "localhost:2181")

.property("bootstrap.servers", "localhost:9092"))

.withFormat(new Json().deriveSchema())

.withSchema(

new Schema()

.field("COD_USERNO","string")

.field("COD_USER_ID","string"))

.inAppendMode()

.registerTableSource("sm_user");

tableEnv.sqlQuery("select * from sm_user").printSchema();

源码目录如下,可以看出,flink-json的目录下有两个包,首先看descriptors,下面有两个类,其中Json这个类就是上面代码中调用的,所以我们需要些两个类BinLogParse和BinlogValidator,不需要做很多操作,复制改名并修改里面的成员变量名称就可以。代码如下

BinLogParse.java

public class BinLogParse extends FormatDescriptor {

private Boolean failOnMissingField;

private Boolean deriveSchema;

private String binlogSchema;

private String schema;

/**

* Format descriptor for Binlog.

*/

public BinLogParse() {

super(FORMAT_TYPE_VALUE, 1);

}

/**

* Sets flag whether to fail if a field is missing or not.

*

* @param failOnMissingField If set to true, the operation fails if there is a missing field.

* If set to false, a missing field is set to null.

*/

public BinLogParse failOnMissingField(boolean failOnMissingField) {

this.failOnMissingField = failOnMissingField;

return this;

}

/**

* Sets the JSON schema string with field names and the types according to the JSON schema

* specification [[http://json-schema.org/specification.html]].

*

* The schema might be nested.

*

* @param binlogSchema JSON schema

*/

public BinLogParse binlogSchema(String binlogSchema) {

Preconditions.checkNotNull(binlogSchema);

this.binlogSchema = binlogSchema;

this.schema = null;

this.deriveSchema = null;

return this;

}

/**

* Sets the schema using type information.

*

*

JSON objects are represented as ROW types.

*

*

The schema might be nested.

*

* @param schemaType type information that describes the schema

*/

public BinLogParse schema(TypeInformation schemaType) {

Preconditions.checkNotNull(schemaType);

this.schema = TypeStringUtils.writeTypeInfo(schemaType);

this.binlogSchema = null;

this.deriveSchema = null;

return this;

}

/**

* Derives the format schema from the table's schema described using {@link Schema}.

*

* This allows for defining schema information only once.

*

*

The names, types, and field order of the format are determined by the table's

* schema. Time attributes are ignored if their origin is not a field. A "from" definition

* is interpreted as a field renaming in the format.

*/

public BinLogParse deriveSchema() {

this.deriveSchema = true;

this.schema = null;

this.binlogSchema = null;

return this;

}

/**

* Internal method for format properties conversion.

*/

@Override

public void addFormatProperties(DescriptorProperties properties) {

if (deriveSchema != null) {

properties.putBoolean(FORMAT_DERIVE_SCHEMA(), deriveSchema);

}

if (binlogSchema != null) {

properties.putString(FORMAT_BINLOG_SCHEMA, binlogSchema);

}

if (schema != null) {

properties.putString(FORMAT_SCHEMA, schema);

}

if (failOnMissingField != null) {

properties.putBoolean(FORMAT_FAIL_ON_MISSING_FIELD, failOnMissingField);

}

}

}

BinlogValidator.java

public class BinlogValidator extends FormatDescriptorValidator {

public static final String FORMAT_TYPE_VALUE = "binlog";

public static final String FORMAT_SCHEMA = "format.schema";

public static final String FORMAT_BINLOG_SCHEMA = "format.biinlog-schema";

public static final String FORMAT_FAIL_ON_MISSING_FIELD = "format.fail-on-missing-field";

@Override

public void validate(DescriptorProperties properties) {

super.validate(properties);

properties.validateBoolean(FORMAT_DERIVE_SCHEMA(), true);

final boolean deriveSchema = properties.getOptionalBoolean(FORMAT_DERIVE_SCHEMA()).orElse(false);

final boolean hasSchema = properties.containsKey(FORMAT_SCHEMA);

final boolean hasSchemaString = properties.containsKey(FORMAT_BINLOG_SCHEMA);

if (deriveSchema && (hasSchema || hasSchemaString)) {

throw new ValidationException(

"Format cannot define a schema and derive from the table's schema at the same time.");

} else if (!deriveSchema && hasSchema && hasSchemaString) {

throw new ValidationException("A definition of both a schema and JSON schema is not allowed.");

} else if (!deriveSchema && !hasSchema && !hasSchemaString) {

throw new ValidationException("A definition of a schema or JSON schema is required.");

} else if (hasSchema) {

properties.validateType(FORMAT_SCHEMA, true, false);

} else if (hasSchemaString) {

properties.validateString(FORMAT_BINLOG_SCHEMA, false, 1);

}

properties.validateBoolean(FORMAT_FAIL_ON_MISSING_FIELD, true);

}

}

2、flink数据解析(解析部分)

入口部分有了,那我们来看看解析部分,先看formats.json这个包下的类,我们可以看到JsonRowDeserializationSchema这个文件,这是flink反序列化kafka json数据的类,同样,我们复制并改写这个类,改写deserialize 方法,代码如下:

BinlogRowDeserializationSchema.java

....

....

@Override

public Row deserialize(byte[] message) throws IOException {

try {

CanalEntry.Entry entry = BinlogEntryUtil.deserializeFromProtoBuf(message);

BinlogEntry binlogEntry = BinlogEntryUtil.serializeToBean(entry);

String tablename = binlogEntry.getTableName();

//for (BinlogRow binlogRow : binlogEntry.getRowDatas()) {

List dataRows = binlogEntry.getRowDatas();

//if (dataRows.size()>0){

BinlogRecordBean binlogRecordBean = new BinlogRecordBean(binlogEntry.getExecuteTime(), tablename, binlogEntry.getEventType(), dataRows.get(0));

JsonNode root=objectMapper.readTree(binlogRecordBean.toString());

}

return convertRow(root, (RowTypeInfo) typeInfo);

}catch (Throwable t) {

throw new IOException("Failed to deserialize JSON object.", t);

}

}

这里是将binlog解析为json,这样就能减少重写代码。

然后在重写BinlogRowFormatFactory的createDeserializationSchema,和

@Override

public DeserializationSchema createDeserializationSchema(Map properties) {

final DescriptorProperties descriptorProperties = validateAndGetProperties(properties);

// create and configure

final BinlogRowDeserializationSchema schema = new BinlogRowDeserializationSchema(createTypeInformation(descriptorProperties));

descriptorProperties.getOptionalBoolean(BinlogValidator.FORMAT_FAIL_ON_MISSING_FIELD)

.ifPresent(schema::setFailOnMissingField);

return schema;

}

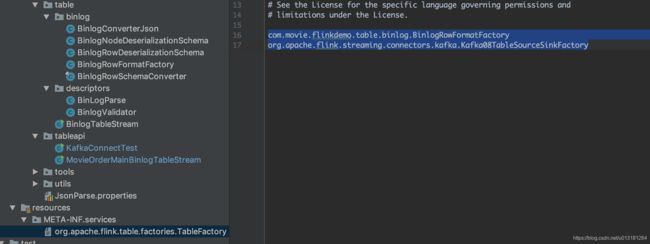

3、flink 如何运行自定义数据格式

数据解析完了,该如何让flink识别并调用呢,通过查看源码发现,flink调用接口的方式是用的jdk自带的SPI(这里不做多的介绍)方式,只需要在resources/META-INF目录下建立service目录,并在目录下建立和flink table api的 Factory类路径相同的文件(org.apache.flink.table.factories.TableFactory),并在文件里添加上flink-kafka的table source和BinlogRowFormatFactory

com.movie.flinkdemo.table.binlog.BinlogRowFormatFactory

org.apache.flink.streaming.connectors.kafka.Kafka08TableSourceSinkFactory

然后在接入数据时就可以在withFormat调用自定义的数据解析器了,如下

tableEnv.connect(

kafka)

.withFormat(new BinLogParse().deriveSchema())

.withSchema(tableSchema)

.inAppendMode()

.registerTableSource("sm_user");