CDH 5.16.1 Cluster Environment Setup


Cluster nodes

192.168.10.1 hadoop01 235G+4T

192.168.10.2 hadoop02 235G+4T

192.168.10.3 hadoop03 235G+4T

192.168.10.4 hadoop04 235G+4T

Cluster node initialization

1. Configure the hosts file on all nodes

192.168.10.1 hadoop01.office.gdapi.net hadoop01

192.168.10.2 hadoop02.office.gdapi.net hadoop02

192.168.10.3 hadoop03.office.gdapi.net hadoop03

192.168.10.4 hadoop04.office.gdapi.net hadoop04
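These entries must be identical on all four nodes, and appending them twice with `cat >> /etc/hosts` leaves duplicate lines. The following is a minimal sketch that assumes bash on each node. The function name `add_hosts_entries` is hypothetical. The target file is a parameter, so you can try it on a scratch file before touching /etc/hosts:

```bash
#!/usr/bin/env bash
# Hypothetical helper: idempotently append the cluster host entries to a hosts file.
# Usage: add_hosts_entries [hosts_file]   (defaults to /etc/hosts; needs root for that path)
add_hosts_entries() {
  local hosts_file=${1:-/etc/hosts}
  local entry
  # One entry per node, copied from the list above.
  for entry in \
    "192.168.10.1 hadoop01.office.gdapi.net hadoop01" \
    "192.168.10.2 hadoop02.office.gdapi.net hadoop02" \
    "192.168.10.3 hadoop03.office.gdapi.net hadoop03" \
    "192.168.10.4 hadoop04.office.gdapi.net hadoop04"; do
    # -x matches whole lines and -F treats the dots literally, so re-runs add nothing.
    if ! grep -qxF -- "$entry" "$hosts_file" 2>/dev/null; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

Run `add_hosts_entries` as root on each node, then confirm name resolution with `getent hosts hadoop02`.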

2. Stop the firewall on all nodes and flush its rules

systemctl stop firewalld

systemctl disable firewalld

iptables -F

3. Disable SELinux on all nodes

vi /etc/selinux/config

Change SELINUX=enforcing to SELINUX=disabled

The change takes effect only after a reboot.
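You can make the same edit non-interactively with sed, which is handier when you repeat it on every node. This sketch assumes the file contains the stock `SELINUX=` line. `disable_selinux_config` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file without opening vi.
# Usage: disable_selinux_config [config_file]   (defaults to /etc/selinux/config)
disable_selinux_config() {
  local config=${1:-/etc/selinux/config}
  # Only the SELINUX= line is rewritten; SELINUXTYPE= does not match "^SELINUX=".
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config"
  # Print the resulting line for a quick visual check.
  grep '^SELINUX=' "$config"
}
```

Point the helper at /etc/selinux/config itself. On CentOS 7, /etc/sysconfig/selinux is a symlink to that file, and `sed -i` would replace the symlink with a regular file. Until you reboot, `setenforce 0` switches the running system to permissive mode.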

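4. Configure the yum repositories on all nodes

- First back up the existing /etc/yum.repos.d/CentOS-Base.repo, then replace it with the following configuration: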

# CentOS-Base.repo

#

# The mirror system uses the connecting IP address of the client and the

# update status of each mirror to pick mirrors that are updated to and

# geographically close to the client. You should use this for CentOS updates

# unless you are manually picking other mirrors.

#

# If the mirrorlist= does not work for you, as a fall back you can try the

# remarked out baseurl= line instead.

#

#

[base]

name=CentOS-$releasever - Base - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates

[updates]

name=CentOS-$releasever - Updates - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful

[extras]

name=CentOS-$releasever - Extras - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages

[centosplus]

name=CentOS-$releasever - Plus - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users

[contrib]

name=CentOS-$releasever - Contrib - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
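You can script the backup-and-replace step so that the original file is never overwritten without a copy. This is a minimal sketch. It assumes you have first saved the configuration above to a local file; the path in the usage note is only an example. `install_base_repo` is an illustrative name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: back up the current CentOS-Base.repo and install a replacement.
# Usage: install_base_repo new_repo_file [repo_dir]   (repo_dir defaults to /etc/yum.repos.d)
install_base_repo() {
  local new_repo=$1 repo_dir=${2:-/etc/yum.repos.d}
  local target="$repo_dir/CentOS-Base.repo"
  [ -f "$new_repo" ] || { echo "missing $new_repo" >&2; return 1; }
  # Keep a timestamped copy of the original so it can be restored later.
  if [ -f "$target" ]; then
    cp -p "$target" "$target.bak.$(date +%Y%m%d%H%M%S)" || return 1
  fi
  cp "$new_repo" "$target"
}
```

As root, run `install_base_repo /tmp/CentOS-Base.repo` (the /tmp path is only an example). Then run `yum clean all` followed by `yum makecache` to rebuild the metadata cache from the new mirrors.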

5. Install ifconfig

yum install -y net-tools.x86_64

Test: ifconfig

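6. Set the same time zone on all nodes and synchronize their clocks

- Set the time zone:

- Show the current time zone:

timedatectl

- List the available time zones:

timedatectl list-timezones

- Set the Shanghai time zone on all nodes:

timedatectl set-timezone Asia/Shanghai

- Check the current time zone again:

timedatectl

- Clock synchronization: enable ntpd at boot (ntpd is provided by the ntp package):

systemctl enable ntpd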
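The time zone must agree on every node, and a cross-check from one machine saves logging into each. This sketch makes two assumptions. First, you have passwordless SSH from the current node to the hosts configured in step 1. Second, `timedatectl` produces the CentOS 7 output format, which includes a line like `Time zone: Asia/Shanghai (CST, +0800)`. Both function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the zone name from `timedatectl` output on stdin,
# e.g. "       Time zone: Asia/Shanghai (CST, +0800)" -> "Asia/Shanghai".
parse_timezone() {
  awk -F': ' '/Time zone:/ { split($2, parts, " "); print parts[1]; exit }'
}

# Hypothetical helper: compare every host's zone with the expected one.
# Assumes passwordless SSH to each host. Usage: check_cluster_timezones ZONE host...
check_cluster_timezones() {
  local expected=$1 host zone rc=0
  shift
  for host in "$@"; do
    zone=$(ssh "$host" timedatectl | parse_timezone)
    if [ "$zone" = "$expected" ]; then
      echo "$host: $zone"
    else
      echo "$host: ${zone:-unknown} (expected $expected)" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

`check_cluster_timezones Asia/Shanghai hadoop01 hadoop02 hadoop03 hadoop04` prints one line per host and returns non-zero if any node differs.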

7. Deploy the JDK on the cluster nodes

mkdir /opt/apps/

tar -zxvf jdk-8u202-linux-x64.tar.gz -C /opt/apps

# Be sure to correct the owning user and group

chown -R root:root /opt/apps/jdk1.8.0_202

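# Configure the environment variables (single quotes stop $PATH and $JAVA_HOME from being expanded when echo runs)

echo "export JAVA_HOME=/opt/apps/jdk1.8.0_202" >> /etc/profile

echo 'export PATH=$PATH:$JAVA_HOME/bin' >> /etc/profile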

source /etc/profile

java -version
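Inside double quotes, `$PATH` and `$JAVA_HOME` are expanded when echo runs, not at login. That is why the PATH line needs single quotes. Appending the exports a second time also leaves duplicate lines. This is a minimal sketch of an idempotent version. `add_java_env` is a hypothetical helper, and its defaults are the JDK path and profile used in this step:

```bash
#!/usr/bin/env bash
# Hypothetical helper: add JAVA_HOME and PATH exports to a profile file exactly once.
# Usage: add_java_env [java_home] [profile_file]
add_java_env() {
  local java_home=${1:-/opt/apps/jdk1.8.0_202} profile=${2:-/etc/profile}
  # Skip if a JAVA_HOME export is already present, so re-running does not stack entries.
  if grep -q '^export JAVA_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  echo "export JAVA_HOME=$java_home" >> "$profile"
  # Single quotes keep $PATH and $JAVA_HOME literal; they expand when the profile is sourced.
  echo 'export PATH=$PATH:$JAVA_HOME/bin' >> "$profile"
}
```

After `add_java_env` and `source /etc/profile`, `java -version` should report 1.8.0_202.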

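8. Tune host parameters

8.1. It is recommended to set /proc/sys/vm/swappiness to at most 10.

The swappiness value controls how aggressively the operating system swaps memory out:

swappiness=0: use physical memory as far as possible before falling back to swap space;

swappiness=100: use the swap partition aggressively, promptly moving data from memory to swap;

On a mixed-workload server, do not disable swap completely; lower swappiness instead.

Temporary change: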

sysctl vm.swappiness=10

Permanent change:

cat << EOF >> /etc/sysctl.conf

# Adjust swappiness value

vm.swappiness=10

EOF
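Appending with `cat << EOF >> /etc/sysctl.conf` adds another `vm.swappiness` line every time the step is re-run. This is a sketch of an idempotent alternative. It assumes GNU sed and that neither the key nor the value contains `/` or `&`. `set_sysctl_conf` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: set "key=value" in a sysctl config file, replacing an existing
# assignment instead of appending a duplicate on every run.
# Usage: set_sysctl_conf key value [conf_file]   (conf_file defaults to /etc/sysctl.conf)
set_sysctl_conf() {
  local key=$1 value=$2 conf=${3:-/etc/sysctl.conf} key_re
  # Escape the dots so that "vm.swappiness" is matched literally in the regexes below.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -Eq "^[[:space:]]*${key_re}[[:space:]]*=" "$conf" 2>/dev/null; then
    # Rewrite the existing assignment in place (assumes key and value contain no "/" or "&").
    sed -i -E "s/^[[:space:]]*${key_re}[[:space:]]*=.*/${key}=${value}/" "$conf"
  else
    echo "${key}=${value}" >> "$conf"
  fi
}
```

`set_sysctl_conf vm.swappiness 10 && sysctl -p` writes the setting once and reloads /etc/sysctl.conf.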

8.2. Transparent Huge Page compaction is enabled, which can cause significant performance problems; disabling it is recommended.

Temporary change:

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

Permanent change:

cat << EOF >> /etc/rc.d/rc.local

# Disable transparent_hugepage

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

EOF

# On CentOS 7.x, /etc/rc.d/rc.local must be made executable

chmod +x /etc/rc.d/rc.local
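After the reboot, confirm that both settings really read `never`. The kernel marks the active mode in brackets, for example `always madvise [never]`. This is a small sketch that parses those files. The helper names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the active transparent hugepage mode, i.e. the bracketed value,
# e.g. "always madvise [never]" -> "never".
thp_mode() {
  local file=${1:-/sys/kernel/mm/transparent_hugepage/enabled}
  sed -n 's/.*\[\([a-z+]*\)].*/\1/p' "$file"
}

# Hypothetical helper: report both THP settings changed above; non-zero if either is not "never".
check_thp_disabled() {
  local dir=${1:-/sys/kernel/mm/transparent_hugepage} f mode rc=0
  for f in enabled defrag; do
    mode=$(thp_mode "$dir/$f")
    echo "$f: $mode"
    [ "$mode" = never ] || rc=1
  done
  return "$rc"
}
```

`check_thp_disabled` prints `enabled: never` and `defrag: never` and returns 0 when THP is fully off.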

9. Deploy MySQL 5.7 on the master node

- Create the databases and grant privileges to their users:

CREATE DATABASE scm DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE amon DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE rman DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE hue DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE metastore DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE sentry DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE nav DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE navms DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE oozie DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

GRANT ALL ON scm.* TO 'scm'@'%' IDENTIFIED BY '123456Aa.';

GRANT ALL ON amon.* TO 'amon'@'%' IDENTIFIED BY '123456Aa.';

GRANT ALL ON rman.* TO 'rman'@'%' IDENTIFIED BY '123456Aa.';

GRANT ALL ON hue.* TO 'hue'@'%' IDENTIFIED BY '123456Aa.';

GRANT ALL ON metastore.* TO 'hive'@'%' IDENTIFIED BY '123456Aa.';

GRANT ALL ON sentry.* TO 'sentry'@'%' IDENTIFIED BY '123456Aa.';

GRANT ALL ON nav.* TO 'nav'@'%' IDENTIFIED BY '123456Aa.';

GRANT ALL ON navms.* TO 'navms'@'%' IDENTIFIED BY '123456Aa.';

GRANT ALL ON oozie.* TO 'oozie'@'%' IDENTIFIED BY '123456Aa.';

SHOW DATABASES;
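The nine database/user pairs follow one pattern, so you can also generate the statements from a single list, which keeps the password in one place. This sketch assumes the MySQL client is on the PATH and that the password contains no single quote. `gen_cm_sql` is a hypothetical name. The `GRANT ... IDENTIFIED BY` form creates the account implicitly on MySQL 5.7, but MySQL 8.0 no longer accepts it:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print CREATE DATABASE / GRANT statements for every database:user
# pair listed above, followed by SHOW DATABASES.
# Usage: gen_cm_sql 'password' | mysql -uroot -p
gen_cm_sql() {
  local password=$1 pair db user
  # database:user pairs from the statements above (metastore is owned by "hive").
  for pair in scm:scm amon:amon rman:rman hue:hue metastore:hive sentry:sentry \
              nav:nav navms:navms oozie:oozie; do
    db=${pair%%:*}
    user=${pair#*:}
    echo "CREATE DATABASE ${db} DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;"
    echo "GRANT ALL ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${password}';"
  done
  echo "SHOW DATABASES;"
}
```

Run `gen_cm_sql '123456Aa.'` on its own first to review the statements, then pipe the output into `mysql -uroot -p`.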

10. Deploy the MySQL JDBC jar on all nodes

mkdir -p /usr/share/java/

cp mysql-connector-java.jar /usr/share/java/

CDH deployment

1. Offline deployment of the CM server and agents

1.1. Create the directory and extract the tarball on all nodes

mkdir /opt/cloudera-manager

tar -zxvf cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz -C /opt/cloudera-manager/

1.2. On all nodes, edit the agent configuration so that it points at the server node hadoop01

sed -i "s/server_host=localhost/server_host=hadoop01/g" /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-agent/config.ini
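The sed above only changes `server_host=localhost`. If the agent already points somewhere else, it silently does nothing. This sketch rewrites whatever value is present and echoes the result, so you can check it quickly on each node. `set_agent_server_host` is a hypothetical helper, and its defaults are the host and path used in this guide:

```bash
#!/usr/bin/env bash
# Hypothetical helper: set server_host in the CM agent's config.ini, whatever its current value.
# Usage: set_agent_server_host [host] [config_ini]
set_agent_server_host() {
  local host=${1:-hadoop01}
  local ini=${2:-/opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-agent/config.ini}
  sed -i "s/^server_host=.*/server_host=${host}/" "$ini"
  # Print the resulting line so the change can be verified on each node.
  grep '^server_host=' "$ini"
}
```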

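1.3. On the master node, edit the server configuration (the database name, user and password must match the scm database and user created in step 9):

vi /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-server/db.properties

com.cloudera.cmf.db.type=mysql

com.cloudera.cmf.db.host=hadoop01

com.cloudera.cmf.db.name=scm

com.cloudera.cmf.db.user=scm

com.cloudera.cmf.db.password=123456Aa.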

com.cloudera.cmf.db.setupType=EXTERNAL

1.4. Create the user on all nodes

useradd --system --home=/opt/cloudera-manager/cm-5.16.1/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

1.5. Change the owner and group of the directory

chown -R cloudera-scm:cloudera-scm /opt/cloudera-manager

2. Set up the offline parcel repository on hadoop01

2.1. Deploy the offline parcel repository

$ mkdir -p /opt/cloudera/parcel-repo

-rw-r--r--. 1 cloudera-scm cloudera-scm 2127506677 Nov 27 2018 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

-rw-r--r--. 1 cloudera-scm cloudera-scm 41 Dec 9 18:50 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha

-rw-r-----. 1 root root 81326 Dec 9 19:21 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.torrent

-rw-r--r--. 1 cloudera-scm cloudera-scm 841524318 Dec 9 18:50 cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

-rw-r--r--. 1 cloudera-scm cloudera-scm 194042837 Dec 9 18:50 jdk-8u202-linux-x64.tar.gz

-rw-r--r--. 1 cloudera-scm cloudera-scm 68215 Dec 9 18:50 manifest.json

-rw-r--r--. 1 cloudera-scm cloudera-scm 1007502 Dec 9 18:50 mysql-connector-java.jar

$ cp CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel /opt/cloudera/parcel-repo/

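# When copying, rename the checksum file to drop the trailing 1 (.sha1 -> .sha); otherwise CM treats the parcel above as incompletely downloaded during deployment and keeps downloading it

$ cp CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1 /opt/cloudera/parcel-repo/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha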

$ cp manifest.json /opt/cloudera/parcel-repo/

2.2. Change the owner and group of the directory

$ chown -R cloudera-scm:cloudera-scm /opt/cloudera/
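CM keeps re-downloading a parcel when its checksum file is not in the expected form. It therefore helps to check the parcel against its `.sha` file before starting the server. This sketch assumes the `.sha` file holds only the 40-character SHA-1 hex digest, which is consistent with its 41-byte size in the listing above. `verify_parcel` is a hypothetical helper; it also performs the `.sha1` to `.sha` rename if that is still pending:

```bash
#!/usr/bin/env bash
# Hypothetical helper: check a parcel against its .sha file (a bare SHA-1 hex digest),
# renaming PARCEL.sha1 to PARCEL.sha first if needed.
# Usage: verify_parcel /opt/cloudera/parcel-repo/<name>.parcel
verify_parcel() {
  local parcel=$1 expected actual
  if [ ! -f "$parcel.sha" ] && [ -f "$parcel.sha1" ]; then
    mv "$parcel.sha1" "$parcel.sha"
  fi
  [ -f "$parcel" ] && [ -f "$parcel.sha" ] || { echo "missing $parcel or $parcel.sha" >&2; return 1; }
  # Strip the trailing newline (and any other whitespace) from the stored digest.
  expected=$(tr -d '[:space:]' < "$parcel.sha")
  actual=$(sha1sum "$parcel" | awk '{print $1}')
  if [ "$expected" = "$actual" ]; then
    echo "OK: $parcel"
  else
    echo "checksum mismatch: expected $expected, got $actual" >&2
    return 1
  fi
}
```

`verify_parcel /opt/cloudera/parcel-repo/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel` prints `OK: ...` when the file is intact. The same call works for the SPARK2 parcel.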

3. On all nodes, create the software installation directory and set its owner and group

mkdir -p /opt/cloudera/parcels

chown -R cloudera-scm:cloudera-scm /opt/cloudera/

4. Start the server on hadoop01

4.1. Start the server

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-server start

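4.2. In the Alibaba Cloud web console, open port 7180 in the firewall for hadoop01

4.3. Wait about one minute, then open http://hadoop01:7180 (username/password: admin/admin)

4.4. If the page does not open, check the server log and work through the errors it reports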
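Rather than guessing when the minute is up, you can script the wait. This sketch uses bash's built-in `/dev/tcp` redirection, so no extra tools are needed. It assumes the machine running it can reach hadoop01 on port 7180. `wait_for_port` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll until host:port accepts TCP connections, e.g. CM's web UI on 7180.
# Usage: wait_for_port host port [timeout_seconds]
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-120} waited=0
  # The subshell opens (and on exit closes) fd 3; it fails while nothing is listening.
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "gave up on $host:$port after ${timeout}s; check the server log" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$host:$port is accepting connections"
}
```

`wait_for_port hadoop01 7180 120` returns once the web UI's port accepts connections, or fails after roughly two minutes. The timeout counts only the one-second sleeps, so a host that silently drops packets can make each attempt take longer.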

5. Start the agent on all nodes

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-agent start

- To start the agent at boot, see: https://www.jianshu.com/p/2a44214808ac

6. From here on, everything is done in the web UI.

Installing Spark 2.x

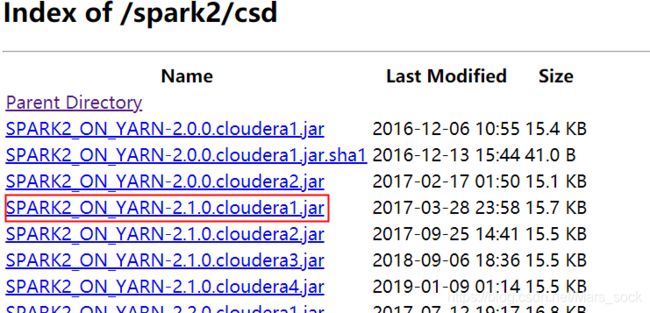

1. Prepare the packages

- Required packages:

2. Installation

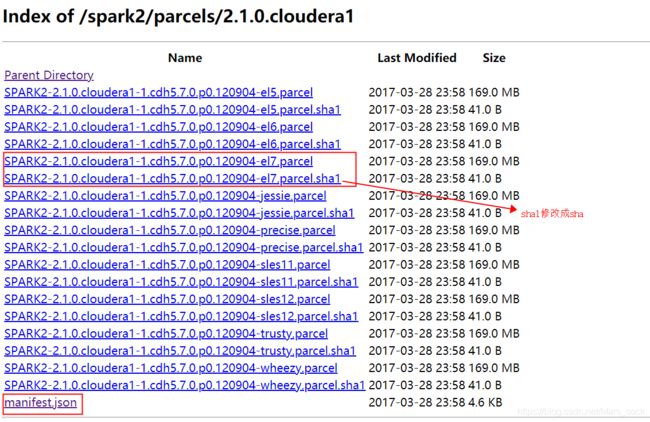

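Note: download the packages that match your OS version. I am on CentOS 7, so I downloaded the el7 packages; on CentOS 6 you need the el6 packages.

- Installation (on the master node):

- Upload the CSD jar to the /opt/cloudera/csd directory: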

mkdir /opt/cloudera/csd

# Upload SPARK2_ON_YARN-2.1.0.cloudera1.jar to /opt/cloudera/csd

chown cloudera-scm:cloudera-scm -R /opt/cloudera/csd

- Upload the parcel files to the /opt/cloudera/parcel-repo directory:

# First back up the existing manifest.json

mv /opt/cloudera/parcel-repo/manifest.json /opt/cloudera/parcel-repo/manifest.json.bak

# The files to upload:

SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el7.parcel

SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el7.parcel.sha1

manifest.json

# Rename .sha1 to .sha (run inside /opt/cloudera/parcel-repo)

mv SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el7.parcel.sha1 SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el7.parcel.sha

- Stop the cluster services, CM and the agents

# Stop the cluster, then CM and the agents (the agent command runs on every node)

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-server stop

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-agent stop

# Start CM and the agents

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-server start

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-agent start

-

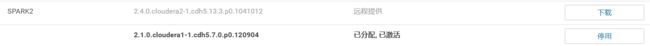

把CM和集群启动起来。然后点击主机->Parcel页面,看是否多了个spark2的选项。如下图,你这里此时应该是分配按钮,点击,等待操作完成后,点击激活按钮

-

激活后,点击你的群集-》添加服务,添加spark2服务;

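3. Adding Spark SQL to the CDH cluster

- CDH's Spark does not support Spark SQL (the spark-sql command) out of the box, so it has to be added manually.

- Trace how the spark2-submit command is launched: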

# which spark2-submit

/usr/bin/spark2-submit

# ll /usr/bin/spark2-submit

lrwxrwxrwx 1 root root 31 Dec 16 15:11 /usr/bin/spark2-submit -> /etc/alternatives/spark2-submit

# ll /etc/alternatives/spark2-submit

lrwxrwxrwx 1 root root 83 Dec 16 15:11 /etc/alternatives/spark2-submit -> /opt/cloudera/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/bin/spark2-submit

- Look at the contents of the spark2-submit file:

# cat /opt/cloudera/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/bin/spark2-submit

#!/bin/bash

# Reference: http://stackoverflow.com/questions/59895/can-a-bash-script-tell-what-directory-its-stored-in

SOURCE="${BASH_SOURCE[0]}"

BIN_DIR="$( dirname "$SOURCE" )"

while [ -h "$SOURCE" ]

do

SOURCE="$(readlink "$SOURCE")"

[[ $SOURCE != /* ]] && SOURCE="$DIR/$SOURCE"

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

done

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

CDH_LIB_DIR=$BIN_DIR/../../CDH/lib

LIB_DIR=$BIN_DIR/../lib

export HADOOP_HOME=$CDH_LIB_DIR/hadoop

# Autodetect JAVA_HOME if not defined

. $CDH_LIB_DIR/bigtop-utils/bigtop-detect-javahome

exec $LIB_DIR/spark2/bin/spark-submit "$@"

- The script above shows that the command finally executed is: /opt/cloudera/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/lib/spark2/bin/spark-submit

# cat /opt/cloudera/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/lib/spark2/bin/spark-submit

#!/usr/bin/env bash

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

if [ -z "${SPARK_HOME}" ]; then

source "$(dirname "$0")"/find-spark-home

fi

# disable randomized hash for string in Python 3.3+

export PYTHONHASHSEED=0

exec "${SPARK_HOME}"/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@"

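The script above sources find-spark-home to determine SPARK_HOME. If SPARK_HOME is already set, it returns immediately. Otherwise it checks whether find_spark_home.py exists in the script's directory. If it does, it runs that file with Python and uses the result. If not, it uses the parent of the script's bin directory as SPARK_HOME.

For Cloudera's parcel, SPARK_HOME therefore ends up as /opt/cloudera/parcels/SPARK2/lib/spark2.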

- Add the Spark SQL command

- Add a spark2-sql link under /usr/bin/ so that the spark2-sql command can be found:

ln -s /etc/alternatives/spark2-sql /usr/bin/spark2-sql

ln -s /opt/cloudera/parcels/SPARK2/bin/spark2-sql /etc/alternatives/spark2-sql

- Create the file /opt/cloudera/parcels/SPARK2/bin/spark2-sql:

#!/bin/bash

# Reference: http://stackoverflow.com/questions/59895/can-a-bash-script-tell-what-directory-its-stored-in

SOURCE="${BASH_SOURCE[0]}"

BIN_DIR="$( dirname "$SOURCE" )"

while [ -h "$SOURCE" ]

do

SOURCE="$(readlink "$SOURCE")"

# Resolve a relative link target against the link's own directory, held in BIN_DIR

[[ $SOURCE != /* ]] && SOURCE="$BIN_DIR/$SOURCE"

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

done

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

CDH_LIB_DIR=$BIN_DIR/../../CDH/lib

LIB_DIR=$BIN_DIR/../lib

export HADOOP_HOME=$CDH_LIB_DIR/hadoop

# Autodetect JAVA_HOME if not defined

. $CDH_LIB_DIR/bigtop-utils/bigtop-detect-javahome

exec $LIB_DIR/spark2/bin/spark-sql "$@"

- Make it executable: chmod 755 /opt/cloudera/parcels/SPARK2/bin/spark2-sql

- Create the file /opt/cloudera/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/lib/spark2/bin/spark-sql:

#!/usr/bin/env bash

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

if [ -z "${SPARK_HOME}" ]; then

source "$(dirname "$0")"/find-spark-home

fi

export _SPARK_CMD_USAGE="Usage: ./bin/spark-sql [options] [cli option]"

exec "${SPARK_HOME}"/bin/spark-submit --class org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver "$@"

- Make it executable: chmod 755 /opt/cloudera/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/lib/spark2/bin/spark-sql

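- Download the matching Spark release from http://archive.apache.org/dist/spark/spark-2.1.0/, or download the source and build it yourself (I built Spark from source).

- Copy the relevant jars from spark-2.1.0/assembly/target/scala-2.11/jars to /opt/cloudera/parcels/SPARK2/lib/spark2/jars/:

cd spark-2.1.0/assembly/target/scala-2.11/jars

# Copy the hive-* jars, skipping hive-metastore-1.2.1.spark2.jar, which must not be copied

for jar in hive-*; do [ "$jar" = hive-metastore-1.2.1.spark2.jar ] || cp "$jar" /opt/cloudera/parcels/SPARK2/lib/spark2/jars/; done

cp spark-hive-thriftserver_2.11-2.1.0.jar /opt/cloudera/parcels/SPARK2/lib/spark2/jars/

- Once the jars are in place, spark-sql is ready to use (to use it on several nodes, deploy spark-sql on each of them).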
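Every node that should run spark-sql needs the same files, so a per-node check is useful. This sketch tests for the two wrapper scripts and the thriftserver jar. It also checks that the hive-metastore jar the copy step says to leave out is absent. `check_spark_sql` is a hypothetical helper, and the parcel path is passed as an argument:

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify the spark-sql pieces described above on this node.
# Usage: check_spark_sql [parcel_dir]   (defaults to /opt/cloudera/parcels/SPARK2)
check_spark_sql() {
  local parcel=${1:-/opt/cloudera/parcels/SPARK2} rc=0 f
  local jars="$parcel/lib/spark2/jars"
  # Both wrapper scripts must exist and be executable (chmod 755 above).
  for f in bin/spark2-sql lib/spark2/bin/spark-sql; do
    if [ -x "$parcel/$f" ]; then
      echo "ok: $f"
    else
      echo "missing or not executable: $f" >&2; rc=1
    fi
  done
  if [ -f "$jars/spark-hive-thriftserver_2.11-2.1.0.jar" ]; then
    echo "ok: spark-hive-thriftserver_2.11-2.1.0.jar"
  else
    echo "missing: spark-hive-thriftserver_2.11-2.1.0.jar" >&2; rc=1
  fi
  # The jar the copy step says not to copy.
  if [ -e "$jars/hive-metastore-1.2.1.spark2.jar" ]; then
    echo "unexpected: hive-metastore-1.2.1.spark2.jar should not be copied" >&2; rc=1
  fi
  return "$rc"
}
```

Once `check_spark_sql` passes on a node, `spark2-sql -e 'show databases;'` makes a quick smoke test.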

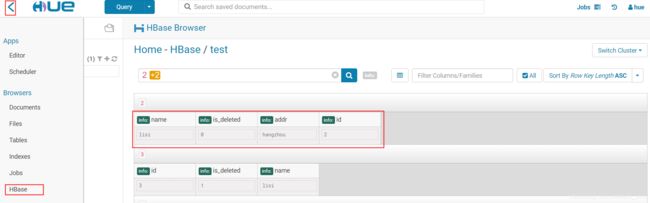

Integrating HUE with HBase

1. Enable the HBase Thrift service

2. Authorize the proxy users

- Add the following to core-site.xml:

<property>

<name>hadoop.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hue.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hbase.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hbase.groups</name>

<value>*</value>

</property>

3. Check the local HBase configuration directory specified for HUE in hue_safety_valve.ini:

[hbase]

hbase_conf_dir=/opt/cloudera/parcels/CDH/lib/hbase/conf

thrift_transport=buffered

4. In HUE's configuration, set the HBase Service and the HBase Thrift Server

5. Deploy the updated configuration and restart the HUE and HBase services

Q&A:

1. HUE's connection test fails with: Unexpected error. Unable to verify database connection

- Check the log:

ImportError: libxslt.so.1: cannot open shared object file: No such file or directory

- Cause: the Linux system is missing shared libraries. Install them:

yum install -y krb5-devel cyrus-sasl-gssapi cyrus-sasl-devel libxml2-devel libxslt-devel mysql mysql-devel openldap-devel python-devel python-simplejson sqlite-devel

2. Changing the JDK used by Cloudera Manager

- Cloudera Manager installed OpenJDK by default; switch it to the Oracle JDK:

# whereis java

java: /usr/bin/java /usr/lib/java /etc/java /usr/share/java /opt/apps/jdk1.8.0_202/bin/java

# ll /usr/bin/java

lrwxrwxrwx 1 root root 22 Dec 16 15:00 /usr/bin/java -> /etc/alternatives/java

# ll /etc/alternatives/java

lrwxrwxrwx 1 root root 31 Dec 16 15:00 /etc/alternatives/java -> /opt/apps/jdk1.8.0_202/bin/java

# So the JDK that /usr/bin/java ultimately points to is /opt/apps/jdk1.8.0_202/bin/java; the output above already reflects my change

- Use the alternatives system to switch to the Oracle JDK:

# alternatives --display java

java - status is auto.

link currently points to /opt/apps/jdk1.8.0_202/bin/java

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/java - family java-1.8.0-openjdk.x86_64 priority 1800232

slave jjs: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/jjs

slave keytool: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/keytool

slave orbd: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/orbd

slave pack200: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/pack200

slave policytool: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/policytool

slave rmid: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/rmid

slave rmiregistry: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/rmiregistry

slave servertool: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/servertool

slave tnameserv: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/tnameserv

slave unpack200: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre/bin/unpack200

slave jre_exports: /usr/lib/jvm-exports/jre-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64

slave jre: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre

slave java.1.gz: /usr/share/man/man1/java-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave jjs.1.gz: /usr/share/man/man1/jjs-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave keytool.1.gz: /usr/share/man/man1/keytool-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave orbd.1.gz: /usr/share/man/man1/orbd-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave pack200.1.gz: /usr/share/man/man1/pack200-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave policytool.1.gz: /usr/share/man/man1/policytool-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave rmid.1.gz: /usr/share/man/man1/rmid-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave rmiregistry.1.gz: /usr/share/man/man1/rmiregistry-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave servertool.1.gz: /usr/share/man/man1/servertool-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave tnameserv.1.gz: /usr/share/man/man1/tnameserv-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

slave unpack200.1.gz: /usr/share/man/man1/unpack200-java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64.1.gz

/opt/apps/jdk1.8.0_202/bin/java - priority 1800233

slave jjs: (null)

slave keytool: (null)

slave orbd: (null)

slave pack200: (null)

slave policytool: (null)

slave rmid: (null)

slave rmiregistry: (null)

slave servertool: (null)

slave tnameserv: (null)

slave unpack200: (null)

slave jre_exports: (null)

slave jre: (null)

slave java.1.gz: (null)

slave jjs.1.gz: (null)

slave keytool.1.gz: (null)

slave orbd.1.gz: (null)

slave pack200.1.gz: (null)

slave policytool.1.gz: (null)

slave rmid.1.gz: (null)

slave rmiregistry.1.gz: (null)

slave servertool.1.gz: (null)

slave tnameserv.1.gz: (null)

slave unpack200.1.gz: (null)

Current `best' version is /opt/apps/jdk1.8.0_202/bin/java.

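- Switch to the JDK you want to use:

# The alternatives --display java output above shows that OpenJDK has priority 1800232

# Register the Oracle JDK with a higher priority using the following command

alternatives --install /usr/bin/java java /opt/apps/jdk1.8.0_202/bin/java 1800233

# --install: register an alternative

# /usr/bin/java: the generic link for the command (must be an absolute path)

# java: the name of the alternative

# /opt/apps/jdk1.8.0_202/bin/java: the actual location the command points to

# 1800233: the priority; in auto mode, if it is lower than the existing version's priority, the existing version is still used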
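Picking the priority by hand means reading it off the long `--display` listing. This sketch computes the next free priority from that output instead. `next_alt_priority` is a hypothetical name, and it relies on the relevant lines ending in `priority N`, as in the listing above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `alternatives --display <name>` output on stdin and print a
# priority one higher than the highest one registered.
next_alt_priority() {
  awk '/priority [0-9]+/ { p = $NF + 0; if (p > max) max = p } END { print max + 1 }'
}

# Example (as root):
#   prio=$(alternatives --display java | next_alt_priority)
#   alternatives --install /usr/bin/java java /opt/apps/jdk1.8.0_202/bin/java "$prio"
```

Alternatively, `alternatives --set java /opt/apps/jdk1.8.0_202/bin/java` switches the link to manual mode, so the priority no longer decides which JDK is used.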

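- Change the Java environment of the Cloudera Manager Server:

# 1. Stop all services: not only HDFS, Hive and the other cluster services, but also the Cloudera Manager server and the agents (the agent command runs on every node)

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-server stop

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-agent stop

# 2. On the Cloudera Manager Server host, edit /etc/default/cloudera-scm-server and add: export JAVA_HOME=/opt/apps/jdk1.8.0_202

# 3. Start the Cloudera Manager Server

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-server start

# 4. In the web UI, change the Java home setting under Hosts -> All Hosts -> Configuration -> Advanced

# 5. Start the agents on the other nodes

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-agent start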