Nginx Source Code Analysis: The Connection Module

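Nginx runs in a master-worker multi-process mode. If every worker process listens on the same port at the same time, then whenever a new connection arrives on that port, every worker calls ngx_event_accept and tries to accept it. In other words, all workers are woken up. This is the so-called "thundering herd" problem, and it degrades system performance. Nginx solves it with the ngx_accept_mutex lock: only the worker that holds the lock handles new connection events, so at any moment only one worker is listening on a given port.

This solves the thundering herd, but it introduces another problem. If the same worker keeps winning the lock and handling every new connection, the workers become unbalanced: some handle a huge number of connections while others stay almost idle. This load imbalance hurts the overall performance of Nginx. To fix it, Nginx adds a load threshold, ngx_accept_disabled, on top of the lock. When a worker's ngx_accept_disabled is greater than 0, the worker is considered overloaded, and Nginx prevents it from competing for new connections. This gives workers that are not overloaded the chance to accept them, which balances the load across processes.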
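The gating rule can be sketched as a small simulation. It assumes a simplified model: the accept mutex is an int holding the owner's index (-1 when free), workers try it in index order once per round, and the lock is free again at the start of each round. Only the "decrement and skip while positive" rule mirrors nginx, where, to my understanding, the corresponding check sits in ngx_process_events_and_timers(). The names worker_t, try_accept_round and run_round are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical per-worker state; accept_disabled plays the role of ngx_accept_disabled. */
typedef struct {
    long accept_disabled;
} worker_t;

/*
 * One round for one worker. An overloaded worker (accept_disabled > 0) does
 * not try the lock at all; it only decrements its counter. Otherwise it tries
 * the shared lock, modeled as an int holding the owner's index (-1 = free).
 * Returns true if this worker took the lock and may accept this round.
 */
static bool try_accept_round(worker_t *w, int id, int *lock_owner)
{
    if (w->accept_disabled > 0) {
        w->accept_disabled--;
        return false;
    }

    if (*lock_owner == -1) {      /* stand-in for a successful trylock */
        *lock_owner = id;
        return true;
    }

    return false;                 /* another worker already holds the lock */
}

/* Run one round over n workers in index order; returns the winner or -1. */
static int run_round(worker_t *ws, int n)
{
    int lock_owner = -1;          /* the lock is free at the start of each round */
    int winner = -1;

    assert(n > 0);

    for (int i = 0; i < n; i++) {
        if (try_accept_round(&ws[i], i, &lock_owner)) {
            winner = i;
        }
    }

    return winner;
}
```

With `worker_t ws[2] = {{2}, {0}};`, worker 1 wins the first two rounds while worker 0 counts its accept_disabled down to 0, and worker 0 wins the third round because it is tried first.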

Handling New Connection Events

New connection events are handled by the function ngx_event_accept, which works as follows.

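- First, it calls accept to try to establish a new connection. If no new connection is ready (accept fails with EAGAIN), ngx_event_accept returns immediately.

- It updates the load-balancing threshold ngx_accept_disabled, which is one eighth of the total number of connections the process may hold minus the number of free connections.

- It calls ngx_get_connection to take an ngx_connection_t object from the connection pool.

- It creates a memory pool for the pool pointer in ngx_connection_t; this memory pool is destroyed when the connection is released back to the free connection pool. It also sets socket attributes, for example putting the socket into non-blocking mode.

- It registers the read event of the new connection with an event module such as epoll, so that when a user request arrives on this connection, epoll_wait will collect the event. (With epoll, ngx_event_accept skips this step itself; the read event is registered later by the listening handler.)

- It calls the handler callback of the listening object ngx_listening_t. This handler is exactly the method that is invoked right after a new TCP connection has been established.

- Finally, if the available flag of the listening event is 1, it loops back to the first step; otherwise ngx_event_accept ends. The available flag corresponds to the multi_accept directive: when available is 1, Nginx accepts as many new connections as possible in one go, and this loop is how that is implemented.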
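The control flow of the accept call, the handler call and the multi_accept loop can be sketched without real sockets. This is a simulation under stated assumptions: fake_backlog_t and fake_accept are hypothetical stand-ins for the listening socket and accept(), conn_handler_pt stands in for ls->handler, and all pool, event and error handling is omitted.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a listening socket's accept backlog. */
typedef struct {
    int    pending[16];   /* fds waiting to be accepted */
    size_t head, tail;
} fake_backlog_t;

/* Like a non-blocking accept(): next fd, or -1 when nothing is ready (EAGAIN). */
static int fake_accept(fake_backlog_t *b)
{
    return b->head < b->tail ? b->pending[b->head++] : -1;
}

/* Hypothetical handler type, playing the role of ls->handler. */
typedef void (*conn_handler_pt)(int fd, void *ctx);

/*
 * Same do { ... } while (available) shape as ngx_event_accept():
 * with multi_accept == 0 at most one connection is accepted per call,
 * otherwise the backlog is drained until accept reports "not ready".
 * Returns the number of connections handed to the handler.
 */
static int accept_loop(fake_backlog_t *b, int multi_accept,
                       conn_handler_pt handler, void *ctx)
{
    int available = multi_accept;   /* like ev->available = ecf->multi_accept */
    int accepted = 0;

    assert(b != NULL && handler != NULL);

    do {
        int s = fake_accept(b);

        if (s == -1) {
            return accepted;        /* nothing ready: return, like NGX_EAGAIN */
        }

        /* ngx_get_connection(), pool creation, etc. would happen here */
        handler(s, ctx);
        accepted++;
    } while (available);

    return accepted;
}

/* Example handler that just counts how often it was called. */
static void count_handler(int fd, void *ctx)
{
    (void) fd;
    ++*(int *) ctx;
}
```

With three pending connections, a call with multi_accept set to 0 accepts one of them, and a following call with multi_accept set to 1 accepts the remaining two before stopping.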

```c
/* Handle a new connection event */
void
ngx_event_accept(ngx_event_t *ev)
{
    socklen_t          socklen;
    ngx_err_t          err;
    ngx_log_t         *log;
    ngx_uint_t         level;
    ngx_socket_t       s;
    ngx_event_t       *rev, *wev;
    ngx_listening_t   *ls;
    ngx_connection_t  *c, *lc;
    ngx_event_conf_t  *ecf;
    u_char             sa[NGX_SOCKADDRLEN];
#if (NGX_HAVE_ACCEPT4)
    static ngx_uint_t  use_accept4 = 1;
#endif

    if (ev->timedout) {
        if (ngx_enable_accept_events((ngx_cycle_t *) ngx_cycle) != NGX_OK) {
            return;
        }

        ev->timedout = 0;
    }

    /* get the configuration of the ngx_event_core_module module */
    ecf = ngx_event_get_conf(ngx_cycle->conf_ctx, ngx_event_core_module);

    if (ngx_event_flags & NGX_USE_RTSIG_EVENT) {
        ev->available = 1;

    } else if (!(ngx_event_flags & NGX_USE_KQUEUE_EVENT)) {
        ev->available = ecf->multi_accept;
    }

    lc = ev->data;          /* the connection object that owns this event */
    ls = lc->listening;     /* the listening object of that connection */
    ev->ready = 0;          /* mark the event as not ready */

    ngx_log_debug2(NGX_LOG_DEBUG_EVENT, ev->log, 0,
                   "accept on %V, ready: %d", &ls->addr_text, ev->available);

    do {
        socklen = NGX_SOCKADDRLEN;

        /* accept a new connection */
#if (NGX_HAVE_ACCEPT4)
        if (use_accept4) {
            s = accept4(lc->fd, (struct sockaddr *) sa, &socklen,
                        SOCK_NONBLOCK);
        } else {
            s = accept(lc->fd, (struct sockaddr *) sa, &socklen);
        }
#else
        s = accept(lc->fd, (struct sockaddr *) sa, &socklen);
#endif

        /* error handling when accept fails */
        if (s == (ngx_socket_t) -1) {
            err = ngx_socket_errno;

            if (err == NGX_EAGAIN) {
                ngx_log_debug0(NGX_LOG_DEBUG_EVENT, ev->log, err,
                               "accept() not ready");
                return;
            }

            level = NGX_LOG_ALERT;

            if (err == NGX_ECONNABORTED) {
                level = NGX_LOG_ERR;

            } else if (err == NGX_EMFILE || err == NGX_ENFILE) {
                level = NGX_LOG_CRIT;
            }

#if (NGX_HAVE_ACCEPT4)
            ngx_log_error(level, ev->log, err,
                          use_accept4 ? "accept4() failed" : "accept() failed");

            if (use_accept4 && err == NGX_ENOSYS) {
                use_accept4 = 0;
                ngx_inherited_nonblocking = 0;
                continue;
            }
#else
            ngx_log_error(level, ev->log, err, "accept() failed");
#endif

            if (err == NGX_ECONNABORTED) {
                if (ngx_event_flags & NGX_USE_KQUEUE_EVENT) {
                    ev->available--;
                }

                if (ev->available) {
                    continue;
                }
            }

            if (err == NGX_EMFILE || err == NGX_ENFILE) {
                if (ngx_disable_accept_events((ngx_cycle_t *) ngx_cycle)
                    != NGX_OK)
                {
                    return;
                }

                if (ngx_use_accept_mutex) {
                    if (ngx_accept_mutex_held) {
                        ngx_shmtx_unlock(&ngx_accept_mutex);
                        ngx_accept_mutex_held = 0;
                    }

                    ngx_accept_disabled = 1;

                } else {
                    ngx_add_timer(ev, ecf->accept_mutex_delay);
                }
            }

            return;
        }

#if (NGX_STAT_STUB)
        (void) ngx_atomic_fetch_add(ngx_stat_accepted, 1);
#endif

        /*
         * ngx_accept_disabled is the load-balancing threshold that tells
         * whether this process is overloaded. It is set to one eighth of the
         * per-process maximum number of connections minus the number of free
         * connections, so once the active connections accepted by a process
         * exceed 7/8 of the maximum, ngx_accept_disabled becomes positive and
         * the process is considered overloaded.
         */
        ngx_accept_disabled = ngx_cycle->connection_n / 8
                              - ngx_cycle->free_connection_n;

        /* take a connection object from the connections array for the new connection */
        c = ngx_get_connection(s, ev->log);

        if (c == NULL) {
            if (ngx_close_socket(s) == -1) {
                ngx_log_error(NGX_LOG_ALERT, ev->log, ngx_socket_errno,
                              ngx_close_socket_n " failed");
            }

            return;
        }

#if (NGX_STAT_STUB)
        (void) ngx_atomic_fetch_add(ngx_stat_active, 1);
#endif

        /* create a memory pool for the new connection; it is released only when the connection is closed */
        c->pool = ngx_create_pool(ls->pool_size, ev->log);
        if (c->pool == NULL) {
            ngx_close_accepted_connection(c);
            return;
        }

        c->sockaddr = ngx_palloc(c->pool, socklen);
        if (c->sockaddr == NULL) {
            ngx_close_accepted_connection(c);
            return;
        }

        ngx_memcpy(c->sockaddr, sa, socklen);

        log = ngx_palloc(c->pool, sizeof(ngx_log_t));
        if (log == NULL) {
            ngx_close_accepted_connection(c);
            return;
        }

        /* set a blocking mode for aio and non-blocking mode for others */
        /* set the socket attributes */
        if (ngx_inherited_nonblocking) {
            if (ngx_event_flags & NGX_USE_AIO_EVENT) {
                if (ngx_blocking(s) == -1) {
                    ngx_log_error(NGX_LOG_ALERT, ev->log, ngx_socket_errno,
                                  ngx_blocking_n " failed");
                    ngx_close_accepted_connection(c);
                    return;
                }
            }

        } else {
            /* with epoll and other non-AIO, non-rtsig models, make the socket non-blocking */
            if (!(ngx_event_flags & (NGX_USE_AIO_EVENT|NGX_USE_RTSIG_EVENT))) {
                if (ngx_nonblocking(s) == -1) {
                    ngx_log_error(NGX_LOG_ALERT, ev->log, ngx_socket_errno,
                                  ngx_nonblocking_n " failed");
                    ngx_close_accepted_connection(c);
                    return;
                }
            }
        }

        *log = ls->log;

        /* initialize the new connection */
        c->recv = ngx_recv;
        c->send = ngx_send;
        c->recv_chain = ngx_recv_chain;
        c->send_chain = ngx_send_chain;

        c->log = log;
        c->pool->log = log;

        c->socklen = socklen;
        c->listening = ls;
        c->local_sockaddr = ls->sockaddr;
        c->local_socklen = ls->socklen;

        c->unexpected_eof = 1;

#if (NGX_HAVE_UNIX_DOMAIN)
        if (c->sockaddr->sa_family == AF_UNIX) {
            c->tcp_nopush = NGX_TCP_NOPUSH_DISABLED;
            c->tcp_nodelay = NGX_TCP_NODELAY_DISABLED;
#if (NGX_SOLARIS)
            /* Solaris's sendfilev() supports AF_NCA, AF_INET, and AF_INET6 */
            c->sendfile = 0;
#endif
        }
#endif

        /* get the read and write events of the new connection */
        rev = c->read;
        wev = c->write;

        /* the write event is ready */
        wev->ready = 1;

        if (ngx_event_flags & (NGX_USE_AIO_EVENT|NGX_USE_RTSIG_EVENT)) {
            /* rtsig, aio, iocp */
            rev->ready = 1;
        }

        if (ev->deferred_accept) {
            rev->ready = 1;
#if (NGX_HAVE_KQUEUE)
            rev->available = 1;
#endif
        }

        rev->log = log;
        wev->log = log;

        /*
         * TODO: MT: - ngx_atomic_fetch_add()
         *             or protection by critical section or light mutex
         *
         * TODO: MP: - allocated in a shared memory
         *           - ngx_atomic_fetch_add()
         *             or protection by critical section or light mutex
         */

        c->number = ngx_atomic_fetch_add(ngx_connection_counter, 1);

#if (NGX_STAT_STUB)
        (void) ngx_atomic_fetch_add(ngx_stat_handled, 1);
#endif

#if (NGX_THREADS)
        rev->lock = &c->lock;
        wev->lock = &c->lock;
        rev->own_lock = &c->lock;
        wev->own_lock = &c->lock;
#endif

        if (ls->addr_ntop) {
            c->addr_text.data = ngx_pnalloc(c->pool, ls->addr_text_max_len);
            if (c->addr_text.data == NULL) {
                ngx_close_accepted_connection(c);
                return;
            }

            c->addr_text.len = ngx_sock_ntop(c->sockaddr, c->socklen,
                                             c->addr_text.data,
                                             ls->addr_text_max_len, 0);
            if (c->addr_text.len == 0) {
                ngx_close_accepted_connection(c);
                return;
            }
        }

#if (NGX_DEBUG)
        {

        struct sockaddr_in   *sin;
        ngx_cidr_t           *cidr;
        ngx_uint_t            i;
#if (NGX_HAVE_INET6)
        struct sockaddr_in6  *sin6;
        ngx_uint_t            n;
#endif

        cidr = ecf->debug_connection.elts;
        for (i = 0; i < ecf->debug_connection.nelts; i++) {
            if (cidr[i].family != (ngx_uint_t) c->sockaddr->sa_family) {
                goto next;
            }

            switch (cidr[i].family) {

#if (NGX_HAVE_INET6)
            case AF_INET6:
                sin6 = (struct sockaddr_in6 *) c->sockaddr;
                for (n = 0; n < 16; n++) {
                    if ((sin6->sin6_addr.s6_addr[n]
                        & cidr[i].u.in6.mask.s6_addr[n])
                        != cidr[i].u.in6.addr.s6_addr[n])
                    {
                        goto next;
                    }
                }
                break;
#endif

#if (NGX_HAVE_UNIX_DOMAIN)
            case AF_UNIX:
                break;
#endif

            default: /* AF_INET */
                sin = (struct sockaddr_in *) c->sockaddr;
                if ((sin->sin_addr.s_addr & cidr[i].u.in.mask)
                    != cidr[i].u.in.addr)
                {
                    goto next;
                }
                break;
            }

            log->log_level = NGX_LOG_DEBUG_CONNECTION|NGX_LOG_DEBUG_ALL;
            break;

        next:
            continue;
        }

        }
#endif

        ngx_log_debug3(NGX_LOG_DEBUG_EVENT, log, 0,
                       "*%uA accept: %V fd:%d", c->number, &c->addr_text, s);

        /*
         * Register the read event of the new connection with the event
         * mechanism. Note: with epoll this branch is not executed; epoll
         * registers the read event only later, when ls->handler(c) runs
         * (for HTTP this is ngx_http_init_connection).
         */
        if (ngx_add_conn && (ngx_event_flags & NGX_USE_EPOLL_EVENT) == 0) {
            if (ngx_add_conn(c) == NGX_ERROR) {
                ngx_close_accepted_connection(c);
                return;
            }
        }

        log->data = NULL;
        log->handler = NULL;

        /*
         * Call the listening handler to finish initializing the new
         * connection; for HTTP this is ngx_http_init_connection.
         */
        ls->handler(c);

        /* adjust the available flag; when it is 1, Nginx accepts as many new connections as possible in one go */
        if (ngx_event_flags & NGX_USE_KQUEUE_EVENT) {
            ev->available--;
        }

    } while (ev->available);
}
```

```c
/* Add the read events of the listening sockets' connections back to the event module */
static ngx_int_t
ngx_enable_accept_events(ngx_cycle_t *cycle)
{
    ngx_uint_t         i;
    ngx_listening_t   *ls;
    ngx_connection_t  *c;

    /* start of the listening array */
    ls = cycle->listening.elts;

    /* iterate over all listening sockets */
    for (i = 0; i < cycle->listening.nelts; i++) {

        /* the connection associated with the current listening socket */
        c = ls[i].connection;

        /* is the read event of this connection already active? */
        if (c->read->active) {
            /* if active, the read event is already registered with the event module */
            continue;
        }

        /* otherwise register the connection with the event module */
        if (ngx_event_flags & NGX_USE_RTSIG_EVENT) {

            if (ngx_add_conn(c) == NGX_ERROR) {
                return NGX_ERROR;
            }

        } else {
            /* add the read event of the connection */
            if (ngx_add_event(c->read, NGX_READ_EVENT, 0) == NGX_ERROR) {
                return NGX_ERROR;
            }
        }
    }

    return NGX_OK;
}


/* Remove the read events of the listening connections from the event module */
static ngx_int_t
ngx_disable_accept_events(ngx_cycle_t *cycle)
{
    ngx_uint_t         i;
    ngx_listening_t   *ls;
    ngx_connection_t  *c;

    /* the listening sockets */
    ls = cycle->listening.elts;
    for (i = 0; i < cycle->listening.nelts; i++) {

        /* the connection associated with the listening socket */
        c = ls[i].connection;

        if (!c->read->active) {
            continue;
        }

        /* remove the connection from the event module */
        if (ngx_event_flags & NGX_USE_RTSIG_EVENT) {
            if (ngx_del_conn(c, NGX_DISABLE_EVENT) == NGX_ERROR) {
                return NGX_ERROR;
            }

        } else {
            /* remove the read event of the connection from the event module */
            if (ngx_del_event(c->read, NGX_READ_EVENT, NGX_DISABLE_EVENT)
                == NGX_ERROR)
            {
                return NGX_ERROR;
            }
        }
    }

    return NGX_OK;
}
```

The load-balancing part of this code is:

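```c
/*
 * ngx_accept_disabled is the load-balancing threshold that tells whether
 * this process is overloaded. It is set to one eighth of the per-process
 * maximum number of connections minus the number of free connections, so
 * once the active connections accepted by a process exceed 7/8 of the
 * maximum, ngx_accept_disabled becomes positive and the process is
 * considered overloaded.
 */
ngx_accept_disabled = ngx_cycle->connection_n / 8
                      - ngx_cycle->free_connection_n;
```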
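A worked example of the formula, assuming worker_connections is 1024 (so connection_n / 8 = 128) and ignoring the few connection objects that the listening sockets themselves occupy: with 900 active connections there are 124 free ones, so ngx_accept_disabled = 128 - 124 = 4 and the worker skips roughly the next 4 attempts to acquire the accept mutex; with exactly 896 (7/8 of 1024) active connections the value is 0; with 100 active connections it is 128 - 924 = -796, far from the limit. The helper below is a hypothetical name that only evaluates the formula, in signed arithmetic so the "not overloaded" case comes out negative.

```c
#include <assert.h>

/*
 * Hypothetical helper that evaluates
 *     ngx_accept_disabled = connection_n / 8 - free_connection_n
 * using signed arithmetic.
 */
static long accept_disabled(unsigned long connection_n,
                            unsigned long free_connection_n)
{
    assert(free_connection_n <= connection_n);
    return (long) (connection_n / 8) - (long) free_connection_n;
}
```

A positive result is the number of rounds the worker sits out; zero or negative means it keeps competing for new connections.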

The ngx_connection_t Structure

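When a client sends a connection request to the Nginx server and Nginx accepts it passively, the connection is called a passive connection from Nginx's point of view. A passive connection is represented by the basic data structure ngx_connection_t, defined in src/core/ngx_connection.h. Its main members are data, the read and write events, the socket descriptor, the methods for sending and receiving data, the socket addresses, the memory pool, the connection number and request counter, and the receive buffer: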
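Because recv, send, recv_chain and send_chain are function pointers, code that reads from a connection does not need to know how the bytes are transported. ngx_event_accept installs ngx_recv, ngx_send, ngx_recv_chain and ngx_send_chain, and, as far as I know, nginx's SSL code swaps in SSL-aware methods such as ngx_ssl_recv after a successful handshake. The sketch below shows that idea with a hypothetical miniature conn_t that reads from an in-memory string instead of a socket; plain_recv, upper_recv and read_some are illustrative names, not nginx functions.

```c
#include <assert.h>
#include <string.h>
#include <sys/types.h>

typedef struct conn_s conn_t;   /* hypothetical miniature of ngx_connection_t */

/* Same shape as ngx_recv_pt: (connection, buffer, size) -> bytes or error */
typedef ssize_t (*recv_pt)(conn_t *c, unsigned char *buf, size_t size);

struct conn_s {
    void       *data;    /* owner-defined, as in ngx_connection_t */
    recv_pt     recv;    /* which receive implementation to use */
    const char *src;     /* in-memory data instead of a socket (assumption) */
    size_t      pos;     /* how much of src has been consumed */
};

/* Plain receive: copy up to size bytes as they are. */
static ssize_t plain_recv(conn_t *c, unsigned char *buf, size_t size)
{
    size_t left = strlen(c->src) - c->pos;
    size_t n = size < left ? size : left;

    memcpy(buf, c->src + c->pos, n);
    c->pos += n;
    return (ssize_t) n;
}

/* A second implementation with the same signature: a toy "decoding" layer
 * that upper-cases ASCII letters, standing in for something like TLS. */
static ssize_t upper_recv(conn_t *c, unsigned char *buf, size_t size)
{
    ssize_t n = plain_recv(c, buf, size);

    for (ssize_t i = 0; i < n; i++) {
        if (buf[i] >= 'a' && buf[i] <= 'z') {
            buf[i] = (unsigned char) (buf[i] - 'a' + 'A');
        }
    }
    return n;
}

/* Caller code only knows c->recv, just as protocol modules call c->recv(). */
static ssize_t read_some(conn_t *c, unsigned char *buf, size_t size)
{
    assert(c->recv != NULL);
    return c->recv(c, buf, size);
}
```

The caller read_some is identical for both connections; only the pointer stored in the connection decides which implementation runs.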

```c
/* TCP connection structure */
struct ngx_connection_s {
    /*
     * Whenever Nginx creates a new socket, it uses an ngx_connection_s
     * structure to hold the socket's attributes and data.
     */

    /*
     * While the connection is unused, data acts as the next pointer of the
     * free list in the connection pool; while it is in use, the meaning of
     * data is decided by the Nginx module that owns the connection.
     */
    void               *data;

    /* read event of this connection */
    ngx_event_t        *read;

    /* write event of this connection */
    ngx_event_t        *write;

    /* socket descriptor */
    ngx_socket_t        fd;

    /* function pointer: method that receives a byte stream from the network */
    ngx_recv_pt         recv;

    /* function pointer: method that sends a byte stream to the network */
    ngx_send_pt         send;

    /* method that receives a byte stream into an ngx_chain_t linked list */
    ngx_recv_chain_pt   recv_chain;

    /* method that sends a byte stream from an ngx_chain_t linked list */
    ngx_send_chain_pt   send_chain;

    /*
     * The ngx_listening_t object this connection belongs to, i.e. the
     * listening port whose event created this connection.
     */
    ngx_listening_t    *listening;

    /* number of bytes already sent on this connection */
    off_t               sent;

    /* log object */
    ngx_log_t          *log;

    /* memory pool */
    ngx_pool_t         *pool;

    /* socket address (sockaddr) of the peer */
    struct sockaddr    *sockaddr;
    socklen_t           socklen;

    /* peer IP address in text form */
    ngx_str_t           addr_text;

    ngx_str_t           proxy_protocol_addr;

#if (NGX_SSL)
    ngx_ssl_connection_t  *ssl;
#endif

    /* local socket address (sockaddr) of the listening port */
    struct sockaddr    *local_sockaddr;
    socklen_t           local_socklen;

    /* buffer for receiving and caching the byte stream sent by the peer */
    ngx_buf_t          *buffer;

    /*
     * Node used to add this connection to the doubly linked list
     * reusable_connections_queue of the ngx_cycle_t structure.
     */
    ngx_queue_t         queue;

    /* connection serial number (taken from ngx_connection_counter) */
    ngx_atomic_uint_t   number;

    /* number of requests handled on this connection */
    ngx_uint_t          requests;

    unsigned            buffered:8;

    unsigned            log_error:3;     /* ngx_connection_log_error_e */

    /* flag: 1 means an end of stream is not expected */
    unsigned            unexpected_eof:1;

    /* flag: 1 means this connection has timed out */
    unsigned            timedout:1;

    /* flag: 1 means an error occurred while processing this connection */
    unsigned            error:1;

    /* flag: 1 means this TCP connection has been destroyed */
    unsigned            destroyed:1;

    /* flag: 1 means this connection is idle */
    unsigned            idle:1;

    /* flag: 1 means this connection can be reused */
    unsigned            reusable:1;

    /* flag: 1 means this connection has been closed */
    unsigned            close:1;

    /* flag: 1 means file data is being sent to the peer */
    unsigned            sendfile:1;

    /*
     * flag: if 1, the event module dispatches events only when the send
     * buffer of this connection satisfies the configured low-water mark
     */
    unsigned            sndlowat:1;

    unsigned            tcp_nodelay:2;   /* ngx_connection_tcp_nodelay_e */
    unsigned            tcp_nopush:2;    /* ngx_connection_tcp_nopush_e */

    unsigned            need_last_buf:1;

#if (NGX_HAVE_IOCP)
    unsigned            accept_context_updated:1;
#endif

#if (NGX_HAVE_AIO_SENDFILE)
    /* flag: 1 means disk files are sent to the peer with asynchronous I/O */
    unsigned            aio_sendfile:1;
    unsigned            busy_count:2;

    /* information about the file waiting to be sent with asynchronous I/O */
    ngx_buf_t          *busy_sendfile;
#endif

#if (NGX_THREADS)
    ngx_atomic_t        lock;
#endif
};
```

The method types used by recv, recv_chain, send and send_chain are:

```c
typedef ssize_t (*ngx_recv_pt)(ngx_connection_t *c, u_char *buf, size_t size);
typedef ssize_t (*ngx_recv_chain_pt)(ngx_connection_t *c, ngx_chain_t *in,
    off_t limit);
typedef ssize_t (*ngx_send_pt)(ngx_connection_t *c, u_char *buf, size_t size);
typedef ngx_chain_t *(*ngx_send_chain_pt)(ngx_connection_t *c, ngx_chain_t *in,
    off_t limit);
```

Connection Pool

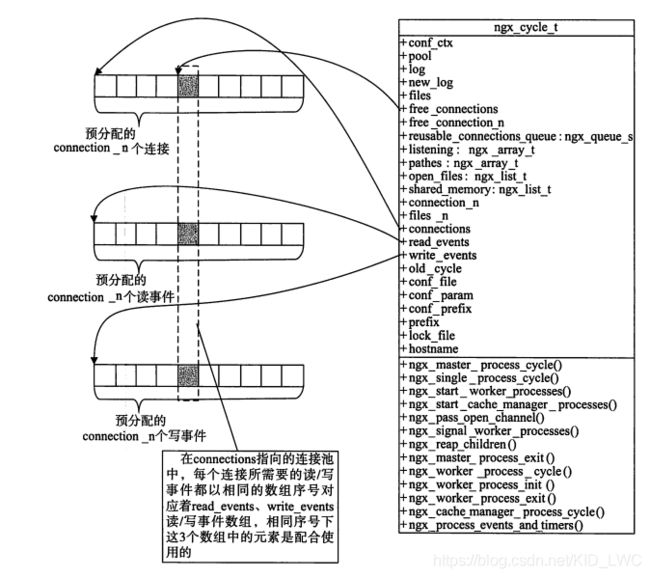

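When Nginx accepts client connections, the ngx_connection_t structures it uses are all preallocated when the worker process starts, and they are taken from the connection pool when needed.

In ngx_cycle_t, the members connections and free_connections together form the connection pool: connections points to the start of the whole connection array, and free_connections points to the head of the list of free connections. Alongside the connection pool there are also event pools (read_events and write_events), and a connection and its read and write events share the same index in their respective arrays.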
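The pool layout can be sketched as a minimal model, assuming hypothetical types conn_t, event_t and pool_t that keep only the fields needed here. pool_init threads the array into a singly linked free list through the data member, walking backwards (which, to my knowledge, is also how nginx's ngx_event_process_init() builds it), so connections[i] is paired with read_events[i] and write_events[i]. pool_get and pool_free are constant-time pops and pushes at the list head, the same idea as ngx_get_connection and ngx_free_connection.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical miniature types; field names echo ngx_event_t / ngx_connection_t. */
typedef struct {
    void *data;           /* back pointer to the owning connection */
} event_t;

typedef struct {
    void    *data;        /* next free connection while unused */
    event_t *read;
    event_t *write;
    int      fd;
} conn_t;

typedef struct {
    conn_t  *connections;       /* the whole array */
    event_t *read_events;       /* read_events[i] belongs to connections[i] */
    event_t *write_events;      /* write_events[i] belongs to connections[i] */
    conn_t  *free_connections;  /* head of the free list */
    size_t   free_n;
} pool_t;

/* Build the free list backwards so that c[0] ends up at the head. */
static void pool_init(pool_t *p, conn_t *c, event_t *rev, event_t *wev, size_t n)
{
    conn_t *next = NULL;
    size_t  i = n;

    assert(n > 0);
    p->connections = c;
    p->read_events = rev;
    p->write_events = wev;

    do {
        i--;
        c[i].data = next;
        c[i].read = &rev[i];
        c[i].write = &wev[i];
        c[i].fd = -1;
        next = &c[i];
    } while (i);

    p->free_connections = next;
    p->free_n = n;
}

/* Pop the head of the free list: the core of ngx_get_connection(). */
static conn_t *pool_get(pool_t *p, int fd)
{
    conn_t *c = p->free_connections;

    if (c == NULL) {
        return NULL;                  /* "worker_connections are not enough" */
    }
    p->free_connections = c->data;
    p->free_n--;
    c->fd = fd;
    c->read->data = c;
    c->write->data = c;
    return c;
}

/* Push back onto the head of the free list: the core of ngx_free_connection(). */
static void pool_free(pool_t *p, conn_t *c)
{
    c->data = p->free_connections;
    p->free_connections = c;
    p->free_n++;
}
```

A freed connection goes to the head of the list, so it is the next one handed out.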

The pool is part of the ngx_cycle_s structure:

```c
struct ngx_cycle_s {
    void                  ****conf_ctx;
    ngx_pool_t               *pool;

    ngx_log_t                *log;
    ngx_log_t                 new_log;

    ngx_uint_t                log_use_stderr;  /* unsigned  log_use_stderr:1; */

    ngx_connection_t        **files;
    ngx_connection_t         *free_connections;
    ngx_uint_t                free_connection_n;

    ngx_module_t            **modules;
    ngx_uint_t                modules_n;
    ngx_uint_t                modules_used;    /* unsigned  modules_used:1; */

    ngx_queue_t               reusable_connections_queue;
    ngx_uint_t                reusable_connections_n;

    ngx_array_t               listening;
    ngx_array_t               paths;

    ngx_array_t               config_dump;
    ngx_rbtree_t              config_dump_rbtree;
    ngx_rbtree_node_t         config_dump_sentinel;

    ngx_list_t                open_files;
    ngx_list_t                shared_memory;

    ngx_uint_t                connection_n;
    ngx_uint_t                files_n;

    ngx_connection_t         *connections;
    ngx_event_t              *read_events;
    ngx_event_t              *write_events;

    ngx_cycle_t              *old_cycle;

    ngx_str_t                 conf_file;
    ngx_str_t                 conf_param;
    ngx_str_t                 conf_prefix;
    ngx_str_t                 prefix;
    ngx_str_t                 lock_file;
    ngx_str_t                 hostname;
};
```

Main operations on the connection pool:

ngx_get_connection takes an ngx_connection_t structure, together with its read and write events, from the connection pool:

```c
ngx_connection_t *
ngx_get_connection(ngx_socket_t s, ngx_log_t *log)
{
    ngx_uint_t         instance;
    ngx_event_t       *rev, *wev;
    ngx_connection_t  *c;

    /* disable warning: Win32 SOCKET is u_int while UNIX socket is int */

    if (ngx_cycle->files && (ngx_uint_t) s >= ngx_cycle->files_n) {
        ngx_log_error(NGX_LOG_ALERT, log, 0,
                      "the new socket has number %d, "
                      "but only %ui files are available",
                      s, ngx_cycle->files_n);
        return NULL;
    }

    /* take the head of the free list; try to reclaim reusable connections if it is empty */
    c = ngx_cycle->free_connections;

    if (c == NULL) {
        ngx_drain_connections((ngx_cycle_t *) ngx_cycle);
        c = ngx_cycle->free_connections;
    }

    if (c == NULL) {
        ngx_log_error(NGX_LOG_ALERT, log, 0,
                      "%ui worker_connections are not enough",
                      ngx_cycle->connection_n);

        return NULL;
    }

    ngx_cycle->free_connections = c->data;
    ngx_cycle->free_connection_n--;

    if (ngx_cycle->files && ngx_cycle->files[s] == NULL) {
        ngx_cycle->files[s] = c;
    }

    /* clear the connection but keep its preassigned read/write events */
    rev = c->read;
    wev = c->write;

    ngx_memzero(c, sizeof(ngx_connection_t));

    c->read = rev;
    c->write = wev;
    c->fd = s;
    c->log = log;

    /* flip the instance bit so stale events for the previous user can be detected */
    instance = rev->instance;

    ngx_memzero(rev, sizeof(ngx_event_t));
    ngx_memzero(wev, sizeof(ngx_event_t));

    rev->instance = !instance;
    wev->instance = !instance;

    rev->index = NGX_INVALID_INDEX;
    wev->index = NGX_INVALID_INDEX;

    rev->data = c;
    wev->data = c;

    wev->write = 1;

    return c;
}
```

ngx_free_connection returns a connection to the pool:

```c
void
ngx_free_connection(ngx_connection_t *c)
{
    /* push the connection back onto the head of the free list */
    c->data = ngx_cycle->free_connections;
    ngx_cycle->free_connections = c;
    ngx_cycle->free_connection_n++;

    if (ngx_cycle->files && ngx_cycle->files[c->fd] == c) {
        ngx_cycle->files[c->fd] = NULL;
    }
}
```
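Why does ngx_get_connection flip rev->instance? A connection slot can be closed and handed out again while an old event for it is still waiting in the batch returned by epoll_wait. To my understanding, nginx's epoll module stores the connection pointer with the instance bit in its lowest bit in epoll_event.data.ptr, and discards an event whose bit no longer matches, or whose connection has fd == -1, as stale. The sketch below models only that check; conn_t, event_t, tag_ptr, untag_ptr and reuse are hypothetical names, and it assumes pointers to conn_t are at least 2-byte aligned so that bit 0 is free.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { unsigned instance:1; } event_t;      /* hypothetical miniature */
typedef struct { int fd; event_t *read; } conn_t;     /* hypothetical miniature */

/* Pack the connection pointer with the current instance bit in bit 0. */
static void *tag_ptr(conn_t *c)
{
    assert(((uintptr_t) c & 1) == 0);                  /* alignment assumption */
    return (void *) ((uintptr_t) c | c->read->instance);
}

/* Return the connection if the event is still valid, NULL if it is stale. */
static conn_t *untag_ptr(void *p)
{
    unsigned instance = (unsigned) ((uintptr_t) p & 1);
    conn_t  *c = (conn_t *) ((uintptr_t) p & ~(uintptr_t) 1);

    if (c->fd == -1 || c->read->instance != instance) {
        return NULL;   /* the slot was closed or reused after the event was queued */
    }
    return c;
}

/* Reuse a slot the way ngx_get_connection() does: flip the instance bit. */
static void reuse(conn_t *c, int fd)
{
    c->read->instance = !c->read->instance;
    c->fd = fd;
}
```

An event tagged before reuse() is rejected afterwards, while a freshly tagged event for the same slot is accepted.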

The ngx_listening_t Structure

Its main members are the socket address, the handler callback for accepted connections, and the connection pointer:

```c
struct ngx_listening_s {
    ngx_socket_t        fd;

    struct sockaddr    *sockaddr;
    socklen_t           socklen;    /* size of sockaddr */
    size_t              addr_text_max_len;
    ngx_str_t           addr_text;

    int                 type;

    int                 backlog;
    int                 rcvbuf;
    int                 sndbuf;
#if (NGX_HAVE_KEEPALIVE_TUNABLE)
    int                 keepidle;
    int                 keepintvl;
    int                 keepcnt;
#endif

    /* handler of accepted connection */
    ngx_connection_handler_pt   handler;

    void               *servers;  /* array of ngx_http_in_addr_t, for example */

    ngx_log_t           log;
    ngx_log_t          *logp;

    size_t              pool_size;
    /* should be here because of the AcceptEx() preread */
    size_t              post_accept_buffer_size;
    /* should be here because of the deferred accept */
    ngx_msec_t          post_accept_timeout;

    ngx_listening_t    *previous;
    ngx_connection_t   *connection;

    ngx_uint_t          worker;

    unsigned            open:1;
    unsigned            remain:1;
    unsigned            ignore:1;

    unsigned            bound:1;       /* already bound */
    unsigned            inherited:1;   /* inherited from previous process */
    unsigned            nonblocking_accept:1;
    unsigned            listen:1;
    unsigned            nonblocking:1;
    unsigned            shared:1;    /* shared between threads or processes */
    unsigned            addr_ntop:1;
    unsigned            wildcard:1;

#if (NGX_HAVE_INET6)
    unsigned            ipv6only:1;
#endif
    unsigned            reuseport:1;
    unsigned            add_reuseport:1;
    unsigned            keepalive:2;

    unsigned            deferred_accept:1;
    unsigned            delete_deferred:1;
    unsigned            add_deferred:1;
#if (NGX_HAVE_DEFERRED_ACCEPT && defined SO_ACCEPTFILTER)
    char               *accept_filter;
#endif
#if (NGX_HAVE_SETFIB)
    int                 setfib;
#endif

#if (NGX_HAVE_TCP_FASTOPEN)
    int                 fastopen;
#endif

};
```