Some experiences with Spark on YARN

1. ERROR spark.SparkContext: Error initializing SparkContext

org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

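This happens when running on Java 8. The JVM reserves more virtual memory than the NodeManager's memory checks allow, so the NodeManager kills the ApplicationMaster's container.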

Add the following to yarn-site.xml on every node:

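<property>
    <name>yarn.nodemanager.pmem-check-enabled</name>
    <value>false</value>
</property>

<property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
</property>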

Restart Hadoop (stop-all.sh, then start-all.sh) and the error goes away.
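Editing yarn-site.xml by hand on every node is error-prone, so here is a minimal Python sketch that sets the two properties with the standard library's xml.etree.ElementTree. The function name set_yarn_properties is hypothetical, and the sketch assumes the usual <configuration> root with <property> children; ElementTree also drops XML comments when it re-serializes the file, so keep a backup.

```python
import xml.etree.ElementTree as ET

# The two NodeManager memory checks disabled in this section.
PROPS = {
    "yarn.nodemanager.pmem-check-enabled": "false",
    "yarn.nodemanager.vmem-check-enabled": "false",
}


def set_yarn_properties(xml_text: str, props: dict) -> str:
    """Return yarn-site.xml content with every name/value in props set.

    Existing <property> entries are updated in place; missing ones are
    appended under the <configuration> root. Comments are not preserved.
    """
    root = ET.fromstring(xml_text)
    for name, value in props.items():
        for prop in root.findall("property"):
            if prop.findtext("name") == name:
                val = prop.find("value")
                if val is None:
                    val = ET.SubElement(prop, "value")
                val.text = value
                break
        else:
            # No existing entry for this name: append a new <property>.
            prop = ET.SubElement(root, "property")
            ET.SubElement(prop, "name").text = name
            ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(set_yarn_properties("<configuration></configuration>", PROPS))
```

Updating in place rather than always appending avoids leaving two conflicting entries for the same property.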

2. Name node is in safe mode

WARN Client: Failed to cleanup staging dir

The reported blocks 38 has reached the threshold 0.9990 of total blocks 38. The number of live datanodes 3 has reached the minimum number 0. In safe mode extension. Safe mode will be turned off automatically in 9 seconds.

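Solution (see https://blog.csdn.net/xw13106209/article/details/6866072): the message itself says safe mode will turn off automatically within seconds, so simply waiting also works. To leave safe mode immediately, run:

hadoop dfsadmin -safemode leave

(On newer Hadoop releases the equivalent is hdfs dfsadmin -safemode leave.)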
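The log line above says both exit conditions already hold and the NameNode is only waiting out its safe-mode extension period. A minimal Python sketch of that check, as a simplified model rather than the NameNode's actual code: it assumes the default settings dfs.namenode.safemode.threshold-pct = 0.999 and dfs.namenode.safemode.min-datanodes = 0 (matching the 0.9990 and 0 in the message), and all function names are hypothetical.

```python
def block_threshold_met(reported: int, total: int, threshold_pct: float = 0.999) -> bool:
    """Simplified block check: enough blocks are reported once `reported`
    reaches total * threshold_pct, truncated to an integer."""
    return reported >= int(total * threshold_pct)


def datanode_threshold_met(live: int, min_datanodes: int = 0) -> bool:
    """Enough DataNodes are alive once `live` reaches the configured minimum."""
    return live >= min_datanodes


def can_leave_safe_mode(reported: int, total: int, live: int,
                        threshold_pct: float = 0.999, min_datanodes: int = 0) -> bool:
    # Both checks must pass; the NameNode then still waits for the
    # extension period (dfs.namenode.safemode.extension) before leaving.
    return (block_threshold_met(reported, total, threshold_pct)
            and datanode_threshold_met(live, min_datanodes))


if __name__ == "__main__":
    # Values from the log message above: 38 of 38 blocks, 3 live DataNodes.
    print(can_leave_safe_mode(reported=38, total=38, live=3))  # True
```

With 38 of 38 blocks reported, the threshold int(38 × 0.999) = 37 is met, which is why only the countdown remains.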

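3. Neither spark.yarn.jars nor spark.yarn.archive is set

With neither setting present, Spark falls back to packaging and uploading every library under SPARK_HOME on each submission, which slows down job startup.

Solution: upload the jars to HDFS once. On the command line, run: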

hadoop fs -mkdir /spark_jars

hadoop fs -put /spark/jars/* /spark_jars

Then add this setting to conf/spark-defaults.conf in the Spark directory:

spark.yarn.jars hdfs://master:9000/spark_jars/*


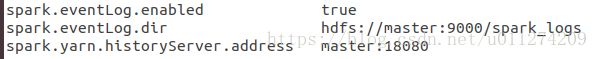
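Both this section and the next add entries to spark-defaults.conf. A minimal Python sketch for setting one key without creating duplicates, assuming the file holds one property per line with comments starting with `#` or `!`; it accepts whitespace, `=` or `:` between key and value when matching, but always writes the whitespace form used in this post. The helper name set_spark_default is hypothetical.

```python
import re


def set_spark_default(conf_text: str, key: str, value: str) -> str:
    """Set `key value` in spark-defaults.conf content.

    An existing uncommented entry for key is replaced; otherwise the
    entry is appended at the end.
    """
    lines = conf_text.splitlines()
    new_line = f"{key} {value}"
    for i, line in enumerate(lines):
        stripped = line.strip()
        if not stripped or stripped.startswith(("#", "!")):
            continue  # skip blank lines and comments
        # The key ends at the first whitespace, '=' or ':'.
        if re.split(r"[\s=:]", stripped, maxsplit=1)[0] == key:
            lines[i] = new_line
            break
    else:
        lines.append(new_line)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    conf = "# Default system properties\nspark.master yarn\n"
    conf = set_spark_default(conf, "spark.yarn.jars", "hdfs://master:9000/spark_jars/*")
    print(conf)
```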

4. Configuring the log Web UI for Spark on YARN

1) In Spark's conf/spark-defaults.conf, add the following (see http://blog.51cto.com/beyond3518/1787513 and http://www.cnblogs.com/luogankun/p/3981645.html):

spark.eventLog.enabled true

spark.eventLog.dir hdfs://master:9000/spark_logs

spark.yarn.historyServer.address master:18080

2) In Spark's conf/spark-env.sh, add:

export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18080 -Dspark.history.fs.logDirectory=hdfs://master:9000/spark_logs"


Note: spark.history.fs.logDirectory must point to the same directory as spark.eventLog.dir above (see https://www.cnblogs.com/langfanyun/p/7788784.html).
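A mismatch between these two settings is easy to miss because both services start without complaint. A minimal Python sketch that compares them, assuming whitespace-separated entries in spark-defaults.conf (as in this post) and `-Dkey=value` options in SPARK_HISTORY_OPTS; all function names are hypothetical.

```python
import shlex


def read_spark_defaults(conf_text: str) -> dict:
    """Parse 'key value' lines of spark-defaults.conf, skipping comments."""
    props = {}
    for line in conf_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        props[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return props


def read_java_opts(opts: str) -> dict:
    """Collect -Dkey=value pairs from a string such as SPARK_HISTORY_OPTS."""
    props = {}
    for token in shlex.split(opts):
        if token.startswith("-D") and "=" in token:
            key, value = token[2:].split("=", 1)
            props[key] = value
    return props


def log_dirs_match(conf_text: str, history_opts: str) -> bool:
    """True if spark.eventLog.dir equals spark.history.fs.logDirectory."""
    event_dir = read_spark_defaults(conf_text).get("spark.eventLog.dir")
    history_dir = read_java_opts(history_opts).get("spark.history.fs.logDirectory")
    # Trailing slashes are ignored so ".../spark_logs" and ".../spark_logs/" match.
    return (event_dir is not None and history_dir is not None
            and event_dir.rstrip("/") == history_dir.rstrip("/"))


if __name__ == "__main__":
    conf = ("spark.eventLog.enabled true\n"
            "spark.eventLog.dir hdfs://master:9000/spark_logs\n"
            "spark.yarn.historyServer.address master:18080\n")
    opts = ("-Dspark.history.ui.port=18080 "
            "-Dspark.history.fs.logDirectory=hdfs://master:9000/spark_logs")
    print(log_dirs_match(conf, opts))  # True
```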

3) Add the following to Hadoop's yarn-site.xml:

<property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>bigdata01:8088</value>
</property>

With this in place, you can jump straight from the Hadoop (YARN) web UI to the Spark web UI (see https://www.jianshu.com/p/ea85d074a494).

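4) Restart Hadoop, then run ./sbin/start-history-server.sh from the Spark directory. The log directory hdfs://master:9000/spark_logs must already exist (for example, create it with hadoop fs -mkdir /spark_logs); otherwise the history server fails to start.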
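To confirm the history server is actually reading the event logs, one option is Spark's monitoring REST API, which lists applications at /api/v1/applications on the history server port (18080 here). A minimal Python sketch, assuming the history server runs on host master as configured in spark.yarn.historyServer.address above; the helper names are hypothetical.

```python
import json
from urllib.request import urlopen


def parse_app_ids(payload: str) -> list:
    """Extract application ids from the JSON list returned by /api/v1/applications."""
    return [app["id"] for app in json.loads(payload)]


def list_history_apps(base_url: str = "http://master:18080", timeout: float = 5.0) -> list:
    """Ask the history server which applications it has loaded from the log directory."""
    with urlopen(f"{base_url}/api/v1/applications", timeout=timeout) as resp:
        return parse_app_ids(resp.read().decode("utf-8"))
```

Calling list_history_apps() after a job finishes should return its YARN application id; an empty list usually means the event log directory and spark.history.fs.logDirectory do not match.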