Multi-DataSource Configuration with Spring Boot 2 and MyBatis

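- Overview
- Creating the Multi-DataSource module
- Defining the TargetDataSource annotation
- Defining the AOP interceptor
- Defining the per-thread accessor for the data source type
- Defining the data source registrar
- Defining the custom data source
- Defining EnableMultiDataSource
- Configuring the data sources in YAML
- The startup class must carry the annotation
- Testing
- Switching without the AOP interceptor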

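Overview

There are several ways to implement multiple data sources. One is to configure a separate DataSource and a separate SqlSessionFactory for each database, with each SqlSessionFactory managing the mappers in its own location. Another is to share a single set of mappers and switch the data source dynamically. My project requires the latter, so this article implements the second approach.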

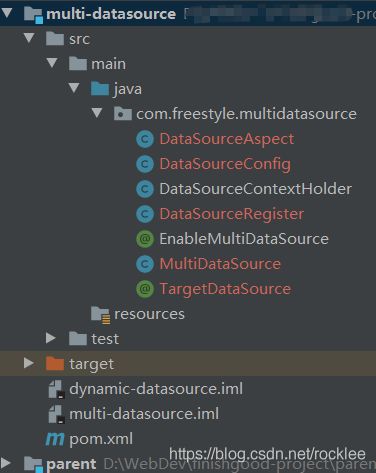

Creating the Multi-DataSource module

Add a new multi-datasource module to the existing Maven project.

Defining the TargetDataSource annotation

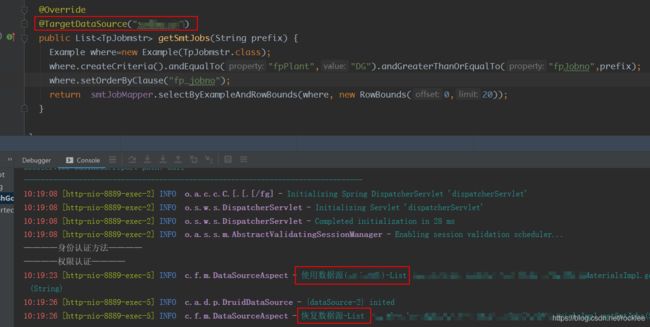

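This annotation marks which data source the current method uses. The AOP interceptor reads it to decide which data source to switch to.

```java
import java.lang.annotation.Documented;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

/**
 * Created by rocklee on 2020/8/27 10:44
 */
@Target({ElementType.METHOD, ElementType.TYPE})
@Retention(RetentionPolicy.RUNTIME)
@Documented
public @interface TargetDataSource {
    /** Id of the data source to use, e.g. a name configured under spring.datasources. */
    String value();
}
```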

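Defining the AOP interceptor

```java
import org.aspectj.lang.JoinPoint;
import org.aspectj.lang.annotation.After;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Before;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.core.annotation.Order;
import org.springframework.stereotype.Component;

/**
 * Created by rocklee on 2020/8/27 10:07
 */
@Aspect
@Order(0) // lower value = higher precedence among aspects
@Component
public class DataSourceAspect {
    private static final Logger logger = LoggerFactory.getLogger(DataSourceAspect.class);

    // Binds the annotation instance; this pointcut matches annotated methods only.
    @Before("@annotation(targetDataSource)")
    public void changeDataSource(JoinPoint point, TargetDataSource targetDataSource) {
        String dsId = targetDataSource.value();
        if (!DataSourceContextHolder.containsDataSource(dsId)) {
            // Unknown id: the key stays unset, so the default data source is used.
            logger.warn("Data source ({}) does not exist - {}", dsId, point.getSignature());
        } else {
            logger.info("Using data source ({}) - {}", dsId, point.getSignature());
            DataSourceContextHolder.setDataSourceType(dsId);
        }
    }

    @After("@annotation(targetDataSource)")
    public void restoreDataSource(JoinPoint point, TargetDataSource targetDataSource) {
        logger.info("Restoring data source - {}", point.getSignature());
        DataSourceContextHolder.clearDataSourceType();
    }
}
```

This interceptor is optional; the last section explains how to do without it.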
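To make the set-key, invoke, clear-key sequence of the interceptor concrete without Spring, here is a minimal plain-Java sketch that mimics it with a JDK dynamic proxy. Everything in it (`UseDataSource`, `ProxyKeyHolder`, `ReportService`, `SwitchingProxyDemo`) is a hypothetical stand-in and not part of the module; it only illustrates the idea.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;

// Hypothetical stand-in for @TargetDataSource.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface UseDataSource {
    String value();
}

// Hypothetical stand-in for DataSourceContextHolder.
final class ProxyKeyHolder {
    private static final ThreadLocal<String> KEY = new ThreadLocal<>();

    private ProxyKeyHolder() {}

    static void set(String key) { KEY.set(key); }
    static String get() { return KEY.get(); }
    static void clear() { KEY.remove(); }
}

interface ReportService {
    @UseDataSource("db2")
    String currentKey();
}

final class SwitchingProxyDemo {
    // Wraps target so that annotated methods run with their key set, then cleared.
    @SuppressWarnings("unchecked")
    static <T> T wrap(Class<T> api, T target) {
        return (T) Proxy.newProxyInstance(
                api.getClassLoader(),
                new Class<?>[] {api},
                (proxy, method, args) -> {
                    UseDataSource ann = method.getAnnotation(UseDataSource.class);
                    if (ann != null) {
                        ProxyKeyHolder.set(ann.value()); // corresponds to the @Before advice
                    }
                    try {
                        return method.invoke(target, args);
                    } catch (InvocationTargetException e) {
                        throw e.getCause(); // rethrow the exception raised by the target
                    } finally {
                        if (ann != null) {
                            ProxyKeyHolder.clear(); // corresponds to the @After advice
                        }
                    }
                });
    }

    public static void main(String[] args) {
        ReportService real = ProxyKeyHolder::get; // reports the key visible during the call
        ReportService proxied = wrap(ReportService.class, real);
        System.out.println(proxied.currentKey()); // prints "db2"
        System.out.println(ProxyKeyHolder.get()); // prints "null"
    }
}
```

The `finally` block plays the role of `@After`, which also runs when the intercepted method throws, so the key never leaks past the call.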

Defining the per-thread accessor for the data source type

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Stores and retrieves the data source type in a multi-threaded environment.
 * Created by rocklee on 2020/8/27 10:00
 */
public class DataSourceContextHolder {
    // Key of the data source selected for the current thread.
    private static final ThreadLocal<String> contextHolder = new ThreadLocal<>();
    // Ids of all registered data sources; filled by the registrar at startup.
    private static final List<String> dataSourceIds = new ArrayList<>();

    public static void setDataSourceType(String dataSourceType) {
        contextHolder.set(dataSourceType);
    }

    public static String getDataSourceType() {
        return contextHolder.get();
    }

    public static void clearDataSourceType() {
        contextHolder.remove();
    }

    public static boolean containsDataSource(String dataSourceId) {
        return dataSourceIds.contains(dataSourceId);
    }

    public static List<String> getDataSourceIds() {
        return dataSourceIds;
    }
}
```
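The holder relies on a ThreadLocal so that each thread carries its own key: one request switching to db2 does not affect a request running on another thread. The sketch below uses hypothetical names (`ThreadKeyHolder`, `ThreadIsolationDemo`) and shows that a worker thread does not see the key set by the calling thread.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical stand-in for DataSourceContextHolder, reduced to its ThreadLocal.
final class ThreadKeyHolder {
    private static final ThreadLocal<String> KEY = new ThreadLocal<>();

    private ThreadKeyHolder() {}

    static void set(String key) { KEY.set(key); }
    static String get() { return KEY.get(); }
    static void clear() { KEY.remove(); }
}

final class ThreadIsolationDemo {
    // Sets a key on the calling thread, then returns the key a worker thread sees.
    static String keySeenByWorker(String callerKey) {
        ThreadKeyHolder.set(callerKey);
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Callable<String> readKey = ThreadKeyHolder::get;
            return pool.submit(readKey).get(); // the worker has its own, empty slot
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
            ThreadKeyHolder.clear(); // always clear what this thread set
        }
    }

    public static void main(String[] args) {
        System.out.println(keySeenByWorker("db2")); // prints "null"
    }
}
```

In practice this means the key has to be set again inside any work handed to another thread, for example a task submitted to an executor.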

Defining the data source registrar

The registrar reads the default data source and all other data sources from the YAML configuration. As the YAML parameters further down show, each data source declares its own driver and URL, so a single Boot application can use PostgreSQL, MySQL, Oracle and other databases at the same time.

```java
import com.alibaba.druid.pool.DruidDataSource;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.MutablePropertyValues;
import org.springframework.beans.factory.support.BeanDefinitionRegistry;
import org.springframework.beans.factory.support.GenericBeanDefinition;
import org.springframework.context.EnvironmentAware;
import org.springframework.context.annotation.ImportBeanDefinitionRegistrar;
import org.springframework.core.env.Environment;
import org.springframework.core.type.AnnotationMetadata;

import javax.sql.DataSource;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

/**
 * Created by rocklee on 2020/8/27 10:46
 */
public class DataSourceRegister implements ImportBeanDefinitionRegistrar, EnvironmentAware {
    private static final Logger logger = LoggerFactory.getLogger("DynamicDataSource");
    private static final String DATASOURCE_TYPE_DEFAULT = "org.apache.tomcat.jdbc.pool.DataSource";

    private static DataSource defaultDataSource;
    private static final Map<String, DataSource> customdatasources = new HashMap<>();
    // GlobalConfig is the author's own helper around Environment; its source is not shown in this article.
    private GlobalConfig config;

    public static DataSource getDefaultDataSource() {
        return defaultDataSource;
    }

    public static DataSource getCustomDataSource(String dataSourceId) {
        if (dataSourceId == null || dataSourceId.isEmpty()) return defaultDataSource;
        return customdatasources.get(dataSourceId);
    }

    @Override
    public void setEnvironment(Environment environment) {
        logger.debug("DynamicDataSourceRegister.setEnvironment()");
        config = new GlobalConfig(environment);
        initDefaultDataSource(environment);
        initCustomdatasources(environment);
    }

    // Collects the connection settings plus the nested druid settings of one data source.
    // Returns an empty map when nothing is configured under that name.
    private Map<String, Object> readDataSourceParams(String name) {
        Map<String, Object> dsMap = new HashMap<>();
        Map<String, Object> paramMap = config.getProperties("spring.datasources." + name, true);
        if (paramMap.isEmpty()) return dsMap;
        dsMap.put("type", paramMap.get("type"));
        dsMap.put("driverClassName", paramMap.get("driver-class-name"));
        dsMap.put("url", paramMap.get("url"));
        dsMap.put("username", paramMap.get("username"));
        dsMap.put("password", paramMap.get("password"));
        dsMap.putAll(config.getProperties("spring.datasources." + name + ".druid", true));
        return dsMap;
    }

    private void initDefaultDataSource(Environment env) {
        if (!config.containsProperty("spring.default-datasource")) {
            logger.error("Missing config '{}'", "spring.default-datasource");
            return;
        }
        String defaultDataSourceName = config.getValue("spring.default-datasource");
        Map<String, Object> dsMap = readDataSourceParams(defaultDataSourceName);
        if (dsMap.isEmpty()) {
            logger.error("Missing config '{}'", "spring.datasources." + defaultDataSourceName);
            return;
        }
        defaultDataSource = buildDataSource(dsMap);
    }

    private void initCustomdatasources(Environment env) {
        String defaultSource = config.getValue("spring.default-datasource");
        // Judging by its use here, getSessions returns the names under spring.datasources (db1, db2, ...).
        List<String> dsSessions = config.getSessions("spring.datasources", true);
        for (String dsSession : dsSessions) {
            if (dsSession.equals(defaultSource)) {
                // Reuse the default pool instead of building a second one.
                customdatasources.put(dsSession, defaultDataSource);
                continue;
            }
            customdatasources.put(dsSession, buildDataSource(readDataSourceParams(dsSession)));
        }
    }

    public DataSource buildDataSource(Map<String, Object> dsMap) {
        Object type = dsMap.get("type");
        if (type == null) {
            type = DATASOURCE_TYPE_DEFAULT;
        }
        try {
            // The configured type is only checked for presence on the classpath;
            // the pool built below is always a DruidDataSource.
            Class.forName((String) type);
            DruidDataSource dataSource = new DruidDataSource();
            dataSource.setUrl(dsMap.get("url").toString());
            dataSource.setUsername(dsMap.get("username").toString());
            dataSource.setPassword(dsMap.get("password").toString());
            dataSource.setDriverClassName(dsMap.get("driverClassName").toString());
            // Pool settings; the casts assume GlobalConfig returns typed values.
            dataSource.setInitialSize((Integer) dsMap.get("initialSize"));
            dataSource.setMinIdle((Integer) dsMap.get("minIdle"));
            dataSource.setMaxActive((Integer) dsMap.get("maxActive"));
            dataSource.setMaxWait((Integer) dsMap.get("maxWait"));
            dataSource.setTimeBetweenEvictionRunsMillis((Integer) dsMap.get("timeBetweenEvictionRunsMillis"));
            dataSource.setMinEvictableIdleTimeMillis((Integer) dsMap.get("minEvictableIdleTimeMillis"));
            dataSource.setValidationQuery((String) dsMap.get("validationQuery"));
            dataSource.setTestWhileIdle((Boolean) dsMap.getOrDefault("testWhileIdle", true));
            dataSource.setTestOnBorrow((Boolean) dsMap.getOrDefault("testOnBorrow", false));
            dataSource.setTestOnReturn((Boolean) dsMap.getOrDefault("testOnReturn", true));
            dataSource.setPoolPreparedStatements((Boolean) dsMap.getOrDefault("poolPreparedStatements", true));
            dataSource.setMaxPoolPreparedStatementPerConnectionSize(
                    (Integer) dsMap.getOrDefault("maxPoolPreparedStatementPerConnectionSize", 20));
            dataSource.setFilters((String) dsMap.get("filters"));
            // connectionProperties has the form "key1=value1;key2=value2".
            String connectionProperties = (String) dsMap.get("connectionProperties");
            Properties properties = new Properties();
            if (connectionProperties != null) {
                for (String item : connectionProperties.split(";")) {
                    String[] attr = item.split("=", 2);
                    properties.put(attr[0].trim(), attr[1].trim());
                }
            }
            dataSource.setConnectProperties(properties);
            // Key spelled as in the YAML below.
            dataSource.setUseGlobalDataSourceStat((Boolean) dsMap.getOrDefault("useGlobalDataSourceStat", true));
            return dataSource;
        } catch (Exception e) {
            logger.error("Failed to initialize data source", e);
        }
        return null;
    }

    @Override
    public void registerBeanDefinitions(AnnotationMetadata importingClassMetadata, BeanDefinitionRegistry registry) {
        logger.debug("DynamicDataSourceRegister.registerBeanDefinitions()");
        Map<Object, Object> targetdatasources = new HashMap<>();
        // The default data source is also reachable under the key "dataSource".
        targetdatasources.put("dataSource", defaultDataSource);
        DataSourceContextHolder.getDataSourceIds().add("dataSource");
        targetdatasources.putAll(customdatasources);
        for (String key : customdatasources.keySet()) {
            DataSourceContextHolder.getDataSourceIds().add(key);
        }
        // Register MultiDataSource as the application's "dataSource" bean.
        GenericBeanDefinition beanDefinition = new GenericBeanDefinition();
        beanDefinition.setBeanClass(MultiDataSource.class);
        beanDefinition.setSynthetic(true);
        MutablePropertyValues mpv = beanDefinition.getPropertyValues();
        mpv.addPropertyValue("defaultTargetDataSource", defaultDataSource);
        mpv.addPropertyValue("targetDataSources", targetdatasources);
        registry.registerBeanDefinition("dataSource", beanDefinition);
    }
}
```
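`GlobalConfig` is not shown in this article, so its exact behaviour is unknown. The sketch below is only an assumption about the kind of grouping its `getSessions` and `getProperties` methods perform: it takes flat property keys such as `spring.datasources.db1.url` and groups them by data source name. `DataSourcePropertyGrouper` and its `group` method are hypothetical names introduced for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper; an assumption about what GlobalConfig does internally.
final class DataSourcePropertyGrouper {
    private DataSourcePropertyGrouper() {}

    // Groups "prefix.<name>.<property>" entries into name -> (property -> value).
    static Map<String, Map<String, String>> group(Map<String, String> flat, String prefix) {
        Map<String, Map<String, String>> byName = new LinkedHashMap<>();
        String head = prefix + ".";
        for (Map.Entry<String, String> entry : flat.entrySet()) {
            String key = entry.getKey();
            if (!key.startsWith(head)) {
                continue; // not under the prefix, e.g. spring.default-datasource
            }
            String rest = key.substring(head.length());
            int dot = rest.indexOf('.');
            if (dot <= 0) {
                continue; // no "<name>.<property>" part
            }
            String name = rest.substring(0, dot);
            String property = rest.substring(dot + 1);
            byName.computeIfAbsent(name, n -> new LinkedHashMap<>()).put(property, entry.getValue());
        }
        return byName;
    }

    public static void main(String[] args) {
        Map<String, String> flat = new LinkedHashMap<>();
        flat.put("spring.default-datasource", "db1");
        flat.put("spring.datasources.db1.url", "jdbc:postgresql://host1:5432/db1");
        flat.put("spring.datasources.db1.druid.maxActive", "20");
        flat.put("spring.datasources.db2.url", "jdbc:postgresql://host2:5432/db2");
        System.out.println(group(flat, "spring.datasources").keySet()); // prints "[db1, db2]"
    }
}
```

The key set of the result corresponds to the data source names the registrar loops over, and each inner map to the settings of one data source.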

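Defining the custom data source

```java
import org.springframework.jdbc.datasource.lookup.AbstractRoutingDataSource;

/**
 * Created by rocklee on 2020/8/27 9:59
 */
public class MultiDataSource extends AbstractRoutingDataSource {
    // Returns the key set for the current thread; null selects the default data source.
    @Override
    public Object determineCurrentLookupKey() {
        return DataSourceContextHolder.getDataSourceType();
    }
}
```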
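`AbstractRoutingDataSource` calls `determineCurrentLookupKey()` whenever a connection is requested, looks the key up among its target data sources and, with its default lenient fallback, uses the default target when no entry matches. The following plain-Java model of that lookup uses a hypothetical `RoutingSketch` class and strings in place of real data sources; it illustrates the idea and is not Spring's code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of key-based routing; T stands in for javax.sql.DataSource.
final class RoutingSketch<T> {
    private final ThreadLocal<String> currentKey = new ThreadLocal<>();
    private final Map<String, T> targets;
    private final T defaultTarget;

    RoutingSketch(T defaultTarget, Map<String, T> targets) {
        this.defaultTarget = defaultTarget;
        this.targets = new HashMap<>(targets);
    }

    void use(String key) { currentKey.set(key); }
    void reset() { currentKey.remove(); }

    // Lookup order: current key, then the target map, then the default target.
    T resolve() {
        String key = currentKey.get();
        T target = (key == null) ? null : targets.get(key);
        return (target != null) ? target : defaultTarget;
    }
}

final class RoutingSketchDemo {
    static String[] run() {
        Map<String, String> targets = new HashMap<>();
        targets.put("db1", "pool-db1");
        targets.put("db2", "pool-db2");
        RoutingSketch<String> router = new RoutingSketch<>("pool-db1", targets);

        String noKey = router.resolve();   // no key set: default target
        router.use("db2");
        String db2 = router.resolve();     // key set: matching target
        router.use("missing");
        String unknown = router.resolve(); // unknown key: default target again
        router.reset();
        return new String[] {noKey, db2, unknown};
    }

    public static void main(String[] args) {
        System.out.println(String.join(", ", run())); // prints "pool-db1, pool-db2, pool-db1"
    }
}
```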

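Defining EnableMultiDataSource

This annotation goes on the startup class (xxxApplication.java).

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

import org.springframework.context.annotation.Import;

/**
 * Created by rocklee on 2020/8/27 15:40
 */
@Target({ElementType.TYPE})
@Retention(RetentionPolicy.RUNTIME)
@Import({DataSourceRegister.class}) // runs the registrar when the annotated class is processed
public @interface EnableMultiDataSource {
}
```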

Configuring the data sources in YAML

```yaml
# The registrar reads spring.default-datasource and spring.datasources.*,
# so both keys sit under the spring root.
spring:
  default-datasource: db1
  datasources:
    db1:
      driver-class-name: org.postgresql.Driver
      url: jdbc:postgresql://host1:5432/db1
      username: postgres
      password: 123456
      type: com.alibaba.druid.pool.DruidDataSource
      # Other data source settings
      druid:
        initialSize: 5
        minIdle: 5
        maxActive: 20
        maxWait: 60000
        timeBetweenEvictionRunsMillis: 60000
        minEvictableIdleTimeMillis: 300000
        validationQuery: SELECT 1
        testWhileIdle: true
        testOnBorrow: false
        testOnReturn: true
        poolPreparedStatements: true
        # Filters for monitoring statistics; without them the monitoring UI cannot collect SQL statistics. 'wall' is the SQL firewall.
        #filters: stat
        maxPoolPreparedStatementPerConnectionSize: 20
        useGlobalDataSourceStat: true
        connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=200
    db2:
      driver-class-name: org.postgresql.Driver
      url: jdbc:postgresql://host2:5432/db2
      username: postgres
      password: 123456
      type: com.alibaba.druid.pool.DruidDataSource
      # Other data source settings
      druid:
        initialSize: 5
        minIdle: 5
        maxActive: 20
        maxWait: 60000
        timeBetweenEvictionRunsMillis: 60000
        minEvictableIdleTimeMillis: 300000
        validationQuery: SELECT 1
        testWhileIdle: true
        testOnBorrow: false
        testOnReturn: false
        poolPreparedStatements: true
        # Filters for monitoring statistics; without them the monitoring UI cannot collect SQL statistics. 'wall' is the SQL firewall.
        #filters: stat
        maxPoolPreparedStatementPerConnectionSize: 20
        useGlobalDataSourceStat: true
        connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=200
```

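The startup class must carry the annotation

@EnableMultiDataSource is mandatory.

To switch data sources through the annotation plus AOP interception, the startup class must also carry @EnableAspectJAutoProxy(proxyTargetClass=true,exposeProxy = true).

Testing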

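Switching without the AOP interceptor

Everything above switches the data source through AOP: the interceptor catches a method annotated with @TargetDataSource and switches the data source before the method runs. This has one limitation: a single method cannot use more than one data source. In that case the data source has to be selected manually.

With manual selection the AOP class can be dropped, the startup class no longer needs @EnableAspectJAutoProxy(proxyTargetClass=true,exposeProxy = true), and methods no longer need the @TargetDataSource annotation.

All that is needed is this call before the mapper operation:

```java
DataSourceContextHolder.setDataSourceType("db1"); // switch the data source manually
```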
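A manually set key stays on the thread until it is cleared, and pooled threads are reused, so it is worth pairing every switch with a reset. One possible way to do that, sketched here in plain Java under the assumption that a try-with-resources scope suits the calling code, is a small `AutoCloseable` that restores the previous key on exit. `ScopedKey` and `ManualSwitchDemo` are hypothetical names; in the real module the body of each scope would hold the mapper calls and the holder would be `DataSourceContextHolder`.

```java
// Hypothetical scope object: sets a key on creation, restores the previous one on close.
final class ScopedKey implements AutoCloseable {
    private static final ThreadLocal<String> CURRENT = new ThreadLocal<>();
    private final String previous;

    private ScopedKey(String key) {
        this.previous = CURRENT.get();
        CURRENT.set(key);
    }

    static ScopedKey use(String key) { return new ScopedKey(key); }
    static String current() { return CURRENT.get(); }

    @Override
    public void close() {
        if (previous == null) {
            CURRENT.remove(); // nothing was set before: clear the slot
        } else {
            CURRENT.set(previous);
        }
    }
}

final class ManualSwitchDemo {
    // One method, two data sources: each scope selects its own key.
    static String[] readFromTwoSources() {
        String first;
        String second;
        try (ScopedKey ignored = ScopedKey.use("db1")) {
            first = ScopedKey.current();  // a mapper call against db1 would go here
        }
        try (ScopedKey ignored = ScopedKey.use("db2")) {
            second = ScopedKey.current(); // a mapper call against db2 would go here
        }
        return new String[] {first, second};
    }

    public static void main(String[] args) {
        System.out.println(String.join(", ", readFromTwoSources())); // prints "db1, db2"
        System.out.println(ScopedKey.current());                     // prints "null"
    }
}
```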