Kaggle: Handwritten Digit Recognition with a Simple CNN in PyTorch

Competition link

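I already tried this competition while learning about SVMs; PCA + SVM reached an accuracy of about 0.98. This time I'm trying a CNN. Submitting from my local machine would require a VPN, so I ran everything directly in a Kaggle kernel. Many experienced users share their code in kernels too, and there is a lot to learn from them.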

First, import a bunch of packages:

```python
import numpy as np  # linear algebra
import pandas as pd  # data processing, CSV file I/O (e.g. pd.read_csv)
import torch
import torch.nn as nn
import torch.utils.data as Data
import torchvision
from torch.autograd import Variable
import matplotlib.pyplot as plt
from torchvision import transforms
from torch.utils.data import Dataset, DataLoader
from sklearn.model_selection import train_test_split

# Input data files are available in the "../input/" directory.
# For example, running this (by clicking run or pressing Shift+Enter) will list the files in the input directory
import os
print(os.listdir("../input"))
```

Read the training and test sets, and split the training data further into a training set and a validation set:

```python
data = pd.read_csv('../input/train.csv')
test = pd.read_csv('../input/test.csv')
train, valid = train_test_split(data, stratify=data.label, test_size=0.2)
```
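Passing `stratify=data.label` asks `train_test_split` to keep each digit's share the same in the training and validation parts. The idea can be sketched in plain Python as below. `stratified_split` is a hypothetical helper written for illustration, not sklearn's implementation, which distributes rounding across classes differently. The toy labels are made up.

```python
import random
from collections import Counter, defaultdict

def stratified_split(labels, test_size=0.2, seed=0):
    """Hypothetical helper: split sample indices so each class keeps its proportion."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)          # group sample indices by class label
    train_idx, test_idx = [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)                # shuffle within each class
        n_test = round(len(idxs) * test_size)  # this class's share of the held-out set
        test_idx.extend(idxs[:n_test])
        train_idx.extend(idxs[n_test:])
    return sorted(train_idx), sorted(test_idx)

# Toy labels: an imbalanced 3-class problem (made up for illustration)
labels = [0] * 50 + [1] * 30 + [2] * 20
train_idx, valid_idx = stratified_split(labels, test_size=0.2)
print(Counter(labels[i] for i in valid_idx))  # each class contributes 20% of its samples
```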

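Next, define a custom dataset. Each image is stored as a single row of 784 pixel values, i.e. shape (1, 784). It has to be reshaped to (28, 28) and then given a channel dimension, which yields (1, 28, 28).

At first I left out that channel dimension, and later got errors about mismatched dimensions. Ordinary color images have three channels, (3, H, W), whereas the handwritten digit images have a single channel.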

```python
class MyDataset(Dataset):
    def __init__(self, df, transform, train=True):
        self.df = df.values  # DataFrame -> NumPy array
        self.transform = transform
        self.train = train

    def __len__(self):
        return len(self.df)

    def __getitem__(self, index):
        if self.train:  # training or validation set: column 0 is the label
            label = self.df[index, 0]
            pixels = self.df[index, 1:]
        else:  # test set: no label column
            pixels = self.df[index, :]
        # Keep pixels as uint8 (0-255): ToPILImage treats floating-point tensors as values
        # in [0, 1] and multiplies them by 255, which would corrupt raw 0-255 float pixels.
        image = torch.tensor(pixels, dtype=torch.uint8).view(28, 28).unsqueeze(0)  # (1, 28, 28)
        image = self.transform(image)
        if self.train:
            return image, label
        return image
```
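The `.view(28, 28).unsqueeze(0)` step in `__getitem__` can be illustrated with NumPy, which is assumed to be installed (the notebook already imports it). A flat row of 784 values is reshaped in row-major order, so pixel `k` lands at row `k // 28`, column `k % 28`. A leading axis of size 1 is then added for the channel. The fake row `np.arange(784)` stands in for a real CSV row.

```python
import numpy as np

# A fake flattened 28x28 image standing in for one row of pixel values
row = np.arange(784)

image = row.reshape(28, 28)      # (28, 28): pixel k -> (k // 28, k % 28)
image = image[np.newaxis, :, :]  # (1, 28, 28): channel axis in front, like unsqueeze(0)
print(image.shape)
```

`nn.Conv2d` expects batches shaped (N, C, H, W). The `DataLoader` adds N, so each sample only needs to be (C, H, W).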

Set the hyperparameters and load the data:

```python
EPOCH = 30  # number of passes over the whole training set
BATCH_SIZE = 64
LR = 0.002  # learning rate

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

tranf = transforms.Compose([
    transforms.ToPILImage(),
    transforms.ToTensor(),  # PIL image -> float tensor; 8-bit images are scaled to [0, 1]
    transforms.Normalize(mean=(0.5,), std=(0.5,)),  # (x - 0.5) / 0.5: maps [0, 1] to [-1, 1]
])

train_dataset = MyDataset(df=train, transform=tranf)
train_dataloader = DataLoader(train_dataset, batch_size=BATCH_SIZE, shuffle=True)
valid_dataset = MyDataset(df=valid, transform=tranf)
valid_dataloader = DataLoader(valid_dataset, batch_size=BATCH_SIZE)
```
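With `mean=(0.5,)` and `std=(0.5,)`, `Normalize` computes `(x - 0.5) / 0.5` on the output of `ToTensor`, which scales 8-bit images to [0, 1]. The combined effect maps pixel values into [-1, 1]. Below is a minimal per-pixel sketch in plain Python; the function name is hypothetical.

```python
def normalize_pixel(p, mean=0.5, std=0.5):
    """Hypothetical helper: ToTensor + Normalize applied to one 8-bit pixel value p in [0, 255]."""
    x = p / 255.0            # ToTensor scales 8-bit images to [0.0, 1.0]
    return (x - mean) / std  # Normalize: (x - mean) / std, per channel

for p in (0, 128, 255):
    print(p, round(normalize_pixel(p), 4))
```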

Build the network:

```python
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv1 = nn.Sequential(  # input: (1, 28, 28)
            nn.Conv2d(
                in_channels=1,    # number of input channels (3 for an RGB image)
                out_channels=32,  # number of output channels, i.e. how many kernels are applied
                kernel_size=3,    # kernel size
                stride=1,         # step size of the kernel
                padding=1,        # pixels added around the border so height and width stay the same
            ),  # -> (32, 28, 28)
            nn.BatchNorm2d(32),
            nn.ReLU(inplace=True),
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(32, 32, 3, 1, 1),   # -> (32, 28, 28)
            nn.BatchNorm2d(32),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2),  # pooling -> (32, 14, 14)
        )
        self.conv3 = nn.Sequential(  # input: (32, 14, 14)
            nn.Conv2d(32, 64, 3, 1, 1),  # -> (64, 14, 14)
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
        )
        self.conv4 = nn.Sequential(
            nn.Conv2d(64, 64, 3, 1, 1),  # -> (64, 14, 14)
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2),             # -> (64, 7, 7)
        )
        self.out = nn.Sequential(
            nn.Dropout(p=0.5),  # reduces overfitting
            nn.Linear(64 * 7 * 7, 512),
            nn.BatchNorm1d(512),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(512, 512),
            nn.BatchNorm1d(512),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(512, 10),
        )

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = x.view(x.size(0), -1)  # flatten to (batch_size, 64*7*7)
        output = self.out(x)
        return output
```
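The shape comments in the network follow from the standard output-size formula for convolution and pooling with dilation 1: out = floor((in + 2*padding - kernel) / stride) + 1. `nn.MaxPool2d(2)` uses stride = kernel size by default.

The sketch below traces the spatial size and also counts trainable parameters layer by layer. It counts conv and linear layers with biases (the PyTorch default) plus the two affine parameters per batch-norm channel. The helper names are hypothetical. If the arithmetic is right, the total should agree with `sum(p.numel() for p in cnn.parameters())`.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of Conv2d / MaxPool2d with dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28
size = conv_out(size, 3, 1, 1)  # conv1: 28 -> 28
size = conv_out(size, 3, 1, 1)  # conv2: 28 -> 28
size = conv_out(size, 2, 2)     # MaxPool2d(2): 28 -> 14
size = conv_out(size, 3, 1, 1)  # conv3: 14 -> 14
size = conv_out(size, 3, 1, 1)  # conv4: 14 -> 14
size = conv_out(size, 2, 2)     # MaxPool2d(2): 14 -> 7
print(size, 64 * size * size)   # in_features of the first nn.Linear

def conv_params(c_in, c_out, k):
    return c_in * c_out * k * k + c_out  # weights + biases

def linear_params(n_in, n_out):
    return n_in * n_out + n_out          # weights + biases

def bn_params(c):
    return 2 * c                         # learnable gamma and beta (running stats are buffers)

total = (conv_params(1, 32, 3) + bn_params(32)
         + conv_params(32, 32, 3) + bn_params(32)
         + conv_params(32, 64, 3) + bn_params(64)
         + conv_params(64, 64, 3) + bn_params(64)
         + linear_params(64 * 7 * 7, 512) + bn_params(512)
         + linear_params(512, 512) + bn_params(512)
         + linear_params(512, 10))
print(total)
```

About 1.6 million of the parameters sit in the first linear layer. The fully connected head, not the convolutions, dominates the model size.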

Training:

```python
cnn = CNN().to(device)
print(cnn)

# Training
optimizer = torch.optim.Adam(cnn.parameters(), lr=LR)
loss_func = nn.CrossEntropyLoss()

for epoch in range(EPOCH):
    cnn.train()  # dropout and batch norm in training mode (matters if eval() was called earlier)
    for step, (x, y) in enumerate(train_dataloader):
        b_x = x.to(device)
        b_y = y.to(device)
        output = cnn(b_x)
        loss = loss_func(output, b_y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        if step % 100 == 0:
            print('epoch:[{}/{}], loss:{:.4f}'.format(epoch + 1, EPOCH, loss.item()))
```
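`nn.CrossEntropyLoss` takes raw logits and applies log-softmax internally. That is why `self.out` ends with `nn.Linear(512, 10)` and no softmax. By default, the loss averages the per-sample values over the batch. Below is a plain-Python sketch of the per-sample loss; the `cross_entropy` helper is hypothetical.

```python
import math

def cross_entropy(logits, target):
    """Loss for one sample: -log(softmax(logits)[target]), computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

print(cross_entropy([2.0, 0.5, -1.0], 0))  # correct class has the largest logit -> small loss
print(cross_entropy([2.0, 0.5, -1.0], 2))  # wrong class -> larger loss
print(cross_entropy([0.0] * 10, 3))        # uniform over 10 digits -> log(10), about 2.30
```

The last line also gives a useful sanity check. An untrained 10-class network with near-uniform outputs should start with a loss of roughly log(10), about 2.30.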

Validation:

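```python
cnn.eval()  # inference mode: dropout off, batch norm uses running statistics
with torch.no_grad():
    total = 0
    cor = 0
    for x, y in valid_dataloader:
        x = x.to(device)
        y = y.to(device)
        out = cnn(x)
        pred = torch.max(out, 1)[1]  # index of the largest logit = predicted digit
        total += len(y)
        cor += (y == pred).sum().item()
print('acc:{:.4f}'.format(cor / total))
```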
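`torch.max(out, 1)` returns a pair (max values, indices) along dimension 1. Taking `[1]` therefore picks the index of the largest logit in each row, which is the predicted digit; `out.argmax(dim=1)` gives the same indices. Accuracy is then the number of correct predictions divided by the number of samples. Here is the same computation in plain Python, on made-up logits:

```python
def argmax(row):
    """Index of the largest value, like torch.max(out, 1)[1] for a single row."""
    return max(range(len(row)), key=row.__getitem__)

def accuracy(logits, labels):
    correct = sum(argmax(row) == y for row, y in zip(logits, labels))
    return correct / len(labels)

# Made-up logits for a batch of 4 samples over 3 classes
logits = [[0.1, 2.0, -1.0],
          [1.5, 0.2, 0.3],
          [0.0, 0.1, 0.9],
          [2.2, 2.1, 0.0]]
labels = [1, 0, 1, 0]
print(accuracy(logits, labels))  # 3 of 4 correct -> 0.75
```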

Predict on the test set and write the submission file:

```python
test_dataset = MyDataset(df=test, transform=tranf, train=False)
test_dataloader = DataLoader(test_dataset, batch_size=BATCH_SIZE)

cnn.eval()
pred = []
with torch.no_grad():  # no gradients needed for inference
    for x in test_dataloader:
        x = x.to(device)
        out = cnn(x)
        pre = torch.max(out, 1)[1].cpu().numpy()
        pred += list(pre)

submit = pd.read_csv('../input/sample_submission.csv')
submit['Label'] = pred
submit.to_csv('submission_cnn99.csv', index=False)
```
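The code above copies the `ImageId` column from `sample_submission.csv` and overwrites `Label`. The same two-column file could also be produced without reading the sample. This assumes, as in that file, that ids start at 1 and follow the row order of `test.csv`. `submission_csv` is a hypothetical helper that uses only the standard library:

```python
import csv
import io

def submission_csv(preds):
    """Hypothetical helper: header ImageId,Label, one row per prediction, ids starting at 1."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["ImageId", "Label"])
    for image_id, label in enumerate(preds, start=1):
        writer.writerow([image_id, int(label)])  # int() also accepts NumPy integers
    return buf.getvalue()

print(submission_csv([2, 0, 9]))
```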

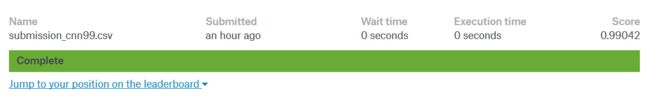

Score:

Summary:

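I'm just getting started and ran into quite a few problems, especially with the dataset part. When building the network, I first used only two layers, and the result was worse than the SVM. Only after studying many kernels by experienced users from abroad did the accuracy improve somewhat; it feels a lot like alchemy. Next, the training set could be enlarged by shifting and rotating the images to push the accuracy higher. Onward!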
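The translation augmentation mentioned above could look like the NumPy sketch below; `shift_image` is a hypothetical helper. It shifts a 28×28 digit by a few pixels and fills the vacated border with zeros, which is the background value in this dataset. For rotations, or for random shifts applied on the fly, torchvision's `transforms.RandomRotation` and `transforms.RandomAffine` operate on PIL images. They could be placed after `ToPILImage()` in a training-only transform, while the validation and test sets keep the plain transform.

```python
import numpy as np

def shift_image(img, dx, dy):
    """Translate a 2-D image by dx columns (right) and dy rows (down), filling with zeros.

    Unlike np.roll, pixels pushed off one edge do not wrap around to the other.
    """
    h, w = img.shape
    out = np.zeros_like(img)
    if abs(dx) >= w or abs(dy) >= h:
        return out  # shifted completely out of frame
    # source and destination slices of the overlapping region
    src_rows = slice(max(0, -dy), min(h, h - dy))
    dst_rows = slice(max(0, dy), min(h, h + dy))
    src_cols = slice(max(0, -dx), min(w, w - dx))
    dst_cols = slice(max(0, dx), min(w, w + dx))
    out[dst_rows, dst_cols] = img[src_rows, src_cols]
    return out

# Toy "digit": a single bright pixel at row 10, column 12
digit = np.zeros((28, 28), dtype=np.uint8)
digit[10, 12] = 255
moved = shift_image(digit, dx=2, dy=-1)  # the pixel moves to row 9, column 14
print(np.argwhere(moved))
```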