Learning Filebeat with elasticsearch-6.5.4

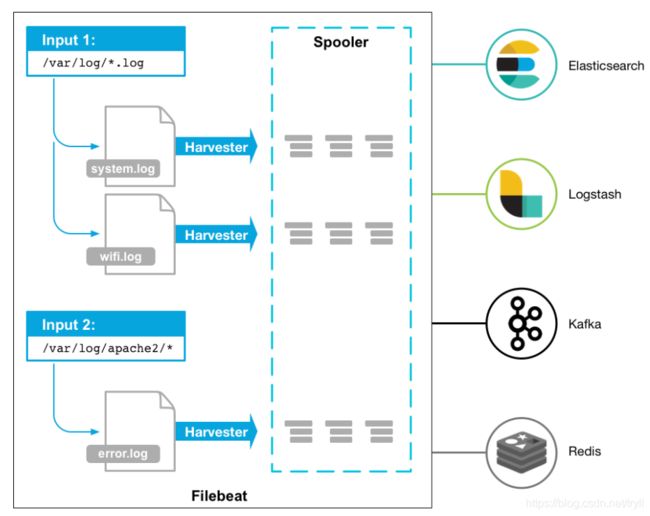

1.1 Architecture

1.2 Deployment and Running

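```shell
mkdir /itcast/beats
tar -xvf filebeat-6.5.4-linux-x86_64.tar.gz
cd filebeat-6.5.4-linux-x86_64
```

Create the following configuration file, itcast.yml:

```yaml
filebeat.inputs:
- type: stdin        # read events from standard input
  enabled: true
setup.template.settings:
  index.number_of_shards: 3
output.console:      # print events to the console
  pretty: true
  enabled: true
```

Start Filebeat (-e sends Filebeat's own log output to stderr, -c selects the configuration file):

```shell
./filebeat -e -c itcast.yml
```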

Type hello and press Enter. Filebeat prints the following event:

```
{
  "@timestamp": "2019-01-12T12:50:03.585Z",
  "@metadata": {          # metadata
    "beat": "filebeat",
    "type": "doc",
    "version": "6.5.4"
  },
  "source": "",
  "offset": 0,
  "message": "hello",     # the text that was typed
  "prospector": {         # the stdin prospector
    "type": "stdin"
  },
  "input": {              # console standard input
    "type": "stdin"
  },
  "beat": {               # Beat version and host information
    "name": "itcast01",
    "hostname": "itcast01",
    "version": "6.5.4"
  },
  "host": {
    "name": "itcast01"
  }
}
```
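For illustration only, the Python sketch below (not Filebeat code) mimics what the stdin input and console output do with each line of input: wrap the text in an event and pretty-print it as JSON. The names make_event and run are hypothetical, and only a subset of the fields in the sample above is reproduced.

```python
import json
import socket
import sys
from datetime import datetime, timezone

def make_event(line, hostname, now=None):
    """Wrap one line of input in an event shaped like the sample (subset of fields)."""
    if now is None:
        now = datetime.now(timezone.utc)
    # @timestamp is UTC with millisecond precision and a trailing "Z"
    ts = now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"
    return {
        "@timestamp": ts,
        "message": line.rstrip("\r\n"),  # the typed text, without the newline
        "input": {"type": "stdin"},
        "beat": {"name": hostname, "hostname": hostname, "version": "6.5.4"},
        "host": {"name": hostname},
    }

def run(stream=sys.stdin, out=sys.stdout):
    """Print one pretty JSON event per input line, like output.console with pretty: true."""
    hostname = socket.gethostname()
    for line in stream:
        print(json.dumps(make_event(line, hostname), indent=2), file=out)
```

Calling run() and typing hello prints an event whose message field is "hello", as in the Filebeat output above.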

1.3 Reading Files

Configure a file input in itcast-log.yml:

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /itcast/beats/logs/*.log
setup.template.settings:
  index.number_of_shards: 3
output.console:
  pretty: true
  enabled: true
```

Start Filebeat:

```shell
./filebeat -e -c itcast-log.yml
```

Create a.log in /itcast/beats/logs with the following content:

```
hello
world
```

Watch the Filebeat output; each line becomes one event:

```
{
  "@timestamp": "2019-01-12T14:16:10.192Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "doc",
    "version": "6.5.4"
  },
  "host": {
    "name": "itcast01"
  },
  "source": "/itcast/beats/logs/a.log",
  "offset": 0,
  "message": "hello",
  "prospector": {
    "type": "log"
  },
  "input": {
    "type": "log"
  },
  "beat": {
    "version": "6.5.4",
    "name": "itcast01",
    "hostname": "itcast01"
  }
}
{
  "@timestamp": "2019-01-12T14:16:10.192Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "doc",
    "version": "6.5.4"
  },
  "prospector": {
    "type": "log"
  },
  "input": {
    "type": "log"
  },
  "beat": {
    "version": "6.5.4",
    "name": "itcast01",
    "hostname": "itcast01"
  },
  "host": {
    "name": "itcast01"
  },
  "source": "/itcast/beats/logs/a.log",
  "offset": 6,
  "message": "world"
}
```

As you can see, when Filebeat detects that a log file has changed, it reads the newly appended content and prints it to the console.
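The offset values above are byte positions: "hello\n" is 6 bytes long, so the second line starts at offset 6. The Python sketch below is a simplified illustration, not Filebeat's implementation, of picking up appended lines by remembering that byte offset and polling the file. The names poll and follow and the one-second interval are assumptions.

```python
import time

def poll(path, offset):
    """Read the complete lines appended since byte `offset`.

    Returns ([(line_offset, text), ...], new_offset), where line_offset is the
    byte position at which each line starts, like the "offset" field above.
    """
    events = []
    with open(path, "rb") as f:
        f.seek(offset)
        for raw in f:
            if not raw.endswith(b"\n"):
                break  # partial line: leave it until the writer finishes it
            events.append((offset, raw.rstrip(b"\r\n").decode("utf-8")))
            offset += len(raw)
    return events, offset

def follow(path, interval=1.0):
    """Poll `path` forever and print each new line as it appears."""
    offset = 0
    while True:
        events, offset = poll(path, offset)
        for line_offset, text in events:
            print(line_offset, text)
        time.sleep(interval)
```

Writing "hello\nworld\n" and calling poll(path, 0) yields (0, "hello") and (6, "world") with a new offset of 12; the next poll starts at byte 12 and returns only what was appended since.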

1.4 Custom Fields

Configure the file input in itcast-log.yml:

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /itcast/beats/logs/*.log
  tags: ["web"]             # custom tag, to simplify later processing
  fields:                   # custom fields
    from: itcast-im
  fields_under_root: true   # true: add the fields at the event root; false: nest them under "fields"
setup.template.settings:
  index.number_of_shards: 3
output.console:
  pretty: true
  enabled: true
```

Start Filebeat:

```shell
./filebeat -e -c itcast-log.yml
```

Create a.log in /itcast/beats/logs with the following content:

```
123
```

Output:

```json
{
  "@timestamp": "2019-01-12T14:37:19.845Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "doc",
    "version": "6.5.4"
  },
  "offset": 0,
  "tags": ["web"],
  "prospector": {
    "type": "log"
  },
  "beat": {
    "name": "itcast01",
    "hostname": "itcast01",
    "version": "6.5.4"
  },
  "host": {
    "name": "itcast01"
  },
  "source": "/itcast/beats/logs/a.log",
  "message": "123",
  "input": {
    "type": "log"
  },
  "from": "itcast-im"
}
```
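With fields_under_root: true the from field lands at the top level of the event, as in the output above; with false it would appear as fields.from instead. The Python sketch below illustrates that merge rule; apply_custom_fields is a hypothetical helper, not part of Filebeat. As I understand the Filebeat documentation, custom fields placed at the root overwrite Filebeat's own fields of the same name, which the sketch reproduces.

```python
import copy

def apply_custom_fields(event, fields, tags=(), fields_under_root=False):
    """Return a copy of `event` with an input's custom tags and fields added.

    fields_under_root=True  -> custom keys go to the event root and overwrite
                               same-named keys already there
    fields_under_root=False -> custom keys are nested under "fields"
    """
    out = copy.deepcopy(event)  # never mutate the caller's event
    if tags:
        out["tags"] = out.get("tags", []) + list(tags)
    if fields_under_root:
        out.update(fields)
    else:
        out["fields"] = dict(fields)
    return out
```

For example, apply_custom_fields({"message": "123"}, {"from": "itcast-im"}, ["web"], fields_under_root=False) returns an event containing "fields": {"from": "itcast-im"} rather than a top-level "from".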

1.5 Output to Elasticsearch

```yaml
# itcast-log.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /itcast/beats/logs/*.log
  tags: ["haoke-im"]
  fields:
    from: haoke-im
  fields_under_root: false
setup.template.settings:
  index.number_of_shards: 3   # number of primary shards for the index
output.elasticsearch:         # Elasticsearch output settings
  hosts: ["172.16.0.37","172.16.0.38","172.16.0.39"]
```
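Filebeat ships events to Elasticsearch in batches through the Bulk API, and hosts given without a port, as above, use the default port 9200. As far as I know, Filebeat 6.x writes by default to one index per day following the pattern filebeat-%{[beat.version]}-%{+yyyy.MM.dd}, so the events from 1.3 would land in an index such as filebeat-6.5.4-2019.01.12. The Python sketch below only builds such a bulk request body for illustration; daily_index and bulk_body are hypothetical helpers, and the "doc" type is taken from @metadata.type in the sample events.

```python
import json
from datetime import datetime, timezone

def daily_index(beat_version, ts):
    """Index name following the 6.x default pattern filebeat-<version>-<yyyy.MM.dd>."""
    return f"filebeat-{beat_version}-{ts:%Y.%m.%d}"

def bulk_body(events, beat_version="6.5.4"):
    """Build an NDJSON body for POST /_bulk: an action line plus a source line per event."""
    lines = []
    for event in events:
        # @timestamp is UTC, e.g. 2019-01-12T14:16:10.192Z
        ts = datetime.strptime(event["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
        ts = ts.replace(tzinfo=timezone.utc)
        action = {"index": {"_index": daily_index(beat_version, ts), "_type": "doc"}}
        lines.append(json.dumps(action))
        lines.append(json.dumps(event))
    return "\n".join(lines) + "\n"  # the Bulk API requires a trailing newline
```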

1.6 How Filebeat Works

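Filebeat consists of two main components: prospectors and harvesters.

- Harvester:
  - Reads the content of a single file, line by line.
  - If the file is renamed or deleted while it is being read, Filebeat keeps reading it.
- Prospector (renamed "input" in Filebeat 6.3, which is why the configurations above use filebeat.inputs):
  - Manages the harvesters and finds all the file sources that need to be read.
  - If the input type is log, the prospector finds every file matching the configured paths and starts one harvester per file.
  - This article uses two prospector types, log and stdin; Filebeat 6.x supports other input types as well.
- How Filebeat keeps track of file state:
  - Filebeat saves the state of each file and frequently flushes it to the registry file on disk (data/registry).
  - The state records the offset up to which the harvester has read, which ensures that every log line is sent.
  - If the output is unreachable, Filebeat remembers the last position it sent and continues reading the file once the output is available again.
  - While Filebeat is running, each prospector also keeps the file state in memory. When Filebeat restarts, it rebuilds the file state from the registry file, and each harvester resumes reading from the last saved offset.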
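The Python sketch below ties these pieces together under simplifying assumptions: run_once plays the prospector (globbing the configured path), harvest plays a harvester, and the registry is a JSON file mapping path to offset that is advanced only after the output accepts the lines. Real Filebeat keeps more state per file; its registry identifies files by inode and device rather than by path, which is what lets it keep following renamed files. Every name here is hypothetical.

```python
import glob
import json
import os

def load_registry(path):
    """Return the saved {file path: offset} map, or {} on first start."""
    if not os.path.exists(path):
        return {}
    with open(path) as f:
        return json.load(f)

def save_registry(path, state):
    """Flush the state to disk: write a temp file, then atomically replace."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)

def harvest(path, offset):
    """Harvester: read the complete lines of one file starting at byte `offset`."""
    lines = []
    with open(path, "rb") as f:
        f.seek(offset)
        for raw in f:
            if not raw.endswith(b"\n"):
                break  # unfinished last line; pick it up on a later run
            lines.append(raw.rstrip(b"\r\n").decode("utf-8"))
            offset += len(raw)
    return lines, offset

def run_once(pattern, registry_path, send):
    """Prospector: start one harvester per file matching `pattern`.

    `send(path, lines)` stands in for the output and returns True on success.
    An offset is committed only after a successful send, so lines are re-read
    (not lost) while the output is unreachable.
    """
    state = load_registry(registry_path)  # after a restart, resume from here
    for path in sorted(glob.glob(pattern)):
        lines, new_offset = harvest(path, state.get(path, 0))
        if lines and send(path, lines):
            state[path] = new_offset
    save_registry(registry_path, state)
    return state
```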

1.7 Reading Nginx Log Files

```yaml
# itcast-nginx.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /usr/local/nginx/logs/*.log
  tags: ["nginx"]
setup.template.settings:
  index.number_of_shards: 3   # number of primary shards for the index
output.elasticsearch:         # Elasticsearch output settings
  hosts: ["192.168.40.133:9200","192.168.40.134:9200","192.168.40.135:9200"]
```

Start Filebeat:

```shell
./filebeat -e -c itcast-nginx.yml
```

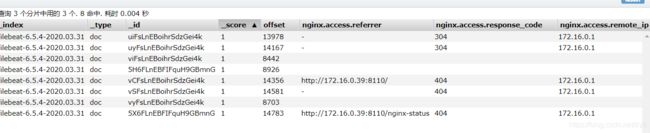

After startup, the index appears in Elasticsearch and its data can be browsed:

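As you can see, the message field already contains the nginx log line, but the content has not been processed at all; it is just the raw line, which makes later analysis difficult. Is there a way around this?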
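To make "processing" concrete: an nginx access line in the default combined log format can be split into structured fields with a regular expression. The Python sketch below is only an illustration; it assumes the combined format, and parse_access_line and its field names are hypothetical. The Filebeat nginx module does the equivalent parsing inside an Elasticsearch ingest pipeline.

```python
import re

# nginx "combined" format, the default for access.log:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED = re.compile(
    r'(?P<remote_ip>\S+) - (?P<user_name>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<http_version>[^"]+)" '
    r'(?P<response_code>\d{3}) (?P<body_bytes>\d+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

def parse_access_line(line):
    """Return a dict of structured fields, or None if the line does not match."""
    m = COMBINED.match(line)
    if m is None:
        return None
    fields = m.groupdict()
    fields["response_code"] = int(fields["response_code"])  # numeric, so it can be aggregated
    fields["body_bytes"] = int(fields["body_bytes"])
    return fields
```

For a made-up line such as 192.168.40.1 - - [12/Jan/2019:22:30:01 +0800] "GET /index.html HTTP/1.1" 200 612 "-" "Mozilla/5.0", the function returns remote_ip "192.168.40.1", method "GET", url "/index.html" and response_code 200.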

1.8 Modules

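So far, reading and processing the log data has been configured entirely by hand. Filebeat, however, ships with many modules that simplify configuration and can be used directly. To list them: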

```
./filebeat modules list
Enabled:

Disabled:
apache2
auditd
elasticsearch
haproxy
icinga
iis
kafka
kibana
logstash
mongodb
mysql
nginx
osquery
postgresql
redis
suricata
system
traefik
```

As you can see, many modules are built in, but none is enabled. To use a module, enable it:

```shell
./filebeat modules enable nginx    # enable
./filebeat modules disable nginx   # disable
```

After nginx has been enabled, the module list shows:

```
Enabled:
nginx

Disabled:
apache2
auditd
elasticsearch
haproxy
icinga
iis
kafka
kibana
logstash
mongodb
mysql
redis
osquery
postgresql
suricata
system
traefik
```
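As far as I know, each module ships as a file in Filebeat's modules.d directory, and a disabled module's file carries an extra .disabled suffix (for example nginx.yml.disabled); modules enable and modules disable simply rename that file. Since filebeat.config.modules loads only modules.d/*.yml, only enabled modules are picked up. The Python sketch below imitates that bookkeeping; list_modules and set_module_enabled are hypothetical names, not Filebeat functions.

```python
from pathlib import Path

def list_modules(modules_d):
    """Return (enabled, disabled) module names, like `filebeat modules list`."""
    d = Path(modules_d)
    # "*.yml" does not match "nginx.yml.disabled", just like the modules.d/*.yml glob
    enabled = sorted(p.name[: -len(".yml")] for p in d.glob("*.yml"))
    disabled = sorted(p.name[: -len(".yml.disabled")] for p in d.glob("*.yml.disabled"))
    return enabled, disabled

def set_module_enabled(modules_d, name, enabled):
    """Mimic `filebeat modules enable|disable NAME` by renaming the module file."""
    d = Path(modules_d)
    on, off = d / f"{name}.yml", d / f"{name}.yml.disabled"
    src, dst = (off, on) if enabled else (on, off)
    if src.exists():
        src.rename(dst)
```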

1.9 Configuring the nginx Module

Edit nginx.yml in the modules.d directory:

```shell
vim modules.d/nginx.yml
```

```yaml
- module: nginx
  # Access logs
  access:
    enabled: true
    var.paths: ["/usr/local/nginx/logs/access.log*"]

  # Error logs
  error:
    enabled: true
    var.paths: ["/usr/local/nginx/logs/error.log*"]
```

Then configure Filebeat:

```yaml
# vim itcast-nginx.yml
filebeat.inputs:
# the manual log input from 1.7 is commented out; the nginx module takes over
#- type: log
#  enabled: true
#  paths:
#    - /usr/local/nginx/logs/*.log
#  tags: ["nginx"]
setup.template.settings:
  index.number_of_shards: 3
output.elasticsearch:
  hosts: ["192.168.40.133:9200","192.168.40.134:9200","192.168.40.135:9200"]
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml   # load the enabled module files
  reload.enabled: false                  # do not reload module configs while running
```

1.10 Testing

```shell
./filebeat -e -c itcast-nginx.yml
```

Startup fails with the following error:

```
ERROR fileset/factory.go:142 Error loading pipeline: Error loading pipeline for fileset nginx/access: This module requires the following Elasticsearch plugins: ingest-user-agent, ingest-geoip. You can install them by running the following commands on all the Elasticsearch nodes:

    sudo bin/elasticsearch-plugin install ingest-user-agent
    sudo bin/elasticsearch-plugin install ingest-geoip
```

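Solution: install the ingest-user-agent and ingest-geoip plugins in Elasticsearch, either with the elasticsearch-plugin commands from the error message or offline from the course materials, which contain three files: ingest-user-agent.tar, ingest-geoip.tar and ingest-geoip-conf.tar.

- Extract ingest-user-agent.tar and ingest-geoip.tar into the Elasticsearch plugins directory.
- Extract ingest-geoip-conf.tar into the Elasticsearch config directory.

Do this on every Elasticsearch node and restart the nodes; after that, the error no longer occurs.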
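To confirm that every node really has both plugins, the cluster can be asked through the cat APIs: _cat/nodes?format=json&h=name lists the node names, and _cat/plugins?format=json returns one row per installed plugin, with the node in name and the plugin in component. The Python sketch below compares the two; the helper names are hypothetical, and the node list is fetched separately because a node with no plugins does not appear in _cat/plugins at all.

```python
import json
from urllib.request import urlopen

# Plugins required by the nginx module's ingest pipeline (from the error above).
REQUIRED = {"ingest-user-agent", "ingest-geoip"}

def get_json(es_url, path):
    """GET an Elasticsearch endpoint and decode the JSON response."""
    with urlopen(es_url.rstrip("/") + path) as resp:
        return json.load(resp)

def missing_plugins(node_rows, plugin_rows):
    """Map node name -> set of required plugins that node lacks (empty dict if none)."""
    installed = {row["name"]: set() for row in node_rows}
    for row in plugin_rows:
        installed.setdefault(row["name"], set()).add(row["component"])
    missing = {}
    for node, components in installed.items():
        lacking = REQUIRED - components
        if lacking:
            missing[node] = lacking
    return missing

def check(es_url):
    nodes = get_json(es_url, "/_cat/nodes?format=json&h=name")
    plugins = get_json(es_url, "/_cat/plugins?format=json")
    return missing_plugins(nodes, plugins)
```

For example, check("http://192.168.40.133:9200") should return an empty dict once every node has both plugins installed.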

The three packages mentioned above can be downloaded here: https://pan.baidu.com/s/13vRIHmV_AkoseEM72kHIbw (extraction code: jih9)