Common Linux Commands

1. Symbolic links (ln)

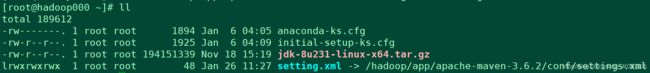

[root@hadoop000 ~]# ln -s /hadoop/app/apache-maven-3.6.2/conf/settings.xml setting.xml

[root@hadoop000 ~]# ll

total 189612

-rw-------. 1 root root 1894 Jan 6 04:05 anaconda-ks.cfg

-rw-r--r--. 1 root root 1925 Jan 6 04:09 initial-setup-ks.cfg

-rw-r--r--. 1 root root 194151339 Nov 18 15:19 jdk-8u231-linux-x64.tar.gz

lrwxrwxrwx 1 root root 48 Jan 26 11:27 setting.xml -> /hadoop/app/apache-maven-3.6.2/conf/settings.xml
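The session above can be reproduced safely in a scratch directory. The following is a minimal sketch, assuming a throwaway directory from mktemp and a placeholder settings.xml; neither the paths nor the file content come from the original host.

```shell
#!/bin/sh
# Work in a throwaway directory (hypothetical paths, not the real Maven install).
workdir=$(mktemp -d)
mkdir -p "$workdir/conf"
echo '<settings/>' > "$workdir/conf/settings.xml"

# ln -s TARGET LINK_NAME creates a symbolic link named LINK_NAME pointing at TARGET.
ln -s "$workdir/conf/settings.xml" "$workdir/setting.xml"

# readlink prints the path stored inside the link.
link_target=$(readlink "$workdir/setting.xml")
echo "setting.xml -> $link_target"

# Reading through the link returns the content of the target file.
link_content=$(cat "$workdir/setting.xml")
echo "$link_content"

rm -rf "$workdir"
```

Using an absolute target path, as the original command does, keeps the link valid even if the link itself is later moved to another directory.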

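2. File search command (find)

Execute permission: all users

Syntax: find [search path] [match conditions]

Description: search for files

2.1 -name: search by file name

find /etc/ -name init    Find files named init under /etc/

-iname: same as -name, but case-insensitive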

[root@hadoop000 ~]# find /etc/ -name init

/etc/selinux/targeted/active/modules/100/init

/etc/sysconfig/init

[root@hadoop000 ~]# find /etc/ -iname init

/etc/selinux/targeted/active/modules/100/init

/etc/sysconfig/init

/etc/gdm/Init
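The difference between -name and -iname can be checked in a scratch directory. This sketch assumes made-up file names (init, Init, initrd) that only mimic the /etc layout shown above; the wildcard form is an addition for illustration.

```shell
#!/bin/sh
# Scratch tree with hypothetical names that mimic the /etc example.
workdir=$(mktemp -d)
mkdir -p "$workdir/sysconfig" "$workdir/gdm"
touch "$workdir/sysconfig/init" "$workdir/gdm/Init" "$workdir/gdm/initrd"

# -name is case-sensitive and must match the whole file name.
exact=$(find "$workdir" -name init | sort)

# -iname ignores case, so Init is matched as well.
nocase=$(find "$workdir" -iname init | sort)

# Shell-style wildcards are allowed; quote them so the shell does not expand them first.
prefix=$(find "$workdir" -name 'init*' | sort)

echo "exact:";  echo "$exact"
echo "nocase:"; echo "$nocase"
echo "prefix:"; echo "$prefix"

rm -rf "$workdir"
```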

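2.2 -size: search by file size

find / -size +102400    Find files larger than 50 MB under the root directory. Without a unit suffix, -size counts in 512-byte blocks (0.5 KB), so 50 MB = 50 * 1024 KB * 2 = 102400 blocks.

+n: larger than n    -n: smaller than n    n: exactly n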

[root@hadoop000 ~]# find / -size +102400

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.1293

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.13a4

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.14a4

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.158b

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.1668

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.1775

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.177c

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.191a

/hadoop/app/zookeeper-3.4.14/logs/version-2/log.1a09

[root@hadoop000 ~]# ls -lh /hadoop/app/zookeeper-3.4.14/logs/version-2/

total 748K

-rw-rw-r--. 1 hadoop hadoop 65M Jan 6 18:26 log.1

-rw-rw-r-- 1 hadoop hadoop 65M Jan 7 06:07 log.1293

-rw-rw-r-- 1 hadoop hadoop 65M Jan 7 10:58 log.13a4

-rw-rw-r-- 1 hadoop hadoop 65M Jan 7 21:11 log.14a4

-rw-rw-r-- 1 hadoop hadoop 65M Jan 7 22:21 log.158b

-rw-rw-r-- 1 hadoop hadoop 65M Jan 12 10:10 log.1668

-rw-rw-r-- 1 hadoop hadoop 65M Jan 17 11:04 log.1775

-rw-rw-r-- 1 hadoop hadoop 65M Jan 19 14:18 log.177c

-rw-rw-r-- 1 hadoop hadoop 65M Jan 19 17:13 log.191a

-rw-rw-r-- 1 hadoop hadoop 65M Jan 21 16:39 log.1a09

-rw-rw-r-- 1 hadoop hadoop 65M Jan 22 14:51 log.1adb
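The block arithmetic can be verified with a small experiment. This is a sketch under stated assumptions: GNU coreutils truncate is available for creating sparse test files, and the file names are invented. The M suffix of find is shown only as an equivalent spelling of the same threshold.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# 50 MB expressed in 512-byte blocks: 50 * 1024 KB * 2 blocks per KB.
blocks=$((50 * 1024 * 2))
echo "$blocks"   # prints 102400

# Sparse files: they report the requested size but take almost no disk space.
truncate -s 65M "$workdir/big.log"
truncate -s 10M "$workdir/small.log"

# Threshold given in 512-byte blocks (the default unit).
by_blocks=$(find "$workdir" -type f -size +"$blocks")

# The same threshold written with the M (mebibyte) suffix.
by_suffix=$(find "$workdir" -type f -size +50M)

echo "$by_blocks"
echo "$by_suffix"

rm -rf "$workdir"
```

Both searches should report only big.log. This also matches the transcript above, where the 65M ZooKeeper logs are found even though the directory total is small: find compares the file's reported size, not the disk space it occupies.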

2.3 -user: search by file owner

find /hadoop -user hadoop    Find files under /hadoop that are owned by the user hadoop

[root@hadoop000 ~]# find /hadoop -user hadoop

/hadoop/spark-works/etl/emp/logs/EmpCol-2019061810.log

/hadoop/spark-works/etl/emp/logs/EmpCol-2019061811.log

/hadoop/spark-works/etl/emp/logs/EmpCol-2019061815.log

/hadoop/spark-works/etl/emp/lib

/hadoop/spark-works/etl/emp/lib/ruoze222-1.0-SNAPSHOT.jar

/hadoop/spark-works/etl/emp/lib/hadoop-hdfs-2.6.0-cdh5.16.1.jar

/hadoop/spark-works/etl/emp/lib/line-1.0.jar

/hadoop/.bashrc

/hadoop/.viminfo
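An owner search can be tried without a dedicated hadoop account by searching for files owned by the current user. This sketch assumes invented file names and uses id to look up the user; it falls back to the numeric user ID, which -user also accepts.

```shell
#!/bin/sh
workdir=$(mktemp -d)
touch "$workdir/a.log" "$workdir/b.log"

# Name of the current user; fall back to the numeric ID if the name cannot be resolved.
owner=$(id -un 2>/dev/null || id -u)

# Every file just created belongs to the current user, so both are listed.
mine=$(find "$workdir" -type f -user "$owner" | sort)
echo "$mine"

rm -rf "$workdir"
```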

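2.4 -cmin: search by change time

find /root -cmin -10    Find files and directories under /root whose attributes were changed within the last 10 minutes

-cmin: time the file attributes (status) last changed (change)

-amin: time the file was last accessed (access)

-mmin: time the file content was last modified (modify)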

[root@hadoop000 ~]# find /root -cmin -120

[root@hadoop000 ~]# find /root -amin -120

/root/.cache/abrt

[root@hadoop000 ~]# find /root -mmin -120
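The difference between -mmin and -cmin shows up once a file's modification time is backdated. The sketch below assumes GNU touch (for the -d 'N hours ago' form) and invented file names. Backdating changes the modification time, but the act of backdating is itself an attribute change, so the change time becomes "now".

```shell
#!/bin/sh
workdir=$(mktemp -d)
touch "$workdir/new.txt" "$workdir/old.txt"

# Backdate the access and modification times of old.txt by three hours (GNU touch syntax).
touch -d '3 hours ago' "$workdir/old.txt"

# Content modified less than 120 minutes ago: only new.txt.
modified=$(find "$workdir" -type f -mmin -120 | sort)

# Content modified more than 120 minutes ago: only old.txt.
stale=$(find "$workdir" -type f -mmin +120 | sort)

# Attributes changed less than 120 minutes ago: both files, because
# touch updated the inode of old.txt just now.
changed=$(find "$workdir" -type f -cmin -120 | sort)

echo "modified: $modified"
echo "stale:    $stale"
echo "changed:"; echo "$changed"

rm -rf "$workdir"
```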

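2.5 -a / -o: combine several conditions

-a: both conditions must hold (AND)

-o: either condition may hold (OR)

find /etc -size +163840 -a -size -204800

Search /etc for files larger than 80 MB and smaller than 100 MB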

[root@hadoop000 ~]# find / -size +163840 -a -size -204800 -exec ls -lh {} \;

-rwxr-xr-x. 1 root root 97M Jul 12 2019 /usr/lib64/firefox/libxul.so

-rwxr-xr-x. 1 root root 88M Sep 11 15:05 /usr/java/jdk1.8.0_231/jre/lib/amd64/libjfxwebkit.so

-rw-r--r-- 1 hadoop hadoop 95M Oct 8 12:01 /hadoop/software/phoenix-4.10.0-cdh5.12.0/phoenix-4.10.0-cdh5.12.0-pig.jar
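A scaled-down version of the range search, plus an -o example, can be run on small files. This sketch assumes invented file names and uses the c suffix (bytes) instead of 512-byte blocks so the thresholds are easy to read.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# Three files of known size in bytes.
head -c 500  /dev/zero > "$workdir/small.log"
head -c 2048 /dev/zero > "$workdir/medium.log"
head -c 8192 /dev/zero > "$workdir/large.txt"

# -a: larger than 1000 bytes AND smaller than 3000 bytes -> medium.log
between=$(find "$workdir" -type f -size +1000c -a -size -3000c)

# -o: name ends in .txt OR size is below 1000 bytes -> large.txt and small.log.
# The escaped parentheses group the OR; without them the implicit AND after
# "-type f" would bind more tightly than -o.
either=$(find "$workdir" -type f \( -name '*.txt' -o -size -1000c \) | sort)

echo "between: $between"
echo "either:"; echo "$either"

rm -rf "$workdir"
```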

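2.6 -exec / -ok: run a command on each file found

find /etc -name init -exec ls -l {} \;

Find files named init under /etc and show their details. {} stands for the file that was found and \; ends the command. -ok works like -exec but asks for confirmation before each command.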

[root@hadoop000 ~]# find / -size +163840 -a -size -204800 -exec ls -lh {} \;

-rwxr-xr-x. 1 root root 97M Jul 12 2019 /usr/lib64/firefox/libxul.so

-rwxr-xr-x. 1 root root 88M Sep 11 15:05 /usr/java/jdk1.8.0_231/jre/lib/amd64/libjfxwebkit.so

-rw-r--r-- 1 hadoop hadoop 95M Oct 8 12:01 /hadoop/software/phoenix-4.10.0-cdh5.12.0/phoenix-4.10.0-cdh5.12.0-pig.jar
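The two ways of terminating -exec behave slightly differently. The sketch below assumes invented file names in a scratch directory; -ok is only mentioned in a comment because it prompts interactively.

```shell
#!/bin/sh
workdir=$(mktemp -d)
mkdir "$workdir/keep"
touch "$workdir/a.tmp" "$workdir/b.tmp" "$workdir/keep/c.txt"

# "\;" runs the command once per file found: here, two separate ls calls.
listing=$(find "$workdir" -type f -name '*.tmp' -exec ls -l {} \;)
echo "$listing"

# "+" appends as many file names as possible to a single command invocation.
find "$workdir" -type f -name '*.tmp' -exec rm -- {} +

# Only the file that did not match is left.
remaining=$(find "$workdir" -type f)
echo "$remaining"

# Interactive variant (not run here): find "$workdir" -name '*.tmp' -ok rm {} \;
# asks for confirmation before each rm.

rm -rf "$workdir"
```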

2.7 -type: search by file type

f: regular file

d: directory

l: symbolic link

[root@hadoop000 ~]# find / -size +102400 -a -type f

/hadoop/software/hadoop-2.6.0-cdh5.16.1.tar.gz

/hadoop/software/hbase-1.2.0-cdh5.16.1.tar.gz

/hadoop/software/flume-ng-1.6.0-cdh5.16.1.tar.gz

/hadoop/software/kafka_2.11-1.1.1.tgz

/hadoop/software/apache-phoenix-4.14.0-cdh5.14.2-bin.tar.gz

/hadoop/software/apache-phoenix-4.14.0-cdh5.14.2-bin/phoenix-4.14.0-cdh5.14.2-hive.jar

/hadoop/software/apache-phoenix-4.14.0-cdh5.14.2-bin/phoenix-4.14.0-cdh5.14.2-client.jar

/hadoop/software/apache-phoenix-4.14.0-cdh5.14.2-bin/phoenix-4.14.0-cdh5.14.2-pig.jar

/hadoop/software/spark-2.4.4-bin-hadoop2.6.tgz
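Each type letter can be checked against a scratch directory that holds one entry of each kind. This is a sketch with invented names; -mindepth 1 (supported by GNU and BSD find) is used only to leave the scratch directory itself out of the results.

```shell
#!/bin/sh
workdir=$(mktemp -d)
mkdir "$workdir/dir"
touch "$workdir/file.txt"
ln -s "$workdir/file.txt" "$workdir/link.txt"

# find does not follow symbolic links by default, so link.txt counts as
# type l and not as type f.
files=$(find "$workdir" -mindepth 1 -type f)
dirs=$(find "$workdir" -mindepth 1 -type d)
links=$(find "$workdir" -mindepth 1 -type l)

echo "f: $files"
echo "d: $dirs"
echo "l: $links"

rm -rf "$workdir"
```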

2.8 -inum: search by inode number

[root@hadoop000 ~]# ls -il

total 189612

100663362 -rw-------. 1 root root 1894 Jan 6 04:05 anaconda-ks.cfg

100663364 -rw-r--r--. 1 root root 1925 Jan 6 04:09 initial-setup-ks.cfg

102139148 -rw-r--r--. 1 root root 194151339 Nov 18 15:19 jdk-8u231-linux-x64.tar.gz

103669271 lrwxrwxrwx 1 root root 48 Jan 26 11:27 setting.xml -> /hadoop/app/apache-maven-3.6.2/conf/settings.xml

[root@hadoop000 ~]# find / -inum 100663362

/root/anaconda-ks.cfg
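One practical use of -inum is finding every name that refers to the same file, since hard links share an inode. The sketch below assumes invented file names and reads the inode number from the first column of ls -i.

```shell
#!/bin/sh
workdir=$(mktemp -d)
echo data > "$workdir/original.txt"

# A hard link is a second name for the same inode.
ln "$workdir/original.txt" "$workdir/hardlink.txt"

# The first column of "ls -i" is the inode number.
inode=$(ls -i "$workdir/original.txt" | awk '{print $1}')

# Both names are reported because they share that inode.
same_inode=$(find "$workdir" -inum "$inode" | sort)
echo "$same_inode"

rm -rf "$workdir"
```

Inode numbers are unique only within one filesystem, so a search from / may return unrelated files from other mounted filesystems that happen to use the same number.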

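3. grep: search inside file contents

Command path: /bin/grep

Execute permission: all users

Syntax: grep -iv [pattern] [file]

Description: search a file for lines that match the pattern and print them

- -i: ignore case

- -v: invert the match (print only the lines that do not match)

Examples: grep mysql /root/install.log

grep -v '^#' /etc/inittab    Exclude lines that start with #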
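The two options can be tried on a small sample file. This sketch uses an invented configuration file whose content is purely illustrative and does not come from a real /etc/inittab or install.log.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# Hypothetical sample file with comment lines and mixed-case matches.
cat > "$workdir/sample.conf" <<'EOF'
# default runlevel
id:3:initdefault:
# MySQL settings
mysql_port=3306
MYSQL_HOST=localhost
EOF

# -v with the anchored pattern ^# drops every line that starts with #.
no_comments=$(grep -v '^#' "$workdir/sample.conf")

# -i matches mysql, MySQL and MYSQL alike.
any_case=$(grep -i mysql "$workdir/sample.conf")

# Without -i only the lowercase spelling matches.
lower_only=$(grep mysql "$workdir/sample.conf")

echo "$no_comments"
echo "---"
echo "$any_case"
echo "---"
echo "$lower_only"

rm -rf "$workdir"
```

Quoting the pattern keeps the shell from interpreting any of its characters before grep sees it.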

4. Remote copy (scp)

Copy from the local host to a remote host

Copy a directory (-r copies recursively)

scp -r /home/test/ [email protected]:/home/test/

scp -r /root/cdh [email protected]:/root/cdh