Spark Memory Management Model

MemoryManager

An abstract memory manager that enforces how memory is shared between execution and storage. In this context, execution memory refers to that used for computation in shuffles, joins, sorts and aggregations, while storage memory refers to that used for caching and propagating internal data across the cluster. There exists one MemoryManager per JVM.

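In other words, this is an abstract memory manager that controls how memory is shared between execution and storage.

Here, execution memory is the memory used for computation in shuffles, joins, sorts and aggregations, while storage memory is used to cache data and to propagate internal data across the cluster (broadcast variables, for example). There is one MemoryManager per JVM.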

private[spark] abstract class MemoryManager(

conf: SparkConf,

numCores: Int,

onHeapStorageMemory: Long, // size of the on-heap memory used for storage

onHeapExecutionMemory: Long // size of the on-heap memory used for execution

) extends Logging {

// -- Methods related to memory allocation policies and bookkeeping ------------------------------

// on-heap storage memory pool

@GuardedBy("this")

protected val onHeapStorageMemoryPool = new StorageMemoryPool(this, MemoryMode.ON_HEAP)

@GuardedBy("this")

// off-heap storage memory pool

protected val offHeapStorageMemoryPool = new StorageMemoryPool(this, MemoryMode.OFF_HEAP)

@GuardedBy("this")

// on-heap execution memory pool

protected val onHeapExecutionMemoryPool = new ExecutionMemoryPool(this, MemoryMode.ON_HEAP)

@GuardedBy("this")

// off-heap execution memory pool

protected val offHeapExecutionMemoryPool = new ExecutionMemoryPool(this, MemoryMode.OFF_HEAP)

// the on-heap pool sizes come from the constructor arguments

onHeapStorageMemoryPool.incrementPoolSize(onHeapStorageMemory)

onHeapExecutionMemoryPool.incrementPoolSize(onHeapExecutionMemory)

// maximum off-heap memory, 0 by default

protected[this] val maxOffHeapMemory = conf.get(MEMORY_OFFHEAP_SIZE)

// the part of off-heap memory used for storage

protected[this] val offHeapStorageMemory =

(maxOffHeapMemory * conf.getDouble("spark.memory.storageFraction", 0.5)).toLong

offHeapExecutionMemoryPool.incrementPoolSize(maxOffHeapMemory - offHeapStorageMemory)

offHeapStorageMemoryPool.incrementPoolSize(offHeapStorageMemory)

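- Spark's memory management is made up of four pools:

- the on-heap storage pool and the on-heap execution pool

- the off-heap storage pool and the off-heap execution pool

- Execution memory is the memory used for computation in shuffles, joins, sorts and aggregations. In other words, the memory used by statements you write in your own code is not managed by Spark: if you new up a huge object, you can still hit an OOM. So Spark's on-heap memory management does not cover all of the JVM's memory; some of it has to be left for our own code.

- Storage memory is the memory used for caching and for propagating internal data across the cluster (broadcast variables, for example). There is one MemoryManager per JVM.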

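The incrementPoolSize calls shown above initialize these four memory pools. The two on-heap pools get their sizes from the constructor arguments, while off-heap memory defaults to 0. To change it, set spark.memory.offHeap.size: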

private[spark] val MEMORY_OFFHEAP_SIZE = ConfigBuilder("spark.memory.offHeap.size")

.doc("The absolute amount of memory in bytes which can be used for off-heap allocation. " +

"This setting has no impact on heap memory usage, so if your executors' total memory " +

"consumption must fit within some hard limit then be sure to shrink your JVM heap size " +

"accordingly. This must be set to a positive value when spark.memory.offHeap.enabled=true.")

.bytesConf(ByteUnit.BYTE)

.checkValue(_ >= 0, "The off-heap memory size must not be negative")

.createWithDefault(0)

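That is, the value is an absolute amount of memory, in bytes, that can be used for off-heap allocation. The setting has no effect on heap memory usage, so if your executors' total memory consumption has to stay within a hard limit, shrink the JVM heap accordingly. The value must be positive when spark.memory.offHeap.enabled=true.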

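conf.getDouble("spark.memory.storageFraction", 0.5): by default, half of the off-heap memory goes to storage. Note also that off-heap memory is split into exactly two parts, one for storage and one for execution, and between them they use up all of it.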
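The off-heap split can be reproduced with a few lines of arithmetic. This is only a minimal sketch, not Spark's code: OffHeapSplit and split are hypothetical names, and the two arguments stand in for the values of spark.memory.offHeap.size and spark.memory.storageFraction.

```scala
// Hypothetical helper, not part of Spark: it only mirrors the arithmetic
// MemoryManager uses to size the two off-heap pools.
object OffHeapSplit {
  /** Returns (storageBytes, executionBytes) for a given off-heap size. */
  def split(maxOffHeapMemory: Long, storageFraction: Double = 0.5): (Long, Long) = {
    require(maxOffHeapMemory >= 0, "The off-heap memory size must not be negative")
    // Storage gets the configured fraction, truncated to whole bytes.
    val storage = (maxOffHeapMemory * storageFraction).toLong
    // Execution gets whatever is left, so the two pools always add up
    // to the full off-heap size.
    val execution = maxOffHeapMemory - storage
    (storage, execution)
  }
}
```

With the defaults, OffHeapSplit.split(1024L * 1024 * 1024) yields two pools of 512 MiB each, and OffHeapSplit.split(0L) yields two empty pools, which matches the default of having no off-heap memory at all.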

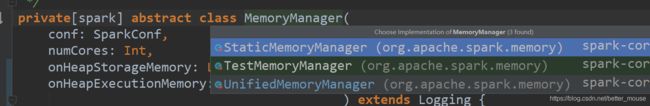

UnifiedMemoryManager

val useLegacyMemoryManager = conf.getBoolean("spark.memory.useLegacyMode", false)

val memoryManager: MemoryManager =

if (useLegacyMemoryManager) {

// static (legacy) memory management

new StaticMemoryManager(conf, numUsableCores)

} else {

// unified memory management

UnifiedMemoryManager(conf, numUsableCores)

}

As the code above shows, Spark now uses UnifiedMemoryManager by default; StaticMemoryManager is what it used before.

A MemoryManager that enforces a soft boundary between execution and storage such that either side can borrow memory from the other.

The region shared between execution and storage is a fraction of (the total heap space - 300MB) configurable through spark.memory.fraction (default 0.6). The position of the boundary within this space is further determined by spark.memory.storageFraction (default 0.5). This means the size of the storage region is 0.6 * 0.5 = 0.3 of the heap space by default.

Storage can borrow as much execution memory as is free until execution reclaims its space. When this happens, cached blocks will be evicted from memory until sufficient borrowed memory is released to satisfy the execution memory request. Similarly, execution can borrow as much storage memory as is free. However, execution memory is never evicted by storage due to the complexities involved in implementing this. The implication is that attempts to cache blocks may fail if execution has already eaten up most of the storage space, in which case the new blocks will be evicted immediately according to their respective storage levels.

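That is, this MemoryManager keeps a soft boundary between execution and storage, so either side can borrow memory from the other.

The region shared by execution and storage is a fraction of (total heap - 300 MB), set by spark.memory.fraction (default 0.6). Where the boundary sits inside that region is set by spark.memory.storageFraction (default 0.5). By default, then, the storage region is roughly 0.6 * 0.5 = 0.3 of the heap.

Storage can borrow as much execution memory as is free, until execution asks for it back. When that happens, cached blocks are evicted from memory until enough of the borrowed memory has been released to satisfy the execution request. Likewise, execution can borrow whatever storage memory is free. The reverse eviction never happens, though: storage cannot evict execution memory, because that would be too complicated to implement. So an attempt to cache a block may fail if execution has already eaten most of the storage space, in which case the new block is evicted immediately according to its storage level.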
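The one-sided borrowing rule is easier to see in a toy model. The sketch below is an illustration under simplifying assumptions, not Spark's implementation: ToyUnifiedMemory and its methods are hypothetical names, it tracks only byte counts, and it ignores per-task fairness and the fact that real storage requests may also evict other cached blocks.

```scala
// Toy model of the soft boundary between storage and execution.
// Hypothetical class for illustration only; not Spark's UnifiedMemoryManager.
final class ToyUnifiedMemory(val maxMemory: Long, val storageRegionSize: Long) {
  private var _storage = 0L
  private var _execution = 0L

  def storageUsed: Long = _storage
  def executionUsed: Long = _execution
  def free: Long = maxMemory - _storage - _execution

  /** Storage may take any free memory, but it can never push execution out. */
  def acquireStorage(bytes: Long): Boolean = {
    require(bytes >= 0, "bytes must not be negative")
    if (bytes <= free) { _storage += bytes; true } else false
  }

  /**
   * Execution takes free memory first. If that is not enough, it evicts
   * cached data, but only the part of storage that exceeds the storage
   * region, i.e. what storage borrowed. Returns the bytes actually granted.
   */
  def acquireExecution(bytes: Long): Long = {
    require(bytes >= 0, "bytes must not be negative")
    val fromFree = math.min(bytes, free)
    val evictable = math.max(0L, _storage - storageRegionSize)
    val fromEviction = math.min(bytes - fromFree, evictable)
    _storage -= fromEviction
    _execution += fromFree + fromEviction
    fromFree + fromEviction
  }
}
```

Take a pool of 1000 bytes with a 500-byte storage region. After acquireStorage(800), storage has borrowed 300 bytes. A following acquireExecution(400) is granted in full: 200 bytes come from free memory and 200 from evicting cached data, leaving storage at 600. A later acquireStorage(100) fails because nothing is free and storage is not allowed to evict execution.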

/**

* Return the total amount of memory shared between execution and storage, in bytes.

*/

private def getMaxMemory(conf: SparkConf): Long = {

// maximum heap memory available to the JVM

val systemMemory = conf.getLong("spark.testing.memory", Runtime.getRuntime.maxMemory)

val reservedMemory = conf.getLong("spark.testing.reservedMemory",

if (conf.contains("spark.testing")) 0 else RESERVED_SYSTEM_MEMORY_BYTES)

val minSystemMemory = (reservedMemory * 1.5).ceil.toLong

if (systemMemory < minSystemMemory) {

throw new IllegalArgumentException(s"System memory $systemMemory must " +

s"be at least $minSystemMemory. Please increase heap size using the --driver-memory " +

s"option or spark.driver.memory in Spark configuration.")

}

// SPARK-12759 Check executor memory to fail fast if memory is insufficient

if (conf.contains("spark.executor.memory")) {

val executorMemory = conf.getSizeAsBytes("spark.executor.memory")

if (executorMemory < minSystemMemory) {

throw new IllegalArgumentException(s"Executor memory $executorMemory must be at least " +

s"$minSystemMemory. Please increase executor memory using the " +

s"--executor-memory option or spark.executor.memory in Spark configuration.")

}

}

// subtract the reserved 300 MB

val usableMemory = systemMemory - reservedMemory

val memoryFraction = conf.getDouble("spark.memory.fraction", 0.6)

// multiply by spark.memory.fraction (0.6 by default) to get the memory shared by execution and storage

(usableMemory * memoryFraction).toLong

}
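To get a feel for the numbers, the arithmetic of getMaxMemory can be pulled out into a stand-alone sketch. UnifiedRegion and its members are hypothetical names, and the 300 MB reserve is assumed here to be 300 * 1024 * 1024 bytes; the testing overrides and the separate executor-memory check are left out.

```scala
// Hypothetical stand-alone version of the arithmetic in getMaxMemory.
// Not Spark's API; the spark.testing.* overrides are deliberately omitted.
object UnifiedRegion {
  // Assumption: the reserved 300 MB is 300 * 1024 * 1024 bytes.
  val ReservedBytes: Long = 300L * 1024 * 1024

  /** Bytes shared by execution and storage for a given heap size. */
  def maxMemory(systemMemory: Long, memoryFraction: Double = 0.6): Long = {
    // The heap must be at least 1.5x the reserved memory.
    val minSystemMemory = (ReservedBytes * 1.5).ceil.toLong
    require(systemMemory >= minSystemMemory,
      s"System memory $systemMemory must be at least $minSystemMemory")
    ((systemMemory - ReservedBytes) * memoryFraction).toLong
  }

  /** Size of the storage region inside the shared space. */
  def storageRegion(
      systemMemory: Long,
      memoryFraction: Double = 0.6,
      storageFraction: Double = 0.5): Long =
    (maxMemory(systemMemory, memoryFraction) * storageFraction).toLong
}
```

For a 4 GiB heap this gives (4096 - 300) MiB * 0.6, about 2277.6 MiB, shared by execution and storage, of which about 1138.8 MiB is the storage region. A heap below 450 MiB is rejected with an IllegalArgumentException, in the same spirit as the check in the original method.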

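Tunable parameters

- Yellow regions in the figure (on-heap):

  - (JVM heap - 300 MB) * 0.6, where 0.6 is spark.memory.fraction

  - spark.memory.storageFraction (default 0.5) divides that space between the upper and lower yellow regions

- Green regions in the figure (off-heap):

  - spark.memory.offHeap.enabled=true

  - spark.memory.offHeap.size sets the amount of off-heap memory

  - spark.memory.storageFraction (default 0.5) divides that space between the upper and lower green regions

- As you can see, a large part of the heap is left over for our own code, whereas off-heap memory is divided up completely. This memory model mainly serves Spark itself; our own code is not governed by it.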