I. What Is ThreadPoolExecutor

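ThreadPoolExecutor was introduced in Java 1.5. It is a thread pool, i.e. a managed collection of threads, designed mainly to:

- Reuse threads, reducing the cost of creating and destroying them;

- Maintain and manage multiple threads in one place.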
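Here is a minimal sketch of the first point. It is not from the original article, and ThreadReuseDemo and distinctWorkerThreads are made-up names. The sketch runs ten tasks on a fixed pool of two threads and counts how many distinct threads actually executed them.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class ThreadReuseDemo {
    // Runs `tasks` tiny tasks on a fixed pool of `poolSize` threads and
    // returns how many distinct threads actually executed them.
    static int distinctWorkerThreads(int poolSize, int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        Set<String> names = ConcurrentHashMap.newKeySet();
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> names.add(Thread.currentThread().getName()));
        }
        pool.shutdown(); // accept no new tasks; queued tasks still run
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS))
                throw new IllegalStateException("pool did not terminate");
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return names.size();
    }

    public static void main(String[] args) {
        // 10 tasks, but at most 2 threads: the same threads are reused
        System.out.println(distinctWorkerThreads(2, 10));
    }
}
```

The pool only ever creates two threads, so the ten tasks must share at most two reused threads.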

II. Hands-On Usage

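The JDK already wraps pool creation in the utility class Executors, which offers several convenient static factory methods. A few of them:

- newFixedThreadPool: a pool with a fixed number of threads;

- newSingleThreadExecutor: a pool with a single thread;

- newCachedThreadPool: a cached pool that creates threads as needed and reuses idle ones;

- newScheduledThreadPool: a pool that can run tasks after a delay or periodically;

Here we use newFixedThreadPool as the example and show both ways of submitting a task: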

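```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

ExecutorService service = Executors.newFixedThreadPool(1);
service.submit(() -> System.out.println("submitted via submit, running on a pool thread..."));
service.execute(() -> System.out.println("submitted via execute, running on a pool thread..."));
service.shutdown(); // let the pool thread exit once both tasks are done
```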

This creates a pool with one thread. One task is submitted with submit and another with execute. We never create a thread with new, and we never call start() ourselves.
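The two submission methods handle exceptions differently. submit returns a Future and captures any exception the task throws; that exception only surfaces when Future.get() is called. With execute, the exception propagates out of the worker thread to its UncaughtExceptionHandler. The sketch below shows the submit case on a plain fixed pool. The class and method names are hypothetical.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class SubmitVsExecuteDemo {
    // submit() wraps the task in a Future; an exception thrown by the task is
    // stored and rethrown from Future.get() wrapped in ExecutionException.
    static String submitFailure() {
        ExecutorService service = Executors.newFixedThreadPool(1);
        try {
            Future<?> f = service.submit(() -> { throw new IllegalStateException("boom"); });
            f.get();
            return "no exception";
        } catch (ExecutionException e) {
            return e.getCause().getClass().getSimpleName() + ": " + e.getCause().getMessage();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        } finally {
            service.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(submitFailure()); // IllegalStateException: boom
    }
}
```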

III. Source Code Analysis

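Why does a task run automatically once it is passed to submit or execute, and how does the JDK implement this under the hood?

All of the Executors factory methods above, except newScheduledThreadPool (which creates a ScheduledThreadPoolExecutor, a subclass of ThreadPoolExecutor), ultimately call this ThreadPoolExecutor constructor: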

1. Constructor

```java
/**
 * Creates a new {@code ThreadPoolExecutor} with the given initial
 * parameters.
 *
 * @param corePoolSize the number of threads to keep in the pool, even
 *        if they are idle, unless {@code allowCoreThreadTimeOut} is set
 * @param maximumPoolSize the maximum number of threads to allow in the
 *        pool
 * @param keepAliveTime when the number of threads is greater than
 *        the core, this is the maximum time that excess idle threads
 *        will wait for new tasks before terminating.
 * @param unit the time unit for the {@code keepAliveTime} argument
 * @param workQueue the queue to use for holding tasks before they are
 *        executed. This queue will hold only the {@code Runnable}
 *        tasks submitted by the {@code execute} method.
 * @param threadFactory the factory to use when the executor
 *        creates a new thread
 * @param handler the handler to use when execution is blocked
 *        because the thread bounds and queue capacities are reached
 * @throws IllegalArgumentException if one of the following holds:
 *         {@code corePoolSize < 0}
 *         {@code keepAliveTime < 0}
 *         {@code maximumPoolSize <= 0}
 *         {@code maximumPoolSize < corePoolSize}
 * @throws NullPointerException if {@code workQueue}
 *         or {@code threadFactory} or {@code handler} is null
 */
public ThreadPoolExecutor(int corePoolSize,
                          int maximumPoolSize,
                          long keepAliveTime,
                          TimeUnit unit,
                          BlockingQueue<Runnable> workQueue,
                          ThreadFactory threadFactory,
                          RejectedExecutionHandler handler) {
    if (corePoolSize < 0 ||
        maximumPoolSize <= 0 ||
        maximumPoolSize < corePoolSize ||
        keepAliveTime < 0)
        throw new IllegalArgumentException();
    if (workQueue == null || threadFactory == null || handler == null)
        throw new NullPointerException();
    this.corePoolSize = corePoolSize;
    this.maximumPoolSize = maximumPoolSize;
    this.workQueue = workQueue;
    this.keepAliveTime = unit.toNanos(keepAliveTime);
    this.threadFactory = threadFactory;
    this.handler = handler;
}
```
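The sketch below shows the checks in action. It uses the shorter five-argument constructor, which in the JDK delegates to the seven-argument one above with a default thread factory and handler. It reports which exception each combination of arguments triggers. The class and helper names are hypothetical.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class ConstructorValidationDemo {
    // Tries to build a pool and reports which exception (if any) the constructor threw.
    static String tryCreate(int core, int max, long keepAlive, boolean nullQueue) {
        try {
            ThreadPoolExecutor pool = new ThreadPoolExecutor(core, max, keepAlive,
                    TimeUnit.SECONDS, nullQueue ? null : new LinkedBlockingQueue<Runnable>());
            pool.shutdown();
            return "ok";
        } catch (IllegalArgumentException | NullPointerException e) {
            return e.getClass().getSimpleName();
        }
    }

    public static void main(String[] args) {
        System.out.println(tryCreate(2, 4, 60, false)); // ok
        System.out.println(tryCreate(4, 2, 60, false)); // IllegalArgumentException (max < core)
        System.out.println(tryCreate(0, 0, 60, false)); // IllegalArgumentException (max <= 0)
        System.out.println(tryCreate(1, 1, -1, false)); // IllegalArgumentException (keepAlive < 0)
        System.out.println(tryCreate(1, 1, 60, true));  // NullPointerException (workQueue is null)
    }
}
```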

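The constructor takes quite a few parameters. Following the source comments, here they are one by one:

- corePoolSize: the number of core threads kept in the pool even when they are idle, unless allowCoreThreadTimeOut is set;

- maximumPoolSize: the maximum number of threads allowed in the pool;

- keepAliveTime: when the thread count exceeds corePoolSize, the maximum time an excess idle thread waits for a new task before terminating;

- unit: the time unit of keepAliveTime;

- workQueue: the queue that holds tasks before they are executed; it only holds the Runnable tasks submitted via execute;

- threadFactory: the factory the executor uses to create new threads;

- handler: the saturation (rejection) policy, used when both the thread bound and the queue capacity have been reached;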
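The following minimal sketch spells out all seven parameters explicitly. The pool name, sizes and CallerRunsPolicy are illustrative assumptions, not recommendations from the article. The sketch then checks what two Executors factory methods configure. The casts to ThreadPoolExecutor rely on the OpenJDK implementation returning that class and are used for inspection only.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class PoolParametersDemo {
    // Builds a pool spelling out all seven constructor parameters.
    static ThreadPoolExecutor newOrderPool() {
        AtomicInteger seq = new AtomicInteger(1);
        // hypothetical naming scheme for the pool's threads
        ThreadFactory factory = r -> new Thread(r, "order-pool-" + seq.getAndIncrement());
        return new ThreadPoolExecutor(
                2,                                        // corePoolSize
                4,                                        // maximumPoolSize
                30, TimeUnit.SECONDS,                     // keepAliveTime + unit
                new ArrayBlockingQueue<Runnable>(100),    // workQueue: bounded
                factory,                                  // threadFactory
                new ThreadPoolExecutor.CallerRunsPolicy()); // handler
    }

    public static void main(String[] args) {
        ThreadPoolExecutor custom = newOrderPool();
        System.out.println(custom.getCorePoolSize() + " " + custom.getMaximumPoolSize()); // 2 4
        custom.shutdown();

        // newFixedThreadPool(3): core = max = 3 with an unbounded LinkedBlockingQueue
        ThreadPoolExecutor fixed = (ThreadPoolExecutor) Executors.newFixedThreadPool(3);
        System.out.println(fixed.getCorePoolSize() + " " + fixed.getMaximumPoolSize()); // 3 3
        fixed.shutdown();

        // newCachedThreadPool: core 0, 60 s keep-alive, SynchronousQueue
        ThreadPoolExecutor cached = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        System.out.println(cached.getCorePoolSize() + " " + cached.getKeepAliveTime(TimeUnit.SECONDS)
                + " " + (cached.getQueue() instanceof SynchronousQueue)); // 0 60 true
        cached.shutdown();
    }
}
```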

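The constructor itself only validates its arguments (range checks and null checks) and initializes the fields. Next, let's look at the execute method: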

2. execute

```java
/**
 * Executes the given task sometime in the future. The task
 * may execute in a new thread or in an existing pooled thread.
 *
 * If the task cannot be submitted for execution, either because this
 * executor has been shutdown or because its capacity has been reached,
 * the task is handled by the current {@code RejectedExecutionHandler}.
 *
 * @param command the task to execute
 * @throws RejectedExecutionException at discretion of
 *         {@code RejectedExecutionHandler}, if the task
 *         cannot be accepted for execution
 * @throws NullPointerException if {@code command} is null
 */
public void execute(Runnable command) {
    if (command == null)
        throw new NullPointerException();
    /*
     * Proceed in 3 steps:
     *
     * 1. If fewer than corePoolSize threads are running, try to
     * start a new thread with the given command as its first
     * task. The call to addWorker atomically checks runState and
     * workerCount, and so prevents false alarms that would add
     * threads when it shouldn't, by returning false.
     *
     * 2. If a task can be successfully queued, then we still need
     * to double-check whether we should have added a thread
     * (because existing ones died since last checking) or that
     * the pool shut down since entry into this method. So we
     * recheck state and if necessary roll back the enqueuing if
     * stopped, or start a new thread if there are none.
     *
     * 3. If we cannot queue task, then we try to add a new
     * thread. If it fails, we know we are shut down or saturated
     * and so reject the task.
     */
    int c = ctl.get();
    if (workerCountOf(c) < corePoolSize) {
        if (addWorker(command, true))
            return;
        c = ctl.get();
    }
    if (isRunning(c) && workQueue.offer(command)) {
        int recheck = ctl.get();
        if (! isRunning(recheck) && remove(command))
            reject(command);
        else if (workerCountOf(recheck) == 0)
            addWorker(null, false);
    }
    else if (!addWorker(command, false))
        reject(command);
}
```

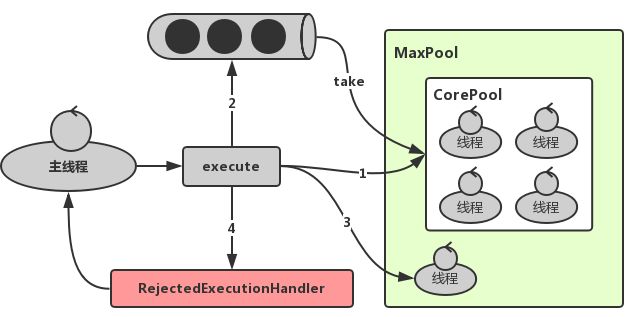

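The source comment explains it clearly. The pool's workflow has three steps:

- If fewer than corePoolSize threads are running, try to start a new thread with this task as its first task. addWorker atomically checks the run state (runState) and the worker count (workerCount), and its return value tells whether it succeeded;

- Otherwise (the worker count has already reached corePoolSize, or addWorker failed), try to queue the task in workQueue. Even after the task has been queued, we still need to recheck the pool state, because an existing worker may have died since the last check or the pool may have been shut down in the meantime;

- If the task cannot be queued (the queue is full), try to start a new thread for it. If that also fails (the worker count has reached maximumPoolSize, or the pool has been shut down), apply the rejection policy via reject;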
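The three steps can be observed with a deliberately tiny pool. The sketch below uses hypothetical names, and the values corePoolSize=1, maximumPoolSize=2 and a queue of capacity 1 are arbitrary choices. It submits tasks that block on a latch, so each submission lands in a predictable step.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class ExecuteStepsDemo {
    // Returns {poolSize, queueSize, rejected ? 1 : 0} after submitting four blocking
    // tasks to a pool with corePoolSize=1, maximumPoolSize=2 and a queue of capacity 1.
    static int[] saturate() {
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try { release.await(); } catch (InterruptedException e) { Thread.currentThread().interrupt(); }
        };
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 2, 10, TimeUnit.SECONDS,
                new ArrayBlockingQueue<Runnable>(1));
        pool.execute(blocker);     // step 1: fewer than core threads -> new core thread
        pool.execute(blocker);     // step 2: core threads busy -> task is queued
        pool.execute(blocker);     // step 3: queue full -> new non-core thread (max = 2)
        boolean rejected = false;
        try {
            pool.execute(blocker); // queue full and max reached -> reject (AbortPolicy)
        } catch (RejectedExecutionException e) {
            rejected = true;
        }
        int[] result = {pool.getPoolSize(), pool.getQueue().size(), rejected ? 1 : 0};
        release.countDown();       // let all blocked tasks finish
        pool.shutdown();
        return result;
    }

    public static void main(String[] args) {
        int[] r = saturate();
        System.out.println(r[0] + " threads, " + r[1] + " queued, rejected=" + (r[2] == 1));
        // 2 threads, 1 queued, rejected=true
    }
}
```

The queued task stays in the queue because both threads are busy with their first tasks until the latch is released.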

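The logic is fairly intricate. The worker count and run state are changed with lock-free CAS operations on ctl, so each step has to recheck the state. The diagram above illustrates the flow.

OK, back to the execute source. Pay special attention to the field ctl: it holds the core data of the thread pool.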

3. ctl

```java
/**
 * The main pool control state, ctl, is an atomic integer packing
 * two conceptual fields
 *   workerCount, indicating the effective number of threads
 *   runState,    indicating whether running, shutting down etc
 *
 * In order to pack them into one int, we limit workerCount to
 * (2^29)-1 (about 500 million) threads rather than (2^31)-1 (2
 * billion) otherwise representable. If this is ever an issue in
 * the future, the variable can be changed to be an AtomicLong,
 * and the shift/mask constants below adjusted. But until the need
 * arises, this code is a bit faster and simpler using an int.
 *
 * The workerCount is the number of workers that have been
 * permitted to start and not permitted to stop. The value may be
 * transiently different from the actual number of live threads,
 * for example when a ThreadFactory fails to create a thread when
 * asked, and when exiting threads are still performing
 * bookkeeping before terminating. The user-visible pool size is
 * reported as the current size of the workers set.
 *
 * The runState provides the main lifecycle control, taking on values:
 *
 *   RUNNING:  Accept new tasks and process queued tasks
 *   SHUTDOWN: Don't accept new tasks, but process queued tasks
 *   STOP:     Don't accept new tasks, don't process queued tasks,
 *             and interrupt in-progress tasks
 *   TIDYING:  All tasks have terminated, workerCount is zero,
 *             the thread transitioning to state TIDYING
 *             will run the terminated() hook method
 *   TERMINATED: terminated() has completed
 *
 * The numerical order among these values matters, to allow
 * ordered comparisons. The runState monotonically increases over
 * time, but need not hit each state. The transitions are:
 *
 * RUNNING -> SHUTDOWN
 *    On invocation of shutdown(), perhaps implicitly in finalize()
 * (RUNNING or SHUTDOWN) -> STOP
 *    On invocation of shutdownNow()
 * SHUTDOWN -> TIDYING
 *    When both queue and pool are empty
 * STOP -> TIDYING
 *    When pool is empty
 * TIDYING -> TERMINATED
 *    When the terminated() hook method has completed
 *
 * Threads waiting in awaitTermination() will return when the
 * state reaches TERMINATED.
 *
 * Detecting the transition from SHUTDOWN to TIDYING is less
 * straightforward than you'd like because the queue may become
 * empty after non-empty and vice versa during SHUTDOWN state, but
 * we can only terminate if, after seeing that it is empty, we see
 * that workerCount is 0 (which sometimes entails a recheck -- see
 * below).
 */
private final AtomicInteger ctl = new AtomicInteger(ctlOf(RUNNING, 0));
private static final int COUNT_BITS = Integer.SIZE - 3;
private static final int CAPACITY   = (1 << COUNT_BITS) - 1;

// runState is stored in the high-order bits
private static final int RUNNING    = -1 << COUNT_BITS;
private static final int SHUTDOWN   =  0 << COUNT_BITS;
private static final int STOP       =  1 << COUNT_BITS;
private static final int TIDYING    =  2 << COUNT_BITS;
private static final int TERMINATED =  3 << COUNT_BITS;

// Packing and unpacking ctl
private static int runStateOf(int c)     { return c & ~CAPACITY; }
private static int workerCountOf(int c)  { return c & CAPACITY; }
private static int ctlOf(int rs, int wc) { return rs | wc; }
```

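The first sentence sums up ctl: "The main pool control state, ctl, is an atomic integer packing two conceptual fields", namely workerCount and runState. This single AtomicInteger stores both the number of worker threads and the pool state. How are the two packed into one variable?

The comment goes on to explain: "In order to pack them into one int, we limit workerCount to (2^29)-1 (about 500 million) threads rather than (2^31)-1 (2 billion) otherwise representable." An int has 32 bits. The low 29 bits (COUNT_BITS = Integer.SIZE - 3) store workerCount instead of using all the bits, and the high 3 bits store runState.

First, here is CAPACITY in binary:

0001 1111 1111 1111 1111 1111 1111 1111

As an example, a RUNNING pool with 5 worker threads has this ctl value (high bits 111 for RUNNING, low bits 101 for 5 workers):

1110 0000 0000 0000 0000 0000 0000 0101

Looking at runStateOf() (clears the low 29 bits), workerCountOf() (keeps only the low 29 bits) and ctlOf() (ORs the two parts together) again, they are now much clearer.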
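The packing can be reproduced outside the JDK. This sketch copies the constants and the three helper methods from the source above into a standalone class. The bits formatting helper is an addition for display only. The sketch prints the two bit patterns shown in the text.

```java
public class CtlPackingDemo {
    // Constants copied from the ThreadPoolExecutor source shown above.
    static final int COUNT_BITS = Integer.SIZE - 3;      // 29
    static final int CAPACITY   = (1 << COUNT_BITS) - 1; // low 29 bits set
    static final int RUNNING    = -1 << COUNT_BITS;      // high bits 111
    static final int SHUTDOWN   =  0 << COUNT_BITS;      // high bits 000
    static final int STOP       =  1 << COUNT_BITS;      // high bits 001
    static final int TIDYING    =  2 << COUNT_BITS;      // high bits 010
    static final int TERMINATED =  3 << COUNT_BITS;      // high bits 011

    static int runStateOf(int c)     { return c & ~CAPACITY; } // keep the high 3 bits
    static int workerCountOf(int c)  { return c & CAPACITY; }  // keep the low 29 bits
    static int ctlOf(int rs, int wc) { return rs | wc; }       // combine both parts

    // Formats an int as 32 binary digits in groups of four, as in the article.
    static String bits(int v) {
        String s = String.format("%32s", Integer.toBinaryString(v)).replace(' ', '0');
        return s.replaceAll("(.{4})(?!$)", "$1 ");
    }

    public static void main(String[] args) {
        int c = ctlOf(RUNNING, 5);
        System.out.println(bits(CAPACITY));           // 0001 1111 1111 1111 1111 1111 1111 1111
        System.out.println(bits(c));                  // 1110 0000 0000 0000 0000 0000 0000 0101
        System.out.println(runStateOf(c) == RUNNING); // true
        System.out.println(workerCountOf(c));         // 5
        // The numeric order of the states is what allows comparisons like rs >= SHUTDOWN
        System.out.println(RUNNING < SHUTDOWN && SHUTDOWN < STOP
                && STOP < TIDYING && TIDYING < TERMINATED); // true
    }
}
```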

Now that we understand how ctl works, let's return to execute and see how addWorker adds a worker.

4. addWorker

```java
/*
 * Methods for creating, running and cleaning up after workers
 */

/**
 * Checks if a new worker can be added with respect to current
 * pool state and the given bound (either core or maximum). If so,
 * the worker count is adjusted accordingly, and, if possible, a
 * new worker is created and started, running firstTask as its
 * first task. This method returns false if the pool is stopped or
 * eligible to shut down. It also returns false if the thread
 * factory fails to create a thread when asked. If the thread
 * creation fails, either due to the thread factory returning
 * null, or due to an exception (typically OutOfMemoryError in
 * Thread.start()), we roll back cleanly.
 *
 * @param firstTask the task the new thread should run first (or
 * null if none). Workers are created with an initial first task
 * (in method execute()) to bypass queuing when there are fewer
 * than corePoolSize threads (in which case we always start one),
 * or when the queue is full (in which case we must bypass queue).
 * Initially idle threads are usually created via
 * prestartCoreThread or to replace other dying workers.
 *
 * @param core if true use corePoolSize as bound, else
 * maximumPoolSize. (A boolean indicator is used here rather than a
 * value to ensure reads of fresh values after checking other pool
 * state).
 * @return true if successful
 */
private boolean addWorker(Runnable firstTask, boolean core) {
    retry:
    for (;;) {
        int c = ctl.get();
        int rs = runStateOf(c);

        // Check if queue empty only if necessary.
        if (rs >= SHUTDOWN &&
            ! (rs == SHUTDOWN &&
               firstTask == null &&
               ! workQueue.isEmpty()))
            return false;

        for (;;) {
            int wc = workerCountOf(c);
            if (wc >= CAPACITY ||
                wc >= (core ? corePoolSize : maximumPoolSize))
                return false;
            if (compareAndIncrementWorkerCount(c))
                break retry;
            c = ctl.get();  // Re-read ctl
            if (runStateOf(c) != rs)
                continue retry;
            // else CAS failed due to workerCount change; retry inner loop
        }
    }

    boolean workerStarted = false;
    boolean workerAdded = false;
    Worker w = null;
    try {
        w = new Worker(firstTask);
        final Thread t = w.thread;
        if (t != null) {
            final ReentrantLock mainLock = this.mainLock;
            mainLock.lock();
            try {
                // Recheck while holding lock.
                // Back out on ThreadFactory failure or if
                // shut down before lock acquired.
                int rs = runStateOf(ctl.get());

                if (rs < SHUTDOWN ||
                    (rs == SHUTDOWN && firstTask == null)) {
                    if (t.isAlive()) // precheck that t is startable
                        throw new IllegalThreadStateException();
                    workers.add(w);
                    int s = workers.size();
                    if (s > largestPoolSize)
                        largestPoolSize = s;
                    workerAdded = true;
                }
            } finally {
                mainLock.unlock();
            }
            if (workerAdded) {
                t.start();
                workerStarted = true;
            }
        }
    } finally {
        if (! workerStarted)
            addWorkerFailed(w);
    }
    return workerStarted;
}
```

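The source is a bit long, but I have pasted all of it for easier review later. It rewards a careful read. Let's go through it section by section.

It starts with the label retry, which lets the nested inner for loop control the outer one. Next comes a simple check on the pool state, the submitted task and the work queue. addWorker gives up if the pool is SHUTDOWN or beyond, with one exception: if the pool is exactly SHUTDOWN, firstTask is null and the queue still holds tasks, a worker may still be added to drain the queue: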

```java
// Check if queue empty only if necessary.
if (rs >= SHUTDOWN &&
    ! (rs == SHUTDOWN &&
       firstTask == null &&
       ! workQueue.isEmpty()))
    return false;
```

Next is an infinite loop that increments workerCount with CAS, a rather interesting piece of code:

```java
for (;;) {
    int wc = workerCountOf(c);
    if (wc >= CAPACITY ||
        wc >= (core ? corePoolSize : maximumPoolSize))
        return false;
    if (compareAndIncrementWorkerCount(c))
        break retry;
    c = ctl.get();  // Re-read ctl
    if (runStateOf(c) != rs)
        continue retry;
    // else CAS failed due to workerCount change; retry inner loop
}
```

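When the CAS succeeds, break retry leaves both loops and execution continues with the code after them. Otherwise ctl changed in the meantime, so it is re-read and the run state is compared. If runState is unchanged, only the worker count changed. In that case the inner for loop simply tries again, and there is no need to redo the outer loop's variable setup and state checks. If runState did change, continue retry jumps back to the retry label and starts over completely.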
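The core of this retry pattern can be sketched on its own. The hypothetical helper below keeps only the inner loop: a bounded increment via compareAndSet, retried when another thread wins the race. It drops the runState check and the outer retry label. Eight threads then race to reserve at most 100 slots.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class BoundedCasDemo {
    // Hypothetical helper mirroring the inner loop of addWorker: increment the
    // counter only while it is below `bound`, retrying when the CAS loses a race.
    // (The real code also re-checks runState and jumps to `retry` if it changed.)
    static boolean tryIncrement(AtomicInteger count, int bound) {
        for (;;) {
            int c = count.get();
            if (c >= bound)
                return false;                 // bound reached, like wc >= corePoolSize
            if (count.compareAndSet(c, c + 1))
                return true;                  // CAS won: a slot is reserved
            // CAS lost: another thread changed count in between, so re-read and retry
        }
    }

    // Starts `threads` threads that each attempt `attempts` increments; returns successes.
    static int race(int threads, int attempts, int bound) {
        AtomicInteger count = new AtomicInteger();
        AtomicInteger successes = new AtomicInteger();
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int j = 0; j < attempts; j++)
                    if (tryIncrement(count, bound)) successes.incrementAndGet();
            });
            ts[i].start();
        }
        try {
            for (Thread t : ts) t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return successes.get();
    }

    public static void main(String[] args) {
        // 8 threads x 1000 attempts compete, but exactly `bound` increments succeed
        System.out.println(race(8, 1000, 100)); // 100
    }
}
```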

Once the CAS succeeds, the pool's workerCount has been incremented. The next step is to create the new thread that will run the task:

```java
boolean workerStarted = false;
boolean workerAdded = false;
Worker w = null;
try {
    w = new Worker(firstTask);
    final Thread t = w.thread;
    if (t != null) {
        final ReentrantLock mainLock = this.mainLock;
        mainLock.lock();
        try {
            // Recheck while holding lock.
            // Back out on ThreadFactory failure or if
            // shut down before lock acquired.
            int rs = runStateOf(ctl.get());

            if (rs < SHUTDOWN ||
                (rs == SHUTDOWN && firstTask == null)) {
                if (t.isAlive()) // precheck that t is startable
                    throw new IllegalThreadStateException();
                workers.add(w);
                int s = workers.size();
                if (s > largestPoolSize)
                    largestPoolSize = s;
                workerAdded = true;
            }
        } finally {
            mainLock.unlock();
        }
        if (workerAdded) {
            t.start();
            workerStarted = true;
        }
    }
} finally {
    if (! workerStarted)
        addWorkerFailed(w);
}
return workerStarted;
```

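Note the two finally blocks here. The first releases the ReentrantLock. The second is addWorker's rollback check: if the pool was shut down in the meantime, or the thread could not be created or started (for example the ThreadFactory returned null), the earlier workerCount increment, i.e. the CAS, must be undone. Otherwise the newly created thread is started and the two boolean flags workerAdded and workerStarted record the progress. Here is the source of addWorkerFailed: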

```java
/**
 * Rolls back the worker thread creation.
 * - removes worker from workers, if present
 * - decrements worker count
 * - rechecks for termination, in case the existence of this
 *   worker was holding up termination
 */
private void addWorkerFailed(Worker w) {
    final ReentrantLock mainLock = this.mainLock;
    mainLock.lock();
    try {
        if (w != null)
            workers.remove(w);
        decrementWorkerCount();
        tryTerminate();
    } finally {
        mainLock.unlock();
    }
}
```
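The rollback path is easy to trigger with a ThreadFactory that returns null, which the addWorker Javadoc names explicitly as a failure case. The sketch below uses hypothetical names, and the null-returning factory exists only for this demonstration. The first addWorker fails and is rolled back, execute then queues the task, and the follow-up addWorker(null, false) fails as well. The pool ends up with no workers and one stranded task.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class AddWorkerRollbackDemo {
    // Returns {poolSize, queueSize} after executing one task on a pool whose
    // deliberately broken (hypothetical) ThreadFactory always returns null.
    static int[] executeWithNullFactory() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 1, 0, TimeUnit.SECONDS,
                new LinkedBlockingQueue<Runnable>(),
                r -> null); // newThread "fails": Worker.thread stays null
        pool.execute(() -> System.out.println("never runs"));
        // addWorker saw t == null, so addWorkerFailed undid the CAS increment;
        // execute then queued the task, and addWorker(null, false) failed the same way.
        int[] result = {pool.getPoolSize(), pool.getQueue().size()};
        pool.shutdownNow(); // removes the stranded task from the queue
        return result;
    }

    public static void main(String[] args) {
        int[] r = executeWithNullFactory();
        System.out.println(r[0] + " workers, " + r[1] + " queued"); // 0 workers, 1 queued
    }
}
```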

It is worth looking at the definition of workers:

```java
/**
 * Set containing all worker threads in pool. Accessed only when
 * holding mainLock.
 */
private final HashSet<Worker> workers = new HashSet<Worker>();
```

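"Set containing all worker threads in pool. Accessed only when holding mainLock." In other words, workers is the set of all worker threads in the pool, and it may only be accessed while holding mainLock. This explains why every operation on workers above is preceded by mainLock.lock().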

5. Worker

```java
/**
 * Class Worker mainly maintains interrupt control state for
 * threads running tasks, along with other minor bookkeeping.
 * This class opportunistically extends AbstractQueuedSynchronizer
 * to simplify acquiring and releasing a lock surrounding each
 * task execution. This protects against interrupts that are
 * intended to wake up a worker thread waiting for a task from
 * instead interrupting a task being run. We implement a simple
 * non-reentrant mutual exclusion lock rather than use
 * ReentrantLock because we do not want worker tasks to be able to
 * reacquire the lock when they invoke pool control methods like
 * setCorePoolSize. Additionally, to suppress interrupts until
 * the thread actually starts running tasks, we initialize lock
 * state to a negative value, and clear it upon start (in
 * runWorker).
 */
private final class Worker
    extends AbstractQueuedSynchronizer
    implements Runnable
{
    /**
     * This class will never be serialized, but we provide a
     * serialVersionUID to suppress a javac warning.
     */
    private static final long serialVersionUID = 6138294804551838833L;

    /** Thread this worker is running in. Null if factory fails. */
    final Thread thread;
    /** Initial task to run. Possibly null. */
    Runnable firstTask;
    /** Per-thread task counter */
    volatile long completedTasks;

    /**
     * Creates with given first task and thread from ThreadFactory.
     * @param firstTask the first task (null if none)
     */
    Worker(Runnable firstTask) {
        setState(-1); // inhibit interrupts until runWorker
        this.firstTask = firstTask;
        this.thread = getThreadFactory().newThread(this);
    }

    /** Delegates main run loop to outer runWorker */
    public void run() {
        runWorker(this);
    }

    // Lock methods
    //
    // The value 0 represents the unlocked state.
    // The value 1 represents the locked state.

    protected boolean isHeldExclusively() {
        return getState() != 0;
    }

    protected boolean tryAcquire(int unused) {
        if (compareAndSetState(0, 1)) {
            setExclusiveOwnerThread(Thread.currentThread());
            return true;
        }
        return false;
    }

    protected boolean tryRelease(int unused) {
        setExclusiveOwnerThread(null);
        setState(0);
        return true;
    }

    public void lock()        { acquire(1); }
    public boolean tryLock()  { return tryAcquire(1); }
    public void unlock()      { release(1); }
    public boolean isLocked() { return isHeldExclusively(); }

    void interruptIfStarted() {
        Thread t;
        if (getState() >= 0 && (t = thread) != null && !t.isInterrupted()) {
            try {
                t.interrupt();
            } catch (SecurityException ignore) {
            }
        }
    }
}
```
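The Javadoc above explains that Worker deliberately uses a non-reentrant lock instead of ReentrantLock. The sketch below copies Worker's lock methods into a hypothetical standalone Mutex and compares it with ReentrantLock. The same thread cannot tryLock the Mutex twice, and this property is what keeps a running task from reacquiring its own worker's lock when it calls pool control methods such as setCorePoolSize.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;
import java.util.concurrent.locks.ReentrantLock;

public class NonReentrantLockDemo {
    // Hypothetical standalone copy of Worker's lock methods:
    // state 0 = unlocked, 1 = locked, with no owner check in tryAcquire.
    static final class Mutex extends AbstractQueuedSynchronizer {
        @Override
        protected boolean isHeldExclusively() { return getState() != 0; }

        @Override
        protected boolean tryAcquire(int unused) {
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false; // fails even for the current owner: not reentrant
        }

        @Override
        protected boolean tryRelease(int unused) {
            setExclusiveOwnerThread(null);
            setState(0);
            return true;
        }

        boolean tryLock() { return tryAcquire(1); }
        void unlock()     { release(1); }
    }

    // Returns whether a second tryLock() by the same thread succeeds on the Mutex.
    static boolean mutexReacquire() {
        Mutex m = new Mutex();
        m.tryLock();
        boolean again = m.tryLock();
        m.unlock();
        return again;
    }

    // Same experiment with ReentrantLock, whose owner may lock again.
    static boolean reentrantLockReacquire() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        boolean again = lock.tryLock(); // hold count becomes 2
        if (again) lock.unlock();
        lock.unlock();
        return again;
    }

    public static void main(String[] args) {
        System.out.println(mutexReacquire());         // false
        System.out.println(reentrantLockReacquire()); // true
    }
}
```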

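Worker is an inner class of ThreadPoolExecutor that extends AbstractQueuedSynchronizer and implements Runnable. Look closely at its constructor: it passes its own instance to the thread factory, this.thread = getThreadFactory().newThread(this);. So when addWorker calls t.start(), the new thread actually runs Worker's run method, which delegates to the outer runWorker method of ThreadPoolExecutor.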
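The sketch below checks that the thread factory receives the Worker and not the user's task. It uses hypothetical names, and the thread name "demo-worker" is arbitrary. It records the Runnable passed to newThread and the name of the thread that runs the task.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

public class WorkerFactoryDemo {
    // Returns {class of the Runnable given to newThread,
    //          whether it is the submitted task itself, name of the thread that ran the task}.
    static String[] inspect() {
        AtomicReference<Runnable> handedToFactory = new AtomicReference<>();
        ThreadFactory factory = r -> {
            handedToFactory.set(r);              // remember what the pool passes in
            return new Thread(r, "demo-worker"); // hypothetical thread name
        };
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 1, 0, TimeUnit.SECONDS,
                new LinkedBlockingQueue<Runnable>(), factory);
        AtomicReference<String> ranOn = new AtomicReference<>();
        CountDownLatch done = new CountDownLatch(1);
        Runnable task = () -> { ranOn.set(Thread.currentThread().getName()); done.countDown(); };
        pool.execute(task);
        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        pool.shutdown();
        Runnable r = handedToFactory.get();
        return new String[] {r.getClass().getName(), String.valueOf(r == task), ranOn.get()};
    }

    public static void main(String[] args) {
        for (String s : inspect()) System.out.println(s);
        // java.util.concurrent.ThreadPoolExecutor$Worker
        // false
        // demo-worker
    }
}
```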

Naturally, this runWorker method is the core of how pool threads execute tasks.

6. runWorker

```java
/**
 * Main worker run loop. Repeatedly gets tasks from queue and
 * executes them, while coping with a number of issues:
 *
 * 1. We may start out with an initial task, in which case we
 * don't need to get the first one. Otherwise, as long as pool is
 * running, we get tasks from getTask. If it returns null then the
 * worker exits due to changed pool state or configuration
 * parameters. Other exits result from exception throws in
 * external code, in which case completedAbruptly holds, which
 * usually leads processWorkerExit to replace this thread.
 *
 * 2. Before running any task, the lock is acquired to prevent
 * other pool interrupts while the task is executing, and then we
 * ensure that unless pool is stopping, this thread does not have
 * its interrupt set.
 *
 * 3. Each task run is preceded by a call to beforeExecute, which
 * might throw an exception, in which case we cause thread to die
 * (breaking loop with completedAbruptly true) without processing
 * the task.
 *
 * 4. Assuming beforeExecute completes normally, we run the task,
 * gathering any of its thrown exceptions to send to afterExecute.
 * We separately handle RuntimeException, Error (both of which the
 * specs guarantee that we trap) and arbitrary Throwables.
 * Because we cannot rethrow Throwables within Runnable.run, we
 * wrap them within Errors on the way out (to the thread's
 * UncaughtExceptionHandler). Any thrown exception also
 * conservatively causes thread to die.
 *
 * 5. After task.run completes, we call afterExecute, which may
 * also throw an exception, which will also cause thread to
 * die. According to JLS Sec 14.20, this exception is the one that
 * will be in effect even if task.run throws.
 *
 * The net effect of the exception mechanics is that afterExecute
 * and the thread's UncaughtExceptionHandler have as accurate
 * information as we can provide about any problems encountered by
 * user code.
 *
 * @param w the worker
 */
final void runWorker(Worker w) {
    Thread wt = Thread.currentThread();
    Runnable task = w.firstTask;
    w.firstTask = null;
    w.unlock(); // allow interrupts
    boolean completedAbruptly = true;
    try {
        while (task != null || (task = getTask()) != null) {
            w.lock();
            // If pool is stopping, ensure thread is interrupted;
            // if not, ensure thread is not interrupted. This
            // requires a recheck in second case to deal with
            // shutdownNow race while clearing interrupt
            if ((runStateAtLeast(ctl.get(), STOP) ||
                 (Thread.interrupted() &&
                  runStateAtLeast(ctl.get(), STOP))) &&
                !wt.isInterrupted())
                wt.interrupt();
            try {
                beforeExecute(wt, task);
                Throwable thrown = null;
                try {
                    task.run();
                } catch (RuntimeException x) {
                    thrown = x; throw x;
                } catch (Error x) {
                    thrown = x; throw x;
                } catch (Throwable x) {
                    thrown = x; throw new Error(x);
                } finally {
                    afterExecute(task, thrown);
                }
            } finally {
                task = null;
                w.completedTasks++;
                w.unlock();
            }
        }
        completedAbruptly = false;
    } finally {
        processWorkerExit(w, completedAbruptly);
    }
}
```

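"Main worker run loop. Repeatedly gets tasks from queue and executes them, while coping with a number of issues." The worker loops, repeatedly taking tasks from the queue and running them, while handling a number of edge cases.

Note the two methods called around task.run(): beforeExecute(wt, task) and afterExecute(task, thrown). Both are empty in ThreadPoolExecutor, so a custom pool can override them to extend its behaviour.

We have not yet seen any interaction with the work queue. Combined with the earlier analysis of execute, though, the picture is already taking shape. A thread does not end after its task completes. It keeps fetching waiting tasks from the queue through while (task != null || (task = getTask()) != null). The first condition covers the worker's own first task, and the second fetches tasks from the queue.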
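The hooks and the task loop can be observed together. In the sketch below, a hypothetical CountingPool subclass overrides beforeExecute and afterExecute to count calls and record the worker thread's name. On a single-thread pool, the same thread runs all five tasks through the while loop.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class HookedPoolDemo {
    // Hypothetical subclass overriding the two empty hooks that runWorker calls.
    static final class CountingPool extends ThreadPoolExecutor {
        final AtomicInteger before = new AtomicInteger();
        final AtomicInteger after = new AtomicInteger();
        final Set<String> threads = ConcurrentHashMap.newKeySet();

        CountingPool() {
            super(1, 1, 0, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());
        }

        @Override
        protected void beforeExecute(Thread t, Runnable r) {
            before.incrementAndGet();
            threads.add(t.getName()); // t is the worker thread about to run r
        }

        @Override
        protected void afterExecute(Runnable r, Throwable t) {
            after.incrementAndGet(); // t is non-null only if the task threw
        }
    }

    // Returns {beforeExecute calls, afterExecute calls, distinct worker threads}.
    static int[] runTasks(int n) {
        CountingPool pool = new CountingPool();
        for (int i = 0; i < n; i++) pool.execute(() -> { });
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS); // waits for every worker to exit
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] {pool.before.get(), pool.after.get(), pool.threads.size()};
    }

    public static void main(String[] args) {
        int[] r = runTasks(5);
        System.out.println(r[0] + " " + r[1] + " " + r[2]); // 5 5 1
    }
}
```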

7. getTask

```java
/**
 * Performs blocking or timed wait for a task, depending on
 * current configuration settings, or returns null if this worker
 * must exit because of any of:
 * 1. There are more than maximumPoolSize workers (due to
 *    a call to setMaximumPoolSize).
 * 2. The pool is stopped.
 * 3. The pool is shutdown and the queue is empty.
 * 4. This worker timed out waiting for a task, and timed-out
 *    workers are subject to termination (that is,
 *    {@code allowCoreThreadTimeOut || workerCount > corePoolSize})
 *    both before and after the timed wait, and if the queue is
 *    non-empty, this worker is not the last thread in the pool.
 *
 * @return task, or null if the worker must exit, in which case
 *         workerCount is decremented
 */
private Runnable getTask() {
    boolean timedOut = false; // Did the last poll() time out?

    for (;;) {
        int c = ctl.get();
        int rs = runStateOf(c);

        // Check if queue empty only if necessary.
        if (rs >= SHUTDOWN && (rs >= STOP || workQueue.isEmpty())) {
            decrementWorkerCount();
            return null;
        }

        int wc = workerCountOf(c);

        // Are workers subject to culling?
        boolean timed = allowCoreThreadTimeOut || wc > corePoolSize;

        if ((wc > maximumPoolSize || (timed && timedOut))
            && (wc > 1 || workQueue.isEmpty())) {
            if (compareAndDecrementWorkerCount(c))
                return null;
            continue;
        }

        try {
            Runnable r = timed ?
                workQueue.poll(keepAliveTime, TimeUnit.NANOSECONDS) :
                workQueue.take();
            if (r != null)
                return r;
            timedOut = true;
        } catch (InterruptedException retry) {
            timedOut = false;
        }
    }
}
```

One line here is key:

```java
// Are workers subject to culling?
boolean timed = allowCoreThreadTimeOut || wc > corePoolSize;
```

The value of timed directly decides how the work queue is read: with poll or with take. poll waits at most a given time for a task, whereas take blocks until one is available. Here is LinkedBlockingQueue's poll:

```java
public E poll(long timeout, TimeUnit unit) throws InterruptedException {
    E x = null;
    int c = -1;
    long nanos = unit.toNanos(timeout);
    final AtomicInteger count = this.count;
    final ReentrantLock takeLock = this.takeLock;
    takeLock.lockInterruptibly();
    try {
        while (count.get() == 0) {
            if (nanos <= 0)
                return null;
            nanos = notEmpty.awaitNanos(nanos);
        }
        x = dequeue();
        c = count.getAndDecrement();
        if (c > 1)
            notEmpty.signal();
    } finally {
        takeLock.unlock();
    }
    if (c == capacity)
        signalNotFull();
    return x;
}
```

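Note the nanos = notEmpty.awaitNanos(nanos); inside the while loop. Together with the definition of timed, it explains the lifecycle of a worker thread. When allowCoreThreadTimeOut || wc > corePoolSize is true, the worker takes from workQueue with a timeout equal to the constructor's keepAliveTime. If no task arrives within that time, poll returns null and timedOut is set. On the next iteration getTask decrements workerCount and returns null, unless this worker is the last one and the queue still holds tasks. That ends runWorker's while loop, and the thread exits.

By the way, allowCoreThreadTimeOut defaults to false. It can be changed with ThreadPoolExecutor's allowCoreThreadTimeOut(boolean) method, and enabling it requires keepAliveTime to be greater than zero.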
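The effect of timed can be observed on a one-thread pool. By default the core thread survives an idle period. Once allowCoreThreadTimeOut(true) is set, it exits after keepAliveTime. The sketch uses hypothetical names. The 100 ms keep-alive and the sleep durations are arbitrary, so the result depends on thread scheduling.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class KeepAliveDemo {
    // Runs one task on a 1-thread pool with a 100 ms keepAliveTime, waits `waitMs`,
    // and returns the pool size afterwards.
    static int poolSizeAfterIdle(boolean allowCoreTimeout, long waitMs) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 1, 100, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<Runnable>());
        pool.allowCoreThreadTimeOut(allowCoreTimeout); // true requires keepAliveTime > 0
        pool.execute(() -> { });
        try {
            Thread.sleep(waitMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        int size = pool.getPoolSize();
        pool.shutdown();
        return size;
    }

    public static void main(String[] args) {
        // Default: timed == false for the core thread, so getTask() blocks in take()
        System.out.println(poolSizeAfterIdle(false, 1000)); // 1
        // allowCoreThreadTimeOut: poll(keepAliveTime) returns null and the worker exits
        System.out.println(poolSizeAfterIdle(true, 1000));  // expected 0 once keep-alive has elapsed
    }
}
```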

8. execute (continued)

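At this point we have more or less worked through the first and most important step of execute. The rest of execute is much simpler. For convenience, here is execute again (scroll up for the source comments):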

```java
public void execute(Runnable command) {
    int c = ctl.get();
    if (workerCountOf(c) < corePoolSize) {
        if (addWorker(command, true))
            return;
        c = ctl.get();
    }
    if (isRunning(c) && workQueue.offer(command)) {
        int recheck = ctl.get();
        if (! isRunning(recheck) && remove(command))
            reject(command);
        else if (workerCountOf(recheck) == 0)
            addWorker(null, false);
    }
    else if (!addWorker(command, false))
        reject(command);
}
```

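If workerCount has already reached corePoolSize, execution moves on to the offer call. offer's return value says whether the task was enqueued, which depends on whether workQueue is full. However, the pools returned by Executors.newFixedThreadPool and newSingleThreadExecutor both use new LinkedBlockingQueue (newCachedThreadPool uses a SynchronousQueue instead). Here are LinkedBlockingQueue's constructors: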

```java
/**
 * Creates a {@code LinkedBlockingQueue} with a capacity of
 * {@link Integer#MAX_VALUE}.
 */
public LinkedBlockingQueue() {
    this(Integer.MAX_VALUE);
}

/**
 * Creates a {@code LinkedBlockingQueue} with the given (fixed) capacity.
 *
 * @param capacity the capacity of this queue
 * @throws IllegalArgumentException if {@code capacity} is not greater
 *         than zero
 */
public LinkedBlockingQueue(int capacity) {
    if (capacity <= 0) throw new IllegalArgumentException();
    this.capacity = capacity;
    last = head = new Node<E>(null);
}
```

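The default capacity is Integer.MAX_VALUE, i.e. (2^31) - 1, so this default queue is practically never full. As a consequence, such a pool never grows beyond corePoolSize, and queued tasks can pile up until memory runs out. This is why the Alibaba Java coding guidelines recommend creating pools by passing the constructor parameters explicitly, which also forces a deeper understanding of each parameter.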
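The consequence of the unbounded default queue shows up quickly. In the sketch below, 1000 blocked tasks are accepted without any rejection, while the pool stays at two threads and the queue keeps growing. The names are hypothetical, and the cast to ThreadPoolExecutor relies on the OpenJDK implementation of newFixedThreadPool.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;

public class UnboundedQueueDemo {
    // Returns {initial remaining queue capacity, pool size, queue size}
    // after `tasks` blocked tasks were submitted to newFixedThreadPool(2).
    static int[] flood(int tasks) {
        // The cast relies on OpenJDK returning a ThreadPoolExecutor here.
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newFixedThreadPool(2);
        int initialCapacity = pool.getQueue().remainingCapacity();
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try { release.await(); } catch (InterruptedException e) { Thread.currentThread().interrupt(); }
            });
        }
        // Nothing is rejected and there are never more than 2 threads: the queue just grows
        int[] result = {initialCapacity, pool.getPoolSize(), pool.getQueue().size()};
        release.countDown();
        pool.shutdown();
        return result;
    }

    public static void main(String[] args) {
        int[] r = flood(1000);
        System.out.println(r[0] == Integer.MAX_VALUE);              // true
        System.out.println(r[1] + " threads, " + r[2] + " queued"); // 2 threads, 998 queued
    }
}
```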

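Back to the execute flow above: after enqueuing, execute performs a recheck, and both of its conditions are necessary. The first checks the pool state; if the pool is no longer running, the task is removed from the queue and rejected. The second checks whether the worker count is zero; if so, addWorker(null, false) starts a worker without a first task, so the queued task is not left with no thread to run it.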
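The second recheck condition matters most when corePoolSize is 0. In that case step 1 never applies and the unbounded queue never fills, so without the recheck no worker would ever start. Because workerCountOf(recheck) == 0 triggers addWorker(null, false), the queued task still runs. The sketch below uses hypothetical names.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class ZeroCoreRecheckDemo {
    // With corePoolSize = 0, execute skips step 1 and just queues the task; the
    // recheck sees zero workers and calls addWorker(null, false), which then
    // picks the task up from the queue via getTask().
    static boolean taskRuns() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(0, 1, 1, TimeUnit.SECONDS,
                new LinkedBlockingQueue<Runnable>());
        CountDownLatch ran = new CountDownLatch(1);
        pool.execute(ran::countDown);
        try {
            return ran.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(taskRuns()); // true
    }
}
```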

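Finally, if the queue really is full, addWorker is called again, this time with false as the second argument. This argument decides whether corePoolSize or maximumPoolSize bounds workerCount. If this call also fails, the task is rejected. To finish, here is ThreadPoolExecutor's default handler: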

```java
/**
 * A handler for rejected tasks that throws a
 * {@code RejectedExecutionException}.
 */
public static class AbortPolicy implements RejectedExecutionHandler {
    /**
     * Creates an {@code AbortPolicy}.
     */
    public AbortPolicy() { }

    /**
     * Always throws RejectedExecutionException.
     *
     * @param r the runnable task requested to be executed
     * @param e the executor attempting to execute this task
     * @throws RejectedExecutionException always
     */
    public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {
        throw new RejectedExecutionException("Task " + r.toString() +
                                             " rejected from " +
                                             e.toString());
    }
}
```

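By default a RejectedExecutionException is thrown. We can also implement the RejectedExecutionHandler interface to customize what happens on rejection. Besides AbortPolicy, ThreadPoolExecutor also ships CallerRunsPolicy, DiscardPolicy and DiscardOldestPolicy.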
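The sketch below shows a minimal custom policy that records rejected tasks instead of throwing. The names are hypothetical. The pool has one thread and a one-slot queue, and both are occupied by blocking tasks, so every further execute call goes to the handler.

```java
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class CustomRejectDemo {
    // Saturates a 1-thread pool with a 1-slot queue, then executes `extra` more tasks;
    // returns how many tasks the custom handler received.
    static int rejectedCount(int extra) {
        List<Runnable> rejected = new CopyOnWriteArrayList<>();
        // Hypothetical policy: record the task instead of throwing (e.g. to log or retry later)
        RejectedExecutionHandler recordAndDrop = (r, executor) -> rejected.add(r);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 1, 0, TimeUnit.SECONDS,
                new ArrayBlockingQueue<Runnable>(1), recordAndDrop);
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try { release.await(); } catch (InterruptedException e) { Thread.currentThread().interrupt(); }
        };
        pool.execute(blocker); // occupies the only thread
        pool.execute(blocker); // fills the queue
        for (int i = 0; i < extra; i++) pool.execute(() -> { }); // all rejected, no exception
        release.countDown();
        pool.shutdown();
        return rejected.size();
    }

    public static void main(String[] args) {
        System.out.println(rejectedCount(3)); // 3
    }
}
```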

IV. Closing Remarks

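I had wanted to write a source analysis of thread pools for a long time, but for various reasons the draft sat half-finished for ages. Work has been lighter recently. As I started digging into Java's internals again, I found myself genuinely admiring the depth and foresight of its designers; what I have gathered is only a glimpse of the whole.

The article follows exactly the order in which I read the source, so even if you read it in one sitting there should be no big leaps in reasoning. In the spirit of getting to the bottom of things, I have pasted the relevant source as completely as possible, without dropping comments just because they are long. It is precisely these source comments that I find most helpful for understanding the code.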