Deploying a multi-master Kubernetes (k8s) cluster

Contents

- k8s multi-master cluster

- Configuring the deployment environment

- Deploying the load balancers

- Deploying keepalived

- Additional k8s notes

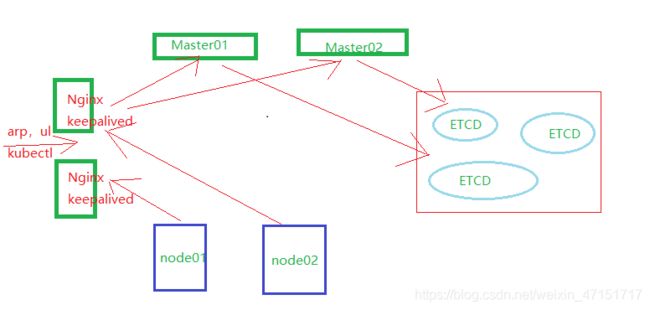

| Hostname | IP address | Components |
|---|---|---|
| Master01 | 192.168.136.88 | kube-apiserver kube-controller-manager kube-scheduler etcd |
| Master02 | 192.168.136.60 | kube-apiserver kube-controller-manager kube-scheduler |
| Node01 | 192.168.136.40 | kubelet kube-proxy docker flannel etcd |
| Node02 | 192.168.136.30 | kubelet kube-proxy docker flannel etcd |
| nginx01 | 192.168.136.10 | nginx, keepalived |
| nginx02 | 192.168.136.20 | nginx, keepalived |

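k8s multi-master cluster

Configuring the deployment environment

First disable the firewall and SELinux on every host.

On master01

Copy the kubernetes directory to master02: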

[root@localhost ~]# scp -r /opt/kubernetes/ root@192.168.136.60:/opt

Copy the systemd unit files for the three master components to master02:

scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.136.60:/usr/lib/systemd/system/

master02 also needs the etcd certificates that already exist on master01.

Important: master02 must have the etcd certificates, otherwise its kube-apiserver cannot connect to etcd.

[root@localhost ~]# scp -r /opt/etcd/ root@192.168.136.60:/opt/
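Because master02 depends on these certificates, it can be worth confirming that the copy succeeded before continuing. The following is a minimal sketch, not part of the original procedure: `has_pem_files` is a hypothetical helper, and the `/opt/etcd/ssl` path in the usage comment is an assumption (the steps above only say that `/opt/etcd/` is copied).

```shell
#!/bin/sh
# has_pem_files DIR: succeed if DIR exists and contains at least one *.pem file.
# Hypothetical helper; the directory you pass in depends on where your
# etcd certificates actually live.
has_pem_files() {
    dir=$1
    [ -d "$dir" ] || return 1
    for f in "$dir"/*.pem; do
        # If the glob matched nothing, $f is the literal pattern and -f fails.
        if [ -f "$f" ]; then
            return 0
        fi
    done
    return 1
}

# Usage on master02 (the path is an assumption):
#   has_pem_files /opt/etcd/ssl && echo "etcd certificates present"
```

The check only looks for the presence of `.pem` files; it does not validate the certificates themselves.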

On master02

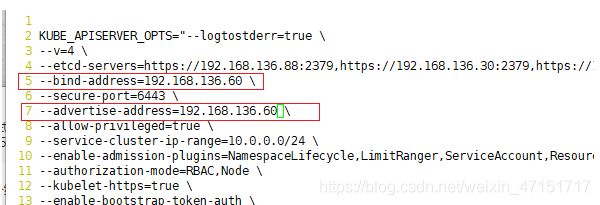

Edit the configuration file so that the API server binds to and advertises master02's own address:

cd /opt/kubernetes/cfg/

[root@master2 cfg]# vim kube-apiserver

--bind-address=192.168.136.60 \

--secure-port=6443 \

--advertise-address=192.168.136.60 \
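The same two-flag change can be scripted instead of made in vim. This is a sketch under the assumption that the flags appear in the file as `--bind-address=<ip>` and `--advertise-address=<ip>`, as in the excerpt above; `set_apiserver_ip` is a hypothetical helper name.

```shell
#!/bin/sh
# set_apiserver_ip FILE IP: point --bind-address and --advertise-address
# in FILE at IP, leaving every other flag untouched.
set_apiserver_ip() {
    file=$1
    ip=$2
    tmp=$(mktemp) || return 1
    # Write the edited copy to a temp file first, then overwrite the original
    # in place so its ownership and permissions are preserved.
    sed -e "s/--bind-address=[0-9.]*/--bind-address=$ip/" \
        -e "s/--advertise-address=[0-9.]*/--advertise-address=$ip/" \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return $status
}

# Usage on master02:
#   set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver 192.168.136.60
```

Other flags that contain master01's address, such as the etcd endpoints, are deliberately left alone because they should still point at the existing etcd members.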

Start the three master component services on master02:

[root@localhost cfg]# systemctl start kube-apiserver.service

[root@localhost cfg]# systemctl start kube-controller-manager.service

[root@localhost cfg]# systemctl start kube-scheduler.service
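The three commands can also be written as a loop. The sketch below additionally runs `systemctl enable` so that the services come back after a reboot; that step is my addition and is not in the original procedure. `start_master_services` and the `SYSTEMCTL` override are hypothetical names used only for illustration.

```shell
#!/bin/sh
# Command used to manage services. Set SYSTEMCTL=echo for a dry run that
# only prints what would be executed.
SYSTEMCTL=${SYSTEMCTL:-systemctl}

# start_master_services: start and enable the three master components.
start_master_services() {
    for svc in kube-apiserver kube-controller-manager kube-scheduler; do
        "$SYSTEMCTL" start "$svc.service" || return 1
        # Assumption: you also want the service started at boot.
        "$SYSTEMCTL" enable "$svc.service" || return 1
    done
}

# Usage on master02:
#   start_master_services
```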

Add the Kubernetes binaries to PATH:

[root@localhost cfg]# vim /etc/profile

# append at the end of the file

export PATH=$PATH:/opt/kubernetes/bin/

[root@master02 cfg]# source /etc/profile

Check that master02 can query the cluster:

[root@master02 cfg]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.136.30 Ready <none> 24h v1.12.3

192.168.136.40 Ready <none> 25h v1.12.3
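For a quick automated check, the output can be filtered for nodes that are not ready. A minimal sketch, assuming the default column layout shown above (NAME first, STATUS second); `not_ready_nodes` is a hypothetical helper.

```shell
#!/bin/sh
# not_ready_nodes: read `kubectl get node` output on stdin and print the NAME
# of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    # NR > 1 skips the header line.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get node | not_ready_nodes
```

An empty result means every listed node reports `Ready`. Compound statuses such as `Ready,SchedulingDisabled` are reported too, since they are not an exact match.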

Deploying the load balancers

Perform these steps on lb01 (nginx01) and lb02 (nginx02).

Load balancer addresses:

192.168.136.10

192.168.136.20

[root@localhost ~]# yum clean all

[root@localhost ~]# yum list

systemctl stop NetworkManager

systemctl disable NetworkManager

On lb01 and lb02, create the nginx yum repository:

[root@localhost ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

Install nginx:

[root@localhost ~]# yum install nginx -y

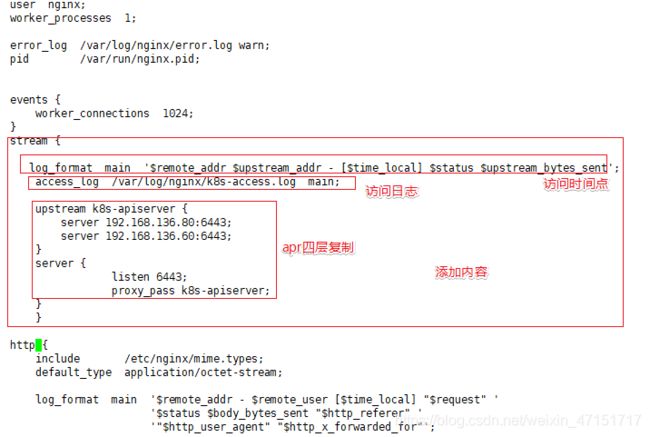

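Add layer-4 forwarding

Layer-4 load balancing works on IP address and port: the load balancer accepts connections on a virtual IP and port, then forwards each one to a real server.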
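By default nginx spreads connections across the servers of an `upstream` block in round-robin order. The toy model below illustrates only that selection step; it ignores weights, failed backends and retries, and `pick_backend` is a hypothetical function, not anything nginx provides.

```shell
#!/bin/sh
# pick_backend N BACKEND...: print the backend that the N-th connection
# (counting from 0) would be sent to under plain round-robin.
pick_backend() {
    n=$1
    shift
    [ "$#" -gt 0 ] || return 1
    # Skip (N mod number-of-backends) entries, then print the next one.
    skip=$(( n % $# ))
    while [ "$skip" -gt 0 ]; do
        shift
        skip=$(( skip - 1 ))
    done
    printf '%s\n' "$1"
}

# Usage with two API servers (addresses are placeholders):
#   pick_backend 0 master01:6443 master02:6443   # first backend
#   pick_backend 1 master01:6443 master02:6443   # second backend
#   pick_backend 2 master01:6443 master02:6443   # wraps around to the first
```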

[root@localhost ~]# vim /etc/nginx/nginx.conf

events {

worker_connections 1024;

}

----------------------------------- add the following block

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.136.80:6443;

server 192.168.136.60:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

-----------------------------------------

http {

Start the service and check the listening ports:

[root@localhost ~]# systemctl start nginx

[root@localhost ~]# netstat -ntap | grep nginx

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 67128/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 67128/nginx: master
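The same check can be scripted by parsing the netstat output. A minimal sketch, assuming the column layout shown above (local address in column 4, state in column 6); `port_is_listening` is a hypothetical helper.

```shell
#!/bin/sh
# port_is_listening PORT: read `netstat -ntap` output on stdin and succeed
# if some socket is in LISTEN state on PORT.
port_is_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" {
            n = split($4, parts, ":")   # local address, e.g. 0.0.0.0:6443
            if (parts[n] == port) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}

# Usage on lb01 or lb02:
#   netstat -ntap | port_is_listening 6443 && echo "nginx is proxying 6443"
```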

Deploying keepalived

Install keepalived on lb01 and lb02:

[root@localhost ~]# yum install keepalived -y

Edit the configuration file on lb01 (the MASTER)

Delete the original contents of the file and paste in the following:

[root@localhost ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

[email protected]

[email protected]

[email protected]

}

# Sender address for notifications

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

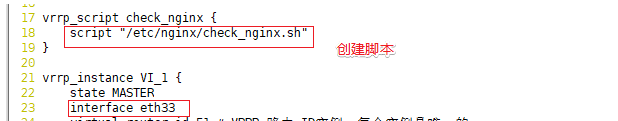

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51 # VRRP router ID; unique for each instance

priority 100 # priority; set to 90 on the backup server

advert_int 1 # VRRP advertisement (heartbeat) interval; the default is 1 second

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.136.100/24

}

track_script {

check_nginx

}

}

Edit the configuration file on lb02 (the BACKUP)

Delete the original contents of the file and paste in the following:

[root@localhost ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

[email protected]

[email protected]

[email protected]

}

# Sender address for notifications

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51 # VRRP router ID; unique for each instance

priority 90 # priority; lower than the master's 100

advert_int 1 # VRRP advertisement (heartbeat) interval; the default is 1 second

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.136.100/24

}

track_script {

check_nginx

}

}
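The key differences between the two files are `state` and `priority`. In VRRP the live router with the highest priority holds the virtual IP, so lb01 (priority 100) owns 192.168.136.100 until it stops advertising, at which point lb02 (priority 90) takes over. The toy model below illustrates only that rule; it leaves out advertisement timers and preemption, and `elect_master` is a hypothetical function.

```shell
#!/bin/sh
# elect_master: read lines of "NAME PRIORITY STATE" on stdin, where STATE is
# "up" or "down", and print the NAME of the live router with the highest
# priority. Prints nothing if no router is up.
elect_master() {
    awk '$3 == "up" && (best == "" || $2 + 0 > max) { best = $1; max = $2 + 0 }
         END { if (best != "") print best }'
}

# Usage:
#   printf 'lb01 100 up\nlb02 90 up\n'   | elect_master   # lb01 holds the VIP
#   printf 'lb01 100 down\nlb02 90 up\n' | elect_master   # lb02 takes over
```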

Create the nginx check script on lb01 and lb02 (so that keepalived stops whenever nginx stops):

[root@localhost ~]# vim /etc/nginx/check_nginx.sh

#!/bin/bash

# Count nginx processes, excluding grep itself and this script's own PID ($$)

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

Make the script executable:

[root@localhost ~]# chmod +x /etc/nginx/check_nginx.sh
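The counting pipeline in the script is easier to reason about when it is run against captured `ps -ef` output. The sketch below wraps the same two filters in a hypothetical `count_nginx` function that takes the PID to exclude as an argument. One caveat: the PID is matched as a plain substring, so a line that merely contains the same digits elsewhere is excluded too.

```shell
#!/bin/sh
# count_nginx SELF_PID: read `ps -ef` output on stdin and print how many
# lines mention nginx, ignoring grep itself and any line containing SELF_PID
# (the check script's own process, whose path includes "nginx").
count_nginx() {
    grep nginx | grep -Ecv "grep|$1"
}

# Usage inside a script:
#   ps -ef | count_nginx "$$"
```

A result of 0 is what makes the check script stop keepalived, which in turn releases the virtual IP to the other load balancer.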

Start the service:

[root@localhost ~]# systemctl start keepalived

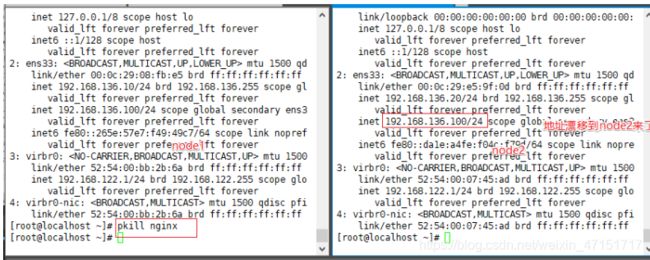

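Check the address information on lb01 with ip a; the VIP 192.168.136.100 should be bound there.

Verifying VIP failover

Run pkill nginx on lb01, then run ip a on lb02 to confirm that the VIP has moved.

To recover, start nginx on lb01 first, then start keepalived.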
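To avoid reading the `ip a` output by eye during the failover test, the VIP check can be scripted. A minimal sketch, assuming the usual `inet <address>/<prefix>` lines that `ip a` prints; `holds_vip` is a hypothetical helper.

```shell
#!/bin/sh
# holds_vip VIP: read `ip a` output on stdin and succeed if VIP is assigned
# to some interface. Matching "inet VIP/" keeps 192.168.136.10 from being
# mistaken for 192.168.136.100.
holds_vip() {
    grep -Fq "inet $1/"
}

# Usage on lb01 or lb02:
#   ip a | holds_vip 192.168.136.100 && echo "this host holds the VIP"
```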

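Pointing the node configuration files at the VIP

Note: this is done on the worker nodes listed below, not on the load balancers, and both nodes must be changed.

Worker nodes: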

192.168.136.40

192.168.136.30

[root@localhost ~]# vim /opt/kubernetes/cfg/bootstrap.kubeconfig

[root@localhost ~]# vim /opt/kubernetes/cfg/kubelet.kubeconfig

[root@localhost ~]# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

Change the server entry in all three files to the VIP:

server: https://192.168.136.100:6443

Restart the services:

[root@localhost cfg]# systemctl restart kubelet.service

[root@localhost cfg]# systemctl restart kube-proxy.service

Once the replacement is done, verify it:

[root@localhost cfg]# grep 100 *

bootstrap.kubeconfig: server: https://192.168.136.100:6443

kubelet.kubeconfig: server: https://192.168.136.100:6443

kube-proxy.kubeconfig: server: https://192.168.136.100:6443
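Editing three files on two nodes by hand is error-prone, so the replacement can also be scripted. This is a sketch under the assumption that each kubeconfig contains a line of the form `server: https://<address>:<port>`, as the grep output above suggests; `set_kubeconfig_server` is a hypothetical helper.

```shell
#!/bin/sh
# set_kubeconfig_server FILE URL: rewrite every "server: https://..." line in
# FILE so that it points at URL, preserving the line's indentation.
set_kubeconfig_server() {
    file=$1
    url=$2
    tmp=$(mktemp) || return 1
    sed "s|^\([[:space:]]*server:\) https://.*|\1 $url|" "$file" > "$tmp" \
        && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return $status
}

# Usage on each worker node:
#   for f in bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig; do
#       set_kubeconfig_server "/opt/kubernetes/cfg/$f" https://192.168.136.100:6443
#   done
```

kubelet and kube-proxy still need to be restarted afterwards for the new address to take effect.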

View the nginx k8s access log on lb01:

[root@localhost ~]# tail /var/log/nginx/k8s-access.log

192.168.136.40 192.168.136.60:6443 - [05/Oct/2020:23:23:59 +0800] 200 1121

192.168.136.30 192.168.136.80:6443, 192.168.136.60:6443 - [05/Oct/2020:23:24:00 +0800] 200 0, 1119

192.168.136.40 192.168.136.80:6443, 192.168.136.60:6443 - [05/Oct/2020:23:24:00 +0800] 200 0, 1119

192.168.136.30 192.168.136.80:6443, 192.168.136.60:6443 - [05/Oct/2020:23:24:00 +0800] 200 0, 1121
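In this log format the second field is `$upstream_addr`. When nginx tries more than one upstream for a connection, it lists every address it tried, separated by commas, and the last field lists the bytes sent for each attempt. The entries above that show `192.168.136.80:6443, 192.168.136.60:6443` with `0, 1119` therefore suggest that the first upstream returned nothing and the second one served the request. If 192.168.136.80 is not your master01 (the host table lists 192.168.136.88), the upstream entry in nginx.conf is worth double-checking. The sketch below counts connections by the upstream that finally handled them; `served_by_upstream` is a hypothetical helper.

```shell
#!/bin/sh
# served_by_upstream: read k8s-access.log lines on stdin (format:
# $remote_addr $upstream_addr - [$time_local] ...) and print
# "UPSTREAM COUNT" for the upstream that finally handled each connection.
served_by_upstream() {
    awk '{
        line = $0
        sub(/^[^ ]+ /, "", line)      # drop $remote_addr
        sub(/ - \[.*$/, "", line)     # keep only $upstream_addr
        n = split(line, tried, ", ")  # every upstream nginx tried, in order
        count[tried[n]]++             # the last one handled the connection
    }
    END { for (u in count) print u, count[u] }' | sort
}

# Usage on lb01:
#   served_by_upstream < /var/log/nginx/k8s-access.log
```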

On master01

Create a test pod:

kubectl run nginx --image=nginx

Check its status:

[root@localhost ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-nf9sk 0/1 ContainerCreating 0 33s // still being created

[root@localhost ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-nf9sk 1/1 Running 0 80s // created and running

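Additional k8s notes

A common problem when viewing logs

The logs cannot be read because the request is made as the anonymous user: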

[root@localhost ~]# kubectl logs nginx-dbddb74b8-c8gjh

Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-dbddb74b8-c8gjh)

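Solution:

Grant administrator privileges to the anonymous user (acceptable only in a test environment, because it gives every unauthenticated request full control of the cluster):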

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

The logs are now visible:

[root@localhost ~]# kubectl logs nginx-dbddb74b8-c8gjh

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

View the pod network (run on the master):

[root@localhost ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-c8gjh 1/1 Running 0 8m57s 172.17.54.2 192.168.136.40 <none>

The pod can be reached directly from a node on the corresponding network segment:

[root@localhost cfg]# curl 172.17.54.2

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

Each request produces a log entry in the pod:

[root@localhost ~]# kubectl logs nginx-dbddb74b8-c8gjh

172.17.54.1 - - [05/Oct/2020:15:39:24 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
