RK3399pro: Using TNN, Diary Part 2 (Linux)


- I. Debugging the TNN demo


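- 1. Download links

- 2. Building TNN

- 3. Grabbing images from the camera and running detection

- II. Converting a torch model to TNN

- For now the pipeline works end to end; further model-training work is needed, so I'll stop here for the moment.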

I. Debugging the TNN demo

1. Download links

TNN model download links: model

CSDN download link

Baidu Netdisk download link

Extraction code: a904

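2. Building TNN

The quick and simple way:

(1) Go to TNN_PATH/scripts/

(2) Run in a terminal:

./build_aarch64_linux.sh

This normally works without problems, and the library is then built.

(3) Go to TNN_PATH/examples/linux and run ./build_aarch64_linux.sh in a terminal.

This compiles the examples automatically. In the build directory it creates, the executable "demo_arm_linux_facedetector" has been generated.

(4) Reading the demo code shows that the model files have to be passed as arguments. You can get them by running "download_model.sh" in TNN_PATH/model (they can also be downloaded from the links at the start of this article).

(5) Once the models are downloaded, pass their paths to the demo when you run it; if it runs, the environment is working.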
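Steps (4) and (5) boil down to reading the command line and loading the two model files into memory. The sketch below shows that in plain C++, assuming the argument order proto path, model path, then optional height and width. The optional height/width handling matches the modified face_detector.cc in section 3; the order of the first two arguments is my assumption. DemoArgs, ParseDemoArgs and LoadFileToString are hypothetical names; the demo itself uses its own fdLoadFile helper, whose code may differ.

```cpp
#include <cassert>
#include <cstdlib>
#include <fstream>
#include <sstream>
#include <string>

// Hypothetical container for the demo's command-line arguments.
// Assumed order: argv[1] = .tnnproto, argv[2] = .tnnmodel,
// optional argv[3] = input height, argv[4] = input width.
struct DemoArgs {
    std::string proto_path;
    std::string model_path;
    int height = 240;  // defaults match the modified face_detector.cc
    int width = 320;
};

// Fills `out`; returns false if the model paths are missing or the size is invalid.
bool ParseDemoArgs(int argc, const char* const* argv, DemoArgs& out) {
    if (argc < 3) return false;
    out.proto_path = argv[1];
    out.model_path = argv[2];
    if (argc >= 5) {
        out.height = std::atoi(argv[3]);
        out.width = std::atoi(argv[4]);
    }
    return out.height > 0 && out.width > 0;
}

// Reads a whole file (binary-safe) into a string; returns "" on failure.
// Similar in spirit to the demo's fdLoadFile, not its actual code.
std::string LoadFileToString(const std::string& path) {
    std::ifstream file(path, std::ios::binary);
    if (!file) return {};
    std::ostringstream buffer;
    buffer << file.rdbuf();
    return buffer.str();
}
```

For example, `./demo_arm_linux_facedetector version-slim-320_simplified.tnnproto version-slim-320_simplified.tnnmodel 240 320` would parse to height 240 and width 320, and an empty string from LoadFileToString points to a wrong path rather than a TNN problem.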

3.从摄像头获取数据图像并检测

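A suggestion: it is best to install Qt 5 (Qt Creator) or VS Code on a PC and test the code there. TNN and the demo are built on a PC the same way as on ARM; just pick the matching build_*.sh script.

Now for the real work:

(1) First modify CMakeLists.txt as shown below to configure a few parameters. You can then open it as a CMake project in Qt Creator for easier debugging.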

```cmake
cmake_minimum_required(VERSION 3.1)

# Compile with clang; this is optional, g++ works fine too.
# Compiler variables only take effect if set before project().
set(CMAKE_C_COMPILER "/usr/bin/clang")     # use an absolute path
set(CMAKE_CXX_COMPILER "/usr/bin/clang++") # use an absolute path

# CMAKE_SYSTEM_NAME / CMAKE_SYSTEM_PROCESSOR are cross-compiling (toolchain) settings;
# they are not needed when building natively on the RK3399pro.
# set(CMAKE_SYSTEM_NAME Linux)
# set(CMAKE_SYSTEM_PROCESSOR aarch64)

project(TNN-demo)

message(${CMAKE_SOURCE_DIR})
#message(${TNN_LIB_PATH})

set(TNN_LIB_PATH "/home/firefly/TNN/TNN-master/scripts/build_aarch64_linux")
set(TNN_OPENMP_ENABLE ON)
set(TNN_ARM_ENABLE ON)
set(CMAKE_CXX_STANDARD 11)

set(CMAKE_C_FLAGS "-Wall -std=c99")
set(CMAKE_C_FLAGS_DEBUG "-g")
set(CMAKE_C_FLAGS_MINSIZEREL "-Os -DNDEBUG")
set(CMAKE_C_FLAGS_RELEASE "-O3 -DNDEBUG")   # -O4 is treated as -O3 anyway
set(CMAKE_C_FLAGS_RELWITHDEBINFO "-O2 -g")

set(CMAKE_CXX_FLAGS "-Wall")
set(CMAKE_CXX_FLAGS_DEBUG "-g")
set(CMAKE_CXX_FLAGS_MINSIZEREL "-Os -DNDEBUG")
set(CMAKE_CXX_FLAGS_RELEASE "-O3 -DNDEBUG")
set(CMAKE_CXX_FLAGS_RELWITHDEBINFO "-O2 -g")

# Common flags (-std=c++11 belongs to the C++ flags only, not the C flags)
set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -O3 -fPIC")
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -O3 -std=c++11 -pthread -fPIC")

# Paths
set(TNNRoot ${CMAKE_CURRENT_SOURCE_DIR}/../../)
set(TNNInclude ${TNNRoot}/include)
include_directories(${TNNInclude})
include_directories(${TNNRoot}/third_party/stb)
include_directories(${CMAKE_SOURCE_DIR}/include)
include_directories(${CMAKE_SOURCE_DIR}/../base)
link_directories(${TNN_LIB_PATH})
link_libraries(-Wl,--whole-archive TNN -Wl,--no-whole-archive)

file(GLOB_RECURSE SRC "${CMAKE_SOURCE_DIR}/../base/*.cc")

find_package(OpenCV REQUIRED)
include_directories(${OpenCV_INCLUDE_DIRS})

# Link OpenMP
find_package(OpenMP REQUIRED)
if(OPENMP_FOUND)
    message("OPENMP FOUND")
    set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} ${OpenMP_C_FLAGS}")
    set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${OpenMP_CXX_FLAGS}")
    set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} ${OpenMP_EXE_LINKER_FLAGS}")
endif()

#add_executable(demo_arm_linux_imageclassify src/image_classify.cc ${SRC})
add_executable(demo_arm_linux_facedetector src/face_detector.cc ${SRC})

# TNN is already linked via link_libraries() above; TNN_LIB_PATH is a directory, not a library
target_link_libraries(demo_arm_linux_facedetector ${OpenCV_LIBS})
```
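A quick way to confirm that the OpenMP flags from this CMakeLists.txt actually reach the compiler is to compile a small function into the demo and print its result at startup. This sketch relies only on standard predefined macros: `_OPENMP` is defined (as a yyyymm date) when OpenMP is enabled at compile time, and GCC/Clang define `__aarch64__` when targeting 64-bit ARM. BuildInfo is a hypothetical helper, not part of TNN.

```cpp
#include <cassert>
#include <string>

// Reports whether OpenMP was enabled for this translation unit and
// whether the compiler is targeting AArch64.
std::string BuildInfo() {
    std::string info;
#ifdef _OPENMP
    info += "OpenMP enabled (_OPENMP=" + std::to_string(_OPENMP) + ")";
#else
    info += "OpenMP NOT enabled";
#endif
#if defined(__aarch64__)
    info += ", target: aarch64";
#else
    info += ", target: not aarch64";
#endif
    return info;
}
```

Printing `BuildInfo()` with `std::cout` at the top of `main` makes a missing `-fopenmp` visible immediately, instead of only showing up as slower inference.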

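(2) Modify the source files in TNN_PATH/examples/linux/src.

For example:

Modify the face detection example, face_detector.cc. I'll just post the code: I changed it bit by bit at the time and no longer remember exactly what I changed, so compare it with the original file to see the differences.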

```cpp
// Assumes the rest of the original face_detector.cc (its TNN/UltraFace headers,
// fdLoadFile and CHECK_TNN_STATUS) is kept unchanged.
#include <opencv2/opencv.hpp>
using namespace cv;
using namespace std;

int main(int argc, char** argv) {
    cv::TickMeter tm;
    tm.start();

    // Open the first camera (usually /dev/video0)
    VideoCapture capture;
    capture.open(0);
    if (!capture.isOpened())
        return -1;

    auto proto_content = fdLoadFile("/home/firefly/model/face_detector/version-slim-320_simplified.tnnproto");
    auto model_content = fdLoadFile("/home/firefly/model/face_detector/version-slim-320_simplified.tnnmodel");

    // Network input size; argv[3] (height) and argv[4] (width) override it
    int h = 240, w = 320;
    if (argc >= 5) {
        h = std::atoi(argv[3]);
        w = std::atoi(argv[4]);
    }

    auto option = std::make_shared<UltraFaceDetectorOption>();
    {
        option->proto_content = proto_content;
        option->model_content = model_content;
        option->library_path = "";
        option->compute_units = TNN_NS::TNNComputeUnitsGPU;
        option->input_width = w;
        option->input_height = h;
        option->score_threshold = 0.95;
        option->iou_threshold = 0.15;
    }

    auto predictor = std::make_shared<UltraFaceDetector>();
    std::vector<int> nchw = {1, 3, h, w};

    cout << "Start Init" << endl;
    // Init
    std::shared_ptr<TNNSDKOutput> sdk_output = predictor->CreateSDKOutput();
    CHECK_TNN_STATUS(predictor->Init(option));
    tm.stop();
    double time = tm.getTimeMilli();
    cout << "Init Time = " << time << "\n--------" << endl;

    cout << "Start Detection" << endl;
    while (true) {
        tm.reset();
        tm.start();

        cv::Mat frame;
        capture >> frame;
        if (frame.empty())  // camera disconnected or read failed
            break;

        // Resize to the network input size so it matches nchw (was hard-coded to 320x240)
        cv::resize(frame, frame, Size(w, h));

        // Predict: wrap the interleaved 8-bit, 3-channel OpenCV buffer in a TNN Mat
        auto image_mat = std::make_shared<TNN_NS::Mat>(TNN_NS::DEVICE_ARM, TNN_NS::N8UC3, nchw, frame.data);
        CHECK_TNN_STATUS(predictor->Predict(std::make_shared<UltraFaceDetectorInput>(image_mat), sdk_output));

        std::vector<FaceInfo> face_info;
        if (sdk_output && dynamic_cast<UltraFaceDetectorOutput *>(sdk_output.get())) {
            auto face_output = dynamic_cast<UltraFaceDetectorOutput *>(sdk_output.get());
            face_info = face_output->face_list;
        }

        // Draw the detected faces
        cv::Mat resImg = frame;
        for (size_t i = 0; i < face_info.size(); i++) {
            auto face = face_info[i];
            rectangle(resImg, Rect(face.x1, face.y1, face.x2 - face.x1, face.y2 - face.y1),
                      CV_RGB(0, 255, 0), 2);
        }

        tm.stop();
        time = tm.getTimeMilli();
        double fps = tm.getFPS();  // getFPS() returns a double
        cout << "Detection Time = " << time << endl;
        cout << "Detection FPS = " << fps << "\n--------" << endl;

        imshow("123", resImg);
        if (waitKey(30) == 27)  // press Esc to quit
            break;
    }
    return 0;
}
```
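The two thresholds set in the option block, score_threshold = 0.95 and iou_threshold = 0.15, are typical detector post-processing parameters. To illustrate what they usually control, here is a sketch of score filtering followed by greedy hard non-maximum suppression (NMS). I have not verified that TNN's UltraFaceDetector uses exactly this variant (it may, for example, blend overlapping boxes instead), and Box, IoU and FilterAndNms are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical box type mirroring the x1/y1/x2/y2 fields the demo reads from FaceInfo.
struct Box {
    float x1, y1, x2, y2;
    float score;
};

// Intersection over union of two axis-aligned boxes.
float IoU(const Box& a, const Box& b) {
    float iw = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    float ih = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    float inter = iw * ih;
    float area_a = (a.x2 - a.x1) * (a.y2 - a.y1);
    float area_b = (b.x2 - b.x1) * (b.y2 - b.y1);
    float uni = area_a + area_b - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Drops boxes scoring below score_threshold, then keeps boxes in descending
// score order, discarding any box whose IoU with an already kept box
// exceeds iou_threshold (greedy hard NMS).
std::vector<Box> FilterAndNms(std::vector<Box> boxes, float score_threshold, float iou_threshold) {
    boxes.erase(std::remove_if(boxes.begin(), boxes.end(),
                               [&](const Box& b) { return b.score < score_threshold; }),
                boxes.end());
    std::sort(boxes.begin(), boxes.end(),
              [](const Box& a, const Box& b) { return a.score > b.score; });
    std::vector<Box> kept;
    for (const Box& candidate : boxes) {
        bool suppressed = false;
        for (const Box& k : kept) {
            if (IoU(candidate, k) > iou_threshold) {
                suppressed = true;
                break;
            }
        }
        if (!suppressed) kept.push_back(candidate);
    }
    return kept;
}
```

Under this reading, iou_threshold = 0.15 is quite strict: any box overlapping a kept box by more than 0.15 IoU is dropped, and score_threshold = 0.95 discards all but very confident faces, so lowering it is the first knob to try if faces are missed.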

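(3) As for the code: the official example already wraps TNN in a helper class, so getting it to run is easy, but optimizing it is more work and requires reading the source. Qt Creator's code navigation helps you jump to the underlying calls and study them.

In this example, getting it to run is not the problem. Detection takes 30 ms to 80 ms per frame, which fluctuates a lot, and most of that time is spent in the call below; improving it means reading the source behind this call and then modifying it.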

```cpp
CHECK_TNN_STATUS(predictor->Predict(std::make_shared<UltraFaceDetectorInput>(image_mat), sdk_output));
```

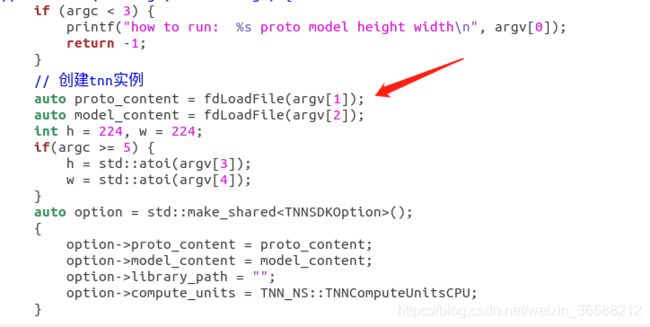
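To quantify the 30 ms to 80 ms spread and confirm that it comes from the Predict call, one option is to time that call on its own over many frames and summarize the samples. This is a stdlib-only sketch with hypothetical names (TimeMs, Summarize, LatencyStats); it uses `std::chrono::steady_clock` instead of `cv::TickMeter` only so that it compiles without OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// Summary of per-frame latencies in milliseconds.
struct LatencyStats {
    double min_ms = 0, max_ms = 0, mean_ms = 0, p90_ms = 0;
};

LatencyStats Summarize(std::vector<double> samples_ms) {
    LatencyStats s;
    if (samples_ms.empty()) return s;
    std::sort(samples_ms.begin(), samples_ms.end());
    s.min_ms = samples_ms.front();
    s.max_ms = samples_ms.back();
    double sum = 0;
    for (double v : samples_ms) sum += v;
    s.mean_ms = sum / samples_ms.size();
    // Nearest-rank 90th percentile: rank = ceil(0.9 * n), 1-based
    std::size_t rank = (samples_ms.size() * 90 + 99) / 100;
    s.p90_ms = samples_ms[rank - 1];
    return s;
}

// Times one call of fn with a monotonic clock and returns elapsed milliseconds.
template <typename Fn>
double TimeMs(Fn&& fn) {
    auto start = std::chrono::steady_clock::now();
    fn();
    auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(end - start).count();
}
```

Inside the detection loop this would be used roughly as `samples.push_back(TimeMs([&] { predictor->Predict(std::make_shared<UltraFaceDetectorInput>(image_mat), sdk_output); }));`, calling `Summarize(samples)` every few hundred frames. Discarding the first few samples may be worthwhile, since first runs often include one-off warm-up work; a large gap between mean and p90 then points to genuine jitter rather than start-up cost.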

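II. Converting a torch model to TNN

This part is fairly easy if you follow the official documentation. I made a demo that converts torchvision's resnet34 model and runs it, and gathered the required files; it still uses the official TNN wrapper code.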
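When running the converted resnet34, the input has to be preprocessed the way torchvision's ImageNet models expect: RGB scaled to [0, 1], then normalized with mean (0.485, 0.456, 0.406) and std (0.229, 0.224, 0.225). Assuming the exported ONNX graph does not already contain this normalization, it can be folded into one per-channel multiply-add on raw 0..255 pixels. As far as I know, TNN's input conversion parameters (MatConvertParam) take a scale/bias pair of this form, but check the TNN headers for the exact fields. Also note that OpenCV frames are BGR, so the channel order has to be matched. ChannelAffine and ImageNetAffine are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Per-channel affine preprocessing: y = x * scale + bias, with x a raw 0..255 pixel.
struct ChannelAffine {
    std::array<float, 3> scale;
    std::array<float, 3> bias;
};

// Folds torchvision's ImageNet normalization, (x / 255 - mean) / std,
// into a single multiply-add per channel (RGB order).
ChannelAffine ImageNetAffine() {
    const std::array<float, 3> mean = {0.485f, 0.456f, 0.406f};
    const std::array<float, 3> stdev = {0.229f, 0.224f, 0.225f};
    ChannelAffine p{};
    for (int c = 0; c < 3; ++c) {
        p.scale[c] = 1.0f / (255.0f * stdev[c]);
        p.bias[c] = -mean[c] / stdev[c];
    }
    return p;
}
```

Expanding (x / 255 - mean) / std gives x * 1 / (255 * std) - mean / std, which is exactly the scale and bias computed above, so no per-pixel division is needed at inference time.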

Nothing major here; the workflow is:

load the PyTorch model → export an ONNX model (torch.onnx.export()) → run TNN/tools/onnx2tnn.py (the code is fairly simple; read it and adapt it as needed) → get the TNN model
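A common sanity check after conversion is to feed the same preprocessed input to the original PyTorch model and to the converted TNN model, dump both output vectors (1000 logits for torchvision's resnet34), and compare them. How the outputs are dumped is up to you; this sketch only does the comparison. MaxAbsDiff and ArgMax are hypothetical helpers.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <iterator>
#include <vector>

// Largest absolute element-wise difference between reference and converted
// outputs; returns -1 if the sizes differ (e.g. a wrong output blob was read).
float MaxAbsDiff(const std::vector<float>& reference, const std::vector<float>& converted) {
    if (reference.size() != converted.size()) return -1.0f;
    float worst = 0.0f;
    for (std::size_t i = 0; i < reference.size(); ++i)
        worst = std::max(worst, std::fabs(reference[i] - converted[i]));
    return worst;
}

// Index of the largest logit, i.e. the predicted class. Expects a non-empty vector.
std::size_t ArgMax(const std::vector<float>& logits) {
    return static_cast<std::size_t>(
        std::distance(logits.begin(), std::max_element(logits.begin(), logits.end())));
}
```

Small differences are normal, especially if a backend runs in reduced precision, so agreement of the top-1 class from ArgMax is usually the more robust pass/fail signal, with MaxAbsDiff as a hint when something is badly off.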

Download links:

CSDN download

Baidu Netdisk, extraction code: okdx