PyTorch Beginner's Hands-On Notes (2): Using a ResNet to Detect COVID-19

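This post uses the COVID-CT dataset. Link: https://pan.baidu.com/s/1gLtBkxO3_LSWxsziDJGSPQ

Extraction code: k3xo

The project structure is shown in the figure: the data folder holds the dataset, runner is the script that is run, and the Resnet_0.00001 folder stores the curve plots produced during the experiment.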
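Since the curve plots are saved into the Resnet_0.00001 folder, that folder has to exist before the script runs, because matplotlib's savefig does not create missing directories. A minimal sketch, assuming the folder sits directly under the project root (the `ensure_output_dir` helper and the temporary directory standing in for the project root are illustrative, not part of the original project):

```python
import os
import tempfile

def ensure_output_dir(project_root, name="Resnet_0.00001"):
    """Create the folder for the curve plots if it is missing and return its path."""
    out_dir = os.path.join(project_root, name)
    os.makedirs(out_dir, exist_ok=True)  # no error if the folder already exists
    return out_dir

# Illustration only: a throwaway directory plays the role of the project root.
with tempfile.TemporaryDirectory() as project_root:
    out_dir = ensure_output_dir(project_root)
    created = os.path.isdir(out_dir)
    print(created)
```

In the real project the call would simply use the actual project path before the first plot is saved.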

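ResNet model implementation

This part follows the blog post https://blog.csdn.net/qq_40700490/article/details/107967767

The ResNet authors add identity shortcut connections to counter the training difficulties that appear as more layers are stacked, such as exploding and vanishing gradients. Let the input be x and the desired output be H(x). ResNet sets H(x) = F(x) + x, so the layers only need to learn the residual F(x) = H(x) - x, and learning this residual is much easier than learning the original mapping H(x) directly.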
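To make the residual formulation concrete, here is a small pure-Python sketch that uses scalar functions instead of real layers (`residual_block`, `F_zero` and `F_small` are illustrative names, not from the original code). It shows that a residual branch that outputs zero turns the block into the identity mapping, and that the derivative of H(x) = F(x) + x is F'(x) + 1, so the shortcut always contributes a direct gradient path.

```python
def residual_block(F, x):
    """H(x) = F(x) + x: the branch F only has to model the residual."""
    return F(x) + x

def numerical_derivative(f, x, eps=1e-6):
    """Central-difference estimate of f'(x)."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)

def F_zero(x):
    return 0.0            # a branch that contributes nothing

def F_small(x):
    return 0.01 * x * x   # a branch with a small gradient, F'(x) = 0.02 * x

# With F = 0 the block is exactly the identity mapping.
identity_out = residual_block(F_zero, 3.0)

# dH/dx = F'(x) + 1, so the gradient stays near 1 even when F' is small.
grad_F = numerical_derivative(F_small, 2.0)
grad_H = numerical_derivative(lambda x: residual_block(F_small, x), 2.0)

print(identity_out)
print(round(grad_F, 4), round(grad_H, 4))
```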

# 3x3 convolution
def conv3x3(in_channels, out_channels, stride=1):
    return nn.Conv2d(in_channels, out_channels, kernel_size=3,
                     stride=stride, padding=1, bias=False)

# Residual block
class ResidualBlock(nn.Module):
    def __init__(self, in_channels, out_channels, stride=1, downsample=None):
        super(ResidualBlock, self).__init__()
        self.conv1 = conv3x3(in_channels, out_channels, stride)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(out_channels, out_channels)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.downsample = downsample

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        if self.downsample is not None:
            # Downsample x so that the shortcut matches the channel count
            # and spatial size of the main branch
            residual = self.downsample(x)
        # Add the shortcut to the output of bn2
        out += residual
        out = self.relu(out)
        return out
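As a shape check on the conv3x3 helper: for a convolution with kernel size k, stride s and padding p, an input of side length n gives an output of side length floor((n + 2p - k) / s) + 1. The sketch below is pure Python (`conv_out_size` and `trace_sizes` are illustrative helpers, and the 32x32 input is the size produced by the Resize(32) step in this post's preprocessing). It traces the feature-map size through the first convolution and the three stages with strides 1, 2 and 2, which is why the network in this post can end with an 8x8 average pool followed by a 64-input linear layer.

```python
def conv_out_size(n, kernel_size=3, stride=1, padding=1):
    """Output side length of a square convolution (floor division, as in PyTorch)."""
    return (n + 2 * padding - kernel_size) // stride + 1

def trace_sizes(input_size, stage_strides):
    """Side length after the first conv and after each stage's strided conv."""
    sizes = [conv_out_size(input_size)]  # first conv3x3, stride 1
    for stride in stage_strides:
        sizes.append(conv_out_size(sizes[-1], stride=stride))
    return sizes

sizes = trace_sizes(32, [1, 2, 2])
pooled = sizes[-1] // 8            # AvgPool2d(8) on an 8x8 map leaves 1x1
fc_inputs = 64 * pooled * pooled   # 64 channels in the last stage

print(sizes)      # [32, 32, 16, 8]
print(fc_inputs)  # 64
```

With any other input size the final map would no longer be 8x8, so the pooling size or the linear layer's input width would have to change accordingly.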

# ResNet architecture
class ResNet(nn.Module):
    def __init__(self, block, layers, num_classes=2):
        super(ResNet, self).__init__()
        self.in_channels = 16
        self.conv = conv3x3(3, 16)
        self.bn = nn.BatchNorm2d(16)
        self.relu = nn.ReLU(inplace=True)
        self.layer1 = self.make_layer(block, 16, layers[0])
        self.layer2 = self.make_layer(block, 32, layers[1], 2)
        self.layer3 = self.make_layer(block, 64, layers[2], 2)
        self.avg_pool = nn.AvgPool2d(8)
        self.fc = nn.Linear(64, num_classes)

    def make_layer(self, block, out_channels, blocks, stride=1):
        # blocks is the number of residual blocks in this layer
        downsample = None
        if (stride != 1) or (self.in_channels != out_channels):
            downsample = nn.Sequential(
                conv3x3(self.in_channels, out_channels, stride=stride),
                nn.BatchNorm2d(out_channels))
        layers = []
        layers.append(block(self.in_channels, out_channels, stride, downsample))
        self.in_channels = out_channels
        for _ in range(1, blocks):
            layers.append(block(out_channels, out_channels))
        return nn.Sequential(*layers)

    def forward(self, x):
        out = self.conv(x)
        out = self.bn(out)
        out = self.relu(out)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.avg_pool(out)
        # flatten to (batch_size, features) for the linear layer
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out

Define the training function:

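def train_runner(model, device, train_loader, optimizer, epoch):
    # Training mode: BatchNorm uses batch statistics and Dropout (if present) is active
    model.train()
    # number of samples seen so far
    total = 0
    # number of correct predictions
    correct = 0.0
    # accumulated loss
    total_loss = 0.0
    # iterate over the training set
    for i, data in enumerate(train_loader, 0):
        # unpack the batch
        inputs, labels = data
        # move the batch to the device (GPU if available)
        inputs, labels = inputs.to(device), labels.to(device)
        # clear the gradients left over from the previous step
        optimizer.zero_grad()
        # forward pass
        outputs = model(inputs)
        # cross-entropy loss (mean over the batch)
        loss = F.cross_entropy(outputs, labels)
        # accumulate the loss
        total_loss += loss.item()
        # predicted class = index of the largest output
        predict = outputs.argmax(dim=1)
        # count the samples
        total += labels.size(0)
        # count the correct predictions
        correct += (predict == labels).sum().item()
        # backpropagation
        loss.backward()
        # update the parameters
        optimizer.step()
        if i % 1000 == 0:
            # print the running loss and accuracy
            print("Train Epoch{} \t Loss: {:.6f}, accuracy: {:.6f}%".format(epoch, (total_loss/total), 100*(correct/total)))
    # Return this epoch's loss and accuracy. total_loss sums per-batch mean losses,
    # so total_loss/total is the mean loss further divided by the batch size.
    return total_loss/total, correct/total

Define the test function: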

def test_runner(model, device, test_loader):

#调用eval()将不启用 BatchNormalization 和 Dropout, BatchNormalization和Dropout置为False

#模型验证函数必须要写, 否则只要有输入数据, 即使不训练, 它也会改变权值

model.eval()

#统计正确个数

correct = 0.0

#统计测试集损失率

test_loss = 0.0

#统计数据个数

total = 0

#torch.no_grad将不会计算梯度, 也不会进行反向传播

with torch.no_grad():

#迭代测试机中的数据

for data, targets in test_loader:

#部署到服务器

data, targets = data.to(device), targets.to(device)

#获取模型输出

output = model(data)

#计算损失值

current_loss = F.cross_entropy(output, targets).item()

test_loss += current_loss

#获取预测概率值最大的预测结果

predict = output.argmax(dim=1)

#获取数据长度

total += targets.size(0)

#获取正确个数

correct += (predict == targets).sum().item()

#输出正确率和损失率

print("test_loss: {:.6f}, accuracy: {:.6f}%".format(test_loss/total, 100*(correct/total)))

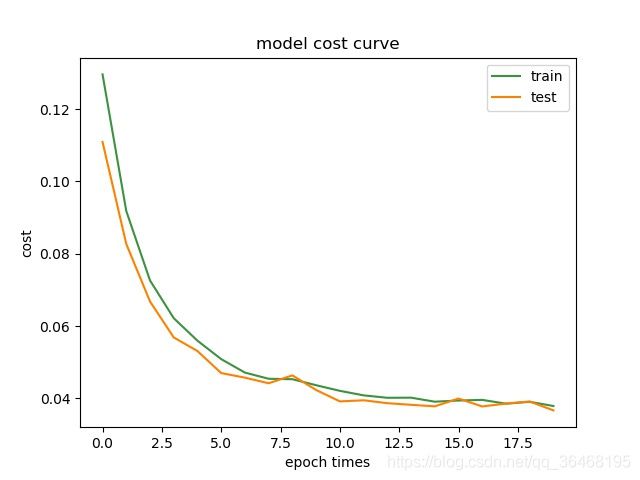

return test_loss/total, correct/total绘制曲线:

# Arguments: per-epoch accuracy or loss of the training and test sets,
# the plot title, the y-axis label, and the number of epochs
def draw_curve(train_curve, test_curve, title, y_label, train_iterations, test_iterations):
    plt.plot(range(train_iterations), train_curve, label='train', color="#3D9140")
    plt.plot(range(test_iterations), test_curve, label='test', color="#FF8000")
    plt.xlabel('epoch times')
    # legend position
    plt.legend(loc="upper right")
    # y-axis label
    plt.ylabel(y_label)
    # plot title
    plt.title(title)
    # where to save the figure
    plt.savefig('./Resnet_0.00001/'+title+'.jpg')
    # show the figure
    plt.show()

Main function:

def main():
    # use the GPU if one is available
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    # dataset path (raw string so the backslashes are not treated as escapes)
    data_path = r"E:\practice\CONVD_CT\data"
    # image preprocessing (mean and std are defined at module level in the complete code)
    transform = transforms.Compose([
        transforms.RandomHorizontalFlip(),
        transforms.RandomVerticalFlip(),
        transforms.RandomResizedCrop(300),
        transforms.Resize(32),
        transforms.ToTensor(),
        transforms.Normalize(mean, std)
    ])
    # load the data
    image_sets = datasets.ImageFolder(data_path, transform=transform)
    # 80% of the data for training, the rest for testing
    train_length = int(0.8 * len(image_sets))
    test_length = len(image_sets) - train_length
    # Split into training and test sets. The returned Subset objects are used directly:
    # taking their .dataset attribute would give back the full, unsplit dataset,
    # so both loaders would see the same images.
    train_set, test_set = torch.utils.data.random_split(image_sets, [train_length, test_length])
    train_loader = torch.utils.data.DataLoader(train_set, batch_size=16, shuffle=True)
    test_loader = torch.utils.data.DataLoader(test_set, batch_size=16, shuffle=False)
    # build the model; the list gives the number of blocks in each layer
    model = ResNet(ResidualBlock, [2, 2, 2]).to(device)
    # optimizer
    optimizer = optim.Adam(model.parameters(), lr=0.00001)
    epochs = 20
    # per-epoch loss and accuracy of the training and test sets
    train_cost_curve = []
    train_accuracy_curve = []
    test_cost_curve = []
    test_accuracy_curve = []
    # training loop
    for epoch in range(1, epochs + 1):
        # print the start time of each epoch
        print("Epoch:{}, start_time:".format(epoch), time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())))
        # train
        train_cost_curve_current, train_accuracy_curve_current = train_runner(
            model, device, train_loader, optimizer, epoch)
        # test
        test_cost_curve_current, test_accuracy_curve_current = test_runner(model, device, test_loader)
        # store the values for plotting
        train_accuracy_curve.append(train_accuracy_curve_current)
        train_cost_curve.append(train_cost_curve_current)
        test_accuracy_curve.append(test_accuracy_curve_current)
        test_cost_curve.append(test_cost_curve_current)
        # print the end time
        print("end_time: ", time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())), '\n')
    # plot
    draw_curve(train_accuracy_curve, test_accuracy_curve, 'model accuracy curve', 'accuracy', epochs, epochs)
    draw_curve(train_cost_curve, test_cost_curve, 'model cost curve', 'cost', epochs, epochs)

Experimental results:

Complete code:

import torch
from torchvision import transforms, datasets
import matplotlib.pyplot as plt
import os
import torch.nn.functional as F
import torch.nn as nn
import torch.optim as optim
import time

mean = [0.485, 0.456, 0.406]
std = [0.229, 0.224, 0.225]

def conv3x3(in_channels, out_channels, stride=1):
    return nn.Conv2d(in_channels, out_channels, kernel_size=3,
                     stride=stride, padding=1, bias=False)

class ResidualBlock(nn.Module):
    def __init__(self, in_channels, out_channels, stride=1, downsample=None):
        super(ResidualBlock, self).__init__()
        self.conv1 = conv3x3(in_channels, out_channels, stride)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(out_channels, out_channels)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.downsample = downsample

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        # match the shortcut to the main branch when shapes differ
        if self.downsample is not None:
            residual = self.downsample(x)
        out += residual
        out = self.relu(out)
        return out

class ResNet(nn.Module):
    def __init__(self, block, layers, num_classes=2):
        super(ResNet, self).__init__()
        self.in_channels = 16
        self.conv = conv3x3(3, 16)
        self.bn = nn.BatchNorm2d(16)
        self.relu = nn.ReLU(inplace=True)
        self.layer1 = self.make_layer(block, 16, layers[0])
        self.layer2 = self.make_layer(block, 32, layers[1], 2)
        self.layer3 = self.make_layer(block, 64, layers[2], 2)
        self.avg_pool = nn.AvgPool2d(8)
        self.fc = nn.Linear(64, num_classes)

    def make_layer(self, block, out_channels, blocks, stride=1):
        downsample = None
        if (stride != 1) or (self.in_channels != out_channels):
            downsample = nn.Sequential(
                conv3x3(self.in_channels, out_channels, stride=stride),
                nn.BatchNorm2d(out_channels))
        layers = []
        layers.append(block(self.in_channels, out_channels, stride, downsample))
        self.in_channels = out_channels
        for _ in range(1, blocks):
            layers.append(block(out_channels, out_channels))
        return nn.Sequential(*layers)

    def forward(self, x):
        out = self.conv(x)
        out = self.bn(out)
        out = self.relu(out)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.avg_pool(out)
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out

def train_runner(model, device, train_loader, optimizer, epoch):
    model.train()
    total = 0
    correct = 0.0
    total_loss = 0.0
    for i, data in enumerate(train_loader, 0):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = F.cross_entropy(outputs, labels)
        total_loss += loss.item()
        predict = outputs.argmax(dim=1)
        total += labels.size(0)
        correct += (predict == labels).sum().item()
        loss.backward()
        optimizer.step()
        if i % 1000 == 0:
            print("Train Epoch{} \t Loss: {:.6f}, accuracy: {:.6f}%".format(epoch, (total_loss/total), 100*(correct/total)))
    return total_loss/total, correct/total

def test_runner(model, device, test_loader):
    model.eval()
    correct = 0.0
    test_loss = 0.0
    total = 0
    with torch.no_grad():
        for data, targets in test_loader:
            data, targets = data.to(device), targets.to(device)
            output = model(data)
            current_loss = F.cross_entropy(output, targets).item()
            test_loss += current_loss
            predict = output.argmax(dim=1)
            total += targets.size(0)
            correct += (predict == targets).sum().item()
    print("test_loss: {:.6f}, accuracy: {:.6f}%".format(test_loss/total, 100*(correct/total)))
    return test_loss/total, correct/total

def draw_curve(train_curve, test_curve, title, y_label, train_iterations, test_iterations):
    plt.plot(range(train_iterations), train_curve, label='train', color="#3D9140")
    plt.plot(range(test_iterations), test_curve, label='test', color="#FF8000")
    plt.xlabel('epoch times')
    plt.legend(loc="upper right")
    plt.ylabel(y_label)
    plt.title(title)
    plt.savefig('E:/practice/CONVD_CT/compare/Resnet_0.00001/'+title+'.jpg')
    plt.show()

def main():
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    # raw string so the backslashes are not treated as escapes
    data_path = r"E:\practice\CONVD_CT\data"
    transform = transforms.Compose([
        transforms.RandomHorizontalFlip(),
        transforms.RandomVerticalFlip(),
        transforms.RandomResizedCrop(300),
        transforms.Resize(32),
        transforms.ToTensor(),
        transforms.Normalize(mean, std)
    ])
    image_sets = datasets.ImageFolder(data_path, transform=transform)
    train_length = int(0.8 * len(image_sets))
    test_length = len(image_sets) - train_length
    # Use the Subset objects returned by random_split directly; their .dataset
    # attribute is the full, unsplit dataset and would undo the split.
    train_set, test_set = torch.utils.data.random_split(image_sets, [train_length, test_length])
    train_loader = torch.utils.data.DataLoader(train_set, batch_size=16, shuffle=True)
    test_loader = torch.utils.data.DataLoader(test_set, batch_size=16, shuffle=False)
    model = ResNet(ResidualBlock, [2, 2, 2]).to(device)
    optimizer = optim.Adam(model.parameters(), lr=0.00001)
    epochs = 10
    train_cost_curve = []
    train_accuracy_curve = []
    test_cost_curve = []
    test_accuracy_curve = []
    for epoch in range(1, epochs + 1):
        print("Epoch:{}, start_time:".format(epoch), time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())))
        train_cost_curve_current, train_accuracy_curve_current = train_runner(
            model, device, train_loader, optimizer, epoch)
        test_cost_curve_current, test_accuracy_curve_current = test_runner(model, device, test_loader)
        train_accuracy_curve.append(train_accuracy_curve_current)
        train_cost_curve.append(train_cost_curve_current)
        test_accuracy_curve.append(test_accuracy_curve_current)
        test_cost_curve.append(test_cost_curve_current)
        print("end_time: ", time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())), '\n')
    # the first plot shows accuracy, so its y-axis label is 'accuracy'
    draw_curve(train_accuracy_curve, test_accuracy_curve, 'model accuracy curve', 'accuracy', epochs, epochs)
    draw_curve(train_cost_curve, test_cost_curve, 'model cost curve', 'cost', epochs, epochs)

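if __name__ == '__main__':
    main()

The accuracy obtained in this experiment is not very high and there is still a lot of room for improvement. Suggestions from more experienced readers are very welcome.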