Linux Cloud Computing and Virtualization: Building a k8s Cluster with Rancher and Publishing an E-commerce Site


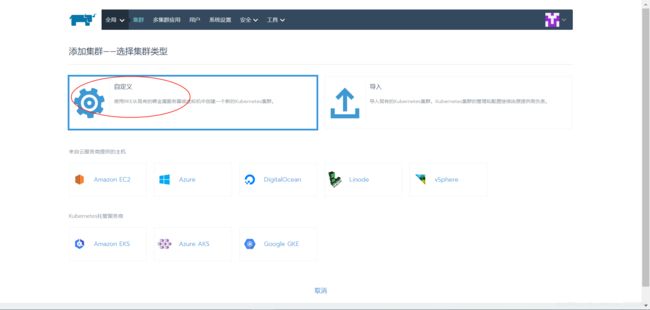


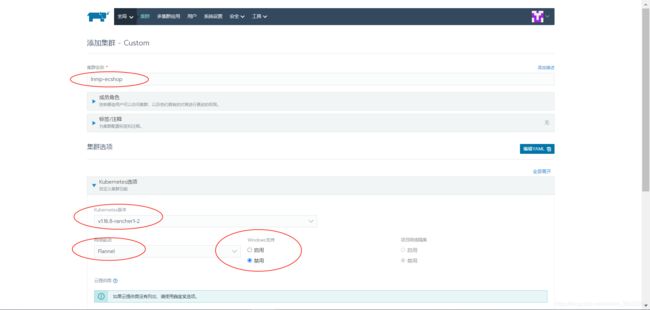

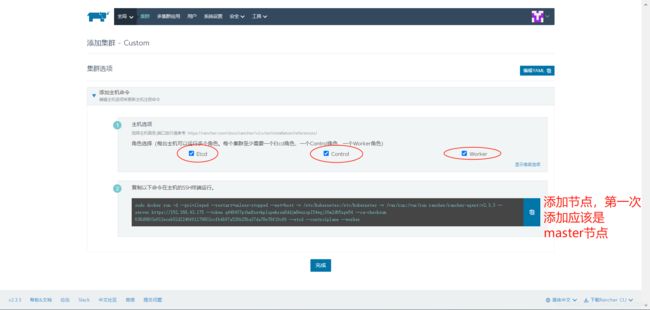

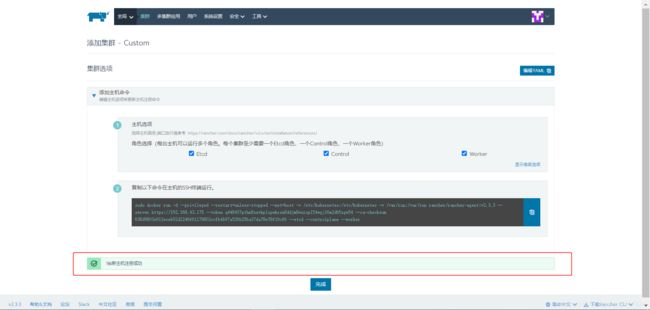



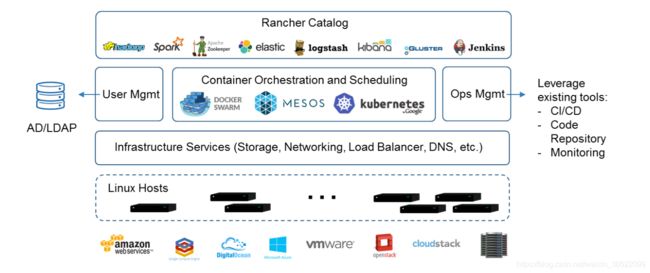

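1. Introduction to Rancher

Rancher is an open-source container management platform that helps enterprises deploy and manage containers in production quickly and easily. Rancher can manage Kubernetes across many environments, which meets IT requirements and supports DevOps teams.

k8s has become the standard for container orchestration and the standard infrastructure offered by cloud and virtualization vendors. Rancher users can create clusters with Rancher Kubernetes Engine (RKE) or use hosted Kubernetes services such as GKE, AKS and EKS. Rancher users can also import and manage existing k8s clusters.

- GKE: Google Kubernetes Engine, Google's hosted k8s service

- AKS: Azure Kubernetes Service, Microsoft's hosted k8s service

- EKS: Amazon Elastic Kubernetes Service (originally named Amazon Elastic Container Service for Kubernetes), Amazon's hosted k8s service

Rancher gives DevOps engineers an intuitive user interface for managing their service containers. Users can start using Rancher without a deep understanding of Kubernetes concepts. Rancher includes an app catalog that supports one-click deployment of Compose templates.

docker-compose is a tool for orchestrating Docker containers. It defines and runs multi-container applications, so a single command can start several containers and shell scripts are no longer needed to launch them.

The default docker-compose template file is docker-compose.yml. Every service defined in it must either specify an image with the image directive or be built automatically with the build directive (which requires a Dockerfile).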

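Rancher components:

① Infrastructure orchestration

- Rancher can use Linux host resources from any public or private cloud.

- A Linux host can be a virtual machine or a physical machine.

- Rancher only needs the host to provide CPU, memory, local disk and network resources.

- From Rancher's point of view, a cloud host from a cloud provider is the same as your own physical machine.

- Rancher provides a flexible layer of infrastructure services for running containerized applications. These services include networking, storage, load balancing, DNS and security modules.

- Rancher's infrastructure services are themselves deployed as containers, so they can also run on any Linux host.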

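② Container orchestration and scheduling

- Rancher includes all the mainstream orchestration and scheduling engines, such as Docker Swarm, Kubernetes and Mesos.

- Rancher also supports its own container orchestration and scheduling engine, Cattle.

- Cattle is widely used to orchestrate Rancher's own infrastructure services and to configure, manage and upgrade Swarm, Kubernetes and Mesos clusters. (These engines and Cattle describe Rancher 1.x. Rancher 2.x, used in this article, is built entirely on Kubernetes.)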

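③ App catalog

- Rancher users can deploy applications made up of multiple containers with one click from the app catalog.

- Users can manage a deployed application and upgrade it automatically when a new version becomes available.

- Rancher provides a catalog maintained by the Rancher community that contains a range of popular applications.

- Rancher users can also create their own private catalogs.

④ Enterprise-grade access control

- Rancher supports flexible, pluggable user authentication, including Active Directory, LDAP and GitHub.

- Rancher supports role-based access control (RBAC) at the environment level. Roles determine which users or user groups can access the development or production environment.

Rancher websites: https://rancher.com/ and https://www.rancher.cn/

Official documentation in Chinese: https://docs.rancher.cn/rancher2/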

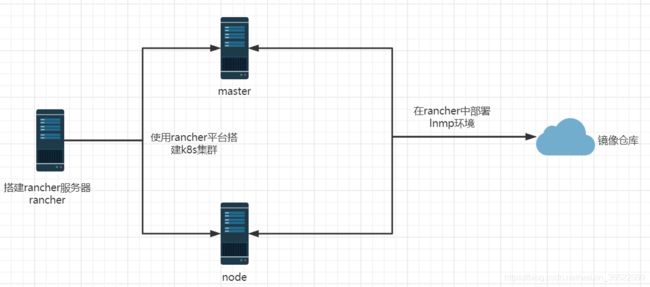

2. Building a k8s cluster with Rancher

2.1 Lab environment

Lab topology:

Hosts:

| Hostname | IP address | OS version | Role |
|---|---|---|---|
| rancher | 192.168.43.175 | CentOS 7.6 | Rancher server |
| master | 192.168.43.245 | CentOS 7.6 | k8s master and compute server; etcd, controlplane and worker roles |
| node | 192.168.43.147 | CentOS 7.6 | k8s node (worker), compute server |

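A handy tip: when a command must run on every host, connect to all of the hosts in Xshell and send the command to all sessions at once. That way you do not have to run it host by host.

Run the following on all hosts:

# Stop and disable the firewall on all hosts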

systemctl stop firewalld && systemctl disable firewalld

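# Disable SELinux. On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, so editing the real file is enough
# (running sed -i on the symlink itself would replace the link with a regular file)

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0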

# Turn swap off now, and keep it off after a reboot by commenting out the swap entry in /etc/fstab

swapoff -a

sed -i 's/.*swap.*/#&/g' /etc/fstab

# Set the hostname (on each host, run only the line for that host)

hostnamectl set-hostname rancher

hostnamectl set-hostname master

hostnamectl set-hostname node

# Move the existing yum repo files aside and install Aliyun's CentOS 7 and EPEL repos

# Optional: run this only if you need it

mv /etc/yum.repos.d/* /opt

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum clean all

yum makecache
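The swap and SELinux edits above can be made idempotent, so that running them twice is harmless. The sketch below assumes GNU sed, as shipped with CentOS 7. The function names are hypothetical helpers, not part of the original setup. comment_out_swap skips lines that are already commented, so a second run does not produce "##". disable_selinux_cfg passes --follow-symlinks, so handing it /etc/sysconfig/selinux edits the link target instead of replacing the link.

```bash
#!/usr/bin/env bash
# Idempotent variants of the host-preparation edits (sketch; GNU sed assumed).

# Comment out active swap entries in an fstab-style file. Lines that are
# already commented are skipped, so a second run does not produce "##".
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Change SELINUX=enforcing/permissive to SELINUX=disabled. --follow-symlinks
# edits the target when given /etc/sysconfig/selinux (a symlink on CentOS 7)
# instead of replacing the link with a regular file.
disable_selinux_cfg() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i --follow-symlinks -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
}
```

On a host you would call comment_out_swap and disable_selinux_cfg with no arguments, then still run swapoff -a and setenforce 0 as above. The file edits only take full effect after a reboot.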

Install Docker on all hosts:

# Install Docker's prerequisite packages on all nodes

yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Docker CE repo

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install Docker 19.03

# yum install docker-ce docker-ce-cli containerd.io -y   [Not used: installing without a version pulls in Docker 20.x, which made cluster creation in Rancher fail.]

yum install -y https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/Packages/containerd.io-1.2.6-3.3.el7.x86_64.rpm

yum install -y https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/Packages/docker-ce-cli-19.03.9-3.el7.x86_64.rpm

yum install -y https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/Packages/docker-ce-19.03.9-3.el7.x86_64.rpm

# Start Docker and enable it at boot

systemctl start docker && systemctl enable docker.service && systemctl status docker

# Configure a registry mirror to speed up pulling images

tee /etc/docker/daemon.json << EOF

{

"registry-mirrors":["http://e9yneuy4.mirror.aliyuncs.com"]

}

EOF

# Reload the daemon configuration and restart Docker

systemctl daemon-reload && systemctl restart docker
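A quick way to confirm both Docker steps took effect is sketched below. The helper names are hypothetical. The sketch assumes the docker CLI's Go-template fields .Server.Version (from docker version) and .RegistryConfig.Mirrors (from docker info). The author reports that Docker 20.x broke cluster creation with Rancher 2.3.3, so the first check pins the server's major version to 19.

```bash
#!/usr/bin/env bash
# Post-install checks for the Docker setup (sketch; assumes the docker CLI is
# on PATH and the daemon is running).

# Succeed only if the Docker *server* major version equals the expected one.
check_docker_major() {
  local want="${1:-19}" ver
  ver=$(docker version --format '{{.Server.Version}}' 2>/dev/null) || return 2
  [ "${ver%%.*}" = "$want" ]
}

# Succeed if the daemon lists the given host among its registry mirrors,
# i.e. /etc/docker/daemon.json was picked up after the restart.
has_registry_mirror() {
  local host="$1" mirrors
  mirrors=$(docker info --format '{{.RegistryConfig.Mirrors}}' 2>/dev/null) || return 2
  case "$mirrors" in
    *"$host"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `check_docker_major 19 && has_registry_mirror e9yneuy4.mirror.aliyuncs.com && echo "docker OK"` should print once per correctly prepared host.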

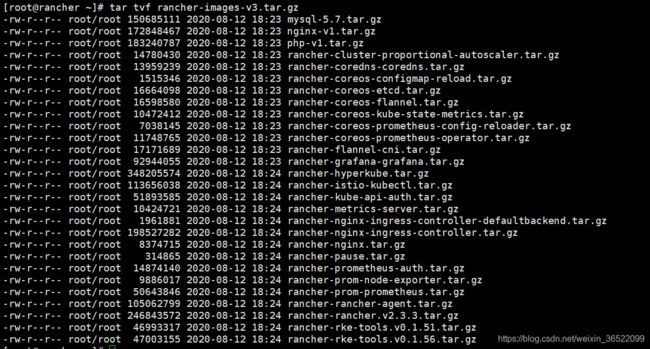

2.2 Deploying the Rancher platform on the rancher host

2.2.1 Importing Docker images (pulling them online is slow)

Upload the pre-packaged image archive:

[root@rancher ~]# tar tvf rancher-images-v3.tar.gz

-rw-r--r-- root/root 150685111 2020-08-12 18:23 mysql-5.7.tar.gz

-rw-r--r-- root/root 172848467 2020-08-12 18:23 nginx-v1.tar.gz

-rw-r--r-- root/root 183240787 2020-08-12 18:23 php-v1.tar.gz

-rw-r--r-- root/root 14780430 2020-08-12 18:23 rancher-cluster-proportional-autoscaler.tar.gz

-rw-r--r-- root/root 13959239 2020-08-12 18:23 rancher-coredns-coredns.tar.gz

-rw-r--r-- root/root 1515346 2020-08-12 18:23 rancher-coreos-configmap-reload.tar.gz

-rw-r--r-- root/root 16664098 2020-08-12 18:23 rancher-coreos-etcd.tar.gz

-rw-r--r-- root/root 16598580 2020-08-12 18:23 rancher-coreos-flannel.tar.gz

-rw-r--r-- root/root 10472412 2020-08-12 18:23 rancher-coreos-kube-state-metrics.tar.gz

-rw-r--r-- root/root 7038145 2020-08-12 18:23 rancher-coreos-prometheus-config-reloader.tar.gz

-rw-r--r-- root/root 11748765 2020-08-12 18:23 rancher-coreos-prometheus-operator.tar.gz

-rw-r--r-- root/root 17171689 2020-08-12 18:23 rancher-flannel-cni.tar.gz

-rw-r--r-- root/root 92944055 2020-08-12 18:23 rancher-grafana-grafana.tar.gz

-rw-r--r-- root/root 348205574 2020-08-12 18:24 rancher-hyperkube.tar.gz

-rw-r--r-- root/root 113656038 2020-08-12 18:24 rancher-istio-kubectl.tar.gz

-rw-r--r-- root/root 51893585 2020-08-12 18:24 rancher-kube-api-auth.tar.gz

-rw-r--r-- root/root 10424721 2020-08-12 18:24 rancher-metrics-server.tar.gz

-rw-r--r-- root/root 1961881 2020-08-12 18:24 rancher-nginx-ingress-controller-defaultbackend.tar.gz

-rw-r--r-- root/root 198527282 2020-08-12 18:24 rancher-nginx-ingress-controller.tar.gz

-rw-r--r-- root/root 8374715 2020-08-12 18:24 rancher-nginx.tar.gz

-rw-r--r-- root/root 314865 2020-08-12 18:24 rancher-pause.tar.gz

-rw-r--r-- root/root 14874140 2020-08-12 18:24 rancher-prometheus-auth.tar.gz

-rw-r--r-- root/root 9886017 2020-08-12 18:24 rancher-prom-node-exporter.tar.gz

-rw-r--r-- root/root 50643846 2020-08-12 18:24 rancher-prom-prometheus.tar.gz

-rw-r--r-- root/root 105062799 2020-08-12 18:24 rancher-rancher-agent.tar.gz

-rw-r--r-- root/root 246843572 2020-08-12 18:24 rancher-rancher.v2.3.3.tar.gz

-rw-r--r-- root/root 46993317 2020-08-12 18:24 rancher-rke-tools.v0.1.51.tar.gz

-rw-r--r-- root/root 47003155 2020-08-12 18:24 rancher-rke-tools.v0.1.56.tar.gz

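Copy the archives to all nodes and load them with docker:

# Copy the images to all nodes (replace MASTER_IP and NODE_IP with the addresses from the host table)

[root@rancher ~]# tar xf rancher-images-v3.tar.gz -C /opt/

[root@rancher ~]# scp /opt/*.tar.gz root@MASTER_IP:/opt/

[root@rancher ~]# scp /opt/*.tar.gz root@NODE_IP:/opt/

# Generate the docker load commands in bulk with sed -r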

[root@rancher ~]# ll /opt/*.tar.gz|awk '{print $NF}'|sed -r 's#(.*)#docker load -i \1#'

docker load -i /opt/mysql-5.7.tar.gz

docker load -i /opt/nginx-v1.tar.gz

docker load -i /opt/php-v1.tar.gz

docker load -i /opt/rancher-cluster-proportional-autoscaler.tar.gz

docker load -i /opt/rancher-coredns-coredns.tar.gz

docker load -i /opt/rancher-coreos-configmap-reload.tar.gz

docker load -i /opt/rancher-coreos-etcd.tar.gz

docker load -i /opt/rancher-coreos-flannel.tar.gz

docker load -i /opt/rancher-coreos-kube-state-metrics.tar.gz

docker load -i /opt/rancher-coreos-prometheus-config-reloader.tar.gz

docker load -i /opt/rancher-coreos-prometheus-operator.tar.gz

docker load -i /opt/rancher-flannel-cni.tar.gz

docker load -i /opt/rancher-grafana-grafana.tar.gz

docker load -i /opt/rancher-hyperkube.tar.gz

docker load -i /opt/rancher-istio-kubectl.tar.gz

docker load -i /opt/rancher-kube-api-auth.tar.gz

docker load -i /opt/rancher-metrics-server.tar.gz

docker load -i /opt/rancher-nginx-ingress-controller-defaultbackend.tar.gz

docker load -i /opt/rancher-nginx-ingress-controller.tar.gz

docker load -i /opt/rancher-nginx.tar.gz

docker load -i /opt/rancher-pause.tar.gz

docker load -i /opt/rancher-prometheus-auth.tar.gz

docker load -i /opt/rancher-prom-node-exporter.tar.gz

docker load -i /opt/rancher-prom-prometheus.tar.gz

docker load -i /opt/rancher-rancher-agent.tar.gz

docker load -i /opt/rancher-rancher.v2.3.3.tar.gz

docker load -i /opt/rancher-rke-tools.v0.1.51.tar.gz

docker load -i /opt/rancher-rke-tools.v0.1.56.tar.gz

# Import the images in bulk; do this on every node.

[root@rancher ~]# ll /opt/*.tar.gz|awk '{print $NF}'|sed -r 's#(.*)#docker load -i \1#' |bash

[root@master ~]# ll /opt/*.tar.gz|awk '{print $NF}'|sed -r 's#(.*)#docker load -i \1#' |bash

[root@node ~]# ll /opt/*.tar.gz|awk '{print $NF}'|sed -r 's#(.*)#docker load -i \1#' |bash
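Piping ll output into bash works in an interactive root shell, but it depends on the ll alias and on file names without spaces. A plain glob loop avoids both. In the sketch below, load_images and DRY_RUN are hypothetical names. DRY_RUN=1 only prints the commands, like the sed preview above.

```bash
#!/usr/bin/env bash
# Load every *.tar.gz image archive in a directory into the local Docker daemon.
# DRY_RUN=1 prints the docker load commands instead of running them.
load_images() {
  local dir="${1:-/opt}" f
  for f in "$dir"/*.tar.gz; do
    [ -e "$f" ] || continue   # no archives: the glob stays literal, skip it
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "docker load -i $f"
    else
      docker load -i "$f" || return 1   # stop at the first archive that fails
    fi
  done
}
```

Run `DRY_RUN=1 load_images /opt` first to review the list, then `load_images /opt` on each of rancher, master and node.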

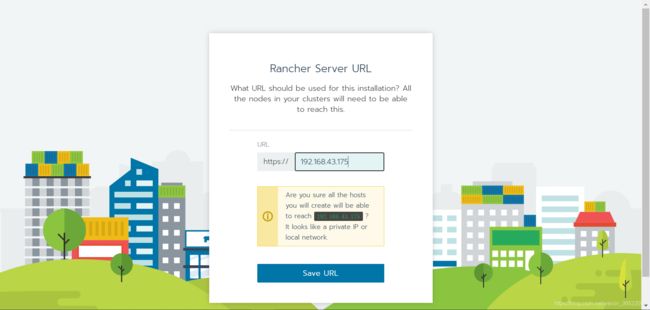

2.2.2 Installing Rancher 2.3.3

At the time of writing the latest version was Rancher 2.5.3. You can pull the latest rancher image separately and use it instead of 2.3.3.

# Create a container from the rancher/rancher:v2.3.3 image

[root@rancher ~]# sudo docker run --privileged -d --restart=unless-stopped -p 80:80 -p 443:443 -v /var/lib/rancher/:/var/lib/rancher/ rancher/rancher:v2.3.3

66c866de813f5d4284098d626fefc192994f95bea694fbcf162a540494273cda

[root@rancher ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

66c866de813f rancher/rancher:v2.3.3 "entrypoint.sh" 56 seconds ago Up 47 seconds 0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp sad_hopper
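Rancher needs a little while to start after docker run returns. The sketch below polls it before you open the UI. It assumes Rancher's /ping health endpoint, which answers with the plain text pong. The -k flag is needed because the single-node install serves a self-signed certificate. wait_for_rancher and WAIT_INTERVAL are hypothetical names.

```bash
#!/usr/bin/env bash
# Poll Rancher's /ping endpoint until it answers "pong" or the tries run out.
# Usage: wait_for_rancher URL [TRIES]; WAIT_INTERVAL sets seconds between tries.
wait_for_rancher() {
  local url="$1" tries="${2:-60}" i
  for ((i = 1; i <= tries; i++)); do
    # -k: accept the self-signed certificate of the single-node install
    if [ "$(curl -ks --max-time 5 "$url/ping")" = "pong" ]; then
      return 0
    fi
    sleep "${WAIT_INTERVAL:-5}"
  done
  return 1
}
```

For example, `wait_for_rancher https://192.168.43.175 && echo "Rancher is up"` waits up to about five minutes with the defaults.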

Open https://192.168.43.175/ in a browser.

① Set the login password:

④ Log out and log in again: https://192.168.43.175/login

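3. Adding a cluster and adding nodes to it

3.1 Creating the cluster and adding the master node

# Create the master node of the k8s cluster by running a rancher-agent container on it (copy the command from the cluster's registration page in Rancher; the token and checksum are specific to each cluster)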

[root@master ~]# sudo docker run -d --privileged --restart=unless-stopped --net=host -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run rancher/rancher-agent:v2.3.3 --server https://192.168.43.175 --token q448457pthn8zzvkplxpwkrsn8ddjm8wzrspl54wgjl6m2d65zgw54 --ca-checksum 638d9803e912eceb52d2246f61179802ccfb4b97a526b25ba37da78e78f19cf6 --etcd --controlplane --worker

dea4bf2d798ccc25db021d5d6e3635f28a3938d5cc6187cd7b24b9f54b2fec8d

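Click “Done”.

⑤ Watch the cluster creation progress. If the images are already local and nothing has to be pulled, the installation takes only a few minutes.

When the status shows “Active”, the k8s cluster has been installed successfully.

# If errors occur, the master may have had k8s installed before. Remove the old k8s installation first, then repeat the steps above.

# If pulling images fails, the pulls are probably just too slow. Consider pulling the images locally first and using the local copies.

# Follow the installation in the Docker container logs and the system log:

docker logs CONTAINER   # Docker container log

tail -100 /var/log/messages   # system log (k8s messages)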
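For the "k8s was installed before" case, here is a cleanup sketch. It removes leftover directories of an earlier RKE/k8s install before you re-run the agent command. The directory list follows Rancher's node-cleanup documentation as best I recall it, so check it against the docs for your version. This is DESTRUCTIVE. K8S_DIRS and clean_k8s_dirs are hypothetical names. ROOT lets you rehearse against a scratch directory, and DRY_RUN=1 only prints what would be removed.

```bash
#!/usr/bin/env bash
# Sketch: wipe leftovers of a previous k8s/RKE install from a node. DESTRUCTIVE.
# ROOT=/some/scratch/dir rehearses against a copy; DRY_RUN=1 only prints.
K8S_DIRS=(
  /etc/ceph /etc/cni /etc/kubernetes /opt/cni /opt/rke
  /run/secrets/kubernetes.io /run/calico /run/flannel
  /var/lib/calico /var/lib/etcd /var/lib/cni /var/lib/kubelet
  /var/lib/rancher/rke/log /var/log/containers /var/log/kube-audit
  /var/log/pods /var/run/calico
)

clean_k8s_dirs() {
  local root="${ROOT:-}" d
  for d in "${K8S_DIRS[@]}"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "rm -rf $root$d"
    else
      rm -rf -- "$root$d"
    fi
  done
}
```

Rancher's docs also remove the old k8s containers first and reboot afterwards to clear leftover network interfaces and iptables rules. Be careful on the rancher host: removing all containers there would also delete the Rancher server container.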

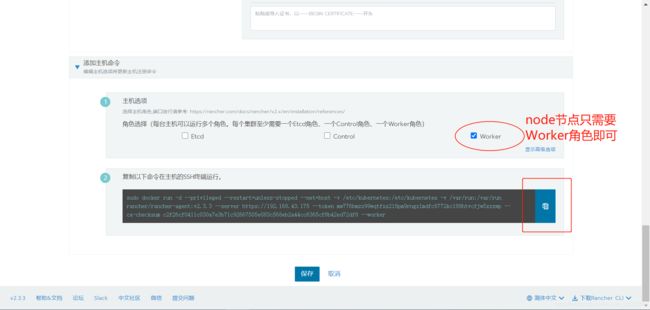

3.2 Adding node to the k8s cluster

# Run on node (worker role only)

[root@node ~]# sudo docker run -d --privileged --restart=unless-stopped --net=host -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run rancher/rancher-agent:v2.3.3 --server https://192.168.43.175 --token mw776bmzz99wqtfxx2l8pm9rngzlmdfc8772kcl88htvcfjw5xzxwp --ca-checksum c2f26cf0411c030a7e3b71c92667505e083c568eb2a44cc6365cf8b42ed72df8 --worker

③ Check which nodes have joined the cluster:

node has now joined the k8s cluster as well.
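The master and node commands differ only in the token, the checksum and the role flags. The sketch below builds the registration command from those parts, which makes the role difference explicit and avoids copy-paste slips. agent_cmd is a hypothetical helper. It only reuses the image and flags shown in the two commands above. SERVER, TOKEN and CHECKSUM still have to come from the Rancher UI.

```bash
#!/usr/bin/env bash
# Print the rancher-agent registration command for the given roles.
# Usage: agent_cmd SERVER TOKEN CHECKSUM ROLE... (roles: etcd controlplane worker)
agent_cmd() {
  local server="$1" token="$2" checksum="$3" role flags=""
  shift 3
  for role in "$@"; do
    case "$role" in
      etcd|controlplane|worker) flags+=" --$role" ;;
      *) echo "unknown role: $role" >&2; return 1 ;;
    esac
  done
  [ -n "$flags" ] || { echo "at least one role is required" >&2; return 1; }
  echo "sudo docker run -d --privileged --restart=unless-stopped --net=host" \
       "-v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run" \
       "rancher/rancher-agent:v2.3.3 --server $server --token $token" \
       "--ca-checksum $checksum$flags"
}
```

In this lab the master gets `etcd controlplane worker` and node gets `worker` only. Review the printed command, then run it on the target host.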

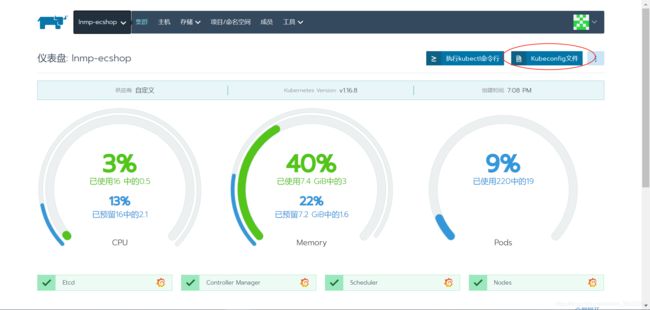

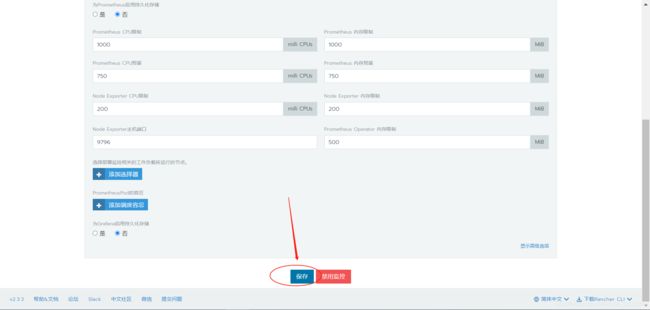

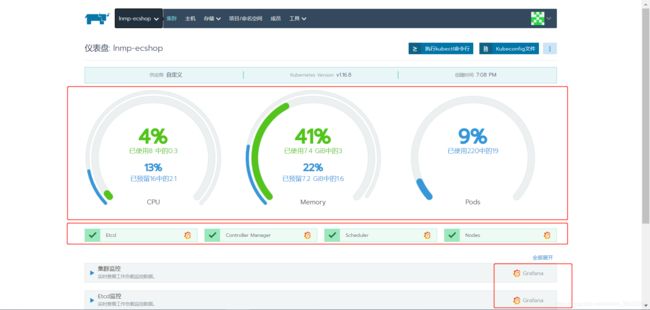

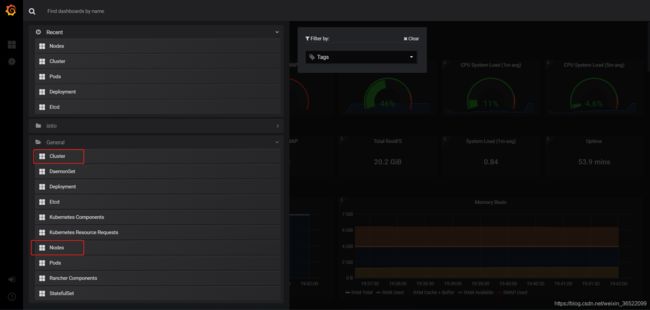

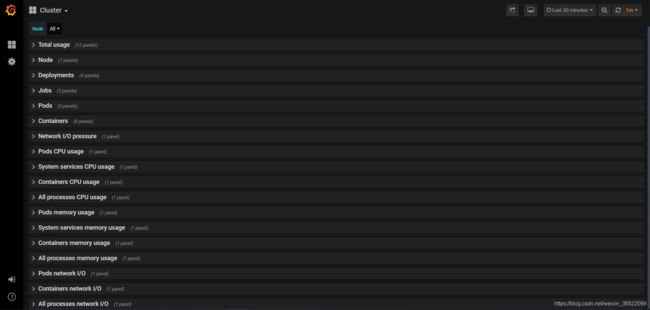

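4. Viewing the k8s cluster status with Rancher's built-in monitoring

① Click “Enable monitoring to see live metrics”

② Wait for the Grafana component, which visualizes the monitoring data, to be installed

The installation has succeeded when you see the following:

④ View the Grafana monitoring data

⑤ Choose which node to monitor

That completes creating the cluster and monitoring its metrics.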

5. Deploying the e-commerce site on a distributed LNMP architecture

5.1 In-cluster service discovery with CoreDNS

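- CoreDNS reached general availability in Kubernetes 1.11 and replaced kube-dns as the default cluster DNS server in Kubernetes 1.13.

- Clusters installed with kubeadm run CoreDNS by default. On older kubeadm releases (1.9 and 1.10) it had to be enabled with kubeadm init --feature-gates=CoreDNS=true.

- Inside a Kubernetes cluster, a workload can reach a Service directly by its name. CoreDNS resolves the name to the Service's cluster IP.

- Clusters created by Rancher already run CoreDNS, so there is no need to install it again.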
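CoreDNS serves each Service under a name of the form SERVICE.NAMESPACE.svc.CLUSTER-DOMAIN. Pods in the same namespace can use the short name, and that is why nginx can simply use php:9000 later on. The sketch below assumes the common default cluster domain cluster.local. svc_fqdn is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Build the DNS name CoreDNS serves for a Service:
#   <service>.<namespace>.svc.<cluster-domain>
# Defaults assume the "default" namespace and the cluster.local domain.
svc_fqdn() {
  local svc="$1" ns="${2:-default}" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}
```

Once the php Service from section 5.3 exists, a throwaway pod can test the lookup, for example `kubectl run dnstest --rm -it --restart=Never --image=busybox:1.28 -- nslookup "$(svc_fqdn php)"`. The busybox image is not in the offline bundle, so it has to be pulled.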

5.2 Installing and testing kubectl on the rancher node

① Install kubectl

# Configure the Kubernetes yum repo

[root@rancher ~]# tee /etc/yum.repos.d/kubernetes.repo << 'EOF'

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@rancher ~]# yum install -y kubectl

# Save this to ~/.kube/config

# then download kubectl (if needed) and run it.

apiVersion: v1
kind: Config
clusters:
- name: "lnmp-ecshop"
  cluster:
    server: "https://192.168.43.175/k8s/clusters/c-g644f"
    certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM3akNDQ\
      WRhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFvTVJJd0VBWURWUVFLRXdsMGFHVXQKY\
      21GdVkyZ3hFakFRQmdOVkJBTVRDV05oZEhSc1pTMWpZVEFlRncweU1ERXlNekV4TURVNE5EaGFGd\
      zB6TURFeQpNamt4TURVNE5EaGFNQ2d4RWpBUUJnTlZCQW9UQ1hSb1pTMXlZVzVqYURFU01CQUdBM\
      VVFQXhNSlkyRjBkR3hsCkxXTmhNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ\
      0tDQVFFQTBuckNaOUZmakorRUl5QlcKQlZBL21jT2JuL2RZSHZxY0pyT200NHZSSmsybTVGZHFWW\
      nV1M1pUMXo5YkltK2svdkRTemkwZGI3OUhiUjREbwp4SC9lUTFtWDdEemNuWE9GZ084SnpMbEZkM\
      Gl1V21rdXdGNDBFbDRhT3pseGdlcHVDU1RkMW1YdVBmUjlWWHNHCkYxaTgxNEorOVVFRXdaa0V4d\
      m1odDJ6aDhYYnpYOWpldTVwbTNjak9LVWRKVk50RXhac2U3My90cDNnMnFiUk8KTVpqN0hBbVpOZ\
      ldNL1VlZVQ0S0RDTk53bXFxREh3em1TU1FRYW1aMDI3Y1JmVWxVZktYYjFSUlpNNEN5eGg4aQpBa\
      GJYMHUwK3k4WlZiRVFhTXZzbW9vRzNobFdMZHNBbHBrc0tQYmUwczcrOFJIWS9zdEdiQjlQa1E2N\
      0ZxbHNmCkIvdXhwUUlEQVFBQm95TXdJVEFPQmdOVkhROEJBZjhFQkFNQ0FxUXdEd1lEVlIwVEFRS\
      C9CQVV3QXdFQi96QU4KQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBbm1FVGM4MGFpSUhXUm5OMmZ6Y\
      2dzY3BRYVNsano1T1k5UlZEVTVZbQpKd0ROSXhVRUJBL2lmWERhc0ViRHZvWFFtckhJaXNEb1N1e\
      DFKS0o3VCs3N1l1RklEeGRQMGtQUGkrT0Jnc0JCCmM3TlRoN2J5S2hrQjNVdWpqdXU2WUhCeXpKZ\
      k9jZjJRM1BGYlZ3YkIwd0hjRGdFaUZZc015NkIyNXdnUzFRYlcKaGNEaTNuMXdyeVRJK2VBUzQ5Q\
      jB6VHRYcG96MHAwaGt0bVpZSDVlb2NTUnhiZXJubFBNcE43M2dlR1Z6TG9NKwpIV3dFSkdVenZFU\
      EV0MVlwRS93RFhMeTZ4bDhJQ0RRK0dZdldpclpia1hsK2h5S21RMTk3bkF4dXNKL1VidHdICkJjb\
      ExoTFJaMWJML0V5MHZPenk0dWZKS29TaVBRcGREY0cwcFpzWGRyTHlBK1E9PQotLS0tLUVORCBDR\
      VJUSUZJQ0FURS0tLS0t"
- name: "lnmp-ecshop-master"
  cluster:
    server: "https://192.168.43.245:6443"
    certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN3akNDQ\
      WFxZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFTTVJBd0RnWURWUVFERXdkcmRXSmwKT\
      FdOaE1CNFhEVEl3TVRJek1URXhNRGd6TWxvWERUTXdNVEl5T1RFeE1EZ3pNbG93RWpFUU1BNEdBM\
      VVFQXhNSAphM1ZpWlMxallUQ0NBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ\
      0VCQU5yN3gyMmJYNHpOCndVV3F3YVh6RVIzODIxSjZTR1JEQUpCUDlxZ2VlcGUvU3MrU0VlcW5mR\
      HJ5bmRjU09INUhLQ2NqOCtQa0ZmaEgKMURib3k1QXZ1WE5lY05RRitTSHZmbE9oVjZuTmUxTnZ0Z\
      0FaNW9JRmFOODM1NDNNaE92U0pPSUhONXJIRXhoVwp2SWQ0azFLbkx4Q08zUC9BVldCR0xJZjIxR\
      GlhTzZFbUxwNGV3dEo4ekRSZ2ZsS3BXOEQxYS8xZmg0amVsU2tQCldmeGM2T0lBRjBpbzZWNXVCe\
      nYxTVJEWWttWFY3UVlLSWxBQVM0aFVodzVrL0owVVNjTzYxbHl0ZGVMd29zTXYKLzg4NlVPYjA2U\
      kFNRVc1d0FOMTJOenFVbTdhUnRXUHRLSkF4ZlJ6blZJMjBxTzk0bzNvcGZvZFUweVJKajFkcgpwd\
      lBZYzNqSFpkc0NBd0VBQWFNak1DRXdEZ1lEVlIwUEFRSC9CQVFEQWdLa01BOEdBMVVkRXdFQi93U\
      UZNQU1CCkFmOHdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBQlAwaXRnSWFDdXhtQnZXYSs4UkJEM\
      2ZqUTRHUGRFVWVMRUcKT0J1dHRDS0tzUWQrV1FiNXRsQ0x3VXlRY1JaVm0rbmNqNkI2dWxuZG5PN\
      1VKWndnMS9CVmxsams2eDYxYnBCTgpsZkNpd1Y5RXZKcWJCeG5YTGdaOWJuaXRWaURZNmx6SUtlU\
      ndxMW1ZMkNJVVA5TGVPSUdXdlhwWnRXSDdrOGFWCmlHZXdSczlEdjFrMzVOMy9ObFFub3JsY1c4c\
      WlwUk02UkQwdnJFM2tVTGcwT09iVUdWV2g3S0Q3M3RYNWJuNHMKNVFaRytibGtmZ3VPTEpWdWYyL\
      2tJVm9nQ0plU3RMaCtuS2ZUd2RHcWxDMUl3WWdITE85aUd0SzQ2d2tlTzdkTwp6WitDckwwcTJCK\
      1J4TUEyNUErazNQVy9YOHRhWS8zcFFJcVY5cjJDV0w2ZHRBc1JkNjA9Ci0tLS0tRU5EIENFUlRJR\
      klDQVRFLS0tLS0K"
users:
- name: "lnmp-ecshop"
  user:
    token: "kubeconfig-user-2vp8p.c-g644f:g5xhgj4dbpht58gmlrcrfbmvvmg8qc59mf5f47ckvxh9cnpmkbtmbz"
contexts:
- name: "lnmp-ecshop"
  context:
    user: "lnmp-ecshop"
    cluster: "lnmp-ecshop"
- name: "lnmp-ecshop-master"
  context:
    user: "lnmp-ecshop"
    cluster: "lnmp-ecshop-master"
current-context: "lnmp-ecshop"

③ Paste the configuration into ~/.kube/config on the rancher node

[root@rancher ~]# mkdir ~/.kube

[root@rancher ~]# vim ~/.kube/config

④ Test the kubectl command

[root@rancher ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready controlplane,etcd,worker 60m v1.16.8

node Ready worker 40m v1.16.8
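The copy-and-paste step above can be scripted. The file embeds an API token, so it is worth keeping it readable only by its owner. The sketch below uses GNU install -D to create ~/.kube when it is missing, and kubectl wait to block until every node is Ready instead of re-running kubectl get node. install_kubeconfig and wait_nodes_ready are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: install the kubeconfig copied from the Rancher UI, then wait for nodes.

# Copy a kubeconfig into place (creating the parent directory if needed),
# readable only by the owner because the file embeds an API token.
install_kubeconfig() {
  local src="$1" dest="${2:-$HOME/.kube/config}"
  install -D -m 600 "$src" "$dest"
}

# Block until every node reports the Ready condition, or fail after the timeout.
wait_nodes_ready() {
  kubectl wait --for=condition=Ready node --all --timeout="${1:-120s}"
}
```

For example, save the Rancher dialog's content to /root/lnmp-ecshop.yaml (a hypothetical path), then run `install_kubeconfig /root/lnmp-ecshop.yaml && wait_nodes_ready 300s`.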

5.3 Deploying the php service on node with kubectl from the rancher node

# Upload the YAML manifests and the ECShop installation package

[root@rancher ~]# tar tvf lnmp-v5.tar.gz

drwxr-xr-x root/root 0 2020-08-10 11:57 lnmp/

drwxr-xr-x root/root 0 2020-08-04 15:37 lnmp/conf/

-rw-r--r-- root/root 1437 2020-08-04 15:37 lnmp/conf/nginx.conf

drwxr-xr-x root/root 0 2020-07-31 11:11 lnmp/php/

-rw-r--r-- root/root 434 2020-07-28 16:08 lnmp/php/Dockerfile

drwxr-xr-x root/root 0 2020-08-05 11:48 lnmp/nginx/

-rw-r--r-- root/root 281 2020-07-31 14:17 lnmp/nginx/Dockerfile

-rw-r--r-- root/root 65163191 2019-04-18 16:03 lnmp/ecshop.zip

-rw-r--r-- root/root 727 2020-08-04 13:56 lnmp/php-deployment.yaml

-rw-r--r-- root/root 149 2020-08-04 14:10 lnmp/php-svc.yaml

-rw-r--r-- root/root 212 2020-08-04 15:26 lnmp/nginx-svc.yaml

-rw-r--r-- root/root 779 2020-08-04 15:26 lnmp/nginx-deployment.yaml

-rw-r--r-- root/root 202 2020-08-04 15:44 lnmp/mysql-svc.yaml

-rw-r--r-- root/root 739 2020-08-10 11:50 lnmp/mysql-deployment.yaml

[root@rancher ~]# tar xf lnmp-v5.tar.gz

[root@rancher ~]# ll /root/lnmp/

total 63660

drwxr-xr-x 2 root root 24 Aug 4 15:37 conf

-rw-r--r-- 1 root root 65163191 Apr 18 2019 ecshop.zip

-rw-r--r-- 1 root root 739 Aug 10 11:50 mysql-deployment.yaml

-rw-r--r-- 1 root root 202 Aug 4 15:44 mysql-svc.yaml

drwxr-xr-x 2 root root 24 Aug 5 11:48 nginx

-rw-r--r-- 1 root root 779 Aug 4 15:26 nginx-deployment.yaml

-rw-r--r-- 1 root root 212 Aug 4 15:26 nginx-svc.yaml

drwxr-xr-x 2 root root 24 Jul 31 11:11 php

-rw-r--r-- 1 root root 727 Aug 4 13:56 php-deployment.yaml

-rw-r--r-- 1 root root 149 Aug 4 14:10 php-svc.yaml

① Edit the YAML manifest for the Deployment and its pods

[root@rancher ~]# cat /root/lnmp/php-deployment.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-server
  labels:
    name: php-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: php-server
  template:
    metadata:
      labels:
        app: php-server
    spec:
      nodeSelector:
        kubernetes.io/hostname: node   # must be the host that holds the /web/html data (node in this lab)
      containers:
      - name: php-server
        image: php:v1   # check with docker images that this image is present
        volumeMounts:
        - mountPath: /var/www/html/
          name: nginx-data
        ports:
        - containerPort: 9000
        lifecycle:
          postStart:
            exec:
              command: [ "/bin/bash","-c","chown apache:apache /var/www/html -R" ]
      volumes:
      - name: nginx-data
        hostPath:
          path: /web/html

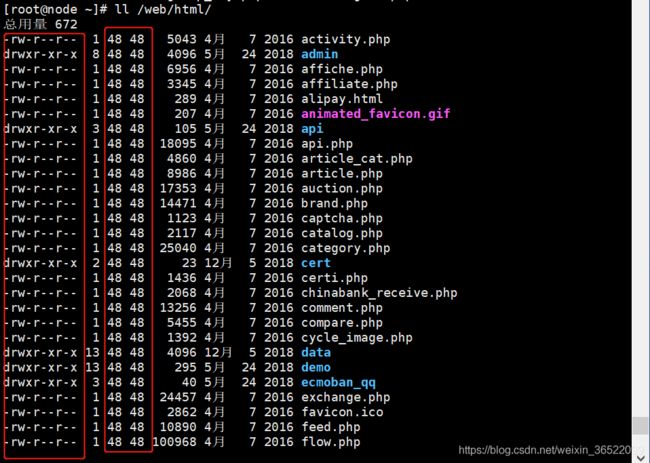

② Put the ECShop data files on node

[root@rancher ~]# scp /root/lnmp/ecshop.zip root@NODE_IP:/root/

[root@node ~]# unzip ecshop.zip

[root@node ~]# mkdir -p /web/{html,data}

[root@node ~]# mv ecshop/* /web/html/

[root@node ~]# ls /web/html/

activity.php certi.php htaccess.txt respond.php

admin chinabank_receive.php images robots.txt

affiche.php comment.php includes search.php

affiliate.php compare.php index.php sitemaps.php

alipay.html cycle_image.php install snatch.php

animated_favicon.gif data js tag_cloud.php

api demo languages temp

api.php ecmoban_qq message.php themes

article_cat.php exchange.php mobile topic.php

article.php favicon.ico myship.php user.php

auction.php feed.php package.php vote.php

brand.php flow.php pick_out.php wap

captcha.php gallery.php pm.php wholesale.php

catalog.php goods.php quotation.php widget

category.php goods_script.php receive.php

cert group_buy.php region.php

[root@rancher ~]# kubectl apply -f /root/lnmp/php-deployment.yaml

deployment.apps/php-server created

[root@rancher ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

php-server 1/1 1 1 16s

[root@rancher ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

php-server-5c7759b5f8-wrnmg 1/1 Running 0 25s

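On node, check that the data files under /web/html are writable and that their owner and group now appear as numeric IDs instead of user names. The postStart hook ran chown apache:apache inside the container, and the apache user does not exist on the host.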
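hostPath volumes are node-local. The ECShop files exist only under /web/html on node, so a php pod scheduled anywhere else would see an empty directory. The sketch below checks where a Deployment's pod actually landed by reading spec.nodeName through a kubectl JSONPath query. pod_node and assert_pod_on are hypothetical helpers. They assume the pod carries the label from the manifest (app=php-server).

```bash
#!/usr/bin/env bash
# Print the node that the first pod matching a label selector runs on.
pod_node() {
  kubectl get pods -l "$1" -o jsonpath='{.items[0].spec.nodeName}'
}

# Fail (with a message on stderr) if the pod is not on the expected node.
assert_pod_on() {
  local selector="$1" want="$2" got
  got=$(pod_node "$selector") || return 2
  [ "$got" = "$want" ] || { echo "$selector runs on '$got', expected '$want'" >&2; return 1; }
}
```

For example, `assert_pod_on app=php-server node` should succeed here. Later, `assert_pod_on app=nginx-shop node` and `assert_pod_on k8s-app=mysql node` check the other two tiers.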

③ Create the Service manifest

[root@rancher ~]# cat /root/lnmp/php-svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: php
spec:
  ports:
  - name: php
    port: 9000
    protocol: TCP
  selector:
    app: php-server

[root@rancher ~]# kubectl apply -f /root/lnmp/php-svc.yaml

service/php created

[root@rancher ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 113m

php ClusterIP 10.43.70.88 <none> 9000/TCP 17s
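A Service that shows up in kubectl get svc can still route nowhere if its selector does not match any pod's labels. Kubernetes keeps an Endpoints object with the same name as the Service, listing the pod IPs behind it. The sketch below treats an empty list as a failure. svc_has_endpoints is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Succeed if Service NAME has at least one endpoint address, i.e. its selector
# matched a running, ready pod. An empty result usually means a label typo.
svc_has_endpoints() {
  local name="$1" ips
  ips=$(kubectl get endpoints "$name" -o jsonpath='{.subsets[*].addresses[*].ip}') || return 2
  [ -n "$ips" ]
}
```

For example, `svc_has_endpoints php || echo "php Service has no backing pod"`. The same check applies to nginx-shop and mysql below.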

5.4 Deploying the nginx service

① Review the nginx configuration template

[root@rancher ~]# cat /root/lnmp/conf/nginx.conf

user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

http {
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    include /etc/nginx/conf.d/*.conf;

    server {
        listen 80 default_server;
        listen [::]:80 default_server;
        server_name _;
        root /usr/share/nginx/html;
        include /etc/nginx/default.d/*.conf;

        location / {
            index index.php;
        }

        location ~ \.php$ {
            # root html;
            fastcgi_pass php:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME /var/www/html/$fastcgi_script_name;
            include fastcgi_params;
        }

        error_page 404 /404.html;
        location = /40x.html {
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
        }
    }
}

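The nginx document root is /usr/share/nginx/html, while php resolves scripts under /var/www/html/. In both containers these paths map to the hostPath directory /web/html, which is why SCRIPT_FILENAME points at /var/www/html. In fastcgi_pass, the PHP backend is addressed simply by the name of the php Service (php:9000).

② Create the nginx configuration as a ConfigMap

ConfigMap (short name cm) is an important Kubernetes resource object that is commonly used to inject configuration files into containers. It can hold single property values as well as entire configuration files.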

[root@rancher ~]# kubectl create configmap lnmp-nginx-config --from-file=/root/lnmp/conf/nginx.conf

configmap/lnmp-nginx-config created
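Running kubectl create configmap a second time fails because the ConfigMap already exists. Also, a ConfigMap mounted with subPath, as nginx.conf is below, does not receive updates in running pods. The sketch below regenerates the ConfigMap and applies it, then restarts the nginx Deployment so that the pods pick up the new file. reload_nginx_conf is a hypothetical helper. --dry-run=client needs kubectl 1.18 or later; older clients use plain --dry-run. kubectl rollout restart needs 1.15 or later.

```bash
#!/usr/bin/env bash
# Re-create (or update) the nginx ConfigMap from the edited file, then restart
# the Deployment: subPath-mounted ConfigMaps are not refreshed in running pods.
reload_nginx_conf() {
  local conf="${1:-/root/lnmp/conf/nginx.conf}"
  kubectl create configmap lnmp-nginx-config --from-file="$conf" \
    --dry-run=client -o yaml | kubectl apply -f - || return 1
  kubectl rollout restart deployment/nginx-shop
}
```

After editing /root/lnmp/conf/nginx.conf, run `reload_nginx_conf` once the nginx-shop Deployment from the next step exists.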

③ Create the nginx Deployment manifest and deploy nginx

[root@rancher ~]# cat /root/lnmp/nginx-deployment.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-shop
spec:
  selector:
    matchLabels:
      app: nginx-shop
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx-shop
    spec:
      nodeSelector:
        kubernetes.io/hostname: node
      containers:
      - name: nginx-shop
        image: nginx:v1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-data
          mountPath: /usr/share/nginx/html
        - name: nginx-conf
          mountPath: /etc/nginx/nginx.conf
          subPath: nginx.conf
      volumes:
      - name: nginx-data
        hostPath:
          path: /web/html/
      - name: nginx-conf
        configMap:
          name: lnmp-nginx-config

[root@rancher ~]# kubectl apply -f /root/lnmp/nginx-deployment.yaml

deployment.apps/nginx-shop created

[root@rancher ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-shop 1/1 1 1 111s

php-server 1/1 1 1 17m

[root@rancher ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-shop-6bd98dfd4d-9t6xv 1/1 Running 0 118s

php-server-5c7759b5f8-wrnmg 1/1 Running 0 17m

④ Create the nginx Service manifest and deploy the Service

[root@rancher ~]# cat /root/lnmp/nginx-svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: nginx-shop
spec:
  type: NodePort
  ports:
  - name: nginx
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30010
  selector:
    app: nginx-shop

[root@rancher ~]# kubectl apply -f /root/lnmp/nginx-svc.yaml

service/nginx-shop created

[root@rancher ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 124m

nginx-shop NodePort 10.43.145.215 <none> 80:30010/TCP 16s

php ClusterIP 10.43.70.88 <none> 9000/TCP 10m

Check whether PHP is parsed correctly:

http://192.168.43.147:30010/

If the page is displayed correctly, nginx has been deployed correctly and can parse PHP.
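The same check can be done from a shell by looking only at the HTTP status code. As a rule of thumb, 502 Bad Gateway means nginx could not reach php:9000. A 200, or a redirect (ECShop may send first-time visitors to its installer), means PHP is executing. The optional second argument sends a Host header, which lets you test the Ingress in section 5.6 before editing any hosts file. http_code is a hypothetical helper built on standard curl options.

```bash
#!/usr/bin/env bash
# Print only the HTTP status code for URL. An optional second argument is sent
# as the Host header, e.g. to test an Ingress rule by name before DNS exists.
http_code() {
  local url="$1" host="${2:-}"
  if [ -n "$host" ]; then
    curl -s -o /dev/null -w '%{http_code}' -H "Host: $host" "$url"
  else
    curl -s -o /dev/null -w '%{http_code}' "$url"
  fi
}
```

For example, `http_code http://192.168.43.147:30010/` tests the NodePort, and `http_code http://192.168.43.147/ shop.com` later tests the Ingress.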

5.5 Deploying MySQL

① Deploy the Deployment and its pods

[root@rancher ~]# cat /root/lnmp/mysql-deployment.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
  namespace: default
  labels:
    k8s-app: mysql
spec:
  selector:
    matchLabels:
      k8s-app: mysql
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: mysql
    spec:
      nodeSelector:
        kubernetes.io/hostname: node   # node to deploy on
      containers:
      - name: mysql
        image: mysql:5.7   # MySQL image
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3306   # container port
          protocol: TCP
        volumeMounts:
        - name: mysql-data
          mountPath: /var/lib/mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"   # password of the MySQL root user
      volumes:
      - name: mysql-data
        hostPath:
          path: /web/data/   # data directory

[root@rancher ~]# kubectl apply -f /root/lnmp/mysql-deployment.yaml

deployment.apps/mysql created

[root@rancher ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

mysql 1/1 1 1 105s

nginx-shop 1/1 1 1 12m

php-server 1/1 1 1 27m

[root@rancher ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

mysql-57595b8f98-f2lnl 1/1 Running 0 109s

nginx-shop-6bd98dfd4d-9t6xv 1/1 Running 0 12m

php-server-5c7759b5f8-wrnmg 1/1 Running 0 27m
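The manifest keeps the root password in plain text. A common alternative is a Secret. The sketch below creates one idempotently. The Secret name mysql-root is hypothetical. The Deployment would then replace `value: "123456"` with `valueFrom.secretKeyRef` (name mysql-root, key MYSQL_ROOT_PASSWORD). Note that the mysql image only applies MYSQL_ROOT_PASSWORD when it initializes an empty data directory, so changing it later does not change the password stored in /web/data. --dry-run=client needs kubectl 1.18 or later.

```bash
#!/usr/bin/env bash
# Store the MySQL root password in a Secret (hypothetical name: mysql-root)
# instead of the Deployment manifest. Re-running updates the Secret in place.
create_mysql_secret() {
  local pass="$1"
  kubectl create secret generic mysql-root \
    --from-literal=MYSQL_ROOT_PASSWORD="$pass" \
    --dry-run=client -o yaml | kubectl apply -f -
}
```

For example, `create_mysql_secret "$(openssl rand -base64 18)"`. Note the generated value somewhere safe, because ECShop's installer asks for it.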

② Deploy the Service

[root@rancher ~]# cat /root/lnmp/mysql-svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    k8s-app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
    targetPort: 3306
  selector:
    k8s-app: mysql

[root@rancher ~]# kubectl apply -f /root/lnmp/mysql-svc.yaml

service/mysql created

[root@rancher ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 134m

mysql ClusterIP 10.43.25.213 <none> 3306/TCP 15s

nginx-shop NodePort 10.43.145.215 <none> 80:30010/TCP 10m

php ClusterIP 10.43.70.88 <none> 9000/TCP 20m

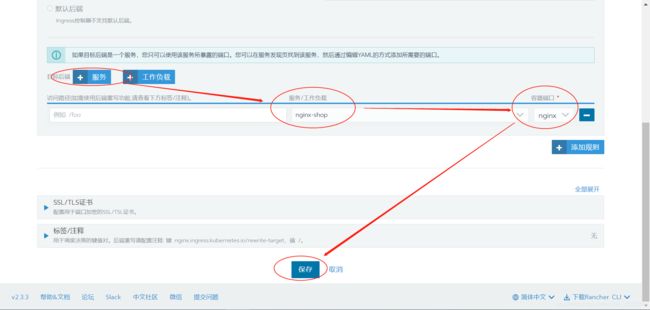

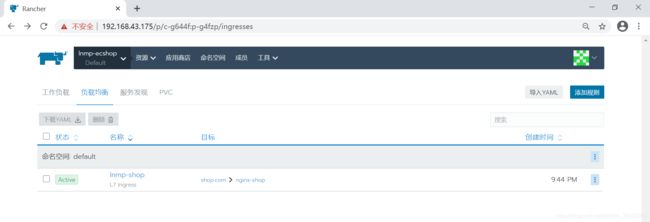

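5.6 Ingress: watching the apiserver in real time

- Services are published through a reverse proxy, i.e. nginx reverse-proxies the services in k8s. However, plain nginx cannot detect changes to the backend pods in real time. If the backend services change, the nginx configuration has to be updated by hand.

- Ingress has a watcher that monitors kube-apiserver to detect changes to backend Services and pods. It then applies the Ingress rules and pushes the updated backend information to nginx for reverse proxying.

- Edge node: a k8s node that serves external traffic. If every host in the k8s cluster has a public IP, all of them are edge nodes.

- How Ingress works: when a service is published, its domain name resolves to the cluster's edge nodes. The Ingress matches the domain against its rules and forwards the request to the corresponding Service.

- Rancher's built-in service publishing is implemented with nginx; the author recommends Traefik instead.

In Rancher: add a target service -> select service “nginx-shop” -> for the port (the Service's port name), select “nginx”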
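The UI steps above create an ordinary Ingress object. The sketch below is a kubectl equivalent, shown for readers who prefer manifests. It assumes Kubernetes 1.16 as in this cluster, where the networking.k8s.io/v1beta1 schema (serviceName/servicePort) applies. Clusters on 1.19 or later use networking.k8s.io/v1 with a different backend layout. The Ingress name shop and the helper apply_shop_ingress are hypothetical. In RKE clusters the bundled nginx ingress controller listens on port 80 of the nodes, which is why shop.com is later pointed at a node IP.

```bash
#!/usr/bin/env bash
# Create or update an Ingress routing HOST (default shop.com) to the
# nginx-shop Service on port 80. Assumes a Kubernetes 1.16-era API.
apply_shop_ingress() {
  local host="${1:-shop.com}"
  kubectl apply -f - <<EOF
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: shop
  namespace: default
spec:
  rules:
  - host: $host
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-shop
          servicePort: 80
EOF
}
```

Running `apply_shop_ingress` once and then `kubectl get ingress` should list a rule for shop.com.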

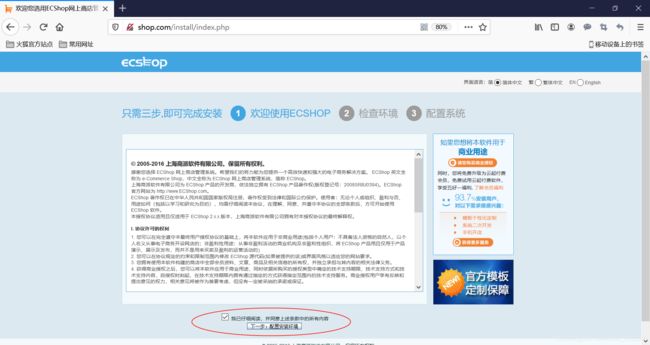

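5.7 Installing ECShop

① Configure name resolution:

On your local machine, map the domain name to the IP address:

C:\Windows\System32\drivers\etc\hosts

# Open it with Notepad++. Plain Notepad will probably fail to save it, because saving this file requires administrator rights

# Append this line to the end of the hosts file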

192.168.43.147 shop.com
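On a Linux or macOS client the equivalent file is /etc/hosts. The sketch below appends the mapping only if the name is not already mapped on a non-comment line, so running it twice leaves a single entry. add_hosts_entry is a hypothetical helper, and writing /etc/hosts needs root (e.g. via sudo).

```bash
#!/usr/bin/env bash
# Append "IP NAME" to a hosts file unless NAME is already mapped on a
# non-comment line. Usage: add_hosts_entry IP NAME [FILE]
add_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Compare every hostname field exactly; skip commented-out lines.
  if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_hosts_entry 192.168.43.147 shop.com` adds the same line as the Windows step above.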

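② Install ECShop

Open http://shop.com

Chrome may fail to open it (it did in the author's test); use Firefox instead.

③ Open the storefront and the admin console:

Storefront: http://shop.com/

Admin console: http://shop.com/admin/privilege.php?act=login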

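6. Summary

- Docker 19.03 was installed, and the Aliyun registry mirror was used to speed up pulls.

- Pulling the Rancher images online is very slow. Pull the required images in advance and save them locally.

- With all images available locally, creating the k8s cluster is fast. If it is not finished within about 5 minutes, some images are probably missing and Docker is still pulling them online.

- If all images are present but errors persist, the rancher, master or node host may have had k8s installed before. Remove the old files completely; restoring a clean VM snapshot also works.

- php, nginx and mysql are deployed in much the same way: first the Deployment (and its pods), then the Service.

- Put the ECShop web files where the pods expect them. In this article they are in /web/html on node, and the MySQL data lives in /web/data on node.

- Add an Ingress rule and point the domain name at an edge node. External users can then reach the services in the k8s cluster by domain name.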