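Converting your own labelme JSON annotations into a VOC-style dataset

Development environment: Python 3.7

The steps below use PASCAL VOC2012 as the example:

1. Modify json_to_dataset.py, which is used when parsing the JSON files.

json_to_dataset.py is located at: /home/xxx/anaconda3/envs/labelme/lib/python3.7/site-packages/labelme/cli

Modified version: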

// json_to_dataset.py

import argparse

import base64

import json

import os

import os.path as osp

import imgviz

import PIL.Image

from labelme.logger import logger

from labelme import utils

def main():

logger.warning(

"This script is aimed to demonstrate how to convert the "

"JSON file to a single image dataset."

)

logger.warning(

"It won't handle multiple JSON files to generate a "

"real-use dataset."

)

parser = argparse.ArgumentParser()

parser.add_argument("json_file")

parser.add_argument("-o", "--out", default=None)

args = parser.parse_args()

json_file = args.json_file

if args.out is None:

out_dir = osp.basename(json_file).replace(".", "_")

out_dir = osp.join(osp.dirname(json_file), out_dir)

else:

out_dir = args.out

if not osp.exists(out_dir):

os.mkdir(out_dir)

data = json.load(open(json_file))

imageData = data.get("imageData")

if not imageData:

imagePath = os.path.join(os.path.dirname(json_file), data["imagePath"])

with open(imagePath, "rb") as f:

imageData = f.read()

imageData = base64.b64encode(imageData).decode("utf-8")

img = utils.img_b64_to_arr(imageData)

label_name_to_value = {

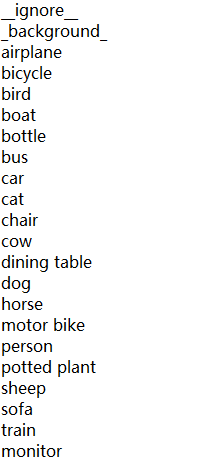

"_background_": 0, "airplane": 1, "bicycle": 2, "bird": 3, "boat": 4, "bottle": 5, "bus": 6, "car": 7, "cat": 8, "chair": 9, "cow": 10, "dining table": 11, "dog":12, "horse": 13, "motor bike": 14, "person": 15, "potted plant": 16, "sheep": 17, "sofa": 18. "train": 19, "monitor": 20 } # 添加指定标签对应的标签值

#注释下面代码

#for shape in sorted(data["shapes"], key=lambda x: x["label"]):

# label_name = shape["label"]

# if label_name in label_name_to_value:

# label_value = label_name_to_value[label_name]

# else:

# label_value = len(label_name_to_value)

# label_name_to_value[label_name] = label_value

lbl, _ = utils.shapes_to_label(

img.shape, data["shapes"], label_name_to_value

)

label_names = [None] * (max(label_name_to_value.values()) + 1)

for name, value in label_name_to_value.items():

label_names[value] = name

lbl_viz = imgviz.label2rgb(

label=lbl, img=imgviz.asgray(img), label_names=label_names, loc="rb"

)

PIL.Image.fromarray(img).save(osp.join(out_dir, "img.png"))

utils.lblsave(osp.join(out_dir, "label.png"), lbl)

PIL.Image.fromarray(lbl_viz).save(osp.join(out_dir, "label_viz.png"))

with open(osp.join(out_dir, "label_names.txt"), "w") as f:

for lbl_name in label_names:

f.write(lbl_name + "\n")

logger.info("Saved to: {}".format(out_dir))

if __name__ == "__main__":

main()
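Because the loop that assigned values to unseen labels is now commented out, a label that appears in a JSON file but not in label_name_to_value has no value to map to. The following is a minimal sketch for checking a file before converting it, assuming the labelme JSON layout used above (a "shapes" list whose items carry a "label" key). The helper name find_unknown_labels is hypothetical and not part of labelme.

```python
import json


def find_unknown_labels(json_path, label_name_to_value):
    """Return the sorted label names used in a labelme JSON file
    that are missing from the given name-to-value mapping."""
    with open(json_path, encoding="utf-8") as f:
        data = json.load(f)
    # Collect every distinct label used by the annotated shapes
    used = {shape["label"] for shape in data.get("shapes", [])}
    return sorted(used - set(label_name_to_value))
```

An empty list means every annotated label has a fixed value; anything else points to a typo in the annotation or a class missing from the mapping.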

2. Modify label.py

label.py is located at: /home/xxx/anaconda3/envs/labelme/lib/python3.7/site-packages/imgviz

Modified version:

# label.py
import numpy as np

from . import color as color_module
from . import draw as draw_module


def label_colormap(n_label=256, value=None):
    """Label colormap.

    Parameters
    ----------
    n_label: int
        Number of labels (default: 256).
    value: float or int
        Value scale or value of label color in HSV space.

    Returns
    -------
    cmap: numpy.ndarray, (N, 3), numpy.uint8
        Label id to colormap.

    """

    def bitget(byteval, idx):
        return (byteval & (1 << idx)) != 0

    cmap = np.zeros((n_label, 3), dtype=np.uint8)
    for i in range(0, n_label):
        id = i
        r, g, b = 0, 0, 0
        for j in range(0, 8):
            r = np.bitwise_or(r, (bitget(id, 0) << 7 - j))
            g = np.bitwise_or(g, (bitget(id, 1) << 7 - j))
            b = np.bitwise_or(b, (bitget(id, 2) << 7 - j))
            id = id >> 3
        cmap[i, 0] = r
        cmap[i, 1] = g
        cmap[i, 2] = b

    # Add your own colormap (row index = label value)
    cmap[1, :] = [128, 0, 0]      # airplane
    cmap[2, :] = [0, 128, 0]      # bicycle
    cmap[3, :] = [128, 128, 0]    # bird
    cmap[4, :] = [0, 0, 128]      # boat
    cmap[5, :] = [128, 0, 128]    # bottle
    cmap[6, :] = [0, 128, 128]    # bus
    cmap[7, :] = [128, 128, 128]  # car
    cmap[8, :] = [64, 0, 0]       # cat
    cmap[9, :] = [192, 0, 0]      # chair
    cmap[10, :] = [64, 128, 0]    # cow
    cmap[11, :] = [192, 128, 0]   # dining table
    cmap[12, :] = [64, 0, 128]    # dog
    cmap[13, :] = [192, 0, 128]   # horse (VOC color; the original listing repeated the dining table color here)
    cmap[14, :] = [64, 128, 128]  # motor bike
    cmap[15, :] = [192, 128, 128] # person
    cmap[16, :] = [0, 64, 0]      # potted plant
    cmap[17, :] = [128, 64, 0]    # sheep
    cmap[18, :] = [0, 192, 0]     # sofa
    cmap[19, :] = [128, 192, 0]   # train
    cmap[20, :] = [0, 64, 128]    # monitor

    if value is not None:
        hsv = color_module.rgb2hsv(cmap.reshape(1, -1, 3))
        if isinstance(value, float):
            hsv[:, 1:, 2] = hsv[:, 1:, 2].astype(float) * value
        else:
            assert isinstance(value, int)
            hsv[:, 1:, 2] = value
        cmap = color_module.hsv2rgb(hsv).reshape(-1, 3)
    return cmap

# The remaining functions in label.py (e.g. label2rgb) stay unchanged.

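The rows of cmap must match label_name_to_value in json_to_dataset.py: the row index is the label value, so cmap[8] is the color used for the class whose value is 8.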
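The bit-shuffling loop in label_colormap appears to generate the standard PASCAL VOC palette on its own, so a hand-written table for values 1 to 20 can be cross-checked against it entry by entry. The following is a minimal pure-Python sketch of that loop (no numpy or imgviz needed); the function name voc_colormap is hypothetical.

```python
def voc_colormap(n_label=256):
    """Pure-Python equivalent of the loop in label_colormap.

    Bits 0, 1, 2 of the label value feed the most significant free bit of
    R, G, B respectively; the value is then shifted right by 3 and the
    process repeats for the next lower bit of each channel.
    """
    cmap = []
    for i in range(n_label):
        label_id = i
        r = g = b = 0
        for j in range(8):
            r |= ((label_id >> 0) & 1) << (7 - j)
            g |= ((label_id >> 1) & 1) << (7 - j)
            b |= ((label_id >> 2) & 1) << (7 - j)
            label_id >>= 3
        cmap.append((r, g, b))
    return cmap


if __name__ == "__main__":
    # Print the generated colors for label values 0..20
    for value, rgb in enumerate(voc_colormap(21)):
        print(value, rgb)
```

If a manual cmap entry differs from the generated color for the same value, it is worth checking whether the difference is intentional or a copy-paste slip.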

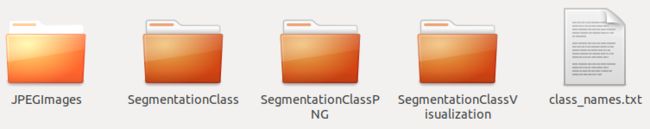

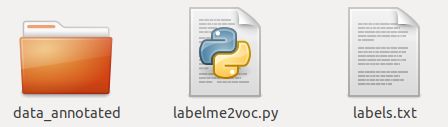

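3. Next, create a new folder. Inside it, put your own data (the original images and their corresponding JSON files) together in a single data_annotated folder. Then create your own labels.txt and copy labelme2voc.py into the folder; that script needs no changes. The new folder should now contain data_annotated, labels.txt, and labelme2voc.py.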

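labels.txt lists one class name per line, in the same order as in json_to_dataset.py and label.py. As the assertions in labelme2voc.py require, the first line must be __ignore__ and the second _background_.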
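As a rough illustration of that layout, the sketch below writes a labels.txt and reads it back the same way labelme2voc.py does (line i gets class id i - 1, so __ignore__ is -1 and _background_ is 0). The helper names write_labels_file and read_class_ids are hypothetical, and CLASS_NAMES simply repeats the names used in label_name_to_value above; substitute your own classes.

```python
# Class names in label-value order (values 1..20), taken from the mapping above
CLASS_NAMES = [
    "airplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat",
    "chair", "cow", "dining table", "dog", "horse", "motor bike", "person",
    "potted plant", "sheep", "sofa", "train", "monitor",
]


def write_labels_file(path, class_names):
    """Write labels.txt: __ignore__, _background_, then one class per line."""
    lines = ["__ignore__", "_background_"] + list(class_names)
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")


def read_class_ids(path):
    """Map each class name to its id the way labelme2voc.py does (line index - 1)."""
    with open(path, encoding="utf-8") as f:
        return {line.strip(): i - 1 for i, line in enumerate(f)}
```

Calling write_labels_file("labels.txt", CLASS_NAMES) and then read_class_ids("labels.txt") gives a quick way to confirm that each class id equals the value used in json_to_dataset.py and the row index used in label.py.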

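labelme2voc.py lives in the labelme repository under /labelme/examples/semantic_segmentation. Since it is the only file needed from there, you do not have to download it from GitHub; the full labelme2voc.py is reproduced here: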

# labelme2voc.py
#!/usr/bin/env python

from __future__ import print_function

import argparse
import glob
import json
import os
import os.path as osp
import sys

import imgviz
import numpy as np
import PIL.Image

import labelme


def main():
    parser = argparse.ArgumentParser(
        formatter_class=argparse.ArgumentDefaultsHelpFormatter
    )
    parser.add_argument('input_dir', help='input annotated directory')
    parser.add_argument('output_dir', help='output dataset directory')
    parser.add_argument('--labels', help='labels file', required=True)
    parser.add_argument(
        '--noviz', help='no visualization', action='store_true'
    )
    args = parser.parse_args()

    # Refuse to overwrite an existing output directory
    if osp.exists(args.output_dir):
        print('Output directory already exists:', args.output_dir)
        sys.exit(1)
    os.makedirs(args.output_dir)
    os.makedirs(osp.join(args.output_dir, 'JPEGImages'))
    os.makedirs(osp.join(args.output_dir, 'SegmentationClass'))
    os.makedirs(osp.join(args.output_dir, 'SegmentationClassPNG'))
    if not args.noviz:
        os.makedirs(
            osp.join(args.output_dir, 'SegmentationClassVisualization')
        )
    print('Creating dataset:', args.output_dir)

    # Line i of the labels file gets class id i - 1
    class_names = []
    class_name_to_id = {}
    for i, line in enumerate(open(args.labels).readlines()):
        class_id = i - 1  # starts with -1
        class_name = line.strip()
        class_name_to_id[class_name] = class_id
        if class_id == -1:
            assert class_name == '__ignore__'
            continue
        elif class_id == 0:
            assert class_name == '_background_'
        class_names.append(class_name)
    class_names = tuple(class_names)
    print('class_names:', class_names)
    out_class_names_file = osp.join(args.output_dir, 'class_names.txt')
    with open(out_class_names_file, 'w') as f:
        f.writelines('\n'.join(class_names))
    print('Saved class_names:', out_class_names_file)

    for label_file in glob.glob(osp.join(args.input_dir, '*.json')):
        print('Generating dataset from:', label_file)
        with open(label_file) as f:
            base = osp.splitext(osp.basename(label_file))[0]
            out_img_file = osp.join(
                args.output_dir, 'JPEGImages', base + '.jpg')
            out_lbl_file = osp.join(
                args.output_dir, 'SegmentationClass', base + '.npy')
            out_png_file = osp.join(
                args.output_dir, 'SegmentationClassPNG', base + '.png')
            if not args.noviz:
                out_viz_file = osp.join(
                    args.output_dir,
                    'SegmentationClassVisualization',
                    base + '.jpg',
                )

            data = json.load(f)

            img_file = osp.join(osp.dirname(label_file), data['imagePath'])
            img = np.asarray(PIL.Image.open(img_file))
            PIL.Image.fromarray(img).save(out_img_file)

            # Note: json_to_dataset.py above unpacks a tuple from
            # shapes_to_label (lbl, _ = ...). If your installed labelme
            # returns a tuple here as well, unpack it the same way.
            lbl = labelme.utils.shapes_to_label(
                img_shape=img.shape,
                shapes=data['shapes'],
                label_name_to_value=class_name_to_id,
            )
            labelme.utils.lblsave(out_png_file, lbl)

            np.save(out_lbl_file, lbl)

            if not args.noviz:
                viz = imgviz.label2rgb(
                    label=lbl,
                    img=imgviz.rgb2gray(img),
                    font_size=15,
                    label_names=class_names,
                    loc='rb',
                )
                imgviz.io.imsave(out_viz_file, viz)


if __name__ == '__main__':
    main()

Finally, open a terminal in the new folder, activate the labelme virtual environment, and run:

python labelme2voc.py data_annotated data_dataset_voc --labels labels.txt

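The output folder data_dataset_voc contains:

JPEGImages: the original images

SegmentationClass: the ground-truth masks saved as binary .npy files

SegmentationClassPNG: the ground-truth mask (PNG) for each original image

SegmentationClassVisualization: the original images blended with their ground truth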
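After the conversion it can be useful to confirm that every image ended up with all of its companion files. The sketch below is one way to do that, assuming the sub-folder names and file extensions written by labelme2voc.py above; the helper name missing_outputs is hypothetical. If the script was run with --noviz, SegmentationClassVisualization will be reported as missing for every image, which is expected.

```python
import os
import os.path as osp

# Sub-folder name -> file extension, as written by labelme2voc.py
EXPECTED = {
    "JPEGImages": ".jpg",
    "SegmentationClass": ".npy",
    "SegmentationClassPNG": ".png",
    "SegmentationClassVisualization": ".jpg",
}


def missing_outputs(dataset_dir):
    """Return {subfolder: sorted base names found in JPEGImages but absent there}."""

    def bases(sub, ext):
        folder = osp.join(dataset_dir, sub)
        if not osp.isdir(folder):
            return set()
        return {
            osp.splitext(name)[0]
            for name in os.listdir(folder)
            if name.endswith(ext)
        }

    reference = bases("JPEGImages", EXPECTED["JPEGImages"])
    report = {}
    for sub, ext in EXPECTED.items():
        missing = sorted(reference - bases(sub, ext))
        if missing:
            report[sub] = missing
    return report
```

For example, missing_outputs("data_dataset_voc") returns an empty dict when every image has its .npy, .png, and visualization file.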

Corrections from readers are welcome.